It typically involves using computer programs to compute approximate solutions to Maxwell's equations to calculate antenna performance, electromagnetic compatibility, radar cross section and electromagnetic wave propagation when not in free space. A large subfield is antenna modeling computer programs, which calculate the radiation pattern and electrical properties of radio antennas, and are widely used to design antennas for specific applications.

Background

Several real-world electromagnetic problems, such as electromagnetic scattering, electromagnetic radiation, and the modeling of waveguides, cannot be solved analytically because of the multitude of irregular geometries found in actual devices. Computational numerical techniques can overcome the inability to derive closed-form solutions of Maxwell's equations under various constitutive relations of media and boundary conditions. This makes computational electromagnetics (CEM) important to the design and modeling of antenna, radar, satellite and other communication systems, nanophotonic devices, high-speed silicon electronics, medical imaging, and cell-phone antennas, among other applications.

CEM typically solves the problem of computing the E (electric) and H (magnetic) fields across the problem domain (e.g., to calculate the radiation pattern of an arbitrarily shaped antenna structure). The direction of power flow (Poynting vector), a waveguide's normal modes, media-generated wave dispersion, and scattering can then be computed from the E and H fields. CEM models may or may not assume symmetry, simplifying real-world structures to idealized cylinders, spheres, and other regular geometrical objects. Where symmetry is available, CEM models make extensive use of it and solve problems of reduced dimensionality, going from three spatial dimensions to 2D and even 1D.
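As a small illustration of deriving a secondary quantity from the computed fields, the following sketch evaluates the instantaneous Poynting vector S = E × H at a single point. The function names and the sample plane-wave values are assumptions made for this example; they are not taken from any particular CEM package.

```python
# Minimal sketch: instantaneous Poynting vector S = E x H at one point.
# All names here (cross, poynting, ETA0) are illustrative assumptions.

ETA0 = 376.730313668  # approximate impedance of free space in ohms


def cross(a, b):
    """Cross product of two 3-component vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def poynting(e_field, h_field):
    """Return S = E x H (W/m^2 when E is in V/m and H is in A/m)."""
    return cross(e_field, h_field)


# Hypothetical plane wave travelling along +z: E along x, H along y.
e_sample = (1.0, 0.0, 0.0)
h_sample = (0.0, 1.0 / ETA0, 0.0)
print(poynting(e_sample, h_sample))
```

For the sample plane wave the only non-zero component of S lies along +z, the direction of propagation, which is the kind of sanity check a post-processing step can apply to solver output.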

An eigenvalue problem formulation of CEM allows the steady-state normal modes of a structure to be calculated. Transient response and impulse field effects are more accurately modeled in the time domain, by the finite-difference time-domain method (FDTD). Curved geometrical objects are treated more accurately by the finite element method (FEM) or by non-orthogonal grids. The beam propagation method (BPM) can solve for the power flow in waveguides. The choice of CEM technique is application specific, even if different techniques converge to the same field and power distributions in the modeled domain.

Overview of methods

The most common numerical approach is to discretize ("mesh") the problem space in terms of grids or regular shapes ("cells"), and solve Maxwell's equations simultaneously across all cells. Discretization consumes computer memory, and solving the relevant equations takes significant time. Large-scale CEM problems face memory and CPU limitations, and combating these limitations is an active area of research. High performance clustering, vector processing, and/or parallelism is often required to make the computation practical. Some typical methods involve: time-stepping through the equations over the whole domain for each time instant; banded matrix inversion to calculate the weights of basis functions (when modeled by finite element methods); matrix products (when using transfer matrix methods); calculating numerical integrals (when using the method of moments); using fast Fourier transforms; and time iterations (when calculating by the split-step method or by BPM).

Choice of methods

Choosing the right technique for solving a problem is important, as choosing the wrong one can lead either to incorrect results or to results that take excessively long to compute. However, the name of a technique does not always tell one how it is implemented, especially for commercial tools, which will often have more than one solver.

Davidson gives two tables comparing the FEM, MoM and FDTD techniques in the way they are normally implemented. One table is for open-region problems (radiation and scattering) and the other is for guided-wave problems.

Maxwell's equations in hyperbolic PDE form

Maxwell's equations can be formulated as a hyperbolic system of partial differential equations. This gives access to powerful techniques for numerical solutions.

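The waves are assumed to propagate in the (x,y)-plane, with the magnetic field restricted to be parallel to the z-axis and the electric field therefore parallel to the (x,y)-plane. The wave is called a transverse magnetic (TM) wave. In 2D and with no polarization terms present, Maxwell's equations can then be formulated as:

$$\frac{\partial}{\partial t}\bar{u} + A\frac{\partial}{\partial x}\bar{u} + B\frac{\partial}{\partial y}\bar{u} + C\bar{u} = \bar{g}$$

where $\bar{u}$, $A$, $B$, and $C$ are defined as

$$
\begin{aligned}
\bar{u} &= \begin{pmatrix} E_x \\ E_y \\ H_z \end{pmatrix}, \\[1ex]
A &= \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & \frac{1}{\epsilon} \\ 0 & \frac{1}{\mu} & 0 \end{pmatrix}, \\[1ex]
B &= \begin{pmatrix} 0 & 0 & \frac{-1}{\epsilon} \\ 0 & 0 & 0 \\ \frac{-1}{\mu} & 0 & 0 \end{pmatrix}, \\[1ex]
C &= \begin{pmatrix} \frac{\sigma}{\epsilon} & 0 & 0 \\ 0 & \frac{\sigma}{\epsilon} & 0 \\ 0 & 0 & 0 \end{pmatrix}.
\end{aligned}
$$

In this representation, $\bar{g}$ is the forcing function, and is in the same space as $\bar{u}$. It can be used to express an externally applied field or to describe an optimization constraint. As formulated above, $\bar{g}$ has one component for each of the three field components $E_x$, $E_y$ and $H_z$.

$\bar{g}$ may also be explicitly defined equal to zero to simplify certain problems, or to find a characteristic solution, which is often the first step in a method to find the particular inhomogeneous solution.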
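As a consistency check, the matrix form can be expanded row by row. The following is a sketch that assumes the system reads $\partial_t \bar{u} + A\,\partial_x \bar{u} + B\,\partial_y \bar{u} + C\bar{u} = \bar{g}$ with $\bar{u} = (E_x, E_y, H_z)^T$ and the matrices $A$, $B$, $C$ given for this section; the component names $g_1$, $g_2$, $g_3$ are placeholders introduced here, not notation from the source.

$$
\begin{aligned}
\frac{\partial E_x}{\partial t} - \frac{1}{\epsilon}\frac{\partial H_z}{\partial y} + \frac{\sigma}{\epsilon}E_x &= g_1, \\
\frac{\partial E_y}{\partial t} + \frac{1}{\epsilon}\frac{\partial H_z}{\partial x} + \frac{\sigma}{\epsilon}E_y &= g_2, \\
\frac{\partial H_z}{\partial t} + \frac{1}{\mu}\frac{\partial E_y}{\partial x} - \frac{1}{\mu}\frac{\partial E_x}{\partial y} &= g_3.
\end{aligned}
$$

With the forcing terms set to zero, the first two rows are the in-plane components of Ampère's law with a conduction current $\sigma E$, and the third row is the z-component of Faraday's law.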

Integral equation solvers

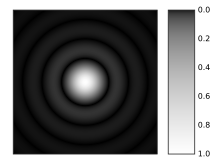

The discrete dipole approximation

The discrete dipole approximation (DDA) is a flexible technique for computing scattering and absorption by targets of arbitrary geometry. The formulation is based on the integral form of Maxwell's equations. The DDA is an approximation of the continuum target by a finite array of polarizable points. The points acquire dipole moments in response to the local electric field. The dipoles interact with one another via their electric fields, so the DDA is also sometimes referred to as the coupled dipole approximation. The resulting linear system of equations is commonly solved using conjugate gradient iterations. The discretization matrix has symmetries (the integral form of Maxwell's equations has the form of a convolution), which allows the fast Fourier transform to be used for the matrix-vector multiplications during the conjugate gradient iterations.

Method of moments and boundary element method

The method of moments (MoM) or boundary element method (BEM) is a numerical computational method of solving linear partial differential equations which have been formulated as integral equations (i.e. in boundary integral form). It can be applied in many areas of engineering and science including fluid mechanics, acoustics, electromagnetics, fracture mechanics, and plasticity.

MoM has become more popular since the 1980s. Because it requires calculating only boundary values, rather than values throughout the space, it is significantly more efficient in terms of computational resources for problems with a small surface/volume ratio. Conceptually, it works by constructing a "mesh" over the modeled surface. However, for many problems, MoM is significantly less computationally efficient than volume-discretization methods (finite element method, finite difference method, finite volume method). Boundary element formulations typically give rise to fully populated matrices. This means that the storage requirements and computational time will tend to grow according to the square of the problem size. By contrast, finite element matrices are typically banded (elements are only locally connected) and the storage requirements for the system matrices typically grow linearly with the problem size. Compression techniques (e.g. multipole expansions or adaptive cross approximation/hierarchical matrices) can be used to ameliorate these problems, though at the cost of added complexity and with a success rate that depends heavily on the nature and geometry of the problem.
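The fully populated matrix can be seen in a textbook-style electrostatic example: a thin straight wire held at constant potential, discretized with pulse basis functions and point matching. The sketch below is an illustration under stated assumptions (thin-wire kernel with the observation point one radius away from the axis, hypothetical function names, a plain Gaussian elimination instead of an optimized solver) and is not meant to represent how production MoM codes are written.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity in F/m


def solve_dense(matrix, rhs):
    """Solve a dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] for row in matrix]
    b = rhs[:]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (b[r] - tail) / a[r][r]
    return x


def wire_charge(length=1.0, radius=1e-3, n_seg=20, potential=1.0):
    """Line-charge density (C/m) per segment and total charge (C) on a thin wire."""
    delta = length / n_seg
    centers = [(m + 0.5) * delta for m in range(n_seg)]
    # Every segment interacts with every other one, so the matrix is dense.
    z = [[0.0] * n_seg for _ in range(n_seg)]
    for m, xm in enumerate(centers):
        for n, xn in enumerate(centers):
            d = xm - xn
            # Closed-form integral of 1/sqrt(u^2 + radius^2) over segment n.
            z[m][n] = (
                math.asinh((d + delta / 2) / radius)
                - math.asinh((d - delta / 2) / radius)
            ) / (4 * math.pi * EPS0)
    density = solve_dense(z, [potential] * n_seg)
    total_charge = sum(rho * delta for rho in density)
    return density, total_charge


density, total_charge = wire_charge()
print("matrix entries stored:", len(density) ** 2)
print("total charge at 1 V (C):", total_charge)
```

Doubling `n_seg` quadruples the number of stored matrix entries, which is the quadratic growth described above. The computed charge density is larger near the wire ends than at the centre, as expected for an isolated charged conductor.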

MoM is applicable to problems for which Green's functions can be calculated. These usually involve fields in linear homogeneous media. This places considerable restrictions on the range and generality of problems suitable for boundary elements. Nonlinearities can be included in the formulation, although they generally introduce volume integrals which require the volume to be discretized before solution, removing an oft-cited advantage of MoM.

Fast multipole method

The fast multipole method (FMM) is an alternative to MoM or Ewald summation. It is an accurate simulation technique and requires less memory and processor power than MoM. The FMM was first introduced by Greengard and Rokhlin and is based on the multipole expansion technique. The first application of the FMM in computational electromagnetics was by Engheta et al. (1992). The FMM also has applications in computational bioelectromagnetics, in the charge-based boundary element fast multipole method. FMM can also be used to accelerate MoM.

Plane wave time-domain

While the fast multipole method is useful for accelerating MoM solutions of integral equations with static or frequency-domain oscillatory kernels, the plane wave time-domain (PWTD) algorithm employs similar ideas to accelerate the MoM solution of time-domain integral equations involving the retarded potential. The PWTD algorithm was introduced in 1998 by Ergin, Shanker, and Michielssen.

Partial element equivalent circuit method

The partial element equivalent circuit (PEEC) is a 3D full-wave modeling method suitable for combined electromagnetic and circuit analysis. Unlike MoM, PEEC is a full spectrum method valid from dc to the maximum frequency determined by the meshing. In the PEEC method, the integral equation is interpreted as Kirchhoff's voltage law applied to a basic PEEC cell which results in a complete circuit solution for 3D geometries. The equivalent circuit formulation allows for additional SPICE type circuit elements to be easily included. Further, the models and the analysis apply to both the time and the frequency domains. The circuit equations resulting from the PEEC model are easily constructed using a modified loop analysis (MLA) or modified nodal analysis (MNA) formulation. Besides providing a direct current solution, it has several other advantages over a MoM analysis for this class of problems since any type of circuit element can be included in a straightforward way with appropriate matrix stamps. The PEEC method has recently been extended to include nonorthogonal geometries. This model extension, which is consistent with the classical orthogonal formulation, includes the Manhattan representation of the geometries in addition to the more general quadrilateral and hexahedral elements. This helps in keeping the number of unknowns at a minimum and thus reduces computational time for nonorthogonal geometries.

Cagniard-deHoop method of moments

The Cagniard-deHoop method of moments (CdH-MoM) is a 3-D full-wave time-domain integral-equation technique that is formulated via the Lorentz reciprocity theorem. Since the CdH-MoM relies heavily on the Cagniard-deHoop method, a joint-transform approach originally developed for the analytical analysis of seismic wave propagation in the crustal model of the Earth, this approach is well suited to the time-domain (TD) electromagnetic (EM) analysis of planarly layered structures. The CdH-MoM was originally applied to time-domain performance studies of cylindrical and planar antennas and, more recently, to the TD EM scattering analysis of transmission lines in the presence of thin sheets and electromagnetic metasurfaces, for example.

Differential equation solvers

Finite-difference frequency-domain

Finite-difference frequency-domain (FDFD) provides a rigorous solution to Maxwell's equations in the frequency domain using the finite-difference method. FDFD is arguably the simplest numerical method that still provides a rigorous solution. It is very versatile and able to solve virtually any problem in electromagnetics. The primary drawback of FDFD is poor efficiency compared to other methods. On modern computers, however, a large array of problems is easily handled, such as calculating guided modes in waveguides, calculating scattering from an object, calculating transmission and reflection from photonic crystals, calculating photonic band diagrams, and simulating metamaterials.

FDFD may be the best "first" method to learn in computational electromagnetics (CEM). It involves almost all the concepts encountered with other methods, but in a much simpler framework. Concepts include boundary conditions, linear algebra, injecting sources, representing devices numerically, and post-processing field data to calculate meaningful quantities. Learning it helps with learning other techniques and provides a way to test and benchmark them.

FDFD is very similar to finite-difference time-domain (FDTD). Both methods represent space as an array of points and enforce Maxwell's equations at each point. FDFD puts this large set of equations into a matrix and solves all the equations simultaneously using linear algebra techniques. In contrast, FDTD continually iterates over these equations to evolve a solution over time. Numerically, FDFD and FDTD are very similar, but their implementations are very different.
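The "one matrix, one solve" character of FDFD can be sketched in one dimension. The example below assumes a lossless, source-free 1D Helmholtz problem, d²E/dx² + k²E = 0, with E fixed to 0 at one end and 1 at the other, and a quarter-wavelength domain so that the simple tridiagonal solver stays well behaved. The function names, the boundary values and the grid size are choices made for this illustration only.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm. lower[i] multiplies x[i-1], upper[i] multiplies x[i+1]."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def fdfd_1d(wavelength=1.0, length=0.25, n_cells=100):
    """Solve E'' + k^2 E = 0 on [0, length] with E(0) = 0 and E(length) = 1."""
    k = 2 * math.pi / wavelength
    h = length / n_cells
    n = n_cells - 1  # number of interior unknowns
    # Central difference: E[i-1] + (k^2 h^2 - 2) E[i] + E[i+1] = 0
    diag = [(k * h) ** 2 - 2.0] * n
    lower = [1.0] * n
    upper = [1.0] * n
    rhs = [0.0] * n
    rhs[-1] = -1.0  # known boundary value E(length) = 1 moved to the right-hand side
    interior = solve_tridiagonal(lower, diag, upper, rhs)
    return [0.0] + interior + [1.0], h, k


field, h, k = fdfd_1d()
exact = [math.sin(k * i * h) / math.sin(k * 0.25) for i in range(len(field))]
print("max abs error:", max(abs(a - b) for a, b in zip(field, exact)))
```

All unknowns are obtained from a single linear solve, in contrast to the time iteration used by FDTD. In two and three dimensions the matrix is sparse rather than tridiagonal and a general sparse solver takes the place of the Thomas algorithm.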

Finite-difference time-domain

Finite-difference time-domain (FDTD) is a popular CEM technique. It is easy to understand and has an exceptionally simple implementation for a full-wave solver: implementing a basic FDTD solver is at least an order of magnitude less work than implementing either an FEM or MoM solver. FDTD is the only technique that one person can realistically implement alone in a reasonable time frame, and even then only for a quite specific problem. Since it is a time-domain method, solutions can cover a wide frequency range with a single simulation run, provided the time step is small enough to satisfy the Nyquist–Shannon sampling theorem for the desired highest frequency.

FDTD belongs in the general class of grid-based differential time-domain numerical modeling methods. Maxwell's equations (in partial differential form) are modified to central-difference equations, discretized, and implemented in software. The equations are solved in a cyclic manner: the electric field is solved at a given instant in time, then the magnetic field is solved at the next instant in time, and the process is repeated over and over again.
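The cyclic update can be sketched in one dimension. The example below is a minimal illustration rather than a reference implementation: it assumes normalized units (the magnetic field is scaled by the free-space impedance so that both updates share the same coefficient), a Courant number of 0.5, perfectly conducting ends, and an additive Gaussian source. The function name and all parameter values are hypothetical.

```python
import math


def run_fdtd_1d(n_cells=400, n_steps=300, courant=0.5,
                src=200, t0=60.0, spread=15.0):
    """Leapfrog update of Ez and (normalized) Hy on a staggered 1D grid."""
    ez = [0.0] * n_cells          # electric field at integer grid points
    hy = [0.0] * (n_cells - 1)    # magnetic field between ez[i] and ez[i+1]
    for n in range(n_steps):
        # Half step: update H from the spatial difference of E.
        for i in range(n_cells - 1):
            hy[i] += courant * (ez[i + 1] - ez[i])
        # Next half step: update E from the spatial difference of H.
        # ez[0] and ez[-1] are never updated, which acts as a PEC wall.
        for i in range(1, n_cells - 1):
            ez[i] += courant * (hy[i] - hy[i - 1])
        # Additive ("soft") Gaussian source at one grid point.
        ez[src] += math.exp(-0.5 * ((n - t0) / spread) ** 2)
    return ez


ez = run_fdtd_1d()
peak = max(range(201, 400), key=lambda i: ez[i])
print("right-going pulse peak at cell", peak, "with value", ez[peak])
```

The source launches two identical pulses that travel away from the source cell at `courant` cells per time step. Because the run stops before either pulse reaches a wall, the boundary treatment does not affect the printed result.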

The basic FDTD algorithm traces back to a seminal 1966 paper by Kane Yee in IEEE Transactions on Antennas and Propagation. Allen Taflove originated the descriptor "Finite-difference time-domain" and its corresponding "FDTD" acronym in a 1980 paper in IEEE Trans. Electromagn. Compat. Since about 1990, FDTD techniques have emerged as the primary means to model many scientific and engineering problems addressing electromagnetic wave interactions with material structures. An effective technique based on a time-domain finite-volume discretization procedure was introduced by Mohammadian et al. in 1991. Current FDTD modeling applications range from near-DC (ultralow-frequency geophysics involving the entire Earth-ionosphere waveguide) through microwaves (radar signature technology, antennas, wireless communications devices, digital interconnects, biomedical imaging/treatment) to visible light (photonic crystals, nanoplasmonics, solitons, and biophotonics). Approximately 30 commercial and university-developed software suites are available.

Discontinuous time-domain method

Among the many time-domain methods, the discontinuous Galerkin time-domain (DGTD) method has become popular recently, since it integrates advantages of both the finite volume time-domain (FVTD) method and the finite element time-domain (FETD) method. Like FVTD, it uses the numerical flux to exchange information between neighboring elements, so all operations of DGTD are local and easily parallelizable. Like FETD, DGTD employs an unstructured mesh and is capable of high-order accuracy if high-order hierarchical basis functions are adopted. With these merits, the DGTD method is widely implemented for the transient analysis of multiscale problems involving a large number of unknowns.

Multiresolution time-domain

Multiresolution time-domain (MRTD) is an adaptive alternative to the finite-difference time-domain method (FDTD) based on wavelet analysis.

Finite element method

The finite element method (FEM) is used to find approximate solutions of partial differential equations (PDE) and integral equations. The solution approach is based either on eliminating the time derivatives completely (steady-state problems), or on rendering the PDE into an equivalent ordinary differential equation, which is then solved using standard techniques such as finite differences.

In solving partial differential equations, the primary challenge is to create an equation which approximates the equation to be studied, but which is numerically stable, meaning that errors in the input data and intermediate calculations do not accumulate and destroy the meaning of the resulting output. There are many ways of doing this, with various advantages and disadvantages. The finite element method is a good choice for solving partial differential equations over complex domains or when the desired precision varies over the entire domain.

Finite integration technique

The finite integration technique (FIT) is a spatial discretization scheme to numerically solve electromagnetic field problems in time and frequency domain. It preserves basic topological properties of the continuous equations such as conservation of charge and energy. FIT was proposed in 1977 by Thomas Weiland and has been enhanced continually over the years. This method covers the full range of electromagnetics (from static up to high frequency) and optic applications and is the basis for commercial simulation tools: CST Studio Suite developed by Computer Simulation Technology (CST AG) and Electromagnetic Simulation solutions developed by Nimbic.

The basic idea of this approach is to apply the Maxwell equations in integral form to a set of staggered grids. This method stands out due to high flexibility in geometric modeling and boundary handling as well as incorporation of arbitrary material distributions and material properties such as anisotropy, non-linearity and dispersion. Furthermore, the use of a consistent dual orthogonal grid (e.g. Cartesian grid) in conjunction with an explicit time integration scheme (e.g. leap-frog-scheme) leads to compute and memory-efficient algorithms, which are especially adapted for transient field analysis in radio frequency (RF) applications.

Pseudo-spectral time domain

This class of marching-in-time computational techniques for Maxwell's equations uses either discrete Fourier or discrete Chebyshev transforms to calculate the spatial derivatives of the electric and magnetic field vector components that are arranged in either a 2-D grid or 3-D lattice of unit cells. PSTD causes negligible numerical phase velocity anisotropy errors relative to FDTD, and therefore allows problems of much greater electrical size to be modeled.
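The Fourier variant of the spatial derivative can be sketched as follows. This is an illustration under assumptions: a periodic 1D sample, a naive O(N²) discrete Fourier transform written out for self-containment (a real code would use an FFT library), and hypothetical function names. A second-order central difference is included only for comparison.

```python
import cmath
import math


def dft(values, sign):
    """Naive discrete Fourier transform; sign=-1 forward, sign=+1 inverse (unscaled)."""
    n = len(values)
    return [
        sum(values[j] * cmath.exp(sign * 2j * math.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]


def spectral_derivative(samples, length):
    """Derivative of a periodic signal via multiplication by i*k in Fourier space."""
    n = len(samples)
    spectrum = dft(samples, -1)
    scaled = []
    for k in range(n):
        m = k if k < n // 2 else k - n      # signed mode number
        if n % 2 == 0 and k == n // 2:
            m = 0                            # drop the ambiguous Nyquist mode
        scaled.append(1j * 2 * math.pi * m / length * spectrum[k])
    return [v.real / n for v in dft(scaled, +1)]


def central_difference(samples, length):
    """Second-order periodic central difference, for comparison."""
    n = len(samples)
    h = length / n
    return [(samples[(j + 1) % n] - samples[j - 1]) / (2 * h) for j in range(n)]


n_pts, length, mode = 32, 1.0, 3
xs = [j * length / n_pts for j in range(n_pts)]
f = [math.sin(2 * math.pi * mode * x / length) for x in xs]
exact = [2 * math.pi * mode / length * math.cos(2 * math.pi * mode * x / length) for x in xs]

err_spec = max(abs(a - b) for a, b in zip(spectral_derivative(f, length), exact))
err_fd = max(abs(a - b) for a, b in zip(central_difference(f, length), exact))
print("spectral error:", err_spec, "central-difference error:", err_fd)
```

For a resolved sinusoid the spectral derivative is accurate to rounding error at about ten points per wavelength, while the central difference carries a visible error. This is the property that lets PSTD use coarser grids for electrically large problems.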

Pseudo-spectral spatial domain

PSSD solves Maxwell's equations by propagating them forward in a chosen spatial direction. The fields are therefore held as a function of time, and (possibly) any transverse spatial dimensions. The method is pseudo-spectral because temporal derivatives are calculated in the frequency domain with the aid of FFTs. Because the fields are held as functions of time, this enables arbitrary dispersion in the propagation medium to be rapidly and accurately modelled with minimal effort. However, the choice to propagate forward in space (rather than in time) brings with it some subtleties, particularly if reflections are important.

Transmission line matrix

Transmission line matrix (TLM) can be formulated in several ways: as a direct set of lumped elements solvable directly by a circuit solver (à la SPICE, HSPICE, et al.), as a custom network of elements, or via a scattering matrix approach. TLM is a very flexible analysis strategy akin to FDTD in capabilities, though more codes tend to be available with FDTD engines.

Locally one-dimensional

The locally one-dimensional (LOD) method is an implicit method. In the two-dimensional case, Maxwell's equations are computed in two steps, whereas in the three-dimensional case they are divided along the three spatial coordinate directions. The stability and dispersion analysis of the three-dimensional LOD-FDTD method has been discussed in detail.

Other methods

Eigenmode expansion

Eigenmode expansion (EME) is a rigorous bi-directional technique to simulate electromagnetic propagation which relies on the decomposition of the electromagnetic fields into a basis set of local eigenmodes. The eigenmodes are found by solving Maxwell's equations in each local cross-section. Eigenmode expansion can solve Maxwell's equations in 2D and 3D and can provide a fully vectorial solution provided that the mode solvers are vectorial. It offers very strong benefits compared with the FDTD method for the modelling of optical waveguides, and it is a popular tool for the modelling of fiber optics and silicon photonics devices.

Physical optics

Physical optics (PO) is the name of a high frequency approximation (short-wavelength approximation) commonly used in optics, electrical engineering and applied physics. It is an intermediate method between geometric optics, which ignores wave effects, and full wave electromagnetism, which is a precise theory. The word "physical" means that it is more physical than geometrical optics and not that it is an exact physical theory.

The approximation consists of using ray optics to estimate the field on a surface and then integrating that field over the surface to calculate the transmitted or scattered field. This resembles the Born approximation, in that the details of the problem are treated as a perturbation.

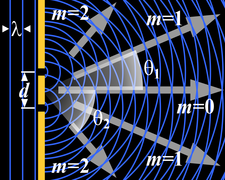

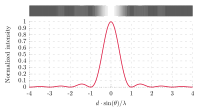

Uniform theory of diffraction

The uniform theory of diffraction (UTD) is a high frequency method for solving electromagnetic scattering problems from electrically small discontinuities or discontinuities in more than one dimension at the same point.

The uniform theory of diffraction approximates near field electromagnetic fields as quasi optical and uses ray diffraction to determine diffraction coefficients for each diffracting object-source combination. These coefficients are then used to calculate the field strength and phase for each direction away from the diffracting point. These fields are then added to the incident fields and reflected fields to obtain a total solution.

Validation

Validation is one of the key issues facing electromagnetic simulation users. The user must understand and master the validity domain of their simulation. The measure is, "how far from reality are the results?"

Answering this question involves three steps: comparison between simulation results and analytical formulation, cross-comparison between codes, and comparison of simulation results with measurement.

Comparison between simulation results and analytical formulation

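For example, assessing the value of the radar cross section of a plate with the analytical formula (valid at normal incidence in the high-frequency limit):

$$\text{RCS}_{\text{plate}} = \frac{4\pi A^{2}}{\lambda^{2}}$$

where $A$ is the surface area of the plate and $\lambda$ is the wavelength.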
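A reference value of this kind is easy to compute. The sketch below assumes the standard high-frequency result for a flat, perfectly conducting plate at normal incidence, RCS = 4πA²/λ², and uses a hypothetical 10 cm × 10 cm plate at 35 GHz; the function names and the plate size are choices made for this illustration.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def plate_rcs(area_m2, frequency_hz):
    """Normal-incidence RCS of a flat plate in m^2, using 4*pi*A^2/lambda^2."""
    wavelength = C0 / frequency_hz
    return 4 * math.pi * area_m2 ** 2 / wavelength ** 2


def to_dbsm(rcs_m2):
    """Convert an RCS in square metres to decibels relative to 1 m^2."""
    return 10 * math.log10(rcs_m2)


# Hypothetical test case: 0.1 m x 0.1 m plate (A = 0.01 m^2) at 35 GHz.
rcs = plate_rcs(0.01, 35e9)
print(rcs, "m^2 =", to_dbsm(rcs), "dBsm")
```

For this plate the formula gives about 17 m², roughly 12 dBsm, which a simulated broadside return can be compared against.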

Cross-comparison between codes

One example is the cross comparison of results from method of moments and asymptotic methods in their validity domains.

Comparison of simulation results with measurement

The final validation step is made by comparison between measurements and simulation. One example is the RCS calculation and measurement of a complex metallic object at 35 GHz, where the computation implements geometrical optics (GO), physical optics (PO) and the physical theory of diffraction (PTD) for the edges.

Validation processes can clearly reveal that some differences can be explained by the differences between the experimental setup and its reproduction in the simulation environment.

Light scattering codes

There are now many efficient codes for solving electromagnetic scattering problems. They are listed as:

- discrete dipole approximation codes,

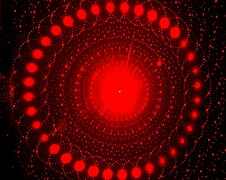

- codes for electromagnetic scattering by cylinders,

- codes for electromagnetic scattering by spheres.

Solutions which are analytical, such as Mie solution for scattering by spheres or cylinders, can be used to validate more involved techniques.

![{\displaystyle {\begin{aligned}{\bar {u}}&=\left({\begin{matrix}E_{x}\\E_{y}\\H_{z}\end{matrix}}\right),\\[1ex]A&=\left({\begin{matrix}0&0&0\\0&0&{\frac {1}{\epsilon }}\\0&{\frac {1}{\mu }}&0\end{matrix}}\right),\\[1ex]B&=\left({\begin{matrix}0&0&{\frac {-1}{\epsilon }}\\0&0&0\\{\frac {-1}{\mu }}&0&0\end{matrix}}\right),\\[1ex]C&=\left({\begin{matrix}{\frac {\sigma }{\epsilon }}&0&0\\0&{\frac {\sigma }{\epsilon }}&0\\0&0&0\end{matrix}}\right).\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/41523398447a19e2ea2e1aedb1bcbb1cf5c93186)
