General relativity is a theory of gravitation that was developed by Albert Einstein between 1907 and 1915. According to general relativity, the observed gravitational effect between masses results from their warping of spacetime.

By the beginning of the 20th century, Newton's law of universal gravitation had been accepted for more than two hundred years as a valid description of the gravitational force between masses. In Newton's model, gravity is the result of an attractive force between massive objects. Although even Newton was troubled by the unknown nature of that force, the basic framework was extremely successful at describing motion.

Experiments and observations show that Einstein's description of gravitation accounts for several effects that are unexplained by Newton's law, such as minute anomalies in the orbits of Mercury and other planets. General relativity also predicts novel effects of gravity, such as gravitational waves, gravitational lensing and an effect of gravity on time known as gravitational time dilation. Many of these predictions have been confirmed by experiment or observation, most recently gravitational waves.

General relativity has developed into an essential tool in modern astrophysics. It provides the foundation for the current understanding of black holes, regions of space where the gravitational effect is strong enough that even light cannot escape. Their strong gravity is thought to be responsible for the intense radiation emitted by certain types of astronomical objects (such as active galactic nuclei or microquasars). General relativity is also part of the framework of the standard Big Bang model of cosmology.

Although general relativity is not the only relativistic theory of gravity, it is the simplest such theory that is consistent with the experimental data. Nevertheless, a number of open questions remain, the most fundamental of which is how general relativity can be reconciled with the laws of quantum physics to produce a complete and self-consistent theory of quantum gravity.

From special to general relativity

In September 1905, Albert Einstein published his theory of special relativity, which reconciles Newton's laws of motion with electrodynamics (the interaction between objects with electric charge). Special relativity introduced a new framework for all of physics by proposing new concepts of space and time. Some then-accepted physical theories were inconsistent with that framework; a key example was Newton's theory of gravity, which describes the mutual attraction experienced by bodies due to their mass.

Several physicists, including Einstein, searched for a theory that would reconcile Newton's law of gravity and special relativity. Only Einstein's theory proved to be consistent with experiments and observations. To understand the theory's basic ideas, it is instructive to follow Einstein's thinking between 1907 and 1915, from his simple thought experiment involving an observer in free fall to his fully geometric theory of gravity.[1]

Equivalence principle

A person in a free-falling elevator experiences weightlessness; objects either float motionless or drift at constant speed. Since everything in the elevator is falling together, no gravitational effect can be observed. In this way, the experiences of an observer in free fall are indistinguishable from those of an observer in deep space, far from any significant source of gravity. Such observers are the privileged ("inertial") observers Einstein described in his theory of special relativity: observers for whom light travels along straight lines at constant speed.[2]

Einstein hypothesized that the similar experiences of weightless observers and inertial observers in special relativity represented a fundamental property of gravity, and he made this the cornerstone of his theory of general relativity, formalized in his equivalence principle. Roughly speaking, the principle states that a person in a free-falling elevator cannot tell that they are in free fall. Every experiment in such a free-falling environment has the same results as it would for an observer at rest or moving uniformly in deep space, far from all sources of gravity.[3]

Gravity and acceleration

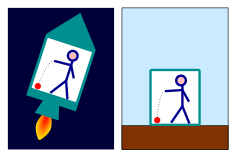

Ball falling to the floor in an accelerating rocket (left) and on Earth (right). The effect is identical.

Most effects of gravity vanish in free fall, but effects that seem the same as those of gravity can be produced by an accelerated frame of reference. An observer in a closed room cannot tell which of the following is true:

- Objects are falling to the floor because the room is resting on the surface of the Earth and the objects are being pulled down by gravity.

- Objects are falling to the floor because the room is aboard a rocket in space, which is accelerating at 9.81 m/s² and is far from any source of gravity. The objects are being pulled towards the floor by the same "inertial force" that presses the driver of an accelerating car into the back of their seat.

An observer in an accelerated reference frame must introduce what physicists call fictitious forces to account for the acceleration experienced by himself and objects around him. One example, the force pressing the driver of an accelerating car into his or her seat, has already been mentioned; another is the force you can feel pulling your arms up and out if you attempt to spin around like a top. Einstein's master insight was that the constant, familiar pull of the Earth's gravitational field is fundamentally the same as these fictitious forces.[4] The apparent magnitude of the fictitious forces always appears to be proportional to the mass of any object on which they act – for instance, the driver's seat exerts just enough force to accelerate the driver at the same rate as the car. By analogy, Einstein proposed that an object in a gravitational field should feel a gravitational force proportional to its mass, as embodied in Newton's law of gravitation.[5]

Physical consequences

In 1907, Einstein was still eight years away from completing the general theory of relativity. Nonetheless, he was able to make a number of novel, testable predictions that were based on his starting point for developing his new theory: the equivalence principle.[6]

The gravitational redshift of a light wave as it moves upwards against a gravitational field (caused by the yellow star below).

The first new effect is the gravitational frequency shift of light. Consider two observers aboard an accelerating rocket-ship. Aboard such a ship, there is a natural concept of "up" and "down": the direction in which the ship accelerates is "up", and unattached objects accelerate in the opposite direction, falling "downward". Assume that one of the observers is "higher up" than the other. When the lower observer sends a light signal to the higher observer, the acceleration causes the light to be red-shifted, as may be calculated from special relativity; the second observer will measure a lower frequency for the light than the first. Conversely, light sent from the higher observer to the lower is blue-shifted, that is, shifted towards higher frequencies.[7] Einstein argued that such frequency shifts must also be observed in a gravitational field. This is illustrated in the figure at left, which shows a light wave that is gradually red-shifted as it works its way upwards against the gravitational acceleration. This effect has been confirmed experimentally, as described below.

This gravitational frequency shift corresponds to a gravitational time dilation: Since the "higher" observer measures the same light wave to have a lower frequency than the "lower" observer, time must be passing faster for the higher observer. Thus, time runs more slowly for observers who are lower in a gravitational field.

It is important to stress that, for each observer, there are no observable changes of the flow of time for events or processes that are at rest in his or her reference frame. Five-minute-eggs as timed by each observer's clock have the same consistency; as one year passes on each clock, each observer ages by that amount; each clock, in short, is in perfect agreement with all processes happening in its immediate vicinity. It is only when the clocks are compared between separate observers that one can notice that time runs more slowly for the lower observer than for the higher.[8] This effect is minute, but it too has been confirmed experimentally in multiple experiments, as described below.
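The size of this effect can be estimated with a short calculation. The sketch below uses the standard weak-field approximation, in which the fractional frequency shift between two observers separated by a height h in a uniform field g is roughly gh/c². Both this formula and the 22.5-metre example height are assumptions introduced here for illustration; they are not taken from the text above.

```python
# Weak-field estimate of gravitational redshift and time dilation.
# Assumption: a uniform field g and the approximation shift ~ g*h/c^2.

C = 299_792_458.0          # speed of light in m/s
SECONDS_PER_DAY = 86_400.0


def fractional_frequency_shift(height_m, g=9.81):
    """Approximate fractional frequency change of light climbing height_m."""
    return g * height_m / C**2


def clock_gain_per_day(height_m, g=9.81):
    """Seconds per day by which the higher clock runs ahead of the lower one."""
    return fractional_frequency_shift(height_m, g) * SECONDS_PER_DAY


if __name__ == "__main__":
    height = 22.5  # illustrative height in metres (hypothetical example value)
    print(f"fractional shift:   {fractional_frequency_shift(height):.3e}")
    print(f"clock gain per day: {clock_gain_per_day(height):.3e} s")
```

For a height of a few tens of metres the fractional shift is of order 10⁻¹⁵, which illustrates why the effect is described as minute.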

In a similar way, Einstein predicted the gravitational deflection of light: in a gravitational field, light is deflected downward. Quantitatively, his results were off by a factor of two; the correct derivation requires a more complete formulation of the theory of general relativity, not just the equivalence principle.[9]

Tidal effects

Two bodies falling towards the center of the Earth accelerate towards each other as they fall.

The equivalence between gravitational and inertial effects does not constitute a complete theory of gravity. When it comes to explaining gravity near our own location on the Earth's surface, noting that our reference frame is not in free fall, so that fictitious forces are to be expected, provides a suitable explanation. But a freely falling reference frame on one side of the Earth cannot explain why the people on the opposite side of the Earth experience a gravitational pull in the opposite direction.

A more basic manifestation of the same effect involves two bodies that are falling side by side towards the Earth. In a reference frame that is in free fall alongside these bodies, they appear to hover weightlessly – but not exactly so. These bodies are not falling in precisely the same direction, but towards a single point in space: namely, the Earth's center of gravity. Consequently, there is a component of each body's motion towards the other (see the figure). In a small environment such as a freely falling lift, this relative acceleration is minuscule, while for skydivers on opposite sides of the Earth, the effect is large. Such differences in force are also responsible for the tides in the Earth's oceans, so the term "tidal effect" is used for this phenomenon.
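The difference between the two cases can be made quantitative. As a rough sketch, assume two bodies at the same distance r from the Earth's center, separated by a straight-line distance d, each accelerating towards the center at the same rate g. The components of their accelerations along the line joining them then add up to a relative acceleration of g·d/r. The function name and the sample values (a 2-metre-wide lift, a mean Earth radius of 6,371 km) are illustrative assumptions.

```python
# Relative ("tidal") acceleration of two bodies falling side by side.
# Assumption: both bodies sit at the same distance from the Earth's center
# and both fall towards it with the same acceleration g.

EARTH_RADIUS_M = 6.371e6  # mean Earth radius in metres
G_SURFACE = 9.81          # gravitational acceleration at the surface, m/s^2


def relative_acceleration(separation_m, radius_m=EARTH_RADIUS_M, g=G_SURFACE):
    """Rate (m/s^2) at which the two bodies accelerate towards each other."""
    if separation_m < 0 or separation_m > 2 * radius_m:
        raise ValueError("separation must lie between 0 and 2 * radius")
    return g * separation_m / radius_m


if __name__ == "__main__":
    print(f"2 m wide lift:       {relative_acceleration(2.0):.2e} m/s^2")
    antipodal = relative_acceleration(2 * EARTH_RADIUS_M)
    print(f"opposite sides:      {antipodal:.2f} m/s^2")
```

Under these assumptions the relative acceleration inside the lift is a few millionths of a metre per second squared, whereas for bodies on opposite sides of the Earth it is twice g.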

The equivalence between inertia and gravity cannot explain tidal effects – it cannot explain variations in the gravitational field.[10] For that, a theory is needed which describes the way that matter (such as the large mass of the Earth) affects the inertial environment around it.

From acceleration to geometry

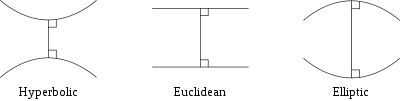

In exploring the equivalence of gravity and acceleration as well as the role of tidal forces, Einstein discovered several analogies with the geometry of surfaces. An example is the transition from an inertial reference frame (in which free particles coast along straight paths at constant speeds) to a rotating reference frame (in which extra terms corresponding to fictitious forces have to be introduced in order to explain particle motion): this is analogous to the transition from a Cartesian coordinate system (in which the coordinate lines are straight lines) to a curved coordinate system (where coordinate lines need not be straight).

A deeper analogy relates tidal forces with a property of surfaces called curvature. For gravitational fields, the absence or presence of tidal forces determines whether or not the influence of gravity can be eliminated by choosing a freely falling reference frame. Similarly, the absence or presence of curvature determines whether or not a surface is equivalent to a plane. In the summer of 1912, inspired by these analogies, Einstein searched for a geometric formulation of gravity.[11]

The elementary objects of geometry – points, lines, triangles – are traditionally defined in three-dimensional space or on two-dimensional surfaces. In 1907, Hermann Minkowski, Einstein's former mathematics professor at the Swiss Federal Polytechnic, introduced a geometric formulation of Einstein's special theory of relativity where the geometry included not only space but also time. The basic entity of this new geometry is four-dimensional spacetime. The orbits of moving bodies are curves in spacetime; the orbits of bodies moving at constant speed without changing direction correspond to straight lines.[12]

For surfaces, the generalization from the geometry of a plane – a flat surface – to that of a general curved surface had been described in the early 19th century by Carl Friedrich Gauss. This description had in turn been generalized to higher-dimensional spaces in a mathematical formalism introduced by Bernhard Riemann in the 1850s. With the help of Riemannian geometry, Einstein formulated a geometric description of gravity in which Minkowski's spacetime is replaced by distorted, curved spacetime, just as curved surfaces are a generalization of ordinary plane surfaces. Embedding diagrams are used to illustrate curved spacetime in educational contexts.[13][14]

After he had realized the validity of this geometric analogy, it took Einstein a further three years to find the missing cornerstone of his theory: the equations describing how matter influences spacetime's curvature. Having formulated what are now known as Einstein's equations (or, more precisely, his field equations of gravity), he presented his new theory of gravity at several sessions of the Prussian Academy of Sciences in late 1915, culminating in his final presentation on November 25, 1915.[15]

Geometry and gravitation

Paraphrasing John Wheeler, Einstein's geometric theory of gravity can be summarized thus: spacetime tells matter how to move; matter tells spacetime how to curve.[16] What this means is addressed in the following three sections, which explore the motion of so-called test particles, examine which properties of matter serve as a source for gravity, and, finally, introduce Einstein's equations, which relate these matter properties to the curvature of spacetime.

Probing the gravitational field

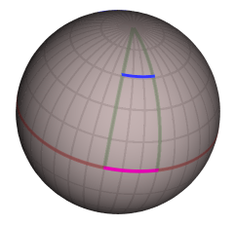

Converging geodesics: two lines of longitude (green) that start out in parallel at the equator (red) but converge to meet at the pole.

In order to map a body's gravitational influence, it is useful to think about what physicists call probe or test particles: particles that are influenced by gravity, but are so small and light that we can neglect their own gravitational effect. In the absence of gravity and other external forces, a test particle moves along a straight line at a constant speed. In the language of spacetime, this is equivalent to saying that such test particles move along straight world lines in spacetime. In the presence of gravity, spacetime is non-Euclidean, or curved, and in curved spacetime straight world lines may not exist. Instead, test particles move along lines called geodesics, which are "as straight as possible", that is, they follow the shortest path between starting and ending points, taking the curvature into consideration.

A simple analogy is the following: In geodesy, the science of measuring Earth's size and shape, a geodesic (from Greek "geo", Earth, and "daiein", to divide) is the shortest route between two points on the Earth's surface. Approximately, such a route is a segment of a great circle, such as a line of longitude or the equator. These paths are certainly not straight, simply because they must follow the curvature of the Earth's surface. But they are as straight as is possible subject to this constraint.

The properties of geodesics differ from those of straight lines. For example, on a plane, parallel lines never meet, but this is not so for geodesics on the surface of the Earth: for example, lines of longitude are parallel at the equator, but intersect at the poles. Analogously, the world lines of test particles in free fall are spacetime geodesics, the straightest possible lines in spacetime. But still there are crucial differences between them and the truly straight lines that can be traced out in the gravity-free spacetime of special relativity. In special relativity, parallel geodesics remain parallel. In a gravitational field with tidal effects, this will not, in general, be the case. If, for example, two bodies are initially at rest relative to each other, but are then dropped in the Earth's gravitational field, they will move towards each other as they fall towards the Earth's center.[17]

Compared with planets and other astronomical bodies, the objects of everyday life (people, cars, houses, even mountains) have little mass. Where such objects are concerned, the laws governing the behavior of test particles are sufficient to describe what happens. Notably, in order to deflect a test particle from its geodesic path, an external force must be applied. A chair someone is sitting on applies an external upwards force preventing the person from falling freely towards the center of the Earth and thus following a geodesic, which they would otherwise be doing without matter in between them and the center of the Earth. In this way, general relativity explains the daily experience of gravity on the surface of the Earth not as the downwards pull of a gravitational force, but as the upwards push of external forces. These forces deflect all bodies resting on the Earth's surface from the geodesics they would otherwise follow.[18] For matter objects whose own gravitational influence cannot be neglected, the laws of motion are somewhat more complicated than for test particles, although it remains true that spacetime tells matter how to move.[19]

Sources of gravity

In Newton's description of gravity, the gravitational force is caused by matter. More precisely, it is caused by a specific property of material objects: their mass. In Einstein's theory and related theories of gravitation, curvature at every point in spacetime is also caused by whatever matter is present. Here, too, mass is a key property in determining the gravitational influence of matter. But in a relativistic theory of gravity, mass cannot be the only source of gravity. Relativity links mass with energy, and energy with momentum.

The equivalence between mass and energy, as expressed by the formula E = mc², is the most famous consequence of special relativity. In relativity, mass and energy are two different ways of describing one physical quantity. If a physical system has energy, it also has the corresponding mass, and vice versa. In particular, all properties of a body that are associated with energy, such as its temperature or the binding energy of systems such as nuclei or molecules, contribute to that body's mass, and hence act as sources of gravity.[20]

In special relativity, energy is closely connected to momentum. Just as space and time are, in that theory, different aspects of a more comprehensive entity called spacetime, energy and momentum are merely different aspects of a unified, four-dimensional quantity that physicists call four-momentum. In consequence, if energy is a source of gravity, momentum must be a source as well. The same is true for quantities that are directly related to energy and momentum, namely internal pressure and tension. Taken together, in general relativity it is mass, energy, momentum, pressure and tension that serve as sources of gravity: they are how matter tells spacetime how to curve. In the theory's mathematical formulation, all these quantities are but aspects of a more general physical quantity called the energy–momentum tensor.[21]

Einstein's equations

Einstein's equations are the centerpiece of general relativity. They provide a precise formulation of the relationship between spacetime geometry and the properties of matter, using the language of mathematics. More concretely, they are formulated using the concepts of Riemannian geometry, in which the geometric properties of a space (or a spacetime) are described by a quantity called a metric. The metric encodes the information needed to compute the fundamental geometric notions of distance and angle in a curved space (or spacetime).

Distances, at different latitudes, corresponding to 30 degrees difference in longitude.

A spherical surface like that of the Earth provides a simple example. The location of any point on the surface can be described by two coordinates: the geographic latitude and longitude. Unlike the Cartesian coordinates of the plane, coordinate differences are not the same as distances on the surface, as shown in the diagram on the right: for someone at the equator, moving 30 degrees of longitude westward (magenta line) corresponds to a distance of roughly 3,300 kilometers (2,100 mi). On the other hand, someone at a latitude of 55 degrees, moving 30 degrees of longitude westward (blue line) covers a distance of merely 1,900 kilometers (1,200 mi). Coordinates therefore do not provide enough information to describe the geometry of a spherical surface, or indeed the geometry of any more complicated space or spacetime. That information is precisely what is encoded in the metric, which is a function defined at each point of the surface (or space, or spacetime) and relates coordinate differences to differences in distance. All other quantities that are of interest in geometry, such as the length of any given curve, or the angle at which two curves meet, can be computed from this metric function.[22]
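The two distances quoted above can be reproduced from the metric of a sphere. As a sketch, assume a perfectly spherical Earth of radius R = 6,371 km (an idealization), for which the line element is ds² = R²(dφ² + cos²φ dλ²), with latitude φ and longitude λ measured in radians. The function names are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; the Earth is treated as a sphere


def east_west_distance_km(latitude_deg, delta_longitude_deg,
                          radius_km=EARTH_RADIUS_KM):
    """Length of a path along a circle of constant latitude."""
    lat = math.radians(latitude_deg)
    dlon = math.radians(delta_longitude_deg)
    return radius_km * math.cos(lat) * abs(dlon)


def line_element_km(latitude_deg, dlat_deg, dlon_deg,
                    radius_km=EARTH_RADIUS_KM):
    """Distance for a small coordinate step (dlat, dlon), from the metric
    ds^2 = R^2 (dlat^2 + cos(lat)^2 dlon^2)."""
    lat = math.radians(latitude_deg)
    dlat = math.radians(dlat_deg)
    dlon = math.radians(dlon_deg)
    return radius_km * math.sqrt(dlat**2 + (math.cos(lat) * dlon)**2)


if __name__ == "__main__":
    for latitude in (0.0, 55.0):
        distance = east_west_distance_km(latitude, 30.0)
        print(f"30 degrees of longitude at latitude {latitude:4.1f}: "
              f"{distance:7.1f} km")
```

The same coordinate difference of 30 degrees corresponds to different distances because the factor cos²φ in the metric depends on where the step is taken.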

The metric function and its rate of change from point to point can be used to define a geometrical quantity called the Riemann curvature tensor, which describes exactly how the space or spacetime is curved at each point. In general relativity, the metric and the Riemann curvature tensor are quantities defined at each point in spacetime. As has already been mentioned, the matter content of the spacetime defines another quantity, the energy–momentum tensor T, and the principle that "spacetime tells matter how to move, and matter tells spacetime how to curve" means that these quantities must be related to each other. Einstein formulated this relation by using the Riemann curvature tensor and the metric to define another geometrical quantity G, now called the Einstein tensor, which describes some aspects of the way spacetime is curved. Einstein's equation then states that

$$\mathbf{G} = \frac{8\pi G}{c^4}\,\mathbf{T}$$

Here the bold symbols $\mathbf{G}$ and $\mathbf{T}$ are the Einstein tensor and the energy–momentum tensor, while the constant $G$ is Newton's gravitational constant and $c$ is the speed of light. Up to a constant factor, the quantity that measures curvature is thus equated with the quantity that measures matter content.

This equation is often referred to in the plural as Einstein's equations, since the quantities G and T are each determined by several functions of the coordinates of spacetime, and the equations equate each of these component functions.[23] A solution of these equations describes a particular geometry of spacetime; for example, the Schwarzschild solution describes the geometry around a spherical, non-rotating mass such as a star or a black hole, whereas the Kerr solution describes a rotating black hole. Still other solutions can describe a gravitational wave or, in the case of the Friedmann–Lemaître–Robertson–Walker solution, an expanding universe. The simplest solution is the uncurved Minkowski spacetime, the spacetime described by special relativity.[24]

Experiments

No scientific theory is apodictically true; each is a model that must be checked by experiment. Newton's law of gravity was accepted because it accounted for the motion of planets and moons in the Solar System with considerable accuracy. As the precision of experimental measurements gradually improved, some discrepancies with Newton's predictions were observed, and these were accounted for in the general theory of relativity. Similarly, the predictions of general relativity must also be checked with experiment, and Einstein himself devised three tests now known as the classical tests of the theory:

Newtonian (red) vs. Einsteinian orbit (blue) of a single planet orbiting a spherical star.

- Newtonian gravity predicts that the orbit which a single planet traces around a perfectly spherical star should be an ellipse. Einstein's theory predicts a more complicated curve: the planet behaves as if it were travelling around an ellipse, but at the same time, the ellipse as a whole is rotating slowly around the star. In the diagram on the right, the ellipse predicted by Newtonian gravity is shown in red, and part of the orbit predicted by Einstein in blue. For a planet orbiting the Sun, this deviation from Newton's orbits is known as the anomalous perihelion shift. The first measurement of this effect, for the planet Mercury, dates back to 1859. The most accurate results for Mercury and for other planets to date are based on measurements which were undertaken between 1966 and 1990, using radio telescopes.[25] General relativity predicts the correct anomalous perihelion shift for all planets where this can be measured accurately (Mercury, Venus and the Earth).

- According to general relativity, light does not travel along straight lines when it propagates in a gravitational field. Instead, it is deflected in the presence of massive bodies. In particular, starlight is deflected as it passes near the Sun, leading to apparent shifts of up to 1.75 arc seconds in the stars' positions in the sky (an arc second is equal to 1/3600 of a degree). In the framework of Newtonian gravity, a heuristic argument can be made that leads to light deflection by half that amount. The different predictions can be tested by observing stars that are close to the Sun during a solar eclipse. In this way, a British expedition to West Africa, directed by Arthur Eddington, observed the May 1919 eclipse and confirmed that Einstein's prediction was correct and the Newtonian prediction wrong. Eddington's results were not very accurate; subsequent observations of the deflection of the light of distant quasars by the Sun, which utilize highly accurate techniques of radio astronomy, have confirmed Eddington's results with significantly better precision (the first such measurements date from 1967, the most recent comprehensive analysis from 2004).[26]

- Gravitational redshift was first measured in a laboratory setting in 1959 by Pound and Rebka. It is also seen in astrophysical measurements, notably for light escaping the white dwarf Sirius B. The related gravitational time dilation effect has been measured by transporting atomic clocks to altitudes of between tens and tens of thousands of kilometers (first by Hafele and Keating in 1971; most accurately to date by Gravity Probe A launched in 1976).[27]
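The magnitudes involved in the first two tests can be estimated from standard closed-form results of general relativity, which are quoted here as assumptions rather than derived: a perihelion advance of 6πGM/(c²a(1−e²)) radians per orbit for an orbit with semi-major axis a and eccentricity e, and a deflection of 4GM/(c²b) radians for light passing a mass M at closest distance b. The orbital elements for Mercury and the solar constants below are rounded reference values, and the function names are illustrative.

```python
import math

C = 299_792_458.0           # speed of light, m/s
GM_SUN = 1.32712440018e20   # gravitational parameter of the Sun, m^3/s^2
SUN_RADIUS_M = 6.957e8      # nominal solar radius, m
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi
DAYS_PER_CENTURY = 36525.0


def perihelion_advance_per_orbit(semi_major_axis_m, eccentricity, gm=GM_SUN):
    """Relativistic perihelion advance in radians per orbit."""
    return 6.0 * math.pi * gm / (
        C**2 * semi_major_axis_m * (1.0 - eccentricity**2))


def perihelion_advance_arcsec_per_century(semi_major_axis_m, eccentricity,
                                          period_days, gm=GM_SUN):
    """Perihelion advance accumulated over one Julian century."""
    orbits = DAYS_PER_CENTURY / period_days
    per_orbit = perihelion_advance_per_orbit(semi_major_axis_m, eccentricity, gm)
    return per_orbit * orbits * ARCSEC_PER_RAD


def light_deflection_arcsec(closest_distance_m, gm=GM_SUN):
    """Deflection angle of a light ray passing at closest_distance_m."""
    return 4.0 * gm / (C**2 * closest_distance_m) * ARCSEC_PER_RAD


if __name__ == "__main__":
    # Rounded orbital elements for Mercury (assumed reference values).
    mercury = perihelion_advance_arcsec_per_century(5.7909e10, 0.2056, 87.969)
    grazing = light_deflection_arcsec(SUN_RADIUS_M)
    print(f"Mercury perihelion advance: {mercury:.1f} arcsec per century")
    print(f"Deflection at the solar limb: {grazing:.2f} arcsec")
    print(f"Newtonian heuristic value:    {grazing / 2:.2f} arcsec")
```

For a ray grazing the Sun this gives about 1.75 arc seconds, matching the figure quoted above; halving it gives the value from the Newtonian heuristic argument.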

Gravity Probe B with solar panels folded.

Further tests of general relativity include precision measurements of the Shapiro effect or gravitational time delay for light, most recently in 2002 by the Cassini space probe. One set of tests focuses on effects predicted by general relativity for the behavior of gyroscopes travelling through space. One of these effects, geodetic precession, has been tested with the Lunar Laser Ranging Experiment (high-precision measurements of the orbit of the Moon). Another, which is related to rotating masses, is called frame-dragging. The geodetic and frame-dragging effects were both tested by the Gravity Probe B satellite experiment launched in 2004, with results confirming relativity to within 0.5% and 15%, respectively, as of December 2008.[29]

By cosmic standards, gravity throughout the solar system is weak. Since the differences between the predictions of Einstein's and Newton's theories are most pronounced when gravity is strong, physicists have long been interested in testing various relativistic effects in a setting with comparatively strong gravitational fields. This has become possible thanks to precision observations of binary pulsars. In such a star system, two highly compact neutron stars orbit each other. At least one of them is a pulsar – an astronomical object that emits a tight beam of radio waves. These beams sweep past the Earth at very regular intervals, much as the rotating beam of a lighthouse makes the lighthouse appear to blink, and can be observed as a highly regular series of pulses. General relativity predicts specific deviations from the regularity of these radio pulses. For instance, at times when the radio waves pass close to the other neutron star, they should be deflected by the star's gravitational field. The observed pulse patterns are impressively close to those predicted by general relativity.[30]

One particular set of observations is related to eminently useful practical applications, namely satellite navigation systems such as the Global Positioning System, which are used for both precise positioning and timekeeping. Such systems rely on two sets of atomic clocks: clocks aboard satellites orbiting the Earth, and reference clocks stationed on the Earth's surface. General relativity predicts that these two sets of clocks should tick at slightly different rates, due to their different motions (an effect already predicted by special relativity) and their different positions within the Earth's gravitational field. In order to ensure the system's accuracy, the satellite clocks are either slowed down by a relativistic factor, or that same factor is made part of the evaluation algorithm. In turn, tests of the system's accuracy (especially the very thorough measurements that are part of the definition of Coordinated Universal Time) are testament to the validity of the relativistic predictions.[31]
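The two contributions can be estimated separately. The sketch below is a simplified weak-field calculation under stated assumptions: a circular orbit of radius 26,560 km (a rounded value assumed here for GPS satellites), a spherical, non-rotating Earth, and a ground clock at rest on the surface. Real systems apply further corrections that are ignored here, and all names are illustrative.

```python
# Rough estimate of the rate difference between a satellite clock and a
# ground clock. Assumptions: circular orbit, spherical non-rotating Earth.

C = 299_792_458.0            # speed of light, m/s
GM_EARTH = 3.986004418e14    # gravitational parameter of the Earth, m^3/s^2
EARTH_RADIUS_M = 6.371e6     # mean Earth radius, m
GPS_ORBIT_RADIUS_M = 2.656e7 # assumed orbital radius, m
SECONDS_PER_DAY = 86_400.0


def gravitational_rate_offset(orbit_radius_m, ground_radius_m=EARTH_RADIUS_M):
    """Fractional speed-up of the satellite clock from sitting higher in
    the Earth's gravitational field."""
    return GM_EARTH / C**2 * (1.0 / ground_radius_m - 1.0 / orbit_radius_m)


def velocity_rate_offset(orbit_radius_m):
    """Fractional slow-down from orbital motion, using v^2 = GM/r for a
    circular orbit and the low-speed approximation -v^2 / (2 c^2)."""
    return -GM_EARTH / (2.0 * orbit_radius_m * C**2)


def net_offset_microseconds_per_day(orbit_radius_m=GPS_ORBIT_RADIUS_M):
    """Net amount by which the satellite clock runs ahead, per day."""
    net = (gravitational_rate_offset(orbit_radius_m)
           + velocity_rate_offset(orbit_radius_m))
    return net * SECONDS_PER_DAY * 1e6


if __name__ == "__main__":
    print(f"net offset: {net_offset_microseconds_per_day():.1f} microseconds/day")
```

Under these assumptions the gravitational term outweighs the velocity term, so the satellite clocks run fast by a few tens of microseconds per day. This is consistent with the statement above that they must be slowed down.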

A number of other tests have probed the validity of various versions of the equivalence principle; strictly speaking, all measurements of gravitational time dilation are tests of the weak version of that principle, not of general relativity itself. So far, general relativity has passed all observational tests.[32]

Astrophysical applications

Models based on general relativity play an important role in astrophysics; the success of these models is further testament to the theory's validity.

Gravitational lensing

Einstein cross: four images of the same astronomical object, produced by a gravitational lens.

Since light is deflected in a gravitational field, it is possible for the light of a distant object to reach an observer along two or more paths. For instance, light of a very distant object such as a quasar can pass along one side of a massive galaxy and be deflected slightly so as to reach an observer on Earth, while light passing along the opposite side of that same galaxy is deflected as well, reaching the same observer from a slightly different direction. As a result, that particular observer will see one astronomical object in two different places in the night sky. This kind of focussing is well-known when it comes to optical lenses, and hence the corresponding gravitational effect is called gravitational lensing.[33]

Observational astronomy uses lensing effects as an important tool to infer properties of the lensing object. Even in cases where that object is not directly visible, the shape of a lensed image provides information about the mass distribution responsible for the light deflection. In particular, gravitational lensing provides one way to measure the distribution of dark matter, which does not give off light and can be observed only by its gravitational effects. One particularly interesting application involves large-scale observations, in which the lensing masses are spread out over a significant fraction of the observable universe; these can be used to obtain information about the large-scale properties and evolution of our cosmos.[34]

Gravitational waves

Gravitational waves, a direct consequence of Einstein's theory, are distortions of geometry that propagate at the speed of light, and can be thought of as ripples in spacetime. They should not be confused with the gravity waves of fluid dynamics, which are a different concept.

In February 2016, the Advanced LIGO team announced that they had directly observed gravitational waves from a black hole merger.[35]

Indirectly, the effect of gravitational waves had been detected in observations of specific binary stars. Such pairs of stars orbit each other and, as they do so, gradually lose energy by emitting gravitational waves. For ordinary stars like the Sun, this energy loss would be too small to be detectable, but this energy loss was observed in 1974 in a binary pulsar called PSR1913+16. In such a system, one of the orbiting stars is a pulsar. This has two consequences: a pulsar is an extremely dense object known as a neutron star, for which gravitational wave emission is much stronger than for ordinary stars. Also, a pulsar emits a narrow beam of electromagnetic radiation from its magnetic poles. As the pulsar rotates, its beam sweeps over the Earth, where it is seen as a regular series of radio pulses, just as a ship at sea observes regular flashes of light from the rotating light in a lighthouse. This regular pattern of radio pulses functions as a highly accurate "clock". It can be used to time the double star's orbital period, and it reacts sensitively to distortions of spacetime in its immediate neighborhood.

The discoverers of PSR1913+16, Russell Hulse and Joseph Taylor, were awarded the Nobel Prize in Physics in 1993. Since then, several other binary pulsars have been found. The most useful are those in which both stars are pulsars, since they provide accurate tests of general relativity.[36]

A number of land-based gravitational wave detectors are in operation, and a space-based detector, LISA, is under development; its precursor mission, LISA Pathfinder, was launched in 2015. Gravitational wave observations can be used to obtain information about compact objects such as neutron stars and black holes, and also to probe the state of the early universe fractions of a second after the Big Bang.[37]

Black holes

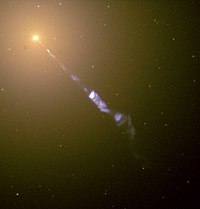

Black hole-powered jet emanating from the central region of the galaxy M87.

When mass is concentrated into a sufficiently compact region of space, general relativity predicts the formation of a black hole – a region of space with a gravitational effect so strong that not even light can escape. Certain types of black holes are thought to be the final state in the evolution of massive stars. On the other hand, supermassive black holes with the mass of millions or billions of Suns are assumed to reside in the cores of most galaxies, and they play a key role in current models of how galaxies have formed over the past billions of years.[38]
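How compact is "sufficiently compact"? For the simplest case of a non-rotating, uncharged mass, the relevant scale is the Schwarzschild radius, r_s = 2GM/c². The sketch below evaluates it for two familiar masses. The constants and the function name are assumptions introduced for illustration and do not appear in the text above.

```python
# Assumed physical constants in SI units (not taken from the article text).
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.98847e30     # mass of the Sun, kg
M_EARTH = 5.9722e24    # mass of the Earth, kg


def schwarzschild_radius(mass_kg):
    """Radius (in metres) within which a non-rotating mass forms a black hole."""
    return 2 * G * mass_kg / C**2


print(f"Sun:   {schwarzschild_radius(M_SUN) / 1e3:.2f} km")
print(f"Earth: {schwarzschild_radius(M_EARTH) * 1e3:.2f} mm")
```

The Sun would have to be squeezed into a sphere about three kilometres in radius, and the Earth into one of a little under a centimetre, which illustrates how extreme the required concentration of mass is.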

Matter falling onto a compact object is one of the most efficient mechanisms for releasing energy in the form of radiation, and matter falling onto black holes is thought to be responsible for some of the brightest astronomical phenomena imaginable. Notable examples of great interest to astronomers are quasars and other types of active galactic nuclei. Under the right conditions, falling matter accumulating around a black hole can lead to the formation of jets, in which focused beams of matter are flung away into space at speeds near that of light.[39]
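A rough Newtonian estimate shows why infall onto a compact object is so efficient. Matter falling from far away onto an object of mass M and radius R releases about GM/R of energy per unit mass, which is a fraction GM/(Rc²) of its rest-mass energy. The sketch below evaluates this for a typical neutron star and compares it with hydrogen fusion. The neutron star mass and radius and the fusion efficiency of about 0.7% are assumed illustrative values, not figures from the text above; a black hole has no surface, so its efficiency requires a relativistic treatment that this sketch does not attempt.

```python
# Assumed physical constants in SI units (not taken from the article text).
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.98847e30     # mass of the Sun, kg

# Assumed reference value: fraction of rest mass released by hydrogen fusion.
FUSION_EFFICIENCY = 0.007


def accretion_efficiency(mass_kg, radius_m):
    """Newtonian estimate of the fraction of rest-mass energy released when
    matter falls from far away onto an object of the given mass and radius."""
    return G * mass_kg / (radius_m * C**2)


# Illustrative neutron star: 1.4 solar masses, 10 km radius (assumed values).
eta_ns = accretion_efficiency(1.4 * M_SUN, 10e3)
print(f"neutron star accretion: {eta_ns:.1%} of rest-mass energy")
print(f"hydrogen fusion:        {FUSION_EFFICIENCY:.1%} of rest-mass energy")
```

Under these assumptions, infall onto a neutron star releases a far larger fraction of the rest-mass energy than nuclear fusion does.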

There are several properties that make black holes the most promising sources of gravitational waves. One reason is that black holes are the most compact objects that can orbit each other as part of a binary system; as a result, the gravitational waves emitted by such a system are especially strong. Another reason follows from what are called black-hole uniqueness theorems: over time, black holes retain only a minimal set of distinguishing features (these theorems have become known as "no-hair" theorems, since different hairstyles are a crucial part of what gives different people their different appearances). For instance, in the long term, the collapse of a hypothetical matter cube will not result in a cube-shaped black hole. Instead, the resulting black hole will be indistinguishable from a black hole formed by the collapse of a spherical mass, but with one important difference: in its transition to a spherical shape, the black hole formed by the collapse of a cube will emit gravitational waves.[40]

Cosmology

One of the most important aspects of general relativity is that it can be applied to the universe as a whole. A key point is that, on large scales, our universe appears to be constructed along very simple lines: all current observations suggest that, on average, the structure of the cosmos is approximately the same regardless of an observer's location or direction of observation. In other words, the universe is approximately homogeneous and isotropic. Such comparatively simple universes can be described by simple solutions of Einstein's equations. The current cosmological models of the universe are obtained by combining these simple solutions to general relativity with theories describing the properties of the universe's matter content, namely thermodynamics, nuclear physics and particle physics. According to these models, our present universe emerged from an extremely dense high-temperature state – the Big Bang – roughly 14 billion years ago and has been expanding ever since.[41]

Einstein's equations can be generalized by adding a term called the cosmological constant. When this term is present, empty space itself acts as a source of gravity, which is repulsive or attractive depending on the sign of the constant. Einstein originally introduced this term in his pioneering 1917 paper on cosmology, with a very specific motivation: contemporary cosmological thought held the universe to be static, and the additional term was required for constructing static model universes within the framework of general relativity. When it became apparent that the universe is not static, but expanding, Einstein was quick to discard this additional term. Since the end of the 1990s, however, astronomical evidence indicating an accelerating expansion consistent with a cosmological constant – or, equivalently, with a particular and ubiquitous kind of dark energy – has steadily been accumulating.[42]
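The effect of a cosmological constant on the expansion history can be illustrated with a small calculation. For a spatially flat, homogeneous and isotropic universe containing only matter and a cosmological constant, the age follows in closed form from the present expansion rate (the Hubble constant) and the fraction of the energy density contributed by the cosmological constant. The Hubble constant of 70 km/s/Mpc and the fraction of 0.7 used below are assumed round numbers for illustration, not values from the text above, and the function names are hypothetical.

```python
import math

KM_PER_MPC = 3.0856775814913673e19       # kilometres in one megaparsec
SECONDS_PER_GYR = 1e9 * 365.25 * 86400    # seconds in a billion years


def hubble_time_gyr(h0_km_s_mpc):
    """Inverse of the Hubble constant, expressed in billions of years."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1 / h0_per_second / SECONDS_PER_GYR


def age_flat_universe_gyr(h0_km_s_mpc, omega_lambda):
    """Age of a spatially flat universe made of matter plus a cosmological
    constant. Valid for 0 <= omega_lambda < 1; matter fraction is the rest."""
    t_hubble = hubble_time_gyr(h0_km_s_mpc)
    if omega_lambda == 0:
        return 2 / 3 * t_hubble           # matter-only case
    omega_matter = 1 - omega_lambda
    return (t_hubble * 2 / (3 * math.sqrt(omega_lambda))
            * math.asinh(math.sqrt(omega_lambda / omega_matter)))


H0 = 70.0  # assumed Hubble constant, km/s/Mpc
age_matter_only = age_flat_universe_gyr(H0, 0.0)
age_with_lambda = age_flat_universe_gyr(H0, 0.7)
print(f"matter only:                {age_matter_only:.1f} billion years")
print(f"with cosmological constant: {age_with_lambda:.1f} billion years")
```

Under these assumptions, the model with a cosmological constant gives an age close to the figure of roughly 14 billion years, while the matter-only model gives a noticeably younger universe for the same present expansion rate.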

Modern research

General relativity is very successful in providing a framework for accurate models which describe an impressive array of physical phenomena. On the other hand, there are many interesting open questions, and in particular, the theory as a whole is almost certainly incomplete.[43]

In contrast to all other modern theories of fundamental interactions, general relativity is a classical theory: it does not include the effects of quantum physics. The quest for a quantum version of general relativity addresses one of the most fundamental open questions in physics. While there are promising candidates for such a theory of quantum gravity, notably string theory and loop quantum gravity, there is at present no consistent and complete theory. It has long been hoped that a theory of quantum gravity would also eliminate another problematic feature of general relativity: the presence of spacetime singularities. These singularities are boundaries ("sharp edges") of spacetime at which geometry becomes ill-defined, with the consequence that general relativity itself loses its predictive power. Furthermore, there are so-called singularity theorems which predict that such singularities must exist within the universe if the laws of general relativity hold without any quantum modifications. The best-known examples are the singularities associated with the model universes that describe black holes and the beginning of the universe.[44]

Other attempts to modify general relativity have been made in the context of cosmology. In the modern cosmological models, most energy in the universe is in forms that have never been detected directly, namely dark energy and dark matter. There have been several controversial proposals to remove the need for these enigmatic forms of matter and energy, by modifying the laws governing gravity and the dynamics of cosmic expansion, for example modified Newtonian dynamics.[45]

Beyond the challenges of quantum effects and cosmology, research on general relativity is rich with possibilities for further exploration: mathematical relativists explore the nature of singularities and the fundamental properties of Einstein's equations,[46] and ever more comprehensive computer simulations of specific spacetimes (such as those describing merging black holes) are run.[47] More than a hundred years after the theory was first published, research is more active than ever.[48]