Holonomic brain theory is a branch of neuroscience investigating the idea that human consciousness is formed by quantum effects in or between brain cells. Holonomic refers to representations in a Hilbert phase space defined by both spectral and space-time coordinates. Holonomic brain theory is opposed by traditional neuroscience, which investigates the brain's behavior by looking at patterns of neurons and the surrounding chemistry.

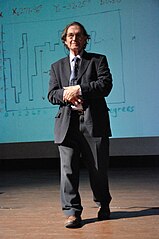

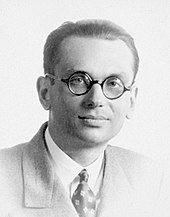

This specific theory of quantum consciousness was developed by neuroscientist Karl Pribram, initially in collaboration with physicist David Bohm, building on the theories of holograms originally formulated by Dennis Gabor. It describes human cognition by modeling the brain as a holographic storage network.

Pribram suggests these processes involve electric oscillations in the brain's fine-fibered dendritic webs, which are different from the more commonly known action potentials involving axons and synapses. These oscillations are waves and create wave interference patterns in which memory is encoded naturally, and the wave function may be analyzed by a Fourier transform.

Gabor, Pribram and others noted the similarities between these brain processes and the storage of information in a hologram, which can also be analyzed with a Fourier transform. In a hologram, any part of the hologram with sufficient size contains the whole of the stored information. In this theory, a piece of a long-term memory is similarly distributed over a dendritic arbor so that each part of the dendritic network contains all the information stored over the entire network. This model allows for important aspects of human consciousness, including the fast associative memory that allows for connections between different pieces of stored information and the non-locality of memory storage (a specific memory is not stored in a specific location, i.e. a certain cluster of neurons).

Origins and development

In 1946 Dennis Gabor invented the hologram mathematically, describing a system where an image can be reconstructed through information that is stored throughout the hologram. He demonstrated that the information pattern of a three-dimensional object can be encoded in a beam of light, which is more or less two-dimensional. Gabor also developed a mathematical model for demonstrating a holographic associative memory. One of Gabor's colleagues, Pieter Jacobus Van Heerden, also developed a related holographic mathematical memory model in 1963. This model contained the key aspect of non-locality, which became important years later when, in 1967, experiments by both Braitenberg and Kirschfeld showed that memory is not exactly localized in the brain.

Karl Pribram had worked with psychologist Karl Lashley on Lashley's engram experiments, which used lesions to determine the exact location of specific memories in primate brains. Lashley made small lesions in the brains and found that these had little effect on memory. On the other hand, Pribram removed large areas of cortex, leading to multiple serious deficits in memory and cognitive function. These results suggested that memories were not stored in a single neuron or exact location, but were spread over the entirety of a neural network. Lashley suggested that brain interference patterns could play a role in perception, but was unsure how such patterns might be generated in the brain or how they would lead to brain function.

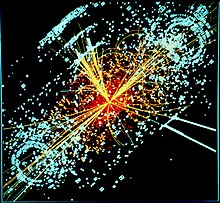

Several years later an article by neurophysiologist John Eccles described how a wave could be generated at the branching ends of pre-synaptic axons. Several such waves could create interference patterns. Soon after, Emmett Leith succeeded in storing visual images through the interference patterns of laser beams, inspired by Gabor's previous use of Fourier transformations to store information within a hologram. After studying the work of Eccles and that of Leith, Pribram put forward the hypothesis that memory might take the form of interference patterns that resemble laser-produced holograms. Physicist David Bohm presented his ideas of holomovement and implicate and explicate order in 1980. Pribram had become aware of Bohm's work in 1975 and realized that, since a hologram could store information within patterns of interference and then recreate that information when activated, it could serve as a strong metaphor for brain function. Pribram was further encouraged in this line of speculation by the fact that neurophysiologists Russell and Karen DeValois together established that "the spatial frequency encoding displayed by cells of the visual cortex was best described as a Fourier transform of the input pattern."

Theory overview

Hologram and holonomy

A main characteristic of a hologram is that every part of the stored information is distributed over the entire hologram. Both processes of storage and retrieval are carried out in a way described by Fourier transformation equations. As long as a part of the hologram is large enough to contain the interference pattern, that part can recreate the entirety of the stored image, but the image may have unwanted changes, called noise.
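The part-for-whole property can be illustrated with a minimal Python sketch. It rests on simplifying assumptions that are not part of Pribram's account: the "hologram" is just the discrete Fourier transform of a one-dimensional signal, the retained fragment is the low-frequency band, and no reference beam or diffuse illumination is modeled. The function names (`make_signal`, `reconstruct_from_fragment`, `crop_signal`, `rms_error`) are illustrative only.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform; the inverse divides by n."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * math.pi * k * t / n)
                    for t, v in enumerate(values))
        result.append(total / n if inverse else total)
    return result


def make_signal(n=64):
    """Toy one-dimensional 'image': a tall feature and a shorter one."""
    signal = [0.0] * n
    for t in range(10, 20):
        signal[t] = 1.0
    for t in range(40, 50):
        signal[t] = 0.5
    return signal


def reconstruct_from_fragment(signal, keep=8):
    """Keep only frequencies |k| <= keep, zero the rest, transform back."""
    n = len(signal)
    spectrum = dft(signal)
    fragment = [c if (k <= keep or k >= n - keep) else 0.0
                for k, c in enumerate(spectrum)]
    return [c.real for c in dft(fragment, inverse=True)]


def crop_signal(signal, fraction=0.5):
    """Keep only the first part of the signal itself (a local record)."""
    cut = int(len(signal) * fraction)
    return signal[:cut] + [0.0] * (len(signal) - cut)


def rms_error(a, b):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


if __name__ == "__main__":
    signal = make_signal()
    partial = reconstruct_from_fragment(signal, keep=8)
    cropped = crop_signal(signal)
    print("fragment of spectrum, value inside feature 2:", round(partial[45], 3))
    print("cropped signal, value inside feature 2:", cropped[45])
    print("rms error of fragment reconstruction:", round(rms_error(signal, partial), 3))
```

In this toy setting the reconstruction from a fragment of the spectrum still shows both features, each smeared and rippled, whereas cropping the signal directly removes the second feature entirely. A fragment taken from a different part of the spectrum would preserve different detail, so the sketch captures only the general idea of distributed storage with added noise.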

An analogy to this is the broadcasting region of a radio antenna. At each smaller individual location within the entire area it is possible to access every channel, similar to how the entirety of the information of a hologram is contained within a part. Another analogy for a hologram is the way sunlight illuminates objects in the visual field of an observer. No matter how narrow the beam of sunlight is, it always contains all the information of the object and, when conjugated by the lens of a camera or the eyeball, produces the same full three-dimensional image. The Fourier transform formula converts spatial forms to spatial wave frequencies and vice versa, as all objects are in essence vibratory structures. Different types of lenses, acting similarly to optic lenses, can alter the frequency nature of information that is transferred.

This non-locality of information storage within the hologram is crucial, because even if most parts are damaged, the entirety will be contained within even a single remaining part of sufficient size. Pribram and others noted the similarities between an optical hologram and memory storage in the human brain. According to the holonomic brain theory, memories are stored within certain general regions, but stored non-locally within those regions. This allows the brain to maintain function and memory even when it is damaged. It is only when there exist no parts big enough to contain the whole that the memory is lost. This can also explain why some children retain normal intelligence when large portions of their brain—in some cases, half—are removed. It can also explain why memory is not lost when the brain is sliced in different cross-sections.

Pribram proposed that neural holograms were formed by the diffraction patterns of oscillating electric waves within the cortex. Representation occurs as a dynamical transformation in a distributed network of dendritic microprocesses. It is important to note the difference between the idea of a holonomic brain and a holographic one. Pribram does not suggest that the brain functions as a single hologram. Rather, the waves within smaller neural networks create localized holograms within the larger workings of the brain. This patch holography is called holonomy or windowed Fourier transformations.
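A windowed Fourier transformation can be sketched as follows. This is an illustration under stated assumptions, not Pribram's formulation: the signal is a synthetic two-tone sequence, each patch is weighted by a Hann window (Gabor's own transform uses a Gaussian window), and the patches do not overlap. The names `windowed_dft`, `dominant_bin` and `make_two_tone` are hypothetical.

```python
import cmath
import math


def windowed_dft(signal, window_len, hop):
    """Return one magnitude spectrum (bins 0..window_len // 2) per patch."""
    # Periodic Hann window: tapers each patch toward zero at its edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / window_len)
              for t in range(window_len)]
    spectra = []
    for start in range(0, len(signal) - window_len + 1, hop):
        patch = [signal[start + t] * window[t] for t in range(window_len)]
        spectrum = []
        for k in range(window_len // 2 + 1):
            coeff = sum(p * cmath.exp(-2j * math.pi * k * t / window_len)
                        for t, p in enumerate(patch))
            spectrum.append(abs(coeff))
        spectra.append(spectrum)
    return spectra


def dominant_bin(spectrum):
    """Index of the frequency bin with the largest magnitude."""
    return max(range(len(spectrum)), key=lambda k: spectrum[k])


def make_two_tone(window_len=64):
    """Low tone (4 cycles per patch) followed by a high tone (12 cycles)."""
    low = [math.sin(2 * math.pi * 4 * t / window_len) for t in range(window_len)]
    high = [math.sin(2 * math.pi * 12 * t / window_len) for t in range(window_len)]
    return low + high


if __name__ == "__main__":
    spectra = windowed_dft(make_two_tone(), window_len=64, hop=64)
    for index, spectrum in enumerate(spectra):
        print("patch", index, "dominant frequency bin:", dominant_bin(spectrum))
```

Each patch yields its own local spectrum, so the transform records which frequencies occur and roughly where they occur. A single Fourier transform of the whole sequence would report both tones without indicating their position.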

A holographic model can also account for other features of memory that more traditional models cannot. The Hopfield memory model has an early memory saturation point beyond which memory retrieval drastically slows and becomes unreliable. On the other hand, holographic memory models have much larger theoretical storage capacities. Holographic models can also demonstrate associative memory, store complex connections between different concepts, and model forgetting through "lossy storage".
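The saturation of a Hopfield network can be illustrated with a small Python sketch. It assumes the classical binary model with Hebbian weights and synchronous updates, for which the usual capacity estimate is roughly 0.138 patterns per neuron. The network size, seed and helper names are arbitrary illustrative choices, and the sketch covers only the Hopfield side of the comparison, not a holographic memory.

```python
import random


def random_patterns(count, size, rng):
    """Generate `count` random patterns of +1/-1 values."""
    return [[rng.choice((-1, 1)) for _ in range(size)] for _ in range(count)]


def hebbian_weights(patterns):
    """Hebbian outer-product rule with a zero diagonal."""
    size = len(patterns[0])
    weights = [[0.0] * size for _ in range(size)]
    for p in patterns:
        for i in range(size):
            for j in range(size):
                if i != j:
                    weights[i][j] += p[i] * p[j] / size
    return weights


def update(weights, state):
    """One synchronous update: each unit takes the sign of its input sum."""
    return [1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
            for row in weights]


def stable_fraction(pattern_count, size=100, seed=0):
    """Fraction of stored patterns left unchanged by one update step."""
    rng = random.Random(seed)
    patterns = random_patterns(pattern_count, size, rng)
    weights = hebbian_weights(patterns)
    stable = sum(update(weights, p) == p for p in patterns)
    return stable / pattern_count


if __name__ == "__main__":
    for count in (2, 10, 60):
        print(count, "patterns stored, stable fraction:", stable_fraction(count))
```

With only a few stored patterns, every pattern is a fixed point of the network. Once the load passes the saturation point, crosstalk between patterns flips units and stored patterns are no longer recalled exactly.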

Synaptodendritic web

In classic brain theory the summation of electrical inputs to the dendrites and soma (cell body) of a neuron either inhibits the neuron or excites it and sets off an action potential down the axon to where it synapses with the next neuron. However, this fails to account for different varieties of synapses beyond the traditional axodendritic (axon to dendrite). There is evidence for the existence of other kinds of synapses, including serial synapses and those between dendrites and soma and between different dendrites. Many synaptic locations are functionally bipolar, meaning they can both send impulses to and receive impulses from each neuron, distributing input and output over the entire group of dendrites.

Processes in this dendritic arbor, the network of teledendrons and dendrites, occur due to the oscillations of polarizations in the membrane of the fine-fibered dendrites, not due to the propagated nerve impulses associated with action potentials. Pribram posits that the length of the delay of an input signal in the dendritic arbor before it travels down the axon is related to mental awareness. The shorter the delay the more unconscious the action, while a longer delay indicates a longer period of awareness. A study by David Alkon showed that after unconscious Pavlovian conditioning there was a proportionally greater reduction in the volume of the dendritic arbor, akin to synaptic elimination when experience increases the automaticity of an action. Pribram and others theorize that, while unconscious behavior is mediated by impulses through nerve circuits, conscious behavior arises from microprocesses in the dendritic arbor.

At the same time, the dendritic network is extremely complex, able to receive 100,000 to 200,000 inputs in a single tree, due to the large amount of branching and the many dendritic spines protruding from the branches. Furthermore, synaptic hyperpolarization and depolarization remain somewhat isolated due to the resistance from the narrow dendritic spine stalk, allowing a polarization to spread without much interruption to the other spines. This spread is further aided intracellularly by the microtubules and extracellularly by glial cells. These polarizations act as waves in the synaptodendritic network, and the existence of multiple waves at once gives rise to interference patterns.

Deep and surface structure of memory

Pribram suggests that there are two layers of cortical processing: a surface structure of separated and localized neural circuits and a deep structure of the dendritic arborization that binds the surface structure together. The deep structure contains distributed memory, while the surface structure acts as the retrieval mechanism. Binding occurs through the temporal synchronization of the oscillating polarizations in the synaptodendritic web. It had been thought that binding only occurred when there was no phase lead or lag present, but a study by Saul and Humphrey found that cells in the lateral geniculate nucleus do in fact produce phase leads and lags. Here phase lead and lag act to enhance sensory discrimination, acting as a frame to capture important features. These filters are also similar to the lenses necessary for holographic functioning.

Pribram notes that holographic memories show large capacities, parallel processing and content addressability for rapid recognition, and associative storage for perceptual completion and associative recall. In systems endowed with memory storage, these interactions therefore lead to progressively more self-determination.
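Content-addressable, associative recall from a single distributed trace can be sketched in Python. This is a swapped-in illustration in the style of convolution-correlation memories, not a model taken from Pribram's work. It assumes random real-valued vectors, binding by circular convolution, retrieval by circular correlation, and a final comparison against the known items. All names and parameters (`build_memory`, `recall`, the vector length of 512) are hypothetical.

```python
import math
import random


def random_vector(n, rng):
    """Random vector with expected squared length close to 1."""
    return [rng.gauss(0.0, 1.0 / math.sqrt(n)) for _ in range(n)]


def circular_convolve(a, b):
    """Bind two vectors into one of the same length."""
    n = len(a)
    return [sum(a[k] * b[(j - k) % n] for k in range(n)) for j in range(n)]


def circular_correlate(a, b):
    """Approximate inverse of binding: probe trace b with cue a."""
    n = len(a)
    return [sum(a[k] * b[(j + k) % n] for k in range(n)) for j in range(n)]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


def build_memory(n=512, pairs=3, seed=1):
    """Superpose several cue-item bindings into one distributed trace."""
    rng = random.Random(seed)
    cues = [random_vector(n, rng) for _ in range(pairs)]
    items = [random_vector(n, rng) for _ in range(pairs)]
    trace = [0.0] * n
    for cue, item in zip(cues, items):
        bound = circular_convolve(cue, item)
        trace = [t + b for t, b in zip(trace, bound)]
    return cues, items, trace


def recall(trace, cue, items):
    """Probe the trace with a cue and return the index of the best match."""
    noisy = circular_correlate(cue, trace)
    return max(range(len(items)), key=lambda i: cosine(noisy, items[i]))


if __name__ == "__main__":
    cues, items, trace = build_memory()
    for index, cue in enumerate(cues):
        print("cue", index, "recalls item", recall(trace, cue, items))
```

Every association is spread across all elements of the one trace vector, and probing with a cue returns a noisy copy of its partner. The noise grows as more pairs are superposed, which gives a simple picture of lossy storage.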

Recent studies

While Pribram originally developed the holonomic brain theory as an analogy for certain brain processes, several papers (including some more recent ones by Pribram himself) have proposed that the similarity between holograms and certain brain functions is not merely metaphorical but structural. Others still maintain that the relationship is only analogical. Several studies have shown that the same series of operations used in holographic memory models is performed in certain processes concerning temporal memory and optomotor responses. This indicates at least the possibility of the existence of neurological structures with certain holonomic properties. Other studies have demonstrated the possibility that biophoton emission (biological electrical signals that are converted to weak electromagnetic waves in the visible range) may be a necessary condition for the electric activity in the brain to store holographic images. Biophotons may play a role in cell communication and certain brain processes including sleep, but further studies are needed to strengthen current ones. Other studies have shown a correlation between more advanced cognitive function and homeothermy. Taking holographic brain models into account, this temperature regulation would reduce distortion of the signal waves, an important condition for holographic systems. See: Computation approach in terms of holographic codes and processing.

Criticism and alternative models

Pribram's holonomic model of brain function did not receive widespread attention at the time, but other quantum models have been developed since, including brain dynamics by Jibu & Yasue and Vitiello's dissipative quantum brain dynamics. Though not directly related to the holonomic model, they continue to move beyond approaches based solely in classic brain theory.