Epistemology (/ɪˌpɪstəˈmɒlədʒi/; from Ancient Greek ἐπιστήμη (epistḗmē) 'knowledge', and -logy), or the theory of knowledge, is the branch of philosophy concerned with knowledge. Epistemology is considered a major subfield of philosophy, along with other major subfields such as ethics, logic, and metaphysics.

Epistemologists study the nature, origin, and scope of knowledge, epistemic justification, the rationality of belief, and various related issues. Debates in epistemology are generally clustered around four core areas:

- The philosophical analysis of the nature of knowledge and the conditions required for a belief to constitute knowledge, such as truth and justification

- Potential sources of knowledge and justified belief, such as perception, reason, memory, and testimony

- The structure of a body of knowledge or justified belief, including whether all justified beliefs must be derived from justified foundational beliefs or whether justification requires only a coherent set of beliefs

- Philosophical skepticism, which questions the possibility of knowledge, and related problems, such as whether skepticism poses a threat to our ordinary knowledge claims and whether it is possible to refute skeptical arguments

In these debates and others, epistemology aims to answer questions such as "What do people know?", "What does it mean to say that people know something?", "What makes justified beliefs justified?", and "How do people know that they know?" Specialties in epistemology ask questions such as "How can people create formal models about issues related to knowledge?" (in formal epistemology), "What are the historical conditions of changes in different kinds of knowledge?" (in historical epistemology), "What are the methods, aims, and subject matter of epistemological inquiry?" (in metaepistemology), and "How do people know together?" (in social epistemology).

Background

Etymology

The word epistemology is derived from the ancient Greek epistēmē, meaning "knowledge, understanding, skill, scientific knowledge", and the English suffix -ology, meaning "the science or discipline of (what is indicated by the first element)". The word "epistemology" first appeared in 1847, in a review in New York's Eclectic Magazine:

The title of one of the principal works of Fichte is 'Wissenschaftslehre,' which, after the analogy of technology ... we render epistemology.

The word was first used to present a philosophy in English by Scottish philosopher James Frederick Ferrier in 1854. It was the title of the first section of his Institutes of Metaphysics:

This section of the science is properly termed the Epistemology—the doctrine or theory of knowing, just as ontology is the science of being... It answers the general question, 'What is knowing and the known?'—or more shortly, 'What is knowledge?'

History of epistemology

Epistemology, as a distinct field of inquiry, predates the introduction of the term into the lexicon of philosophy. John Locke, for instance, described his efforts in Essay Concerning Human Understanding (1689) as an inquiry "into the original, certainty, and extent of human knowledge, together with the grounds and degrees of belief, opinion, and assent".

Almost every major historical philosopher has considered questions about what people know and how they know it. Among the Ancient Greek philosophers, Plato distinguished between inquiry regarding what people know and inquiry regarding what exists, particularly in the Republic, the Theaetetus, and the Meno. In the Meno, the definition of knowledge as justified true belief appears for the first time. In other words, a belief must be backed by an explanation in order to count as knowledge, beyond merely happening to be right. A number of important epistemological concerns also appeared in the works of Aristotle.

During the subsequent Hellenistic period, philosophical schools began to appear which had a greater focus on epistemological questions, often in the form of philosophical skepticism. For instance, the Pyrrhonian skepticism of Pyrrho and Sextus Empiricus held that eudaimonia (flourishing, happiness, or "the good life") could be attained through the application of epoché (suspension of judgment) regarding all non-evident matters. Pyrrhonism was particularly concerned with undermining the epistemological dogmas of Stoicism and Epicureanism. The other major school of Hellenistic skepticism was Academic skepticism, most notably defended by Carneades and Arcesilaus, which predominated in the Platonic Academy for almost two centuries.

The Ajñana school of ancient Indian philosophy promoted skepticism. Ajñana was a Śramaṇa movement and a major rival of early Buddhism, Jainism and the Ājīvika school. Its adherents held that it was impossible to obtain knowledge of metaphysical nature or to ascertain the truth value of philosophical propositions, and that even if knowledge was possible, it was useless and disadvantageous for final salvation. They specialized in refutation without propagating any positive doctrine of their own.

After the ancient philosophical era but before the modern philosophical era, a number of Medieval philosophers also engaged with epistemological questions at length. Most notable among the Medievals for their contributions to epistemology were Thomas Aquinas, John Duns Scotus, and William of Ockham.

During the Islamic Golden Age, Al-Ghazali was one of the most prominent and influential philosophers, theologians, jurists, logicians, and mystics in Islamic epistemology. During his life, he wrote over 70 books on science, Islamic reasoning, and Sufism. His book The Incoherence of the Philosophers is regarded as a defining moment in Islamic epistemology. He held that all events and interactions are not the product of material conjunctions but are the immediate and present will of God.

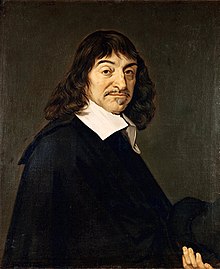

Epistemology largely came to the fore in philosophy during the early modern period, which historians of philosophy traditionally divide up into a dispute between empiricists (including Francis Bacon, John Locke, David Hume, and George Berkeley) and rationalists (including René Descartes, Baruch Spinoza, and Gottfried Leibniz). The debate between them has often been framed using the question of whether knowledge comes primarily from sensory experience (empiricism), or whether a significant portion of our knowledge is derived entirely from our faculty of reason (rationalism). According to some scholars, this dispute was resolved in the late 18th century by Immanuel Kant, whose transcendental idealism famously made room for the view that "though all our knowledge begins with experience, it by no means follows that all [knowledge] arises out of experience".

Contemporary historiography

There are a number of different methods that contemporary scholars use when trying to understand the relationship between past epistemology and contemporary epistemology. One of the most contentious questions is this: "Should we assume that the problems of epistemology are perennial, and that trying to reconstruct and evaluate Plato's or Hume's or Kant's arguments is meaningful for current debates, too?" Similarly, there is also a question of whether contemporary philosophers should aim to rationally reconstruct and evaluate historical views in epistemology, or to merely describe them. Barry Stroud claims that doing epistemology competently requires the historical study of past attempts to find philosophical understanding of the nature and scope of human knowledge. He argues that since inquiry may progress over time, we may not realize how different the questions that contemporary epistemologists ask are from questions asked at various different points in the history of philosophy.

Central concepts in epistemology

Knowledge

Nearly all debates in epistemology are in some way related to knowledge. Most generally, "knowledge" is a familiarity, awareness, or understanding of someone or something, which might include facts (propositional knowledge), skills (procedural knowledge), or objects (acquaintance knowledge). Philosophers tend to draw an important distinction between three different senses of "knowing" something: "knowing that" (knowing the truth of propositions), "knowing how" (understanding how to perform certain actions), and "knowing by acquaintance" (directly perceiving an object, being familiar with it, or otherwise coming into contact with it). Epistemology is primarily concerned with the first of these forms of knowledge, propositional knowledge. All three senses of "knowing" can be seen in our ordinary use of the word. In mathematics, you can know that 2 + 2 = 4, but there is also knowing how to add two numbers, and knowing a person (e.g., knowing other persons, or knowing oneself), place (e.g., one's hometown), thing (e.g., cars), or activity (e.g., addition). While these distinctions are not explicit in English, they are explicitly made in other languages, including French, Portuguese, Spanish, Romanian, German and Dutch (although some languages closely related to English have been said to retain these verbs, such as Scots). The theoretical interpretation and significance of these linguistic issues remain controversial.

In his paper On Denoting and his later book Problems of Philosophy, Bertrand Russell brought a great deal of attention to the distinction between "knowledge by description" and "knowledge by acquaintance". Gilbert Ryle is similarly credited with bringing more attention to the distinction between knowing how and knowing that in The Concept of Mind. In Personal Knowledge, Michael Polanyi argues for the epistemological relevance of knowledge how and knowledge that; using the example of the act of balance involved in riding a bicycle, he suggests that the theoretical knowledge of the physics involved in maintaining a state of balance cannot substitute for the practical knowledge of how to ride, and that it is important to understand how both are established and grounded. This position is essentially Ryle's, who argued that a failure to acknowledge the distinction between "knowledge that" and "knowledge how" leads to infinite regress.

A priori and a posteriori knowledge

One of the most important distinctions in epistemology is between what can be known a priori (independently of experience) and what can be known a posteriori (through experience). The terms originate from the Analytic methods of Aristotle's Organon, and may be roughly defined as follows:

- A priori knowledge is knowledge that is known independently of experience (that is, it is non-empirical, or arrived at before experience, usually by reason). It can therefore be acquired through any means that is independent of experience.

- A posteriori knowledge is knowledge that is known by experience (that is, it is empirical, or arrived at through experience).

Views that emphasize the importance of a priori knowledge are generally classified as rationalist. Views that emphasize the importance of a posteriori knowledge are generally classified as empiricist.

Belief

One of the core concepts in epistemology is belief. A belief is an attitude that a person holds regarding anything that they take to be true. For instance, to believe that snow is white is comparable to accepting the truth of the proposition "snow is white". Beliefs can be occurrent (e.g. a person actively thinking "snow is white"), or they can be dispositional (e.g. a person who if asked about the color of snow would assert "snow is white"). While there is not universal agreement about the nature of belief, most contemporary philosophers hold the view that a disposition to express belief B qualifies as holding the belief B. There are various different ways that contemporary philosophers have tried to describe beliefs, including as representations of ways that the world could be (Jerry Fodor), as dispositions to act as if certain things are true (Roderick Chisholm), as interpretive schemes for making sense of someone's actions (Daniel Dennett and Donald Davidson), or as mental states that fill a particular function (Hilary Putnam). Some have also attempted to offer significant revisions to our notion of belief, including eliminativists about belief who argue that there is no phenomenon in the natural world which corresponds to our folk psychological concept of belief (Paul Churchland) and formal epistemologists who aim to replace our bivalent notion of belief ("either I have a belief or I don't have a belief") with the more permissive, probabilistic notion of credence ("there is an entire spectrum of degrees of belief, not a simple dichotomy between belief and non-belief").

While belief plays a significant role in epistemological debates surrounding knowledge and justification, it is also the subject of many other philosophical debates in its own right. Notable debates include: "What is the rational way to revise one's beliefs when presented with various sorts of evidence?"; "Is the content of our beliefs entirely determined by our mental states, or do the relevant facts have any bearing on our beliefs (e.g. if I believe that I'm holding a glass of water, is the non-mental fact that water is H2O part of the content of that belief)?"; "How fine-grained or coarse-grained are our beliefs?"; and "Must it be possible for a belief to be expressible in language, or are there non-linguistic beliefs?"
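The probabilistic notion of credence, and the question of how to revise beliefs in light of evidence, can be made concrete with a short worked formula. The following is only a sketch, assuming the standard Bayesian treatment in which credences obey the probability axioms and are updated by conditionalization; the text above does not commit to this model, and the symbols Cr, H, E and all numbers are illustrative assumptions.

```latex
% Assumption: Cr is a credence function satisfying the probability axioms.
% H is a hypothesis, E is a piece of evidence; all numbers are hypothetical.
\[
  \mathrm{Cr}(H \mid E)
  = \frac{\mathrm{Cr}(E \mid H)\,\mathrm{Cr}(H)}
         {\mathrm{Cr}(E \mid H)\,\mathrm{Cr}(H)
          + \mathrm{Cr}(E \mid \neg H)\,\mathrm{Cr}(\neg H)}
\]
% Worked example: Cr(H) = 0.5, Cr(E | H) = 0.8, Cr(E | not-H) = 0.2.
\[
  \mathrm{Cr}(H \mid E)
  = \frac{0.8 \times 0.5}{0.8 \times 0.5 + 0.2 \times 0.5}
  = \frac{0.4}{0.5}
  = 0.8
\]
```

On this picture the agent neither simply believes nor disbelieves H: learning E raises the degree of belief in H from 0.5 to 0.8, which is the kind of graded attitude the bivalent notion of belief cannot express.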

Truth

Truth is the property or state of being in accordance with facts or reality. On most views, truth is the correspondence of language or thought to a mind-independent world. This is called the correspondence theory of truth. Among philosophers who think that it is possible to analyze the conditions necessary for knowledge, virtually all of them accept that truth is such a condition. On such views, something being known implies that it is true. This should not be confused with the more contentious view that one must know that one knows in order to know (the KK principle). There is also much less agreement about the extent to which a knower must know why something is true in order to know.

Epistemologists disagree about whether belief is the only truth-bearer. Other common suggestions for things that can bear the property of being true include propositions, sentences, thoughts, utterances, and judgments. Plato, in his Gorgias, argues that belief is the most commonly invoked truth-bearer.

Many of the debates regarding truth are at the crossroads of epistemology and logic. Some contemporary debates regarding truth include: How do we define truth? Is it even possible to give an informative definition of truth? What things are truth-bearers and are therefore capable of being true or false? Are truth and falsity bivalent, or are there other truth values? What are the criteria of truth that allow us to identify it and to distinguish it from falsity? What role does truth play in constituting knowledge? And is truth absolute, or is it merely relative to one's perspective?

Justification

As the term "justification" is used in epistemology, a belief is justified if one has good reason for holding it. Loosely speaking, justification is the reason that someone holds a rationally admissible belief, on the assumption that it is a good reason for holding it. Sources of justification might include perceptual experience (the evidence of the senses), reason, and authoritative testimony, among others. Importantly, however, a belief being justified does not guarantee that the belief is true, since a person could be justified in forming beliefs based on very convincing evidence that was nonetheless deceiving.

Internalism and externalism

A central debate about the nature of justification is a debate between epistemological externalists on the one hand and epistemological internalists on the other. While epistemic externalism first arose in attempts to overcome the Gettier problem, it has flourished in the time since as an alternative way of conceiving of epistemic justification. The initial development of epistemic externalism is often attributed to Alvin Goldman, although numerous other philosophers have worked on the topic in the time since.

Externalists hold that factors deemed "external", meaning outside of the psychological states of those who gain knowledge, can be conditions of justification. For example, an externalist response to the Gettier problem is to say that for a justified true belief to count as knowledge, there must be a link or dependency between the belief and the state of the external world. Usually, this is understood to be a causal link. Such causation, to the extent that it is "outside" the mind, would count as an external, knowledge-yielding condition. Internalists, on the other hand, assert that all knowledge-yielding conditions are within the psychological states of those who gain knowledge.

Although René Descartes was himself unfamiliar with the internalist/externalist debate, many point to him as an early example of the internalist path to justification. He wrote that because the only method by which we perceive the external world is through our senses, and because the senses are not infallible, we should not consider our concept of knowledge infallible. The only way to find anything that could be described as "indubitably true", he held, would be to see things "clearly and distinctly". He argued that if there is an omnipotent, good being who made the world, then it is reasonable to believe that people are made with the ability to know. However, this does not mean that man's ability to know is perfect. God gave man the ability to know but not omniscience. Descartes said that man must use his capacities for knowledge correctly and carefully through methodological doubt.

The dictum "Cogito ergo sum" (I think, therefore I am) is also commonly associated with Descartes's theory. In his own methodological doubt—doubting everything he previously knew so he could start from a blank slate—the first thing that he could not logically bring himself to doubt was his own existence: "I do not exist" would be a contradiction in terms. The act of saying that one does not exist assumes that someone must be making the statement in the first place. Descartes could doubt his senses, his body, and the world around him—but he could not deny his own existence, because he was able to doubt and must exist to manifest that doubt. Even if some "evil genius" were deceiving him, he would have to exist to be deceived. This one sure point provided him with what he called his Archimedean point, in order to further develop his foundation for knowledge. Simply put, Descartes's epistemological justification depended on his indubitable belief in his own existence and his clear and distinct knowledge of God.

Defining knowledge

A central issue in epistemology is the question of what the nature of knowledge is or how to define it. Sometimes the expressions "theory of knowledge" and "analysis of knowledge" are used specifically for this form of inquiry. The term "knowledge" has various meanings in natural language. It can refer to an awareness of facts, as in knowing that Mars is a planet, to a possession of skills, as in knowing how to swim, or to an experiential acquaintance, as in knowing Daniel Craig personally. Factual knowledge, also referred to as propositional knowledge or descriptive knowledge, plays a special role in epistemology. On the linguistic level, it is distinguished from the other forms of knowledge since it can be expressed through a that-clause, i.e. using a formulation like "They know that..." followed by the known proposition.

Some features of factual knowledge are widely accepted: it is a form of cognitive success that establishes epistemic contact with reality. However, there are still various disagreements about its exact nature even though it has been studied intensely. Different factors are responsible for these disagreements. Some theorists try to furnish a practically useful definition by describing its most noteworthy and easily identifiable features. Others engage in an analysis of knowledge, which aims to provide a theoretically precise definition that identifies the set of essential features characteristic for all instances of knowledge and only for them. Differences in the methodology may also cause disagreements. In this regard, some epistemologists use abstract and general intuitions in order to arrive at their definitions. A different approach is to start from concrete individual cases of knowledge to determine what all of them have in common. Yet another method is to focus on linguistic evidence by studying how the term "knowledge" is commonly used. Different standards of knowledge are further sources of disagreement. A few theorists set these standards very high by demanding that absolute certainty or infallibility is necessary. On such a view, knowledge is a very rare thing. Theorists more in tune with ordinary language usually demand lower standards and see knowledge as something commonly found in everyday life.

As justified true belief

The historically most influential definition, discussed since ancient Greek philosophy, characterizes knowledge in relation to three essential features: as (1) a belief that is (2) true and (3) justified. There is still wide acceptance that the first two features are correct, i.e. that knowledge is a mental state that affirms a true proposition. However, there is a lot of dispute about the third feature: justification. This feature is usually included to distinguish knowledge from true beliefs that rest on superstition, lucky guesses, or faulty reasoning. This expresses the idea that knowledge is not the same as being right about something. Traditionally, justification is understood as the possession of evidence: a belief is justified if the believer has good evidence supporting it. Such evidence could be a perceptual experience, a memory, or a second belief.

Gettier problem and alternative definitions

The justified-true-belief account of knowledge came under severe criticism in the second half of the 20th century, when Edmund Gettier proposed various counterexamples. In a famous so-called Gettier-case, a person is driving on a country road. There are many barn façades along this road and only one real barn. But it is not possible to tell the difference between them from the road. The person then stops by a fortuitous coincidence in front of the only real barn and forms the belief that it is a barn. The idea behind this thought experiment is that this is not knowledge even though the belief is both justified and true. The reason is that it is just a lucky accident since the person cannot tell the difference: they would have formed exactly the same justified belief if they had stopped at another site, in which case the belief would have been false.

Various additional examples were proposed along similar lines. Most of them involve a justified true belief that apparently fails to amount to knowledge because the belief's justification is in some sense not relevant to its truth. These counterexamples have provoked very diverse responses. Some theorists think that one only needs to modify one's conception of justification to avoid them. But the more common approach is to search for an additional criterion. On this view, all cases of knowledge involve a justified true belief but some justified true beliefs do not amount to knowledge since they lack this additional feature. There are diverse suggestions for this fourth criterion. Some epistemologists require that no false belief is involved in the justification or that no defeater of the belief is present. A different approach is to require that the belief tracks truth, i.e. that the person would not have the belief if it was false. Some even require that the justification has to be infallible, i.e. that it necessitates the belief's truth.
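The barn case and the truth-tracking criterion can be illustrated with a small toy model. This is a minimal sketch under stated assumptions, not an established formalization: the names `Site`, `ROAD`, `forms_barn_belief`, `is_justified`, `tracks_truth`, and `knows` are all hypothetical, and checking the other sites along the road is only a crude stand-in for the counterfactual "the person would not have the belief if it was false".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    is_real_barn: bool  # the fact of the matter at this site


# Hypothetical road: several facades and exactly one real barn.
ROAD = [
    Site("facade_1", False),
    Site("facade_2", False),
    Site("real_barn", True),
    Site("facade_3", False),
]


def forms_barn_belief(site: Site) -> bool:
    """From the road every site looks like a barn, so the driver always believes 'barn'."""
    return True


def is_justified(site: Site) -> bool:
    """Assumption: the visual appearance counts as good evidence at every site."""
    return True


def justified_true_belief(site: Site) -> bool:
    """The three classical conditions: belief, truth, justification."""
    return forms_barn_belief(site) and site.is_real_barn and is_justified(site)


def tracks_truth(road: list[Site]) -> bool:
    """Simplified tracking check: wherever 'this is a barn' is false, the belief is absent."""
    return all(not forms_barn_belief(s) for s in road if not s.is_real_barn)


def knows(site: Site, road: list[Site]) -> bool:
    """Justified true belief plus the additional tracking criterion."""
    return justified_true_belief(site) and tracks_truth(road)


stop = ROAD[2]  # the driver happens to stop at the only real barn
print(justified_true_belief(stop))  # True
print(knows(stop, ROAD))            # False: the belief would persist at a facade

honest_road = [Site("real_barn", True)]  # no facades nearby
print(knows(honest_road[0], honest_road))  # True
```

In this toy model the driver's belief is justified and true at the real barn, yet it fails the added criterion because the same belief would be formed at every facade. Removing the facades makes the same belief count as knowledge, which mirrors the intuition that luck, not justification, is what goes wrong in the original case.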

A quite different approach is to affirm that the justified-true-belief account of knowledge is deeply flawed and to seek a complete reconceptualization of knowledge. These reconceptualizations often do not require justification at all. One such approach is to require that the true belief was produced by a reliable process. Naturalized epistemologists often hold that the believed fact has to cause the belief. Virtue theorists are also interested in how the belief is produced. For them, the belief must be a manifestation of a cognitive virtue.

The value problem

We generally assume that knowledge is more valuable than mere true belief. If so, what is the explanation? A formulation of the value problem in epistemology first occurs in Plato's Meno. Socrates points out to Meno that a man who knew the way to Larissa could lead others there correctly. But so, too, could a man who had true beliefs about how to get there, even if he had not gone there or had any knowledge of Larissa. Socrates says that it seems that both knowledge and true opinion can guide action. Meno then wonders why knowledge is valued more than true belief and why knowledge and true belief are different. Socrates responds that knowledge is more valuable than mere true belief because it is tethered or justified. Justification, or working out the reason for a true belief, locks down true belief.

The problem is to identify what (if anything) makes knowledge more valuable than mere true belief, or what makes knowledge more valuable than a mere minimal conjunction of its components, such as justification, safety, sensitivity, statistical likelihood, and anti-Gettier conditions, on a particular analysis of knowledge that conceives of knowledge as divided into components (knowledge-first epistemological theories, which posit knowledge as fundamental, are notable exceptions to such analyses). The value problem re-emerged in the philosophical literature on epistemology in the twenty-first century following the rise of virtue epistemology in the 1980s, partly because of the obvious link to the concept of value in ethics.

Virtue epistemology

In contemporary philosophy, epistemologists including Ernest Sosa, John Greco, Jonathan Kvanvig, Linda Zagzebski, and Duncan Pritchard have defended virtue epistemology as a solution to the value problem. They argue that epistemology should also evaluate the "properties" of people as epistemic agents (i.e. intellectual virtues), rather than merely the properties of propositions and propositional mental attitudes.

The value problem has been presented as an argument against epistemic reliabilism by Linda Zagzebski, Wayne Riggs, and Richard Swinburne, among others. Zagzebski analogizes the value of knowledge to the value of espresso produced by an espresso maker: "The liquid in this cup is not improved by the fact that it comes from a reliable espresso maker. If the espresso tastes good, it makes no difference if it comes from an unreliable machine." For Zagzebski, the value of knowledge deflates to the value of mere true belief. She assumes that reliability in itself has no value or disvalue, but Goldman and Olsson disagree. They point out that Zagzebski's conclusion rests on the assumption of veritism: all that matters is the acquisition of true belief. To the contrary, they argue that a reliable process for acquiring a true belief adds value to the mere true belief by making it more likely that future beliefs of a similar kind will be true. By analogy, having a reliable espresso maker that produced a good cup of espresso would be more valuable than having an unreliable one that luckily produced a good cup because the reliable one would more likely produce good future cups compared to the unreliable one.

The value problem is important to assessing the adequacy of theories of knowledge that conceive of knowledge as consisting of true belief and other components. According to Kvanvig, an adequate account of knowledge should resist counterexamples and allow an explanation of the value of knowledge over mere true belief. Should a theory of knowledge fail to do so, it would prove inadequate.

One of the more influential responses to the problem is that knowledge is not particularly valuable and is not what ought to be the main focus of epistemology. Instead, epistemologists ought to focus on other mental states, such as understanding. Advocates of virtue epistemology have argued that the value of knowledge comes from an internal relationship between the knower and the mental state of believing.

Acquiring knowledge

Sources of knowledge

There are many proposed sources of knowledge and justified belief which we take to be actual sources of knowledge in our everyday lives. Some of the most commonly discussed include perception, reason, memory, and testimony.

Important distinctions

A priori–a posteriori distinction

As mentioned above, epistemologists draw a distinction between what can be known a priori (independently of experience) and what can only be known a posteriori (through experience). Much of what we call a priori knowledge is thought to be attained through reason alone, as featured prominently in rationalism. This might also include a non-rational faculty of intuition, as defended by proponents of innatism. In contrast, a posteriori knowledge is derived entirely through experience or as a result of experience, as emphasized in empiricism. This also includes cases where knowledge can be traced back to an earlier experience, as in memory or testimony.

One way to see the difference between the two is through an example. Bruce Russell gives two propositions and asks the reader to decide which one they believe more. Option A: All crows are birds. Option B: All crows are black. If you believe option A, then you are a priori justified in believing it, because you do not have to see a crow to know that it is a bird. If you believe option B, then you are a posteriori justified in believing it, because you have seen many crows and thereby know that they are black. He goes on to say that it does not matter whether the statement is true or not; what matters is only that you believe one or the other.

The idea of a priori knowledge is that it is based on intuition or rational insights. Laurence BonJour says in his article "The Structure of Empirical Knowledge" that a "rational insight is an immediate, non-inferential grasp, apprehension or 'seeing' that some proposition is necessarily true." (3) Going back to the crow example, by Laurence BonJour's definition the reason you would believe option A is that you have an immediate knowledge that a crow is a bird, without ever experiencing one.

Evolutionary psychology takes a novel approach to the problem. It says that there is an innate predisposition for certain types of learning. "Only small parts of the brain resemble a tabula rasa; this is true even for human beings. The remainder is more like an exposed negative waiting to be dipped into a developer fluid".

Analytic–synthetic distinction

Immanuel Kant, in his Critique of Pure Reason, drew a distinction between "analytic" and "synthetic" propositions. He contended that some propositions are such that we can know they are true just by understanding their meaning. For example, consider, "My father's brother is my uncle." We can know it is true solely by virtue of our understanding of what its terms mean. Philosophers call such propositions "analytic". Synthetic propositions, on the other hand, have distinct subjects and predicates. An example would be, "My father's brother has black hair." Kant stated that all mathematical and scientific statements are synthetic a priori propositions because they are necessarily true, yet our knowledge of the attributes of their mathematical or physical subjects can be obtained only by logical inference.

While this distinction is first and foremost about meaning and is therefore most relevant to the philosophy of language, the distinction has significant epistemological consequences, seen most prominently in the works of the logical positivists. In particular, if the set of propositions which can only be known a posteriori is coextensive with the set of propositions which are synthetically true, and if the set of propositions which can be known a priori is coextensive with the set of propositions which are analytically true (or in other words, which are true by definition), then there can only be two kinds of successful inquiry: Logico-mathematical inquiry, which investigates what is true by definition, and empirical inquiry, which investigates what is true in the world. Most notably, this would exclude the possibility that branches of philosophy like metaphysics could ever provide informative accounts of what actually exists.

The American philosopher Willard Van Orman Quine, in his paper "Two Dogmas of Empiricism", famously challenged the analytic-synthetic distinction, arguing that the boundary between the two is too blurry to provide a clear division between propositions that are true by definition and propositions that are not. While some contemporary philosophers take themselves to have offered more sustainable accounts of the distinction that are not vulnerable to Quine's objections, there is no consensus about whether or not these succeed.

Science as knowledge acquisition

Science is often considered to be a refined, formalized, systematic, institutionalized form of the pursuit and acquisition of empirical knowledge. As such, the philosophy of science may be viewed as an application of the principles of epistemology and as a foundation for epistemological inquiry.

The regress problem

The regress problem (also known as Agrippa's Trilemma) is the problem of providing a complete logical foundation for human knowledge. The traditional way of supporting a rational argument is to appeal to other rational arguments, typically using chains of reason and rules of logic. A classic example that goes back to Aristotle is deducing that Socrates is mortal. We have a logical rule that says "All humans are mortal" and an assertion that "Socrates is human", and we deduce that Socrates is mortal. In this example, how do we know that Socrates is human? Presumably we apply other rules, such as "All born from human females are human", which then leaves open the question of how we know that all born from humans are human. This is the regress problem: how can we eventually terminate a logical argument with some statements that do not require further justification but can still be considered rational and justified? As John Pollock stated:

... to justify a belief one must appeal to a further justified belief. This means that one of two things can be the case. Either there are some beliefs that we can be justified for holding, without being able to justify them on the basis of any other belief, or else for each justified belief there is an infinite regress of (potential) justification [the nebula theory]. On this theory there is no rock bottom of justification. Justification just meanders in and out through our network of beliefs, stopping nowhere.

The apparent impossibility of completing an infinite chain of reasoning is thought by some to support skepticism. It is also the impetus for Descartes's famous dictum: I think, therefore I am. Descartes was looking for some logical statement that could be true without appeal to other statements.
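As a rough illustration of the regress described above, the chain of reasons behind a belief can be followed step by step until it stops at a belief with no further support, loops back on itself, or shows no sign of ending. The following Python sketch is only a toy model. The function name trace_justification, the simplification that each belief has at most one supporting reason, and the step limit are all assumptions made for illustration and are not drawn from any philosopher's account.

```python
from typing import Callable, Optional


def trace_justification(
    belief: str,
    reason_for: Callable[[str], Optional[str]],
    max_steps: int = 50,
) -> str:
    """Follow one chain of reasons and report how it ends (toy model)."""
    seen = set()
    current = belief
    for _ in range(max_steps):
        if current in seen:
            # The chain has returned to a belief it already relied on.
            return "circular"
        seen.add(current)
        reason = reason_for(current)
        if reason is None:
            # No further reason is offered for this belief.
            return "unsupported"
        current = reason
    # No end was found within the step limit (a stand-in for an endless chain).
    return "unending"


# Hypothetical chain based on the Socrates example; the last belief
# has no further reason recorded, so dict.get returns None for it.
socrates_chain = {
    "Socrates is mortal": "Socrates is human",
    "Socrates is human": "All born from human females are human",
}

print(trace_justification("Socrates is mortal", socrates_chain.get))  # unsupported
print(trace_justification("A", {"A": "B", "B": "A"}.get))             # circular
print(trace_justification("p", lambda b: "reason for " + b))          # unending
```

The three possible results loosely correspond to the three options usually associated with Agrippa's trilemma: stopping at an unsupported belief, reasoning in a circle, or continuing without end.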

Responses to the regress problem

Many epistemologists studying justification have attempted to argue for various types of chains of reasoning that can escape the regress problem.

Foundationalism

Foundationalists respond to the regress problem by asserting that certain "foundations" or "basic beliefs" support other beliefs but do not themselves require justification from other beliefs. These beliefs might be justified because they are self-evident, infallible, or derive from reliable cognitive mechanisms. Perception, memory, and a priori intuition are often considered possible examples of basic beliefs.

The chief criticism of foundationalism is that if a belief is not supported by other beliefs, accepting it may be arbitrary or unjustified.

Coherentism

Another response to the regress problem is coherentism, which rejects the assumption that the regress proceeds according to a pattern of linear justification. To avoid the charge of vicious circularity, coherentists hold that an individual belief is justified not by a single chain of reasons but by the way it fits together (coheres) with the rest of the belief system of which it is a part. This theory has the advantage of avoiding the infinite regress without claiming special, possibly arbitrary status for some particular class of beliefs. Yet, since a system can be coherent while also being wrong, coherentists face the difficulty of ensuring that the whole system corresponds to reality. Additionally, most logicians agree that any argument that is circular is, at best, only trivially valid. That is, to be illuminating, arguments must operate with information from multiple premises, not simply conclude by reiterating a premise.

Nigel Warburton writes in Thinking from A to Z that "[c]ircular arguments are not invalid; in other words, from a logical point of view there is nothing intrinsically wrong with them. However, they are, when viciously circular, spectacularly uninformative."

Infinitism

An alternative resolution to the regress problem is known as "infinitism". Infinitists take the infinite series to be merely potential, in the sense that an individual may have indefinitely many reasons available to them without having consciously thought through all of them; further reasons can be supplied when the need arises. This position is motivated in part by the desire to avoid what is seen as the arbitrariness and circularity of its chief competitors, foundationalism and coherentism. The most prominent defense of infinitism has been given by Peter Klein.

Foundherentism

An intermediate position, known as "foundherentism", is advanced by Susan Haack. Foundherentism is meant to unify foundationalism and coherentism. Haack explains the view by using a crossword puzzle as an analogy. Whereas, for example, infinitists regard the regress of reasons as taking the form of a single line that continues indefinitely, Haack has argued that chains of properly justified beliefs look more like a crossword puzzle, with various different lines mutually supporting each other. Thus, Haack's view leaves room for both chains of beliefs that are "vertical" (terminating in foundational beliefs) and chains that are "horizontal" (deriving their justification from coherence with beliefs that are also members of foundationalist chains of belief).

Schools of thought in epistemology

Empiricism

Empiricism is a view in the theory of knowledge which focuses on the role of experience, especially experience based on perceptual observations by the senses, in the generation of knowledge. Certain forms exempt disciplines such as mathematics and logic from these requirements.

There are many variants of empiricism, including British empiricism, logical empiricism, phenomenalism, and some versions of common sense philosophy. Most forms of empiricism give epistemologically privileged status to sensory impressions or sense data, although this plays out very differently in different cases. Some of the most famous historical empiricists include John Locke, David Hume, George Berkeley, Francis Bacon, John Stuart Mill, Rudolf Carnap, and Bertrand Russell.

Rationalism

Rationalism is the epistemological view that reason is the chief source of knowledge and the main determinant of what constitutes knowledge. More broadly, it can also refer to any view which appeals to reason as a source of knowledge or justification. Rationalism is one of the two classical views in epistemology, the other being empiricism. Rationalists claim that the mind, through the use of reason, can directly grasp certain truths in various domains, including logic, mathematics, ethics, and metaphysics. Rationalist views can range from modest views in mathematics and logic (such as that of Gottlob Frege) to ambitious metaphysical systems (such as that of Baruch Spinoza).

Some of the most famous rationalists include Plato, René Descartes, Baruch Spinoza, and Gottfried Leibniz.

Skepticism

Skepticism is a position that questions the possibility of human knowledge, either in particular domains or on a general level. Skepticism does not refer to any one specific school of philosophy, but is rather a thread that runs through many epistemological debates. Ancient Greek skepticism began during the Hellenistic period in philosophy, which featured both Pyrrhonism (notably defended by Pyrrho, Sextus Empiricus, and Aenesidemus) and Academic skepticism (notably defended by Arcesilaus and Carneades). Among ancient Indian philosophers, skepticism was notably defended by the Ajñana school and in the Buddhist Madhyamika tradition. In modern philosophy, René Descartes' famous inquiry into mind and body began as an exercise in skepticism, in which he started by trying to doubt all purported cases of knowledge in order to search for something that was known with absolute certainty.

Epistemic skepticism questions whether knowledge is possible at all. Generally speaking, skeptics argue that knowledge requires certainty, and that most or all of our beliefs are fallible (meaning that our grounds for holding them always, or almost always, fall short of certainty), which would together entail that knowledge is always or almost always impossible for us. Characterizing knowledge as strong or weak is dependent on a person's viewpoint and their characterization of knowledge. Much of modern epistemology is derived from attempts to better understand and address philosophical skepticism.

Pyrrhonism

One of the oldest forms of epistemic skepticism can be found in Agrippa's trilemma (named after the Pyrrhonist philosopher Agrippa the Skeptic), which demonstrates that certainty cannot be achieved with regard to beliefs. Pyrrhonism dates back to Pyrrho of Elis from the 4th century BCE, although most of what we know about Pyrrhonism today is from the surviving works of Sextus Empiricus. Pyrrhonists claim that for any argument for a non-evident proposition, an equally convincing argument for a contradictory proposition can be produced. Pyrrhonists do not dogmatically deny the possibility of knowledge, but instead point out that beliefs about non-evident matters cannot be substantiated.

Cartesian skepticism

The Cartesian evil demon problem, first raised by René Descartes, supposes that our sensory impressions may be controlled by some external power rather than the result of ordinary veridical perception. In such a scenario, nothing we sense would actually exist, but would instead be mere illusion. As a result, we would never be able to know anything about the world, since we would be systematically deceived about everything. The conclusion often drawn from evil demon skepticism is that even if we are not completely deceived, all of the information provided by our senses is still compatible with skeptical scenarios in which we are completely deceived, and that we must therefore either be able to exclude the possibility of deception or else must deny the possibility of infallible knowledge (that is, knowledge which is completely certain) beyond our immediate sensory impressions. While the view that no beliefs are beyond doubt other than our immediate sensory impressions is often ascribed to Descartes, he in fact thought that we can exclude the possibility that we are systematically deceived, although his reasons for thinking this are based on a highly contentious ontological argument for the existence of a benevolent God who would not allow such deception to occur.

Responses to philosophical skepticism

Epistemological skepticism can be classified as either "mitigated" or "unmitigated" skepticism. Mitigated skepticism rejects "strong" or "strict" knowledge claims but does approve weaker ones, which can be considered "virtual knowledge", but only with regard to justified beliefs. Unmitigated skepticism rejects claims of both virtual and strong knowledge. Characterizing knowledge as strong, weak, virtual or genuine can be determined differently depending on a person's viewpoint as well as their characterization of knowledge. Some of the most notable attempts to respond to unmitigated skepticism include direct realism, disjunctivism, common sense philosophy, pragmatism, fideism, and fictionalism.

Pragmatism

Pragmatism is a fallibilist epistemology that emphasizes the role of action in knowing. Different interpretations of pragmatism variously emphasize: truth as the final outcome of ideal scientific inquiry and experimentation, truth as closely related to usefulness, experience as transacting with (instead of representing) nature, and human practices as the foundation of language. Pragmatism's origins are often attributed to Charles Sanders Peirce, William James, and John Dewey. In 1878, Peirce formulated the maxim: "Consider what effects, that might conceivably have practical bearings, we conceive the object of our conception to have. Then, our conception of these effects is the whole of our conception of the object."

William James suggested that through a pragmatist epistemology, theories "become instruments, not answers to enigmas in which we can rest". In James's pragmatic method, which he adapted from Peirce, metaphysical disputes can be settled by tracing the practical consequences of the different sides of the argument. If this process does not resolve the dispute, then "the dispute is idle".

Contemporary versions of pragmatism have been developed by thinkers such as Richard Rorty and Hilary Putnam. Rorty proposed that values were historically contingent and dependent upon their utility within a given historical period. Contemporary philosophers working in pragmatism are called neopragmatists, and also include Nicholas Rescher, Robert Brandom, Susan Haack, and Cornel West.

Naturalized epistemology

In certain respects an intellectual descendant of pragmatism, naturalized epistemology considers the evolutionary role of knowledge for agents living and evolving in the world. It de-emphasizes the questions around justification and truth, and instead asks, empirically, how reliable beliefs are formed and the role that evolution played in the development of such processes. It suggests a more empirical approach to the subject as a whole, leaving behind philosophical definitions and consistency arguments, and instead using psychological methods to study and understand how "knowledge" is actually formed and is used in the natural world. As such, it does not attempt to answer the analytic questions of traditional epistemology, but rather replace them with new empirical ones.

Naturalized epistemology was first proposed in "Epistemology Naturalized", a seminal paper by W.V.O. Quine. A less radical view has been defended by Hilary Kornblith in Knowledge and its Place in Nature, in which he seeks to turn epistemology towards empirical investigation without completely abandoning traditional epistemic concepts.

Epistemic relativism

Epistemic relativism is the view that what is true, rational, or justified for one person need not be true, rational, or justified for another person. Epistemic relativists therefore assert that while there are relative facts about truth, rationality, justification, and so on, there is no perspective-independent fact of the matter. Note that this is distinct from epistemic contextualism, which holds that the meaning of epistemic terms varies across contexts (e.g. "I know" might mean something different in everyday contexts and skeptical contexts). In contrast, epistemic relativism holds that the relevant facts vary, not just linguistic meaning. Relativism about truth may also be a form of ontological relativism, insofar as relativists about truth hold that facts about what exists vary based on perspective.

Epistemic constructivism

Constructivism is a view in philosophy according to which all "knowledge is a compilation of human-made constructions", "not the neutral discovery of an objective truth". Whereas objectivism is concerned with the "object of our knowledge", constructivism emphasizes "how we construct knowledge". Constructivism proposes new definitions for knowledge and truth, which emphasize intersubjectivity rather than objectivity, and viability rather than truth. The constructivist point of view is in many ways comparable to certain forms of pragmatism.

Epistemic idealism

Idealism is a broad term referring to both an ontological view about the world being in some sense mind-dependent and a corresponding epistemological view that everything people know can be reduced to mental phenomena. First and foremost, "idealism" is a metaphysical doctrine. As an epistemological doctrine, idealism shares a great deal with both empiricism and rationalism. Some of the most famous empiricists have been classified as idealists (particularly Berkeley), and yet the subjectivism inherent to idealism also resembles that of Descartes in many respects. Many idealists believe that knowledge is primarily (at least in some areas) acquired by a priori processes, or that it is innate—for example, in the form of concepts not derived from experience. The relevant theoretical concepts may purportedly be part of the structure of the human mind (as in Kant's theory of transcendental idealism), or they may be said to exist independently of the mind (as in Plato's theory of Forms).

Some of the most famous forms of idealism include transcendental idealism (developed by Immanuel Kant), subjective idealism (developed by George Berkeley), and absolute idealism (developed by Georg Wilhelm Friedrich Hegel and Friedrich Schelling).

Bayesian epistemology

Bayesian epistemology is a formal approach to various topics in epistemology that has its roots in Thomas Bayes' work in the field of probability theory. One advantage of its formal method in contrast to traditional epistemology is that its concepts and theorems can be defined with a high degree of precision. It is based on the idea that beliefs can be interpreted as subjective probabilities. As such, they are subject to the laws of probability theory, which act as the norms of rationality. These norms can be divided into static constraints, governing the rationality of beliefs at any moment, and dynamic constraints, governing how rational agents should change their beliefs upon receiving new evidence. The most characteristic Bayesian expression of these principles is found in the form of Dutch books, which illustrate irrationality in agents through a series of bets that lead to a loss for the agent no matter which of the probabilistic events occurs. Bayesians have applied these fundamental principles to various epistemological topics but Bayesianism does not cover all topics of traditional epistemology.
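The two kinds of constraint can be made concrete with a small numerical sketch. The Python below is illustrative only: the function names conditionalize and sure_loss, the example numbers, and the simple two-bet setup are assumptions introduced here. The first function applies Bayes' theorem as an example of a dynamic constraint (updating a belief on new evidence). The second shows a very simple Dutch book against an agent whose credences in a proposition and its negation do not sum to 1, which violates a static constraint.

```python
def conditionalize(prior_h: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return the updated credence in H after learning E, via Bayes' theorem."""
    # Total probability of the evidence under the agent's prior credences.
    p_e = p_e_given_h * prior_h + p_e_given_not_h * (1.0 - prior_h)
    return p_e_given_h * prior_h / p_e


def sure_loss(credence_a: float, credence_not_a: float, stake: float = 1.0) -> float:
    """Return the guaranteed loss of an agent who prices bets by these credences.

    Each bet pays `stake` if it wins, and the agent treats credence * stake
    as a fair price for it.
    If the credences sum to more than 1, the agent buys both bets for more
    than the single payout that one of them is certain to deliver.
    If they sum to less than 1, the agent sells both bets for less than the
    single payout that must be made.
    Either way the loss is the same whether or not A turns out true.
    """
    total = credence_a + credence_not_a
    return abs(total - 1.0) * stake


# Dynamic constraint: a prior of 0.5 in H, with evidence four times as
# likely under H as under not-H, yields a posterior of about 0.8.
print(conditionalize(0.5, 0.8, 0.2))

# Static constraint: credences of 0.6 in A and 0.6 in not-A sum to 1.2,
# so the agent is guaranteed to lose about 0.2 per unit stake.
print(sure_loss(0.6, 0.6))
```

When the credences in a proposition and its negation sum to exactly 1, sure_loss returns a value of essentially zero, reflecting the fact that this particular pair of bets cannot be turned against the agent.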

Feminist epistemology

Feminist epistemology is a subfield of epistemology which applies feminist theory to epistemological questions. It began to emerge as a distinct subfield in the 20th century. Prominent feminist epistemologists include Miranda Fricker (who developed the concept of epistemic injustice), Donna Haraway (who first proposed the concept of situated knowledge), Sandra Harding, and Elizabeth Anderson. Harding proposes that feminist epistemology can be broken into three distinct categories: feminist empiricism, standpoint epistemology, and postmodern epistemology.

Feminist epistemology has also played a significant role in the development of many debates in social epistemology.

Decolonial epistemology

Epistemicide is a term used in decolonisation studies to describe the killing of knowledge systems under systemic oppression such as colonisation and slavery. The term was coined by Boaventura de Sousa Santos, who highlighted the role of such physical violence in centering Western knowledge in the current world. The term challenges prevailing assumptions about what counts as knowledge in academia today.

Indian pramana

Indian schools of philosophy, such as the Hindu Nyaya and Carvaka schools, and the Jain and Buddhist philosophical schools, developed an epistemological tradition independently of the Western philosophical tradition called "pramana". Pramana can be translated as "instrument of knowledge" and refers to various means or sources of knowledge that Indian philosophers held to be reliable. Each school of Indian philosophy had its own theories about which pramanas were valid means to knowledge and which were unreliable (and why). A Vedic text, Taittirīya Āraṇyaka (c. 9th–6th centuries BCE), lists "four means of attaining correct knowledge": smṛti ("tradition" or "scripture"), pratyakṣa ("perception"), aitihya ("communication by one who is expert", or "tradition"), and anumāna ("reasoning" or "inference").

In the Indian traditions, the most widely discussed pramanas are: Pratyakṣa (perception), Anumāna (inference), Upamāna (comparison and analogy), Arthāpatti (postulation, derivation from circumstances), Anupalabdhi (non-perception, negative/cognitive proof) and Śabda (word, testimony of past or present reliable experts). While the Nyaya school (beginning with the Nyāya Sūtras of Gotama, between the 6th century BCE and the 2nd century CE) was a proponent of realism and supported four pramanas (perception, inference, comparison/analogy and testimony), the Buddhist epistemologists (Dignaga and Dharmakirti) generally accepted only perception and inference. The Carvaka school of materialists accepted only the pramana of perception, and hence were among the first empiricists in the Indian traditions. Another school, the Ajñana, included notable proponents of philosophical skepticism.

The theory of knowledge of the Buddha in the early Buddhist texts has been interpreted as a form of pragmatism as well as a form of correspondence theory. Likewise, the Buddhist philosopher Dharmakirti has been interpreted as holding either a form of pragmatism or a correspondence theory, on account of his view that what is true is what has effective power (arthakriya). The Buddhist Madhyamika school's theory of emptiness (shunyata), meanwhile, has been interpreted as a form of philosophical skepticism.

The main contribution to epistemology by the Jains has been their theory of "many-sidedness" or "multi-perspectivism" (Anekantavada), which says that since the world is multifaceted, any single viewpoint is limited (naya – a partial standpoint). This has been interpreted as a kind of pluralism or perspectivism. According to Jain epistemology, none of the pramanas gives absolute or perfect knowledge since they are each limited points of view.

Domains of inquiry in epistemology

Formal epistemology

Formal epistemology uses formal tools and methods from decision theory, logic, probability theory and computability theory to model and reason about issues of epistemological interest. Work in this area spans several academic fields, including philosophy, computer science, economics, and statistics. The focus of formal epistemology has tended to differ somewhat from that of traditional epistemology, with topics like uncertainty, induction, and belief revision garnering more attention than the analysis of knowledge, skepticism, and issues with justification.

Historical epistemology

Historical epistemology is the study of the historical conditions of, and changes in, different kinds of knowledge. There are many versions of or approaches to historical epistemology, which is different from history of epistemology. Twentieth-century French historical epistemologists like Abel Rey, Gaston Bachelard, Jean Cavaillès, and Georges Canguilhem focused specifically on changes in scientific discourse.

Metaepistemology

Metaepistemology is the metaphilosophical study of the methods, aims, and subject matter of epistemology. In general, metaepistemology aims to better understand our first-order epistemological inquiry. Some goals of metaepistemology are identifying inaccurate assumptions made in epistemological debates and determining whether the questions asked in mainline epistemology are the right epistemological questions to be asking.

Social epistemology

Social epistemology deals with questions about knowledge in contexts where our knowledge attributions cannot be explained by simply examining individuals in isolation from one another, meaning that the scope of our knowledge attributions must be widened to include broader social contexts. It also explores the ways in which interpersonal beliefs can be justified in social contexts. The most common topics discussed in contemporary social epistemology are testimony, which deals with the conditions under which a belief "x is true" that resulted from being told "x is true" constitutes knowledge; peer disagreement, which deals with when and how one should revise one's beliefs in light of other people holding beliefs that contradict one's own; and group epistemology, which deals with what it means to attribute knowledge to groups rather than individuals, and when group knowledge attributions are appropriate.