From Wikipedia, the free encyclopedia

Alzheimer's disease (AD), also known as Alzheimer disease or just Alzheimer's, accounts for 60% to 70% of cases of dementia.[1][2] It is a chronic neurodegenerative disease that usually starts slowly and gets worse over time.[1][2] The most common early symptom is difficulty in remembering recent events (short-term memory loss).[1] As the disease advances, symptoms can include problems with language, disorientation (including easily getting lost), mood swings, loss of motivation, not managing self-care, and behavioural issues.[2][1] As a person's condition declines, they often withdraw from family and society.[1] Gradually, bodily functions are lost, ultimately leading to death.[3] Although the speed of progression can vary, the average life expectancy following diagnosis is three to nine years.[4][5]

The cause of Alzheimer's disease is poorly understood.[1] About 70% of the risk is believed to be genetic, with many genes usually involved.[6] Other risk factors include a history of head injuries, depression or hypertension.[1] The disease process is associated with plaques and tangles in the brain.[6] A probable diagnosis is based on the history of the illness and cognitive testing, with medical imaging and blood tests used to rule out other possible causes.[7] Initial symptoms are often mistaken for normal ageing.[1] Examination of brain tissue is needed for a definite diagnosis.[6] Mental and physical exercise and avoiding obesity may decrease the risk of AD.[6] There are no medications or supplements with evidence to support their use for decreasing the risk.[8]

No treatments stop or reverse its progression, though some may temporarily improve symptoms.[2] Affected people increasingly rely on others for assistance, often placing a burden on the caregiver; the pressures can include social, psychological, physical, and economic elements.[9] Exercise programs are beneficial with respect to activities of daily living and potentially improve outcomes.[10] Treatment of behavioural problems or psychosis due to dementia with antipsychotics is common but not usually recommended, as there is often little benefit and an increased risk of early death.[11][12]

In 2010, there were between 21 and 35 million people worldwide with AD.[4][2] It most often begins in people over 65 years of age, although 4% to 5% of cases are early-onset Alzheimer's, which begins before this age.[13] It affects about 6% of people 65 years and older.[1] In 2010, dementia resulted in about 486,000 deaths.[14] It was first described by, and later named after, German psychiatrist and pathologist Alois Alzheimer in 1906.[15] In developed countries, AD is one of the most financially costly diseases.[16][17]

Characteristics

Stages of Alzheimer's disease

- Effects of ageing on memory but not AD
  - Forgetting things occasionally[18]
  - Misplacing items sometimes[18]
  - Minor short-term memory loss[18]
  - Forgetting that memory lapses happened[18]
- Early stage Alzheimer's
  - Absent-mindedness[18]
  - Forgetting appointments[18]
  - Slight changes seen by close loved ones[18]
  - Some confusion in situations outside the familiar[18]
- Middle stage Alzheimer's
  - Deeper difficulty remembering recently learned information[18]
  - Deepening confusion in many circumstances[18]
  - Speech impairment[18]
  - Repeatedly initiating the same conversation[18]
- Late stage Alzheimer's
  - More aggressive or passive[18]
  - Some loss of self-awareness[18]
  - Debilitating cognitive deficit[18]
  - More abusive, anxious, or paranoid[18]

Pre-dementia

The first symptoms are often mistakenly attributed to ageing or stress.[19] Detailed neuropsychological testing can reveal mild cognitive difficulties up to eight years before a person fulfills the clinical criteria for diagnosis of AD.[20] These early symptoms can affect the most complex daily living activities.[21] The most noticeable deficit is short-term memory loss, which shows up as difficulty in remembering recently learned facts and an inability to acquire new information.[20][22] Subtle problems with the executive functions of attentiveness, planning, flexibility, and abstract thinking, or impairments in semantic memory (memory of meanings and concept relationships), can also be symptomatic of the early stages of AD.[20] Apathy can be observed at this stage, and remains the most persistent neuropsychiatric symptom throughout the course of the disease.[23] Depressive symptoms, irritability and reduced awareness of subtle memory difficulties are also common.[24] The preclinical stage of the disease has also been termed mild cognitive impairment (MCI).[22] This is often found to be a transitional stage between normal ageing and dementia. MCI can present with a variety of symptoms, and when memory loss is the predominant symptom it is termed "amnestic MCI" and is frequently seen as a prodromal stage of Alzheimer's disease.[25]

Early

In people with AD, the increasing impairment of learning and memory eventually leads to a definitive diagnosis. In a small percentage, difficulties with language, executive functions, perception (agnosia), or execution of movements (apraxia) are more prominent than memory problems.[26] AD does not affect all memory capacities equally. Older memories of the person's life (episodic memory), facts learned (semantic memory), and implicit memory (the body's memory of how to do things, such as using a fork to eat) are affected to a lesser degree than new facts or memories.[27][28]

Language problems are mainly characterised by a shrinking vocabulary and decreased word fluency, which lead to a general impoverishment of oral and written language.[26][29] In this stage, the person with Alzheimer's is usually capable of communicating basic ideas adequately.[26][29][30] While performing fine motor tasks such as writing, drawing or dressing, certain movement coordination and planning difficulties (apraxia) may be present, but they commonly go unnoticed.[26] As the disease progresses, people with AD can often continue to perform many tasks independently, but may need assistance or supervision with the most cognitively demanding activities.[26]

Moderate

Progressive deterioration eventually hinders independence, with people becoming unable to perform most common activities of daily living.[26] Speech difficulties become evident due to an inability to recall vocabulary, which leads to frequent incorrect word substitutions (paraphasias). Reading and writing skills are also progressively lost.[26][30] Complex motor sequences become less coordinated as time passes and AD progresses, so the risk of falling increases.[26] During this phase, memory problems worsen, and the person may fail to recognise close relatives.[26] Long-term memory, which was previously intact, becomes impaired.[26]

Behavioural and neuropsychiatric changes become more prevalent. Common manifestations are wandering, irritability and labile affect, leading to crying, outbursts of unpremeditated aggression, or resistance to caregiving.[26] Sundowning can also appear.[31] Approximately 30% of people with AD develop illusionary misidentifications and other delusional symptoms.[26] People with AD also lose insight into their disease process and limitations (anosognosia).[26] Urinary incontinence can develop.[26] These symptoms create stress for relatives and carers, which can be reduced by moving the person from home care to other long-term care facilities.[26][32]

Advanced

During the final stages, the patient is completely dependent upon caregivers.[26] Language is reduced to simple phrases or even single words, eventually leading to complete loss of speech.[26][30] Despite the loss of verbal language abilities, people can often understand and return emotional signals. Although aggressiveness can still be present, extreme apathy and exhaustion are much more common symptoms. People with Alzheimer's disease will ultimately not be able to perform even the simplest tasks independently; muscle mass and mobility deteriorate to the point where they are bedridden and unable to feed themselves. The cause of death is usually an external factor, such as infection of pressure ulcers or pneumonia, not the disease itself.[26]

Cause

The cause of most Alzheimer's cases is still largely unknown, except for the 1% to 5% of cases in which genetic differences have been identified.[33] Several competing hypotheses attempt to explain the cause of the disease:

Genetics

The genetic heritability of Alzheimer's disease (and memory components thereof), based on reviews of twin and family studies, ranges from 49% to 79%.[34][35] Around 0.1% of the cases are familial forms of autosomal (not sex-linked) dominant inheritance, which have an onset before age 65.[36] This form of the disease is known as early-onset familial Alzheimer's disease. Most cases of autosomal dominant familial AD can be attributed to mutations in one of three genes: those encoding amyloid precursor protein (APP) and presenilins 1 and 2.[37] Most mutations in the APP and presenilin genes increase the production of a small protein called Aβ42, which is the main component of senile plaques.[38] Some of the mutations merely alter the ratio between Aβ42 and the other major forms (e.g., Aβ40) without increasing Aβ42 levels.[38][39] This suggests that presenilin mutations can cause disease even if they lower the total amount of Aβ produced, and may point to other roles of presenilin or a role for alterations in the function of APP and/or its fragments other than Aβ. Some variants of the APP gene are protective.[40]

Most cases of Alzheimer's disease do not exhibit autosomal-dominant inheritance and are termed sporadic AD, in which environmental and genetic differences may act as risk factors. The best-known genetic risk factor is the inheritance of the ε4 allele of the apolipoprotein E gene (APOE).[41][42] Between 40% and 80% of people with AD possess at least one APOEε4 allele.[42] The APOEε4 allele increases the risk of the disease by three times in heterozygotes and by 15 times in homozygotes.[36] As with many human diseases, environmental effects and genetic modifiers result in incomplete penetrance. For example, certain Nigerian populations do not show the relationship between dose of APOEε4 and incidence or age of onset for Alzheimer's disease seen in other human populations.[43][44] Early attempts to screen up to 400 candidate genes for association with late-onset sporadic AD (LOAD) resulted in a low yield.[36][37] More recent genome-wide association studies (GWAS) have found 19 areas in genes that appear to affect the risk.[45] These genes include CASS4, CELF1, FERMT2, HLA-DRB5, INPP5D, MEF2C, NME8, PTK2B, SORL1, ZCWPW1, SLC24A4, CLU, PICALM, CR1, BIN1, MS4A, ABCA7, EPHA1, and CD2AP.[45]

Mutations in the TREM2 gene have been associated with a 3 to 5 times higher risk of developing Alzheimer's disease.[46][47] A suggested mechanism of action is that when TREM2 is mutated, white blood cells in the brain are no longer able to control the amount of beta amyloid present.

Cholinergic hypothesis

The oldest hypothesis, on which most currently available drug therapies are based, is the cholinergic hypothesis,[48] which proposes that AD is caused by reduced synthesis of the neurotransmitter acetylcholine. The cholinergic hypothesis has not maintained widespread support, largely because medications intended to treat acetylcholine deficiency have not been very effective. Other cholinergic effects have also been proposed, for example, initiation of large-scale aggregation of amyloid,[49] leading to generalised neuroinflammation.[50]

Amyloid hypothesis

In 1991, the amyloid hypothesis postulated that extracellular amyloid beta (Aβ) deposits are the fundamental cause of the disease.[51][52] Support for this postulate comes from the location of the gene for the amyloid precursor protein (APP) on chromosome 21, together with the fact that people with trisomy 21 (Down syndrome), who have an extra gene copy, almost universally exhibit AD by 40 years of age.[53][54] Also, a specific isoform of apolipoprotein, APOE4, is a major genetic risk factor for AD. Whilst apolipoproteins enhance the breakdown of beta amyloid, some isoforms (such as APOE4) are not very effective at this task, leading to excess amyloid buildup in the brain.[55] Further evidence comes from the finding that transgenic mice that express a mutant form of the human APP gene develop fibrillar amyloid plaques and Alzheimer's-like brain pathology with spatial learning deficits.[56]

An experimental vaccine was found to clear the amyloid plaques in early human trials, but it did not have any significant effect on dementia.[57] This has led researchers to suspect that non-plaque Aβ oligomers (aggregates of many monomers) are the primary pathogenic form of Aβ. These toxic oligomers, also referred to as amyloid-derived diffusible ligands (ADDLs), bind to a surface receptor on neurons and change the structure of the synapse, thereby disrupting neuronal communication.[58] One receptor for Aβ oligomers may be the prion protein, the same protein that has been linked to mad cow disease and the related human condition, Creutzfeldt–Jakob disease, thus potentially linking the underlying mechanism of these neurodegenerative disorders with that of Alzheimer's disease.[59]

In 2009, this theory was updated, suggesting that a close relative of the beta-amyloid protein, and not necessarily the beta-amyloid itself, may be a major culprit in the disease. The theory holds that an amyloid-related mechanism that prunes neuronal connections in the brain in the fast-growth phase of early life may be triggered by ageing-related processes in later life to cause the neuronal withering of Alzheimer's disease.[60] N-APP, a fragment of APP from the peptide's N-terminus, is adjacent to beta-amyloid and is cleaved from APP by one of the same enzymes. N-APP triggers the self-destruct pathway by binding to a neuronal receptor called death receptor 6 (DR6, also known as TNFRSF21).[60] DR6 is highly expressed in the human brain regions most affected by Alzheimer's, so it is possible that the N-APP/DR6 pathway might be hijacked in the ageing brain to cause damage. In this model, beta-amyloid plays a complementary role, by depressing synaptic function.

Tau hypothesis

The tau hypothesis proposes that tau protein abnormalities initiate the disease cascade.[52] In this model, hyperphosphorylated tau begins to pair with other threads of tau. Eventually, they form neurofibrillary tangles inside nerve cell bodies.[61] When this occurs, the microtubules disintegrate, destroying the structure of the cell's cytoskeleton, which collapses the neuron's transport system.[62] This may result first in malfunctions in biochemical communication between neurons and later in the death of the cells.[63]

Other hypotheses

Herpes simplex virus type 1 has been proposed to play a causative role in people carrying the susceptible versions of the apoE gene.[64] The cellular homeostasis of ionic copper, iron, and zinc is disrupted in AD, though it remains unclear whether this is produced by or causes the changes in proteins. These ions affect and are affected by tau, APP, and APOE.[65] Some studies have shown an increased risk of developing AD with environmental factors such as the intake of metals, particularly aluminium.[66] The quality of some of these studies has been criticised,[67] and other studies have concluded that there is no relationship between these environmental factors and the development of AD.[68] Some have hypothesised that dietary copper may play a causal role.[69]

While some studies suggest that extremely low frequency electromagnetic fields may increase the risk for Alzheimer's disease,[70] reviewers found that further epidemiological and laboratory investigations of this hypothesis are needed.[71] Smoking is a significant AD risk factor.[72] Systemic markers of the innate immune system are risk factors for late-onset AD.[73]

Another hypothesis asserts that the disease may be caused by age-related myelin breakdown in the brain. Iron released during myelin breakdown is hypothesised to cause further damage. Homeostatic myelin repair processes contribute to the development of proteinaceous deposits such as beta-amyloid and tau.[74][75][76]

Oxidative stress and dyshomeostasis of biometal metabolism may be significant in the formation of the pathology.[77][78][79] From this point of view, low-molecular-weight antioxidants such as melatonin may be promising.[80]

People with AD show a 70% loss of the locus coeruleus cells that provide norepinephrine, which, in addition to its neurotransmitter role, diffuses locally from "varicosities" as an endogenous anti-inflammatory agent in the microenvironment around the neurons, glial cells, and blood vessels in the neocortex and hippocampus.[81] Norepinephrine has been shown to stimulate mouse microglia to suppress Aβ-induced production of cytokines and to phagocytose Aβ.[81] This suggests that degeneration of the locus coeruleus might be responsible for increased Aβ deposition in AD brains.[81]

There is tentative evidence that exposure to air pollution may be a contributing factor to the development of Alzheimer's disease.[82]

Pathophysiology

Histopathologic image of senile plaques seen in the cerebral cortex of a person with Alzheimer's disease of presenile onset. Silver impregnation.

Neuropathology

Alzheimer's disease is characterised by loss of neurons and synapses in the cerebral cortex and certain subcortical regions. This loss results in gross atrophy of the affected regions, including degeneration in the temporal lobe and parietal lobe, and parts of the frontal cortex and cingulate gyrus.[50] Degeneration is also present in brainstem nuclei like the locus coeruleus.[83] Studies using MRI and PET have documented reductions in the size of specific brain regions in people with AD as they progressed from mild cognitive impairment to Alzheimer's disease, and in comparison with similar images from healthy older adults.[84][85]

Both amyloid plaques and neurofibrillary tangles are clearly visible by microscopy in brains of those afflicted by AD.[86] Plaques are dense, mostly insoluble deposits of beta-amyloid peptide and cellular material outside and around neurons. Tangles (neurofibrillary tangles) are aggregates of the microtubule-associated protein tau that has become hyperphosphorylated and accumulated inside the cells themselves. Although many older individuals develop some plaques and tangles as a consequence of ageing, the brains of people with AD have a greater number of them in specific brain regions such as the temporal lobe.[87] Lewy bodies are not rare in the brains of people with AD.[88]

Biochemistry

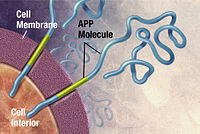

Alzheimer's disease has been identified as a protein misfolding disease (proteopathy), caused by the accumulation of abnormally folded amyloid beta protein and tau protein in the brain.[89] Plaques are made up of small peptides, 39–43 amino acids in length, called amyloid beta (Aβ). Aβ is a fragment from the larger amyloid precursor protein (APP). APP is a transmembrane protein that penetrates through the neuron's membrane. APP is critical to neuron growth, survival and post-injury repair.[90][91] In Alzheimer's disease, an unknown enzyme in a proteolytic process causes APP to be divided into smaller fragments.[92] One of these fragments gives rise to fibrils of amyloid beta, which then form clumps that deposit outside neurons in dense formations known as senile plaques.[86][93]

AD is also considered a tauopathy due to abnormal aggregation of the tau protein. Every neuron has a cytoskeleton, an internal support structure partly made up of structures called microtubules. These microtubules act like tracks, guiding nutrients and molecules from the body of the cell to the ends of the axon and back. A protein called tau stabilises the microtubules when phosphorylated, and is therefore called a microtubule-associated protein. In AD, tau undergoes chemical changes, becoming hyperphosphorylated; it then begins to pair with other threads, creating neurofibrillary tangles and disintegrating the neuron's transport system.[94]

Disease mechanism

Exactly how disturbances of production and aggregation of the beta-amyloid peptide give rise to the pathology of AD is not known.[95][96] The amyloid hypothesis traditionally points to the accumulation of beta-amyloid peptides as the central event triggering neuron degeneration. Accumulation of aggregated amyloid fibrils, which are believed to be the toxic form of the protein responsible for disrupting the cell's calcium ion homeostasis, induces programmed cell death (apoptosis).[97] It is also known that Aβ selectively builds up in the mitochondria in the cells of Alzheimer's-affected brains, and it also inhibits certain enzyme functions and the utilisation of glucose by neurons.[98]

Various inflammatory processes and cytokines may also have a role in the pathology of Alzheimer's disease. Inflammation is a general marker of tissue damage in any disease, and may be either secondary to tissue damage in AD or a marker of an immunological response.[99]

Alterations in the distribution of different neurotrophic factors and in the expression of their receptors such as the brain-derived neurotrophic factor (BDNF) have been described in AD.[100][101]

Diagnosis

Alzheimer's disease is usually diagnosed based on the person's medical history, history from relatives, and behavioural observations. The presence of characteristic neurological and neuropsychological features and the absence of alternative conditions are supportive.[102][103] Advanced medical imaging with computed tomography (CT) or magnetic resonance imaging (MRI), and with single-photon emission computed tomography (SPECT) or positron emission tomography (PET), can be used to help exclude other cerebral pathology or subtypes of dementia.[104] Moreover, imaging may predict conversion from prodromal stages (mild cognitive impairment) to Alzheimer's disease.[105]

Assessment of intellectual functioning including memory testing can further characterise the state of the disease.[19] Medical organisations have created diagnostic criteria to ease and standardise the diagnostic process for practising physicians. The diagnosis can be confirmed with very high accuracy post-mortem when brain material is available and can be examined histologically.[106]

Criteria

The National Institute of Neurological and Communicative Disorders and Stroke (NINCDS) and the Alzheimer's Disease and Related Disorders Association (ADRDA, now known as the Alzheimer's Association) established the most commonly used NINCDS-ADRDA Alzheimer's Criteria for diagnosis in 1984,[106] extensively updated in 2007.[107] These criteria require that the presence of cognitive impairment and a suspected dementia syndrome be confirmed by neuropsychological testing for a clinical diagnosis of possible or probable AD. A histopathologic confirmation including a microscopic examination of brain tissue is required for a definitive diagnosis. Good statistical reliability and validity have been shown between the diagnostic criteria and definitive histopathological confirmation.[108] Eight cognitive domains are most commonly impaired in AD: memory, language, perceptual skills, attention, constructive abilities, orientation, problem solving and functional abilities. These domains are equivalent to the NINCDS-ADRDA Alzheimer's Criteria as listed in the Diagnostic and Statistical Manual of Mental Disorders (DSM-IV-TR) published by the American Psychiatric Association.[109][110]

Techniques

Neuropsychological screening tests can help in the diagnosis of AD. In the tests, people are instructed to copy drawings similar to the one shown in the picture, remember words, read, and subtract serial numbers.

Neuropsychological tests such as the mini–mental state examination (MMSE) are widely used to evaluate the cognitive impairments needed for diagnosis. More comprehensive test arrays are necessary for high reliability of results, particularly in the earliest stages of the disease.[111][112] Neurological examination in early AD will usually provide normal results, except for obvious cognitive impairment, which may not differ from that resulting from other disease processes, including other causes of dementia.

Further neurological examinations are crucial in the differential diagnosis of AD and other diseases.[19] Interviews with family members are also utilised in the assessment of the disease. Caregivers can supply important information on the daily living abilities, as well as on the decrease, over time, of the person's mental function.[105] A caregiver's viewpoint is particularly important, since a person with AD is commonly unaware of their own deficits.[113] Families also often have difficulty detecting initial dementia symptoms and may not communicate accurate information to a physician.[114]

Supplemental testing provides extra information on some features of the disease or is used to rule out other diagnoses. Blood tests can identify causes of dementia other than AD,[19] which may, in rare cases, be reversible.[115] It is common to perform thyroid function tests, assess vitamin B12 levels, rule out syphilis, rule out metabolic problems (including tests for kidney function, electrolyte levels and diabetes), assess levels of heavy metals (e.g. lead, mercury), and check for anaemia (see differential diagnosis for dementia). It is also necessary to rule out delirium.

Psychological tests for depression are employed, since depression can either be concurrent with AD (see Depression of Alzheimer disease), an early sign of cognitive impairment,[116] or even the cause.[117][118]

Early diagnosis

Emphasis in Alzheimer's research has been placed on diagnosing the condition before symptoms begin.[119] A number of biochemical tests have been developed to allow for early detection. One such test involves the analysis of cerebrospinal fluid for beta-amyloid or tau proteins,[120] including both total tau protein and phosphorylated tau181P protein concentrations.[121][122] Searching for these proteins using a spinal tap can predict the onset of Alzheimer's with a sensitivity of between 94% and 100%.[121] When these tests are used in conjunction with existing neuroimaging techniques, doctors can identify people with significant memory loss who are already developing the disease.[121]

Prevention

Intellectual activities such as playing chess or regular social interaction have been linked to a reduced risk of AD in epidemiological studies, although no causal relationship has been found.

At present, there is no definitive evidence that any particular measure is effective in preventing AD.[123] Global studies of measures to prevent or delay the onset of AD have often produced inconsistent results. Epidemiological studies have proposed relationships between certain modifiable factors, such as diet, cardiovascular risk, pharmaceutical products, or intellectual activities among others, and a population's likelihood of developing AD. Only further research, including clinical trials, will reveal whether these factors can help to prevent AD.[124]

Medication

Although cardiovascular risk factors, such as hypercholesterolaemia, hypertension, diabetes, and smoking, are associated with a higher risk of onset and a worse course of AD,[125][126] statins, which are cholesterol-lowering drugs, have not been effective in preventing or improving the course of the disease.[127][128] Long-term usage of non-steroidal anti-inflammatory drugs (NSAIDs) is associated with a reduced likelihood of developing AD.[129] Evidence also supports the notion that NSAIDs can reduce inflammation related to amyloid plaques.[129] No prevention trial has been completed.[129] NSAIDs do not appear to be useful as a treatment.[130] Hormone replacement therapy, although previously used, may increase the risk of dementia.[131]

Lifestyle

People who engage in intellectual activities such as reading, playing board games, completing crossword puzzles, playing musical instruments, or regular social interaction show a reduced risk for Alzheimer's disease.[132] This is compatible with the cognitive reserve theory, which states that some life experiences result in more efficient neural functioning, providing the individual with a cognitive reserve that delays the onset of dementia manifestations.[132] Education delays the onset of AD syndrome, but is not related to earlier death after diagnosis.[133] Learning a second language, even later in life, seems to delay the onset of Alzheimer's disease.[134] Physical activity is also associated with a reduced risk of AD.[133] A 2015 review suggests that mindfulness-based interventions may prevent or delay the onset of mild cognitive impairment and Alzheimer's disease.[135]

Diet

People who eat a healthy, Japanese, or Mediterranean diet have a lower risk of AD,[136] and a Mediterranean diet may improve outcomes in those with the disease.[137] Those who eat a diet high in saturated fats and simple carbohydrates have a higher risk.[138] The Mediterranean diet's beneficial cardiovascular effect has been proposed as the mechanism of action.[139] Conclusions on dietary components have at times been difficult to ascertain, as results have differed between population-based studies and randomised controlled trials.[136] There is limited evidence that light to moderate use of alcohol, particularly red wine, is associated with lower risk of AD.[140] There is tentative evidence that caffeine may be protective.[141] A number of foods high in flavonoids, such as cocoa, red wine, and tea, may decrease the risk of AD.[142][143]

Reviews on the use of vitamins and minerals have not found enough consistent evidence to recommend them. This includes vitamin A,[144][145] C,[146][147] E,[147][148] selenium,[149] zinc,[150] and folic acid with or without vitamin B12.[151] Additionally, vitamin E is associated with health risks.[147] Trials examining folic acid (B9) and other B vitamins failed to show any significant association with cognitive decline.[152] In those already affected with AD, adding docosahexaenoic acid, an omega-3 fatty acid, to the diet has not been found to slow decline.[153]

As of 2010, curcumin had not shown benefit in people, even though there is tentative evidence in animals.[154] There is inconsistent and unconvincing evidence that ginkgo has any positive effect on cognitive impairment and dementia.[155] As of 2008, there is no concrete evidence that cannabinoids are effective in improving the symptoms of AD or dementia.[156] However, some early-stage research looks promising.[157]

Management

There is no cure for Alzheimer's disease; available treatments offer relatively small symptomatic benefit but remain palliative in nature. Current treatments can be divided into pharmaceutical, psychosocial and caregiving approaches.

Medications

Three-dimensional molecular model of donepezil, an acetylcholinesterase inhibitor used in the treatment of AD symptoms

Five medications are currently used to treat the cognitive problems of AD: four are acetylcholinesterase inhibitors (tacrine, rivastigmine, galantamine and donepezil) and the other (memantine) is an NMDA receptor antagonist.[158] The benefit from their use is small.[159][160] No medication has been clearly shown to delay or halt the progression of the disease.

Reduction in the activity of the cholinergic neurons is a well-known feature of Alzheimer's disease.[161] Acetylcholinesterase inhibitors are employed to reduce the rate at which acetylcholine (ACh) is broken down, thereby increasing the concentration of ACh in the brain and combating the loss of ACh caused by the death of cholinergic neurons.[162] There is evidence for the efficacy of these medications in mild to moderate Alzheimer's disease,[163][164] and some evidence for their use in the advanced stage. Only donepezil is approved for treatment of advanced AD dementia.[165] The use of these drugs in mild cognitive impairment has not been shown to delay the onset of AD.[166] The most common side effects are nausea and vomiting, both of which are linked to cholinergic excess. These side effects arise in approximately 10–20% of users, are mild to moderate in severity, and can be managed by slowly adjusting medication doses.[167] Less common secondary effects include muscle cramps, decreased heart rate (bradycardia), decreased appetite and weight, and increased gastric acid production.[168]

Glutamate is an important excitatory neurotransmitter of the nervous system, but excessive amounts in the brain can lead to cell death through a process called excitotoxicity, which consists of the overstimulation of glutamate receptors. Excitotoxicity occurs not only in Alzheimer's disease, but also in other neurological diseases such as Parkinson's disease and multiple sclerosis.[169] Memantine is a noncompetitive NMDA receptor antagonist first used as an anti-influenza agent. It acts on the glutamatergic system by blocking NMDA receptors and inhibiting their overstimulation by glutamate.[169][170] Memantine has been shown to be moderately efficacious in the treatment of moderate to severe Alzheimer's disease; its effects in the initial stages of AD are unknown.[171] Reported adverse events with memantine are infrequent and mild, including hallucinations, confusion, dizziness, headache and fatigue.[172] The combination of memantine and donepezil has been shown to be "of statistically significant but clinically marginal effectiveness".[173]

Antipsychotic drugs are modestly useful in reducing aggression and psychosis in Alzheimer's disease with behavioural problems, but are associated with serious adverse effects, such as stroke, movement difficulties or cognitive decline, that do not permit their routine use.[174][175] Long-term use has been associated with increased mortality.[175]

Huperzine A, while promising, requires further evidence before its use can be recommended.[176]

Psychosocial intervention

A specifically designed room for sensory integration therapy, also called snoezelen; an emotion-oriented psychosocial intervention for people with dementia

Psychosocial interventions are used as an adjunct to pharmaceutical treatment and can be classified within behaviour-, emotion-, cognition- or stimulation-oriented approaches. Research on their efficacy is scarce and rarely specific to AD, focusing instead on dementia in general.[177]

Behavioural interventions attempt to identify and reduce the antecedents and consequences of problem behaviours. This approach has not shown success in improving overall functioning,[178] but can help to reduce some specific problem behaviours, such as incontinence.[179] There is a lack of high quality data on the effectiveness of these techniques in other behaviour problems such as wandering.[180][181]

Emotion-oriented interventions include reminiscence therapy, validation therapy, supportive psychotherapy, sensory integration (also called snoezelen), and simulated presence therapy. Supportive psychotherapy has received little or no formal scientific study, but some clinicians find it useful in helping mildly impaired people adjust to their illness.[177] Reminiscence therapy (RT) involves the discussion of past experiences individually or in groups, often with the aid of photographs, household items, music and sound recordings, or other familiar items from the past. Although there are few high-quality studies on the effectiveness of RT, it may be beneficial for cognition and mood.[182] Simulated presence therapy (SPT) is based on attachment theories and involves playing a recording with voices of the closest relatives of the person with Alzheimer's disease. There is partial evidence indicating that SPT may reduce challenging behaviours.[183] Finally, validation therapy is based on acceptance of the reality and personal truth of another's experience, while sensory integration is based on exercises aimed at stimulating the senses. There is no evidence to support the usefulness of these therapies.[184][185]

The aim of cognition-oriented treatments, which include reality orientation and cognitive retraining, is the reduction of cognitive deficits. Reality orientation consists of presenting information about time, place or person to help the person understand their surroundings and their place in them. Cognitive retraining, on the other hand, tries to improve impaired capacities by exercising mental abilities. Both have shown some efficacy in improving cognitive capacities,[186][187] although in some studies these effects were transient, and negative effects, such as frustration, have also been reported.[177]

Stimulation-oriented treatments include art, music and pet therapies, exercise, and any other kind of recreational activities. Stimulation has modest support for improving behaviour, mood, and, to a lesser extent, function. Nevertheless, as important as these effects are, the main support for the use of stimulation therapies is the change in the person's routine.[177]

Caregiving

Since Alzheimer's has no cure and it gradually renders people incapable of tending to their own needs, caregiving is essentially the treatment and must be carefully managed over the course of the disease.

During the early and moderate stages, modifications to the living environment and lifestyle can increase patient safety and reduce caretaker burden.[188][189] Examples of such modifications are the adherence to simplified routines, the placing of safety locks, the labelling of household items to cue the person with the disease, or the use of modified daily life objects.[177][190][191]

If eating becomes problematic, food will need to be prepared in smaller pieces or even pureed.[192] When swallowing difficulties arise, the use of feeding tubes may be required. In such cases, the medical efficacy and ethics of continued feeding are important considerations for the caregivers and family members.[193][194] The use of physical restraints is rarely indicated in any stage of the disease, although there are situations when they are necessary to prevent harm to the person with AD or their caregivers.[177]

As the disease progresses, different medical issues can appear, such as oral and dental disease, pressure ulcers, malnutrition, hygiene problems, or respiratory, skin, or eye infections. Careful management can prevent them, while professional treatment is needed when they do arise.[195][196] During the final stages of the disease, treatment is centred on relieving discomfort until death.[197]

A small recent study in the US concluded that people whose caregivers had a realistic understanding of the prognosis and clinical complications of late dementia were less likely to receive aggressive treatment near the end of life.[198]

Feeding tubes

People with Alzheimer's disease (and other forms of dementia) often develop problems with eating, due to difficulties in swallowing, reduced appetite or the inability to recognise food. Their carers and families often request some form of feeding tube. However, there is no evidence that this helps people with advanced Alzheimer's to gain weight, regain strength or improve their quality of life. In fact, tube feeding might carry an increased risk of aspiration pneumonia.[199]

Prognosis

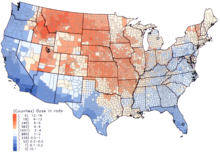

Disability-adjusted life year for Alzheimer and other dementias per 100,000 inhabitants in 2004.

No data

≤ 50

50–70

70–90

90–110

110–130

130–150

|

150–170

170–190

190–210

210–230

230–250

≥ 250

|

The early stages of Alzheimer's disease are difficult to diagnose. A definitive diagnosis is usually made once cognitive impairment compromises daily living activities, although the person may still be living independently. The symptoms will progress from mild cognitive problems, such as memory loss, through increasing stages of cognitive and non-cognitive disturbances, eliminating any possibility of independent living, especially in the late stages of the disease.[26]

Life expectancy of the population with the disease is reduced.[200][201][202] The mean life expectancy following diagnosis is approximately seven years.[200] Fewer than 3% of people live more than fourteen years.[203] Disease features significantly associated with reduced survival are an increased severity of cognitive impairment, decreased functional level, history of falls, and disturbances in the neurological examination. Other coincident diseases such as heart problems, diabetes or a history of alcohol abuse are also associated with shortened survival.[201][204][205] While an earlier age at onset is associated with more total years of survival, life expectancy relative to the healthy population is particularly reduced among those who are younger.[202] Men have a less favourable survival prognosis than women.[203][206]

The disease is the underlying cause of death in 68% of all cases.[200] Pneumonia and dehydration are the most frequent immediate causes of death brought about by AD, while cancer is a less frequent cause of death than in the general population.[200][206]

Epidemiology

| Age (years) | New cases per thousand person–years |
|---|---|
| 65–69 | 3 |
| 70–74 | 6 |
| 75–79 | 9 |
| 80–84 | 23 |
| 85–89 | 40 |
| 90 and over | 69 |

Regarding incidence, cohort longitudinal studies (studies where a disease-free population is followed over the years) provide rates between 10 and 15 per thousand person–years for all dementias and 5–8 for AD,[207][208] which means that roughly half of new dementia cases each year are AD. Advancing age is a primary risk factor for the disease, and incidence rates are not equal for all ages: every five years after the age of 65, the risk of acquiring the disease approximately doubles, increasing from 3 to as much as 69 per thousand person–years.[207][208] There are also sex differences in the incidence rates, with women having a higher risk of developing AD, particularly in the population older than 85.[208][209] The risk of dying from Alzheimer's disease is twenty-six percent higher among the non-Hispanic white population than among the non-Hispanic black population, whereas the Hispanic population has a thirty percent lower risk than the non-Hispanic white population.[210]
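The "approximately doubles" statement can be checked against the incidence table with a little arithmetic. The following is a minimal sketch, not an epidemiological model: it computes the ratio between consecutive age bands and the constant five-year growth factor that would take the rate from 3 to 69 in five steps (the fifth root of 69/3, which is also the geometric mean of the step ratios). It assumes the open-ended oldest band can be treated as one more five-year step, and it uses the midpoints of the quoted ranges to check the "half of new dementia cases" figure. The function names are hypothetical.

```python
# AD incidence per 1,000 person-years by age band, as listed in the table above.
# Assumption: the open-ended oldest band is treated as one more five-year step.
INCIDENCE_BY_AGE = {
    "65-69": 3,
    "70-74": 6,
    "75-79": 9,
    "80-84": 23,
    "85-89": 40,
    "90+": 69,
}


def step_ratios(rates: list[float]) -> list[float]:
    """Ratio of each age band's incidence to that of the preceding band."""
    return [later / earlier for earlier, later in zip(rates, rates[1:])]


def constant_growth_factor(first: float, last: float, steps: int) -> float:
    """Per-step factor that turns `first` into `last` after `steps` steps,
    i.e. the geometric mean of the individual step ratios."""
    return (last / first) ** (1 / steps)


rates = list(INCIDENCE_BY_AGE.values())
ratios = step_ratios(rates)
factor = constant_growth_factor(rates[0], rates[-1], len(ratios))

# Share of new dementia cases that are AD, using the midpoints of the quoted
# ranges: 5-8 (AD) and 10-15 (all dementias) per 1,000 person-years.
ad_share = ((5 + 8) / 2) / ((10 + 15) / 2)

print("ratio per five-year step:", [round(r, 2) for r in ratios])
print("constant five-year factor:", round(factor, 2))
print("AD share of new dementia cases:", round(ad_share, 2))
```

With these figures the individual steps range from 1.5 (70–74 to 75–79) to about 2.6 (75–79 to 80–84), while the constant factor comes out at about 1.87, so "approximately doubles" describes the overall trend rather than every step. The midpoint ratio is 0.52, consistent with "roughly half".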

Prevalence of AD in populations depends on several factors, including incidence and survival. Since the incidence of AD increases with age, it is particularly important to take into account the mean age of the population of interest. In the United States, Alzheimer prevalence was estimated to be 1.6% in 2000, both overall and in the 65–74 age group, with the rate increasing to 19% in the 75–84 group and to 42% in the over-84 group.[211] Prevalence rates in less developed regions are lower.[212] The World Health Organization estimated that in 2005, 0.379% of people worldwide had dementia, and that the prevalence would increase to 0.441% in 2015 and to 0.556% in 2030.[213] Other studies have reached similar conclusions.[212] Another study estimated that in 2006, 0.40% of the world population (range 0.17–0.89%; absolute number 26.6 million, range 11.4–59.4 million) were afflicted by AD, and that the prevalence rate would triple and the absolute number would quadruple by 2050.[214]
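The two 2050 projections in the last sentence are only mutually consistent if the world population itself grows. A short calculation makes that step explicit: dividing a case count by the prevalence gives the implied population size, and tripling the rate while quadrupling the count implies population growth by a factor of 4/3. This sketch uses only the central estimates quoted above (26.6 million cases, 0.40%) and ignores their uncertainty ranges; the helper name `implied_population` is hypothetical.

```python
def implied_population(cases: float, prevalence: float) -> float:
    """Population size implied by a case count and a prevalence fraction."""
    return cases / prevalence


# Central 2006 estimates quoted in the text.
cases_2006 = 26.6e6       # people with AD
prevalence_2006 = 0.0040  # 0.40% of the world population

pop_2006 = implied_population(cases_2006, prevalence_2006)

# 2050 projection: the prevalence rate triples and the absolute number quadruples.
cases_2050 = 4 * cases_2006
prevalence_2050 = 3 * prevalence_2006
pop_2050 = implied_population(cases_2050, prevalence_2050)

# Ratio of implied populations; equals 4 / 3 by construction.
growth = pop_2050 / pop_2006

print(f"implied 2006 population: {pop_2006 / 1e9:.2f} billion")
print(f"projected 2050 cases: {cases_2050 / 1e6:.1f} million")
print(f"implied 2050 population: {pop_2050 / 1e9:.2f} billion")
print(f"implied population growth factor: {growth:.3f}")
```

The implied populations (about 6.65 billion in 2006 and about 8.9 billion in 2050) are back-calculations from the quoted figures, not independent population forecasts.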

History

Alois Alzheimer's patient Auguste Deter in 1902. Hers was the first described case of what became known as Alzheimer's disease.

The ancient Greek and Roman philosophers and physicians associated old age with increasing dementia.[15] It was not until 1901 that German psychiatrist Alois Alzheimer identified the first case of what became known as Alzheimer's disease in a fifty-year-old woman he called Auguste D. He followed her case until she died in 1906, when he first reported publicly on it.[215] During the next five years, eleven similar cases were reported in the medical literature, some of them already using the term Alzheimer's disease.[15] The disease was first described as a distinctive disease by Emil Kraepelin after suppressing some of the clinical (delusions and hallucinations) and pathological features (arteriosclerotic changes) contained in the original report of Auguste D.[216] He included Alzheimer's disease, also named presenile dementia by Kraepelin, as a subtype of senile dementia in the eighth edition of his Textbook of Psychiatry, published on 15 July 1910.[217]

For most of the 20th century, the diagnosis of Alzheimer's disease was reserved for individuals between the ages of 45 and 65 who developed symptoms of dementia. The terminology changed after 1977 when a conference on AD concluded that the clinical and pathological manifestations of presenile and senile dementia were almost identical, although the authors also added that this did not rule out the possibility that they had different causes.[218] This eventually led to the diagnosis of Alzheimer's disease independently of age.[219] The term senile dementia of the Alzheimer type (SDAT) was used for a time to describe the condition in those over 65, with classical Alzheimer's disease being used for those younger. Eventually, the term Alzheimer's disease was formally adopted in medical nomenclature to describe individuals of all ages with a characteristic common symptom pattern, disease course, and neuropathology.[220]

Society and culture

Social costs

Dementia, and specifically Alzheimer's disease, may be among the most costly diseases for society in Europe and the United States,[16][17] while their cost in other countries such as Argentina,[221] or South Korea,[222] is also high and rising. These costs will probably increase with the ageing of society, becoming an important social problem. AD-associated costs include direct medical costs such as nursing home care, direct nonmedical costs such as in-home day care, and indirect costs such as lost productivity of both patient and caregiver.[17] Numbers vary between studies, but dementia costs worldwide have been calculated at around $160 billion,[223] while costs of Alzheimer's disease in the United States may be $100 billion each year.[17]

The greatest source of costs for society is long-term care by health care professionals, and particularly institutionalisation, which corresponds to two-thirds of the total costs for society.[16] The cost of living at home is also very high,[16] especially when informal costs for the family, such as caregiving time and caregivers' lost earnings, are taken into account.[224]

Costs increase with dementia severity and the presence of behavioural disturbances,[225] and are related to the increased caregiving time required for the provision of physical care.[224] Therefore any treatment that slows cognitive decline, delays institutionalisation or reduces caregivers' hours will have economic benefits. Economic evaluations of current treatments have shown positive results.[17]

Caregiving burden

The role of the main caregiver is often taken by the spouse or a close relative.[226] Alzheimer's disease is known for placing a great burden on caregivers that includes social, psychological, physical or economic aspects.[9][227][228] Home care is usually preferred by people with AD and their families.[229] This option also delays or eliminates the need for more professional and costly levels of care.[229][230] Nevertheless, two-thirds of nursing home residents have dementias.[177]

Dementia caregivers are subject to high rates of physical and mental disorders.[231] Factors associated with greater psychosocial problems of the primary caregivers include having an affected person at home, the carer being a spouse, demanding behaviours of the person cared for (such as depression, behavioural disturbances, hallucinations, sleep problems or walking disruptions), and social isolation.[232][233] Regarding economic problems, family caregivers often give up time from work to spend 47 hours per week on average with the person with AD, while the costs of caring for them are high. Direct and indirect costs of caring for an Alzheimer's patient average between $18,000 and $77,500 per year in the United States, depending on the study.[226][224]

Cognitive behavioural therapy and the teaching of coping strategies, either individually or in groups, have demonstrated efficacy in improving caregivers' psychological health.[9][234]

Notable cases

Charlton Heston and Ronald Reagan at a meeting in the White House. Both of them would later be diagnosed with Alzheimer's disease.

As Alzheimer's disease is highly prevalent, many notable people have developed it. Well-known examples are former United States President Ronald Reagan and Irish writer Iris Murdoch, both of whom were the subjects of scientific articles examining how their cognitive capacities deteriorated with the disease.[235][236][237] Other cases include the retired footballer Ferenc Puskás,[238] the former Prime Ministers Harold Wilson (United Kingdom) and Adolfo Suárez (Spain),[239][240] the actress Rita Hayworth,[241] the actor Charlton Heston,[242] the novelist Terry Pratchett,[243] the author Harnett Kane, who was stricken in his mid-fifties and unable to write for the last seventeen years of his life,[244] the Indian politician George Fernandes,[245] and the 2009 Nobel Prize in Physics recipient Charles K. Kao.[246]

AD has also been portrayed in films such as Iris (2001), based on John Bayley's memoir of his wife Iris Murdoch;[247] The Notebook (2004), based on Nicholas Sparks' 1996 novel of the same name;[248] A Moment to Remember (2004); Thanmathra (2005);[249] Memories of Tomorrow (Ashita no Kioku) (2006), based on Hiroshi Ogiwara's novel of the same name;[250] and Away from Her (2006), based on Alice Munro's short story "The Bear Came over the Mountain".[251] Documentaries on Alzheimer's disease include Malcolm and Barbara: A Love Story (1999) and Malcolm and Barbara: Love's Farewell (2007), both featuring Malcolm Pointon.[252]

Research directions

As of 2014, the safety and efficacy of more than 400 pharmaceutical treatments had been or were being investigated in over 1,500 clinical trials worldwide, and approximately a quarter of these compounds were in Phase III trials, the last step prior to review by regulatory agencies.[253]

One area of clinical research is focused on treating the underlying disease pathology. Reduction of beta-amyloid levels is a common target of compounds[254] (such as apomorphine) under investigation. Immunotherapy or vaccination for the amyloid protein is one treatment modality under study.[255] Unlike preventative vaccination, the putative therapy would be used to treat people already diagnosed. It is based upon the concept of training the immune system to recognise, attack, and reverse deposition of amyloid, thereby altering the course of the disease.[256] An example of such a vaccine under investigation was ACC-001,[257][258] although the trials were suspended in 2008.[259] Another similar agent is bapineuzumab, an antibody designed to be identical to the naturally induced anti-amyloid antibody.[260] Other approaches are neuroprotective agents, such as AL-108,[261] and metal-protein interaction attenuation agents, such as PBT2.[262] A TNFα receptor-blocking fusion protein, etanercept, has shown encouraging results.[263]

In 2008, two separate clinical trials showed positive results in modifying the course of disease in mild to moderate AD with methylthioninium chloride (trade name rember), a drug that inhibits tau aggregation,[264][265] and dimebon, an antihistamine.[266] The subsequent phase-III trial of dimebon failed to show positive effects in the primary and secondary endpoints.[267][268][269] Work with methylthioninium chloride showed that bioavailability of methylthioninium from the gut was affected by feeding and by stomach acidity, leading to unexpectedly variable dosing.[270] A new stabilized formulation, the prodrug LMTX, is in phase-III trials.[271]

The common herpes simplex virus HSV-1 has been found to colocate with amyloid plaques.[272] This suggested the possibility that AD could be treated or prevented with antiviral medication.[272][273]

Preliminary research on the effects of meditation on memory retrieval and cognitive function has been encouraging. Limitations of this research could be addressed in future studies with more detailed analyses.[274][unreliable medical source?]

An FDA panel voted unanimously to recommend approval of florbetapir, which is currently used in an investigational study. The imaging agent can help to detect Alzheimer's brain plaques, but will require additional clinical research before it can be made available commercially.[275]

Imaging

Of the many medical imaging techniques available, single photon emission computed tomography (SPECT) appears to be superior in differentiating Alzheimer's disease from other types of dementia, and this has been shown to give a greater level of accuracy compared with mental testing and medical history analysis.[276] Advances have led to the proposal of new diagnostic criteria.[19][107]

PiB PET remains investigational, but a similar PET scanning radiopharmaceutical called florbetapir, containing the longer-lasting radionuclide fluorine-18, has recently been tested as a diagnostic tool in Alzheimer's disease, and given FDA approval for this use.[277][278]

Amyloid imaging is likely to be used in conjunction with other markers rather than as an alternative.[279] Volumetric MRI can detect changes in the size of brain regions. Measuring those regions that atrophy during the progress of Alzheimer's disease is showing promise as a diagnostic indicator. It may prove less expensive than other imaging methods currently under study.[280]