Insignia for the Deep Space Network's 40th anniversary celebrations, 1998.

| | |
|---|---|
| Alternative names | NASA Deep Space Network |
| Organization | Interplanetary Network Directorate (NASA / JPL) |
| Location | United States of America, Spain, Australia |
| Coordinates | 34°12′3″N 118°10′18″W |
| Established | October 1, 1958 |
| Website | https://deepspace.jpl.nasa.gov/ |
| Telescopes | |

The NASA Deep Space Network (DSN) is a worldwide network of U.S. spacecraft communication facilities, located in the United States (California), Spain (Madrid), and Australia (Canberra), that supports NASA's interplanetary spacecraft missions. It also performs radio and radar astronomy observations for the exploration of the Solar System and the universe, and supports selected Earth-orbiting missions. DSN is part of the NASA Jet Propulsion Laboratory (JPL). Similar networks are run by Russia, China, India, Japan and the European Space Agency.

General information

Deep Space Network Operations Center at JPL, Pasadena (California) in 1993.

DSN currently consists of three deep-space communications facilities placed approximately 120 degrees apart around the Earth. They are:

- the Goldstone Deep Space Communications Complex (35°25′36″N 116°53′24″W) outside Barstow, California. For details of Goldstone's contribution to the early days of space probe tracking, see Project Space Track;

- the Madrid Deep Space Communications Complex (40°25′53″N 4°14′53″W), 60 kilometres (37 mi) west of Madrid, Spain; and

- the Canberra Deep Space Communication Complex (CDSCC) in the Australian Capital Territory (35°24′05″S 148°58′54″E), 40 kilometres (25 mi) southwest of Canberra, Australia, near the Tidbinbilla Nature Reserve.

Each facility is situated in semi-mountainous, bowl-shaped terrain to help shield against radio frequency interference.

The strategic placement, with nearly 120-degree separation, permits constant observation of spacecraft as the Earth rotates. The DSN is the largest and most sensitive scientific telecommunications system in the world.
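The coverage argument can be checked with a small geometric sketch. JPL diagrams put the altitude beyond which a spacecraft is always in view of a station at about 30,000 km. The code below assumes a spherical Earth of radius 6371 km, a spacecraft in the equatorial plane (as in a view from the north pole), and a 0° elevation mask (real stations use higher masks). It uses the station coordinates listed above; the function names are illustrative only.

```python
import math

# Station coordinates in decimal degrees (north/east positive), converted from
# the coordinates listed for the three complexes.
STATIONS = {
    "Goldstone": (35.4267, -116.8900),
    "Madrid": (40.4314, -4.2481),
    "Canberra": (-35.4014, 148.9817),
}
EARTH_RADIUS_KM = 6371.0  # assumption: spherical Earth with mean radius


def unit_vector(lat_deg: float, lon_deg: float) -> tuple[float, float, float]:
    """Earth-centred unit vector pointing at the given latitude/longitude."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def above_horizon(station: tuple[float, float], sc_lon_deg: float, altitude_km: float) -> bool:
    """True if a spacecraft over the equator at sc_lon_deg is above the station's horizon."""
    s = unit_vector(*station)
    p = unit_vector(0.0, sc_lon_deg)  # assumption: spacecraft in the equatorial plane
    cos_central_angle = sum(a * b for a, b in zip(s, p))
    # Geometric horizon test (0 degree elevation mask): cos(angle) >= R / (R + h).
    return cos_central_angle >= EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km)


def continuous_coverage(altitude_km: float, step_deg: float = 0.1) -> bool:
    """Scan spacecraft longitudes; True if every one is seen by at least one station."""
    steps = round(360 / step_deg)
    for i in range(steps):
        lon = -180.0 + i * step_deg
        if not any(above_horizon(st, lon, altitude_km) for st in STATIONS.values()):
            return False
    return True


print(continuous_coverage(30_000))
print(continuous_coverage(10_000))
```

Under these assumptions the scan reports continuous coverage at 30,000 km but gaps at 10,000 km. The limiting gap is the widest one in longitude, between Madrid and Canberra, which shows that the separation is only approximately 120 degrees.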

The DSN supports NASA's contribution to the scientific investigation of the Solar System: It provides a two-way communications link that guides and controls various NASA unmanned interplanetary space probes, and brings back the images and new scientific information these probes collect. All DSN antennas are steerable, high-gain, parabolic reflector antennas.

The antennas and data delivery systems make it possible to:

- acquire telemetry data from spacecraft.

- transmit commands to spacecraft.

- upload software modifications to spacecraft.

- track spacecraft position and velocity.

- perform Very Long Baseline Interferometry observations.

- measure variations in radio waves for radio science experiments.

- gather science data.

- monitor and control the performance of the network.

Operations control center

The antennas at all three DSN complexes communicate directly with the Deep Space Operations Center (also known as Deep Space Network operations control center) located at the JPL facilities in Pasadena, California.

In the early years, the operations control center did not have a permanent facility. It was a provisional setup with numerous desks and phones installed in a large room near the computers used to calculate orbits. In July 1961, NASA started construction of the permanent facility, the Space Flight Operations Facility (SFOF). The facility was completed in October 1963 and dedicated on May 14, 1964. In its initial setup, the SFOF had 31 consoles, 100 closed-circuit television cameras, and more than 200 television displays to support Ranger 6 to Ranger 9 and Mariner 4.

Currently, the operations center personnel at the SFOF monitor and direct operations, and oversee the quality of spacecraft telemetry and navigation data delivered to network users. In addition to the DSN complexes and the operations center, a ground communications facility provides communications that link the three complexes to the operations center at JPL, to space flight control centers in the United States and overseas, and to scientists around the world.

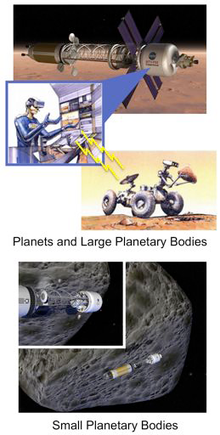

Deep space

View from the Earth's north pole, showing the field of view of the main DSN antenna locations. Once a mission gets more than 30,000 km from Earth, it is always in view of at least one of the stations.

Tracking vehicles in deep space is quite different from tracking missions in low Earth orbit (LEO). Deep space missions are visible for long periods of time from a large portion of the Earth's surface, and so require few stations (the DSN has only three main sites). These few stations, however, require huge antennas, ultra-sensitive receivers, and powerful transmitters in order to transmit and receive over the vast distances involved.
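The scale of the problem can be illustrated with the standard free-space path loss formula, 20·log10(4πdf/c). The sketch below compares an assumed 400 km LEO slant range with a distance of one astronomical unit at an assumed X-band frequency of 8.4 GHz; both values are chosen for illustration, not taken from DSN specifications.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)


X_BAND_HZ = 8.4e9             # assumption: a representative X-band frequency
LEO_DISTANCE_M = 400e3        # assumption: a typical low Earth orbit slant range
AU_M = 149_597_870_700.0      # one astronomical unit in metres

leo = free_space_path_loss_db(LEO_DISTANCE_M, X_BAND_HZ)
deep = free_space_path_loss_db(AU_M, X_BAND_HZ)
print(f"LEO: {leo:.1f} dB, 1 AU: {deep:.1f} dB, extra: {deep - leo:.1f} dB")
```

The extra loss is about 111 dB, which is simply 20·log10 of the distance ratio; that deficit is what the large antennas, low-noise receivers and powerful transmitters have to make up.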

Deep space is defined in several different ways. According to a 1975 NASA report, the DSN was designed to communicate with "spacecraft traveling approximately 16,000 km (10,000 miles) from Earth to the farthest planets of the solar system." JPL diagrams state that at an altitude of 30,000 km, a spacecraft is always in the field of view of one of the tracking stations.

The International Telecommunication Union, which sets aside various frequency bands for deep space and near-Earth use, defines "deep space" to start at a distance of 2 million km from the Earth's surface.

This definition means that missions to the Moon, and to the Earth–Sun Lagrangian points L1 and L2, are considered near space and cannot use the ITU's deep space bands. Whether other Lagrangian points fall under this rule depends on their distance from Earth.
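The ITU threshold amounts to a single distance comparison. In the sketch below the function name is hypothetical, and the example distances are approximate figures used for illustration (mean lunar distance, roughly 1.5 million km to the Sun–Earth L1 and L2 points, and roughly 55 million km to Mars at a close approach).

```python
ITU_DEEP_SPACE_THRESHOLD_KM = 2_000_000  # threshold as stated above


def is_itu_deep_space(distance_km: float) -> bool:
    """Hypothetical helper: True if a distance from Earth counts as ITU deep space."""
    return distance_km >= ITU_DEEP_SPACE_THRESHOLD_KM


# Approximate distances from Earth, for illustration only.
examples = {
    "Moon (mean distance)": 384_400,
    "Sun-Earth L1/L2 (approx.)": 1_500_000,
    "Mars (approx. close approach)": 55_000_000,
}
for name, distance in examples.items():
    print(name, "deep space" if is_itu_deep_space(distance) else "near space")
```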

History

The forerunner of the DSN was established in January 1958, when JPL, then under contract to the U.S. Army, deployed portable radio tracking stations in Nigeria, Singapore, and California to receive telemetry and plot the orbit of the Army-launched Explorer 1, the first successful U.S. satellite. NASA was officially established on October 1, 1958, to consolidate the separately developing space-exploration programs of the US Army, US Navy, and US Air Force into one civilian organization.

On December 3, 1958, JPL was transferred from the US Army to NASA and given responsibility for the design and execution of lunar and planetary exploration programs using remotely controlled spacecraft. Shortly after the transfer, NASA established the concept of the Deep Space Network as a separately managed and operated communications system that would accommodate all deep space missions, thereby avoiding the need for each flight project to acquire and operate its own specialized space communications network. The DSN was given responsibility for its own research, development, and operation in support of all of its users. Under this concept, it has become a world leader in the development of low-noise receivers; large parabolic-dish antennas; tracking, telemetry, and command systems; digital signal processing; and deep space navigation. The Deep Space Network formally announced its intention to send missions into deep space on Christmas Eve 1963; it has remained in continuous operation in one capacity or another ever since.

The largest antennas of the DSN are often called on during spacecraft emergencies. Almost all spacecraft are designed so normal operation can be conducted on the smaller (and more economical) antennas of the DSN, but during an emergency the use of the largest antennas is crucial. This is because a troubled spacecraft may be forced to use less than its normal transmitter power, attitude control problems may preclude the use of high-gain antennas, and recovering every bit of telemetry is critical to assessing the health of the spacecraft and planning the recovery. The most famous example is the Apollo 13 mission, where limited battery power and the inability to use the spacecraft's high-gain antennas reduced signal levels below the capability of the Manned Space Flight Network, and the use of the biggest DSN antennas (and the Australian Parkes Observatory radio telescope) was critical to saving the lives of the astronauts.

Although Apollo was a US mission, the DSN provides this emergency service to other space agencies as well, in a spirit of inter-agency and international cooperation. For example, the recovery of the Solar and Heliospheric Observatory (SOHO) mission of the European Space Agency (ESA) would not have been possible without the use of the largest DSN facilities.

DSN and the Apollo program

Although normally tasked with tracking unmanned spacecraft, the Deep Space Network (DSN) also contributed to the communication and tracking of Apollo missions to the Moon, though primary responsibility was held by the Manned Space Flight Network (MSFN).

The DSN designed the MSFN stations for lunar communication and provided a second antenna at each MSFN site (the MSFN sites were near the DSN sites for just this reason). Two antennas at each site were needed both for redundancy and because the beam widths of the large antennas needed were too small to encompass both the lunar orbiter and the lander at the same time. DSN also supplied some larger antennas as needed, in particular for television broadcasts from the Moon and for emergency communications such as those during Apollo 13.

Excerpt from a NASA report describing how the DSN and MSFN cooperated for Apollo:

Another critical step in the evolution of the Apollo Network came in 1965 with the advent of the DSN Wing concept. Originally, the participation of DSN 26-m antennas during an Apollo Mission was to be limited to a backup role. This was one reason why the MSFN 26-m sites were collocated with the DSN sites at Goldstone, Madrid, and Canberra. However, the presence of two, well-separated spacecraft during lunar operations stimulated the rethinking of the tracking and communication problem. One thought was to add a dual S-band RF system to each of the three 26-m MSFN antennas, leaving the nearby DSN 26-m antennas still in a backup role. Calculations showed, though, that a 26-m antenna pattern centered on the landed Lunar Module would suffer a 9-to-12 dB loss at the lunar horizon, making tracking and data acquisition of the orbiting Command Service Module difficult, perhaps impossible. It made sense to use both the MSFN and DSN antennas simultaneously during the all-important lunar operations. JPL was naturally reluctant to compromise the objectives of its many unmanned spacecraft by turning three of its DSN stations over to the MSFN for long periods. How could the goals of both Apollo and deep space exploration be achieved without building a third 26-m antenna at each of the three sites or undercutting planetary science missions?

The solution came in early 1965 at a meeting at NASA Headquarters, when Eberhardt Rechtin suggested what is now known as the "wing concept". The wing approach involves constructing a new section or "wing" to the main building at each of the three involved DSN sites. The wing would include a MSFN control room and the necessary interface equipment to accomplish the following:

- Permit tracking and two-way data transfer with either spacecraft during lunar operations.

- Permit tracking and two-way data transfer with the combined spacecraft during the flight to the Moon.

- Provide backup for the collocated MSFN site passive track (spacecraft to ground RF links) of the Apollo spacecraft during trans-lunar and trans-earth phases.

With this arrangement, the DSN station could be quickly switched from a deep-space mission to Apollo and back again. GSFC personnel would operate the MSFN equipment completely independently of DSN personnel. Deep space missions would not be compromised nearly as much as if the entire station's equipment and personnel were turned over to Apollo for several weeks.

The details of this cooperation and operation are available in a two-volume technical report from JPL.

Management

The network is a NASA facility and is managed and operated for NASA by JPL, which is part of the California Institute of Technology (Caltech). The Interplanetary Network Directorate (IND) manages the program within JPL and is charged with its development and operation. The IND is considered to be JPL's focal point for all matters relating to telecommunications, interplanetary navigation, information systems, information technology, computing, software engineering, and other relevant technologies. While the IND is best known for its duties relating to the Deep Space Network, the organization also maintains the JPL Advanced Multi-Mission Operations System (AMMOS) and JPL's Institutional Computing and Information Services (ICIS).

Harris Corporation is under a 5-year contract to JPL for the DSN's operations and maintenance. Harris is responsible for managing the Goldstone complex and operating the Deep Space Operations Center (DSOC), as well as for DSN operations, mission planning, operations engineering, and logistics.

Antennas

70 m antenna at Goldstone, California.

Each complex consists of at least four deep space terminals equipped with ultra-sensitive receiving systems and large parabolic-dish antennas. There are:

- One 34-meter (112 ft) diameter High Efficiency antenna (HEF).

- Two or more 34-meter (112 ft) beam waveguide antennas (BWG): three operational at the Goldstone complex, two at the Robledo de Chavela complex near Madrid, and two at the Canberra complex.

- One 26-meter (85 ft) antenna.

- One 70-meter (230 ft) antenna.

Five of the 34-meter (112 ft) beam waveguide antennas were added to the system in the late 1990s. Three were located at Goldstone, and one each at Canberra and Madrid. A second 34-meter (112 ft) beam waveguide antenna (the network's sixth) was completed at the Madrid complex in 2004.

In order to meet the current and future needs of deep space communication services, a number of new Deep Space Station antennas need to be built at the existing Deep Space Network sites. At the Canberra Deep Space Communication Complex, the first of these (DSS35) was completed in October 2014, and a second (DSS36) became operational in October 2016.

Construction has also begun on an additional antenna at the Madrid Deep Space Communications Complex. By 2025, the 70-meter antennas at all three locations will be decommissioned and replaced with 34-meter BWG antennas that will be arrayed. All systems will be upgraded to have X-band uplink capabilities and both X and Ka-band downlink capabilities.

Current signal processing capabilities

The Canberra Deep Space Communication Complex in 2008

The general capabilities of the DSN have not substantially changed since the beginning of the Voyager Interstellar Mission in the early 1990s. However, many advancements in digital signal processing, arraying and error correction have been adopted by the DSN.

The ability to array several antennas was incorporated to improve the data returned from the Voyager 2 Neptune encounter, and was used extensively for the Galileo spacecraft after its high-gain antenna failed to deploy correctly.

Since the Galileo mission, the DSN array has been able to link the 70-meter (230 ft) dish antenna at the Deep Space Network complex in Goldstone, California, with an identical antenna located in Australia, in addition to two 34-meter (112 ft) antennas at the Canberra complex. The California and Australia sites were used concurrently to pick up communications with Galileo.

Arraying of antennas within the three DSN locations is also used. For example, a 70-meter (230 ft) dish antenna can be arrayed with a 34-meter dish. For especially vital missions, such as Voyager 2, non-DSN facilities normally used for radio astronomy can be added to the array. In particular, the Canberra 70-meter (230 ft) dish can be arrayed with the Parkes Radio Telescope in Australia, and the Goldstone 70-meter dish can be arrayed with the Very Large Array of antennas in New Mexico. Also, two or more 34-meter (112 ft) dishes at one DSN location are commonly arrayed together.
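A rough sense of what arraying buys comes from comparing geometric collecting area, which scales with the square of the dish diameter. The sketch below is an idealization: it assumes every dish has the same aperture efficiency and system noise temperature and that signals are combined without loss, which real arrays do not achieve. The function names are illustrative.

```python
import math


def relative_collecting_area(dish_diameters_m, reference_diameter_m=70.0):
    """Summed geometric area of the dishes relative to one reference dish.

    Area is proportional to diameter squared, so the factor pi/4 cancels.
    """
    return sum(d * d for d in dish_diameters_m) / reference_diameter_m ** 2


def to_db(ratio):
    return 10.0 * math.log10(ratio)


for array in ([70, 34], [34, 34], [34, 34, 34, 34]):
    r = relative_collecting_area(array)
    print(array, f"{r:.3f} x 70 m area ({to_db(r):+.2f} dB)")
```

On these idealized terms, adding a 34-meter dish to a 70-meter dish gains a little under 1 dB, and four arrayed 34-meter dishes collect about 94% of the area of one 70-meter dish.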

All the stations are remotely operated from a centralized Signal Processing Center at each complex. These centers house the electronic subsystems that point and control the antennas, receive and process the telemetry data, transmit commands, and generate the spacecraft navigation data. Once the data is processed at the complexes, it is transmitted to JPL for further processing and for distribution to science teams over a modern communications network.

Especially at Mars, there are often many spacecraft within the beam width of an antenna. For operational efficiency, a single antenna can receive signals from multiple spacecraft at the same time. This capability is called Multiple Spacecraft Per Aperture, or MSPA. Currently the DSN can receive up to 4 spacecraft signals at the same time, or MSPA-4. However, apertures cannot currently be shared for uplink. When two or more high-power carriers are transmitted simultaneously, very high order intermodulation products fall in the receiver bands, causing interference to the received signals, which are about 25 orders of magnitude weaker. Therefore only one spacecraft at a time can get an uplink, though up to 4 can be received.
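Why the offending products are of very high order can be seen by searching the combinations m·f1 + n·f2 of two carriers, ordered by |m| + |n|. The values below are assumptions for illustration: two hypothetical carriers at 7150 and 7180 MHz, inside the 7145–7190 MHz deep-space X-band uplink allocation, and an 8400–8450 MHz receive band. The sketch locates products in frequency only; it does not model their amplitudes.

```python
def lowest_order_intermod_in_band(f1, f2, band_lo, band_hi, max_order=200):
    """Search products m*f1 + n*f2 in order of increasing |m| + |n|.

    Returns (order, m, n, frequency) for the first product that lands inside
    [band_lo, band_hi], or None if none is found up to max_order.
    """
    for order in range(2, max_order + 1):
        for m in range(-order, order + 1):
            n_abs = order - abs(m)
            for n in (n_abs, -n_abs):
                freq = m * f1 + n * f2
                if band_lo <= freq <= band_hi:
                    return order, m, n, freq
    return None


# Assumed frequencies in MHz (integers avoid floating-point edge effects).
print(lowest_order_intermod_in_band(7150, 7180, 8400, 8450))
```

With these assumed carriers the first in-band product is of order 83 (-41·f1 + 42·f2 = 8410 MHz). Even such a high-order product can matter when the received signal is many orders of magnitude weaker than the transmitted carriers.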

Network limitations and challenges

70m antenna in Robledo de Chavela, Community of Madrid, Spain

There are a number of limitations to the current DSN, and a number of challenges going forward.

- The Deep Space Network is something of a misnomer, as there are no current or future plans for dedicated communication satellites anywhere in space to handle multiparty, multi-mission use. All the transmitting and receiving equipment is Earth-based. Therefore, data transmission rates to and from all spacecraft and space probes are severely constrained by their distances from Earth.

- The need to support "legacy" missions that have remained operational beyond their original lifetimes but are still returning scientific data. Programs such as Voyager have been operating long past their original mission termination date. They also need some of the largest antennas.

- Replacing major components can cause problems as it can leave an antenna out of service for months at a time.

- The older 70-meter and HEF antennas are reaching the end of their lives. At some point these will need to be replaced. The leading candidate for 70-meter replacement had been an array of smaller dishes, but more recently the decision was taken to expand the provision of 34-meter (112 ft) BWG antennas at each complex to a total of 4.

- New spacecraft intended for missions beyond geocentric orbits are being equipped to use the beacon mode service, which allows such missions to operate without the DSN most of the time.

DSN and radio science

Illustration of Juno and Jupiter. Juno is in a polar orbit that takes it close to Jupiter as it passes from north to south, getting a view of both poles. During the gravity science (GS) experiment, it must point its antenna at the Deep Space Network on Earth to pick up a special signal sent from the DSN.

The DSN forms one portion of the radio science experiments included on most deep space missions, where radio links between spacecraft and Earth are used to investigate planetary science, space physics and fundamental physics. The experiments include radio occultations, gravity field determination and celestial mechanics, bistatic scattering, Doppler wind experiments, solar corona characterization, and tests of fundamental physics.

For example, the Deep Space Network forms one component of the gravity science experiment on Juno, which uses Juno's communication system together with special communication hardware. The DSN radiates a Ka-band uplink, which is picked up by Juno's Ka-band communication system and processed by a special communication box called KaTS; this new signal is then sent back to the DSN.

This allows the velocity of the spacecraft over time to be determined with enough precision to allow a more accurate determination of Jupiter's gravity field.
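The link between the radio signal and spacecraft velocity is the two-way Doppler shift. To first order, a signal sent at frequency f and turned around by a spacecraft receding at range rate v returns shifted by about -2vf/c. The sketch below assumes a coherent 1:1 turnaround and a representative Ka-band uplink of 34.4 GHz; the actual KaTS turnaround ratio, relativistic terms and media corrections are not modeled, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def two_way_doppler_shift_hz(range_rate_m_s, uplink_hz):
    """First-order two-way Doppler shift, assuming a coherent 1:1 turnaround.

    A positive range rate (spacecraft receding) lowers the received frequency.
    """
    return -2.0 * range_rate_m_s * uplink_hz / SPEED_OF_LIGHT_M_S


def range_rate_from_shift(shift_hz, uplink_hz):
    """Invert the first-order relation to recover line-of-sight velocity."""
    return -shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * uplink_hz)


KA_UPLINK_HZ = 34.4e9  # assumption: a representative Ka-band uplink frequency

shift = two_way_doppler_shift_hz(1.0, KA_UPLINK_HZ)
print(f"1 m/s range rate gives a two-way shift of {shift:.1f} Hz")
print(range_rate_from_shift(shift, KA_UPLINK_HZ))
```

Because the shift is proportional to the carrier frequency, a Ka-band link produces a larger shift per unit of velocity than an X-band link, which is one reason a higher-frequency link suits precise velocity measurements.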

Another radio science experiment is REX on the New Horizons spacecraft to Pluto-Charon. REX received a signal from Earth while the signal was occulted by Pluto, taking various measurements of that system of bodies.