Economics focuses on the behaviour and interactions of economic agents and how economies work. Microeconomics analyzes basic elements in the economy, including individual agents and markets, their interactions, and the outcomes of interactions. Individual agents may include, for example, households, firms, buyers, and sellers. Macroeconomics analyzes the economy as a system where production, consumption, saving, and investment interact, and factors affecting it: employment of the resources of labour, capital, and land, currency inflation, economic growth, and public policies that have impact on these elements.

Other broad distinctions within economics include those between positive economics, describing "what is", and normative economics, advocating "what ought to be"; between economic theory and applied economics; between rational and behavioural economics; and between mainstream economics and heterodox economics.

Economic analysis can be applied throughout society, in real estate, business, finance, health care, engineering and government. Economic analysis is also applied to such diverse subjects as crime, education, the family, law, politics, religion, social institutions, war, science, and the environment.

The multiple aspects of economics

The earlier term for the discipline was 'political economy'. In the late 19th century, primarily due to Alfred Marshall, it was renamed 'economics', as a shorter term for 'economic science'. At that time, it was becoming more open to rigorous thinking, including increased use of mathematics, which helped support efforts to have it accepted as a science separate from political science and other social sciences.

There are a variety of modern definitions of economics; some reflect evolving views of the subject or different views among economists. Scottish philosopher Adam Smith (1776) defined what was then called political economy as "an inquiry into the nature and causes of the wealth of nations", in particular as:

a branch of the science of a statesman or legislator [with the twofold objectives of providing] a plentiful revenue or subsistence for the people ... [and] to supply the state or commonwealth with a revenue for the publick services.

Jean-Baptiste Say (1803), distinguishing the subject from its public-policy uses, defines it as the science of production, distribution, and consumption of wealth. On the satirical side, Thomas Carlyle (1849) coined "the dismal science" as an epithet for classical economics, in this context, commonly linked to the pessimistic analysis of Malthus (1798). John Stuart Mill (1844) defines the subject in a social context as:

The science which traces the laws of such of the phenomena of society as arise from the combined operations of mankind for the production of wealth, in so far as those phenomena are not modified by the pursuit of any other object.

Alfred Marshall provides a still widely cited definition in his textbook Principles of Economics (1890) that extends analysis beyond wealth and from the societal to the microeconomic level:

Economics is a study of man in the ordinary business of life. It enquires how he gets his income and how he uses it. Thus, it is on the one side, the study of wealth and on the other and more important side, a part of the study of man.

Lionel Robbins (1932) developed implications of what has been termed "[p]erhaps the most commonly accepted current definition of the subject":

Economics is a science which studies human behaviour as a relationship between ends and scarce means which have alternative uses.

Robbins describes the definition as not classificatory in "pick[ing] out certain kinds of behaviour" but rather analytical in "focus[ing] attention on a particular aspect of behaviour, the form imposed by the influence of scarcity." He affirmed that previous economists had usually centred their studies on the analysis of wealth: how wealth is created (production), distributed, and consumed; and how wealth can grow. But he said that economics can be used to study other things, such as war, that are outside its usual focus. This is because war has a sought-after end (winning it), generates both costs and benefits, and uses resources (human life and other costs) to attain that end. If the war is not winnable or if the expected costs outweigh the benefits, the deciding actors (assuming they are rational) may never go to war (a decision) but rather explore other alternatives. Economics cannot be defined as the science that studies wealth, war, crime, education, and any other field economic analysis can be applied to, but rather as the science that studies a particular common aspect of each of those subjects (they all use scarce resources to attain a sought-after end).

Some subsequent comments criticized the definition as overly broad in failing to limit its subject matter to analysis of markets. From the 1960s, however, such comments abated as the economic theory of maximizing behaviour and rational-choice modelling expanded the domain of the subject to areas previously treated in other fields. There are other criticisms as well, such as in scarcity not accounting for the macroeconomics of high unemployment.

Gary Becker, a contributor to the expansion of economics into new areas, describes the approach he favours as "combin[ing the] assumptions of maximizing behaviour, stable preferences, and market equilibrium, used relentlessly and unflinchingly." One commentary characterizes the remark as making economics an approach rather than a subject matter but with great specificity as to the "choice process and the type of social interaction that [such] analysis involves." The same source reviews a range of definitions included in principles of economics textbooks and concludes that the lack of agreement need not affect the subject-matter that the texts treat. Among economists more generally, it argues that a particular definition presented may reflect the direction toward which the author believes economics is evolving, or should evolve.

History

Economic writings date from earlier Mesopotamian, Greek, Roman, Indian subcontinent, Chinese, Persian, and Arab civilizations. Economic precepts occur throughout the writings of the Boeotian poet Hesiod, and several economic historians have described Hesiod himself as the "first economist". Other notable writers from Antiquity through to the Renaissance include Aristotle, Xenophon, Chanakya (also known as Kautilya), Qin Shi Huang, Thomas Aquinas, and Ibn Khaldun. Joseph Schumpeter described the scholastic writers of the 16th and 17th centuries as "coming nearer than any other group to being the 'founders' of scientific economics" as to monetary, interest, and value theory within a natural-law perspective.

Two groups, who later were called "mercantilists" and "physiocrats", more directly influenced the subsequent development of the subject. Both groups were associated with the rise of economic nationalism and modern capitalism in Europe. Mercantilism was an economic doctrine that flourished from the 16th to 18th century in a prolific pamphlet literature, whether of merchants or statesmen. It held that a nation's wealth depended on its accumulation of gold and silver. Nations without access to mines could obtain gold and silver from trade only by selling goods abroad and restricting imports other than of gold and silver. The doctrine called for importing cheap raw materials to be used in manufacturing goods, which could be exported, and for state regulation to impose protective tariffs on foreign manufactured goods and prohibit manufacturing in the colonies.

Physiocrats, a group of 18th-century French thinkers and writers, developed the idea of the economy as a circular flow of income and output. Physiocrats believed that only agricultural production generated a clear surplus over cost, so that agriculture was the basis of all wealth. Thus, they opposed the mercantilist policy of promoting manufacturing and trade at the expense of agriculture, including import tariffs. Physiocrats advocated replacing administratively costly tax collections with a single tax on income of land owners. In reaction against copious mercantilist trade regulations, the physiocrats advocated a policy of laissez-faire, which called for minimal government intervention in the economy.

Adam Smith (1723–1790) was an early economic theorist. Smith was harshly critical of the mercantilists but described the physiocratic system "with all its imperfections" as "perhaps the purest approximation to the truth that has yet been published" on the subject.

Classical political economy

The publication of Adam Smith's The Wealth of Nations in 1776 has been described as "the effective birth of economics as a separate discipline." The book identified land, labour, and capital as the three factors of production and the major contributors to a nation's wealth, as distinct from the physiocratic idea that only agriculture was productive.

Smith discusses potential benefits of specialization by division of labour, including increased labour productivity and gains from trade, whether between town and country or across countries. His "theorem" that "the division of labor is limited by the extent of the market" has been described as the "core of a theory of the functions of firm and industry" and a "fundamental principle of economic organization." To Smith has also been ascribed "the most important substantive proposition in all of economics" and foundation of resource-allocation theory – that, under competition, resource owners (of labour, land, and capital) seek their most profitable uses, resulting in an equal rate of return for all uses in equilibrium (adjusted for apparent differences arising from such factors as training and unemployment).

In an argument that includes "one of the most famous passages in all economics," Smith represents every individual as trying to employ any capital they might command for their own advantage, not that of the society, and for the sake of profit, which is necessary at some level for employing capital in domestic industry, and positively related to the value of produce. In this:

He generally, indeed, neither intends to promote the public interest, nor knows how much he is promoting it. By preferring the support of domestic to that of foreign industry, he intends only his own security; and by directing that industry in such a manner as its produce may be of the greatest value, he intends only his own gain, and he is in this, as in many other cases, led by an invisible hand to promote an end which was no part of his intention. Nor is it always the worse for the society that it was no part of it. By pursuing his own interest he frequently promotes that of the society more effectually than when he really intends to promote it.

The Rev. Thomas Robert Malthus (1798) used the concept of diminishing returns to explain low living standards. Human population, he argued, tended to increase geometrically, outstripping the production of food, which increased arithmetically. The force of a rapidly growing population against a limited amount of land meant diminishing returns to labour. The result, he claimed, was chronically low wages, which prevented the standard of living for most of the population from rising above the subsistence level. Economist Julian Lincoln Simon has criticized Malthus's conclusions.
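The contrast between geometric and arithmetic growth can be made concrete with a short sketch. The starting values, the doubling ratio, and the function name `malthus_paths` are illustrative assumptions, not figures taken from Malthus.

```python
def malthus_paths(pop0, food0, growth_ratio, food_step, periods):
    """Return (population, food) series over `periods` periods.

    Population grows geometrically (multiplied by growth_ratio each period);
    food grows arithmetically (food_step is added each period).
    """
    population = [pop0 * growth_ratio ** t for t in range(periods + 1)]
    food = [food0 + food_step * t for t in range(periods + 1)]
    return population, food


# Hypothetical numbers: both series start at 1 unit.
population, food = malthus_paths(pop0=1, food0=1, growth_ratio=2,
                                 food_step=1, periods=5)
for t, (p, f) in enumerate(zip(population, food)):
    print(f"period {t}: population={p}, food={f}, food per head={f / p:.3f}")
```

Under these assumed numbers, food per head falls every period after the first, which is the mechanism behind the subsistence-level outcome Malthus described.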

While Adam Smith emphasized the production of income, David Ricardo (1817) focused on the distribution of income among landowners, workers, and capitalists. Ricardo saw an inherent conflict between landowners on the one hand and labour and capital on the other. He posited that the growth of population and capital, pressing against a fixed supply of land, pushes up rents and holds down wages and profits. Ricardo was the first to state and prove the principle of comparative advantage, according to which each country should specialize in producing and exporting goods in which it has a lower relative cost of production, rather than relying only on its own production. It has been termed a "fundamental analytical explanation" for gains from trade.
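A minimal sketch of the comparative-advantage comparison follows. The labour-hour figures are illustrative two-country, two-good numbers in the style of the textbook England and Portugal example, and the function names are hypothetical. The point it shows is that the relevant comparison is relative (opportunity) cost, not absolute cost.

```python
def opportunity_cost(hours, good, other):
    """Units of `other` forgone to produce one unit of `good`."""
    return hours[good] / hours[other]


def comparative_advantage(costs, good, other):
    """Return the country with the lowest opportunity cost of `good`."""
    return min(costs, key=lambda c: opportunity_cost(costs[c], good, other))


# Illustrative labour hours needed per unit of output (assumed values).
labour_hours = {
    "England": {"cloth": 100, "wine": 120},
    "Portugal": {"cloth": 90, "wine": 80},
}

for good, other in (("cloth", "wine"), ("wine", "cloth")):
    winner = comparative_advantage(labour_hours, good, other)
    print(f"{good}: comparative advantage lies with {winner}")
```

In this example Portugal needs fewer hours for both goods, yet England still has the lower relative cost of cloth, so specialization and trade can benefit both.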

Coming at the end of the classical tradition, John Stuart Mill (1848) parted company with the earlier classical economists on the inevitability of the distribution of income produced by the market system. Mill pointed to a distinct difference between the market's two roles: allocation of resources and distribution of income. The market might be efficient in allocating resources but not in distributing income, he wrote, making it necessary for society to intervene.

Value theory was important in classical theory. Smith wrote that the "real price of every thing ... is the toil and trouble of acquiring it". Smith maintained that, with rent and profit, other costs besides wages also enter the price of a commodity. Other classical economists presented variations on Smith, termed the 'labour theory of value'. Classical economics focused on the tendency of any market economy to settle in a final stationary state made up of a constant stock of physical wealth (capital) and a constant population size.

Marxian economics

Marxist (later, Marxian) economics descends from classical economics and it derives from the work of Karl Marx. The first volume of Marx's major work, Das Kapital, was published in German in 1867. In it, Marx focused on the labour theory of value and the theory of surplus value which, he believed, explained the exploitation of labour by capital. The labour theory of value held that the value of an exchanged commodity was determined by the labour that went into its production and the theory of surplus value demonstrated how the workers only got paid a proportion of the value their work had created.

Neoclassical economics

At its dawn as a social science, economics was defined and discussed at length as the study of production, distribution, and consumption of wealth by Jean-Baptiste Say in his Treatise on Political Economy or, The Production, Distribution, and Consumption of Wealth (1803). These three items are considered by the science only in relation to the increase or diminution of wealth, and not in reference to their processes of execution. Say's definition has prevailed up to our time, save for the substitution of "goods and services" for the word "wealth", meaning that wealth may include non-material objects as well. One hundred and thirty years later, Lionel Robbins noticed that this definition no longer sufficed, because many economists were making theoretical and philosophical inroads in other areas of human activity. In his Essay on the Nature and Significance of Economic Science, he proposed a definition of economics as a study of a particular aspect of human behaviour, the one that falls under the influence of scarcity, which forces people to choose, allocate scarce resources to competing ends, and economize (seeking the greatest welfare while avoiding the wasting of scarce resources). For Robbins, the insufficiency was solved, and his definition allows us to proclaim, with an easy conscience, education economics, safety and security economics, health economics, war economics, and of course, production, distribution and consumption economics as valid subjects of economic science. Citing Robbins: "Economics is the science which studies human behavior as a relationship between ends and scarce means which have alternative uses". After decades of discussion, Robbins' definition became widely accepted by mainstream economists, and it has found its way into current textbooks.

Although acceptance is far from unanimous, most mainstream economists would accept some version of Robbins' definition, even though many have raised serious objections to the scope and method of economics that emanate from that definition. Because of the lack of strong consensus, and because production, distribution and consumption of goods and services is the prime area of study of economics, the old definition still stands in many quarters.

A body of theory later termed "neoclassical economics" or "marginalism" formed from about 1870 to 1910. The term "economics" was popularized by such neoclassical economists as Alfred Marshall as a concise synonym for "economic science" and a substitute for the earlier "political economy". This corresponded to the influence on the subject of mathematical methods used in the natural sciences.

Neoclassical economics systematized supply and demand as joint determinants of price and quantity in market equilibrium, affecting both the allocation of output and the distribution of income. It dispensed with the labour theory of value inherited from classical economics in favour of a marginal utility theory of value on the demand side and a more general theory of costs on the supply side. In the 20th century, neoclassical theorists moved away from an earlier notion suggesting that total utility for a society could be measured in favour of ordinal utility, which hypothesizes merely behaviour-based relations across persons.

In microeconomics, neoclassical economics represents incentives and costs as playing a pervasive role in shaping decision making. An immediate example of this is the consumer theory of individual demand, which isolates how prices (as costs) and income affect quantity demanded. In macroeconomics it is reflected in an early and lasting neoclassical synthesis with Keynesian macroeconomics.

Neoclassical economics is occasionally referred to as orthodox economics, whether by its critics or sympathizers. Modern mainstream economics builds on neoclassical economics but with many refinements that either supplement or generalize earlier analysis, such as econometrics, game theory, analysis of market failure and imperfect competition, and the neoclassical model of economic growth for analysing long-run variables affecting national income.

Neoclassical economics studies the behaviour of individuals, households, and organizations (called economic actors, players, or agents), when they manage or use scarce resources, which have alternative uses, to achieve desired ends. Agents are assumed to act rationally, have multiple desirable ends in sight, limited resources to obtain these ends, a set of stable preferences, a definite overall guiding objective, and the capability of making a choice. There exists an economic problem, subject to study by economic science, when a decision (choice) is made by one or more resource-controlling players to attain the best possible outcome under bounded rational conditions. In other words, resource-controlling agents maximize value subject to the constraints imposed by the information the agents have, their cognitive limitations, and the finite amount of time they have to make and execute a decision. Economic science centres on the activities of the economic agents that comprise society. They are the focus of economic analysis.

An approach to understanding these processes, through the study of agent behaviour under scarcity, may go as follows:

The continuous interplay (exchange or trade) done by economic actors in all markets sets the prices for all goods and services which, in turn, make the rational managing of scarce resources possible. At the same time, the decisions (choices) made by the same actors, while they are pursuing their own interest, determine the level of output (production), consumption, savings, and investment, in an economy, as well as the remuneration (distribution) paid to the owners of labour (in the form of wages), capital (in the form of profits) and land (in the form of rent). Each period, as if they were in a giant feedback system, economic players influence the pricing processes and the economy, and are in turn influenced by them until a steady state (equilibrium) of all variables involved is reached or until an external shock throws the system toward a new equilibrium point. Because of the autonomous actions of rational interacting agents, the economy is a complex adaptive system.

Keynesian economics

Keynesian economics derives from John Maynard Keynes, in particular his book The General Theory of Employment, Interest and Money (1936), which ushered in contemporary macroeconomics as a distinct field. The book focused on determinants of national income in the short run when prices are relatively inflexible. Keynes attempted to explain in broad theoretical detail why high labour-market unemployment might not be self-correcting due to low "effective demand" and why even price flexibility and monetary policy might be unavailing. The term "revolutionary" has been applied to the book in its impact on economic analysis.

Keynesian economics has two successors. Post-Keynesian economics also concentrates on macroeconomic rigidities and adjustment processes. Research on micro foundations for their models is represented as based on real-life practices rather than simple optimizing models. It is generally associated with the University of Cambridge and the work of Joan Robinson.

New-Keynesian economics is also associated with developments in the Keynesian fashion. Within this group researchers tend to share with other economists the emphasis on models employing micro foundations and optimizing behaviour but with a narrower focus on standard Keynesian themes such as price and wage rigidity. These are usually made to be endogenous features of the models, rather than simply assumed as in older Keynesian-style ones.

Chicago school of economics

The Chicago School of economics is best known for its free market advocacy and monetarist ideas. According to Milton Friedman and monetarists, market economies are inherently stable if the money supply does not greatly expand or contract. Ben Bernanke, former Chairman of the Federal Reserve, is among the economists today generally accepting Friedman's analysis of the causes of the Great Depression.

Milton Friedman effectively took many of the basic principles set forth by Adam Smith and the classical economists and modernized them. One example of this is his article in the 13 September 1970 issue of The New York Times Magazine, in which he claims that the social responsibility of business should be "to use its resources and engage in activities designed to increase its profits ... (through) open and free competition without deception or fraud."

Other schools and approaches

Other well-known schools or trends of thought referring to a particular style of economics practised at and disseminated from well-defined groups of academicians that have become known worldwide, include the Austrian School, the Freiburg School, the School of Lausanne, post-Keynesian economics and the Stockholm school. Contemporary mainstream economics is sometimes separated into the Saltwater approach of those universities along the Eastern and Western coasts of the US, and the Freshwater, or Chicago-school approach.

Within macroeconomics there are, in general order of their historical appearance in the literature: classical economics, neoclassical economics, Keynesian economics, the neoclassical synthesis, monetarism, new classical economics, New Keynesian economics and the new neoclassical synthesis. In general, alternative developments include ecological economics, constitutional economics, institutional economics, evolutionary economics, dependency theory, structuralist economics, world systems theory, econophysics, feminist economics and biophysical economics.

Methodology

Theoretical research

Mainstream economic theory relies upon a priori quantitative economic models, which employ a variety of concepts. Theory typically proceeds with an assumption of ceteris paribus, which means holding constant explanatory variables other than the one under consideration. When creating theories, the objective is to find ones which are at least as simple in information requirements, more precise in predictions, and more fruitful in generating additional research than prior theories. While neoclassical economic theory constitutes both the dominant or orthodox theoretical as well as methodological framework, economic theory can also take the form of other schools of thought such as in heterodox economic theories.

In microeconomics, principal concepts include supply and demand, marginalism, rational choice theory, opportunity cost, budget constraints, utility, and the theory of the firm. Early macroeconomic models focused on modelling the relationships between aggregate variables, but as the relationships appeared to change over time, macroeconomists, including new Keynesians, reformulated their models with microfoundations.

The aforementioned microeconomic concepts play a major part in macroeconomic models. For instance, in monetary theory, the quantity theory of money predicts that increases in the growth rate of the money supply increase inflation, and inflation is assumed to be influenced by rational expectations. In development economics, slower growth in developed nations has sometimes been predicted because of the declining marginal returns of investment and capital, and this has been observed in the Four Asian Tigers. Sometimes an economic hypothesis is only qualitative, not quantitative.
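The quantity-theory prediction mentioned above can be sketched numerically. The sketch assumes the standard equation of exchange, MV = PY (money stock times velocity equals the price level times real output), which is not spelled out in the text, and uses its growth-rate approximation. The function name and the sample growth rates are hypothetical.

```python
def implied_inflation(money_growth, velocity_growth=0.0, real_output_growth=0.0):
    """Approximate inflation implied by the equation of exchange MV = PY.

    In growth rates (a first-order approximation for small rates):
        inflation ~ money growth + velocity growth - real output growth
    """
    return money_growth + velocity_growth - real_output_growth


# Assumed rates: velocity constant, real output growing 2% per year.
for m in (0.02, 0.05, 0.10):
    pi = implied_inflation(m, velocity_growth=0.0, real_output_growth=0.02)
    print(f"money growth {m:.0%} -> implied inflation {pi:.0%}")
```

With velocity and output growth held fixed, faster money growth maps one-for-one into higher implied inflation. That is the prediction in its simplest form. It says nothing about how expectations form.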

Expositions of economic reasoning often use two-dimensional graphs to illustrate theoretical relationships. At a higher level of generality, Paul Samuelson's treatise Foundations of Economic Analysis (1947) used mathematical methods beyond graphs to represent the theory, particularly as to maximizing behavioural relations of agents reaching equilibrium. The book focused on examining the class of statements called operationally meaningful theorems in economics, which are theorems that can conceivably be refuted by empirical data.

Empirical research

Economic theories are frequently tested empirically, largely through the use of econometrics using economic data. The controlled experiments common to the physical sciences are difficult and uncommon in economics, and instead broad data is observationally studied; this type of testing is typically regarded as less rigorous than controlled experimentation, and the conclusions typically more tentative. However, the field of experimental economics is growing, and increasing use is being made of natural experiments.

Statistical methods such as regression analysis are common. Practitioners use such methods to estimate the size, economic significance, and statistical significance ("signal strength") of the hypothesized relation(s) and to adjust for noise from other variables. By such means, a hypothesis may gain acceptance, although in a probabilistic, rather than certain, sense. Acceptance is dependent upon the falsifiable hypothesis surviving tests. Use of commonly accepted methods need not produce a final conclusion or even a consensus on a particular question, given different tests, data sets, and prior beliefs.
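As an illustration of the estimation step described above, the following sketch fits a one-variable ordinary least squares regression and reports the estimated size of the relation (the slope) together with a t-statistic as a rough gauge of statistical significance. The data are made up for the example, and `ols_simple` is a hypothetical helper, not a library function.

```python
import math


def ols_simple(x, y):
    """Ordinary least squares for y = intercept + slope * x + error."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variance uses n - 2 degrees of freedom (two estimated parameters).
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in residuals) / (n - 2)
    se_slope = math.sqrt(sigma2 / sxx)
    return {
        "slope": slope,
        "intercept": intercept,
        "se_slope": se_slope,
        "t_stat": slope / se_slope,
    }


# Made-up observations (for example, an input and an outcome measure).
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
result = ols_simple(x, y)
print({k: round(v, 4) for k, v in result.items()})
```

A large t-statistic makes it less likely that the estimated relation is noise, but it does not by itself establish economic significance or causation.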

Criticisms based on professional standards and non-replicability of results serve as further checks against bias, errors, and over-generalization, although much economic research has been accused of being non-replicable, and prestigious journals have been accused of not facilitating replication through the provision of the code and data. Like theories, uses of test statistics are themselves open to critical analysis, although critical commentary on papers in economics in prestigious journals such as the American Economic Review has declined precipitously in the past 40 years. This has been attributed to journals' incentives to maximize citations in order to rank higher on the Social Science Citation Index (SSCI).

In applied economics, input–output models employing linear programming methods are quite common. Large amounts of data are run through computer programs to analyse the impact of certain policies; IMPLAN is one well-known example.

Experimental economics has promoted the use of scientifically controlled experiments. This has reduced the long-noted distinction of economics from natural sciences because it allows direct tests of what were previously taken as axioms. In some cases these have found that the axioms are not entirely correct; for example, the ultimatum game has revealed that people reject unequal offers.

In behavioural economics, psychologist Daniel Kahneman won the Nobel Prize in economics in 2002 for his and Amos Tversky's empirical discovery of several cognitive biases and heuristics. Similar empirical testing occurs in neuroeconomics. Another example is the assumption of narrowly selfish preferences versus a model that tests for selfish, altruistic, and cooperative preferences. These techniques have led some to argue that economics is a "genuine science".

Branches of economics

Microeconomics

Microeconomics examines how entities, forming a market structure, interact within a market to create a market system. These entities include private and public players with various classifications, typically operating under scarcity of tradable units and light government regulation. The item traded may be a tangible product such as apples or a service such as repair services, legal counsel, or entertainment.

In theory, in a free market the aggregates (sums) of quantity demanded by buyers and quantity supplied by sellers may reach economic equilibrium over time in reaction to price changes; in practice, various issues may prevent equilibrium, and any equilibrium reached may not necessarily be morally equitable. For example, if the supply of healthcare services is limited by external factors, the equilibrium price may be unaffordable for many who desire those services.
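A minimal sketch of a market-clearing price follows, assuming linear demand and supply curves. The functional forms, the coefficient values, and the function names are illustrative assumptions. Real markets need not be linear or reach equilibrium at all.

```python
def quantity_demanded(price, a=100.0, b=2.0):
    """Assumed linear demand: Qd = a - b * P."""
    return a - b * price


def quantity_supplied(price, c=10.0, d=1.0):
    """Assumed linear supply: Qs = c + d * P."""
    return c + d * price


def equilibrium(a=100.0, b=2.0, c=10.0, d=1.0):
    """Solve a - b*P = c + d*P for the market-clearing price and quantity."""
    price = (a - c) / (b + d)
    return price, a - b * price


p_star, q_star = equilibrium()
print(f"equilibrium price = {p_star}, quantity = {q_star}")

# Below the equilibrium price, quantity demanded exceeds quantity supplied.
shortage = quantity_demanded(20.0) - quantity_supplied(20.0)
print(f"excess demand at price 20: {shortage}")
```

The excess demand below the equilibrium price is what, in theory, pushes the price upward until the two quantities match.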

Various market structures exist. In perfectly competitive markets, no participants are large enough to have the market power to set the price of a homogeneous product. In other words, every participant is a "price taker" as no participant influences the price of a product. In the real world, markets often experience imperfect competition.

Forms include monopoly (in which there is only one seller of a good), duopoly (in which there are only two sellers of a good), oligopoly (in which there are few sellers of a good), monopolistic competition (in which there are many sellers producing highly differentiated goods), monopsony (in which there is only one buyer of a good), and oligopsony (in which there are few buyers of a good). Unlike perfect competition, imperfect competition invariably means market power is unequally distributed. Firms under imperfect competition have the potential to be "price makers", which means that, by holding a disproportionately high share of market power, they can influence the prices of their products.

Microeconomics studies individual markets by simplifying the economic system, assuming that activity in the market being analysed does not affect other markets. This method of analysis is known as partial-equilibrium analysis (supply and demand). It aggregates (sums all activity) in only one market. General-equilibrium theory studies various markets and their behaviour. It aggregates (sums all activity) across all markets and studies both changes in markets and their interactions leading towards equilibrium.

Production, cost, and efficiency

In microeconomics, production is the conversion of inputs into outputs. It is an economic process that uses inputs to create a commodity or a service for exchange or direct use. Production is a flow and thus a rate of output per period of time. Distinctions include such production alternatives as for consumption (food, haircuts, etc.) vs. investment goods (new tractors, buildings, roads, etc.), public goods (national defence, smallpox vaccinations, etc.) or private goods (new computers, bananas, etc.), and "guns" vs "butter".

Opportunity cost is the economic cost of production: the value of the next best opportunity forgone. Choices must be made between desirable yet mutually exclusive actions. It has been described as expressing "the basic relationship between scarcity and choice". For example, if a baker uses a sack of flour to make pretzels one morning, then the baker cannot use either the flour or the morning to make bagels instead. Part of the cost of making pretzels is that neither the flour nor the morning is available any longer for use in some other way. The opportunity cost of an activity is an element in ensuring that scarce resources are used efficiently, such that the cost is weighed against the value of that activity in deciding on more or less of it. Opportunity costs are not restricted to monetary or financial costs but could be measured by the real cost of output forgone, leisure, or anything else that provides the alternative benefit (utility).

Inputs used in the production process include such primary factors of production as labour services, capital (durable produced goods used in production, such as an existing factory), and land (including natural resources). Other inputs may include intermediate goods used in production of final goods, such as the steel in a new car.

Economic efficiency measures how well a system generates desired output with a given set of inputs and available technology. Efficiency is improved if more output is generated without changing inputs, or in other words, the amount of "waste" is reduced. A widely accepted general standard is Pareto efficiency, which is reached when no further change can make someone better off without making someone else worse off.

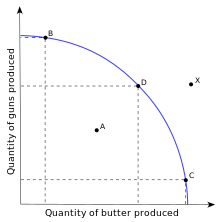

The production–possibility frontier (PPF) is an expository figure for representing scarcity, cost, and efficiency. In the simplest case an economy can produce just two goods (say "guns" and "butter"). The PPF is a table or graph (as at the right) showing the different quantity combinations of the two goods producible with a given technology and total factor inputs, which limit feasible total output. Each point on the curve shows potential total output for the economy, which is the maximum feasible output of one good, given a feasible output quantity of the other good.

Scarcity is represented in the figure by people being willing but unable in the aggregate to consume beyond the PPF (such as at X) and by the negative slope of the curve. If production of one good increases along the curve, production of the other good decreases, an inverse relationship. This is because increasing output of one good requires transferring inputs to it from production of the other good, decreasing the latter.

The slope of the curve at a point on it gives the trade-off between the two goods. It measures what an additional unit of one good costs in units forgone of the other good, an example of a real opportunity cost. Thus, if one more Gun costs 100 units of butter, the opportunity cost of one Gun is 100 Butter. Along the PPF, scarcity implies that choosing more of one good in the aggregate entails doing with less of the other good. Still, in a market economy, movement along the curve may indicate that the choice of the increased output is anticipated to be worth the cost to the agents.

By construction, each point on the curve shows productive efficiency in maximizing output for given total inputs. A point inside the curve (as at A), is feasible but represents production inefficiency (wasteful use of inputs), in that output of one or both goods could increase by moving in a northeast direction to a point on the curve. Examples cited of such inefficiency include high unemployment during a business-cycle recession or economic organization of a country that discourages full use of resources. Being on the curve might still not fully satisfy allocative efficiency (also called Pareto efficiency) if it does not produce a mix of goods that consumers prefer over other points.
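The PPF reasoning above can be sketched in a few lines of Python. This is a minimal illustration, not a model of any real economy: the straight-line frontier, the capacity of 5,000 units of butter, and the function names are assumptions made for the example. Only the trade-off of 100 units of butter per gun comes from the text, and a PPF is more commonly drawn bowed outward than linear.

```python
# Hypothetical straight-line PPF for a two-good economy ("guns" and "butter").
BUTTER_PER_GUN = 100   # trade-off used in the text's example
MAX_BUTTER = 5000      # assumed butter output when no guns are produced
MAX_GUNS = MAX_BUTTER // BUTTER_PER_GUN  # 50 guns when no butter is produced


def max_butter(guns):
    """Largest butter output that is feasible at a given gun output."""
    if not 0 <= guns <= MAX_GUNS:
        raise ValueError("gun output is outside the feasible range")
    return MAX_BUTTER - BUTTER_PER_GUN * guns


def opportunity_cost_of_gun(guns):
    """Butter forgone by producing one more gun, starting from `guns`."""
    return max_butter(guns) - max_butter(guns + 1)


def classify(guns, butter):
    """Label an output combination relative to the frontier."""
    if not 0 <= guns <= MAX_GUNS or butter < 0:
        return "infeasible"
    frontier = max_butter(guns)
    if butter > frontier:
        return "infeasible"      # beyond the PPF, like point X
    if butter == frontier:
        return "efficient"       # on the curve
    return "inefficient"         # inside the curve, like point A


print(opportunity_cost_of_gun(10))  # 100
print(classify(10, 4000))           # efficient
print(classify(10, 3000))           # inefficient
print(classify(10, 4500))           # infeasible
```

On a linear frontier the opportunity cost is the same at every point; a bowed-out frontier would make `opportunity_cost_of_gun` rise as gun output increases.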

Much applied economics in public policy is concerned with determining how the efficiency of an economy can be improved. Recognizing the reality of scarcity and then figuring out how to organize society for the most efficient use of resources has been described as the "essence of economics", where the subject "makes its unique contribution."

Specialization

Specialization is considered key to economic efficiency based on theoretical and empirical considerations. Different individuals or nations may have different real opportunity costs of production, say from differences in stocks of human capital per worker or capital/labour ratios. According to theory, this may give a comparative advantage in production of goods that make more intensive use of the relatively more abundant, thus relatively cheaper, input.

Even if one region has an absolute advantage as to the ratio of its outputs to inputs in every type of output, it may still specialize in the output in which it has a comparative advantage and thereby gain from trading with a region that lacks any absolute advantage but has a comparative advantage in producing something else.
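The distinction between absolute and comparative advantage can be made concrete with a small numerical sketch. The two regions, the two goods, and the labour-hour figures below are invented for illustration; the code only compares opportunity costs, which is the comparison the theory relies on.

```python
from fractions import Fraction

# Hypothetical labour hours needed to produce one unit of each good.
# Region A needs fewer hours for both goods, so it has an absolute
# advantage in both.
HOURS = {
    "A": {"cloth": 1, "wine": 2},
    "B": {"cloth": 6, "wine": 3},
}


def opportunity_cost(region, good, other):
    """Units of `other` forgone to make one more unit of `good`."""
    return Fraction(HOURS[region][good], HOURS[region][other])


def comparative_advantage(good, other):
    """Region with the lowest opportunity cost of producing `good`."""
    return min(HOURS, key=lambda region: opportunity_cost(region, good, other))


print(opportunity_cost("A", "cloth", "wine"))   # 1/2
print(opportunity_cost("B", "cloth", "wine"))   # 2
print(comparative_advantage("cloth", "wine"))   # A
print(comparative_advantage("wine", "cloth"))   # B
```

Although region B is less productive in both goods, it gives up less cloth per unit of wine than region A does, so under these assumed numbers both regions can gain if A specializes in cloth and B in wine.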

It has been observed that a high volume of trade occurs among regions even with access to a similar technology and mix of factor inputs, including high-income countries. This has led to investigation of economies of scale and agglomeration to explain specialization in similar but differentiated product lines, to the overall benefit of respective trading parties or regions.

The general theory of specialization applies to trade among individuals, farms, manufacturers, service providers, and economies. Among each of these production systems, there may be a corresponding division of labour with different work groups specializing, or correspondingly different types of capital equipment and differentiated land uses.

An example that combines features above is a country that specializes in the production of high-tech knowledge products, as developed countries do, and trades with developing nations for goods produced in factories where labour is relatively cheap and plentiful, resulting in differences in opportunity costs of production. More total output and utility thereby result from specializing in production and trading than if each country produced its own high-tech and low-tech products.

Theory and observation set out the conditions such that market prices of outputs and productive inputs select an allocation of factor inputs by comparative advantage, so that (relatively) low-cost inputs go to producing low-cost outputs. In the process, aggregate output may increase as a by-product or by design. Such specialization of production creates opportunities for gains from trade whereby resource owners benefit from trade in the sale of one type of output for other, more highly valued goods. A measure of gains from trade is the increased income levels that trade may facilitate.

Supply and demand

Prices and quantities have been described as the most directly observable attributes of goods produced and exchanged in a market economy. The theory of supply and demand is an organizing principle for explaining how prices coordinate the amounts produced and consumed. In microeconomics, it applies to price and output determination for a market with perfect competition, which includes the condition of no buyers or sellers large enough to have price-setting power.

For a given market of a commodity, demand is the relation of the quantity that all buyers would be prepared to purchase at each unit price of the good. Demand is often represented by a table or a graph showing price and quantity demanded (as in the figure). Demand theory describes individual consumers as rationally choosing the most preferred quantity of each good, given income, prices, tastes, etc. A term for this is "constrained utility maximization" (with income and wealth as the constraints on demand). Here, utility refers to the hypothesized relation of each individual consumer for ranking different commodity bundles as more or less preferred.

The law of demand states that, in general, price and quantity demanded in a given market are inversely related. That is, the higher the price of a product, the less of it people would be prepared to buy (other things unchanged). As the price of a commodity falls, consumers move toward it from relatively more expensive goods (the substitution effect). In addition, purchasing power from the price decline increases ability to buy (the income effect). Other factors can change demand; for example an increase in income will shift the demand curve for a normal good outward relative to the origin, as in the figure. All determinants are predominantly taken as constant factors of demand and supply.

Supply is the relation between the price of a good and the quantity available for sale at that price. It may be represented as a table or graph relating price and quantity supplied. Producers, for example business firms, are hypothesized to be profit maximizers, meaning that they attempt to produce and supply the amount of goods that will bring them the highest profit. Supply is typically represented as a function relating price and quantity, if other factors are unchanged.

That is, the higher the price at which the good can be sold, the more of it producers will supply, as in the figure. The higher price makes it profitable to increase production. Just as on the demand side, the position of the supply curve can shift, say from a change in the price of a productive input or a technical improvement. The "Law of Supply" states that, in general, a rise in price leads to an expansion in supply and a fall in price leads to a contraction in supply. Here as well, the determinants of supply, such as the price of substitutes, the cost of production, the technology applied, and the various factor inputs of production, are all taken to be constant for a specific time period of evaluation of supply.

Market equilibrium occurs where quantity supplied equals quantity demanded, the intersection of the supply and demand curves in the figure above. At a price below equilibrium, there is a shortage of quantity supplied compared to quantity demanded. This is posited to bid the price up. At a price above equilibrium, there is a surplus of quantity supplied compared to quantity demanded. This pushes the price down. The model of supply and demand predicts that for given supply and demand curves, price and quantity will stabilize at the price that makes quantity supplied equal to quantity demanded. Similarly, demand-and-supply theory predicts a new price-quantity combination from a shift in demand (as in the figure), or in supply.
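The equilibrium logic described above can be illustrated with a small sketch. The linear curves and every coefficient below are assumptions chosen to give round numbers; real demand and supply relations need not be linear.

```python
# Assumed linear curves: Qd = a - b*P (demand) and Qs = c + d*P (supply).

def quantity_demanded(price, a=100.0, b=2.0):
    return a - b * price


def quantity_supplied(price, c=10.0, d=1.0):
    return c + d * price


def equilibrium(a=100.0, b=2.0, c=10.0, d=1.0):
    """Price and quantity at which Qd equals Qs for the linear curves."""
    price = (a - c) / (b + d)
    return price, a - b * price


def excess_demand(price, a=100.0, b=2.0, c=10.0, d=1.0):
    """Positive values indicate a shortage, negative values a surplus."""
    return quantity_demanded(price, a, b) - quantity_supplied(price, c, d)


print(equilibrium())          # (30.0, 40.0)
print(excess_demand(20.0))    # 30.0, a shortage below the equilibrium price
print(excess_demand(40.0))    # -30.0, a surplus above the equilibrium price
print(equilibrium(a=130.0))   # (40.0, 50.0) after an outward demand shift
```

Raising the demand intercept `a` stands in for an outward shift of the demand curve, such as an increase in income for a normal good; both the equilibrium price and quantity rise in this example.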

Firms

People frequently do not trade directly on markets. Instead, on the supply side, they may work in and produce through firms. The most obvious kinds of firms are corporations, partnerships and trusts. According to Ronald Coase, people begin to organize their production in firms when the cost of doing business within a firm becomes lower than the cost of doing it on the market. Firms combine labour and capital, and can achieve far greater economies of scale (when the average cost per unit declines as more units are produced) than individual market trading.

In perfectly competitive markets studied in the theory of supply and demand, there are many producers, none of which significantly influence price. Industrial organization generalizes from that special case to study the strategic behaviour of firms that do have significant control of price. It considers the structure of such markets and their interactions. Common market structures studied besides perfect competition include monopolistic competition, various forms of oligopoly, and monopoly.

Managerial economics applies microeconomic analysis to specific decisions in business firms or other management units. It draws heavily from quantitative methods such as operations research and programming and from statistical methods such as regression analysis in the absence of certainty and perfect knowledge. A unifying theme is the attempt to optimize business decisions, including unit-cost minimization and profit maximization, given the firm's objectives and constraints imposed by technology and market conditions.

Uncertainty and game theory

Uncertainty in economics is an unknown prospect of gain or loss, whether quantifiable as risk or not. Without it, household behaviour would be unaffected by uncertain employment and income prospects, financial and capital markets would reduce to exchange of a single instrument in each market period, and there would be no communications industry. Given its different forms, there are various ways of representing uncertainty and modelling economic agents' responses to it.

Game theory is a branch of applied mathematics that considers strategic interactions between agents, one kind of uncertainty. It provides a mathematical foundation of industrial organization, discussed above, to model different types of firm behaviour, for example in an oligopolistic industry (few sellers), but is equally applicable to wage negotiations, bargaining, contract design, and any situation where individual agents are few enough to have perceptible effects on each other. In behavioural economics, it has been used to model the strategies agents choose when interacting with others whose interests are at least partially adverse to their own.

In this, it generalizes maximization approaches developed to analyse market actors such as in the supply and demand model and allows for incomplete information of actors. The field dates from the 1944 classic Theory of Games and Economic Behavior by John von Neumann and Oskar Morgenstern. It has significant applications seemingly outside of economics in such diverse subjects as formulation of nuclear strategies, ethics, political science, and evolutionary biology.
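As a minimal sketch of strategic interaction among a few sellers, the snippet below checks every strategy pair of a two-firm pricing game for a pure-strategy Nash equilibrium, that is, a pair from which neither firm can gain by changing its own choice alone. The payoff numbers are invented and have the structure of a prisoner's dilemma; the function names are likewise assumptions of this example.

```python
from itertools import product

STRATEGIES = ("high", "low")  # each firm chooses a high or a low price

# Hypothetical profits: PAYOFFS[(firm_1_choice, firm_2_choice)] = (profit_1, profit_2)
PAYOFFS = {
    ("high", "high"): (10, 10),
    ("high", "low"): (2, 12),
    ("low", "high"): (12, 2),
    ("low", "low"): (5, 5),
}


def is_nash(choice_1, choice_2):
    """True if neither firm can raise its own profit by deviating alone."""
    best_for_1 = all(
        PAYOFFS[(choice_1, choice_2)][0] >= PAYOFFS[(alt, choice_2)][0]
        for alt in STRATEGIES
    )
    best_for_2 = all(
        PAYOFFS[(choice_1, choice_2)][1] >= PAYOFFS[(choice_1, alt)][1]
        for alt in STRATEGIES
    )
    return best_for_1 and best_for_2


def pure_nash_equilibria():
    return [pair for pair in product(STRATEGIES, repeat=2) if is_nash(*pair)]


print(pure_nash_equilibria())  # [('low', 'low')]
```

With these payoffs both firms would earn more by jointly pricing high, yet each has an incentive to undercut the other, so the only equilibrium is for both to price low.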

Risk aversion may stimulate activity that in well-functioning markets smooths out risk and communicates information about risk, as in markets for insurance, commodity futures contracts, and financial instruments. Financial economics or simply finance describes the allocation of financial resources. It also analyses the pricing of financial instruments, the financial structure of companies, the efficiency and fragility of financial markets, financial crises, and related government policy or regulation.

Some market organizations may give rise to inefficiencies associated with uncertainty. Based on George Akerlof's "Market for Lemons" article, the paradigm example is of a dodgy second-hand car market. Customers without knowledge of whether a car is a "lemon" depress its price below what a quality second-hand car would be. Information asymmetry arises here, if the seller has more relevant information than the buyer but no incentive to disclose it. Related problems in insurance are adverse selection, such that those at most risk are most likely to insure (say reckless drivers), and moral hazard, such that insurance results in riskier behaviour (say more reckless driving).

Both problems may raise insurance costs and reduce efficiency by driving otherwise willing transactors from the market ("incomplete markets"). Moreover, attempting to reduce one problem, say adverse selection by mandating insurance, may add to another, say moral hazard. Information economics, which studies such problems, has relevance in subjects such as insurance, contract law, mechanism design, monetary economics, and health care. Applied subjects include market and legal remedies to spread or reduce risk, such as warranties, government-mandated partial insurance, restructuring or bankruptcy law, inspection, and regulation for quality and information disclosure.
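The second-hand car example above can be sketched as a simple unravelling process. The model is a deliberate simplification with assumed numbers: each seller knows the car's quality and sells only if the price covers it, while buyers cannot tell cars apart and pay 120% of the average quality of the cars currently on offer. The function name and the premium parameter are hypothetical.

```python
def surviving_cars(qualities, buyer_premium_pct=120):
    """Return the cars still offered, and the price, once no seller withdraws.

    qualities: the value of each car to its seller (known only to the seller).
    buyer_premium_pct: what buyers would pay, as a percentage of quality.
    """
    offered = sorted(qualities)
    while offered:
        # Buyers see only the average quality of the cars on offer.
        price = sum(offered) * buyer_premium_pct / (len(offered) * 100)
        # Sellers whose car is worth more than the price withdraw it.
        staying = [quality for quality in offered if quality <= price]
        if staying == offered:
            return offered, price
        offered = staying
    return [], 0.0


print(surviving_cars([1000, 2000, 3000, 4000]))  # ([1000], 1200.0)
```

Every car in this example is worth more to a buyer than to its seller, so all four trades would be mutually beneficial under full information. With asymmetric information the better cars are withdrawn round by round and only the lowest-quality car is traded.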

Market failure

The term "market failure" encompasses several problems which may undermine standard economic assumptions. Although economists categorize market failures differently, the following categories emerge in the main texts.

Information asymmetries and incomplete markets may result in economic inefficiency but also a possibility of improving efficiency through market, legal, and regulatory remedies, as discussed above.

Natural monopoly, or the overlapping concepts of "practical" and "technical" monopoly, is an extreme case of failure of competition as a restraint on producers. Extreme economies of scale are one possible cause.

Public goods are goods which are under-supplied in a typical market. The defining features are that people can consume public goods without having to pay for them and that more than one person can consume the good at the same time.

Externalities occur where there are significant social costs or benefits from production or consumption that are not reflected in market prices. For example, air pollution may generate a negative externality, and education may generate a positive externality (less crime, etc.). Governments often tax and otherwise restrict the sale of goods that have negative externalities and subsidize or otherwise promote the purchase of goods that have positive externalities in an effort to correct the price distortions caused by these externalities.

Elementary demand-and-supply theory predicts equilibrium but not the speed of adjustment for changes of equilibrium due to a shift in demand or supply. In many areas, some form of price stickiness is postulated to account for quantities, rather than prices, adjusting in the short run to changes on the demand side or the supply side. This includes standard analysis of the business cycle in macroeconomics. Analysis often revolves around causes of such price stickiness and their implications for reaching a hypothesized long-run equilibrium. Examples of such price stickiness in particular markets include wage rates in labour markets and posted prices in markets deviating from perfect competition.

Some specialized fields of economics deal in market failure more than others. The economics of the public sector is one example. Much environmental economics concerns externalities or "public bads".

Policy options include regulations that reflect cost-benefit analysis or market solutions that change incentives, such as emission fees or redefinition of property rights.

Welfare

Welfare economics uses microeconomics techniques to evaluate well-being from allocation of productive factors as to desirability and economic efficiency within an economy, often relative to competitive general equilibrium. It analyzes social welfare, however measured, in terms of economic activities of the individuals that compose the theoretical society considered. Accordingly, individuals, with associated economic activities, are the basic units for aggregating to social welfare, whether of a group, a community, or a society, and there is no "social welfare" apart from the "welfare" associated with its individual units.

Macroeconomics

Macroeconomics examines the economy as a whole to explain broad aggregates and their interactions "top down", that is, using a simplified form of general-equilibrium theory. Such aggregates include national income and output, the unemployment rate, and price inflation and subaggregates like total consumption and investment spending and their components. It also studies effects of monetary policy and fiscal policy.

Since at least the 1960s, macroeconomics has been characterized by further integration as to micro-based modelling of sectors, including rationality of players, efficient use of market information, and imperfect competition. This has addressed a long-standing concern about inconsistent developments of the same subject.

Macroeconomic analysis also considers factors affecting the long-term level and growth of national income. Such factors include capital accumulation, technological change and labour force growth.

Growth

Growth economics studies factors that explain economic growth – the increase in output per capita of a country over a long period of time. The same factors are used to explain differences in the level of output per capita between countries, in particular why some countries grow faster than others, and whether countries converge at the same rates of growth.

Much-studied factors include the rate of investment, population growth, and technological change. These are represented in theoretical and empirical forms (as in the neoclassical and endogenous growth models) and in growth accounting.

Business cycle

The economics of a depression were the spur for the creation of "macroeconomics" as a separate discipline. During the Great Depression of the 1930s, John Maynard Keynes authored a book entitled The General Theory of Employment, Interest and Money outlining the key theories of Keynesian economics. Keynes contended that aggregate demand for goods might be insufficient during economic downturns, leading to unnecessarily high unemployment and losses of potential output.

He therefore advocated active policy responses by the public sector, including monetary policy actions by the central bank and fiscal policy actions by the government to stabilize output over the business cycle. Thus, a central conclusion of Keynesian economics is that, in some situations, no strong automatic mechanism moves output and employment towards full employment levels. John Hicks' IS/LM model has been the most influential interpretation of The General Theory.

Over the years, understanding of the business cycle has branched into various research programmes, mostly related to or distinct from Keynesianism. The neoclassical synthesis refers to the reconciliation of Keynesian economics with neoclassical economics, stating that Keynesianism is correct in the short run but qualified by neoclassical-like considerations in the intermediate and long run.

New classical macroeconomics, as distinct from the Keynesian view of the business cycle, posits market clearing with imperfect information. It includes Friedman's permanent income hypothesis on consumption and "rational expectations" theory, led by Robert Lucas, and real business cycle theory.

In contrast, the new Keynesian approach retains the rational expectations assumption but assumes a variety of market failures. In particular, New Keynesians assume prices and wages are "sticky", which means they do not adjust instantaneously to changes in economic conditions.

Thus, the new classicals assume that prices and wages adjust automatically to attain full employment, whereas the new Keynesians see full employment as being automatically achieved only in the long run, and hence government and central-bank policies are needed because the "long run" may be very long.

Unemployment

The amount of unemployment in an economy is measured by the unemployment rate, the percentage of the labour force that is without a job. The labour force includes only people who are employed or actively looking for jobs. People who are retired, pursuing education, or discouraged from seeking work by a lack of job prospects are excluded from the labour force. Unemployment can be generally broken down into several types that are related to different causes.

In classical models, unemployment occurs when wages are too high for employers to be willing to hire more workers. Consistent with classical unemployment, frictional unemployment occurs when appropriate job vacancies exist for a worker, but the length of time needed to search for and find the job leads to a period of unemployment.

Structural unemployment covers a variety of possible causes of unemployment, including a mismatch between workers' skills and the skills required for open jobs. Large amounts of structural unemployment can occur when an economy is transitioning industries and workers find their previous set of skills is no longer in demand. Structural unemployment is similar to frictional unemployment since both reflect the problem of matching workers with job vacancies, but structural unemployment covers the time needed to acquire new skills, not just the short-term search process.

While some types of unemployment may occur regardless of the condition of the economy, cyclical unemployment occurs when growth stagnates. Okun's law represents the empirical relationship between unemployment and economic growth. The original version of Okun's law states that a 3% increase in output would lead to a 1% decrease in unemployment.
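The two quantitative ideas in this section can be written out as a short sketch. The head counts are invented, the labour force is taken to be the employed plus those actively seeking work, and the Okun relation uses only the rule of thumb quoted above (a 3% rise in output for a 1-point fall in unemployment); it is an empirical regularity, not an exact law, and the function names are assumptions of this example.

```python
def unemployment_rate(employed, unemployed):
    """Unemployed people as a percentage of the labour force.

    People outside the labour force (retired, in education, discouraged)
    are counted in neither argument.
    """
    labour_force = employed + unemployed
    if labour_force <= 0:
        raise ValueError("the labour force must be positive")
    return 100 * unemployed / labour_force


def okun_unemployment_change(output_change_pct, ratio=3.0):
    """Change in the unemployment rate, in percentage points, implied by
    the rule of thumb: `ratio` percent more output, one point less
    unemployment."""
    return -output_change_pct / ratio


print(unemployment_rate(employed=950, unemployed=50))  # 5.0
print(okun_unemployment_change(3.0))                   # -1.0
print(okun_unemployment_change(-6.0))                  # 2.0
```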

Inflation and monetary policy

Money is a means of final payment for goods in most price system economies, and is the unit of account in which prices are typically stated. Money has general acceptability, relative consistency in value, divisibility, durability, portability, elasticity in supply, and longevity with mass public confidence. It includes currency held by the nonbank public and checkable deposits. It has been described as a social convention, like language, useful to one largely because it is useful to others. In the words of Francis Amasa Walker, a well-known 19th-century economist, "Money is what money does" ("Money is that money does" in the original).

As a medium of exchange, money facilitates trade. It is essentially a measure of value and more importantly, a store of value being a basis for credit creation. Its economic function can be contrasted with barter (non-monetary exchange). Given a diverse array of produced goods and specialized producers, barter may entail a hard-to-locate double coincidence of wants as to what is exchanged, say apples and a book. Money can reduce the transaction cost of exchange because of its ready acceptability. Then it is less costly for the seller to accept money in exchange, rather than what the buyer produces.

At the level of an economy, theory and evidence are consistent with a positive relationship running from the total money supply to the nominal value of total output and to the general price level. For this reason, management of the money supply is a key aspect of monetary policy.

Fiscal policy

Governments implement fiscal policy to influence macroeconomic conditions by adjusting spending and taxation policies to alter aggregate demand. When aggregate demand falls below the potential output of the economy, there is an output gap where some productive capacity is left unemployed. Governments increase spending and cut taxes to boost aggregate demand. Resources that have been idled can be used by the government.

For example, unemployed home builders can be hired to expand highways. Tax cuts allow consumers to increase their spending, which boosts aggregate demand. Both tax cuts and spending have multiplier effects where the initial increase in demand from the policy percolates through the economy and generates additional economic activity.
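The way an initial increase in demand percolates through the economy can be sketched with a textbook-style spending chain. The marginal propensity to consume (`mpc`), the round-by-round structure, and the closed form 1 / (1 - mpc) are assumptions of this simple illustration rather than something stated in the text; actual multipliers depend on taxes, imports, and monetary conditions.

```python
def total_spending(initial, mpc, rounds):
    """Sum the spending generated over a number of re-spending rounds.

    initial: first-round increase in demand (e.g. new government spending).
    mpc: assumed share of each extra unit of income that is spent again.
    """
    if not 0 <= mpc < 1:
        raise ValueError("mpc must lie in [0, 1)")
    total, injection = 0.0, float(initial)
    for _ in range(rounds):
        total += injection
        injection *= mpc  # part of each round's income is spent in the next
    return total


def simple_multiplier(mpc):
    """Limit of the chain above per unit of initial spending."""
    if not 0 <= mpc < 1:
        raise ValueError("mpc must lie in [0, 1)")
    return 1 / (1 - mpc)


print(total_spending(100, 0.5, rounds=3))  # 175.0 (100 + 50 + 25)
print(simple_multiplier(0.5))              # 2.0
```

As the number of rounds grows, `total_spending(initial, mpc, rounds)` approaches `initial * simple_multiplier(mpc)`.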

The effects of fiscal policy can be limited by crowding out. When there is no output gap, the economy is producing at full capacity and there are no excess productive resources. If the government increases spending in this situation, the government uses resources that otherwise would have been used by the private sector, so there is no increase in overall output. Some economists think that crowding out is always an issue while others do not think it is a major issue when output is depressed.

Sceptics of fiscal policy also make the argument of Ricardian equivalence. They argue that an increase in debt will have to be paid for with future tax increases, which will cause people to reduce their consumption and save money to pay for the future tax increase. Under Ricardian equivalence, any boost in demand from tax cuts will be offset by the increased saving intended to pay for future higher taxes.

Public economics

Public economics is the field of economics that deals with economic activities of a public sector, usually government. The subject addresses such matters as tax incidence (who really pays a particular tax), cost-benefit analysis of government programmes, effects on economic efficiency and income distribution of different kinds of spending and taxes, and fiscal politics. The latter, an aspect of public choice theory, models public-sector behaviour analogously to microeconomics, involving interactions of self-interested voters, politicians, and bureaucrats.

Much of economics is positive, seeking to describe and predict economic phenomena. Normative economics seeks to identify what economies ought to be like.

Welfare economics is a normative branch of economics that uses microeconomic techniques to simultaneously determine the allocative efficiency within an economy and the income distribution associated with it. It attempts to measure social welfare by examining the economic activities of the individuals that comprise society.

International economics

International trade studies determinants of goods-and-services flows across international boundaries. It also concerns the size and distribution of gains from trade. Policy applications include estimating the effects of changing tariff rates and trade quotas. International finance is a macroeconomic field which examines the flow of capital across international borders, and the effects of these movements on exchange rates. Increased trade in goods, services and capital between countries is a major effect of contemporary globalization.

Labor economics

Labor economics seeks to understand the functioning and dynamics of the markets for wage labor. Labor markets function through the interaction of workers and employers. Labor economics looks at the suppliers of labor services (workers) and the demanders of labor services (employers), and attempts to understand the resulting pattern of wages, employment, and income. In economics, labor is a measure of the work done by human beings. It is conventionally contrasted with such other factors of production as land and capital. There are theories which have developed a concept called human capital (referring to the skills that workers possess, not necessarily their actual work), although there are also counterposing macro-economic system theories that hold human capital to be a contradiction in terms.

Development economics

Development economics examines economic aspects of the economic development process in relatively low-income countries focusing on structural change, poverty, and economic growth. Approaches in development economics frequently incorporate social and political factors.

Agreements

According to various random and anonymous surveys of members of the American Economic Association, economists agree on the following propositions, by percentage:

- A ceiling on rents reduces the quantity and quality of housing available. (93% agree)

- Tariffs and import quotas usually reduce general economic welfare. (93% agree)

- Flexible and floating exchange rates offer an effective international monetary arrangement. (90% agree)

- Fiscal policy (e.g., tax cut and/or government expenditure increase) has a significant stimulative impact on a less than fully employed economy. (90% agree)

- The United States should not restrict employers from outsourcing work to foreign countries. (90% agree)

- Economic growth in developed countries like the United States leads to greater levels of well-being. (88% agree)

- The United States should eliminate agricultural subsidies. (85% agree)

- An appropriately designed fiscal policy can increase the long-run rate of capital formation. (85% agree)

- Local and state governments should eliminate subsidies to professional sports franchises. (85% agree)

- If the federal budget is to be balanced, it should be done over the business cycle rather than yearly. (85% agree)

- The gap between Social Security funds and expenditures will become unsustainably large within the next fifty years if current policies remain unchanged. (85% agree)

- Cash payments increase the welfare of recipients to a greater degree than do transfers-in-kind of equal cash value. (84% agree)

- A large federal budget deficit has an adverse effect on the economy. (83% agree)

- The redistribution of income in the United States is a legitimate role for the government. (83% agree)

- Inflation is caused primarily by too much growth in the money supply. (83% agree)

- The United States should not ban genetically modified crops. (82% agree)

- A minimum wage increases unemployment among young and unskilled workers. (79% agree)

- The government should restructure the welfare system along the lines of a "negative income tax". (79% agree)

- Effluent taxes and marketable pollution permits represent a better approach to pollution control than imposition of pollution ceilings. (78% agree)

- Government subsidies on ethanol in the United States should be reduced or eliminated. (78% agree)

Criticisms

General criticisms

"The dismal science" is a derogatory alternative name for economics devised by the Victorian historian Thomas Carlyle in the 19th century. It is often stated that Carlyle gave economics the nickname "the dismal science" as a response to the late 18th century writings of The Reverend Thomas Robert Malthus, who grimly predicted that starvation would result, as projected population growth exceeded the rate of increase in the food supply. However, the actual phrase was coined by Carlyle in the context of a debate with John Stuart Mill on slavery, in which Carlyle argued for slavery, while Mill opposed it.

In The Wealth of Nations, Adam Smith addressed many issues that remain the subject of debate and dispute. Smith repeatedly attacked groups of politically aligned individuals who attempt to use their collective influence to manipulate a government into doing their bidding. In Smith's day, these groups were referred to as factions, but they are now more commonly called special interests, a term which can comprise international bankers, corporate conglomerations, outright oligopolies, monopolies, trade unions and other groups.

Economics per se, as a social science, is independent of the political acts of any government or other decision-making organization. However, many policymakers and other individuals in high-ranking positions who can influence other people's lives are known for arbitrarily using a plethora of economic concepts and rhetoric as vehicles to legitimize agendas and value systems, and they do not limit their remarks to matters relevant to their responsibilities. The close relation of economic theory and practice with politics is a focus of contention that may shade or distort the most unpretentious original tenets of economics, and economics is often confused with specific social agendas and value systems.

Nevertheless, economics legitimately has a role in informing government policy. It is, indeed, in some ways an outgrowth of the older field of political economy. Some academic economic journals have increased their efforts to gauge the consensus of economists regarding certain policy issues in hopes of effecting a more informed political environment. Professional economists often express low approval of many public policies. Policy issues featured in one survey of American Economic Association economists include trade restrictions, social insurance for those put out of work by international competition, genetically modified foods, curbside recycling, health insurance (several questions), medical malpractice, barriers to entering the medical profession, organ donations, unhealthy foods, mortgage deductions, taxing internet sales, Wal-Mart, casinos, ethanol subsidies, and inflation targeting.

Issues such as central bank independence, central bank policies, the rhetoric in central bank governors' discourse, and the premises of the state's macroeconomic policies (monetary and fiscal policy) are a focus of contention and criticism.

Deirdre McCloskey has argued that many empirical economic studies are poorly reported, and she and Stephen Ziliak argue that although her critique has been well received, practice has not improved. This latter contention is controversial.

Criticisms of assumptions

Economics has historically been subject to criticism that it relies on unrealistic, unverifiable, or highly simplified assumptions, in some cases because these assumptions simplify the proofs of desired conclusions. Examples of such assumptions include perfect information, profit maximization, and rational choice, which are axioms of neoclassical economics. Such criticisms often conflate neoclassical economics with all of contemporary economics. The field of information economics includes both mathematical-economic research and behavioural economics, akin to studies in behavioural psychology, and confounding factors to the neoclassical assumptions are the subject of substantial study in many areas of economics.

Prominent historical mainstream economists such as Keynes and Joskow observed that much of the economics of their time was conceptual rather than quantitative, and difficult to model and formalize quantitatively. In a discussion on oligopoly research, Paul Joskow pointed out in 1975 that in practice, serious students of actual economies tended to use "informal models" based upon qualitative factors specific to particular industries. Joskow had a strong feeling that the important work in oligopoly was done through informal observations while formal models were "trotted out ex post". He argued that formal models were largely not important in the empirical work, either, and that the fundamental factor behind the theory of the firm, behaviour, was neglected. Woodford noted in 2009 that this was no longer the case, and that modelling had improved significantly in both theoretical rigour and empiricism, with a strong focus on testable quantitative work.

In the 1990s, feminist critiques of neoclassical economic models gained prominence, leading to the formation of feminist economics. Feminist economists call attention to the social construction of economics and claim to highlight the ways in which its models and methods reflect masculine preferences. Primary criticisms focus on alleged failures to account for: the selfish nature of actors (homo economicus); exogenous tastes; the impossibility of utility comparisons; the exclusion of unpaid work; and the exclusion of class and gender considerations.

Related subjects

Economics is one social science among several and has fields bordering on other areas, including economic geography, economic history, public choice, energy economics, cultural economics, family economics and institutional economics.

Law and economics, or economic analysis of law, is an approach to legal theory that applies methods of economics to law. It includes the use of economic concepts to explain the effects of legal rules, to assess which legal rules are economically efficient, and to predict what the legal rules will be. A seminal article by Ronald Coase published in 1961 suggested that well-defined property rights could overcome the problems of externalities.

Political economy is the interdisciplinary study that combines economics, law, and political science in explaining how political institutions, the political environment, and the economic system (capitalist, socialist, mixed) influence each other. It studies questions such as how monopoly, rent-seeking behaviour, and externalities should impact government policy. Historians have employed political economy to explore the ways in which persons and groups with common economic interests have used politics in the past to effect changes beneficial to their interests.

Energy economics is a broad scientific subject area which includes topics related to energy supply and energy demand. Georgescu-Roegen reintroduced the concept of entropy from thermodynamics in relation to economics and energy, as distinguished from what he viewed as the mechanistic foundation of neoclassical economics drawn from Newtonian physics. His work contributed significantly to thermoeconomics and to ecological economics. He also did foundational work which later developed into evolutionary economics.

The sociological subfield of economic sociology arose, primarily through the work of Émile Durkheim, Max Weber and Georg Simmel, as an approach to analysing the effects of economic phenomena in relation to the overarching social paradigm (i.e. modernity). Classic works include Max Weber's The Protestant Ethic and the Spirit of Capitalism (1905) and Georg Simmel's The Philosophy of Money (1900). More recently, the works of Mark Granovetter, Peter Hedstrom and Richard Swedberg have been influential in this field.

Profession

The professionalization of economics, reflected in the growth of graduate programmes on the subject, has been described as "the main change in economics since around 1900". Most major universities and many colleges have a major, school, or department in which academic degrees are awarded in the subject, whether in the liberal arts, business, or for professional study. See Bachelor of Economics and Master of Economics.

In the private sector, professional economists are employed as consultants and in industry, including banking and finance. Economists also work for various government departments and agencies, for example, the national treasury, central bank or National Bureau of Statistics.

There are dozens of prizes awarded to economists each year for outstanding intellectual contributions to the field, the most prominent of which is the Nobel Memorial Prize in Economic Sciences, though it is not a Nobel Prize.

Contemporary economics uses mathematics. Economists draw on the tools of calculus, linear algebra, statistics, game theory, and computer science. Professional economists are expected to be familiar with these tools, while a minority specialize in econometrics and mathematical methods.

See also

- Business ethics

- Critical juncture theory

- Economics terminology that differs from common usage

- Economic ideology

- Economic policy

- Economic union

- Free trade

- Happiness economics

- Humanistic economics

- List of multilateral free trade agreements

- List of economics films

- List of economics awards

- List of free trade agreements

- Socioeconomics

- Gross National Happiness

- Liquidationism (economics)