In mathematics, a group is a set and an operation that combines any two elements of the set to produce a third element of the set, in such a way that the operation is associative, an identity element exists and every element has an inverse. These three axioms hold for number systems and many other mathematical structures. For example, the integers together with the addition operation form a group. The concept of a group and the axioms that define it were elaborated for handling, in a unified way, essential structural properties of very different mathematical entities such as numbers, geometric shapes and polynomial roots. Because the concept of groups is ubiquitous in numerous areas both within and outside mathematics, some authors consider it as a central organizing principle of contemporary mathematics.

In geometry groups arise naturally in the study of symmetries and geometric transformations: The symmetries of an object form a group, called the symmetry group of the object, and the transformations of a given type form a general group. Lie groups appear in symmetry groups in geometry, and also in the Standard Model of particle physics. The Poincaré group is a Lie group consisting of the symmetries of spacetime in special relativity. Point groups describe symmetry in molecular chemistry.

The concept of a group arose in the study of polynomial equations, starting with Évariste Galois in the 1830s, who introduced the term group (French: groupe) for the symmetry group of the roots of an equation, now called a Galois group. After contributions from other fields such as number theory and geometry, the group notion was generalized and firmly established around 1870. Modern group theory—an active mathematical discipline—studies groups in their own right. To explore groups, mathematicians have devised various notions to break groups into smaller, better-understandable pieces, such as subgroups, quotient groups and simple groups. In addition to their abstract properties, group theorists also study the different ways in which a group can be expressed concretely, both from a point of view of representation theory (that is, through the representations of the group) and of computational group theory. A theory has been developed for finite groups, which culminated with the classification of finite simple groups, completed in 2004. Since the mid-1980s, geometric group theory, which studies finitely generated groups as geometric objects, has become an active area in group theory.

Definition and illustration

First example: the integers

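One of the more familiar groups is the set of integers $\mathbb{Z} = \{\ldots, -3, -2, -1, 0, 1, 2, 3, \ldots\}$ together with addition. The following properties of integer addition serve as a model for the group axioms in the definition below.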

- For all integers $a$, $b$ and $c$, one has $(a + b) + c = a + (b + c)$. Expressed in words, adding $a$ to $b$ first, and then adding the result to $c$ gives the same final result as adding $a$ to the sum of $b$ and $c$. This property is known as associativity.

- If $a$ is any integer, then $0 + a = a$ and $a + 0 = a$. Zero is called the identity element of addition because adding it to any integer returns the same integer.

- For every integer $a$, there is an integer $b$ such that $a + b = 0$ and $b + a = 0$. The integer $b$ is called the inverse element of the integer $a$ and is denoted $-a$.

The integers, together with the operation $+$, form a mathematical object belonging to a broad class sharing similar structural aspects. To appropriately understand these structures as a collective, the following definition is developed.

Definition

The axioms for a group are short and natural... Yet somehow hidden behind these axioms is the monster simple group, a huge and extraordinary mathematical object, which appears to rely on numerous bizarre coincidences to exist. The axioms for groups give no obvious hint that anything like this exists.

Richard Borcherds in Mathematicians: An Outer View of the Inner World

A group is a set $G$ together with a binary operation on $G$, here denoted "$\cdot$", that combines any two elements $a$ and $b$ of $G$ to form an element of $G$, denoted $a \cdot b$, such that the following three requirements, known as group axioms, are satisfied:

- Associativity

  - For all $a$, $b$, $c$ in $G$, one has $(a \cdot b) \cdot c = a \cdot (b \cdot c)$.

- Identity element

  - There exists an element $e$ in $G$ such that, for every $a$ in $G$, one has $e \cdot a = a$ and $a \cdot e = a$.

  - Such an element is unique (see below). It is called the identity element of the group.

- Inverse element

  - For each $a$ in $G$, there exists an element $b$ in $G$ such that $a \cdot b = e$ and $b \cdot a = e$, where $e$ is the identity element.

  - For each $a$, the element $b$ is unique (see below); it is called the inverse of $a$ and is commonly denoted $a^{-1}$.
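For a finite set, the axioms above can be checked by brute force. The following is a minimal sketch, not a standard library routine: the function name `is_group` is made up for illustration, and the test cases use arithmetic modulo 5 (treated later in this article) as an assumed example.

```python
from itertools import product

def is_group(elements, op):
    """Check the group axioms by brute force on a finite set (illustrative helper)."""
    elements = list(elements)
    # Closure: op must be a binary operation on the set.
    if any(op(a, b) not in elements for a, b in product(elements, repeat=2)):
        return False
    # Associativity: (a.b).c == a.(b.c) for all triples.
    if any(op(op(a, b), c) != op(a, op(b, c))
           for a, b, c in product(elements, repeat=3)):
        return False
    # Identity: some e with e.a == a == a.e for every a.
    identities = [e for e in elements
                  if all(op(e, a) == a == op(a, e) for a in elements)]
    if not identities:
        return False
    e = identities[0]
    # Inverses: every a has some b with a.b == e == b.a.
    return all(any(op(a, b) == e == op(b, a) for b in elements)
               for a in elements)

print(is_group(range(5), lambda a, b: (a + b) % 5))     # True
print(is_group(range(5), lambda a, b: (a * b) % 5))     # False: 0 has no inverse
print(is_group(range(1, 5), lambda a, b: (a * b) % 5))  # True
```

The check runs in time cubic in the number of elements, so it is only practical for small examples.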

Notation and terminology

Formally, the group is the ordered pair of a set and a binary operation on this set that satisfies the group axioms. The set is called the underlying set of the group, and the operation is called the group operation or the group law.

A group and its underlying set are thus two different mathematical objects. To avoid cumbersome notation, it is common to abuse notation by using the same symbol to denote both. This reflects also an informal way of thinking: that the group is the same as the set except that it has been enriched by additional structure provided by the operation.

For example, consider the set of real numbers $\mathbb{R}$, which has the operations of addition $a + b$ and multiplication $ab$. Formally, $\mathbb{R}$ is a set, $(\mathbb{R}, +)$ is a group, and $(\mathbb{R}, +, \cdot)$ is a field. But it is common to write $\mathbb{R}$ to denote any of these three objects.

The additive group of the field $\mathbb{R}$ is the group whose underlying set is $\mathbb{R}$ and whose operation is addition. The multiplicative group of the field $\mathbb{R}$ is the group $\mathbb{R}^{\times}$ whose underlying set is the set of nonzero real numbers $\mathbb{R} \smallsetminus \{0\}$ and whose operation is multiplication.

More generally, one speaks of an additive group whenever the group operation is notated as addition; in this case, the identity is typically denoted $0$, and the inverse of an element $x$ is denoted $-x$. Similarly, one speaks of a multiplicative group whenever the group operation is notated as multiplication; in this case, the identity is typically denoted $1$, and the inverse of an element $x$ is denoted $x^{-1}$. In a multiplicative group, the operation symbol is usually omitted entirely, so that the operation is denoted by juxtaposition, $ab$ instead of $a \cdot b$.

The definition of a group does not require that $a \cdot b = b \cdot a$ for all elements $a$ and $b$ in $G$. If this additional condition holds, then the operation is said to be commutative, and the group is called an abelian group. It is a common convention that for an abelian group either additive or multiplicative notation may be used, but for a nonabelian group only multiplicative notation is used.

Several other notations are commonly used for groups whose elements are not numbers. For a group whose elements are functions, the operation is often function composition $f \circ g$; then the identity may be denoted id. In the more specific cases of geometric transformation groups, symmetry groups, permutation groups, and automorphism groups, the symbol $\circ$ is often omitted, as for multiplicative groups. Many other variants of notation may be encountered.

Second example: a symmetry group

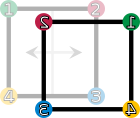

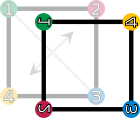

Two figures in the plane are congruent if one can be changed into the other using a combination of rotations, reflections, and translations. Any figure is congruent to itself. However, some figures are congruent to themselves in more than one way, and these extra congruences are called symmetries. A square has eight symmetries. These are:

- the identity operation leaving everything unchanged, denoted id;

- rotations of the square around its center by 90°, 180°, and 270° clockwise, denoted by $r_1$, $r_2$ and $r_3$, respectively;

- reflections about the horizontal and vertical middle line ($f_{\mathrm{v}}$ and $f_{\mathrm{h}}$), or through the two diagonals ($f_{\mathrm{d}}$ and $f_{\mathrm{c}}$).

These symmetries are functions. Each sends a point in the square to the corresponding point under the symmetry. For example, $r_1$ sends a point to its rotation 90° clockwise around the square's center, and $f_{\mathrm{h}}$ sends a point to its reflection across the square's vertical middle line. Composing two of these symmetries gives another symmetry. These symmetries determine a group called the dihedral group of degree four, denoted $\mathrm{D}_4$. The underlying set of the group is the above set of symmetries, and the group operation is function composition. Two symmetries are combined by composing them as functions, that is, applying the first one to the square, and the second one to the result of the first application. The result of performing first $a$ and then $b$ is written symbolically from right to left as $b \circ a$ ("apply the symmetry $b$ after performing the symmetry $a$"). This is the usual notation for composition of functions.

The group table lists the results of all such compositions possible. For example, rotating by 270° clockwise ($r_3$) and then reflecting horizontally ($f_{\mathrm{h}}$) is the same as performing a reflection along the diagonal ($f_{\mathrm{d}}$). Using the above symbols, highlighted in blue in the group table:

$$f_{\mathrm{h}} \circ r_3 = f_{\mathrm{d}}.$$

Group table of $\mathrm{D}_4$: The elements $\mathrm{id}$, $r_1$, $r_2$, and $r_3$ form a subgroup whose group table is highlighted in red (upper left region). A left and right coset of this subgroup are highlighted in green (in the last row) and yellow (last column), respectively. The result of the composition $f_{\mathrm{h}} \circ r_3$, the symmetry $f_{\mathrm{d}}$, is highlighted in blue (below table center).

Given this set of symmetries and the described operation, the group axioms can be understood as follows.

Binary operation: Composition is a binary operation. That is, $a \circ b$ is a symmetry for any two symmetries $a$ and $b$. For example, $r_3 \circ f_{\mathrm{h}} = f_{\mathrm{c}}$: rotating 270° clockwise after reflecting horizontally equals reflecting along the other diagonal.

Associativity: The associativity axiom deals with composing more than two symmetries: Starting with three elements $a$, $b$ and $c$ of $\mathrm{D}_4$, there are two possible ways of using these three symmetries in this order to determine a symmetry of the square. One of these ways is to first compose $a$ and $b$ into a single symmetry, then to compose that symmetry with $c$. The other way is to first compose $b$ and $c$, then to compose the resulting symmetry with $a$. These two ways must always give the same result, that is, $(a \circ b) \circ c = a \circ (b \circ c)$.

Identity element: The identity element is $\mathrm{id}$, as it does not change any symmetry when composed with it either on the left or on the right.

Inverse element: Each symmetry has an inverse: $\mathrm{id}$, the reflections $f_{\mathrm{h}}$, $f_{\mathrm{v}}$, $f_{\mathrm{d}}$, $f_{\mathrm{c}}$ and the 180° rotation $r_2$ are their own inverse, because performing them twice brings the square back to its original orientation. The rotations $r_3$ and $r_1$ are each other's inverses, because rotating 90° and then rotating 270° (or vice versa) yields a rotation over 360° which leaves the square unchanged. This is easily verified on the table.

In contrast to the group of integers above, where the order of the operation is immaterial, it does matter in $\mathrm{D}_4$, as, for example, $f_{\mathrm{h}} \circ r_1 = f_{\mathrm{c}}$ but $r_1 \circ f_{\mathrm{h}} = f_{\mathrm{d}}$. In other words, $\mathrm{D}_4$ is not abelian.
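The compositions discussed in this example can be reproduced with a short sketch that models each symmetry as a map on points of the plane. The coordinate conventions are assumptions made for illustration: the square is centred at the origin, and the names `fd` and `fc` are assigned to the two diagonals so that the composition of a 270° clockwise rotation followed by the horizontal reflection gives `fd`.

```python
# Corners of a square centred at the origin.
CORNERS = [(1, 1), (1, -1), (-1, -1), (-1, 1)]

# Each symmetry as a map on points (x, y).
MAPS = {
    "id": lambda x, y: (x, y),
    "r1": lambda x, y: (y, -x),    # rotation by 90 degrees clockwise
    "r2": lambda x, y: (-x, -y),   # rotation by 180 degrees
    "r3": lambda x, y: (-y, x),    # rotation by 270 degrees clockwise
    "fv": lambda x, y: (x, -y),    # reflection about the horizontal middle line
    "fh": lambda x, y: (-x, y),    # reflection about the vertical middle line
    "fd": lambda x, y: (y, x),     # reflection through one diagonal (assumed labelling)
    "fc": lambda x, y: (-y, -x),   # reflection through the other diagonal
}

def signature(f):
    """Images of the four corners; this identifies a symmetry uniquely."""
    return tuple(f(x, y) for x, y in CORNERS)

NAME_OF = {signature(f): name for name, f in MAPS.items()}

def compose(b, a):
    """Name of the composition 'b after a': apply a first, then b."""
    fa, fb = MAPS[a], MAPS[b]
    return NAME_OF[signature(lambda x, y: fb(*fa(x, y)))]

print(compose("fh", "r3"))                       # fd
print(compose("fh", "r1"), compose("r1", "fh"))  # fc fd
```

The lookup in `NAME_OF` succeeding for every pair is exactly the closure property; the second printed line shows that the order of composition matters.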

History

The modern concept of an abstract group developed out of several fields of mathematics. The original motivation for group theory was the quest for solutions of polynomial equations of degree higher than 4. The 19th-century French mathematician Évariste Galois, extending prior work of Paolo Ruffini and Joseph-Louis Lagrange, gave a criterion for the solvability of a particular polynomial equation in terms of the symmetry group of its roots (solutions). The elements of such a Galois group correspond to certain permutations of the roots. At first, Galois's ideas were rejected by his contemporaries, and published only posthumously. More general permutation groups were investigated in particular by Augustin Louis Cauchy. Arthur Cayley's On the theory of groups, as depending on the symbolic equation $\theta^n = 1$ (1854) gives the first abstract definition of a finite group.

Geometry was a second field in which groups were used systematically, especially symmetry groups as part of Felix Klein's 1872 Erlangen program. After novel geometries such as hyperbolic and projective geometry had emerged, Klein used group theory to organize them in a more coherent way. Further advancing these ideas, Sophus Lie founded the study of Lie groups in 1884.

The third field contributing to group theory was number theory. Certain abelian group structures had been used implicitly in Carl Friedrich Gauss's number-theoretical work Disquisitiones Arithmeticae (1798), and more explicitly by Leopold Kronecker. In 1847, Ernst Kummer made early attempts to prove Fermat's Last Theorem by developing groups describing factorization into prime numbers.

The convergence of these various sources into a uniform theory of groups started with Camille Jordan's Traité des substitutions et des équations algébriques (1870). Walther von Dyck (1882) introduced the idea of specifying a group by means of generators and relations, and was also the first to give an axiomatic definition of an "abstract group", in the terminology of the time. As of the 20th century, groups gained wide recognition by the pioneering work of Ferdinand Georg Frobenius and William Burnside, who worked on representation theory of finite groups, Richard Brauer's modular representation theory and Issai Schur's papers. The theory of Lie groups, and more generally locally compact groups was studied by Hermann Weyl, Élie Cartan and many others. Its algebraic counterpart, the theory of algebraic groups, was first shaped by Claude Chevalley (from the late 1930s) and later by the work of Armand Borel and Jacques Tits.

The University of Chicago's 1960–61 Group Theory Year brought together group theorists such as Daniel Gorenstein, John G. Thompson and Walter Feit, laying the foundation of a collaboration that, with input from numerous other mathematicians, led to the classification of finite simple groups, with the final step taken by Aschbacher and Smith in 2004. This project exceeded previous mathematical endeavours by its sheer size, in both length of proof and number of researchers. Research concerning this classification proof is ongoing. Group theory remains a highly active mathematical branch, impacting many other fields, as the examples below illustrate.

Elementary consequences of the group axioms

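Basic facts about all groups that can be obtained directly from the group axioms are commonly subsumed under elementary group theory. For example, repeated applications of the associativity axiom show that the unambiguity of $a \cdot b \cdot c = (a \cdot b) \cdot c = a \cdot (b \cdot c)$ generalizes to more than three factors, so parentheses are usually omitted in such products.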

Individual axioms may be "weakened" to assert only the existence of a left identity and left inverses. From these one-sided axioms, one can prove that the left identity is also a right identity and a left inverse is also a right inverse for the same element. Since they define exactly the same structures as groups, collectively the axioms are no weaker.

Uniqueness of identity element

The group axioms imply that the identity element is unique: If $e$ and $f$ are identity elements of a group, then $e = e \cdot f = f$. Therefore, it is customary to speak of the identity.

Uniqueness of inverses

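The group axioms also imply that the inverse of each element is unique: If a group element $a$ has both $b$ and $c$ as inverses, then

$$
\begin{aligned}
b &= b \cdot e && \text{since } e \text{ is the identity element} \\
  &= b \cdot (a \cdot c) && \text{since } c \text{ is an inverse of } a \text{, so } e = a \cdot c \\
  &= (b \cdot a) \cdot c && \text{by associativity, which allows rearranging the parentheses} \\
  &= e \cdot c && \text{since } b \text{ is an inverse of } a \text{, so } b \cdot a = e \\
  &= c && \text{since } e \text{ is the identity element.}
\end{aligned}
$$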

Therefore, it is customary to speak of the inverse of an element.

Division

Given elements $a$ and $b$ of a group $G$, there is a unique solution $x$ in $G$ to the equation $a \cdot x = b$, namely $a^{-1} \cdot b$. (One usually avoids using fraction notation $\tfrac{b}{a}$ unless $G$ is abelian, because of the ambiguity of whether it means $a^{-1} \cdot b$ or $b \cdot a^{-1}$.) It follows that for each $a$ in $G$, the function $G \to G$ that maps each $x$ to $a \cdot x$ is a bijection; it is called left multiplication by $a$ or left translation by $a$.

Similarly, given $a$ and $b$, the unique solution to $x \cdot a = b$ is $b \cdot a^{-1}$. For each $a$, the function $G \to G$ that maps each $x$ to $x \cdot a$ is a bijection called right multiplication by $a$ or right translation by $a$.
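The difference between the two kinds of division shows up as soon as the group is not abelian. The sketch below is an illustration under stated assumptions: it uses permutations of three positions, written as tuples, and the helper names `compose` and `inverse` are chosen here rather than taken from any library.

```python
def compose(p, q):
    """Product p.q of permutations given as tuples: apply q first, then p."""
    return tuple(p[i] for i in q)

def inverse(p):
    """Inverse permutation: send each image back to its source."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

a = (1, 0, 2)   # swaps positions 0 and 1
b = (1, 2, 0)   # sends 0 -> 1, 1 -> 2, 2 -> 0

left = compose(inverse(a), b)    # the solution x of a.x = b
right = compose(b, inverse(a))   # the solution x of x.a = b

print(left, compose(a, left) == b)     # (0, 2, 1) True
print(right, compose(right, a) == b)   # (2, 1, 0) True
```

Since `left` and `right` differ, a fraction-style notation for "b divided by a" would be ambiguous in this group.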

Basic concepts

When studying sets, one uses concepts such as subset, function, and quotient by an equivalence relation. When studying groups, one uses instead subgroups, homomorphisms, and quotient groups. These are the analogues that take the group structure into account.

Group homomorphisms

Group homomorphisms are functions that respect group structure; they may be used to relate two groups. A homomorphism from a group $(G, \cdot)$ to a group $(H, *)$ is a function $\varphi : G \to H$ such that

$$\varphi(a \cdot b) = \varphi(a) * \varphi(b) \quad \text{for all elements } a \text{ and } b \text{ in } G.$$

It would be natural to require also that $\varphi$ respect identities, $\varphi(1_G) = 1_H$, and inverses, $\varphi(a^{-1}) = \varphi(a)^{-1}$ for all $a$ in $G$. However, these additional requirements need not be included in the definition of homomorphisms, because they are already implied by the requirement of respecting the group operation.

The identity homomorphism of a group $G$ is the homomorphism $\iota_G : G \to G$ that maps each element of $G$ to itself. An inverse homomorphism of a homomorphism $\varphi : G \to H$ is a homomorphism $\psi : H \to G$ such that $\psi \circ \varphi = \iota_G$ and $\varphi \circ \psi = \iota_H$, that is, such that $\psi(\varphi(g)) = g$ for all $g$ in $G$ and such that $\varphi(\psi(h)) = h$ for all $h$ in $H$. An isomorphism is a homomorphism that has an inverse homomorphism; equivalently, it is a bijective homomorphism. Groups $G$ and $H$ are called isomorphic if there exists an isomorphism $\varphi : G \to H$. In this case, $H$ can be obtained from $G$ simply by renaming its elements according to the function $\varphi$; then any statement true for $G$ is true for $H$, provided that any specific elements mentioned in the statement are also renamed.
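The defining condition of a homomorphism can be tested exhaustively between small finite groups. The following sketch assumes two example groups, addition modulo 6 and addition modulo 3, and a hypothetical helper `is_homomorphism`; it illustrates the definition and is not a general-purpose tool.

```python
from itertools import product

def is_homomorphism(phi, G, op_G, op_H):
    """Check phi(a . b) == phi(a) * phi(b) for all a, b in the finite group G."""
    return all(phi(op_G(a, b)) == op_H(phi(a), phi(b))
               for a, b in product(G, repeat=2))

Z6 = range(6)

def add6(a, b):
    return (a + b) % 6

def add3(a, b):
    return (a + b) % 3

def reduce_mod_3(a):
    return a % 3

def shift(a):
    return (a + 1) % 3

print(is_homomorphism(reduce_mod_3, Z6, add6, add3))  # True
print(is_homomorphism(shift, Z6, add6, add3))         # False

# Identities and inverses are respected automatically by a homomorphism.
print(reduce_mod_3(0) == 0)
print(all(reduce_mod_3((6 - a) % 6) == (3 - reduce_mod_3(a)) % 3 for a in Z6))
```

The map `shift` fails because it does not send the identity 0 to the identity 0, which any homomorphism must do.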

The collection of all groups, together with the homomorphisms between them, forms a category, the category of groups.

Subgroups

Informally, a subgroup is a group $H$ contained within a bigger one, $G$: it has a subset of the elements of $G$, with the same operation. Concretely, this means that the identity element of $G$ must be contained in $H$, and whenever $h_1$ and $h_2$ are both in $H$, then so are $h_1 \cdot h_2$ and $h_1^{-1}$, so the elements of $H$, equipped with the group operation on $G$ restricted to $H$, indeed form a group. In this case, the inclusion map $H \to G$ is a homomorphism.

In the example of symmetries of a square, the identity and the rotations constitute a subgroup $R = \{\mathrm{id}, r_1, r_2, r_3\}$, highlighted in red in the group table of the example: any two rotations composed are still a rotation, and a rotation can be undone by (i.e., is inverse to) the complementary rotations 270° for 90°, 180° for 180°, and 90° for 270°. The subgroup test provides a necessary and sufficient condition for a nonempty subset $H$ of a group $G$ to be a subgroup: it is sufficient to check that $g^{-1} \cdot h \in H$ for all elements $g$ and $h$ in $H$. Knowing a group's subgroups is important in understanding the group as a whole.

Given any subset $S$ of a group $G$, the subgroup generated by $S$ consists of all products of elements of $S$ and their inverses. It is the smallest subgroup of $G$ containing $S$. In the example of symmetries of a square, the subgroup generated by $r_2$ and $f_{\mathrm{v}}$ consists of these two elements, the identity element $\mathrm{id}$, and the element $f_{\mathrm{h}} = f_{\mathrm{v}} \cdot r_2$. Again, this is a subgroup, because combining any two of these four elements or their inverses (which are, in this particular case, these same elements) yields an element of this subgroup.
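The subgroup generated by a subset can be computed by repeatedly multiplying by the generators until nothing new appears. The sketch below assumes the symmetries of the square are encoded as permutations of its corners, labelled 0 to 3 in order around the square; the function name `generated_subgroup` and the particular encoding are illustrative choices.

```python
def compose(p, q):
    """Apply the permutation q first, then p."""
    return tuple(p[i] for i in q)

def generated_subgroup(generators):
    """Closure of a set of permutations under composition.

    In a finite group every inverse is a positive power of the element,
    so closing under products alone already yields the generated subgroup.
    """
    identity = tuple(range(len(generators[0])))
    elements = {identity}
    frontier = [identity]
    while frontier:
        g = frontier.pop()
        for s in generators:
            h = compose(s, g)
            if h not in elements:
                elements.add(h)
                frontier.append(h)
    return elements

r = (1, 2, 3, 0)    # quarter turn: corner i goes to corner i + 1
r2 = compose(r, r)  # half turn
f = (1, 0, 3, 2)    # a reflection swapping corners 0, 1 and corners 2, 3

print(len(generated_subgroup([r, f])))   # 8: all symmetries of the square
print(len(generated_subgroup([r])))      # 4: the rotations
print(sorted(generated_subgroup([r2, f])))
```

The last call returns four elements: the identity, the half turn, the reflection, and their product, matching the four-element subgroup described above.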

An injective homomorphism $\phi : G' \to G$ factors canonically as an isomorphism followed by an inclusion, $G' \xrightarrow{\;\sim\;} H \hookrightarrow G$, for some subgroup $H$ of $G$. Injective homomorphisms are the monomorphisms in the category of groups.

Cosets

In many situations it is desirable to consider two group elements the same if they differ by an element of a given subgroup. For example, in the symmetry group of a square, once any reflection is performed, rotations alone cannot return the square to its original position, so one can think of the reflected positions of the square as all being equivalent to each other, and as inequivalent to the unreflected positions; the rotation operations are irrelevant to the question whether a reflection has been performed. Cosets are used to formalize this insight: a subgroup $H$ determines left and right cosets, which can be thought of as translations of $H$ by an arbitrary group element $g$. In symbolic terms, the left and right cosets of $H$, containing an element $g$, are

$$gH = \{g \cdot h \mid h \in H\} \quad \text{and} \quad Hg = \{h \cdot g \mid h \in H\}, \text{ respectively.}$$

The left cosets of any subgroup $H$ form a partition of $G$; that is, the union of all left cosets is equal to $G$ and two left cosets are either equal or have an empty intersection. The first case $g_1 H = g_2 H$ happens precisely when $g_1^{-1} \cdot g_2 \in H$, i.e., when the two elements differ by an element of $H$. Similar considerations apply to the right cosets of $H$. The left cosets of $H$ may or may not be the same as its right cosets. If they are (that is, if all $g$ in $G$ satisfy $gH = Hg$), then $H$ is said to be a normal subgroup.

In $\mathrm{D}_4$, the group of symmetries of a square, with its subgroup $R$ of rotations, the left cosets $gR$ are either equal to $R$, if $g$ is an element of $R$ itself, or otherwise equal to $U = f_{\mathrm{c}} R = \{f_{\mathrm{c}}, f_{\mathrm{d}}, f_{\mathrm{v}}, f_{\mathrm{h}}\}$ (highlighted in green in the group table of $\mathrm{D}_4$). The subgroup $R$ is normal, because $f_{\mathrm{c}} R = U = R f_{\mathrm{c}}$ and similarly for the other elements of the group. (In fact, in the case of $\mathrm{D}_4$, the cosets generated by reflections are all equal: $f_{\mathrm{h}} R = f_{\mathrm{v}} R = f_{\mathrm{d}} R = f_{\mathrm{c}} R$.)
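Cosets and normality can be computed directly for the symmetries of the square. This is a sketch under assumptions: the symmetries are encoded as permutations of the corners 0 to 3, and the helper names `closure`, `left_cosets` and `is_normal` are invented for the example.

```python
def compose(p, q):
    """Apply the permutation q first, then p."""
    return tuple(p[i] for i in q)

def closure(generators):
    """Subgroup generated by the given permutations (finite case)."""
    identity = tuple(range(len(generators[0])))
    elements, frontier = {identity}, [identity]
    while frontier:
        g = frontier.pop()
        for s in generators:
            h = compose(s, g)
            if h not in elements:
                elements.add(h)
                frontier.append(h)
    return elements

def left_cosets(G, H):
    """The set of all left cosets gH, each stored as a frozenset."""
    return {frozenset(compose(g, h) for h in H) for g in G}

def is_normal(G, H):
    """H is normal in G when gH == Hg for every g in G."""
    return all(
        {compose(g, h) for h in H} == {compose(h, g) for h in H}
        for g in G
    )

r = (1, 2, 3, 0)   # quarter turn of the square's corners
f = (1, 0, 3, 2)   # a reflection

D4 = closure([r, f])
R = closure([r])   # the rotations
F = closure([f])   # the identity and one reflection

print(len(left_cosets(D4, R)), is_normal(D4, R))   # 2 True
print(len(left_cosets(D4, F)), is_normal(D4, F))   # 4 False
```

The rotation subgroup has two cosets and is normal, while the two-element subgroup generated by a single reflection is not normal, since its left and right cosets differ.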

Quotient groups

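Suppose that $N$ is a normal subgroup of a group $G$, and $G/N = \{gN \mid g \in G\}$ denotes its set of cosets. The product of two cosets $gN$ and $hN$ is defined as $(gh)N$; with this operation, $G/N$ is a group, called the quotient group, whose identity is the coset $eN = N$ and in which the inverse of $gN$ is $(g^{-1})N$.

The elements of the quotient group $\mathrm{D}_4 / R$ are $R$ and $U = f_{\mathrm{v}} R$. The group operation on the quotient is shown in the table. For example, $U \cdot U = f_{\mathrm{v}} R \cdot f_{\mathrm{v}} R = (f_{\mathrm{v}} \cdot f_{\mathrm{v}}) R = R$. Both the subgroup $R = \{\mathrm{id}, r_1, r_2, r_3\}$ and the quotient $\mathrm{D}_4 / R$ are abelian, but $\mathrm{D}_4$ is not. Sometimes a group can be reconstructed from a subgroup and quotient (plus some additional data), by the semidirect product construction; $\mathrm{D}_4$ is an example.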

The first isomorphism theorem implies that any surjective homomorphism $\phi : G \to H$ factors canonically as a quotient homomorphism followed by an isomorphism: $G \to G / \ker \phi \xrightarrow{\;\sim\;} H$. Surjective homomorphisms are the epimorphisms in the category of groups.

Presentations

Every group is isomorphic to a quotient of a free group, in many ways.

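For example, the dihedral group $\mathrm{D}_4$ is generated by the right rotation $r_1$ and the reflection $f_{\mathrm{v}}$ in a vertical line (every element of $\mathrm{D}_4$ is a finite product of copies of these and their inverses). Hence there is a surjective homomorphism $\phi$ from the free group $\langle r, f \rangle$ on two generators to $\mathrm{D}_4$ sending $r$ to $r_1$ and $f$ to $f_{\mathrm{v}}$. Elements in $\ker \phi$ are called relations; examples include $r^4$, $f^2$, $(r \cdot f)^2$. In fact, it turns out that $\ker \phi$ is the smallest normal subgroup of $\langle r, f \rangle$ containing these three elements; in other words, all relations are consequences of these three. The quotient of the free group by this normal subgroup is denoted $\langle r, f \mid r^4 = f^2 = (r \cdot f)^2 = 1 \rangle$. This is called a presentation of $\mathrm{D}_4$ by generators and relations, because the first isomorphism theorem for $\phi$ yields an isomorphism $\langle r, f \mid r^4 = f^2 = (r \cdot f)^2 = 1 \rangle \to \mathrm{D}_4$.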

A presentation of a group can be used to construct the Cayley graph, a graphical depiction of a discrete group.

Examples and applications

Examples and applications of groups abound. A starting point is the group of integers with addition as group operation, introduced above. If instead of addition multiplication is considered, one obtains multiplicative groups. These groups are predecessors of important constructions in abstract algebra.

Groups are also applied in many other mathematical areas. Mathematical objects are often examined by associating groups to them and studying the properties of the corresponding groups. For example, Henri Poincaré founded what is now called algebraic topology by introducing the fundamental group. By means of this connection, topological properties such as proximity and continuity translate into properties of groups. For example, elements of the fundamental group are represented by loops. The second image shows some loops in a plane minus a point. The blue loop is considered null-homotopic (and thus irrelevant), because it can be continuously shrunk to a point. The presence of the hole prevents the orange loop from being shrunk to a point. The fundamental group of the plane with a point deleted turns out to be infinite cyclic, generated by the orange loop (or any other loop winding once around the hole). This way, the fundamental group detects the hole.

In more recent applications, the influence has also been reversed to motivate geometric constructions by a group-theoretical background. In a similar vein, geometric group theory employs geometric concepts, for example in the study of hyperbolic groups. Further branches crucially applying groups include algebraic geometry and number theory.

In addition to the above theoretical applications, many practical applications of groups exist. Cryptography relies on the combination of the abstract group theory approach together with algorithmical knowledge obtained in computational group theory, in particular when implemented for finite groups. Applications of group theory are not restricted to mathematics; sciences such as physics, chemistry and computer science benefit from the concept.

Numbers

Many number systems, such as the integers and the rationals, enjoy a naturally given group structure. In some cases, such as with the rationals, both addition and multiplication operations give rise to group structures. Such number systems are predecessors to more general algebraic structures known as rings and fields. Further abstract algebraic concepts such as modules, vector spaces and algebras also form groups.

Integers

The group of integers $\mathbb{Z}$ under addition, denoted $(\mathbb{Z}, +)$, has been described above. The integers, with the operation of multiplication instead of addition, $(\mathbb{Z}, \cdot)$, do not form a group. The associativity and identity axioms are satisfied, but inverses do not exist: for example, $a = 2$ is an integer, but the only solution to the equation $a \cdot b = 1$ in this case is $b = \tfrac{1}{2}$, which is a rational number, but not an integer. Hence not every element of $\mathbb{Z}$ has a (multiplicative) inverse.

Rationals

The desire for the existence of multiplicative inverses suggests considering fractions $\frac{a}{b}$. Fractions of integers (with $b$ nonzero) are known as rational numbers, and the set of all of them is commonly denoted $\mathbb{Q}$. The rationals with multiplication, $(\mathbb{Q}, \cdot)$, still do not form a group: zero has no multiplicative inverse, since there is no $x$ such that $x \cdot 0 = 1$.

However, the set of all nonzero rational numbers $\mathbb{Q} \smallsetminus \{0\} = \{q \in \mathbb{Q} \mid q \neq 0\}$ does form an abelian group under multiplication, also denoted $\mathbb{Q}^{\times}$. Associativity and identity element axioms follow from the properties of integers. The closure requirement still holds true after removing zero, because the product of two nonzero rationals is never zero. Finally, the inverse of $a/b$ is $b/a$, therefore the axiom of the inverse element is satisfied.

The rational numbers (including zero) also form a group under addition. Intertwining addition and multiplication operations yields more complicated structures called rings and, if division by other than zero is possible, such as in $\mathbb{Q}$, fields, which occupy a central position in abstract algebra. Group theoretic arguments therefore underlie parts of the theory of those entities.

Modular arithmetic

Modular arithmetic for a modulus $n$ defines any two elements $a$ and $b$ that differ by a multiple of $n$ to be equivalent, denoted by $a \equiv b \pmod{n}$. Every integer is equivalent to one of the integers from $0$ to $n - 1$, and the operations of modular arithmetic modify normal arithmetic by replacing the result of any operation by its equivalent representative. Modular addition, defined in this way for the integers from $0$ to $n - 1$, forms a group, denoted as $\mathrm{Z}_n$ or $(\mathbb{Z}/n\mathbb{Z}, +)$, with $0$ as the identity element and $n - a$ as the inverse element of $a$.

A familiar example is addition of hours on the face of a clock, where 12 rather than 0 is chosen as the representative of the identity. If the hour hand is on $9$ and is advanced $4$ hours, it ends up on $1$, as shown in the illustration. This is expressed by saying that $9 + 4$ is congruent to $1$ "modulo $12$" or, in symbols, $9 + 4 \equiv 1 \pmod{12}$.

For any prime number $p$, there is also the multiplicative group of integers modulo $p$. Its elements can be represented by $1$ to $p - 1$. The group operation, multiplication modulo $p$, replaces the usual product by its representative, the remainder of division by $p$. For example, for $p = 5$, the four group elements can be represented by $1, 2, 3, 4$. In this group, $4 \cdot 4 \equiv 1 \pmod{5}$, because the usual product $16$ is equivalent to $1$: when divided by $5$ it yields a remainder of $1$. The primality of $p$ ensures that the usual product of two representatives is not divisible by $p$, and therefore that the modular product is nonzero. The identity element is represented by $1$, and associativity follows from the corresponding property of the integers. Finally, the inverse element axiom requires that given an integer $a$ not divisible by $p$, there exists an integer $b$ such that $a \cdot b \equiv 1 \pmod{p}$.
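The inverses in the multiplicative group of integers modulo a prime can be computed with Python's built-in `pow`, which accepts a negative exponent together with a modulus from Python 3.8 onward. The sketch below uses the prime 5 from the example above; the variable names are illustrative.

```python
p = 5
elements = range(1, p)

# Closure: the product of two nonzero residues modulo a prime is nonzero.
closed = all((a * b) % p in elements for a in elements for b in elements)

# pow(a, -1, p) returns the b in 1..p-1 with a * b congruent to 1 modulo p.
inverses = {a: pow(a, -1, p) for a in elements}

print(closed)     # True
print(inverses)   # {1: 1, 2: 3, 3: 2, 4: 4}
```

For a composite modulus such as 6, `pow(2, -1, 6)` raises `ValueError`, reflecting that 2 has no inverse modulo 6.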

Cyclic groups

A cyclic group is a group all of whose elements are powers of a particular element $a$. In multiplicative notation, the elements of the group are $\ldots, a^{-3}, a^{-2}, a^{-1}, a^0, a, a^2, a^3, \ldots$, where $a^2$ means $a \cdot a$ and $a^{-3}$ stands for $a^{-1} \cdot a^{-1} \cdot a^{-1}$. Such an element $a$ is called a generator or a primitive element of the group.

In the groups $(\mathbb{Z}/n\mathbb{Z}, +)$ introduced above, the element $1$ is primitive, so these groups are cyclic. Indeed, each element is expressible as a sum all of whose terms are $1$. Any cyclic group with $n$ elements is isomorphic to this group. A second example for cyclic groups is the group of $n$th complex roots of unity, given by complex numbers $z$ satisfying $z^n = 1$. These numbers can be visualized as the vertices on a regular $n$-gon, as shown in blue in the image for $n = 6$. The group operation is multiplication of complex numbers. In the picture, multiplying with $z$ corresponds to a counter-clockwise rotation by 60°. From field theory, the group $\mathbb{F}_p^{\times}$ is cyclic for prime $p$: for example, if $p = 5$, $3$ is a generator since $3^1 = 3$, $3^2 = 9 \equiv 4$, $3^3 \equiv 2$, and $3^4 \equiv 1$.
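Whether an element generates the multiplicative group modulo a prime can be checked by listing its powers. This is a small sketch with made-up helper names `powers` and `generators`; it assumes the modulus is prime, so that every element from 1 to p − 1 eventually returns to 1.

```python
def powers(a, p):
    """Successive powers a, a^2, ... modulo p, up to the first power equal to 1."""
    result, x = [], 1
    while True:
        x = (x * a) % p
        result.append(x)
        if x == 1:
            return result

def generators(p):
    """Elements whose powers run through all of 1, ..., p - 1."""
    return [a for a in range(1, p) if len(powers(a, p)) == p - 1]

print(powers(3, 5))    # [3, 4, 2, 1]
print(powers(4, 5))    # [4, 1]
print(generators(5))   # [2, 3]
print(generators(7))   # [3, 5]
```

The first line reproduces the powers of 3 modulo 5 listed above; the element 4, by contrast, only reaches two of the four group elements.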

Some cyclic groups have an infinite number of elements. In these groups, for every non-identity element $a$, all the powers of $a$ are distinct; despite the name "cyclic group", the powers of the elements do not cycle. An infinite cyclic group is isomorphic to $(\mathbb{Z}, +)$, the group of integers under addition introduced above. As these two prototypes are both abelian, so are all cyclic groups.

The study of finitely generated abelian groups is quite mature, including the fundamental theorem of finitely generated abelian groups; and reflecting this state of affairs, many group-related notions, such as center and commutator, describe the extent to which a given group is not abelian.

Symmetry groups

Symmetry groups are groups consisting of symmetries of given mathematical objects, principally geometric entities, such as the symmetry group of the square given as an introductory example above, although they also arise in algebra such as the symmetries among the roots of polynomial equations dealt with in Galois theory (see below). Conceptually, group theory can be thought of as the study of symmetry. Symmetries in mathematics greatly simplify the study of geometrical or analytical objects. A group is said to act on another mathematical object X if every group element can be associated to some operation on X and the composition of these operations follows the group law. For example, an element of the (2,3,7) triangle group acts on a triangular tiling of the hyperbolic plane by permuting the triangles. By a group action, the group pattern is connected to the structure of the object being acted on.

In chemical fields, such as crystallography, space groups and point groups describe molecular symmetries and crystal symmetries. These symmetries underlie the chemical and physical behavior of these systems, and group theory enables simplification of quantum mechanical analysis of these properties. For example, group theory is used to show that optical transitions between certain quantum levels cannot occur simply because of the symmetry of the states involved.

Group theory helps predict the changes in physical properties that occur when a material undergoes a phase transition, for example, from a cubic to a tetrahedral crystalline form. An example is ferroelectric materials, where the change from a paraelectric to a ferroelectric state occurs at the Curie temperature and is related to a change from the high-symmetry paraelectric state to the lower symmetry ferroelectric state, accompanied by a so-called soft phonon mode, a vibrational lattice mode that goes to zero frequency at the transition.

Such spontaneous symmetry breaking has found further application in elementary particle physics, where its occurrence is related to the appearance of Goldstone bosons.

- Buckminsterfullerene displays icosahedral symmetry.

- Ammonia, NH3. Its symmetry group is of order 6, generated by a 120° rotation and a reflection.

- Cubane, C8H8, features octahedral symmetry.

- The tetrachloroplatinate(II) ion, [PtCl4]2−, exhibits square-planar geometry.

Finite symmetry groups such as the Mathieu groups are used in coding theory, which is in turn applied in error correction of transmitted data, and in CD players. Another application is differential Galois theory, which characterizes functions having antiderivatives of a prescribed form, giving group-theoretic criteria for when solutions of certain differential equations are well-behaved. Geometric properties that remain stable under group actions are investigated in (geometric) invariant theory.

General linear group and representation theory

Matrix groups consist of matrices together with matrix multiplication. The general linear group consists of all invertible -by- matrices with real entries. Its subgroups are referred to as matrix groups or linear groups. The dihedral group example mentioned above can be viewed as a (very small) matrix group. Another important matrix group is the special orthogonal group . It describes all possible rotations in dimensions. Rotation matrices in this group are used in computer graphics.

Representation theory is both an application of the group concept and important for a deeper understanding of groups. It studies the group by its group actions on other spaces. A broad class of group representations are linear representations in which the group acts on a vector space, such as the three-dimensional Euclidean space $\mathbb{R}^3$. A representation of a group $G$ on an $n$-dimensional real vector space is simply a group homomorphism $\rho : G \to \mathrm{GL}(n, \mathbb{R})$ from the group to the general linear group. This way, the group operation, which may be abstractly given, translates to the multiplication of matrices, making it accessible to explicit computations.
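To make the homomorphism condition concrete, here is a minimal sketch of one possible representation: the cyclic group of integers modulo 4 under addition, sent to powers of the 90° rotation matrix. The choice of group and the names `rho` and `matmul` are assumptions of this example, not something fixed by the text.

```python
def matmul(a, b):
    """Multiply two 2-by-2 integer matrices stored as tuples of tuples."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

IDENTITY = ((1, 0), (0, 1))
QUARTER_TURN = ((0, -1), (1, 0))  # rotation of the plane by 90 degrees

def rho(k):
    """Send the residue k modulo 4 to the k-th power of the quarter turn."""
    m = IDENTITY
    for _ in range(k % 4):
        m = matmul(m, QUARTER_TURN)
    return m

# Homomorphism property: addition modulo 4 turns into matrix multiplication.
is_homomorphism = all(
    rho((a + b) % 4) == matmul(rho(a), rho(b))
    for a in range(4) for b in range(4)
)

# Distinct group elements get distinct matrices, so nothing is lost.
is_faithful = len({rho(k) for k in range(4)}) == 4
```

Integer entries keep every comparison exact, which is why this example avoids general rotation angles.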

A group action gives further means to study the object being acted on. On the other hand, it also yields information about the group. Group representations are an organizing principle in the theory of finite groups, Lie groups, algebraic groups and topological groups, especially (locally) compact groups.

Galois groups

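Galois groups were developed to help solve polynomial equations by capturing their symmetry features. For example, the solutions of the quadratic equation $ax^2 + bx + c = 0$ are given by

$$x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}.$$

Each solution is obtained by choosing $+$ or $-$ for the $\pm$ sign. Exchanging the two signs permutes the two solutions and can be viewed as a (very simple) group operation; analogous Galois groups act on the solutions of higher-degree polynomial equations.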

Modern Galois theory generalizes the above type of Galois groups by shifting to field theory and considering field extensions formed as the splitting field of a polynomial. This theory establishes—via the fundamental theorem of Galois theory—a precise relationship between fields and groups, underlining once again the ubiquity of groups in mathematics.
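The link between field extensions and symmetries can be sketched on the smallest interesting case. The example below assumes the polynomial $x^2 - 2$ and models numbers of the form $p + q\sqrt{2}$ with rational $p$ and $q$ as pairs `(p, q)`. It checks on a sample of elements that swapping the sign of $\sqrt{2}$ respects addition and multiplication and exchanges the two roots. The representation and all function names are illustrative choices.

```python
from fractions import Fraction
from itertools import product

# The number p + q*sqrt(2) is stored as the pair (p, q) of rationals.
def add(x, y):
    return (x[0] + y[0], x[1] + y[1])

def mul(x, y):
    # (p + q*s)(u + v*s) = (p*u + 2*q*v) + (p*v + q*u)*s, because s*s = 2.
    return (x[0] * y[0] + 2 * x[1] * y[1], x[0] * y[1] + x[1] * y[0])

def conj(x):
    """The sign swap that sends sqrt(2) to -sqrt(2)."""
    return (x[0], -x[1])

samples = [(Fraction(p), Fraction(q))
           for p, q in product(range(-2, 3), repeat=2)]

# The swap is compatible with both field operations on the sample.
respects_add = all(conj(add(x, y)) == add(conj(x), conj(y))
                   for x in samples for y in samples)
respects_mul = all(conj(mul(x, y)) == mul(conj(x), conj(y))
                   for x in samples for y in samples)

# Applying the swap twice gives the identity, so {identity, swap} has order 2.
is_involution = all(conj(conj(x)) == x for x in samples)

# The swap exchanges the two roots of x^2 - 2.
root = (Fraction(0), Fraction(1))
two = (Fraction(2), Fraction(0))
swaps_roots = (mul(root, root) == two
               and mul(conj(root), conj(root)) == two
               and conj(root) != root)
```

A finite sample cannot prove the identities for all elements, but the comment in `mul` shows why they hold in general.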

Finite groups

A group is called finite if it has a finite number of elements. The number of elements is called the order of the group. An important class is the symmetric groups $\mathrm{S}_N$, the groups of permutations of $N$ objects. For example, the symmetric group on 3 letters $\mathrm{S}_3$ is the group of all possible reorderings of the objects. The three letters ABC can be reordered into ABC, ACB, BAC, BCA, CAB, CBA, forming in total 6 (factorial of 3) elements. The group operation is composition of these reorderings, and the identity element is the reordering operation that leaves the order unchanged. This class is fundamental insofar as any finite group can be expressed as a subgroup of a symmetric group $\mathrm{S}_N$ for a suitable integer $N$, according to Cayley's theorem. Parallel to the group of symmetries of the square above, $\mathrm{S}_3$ can also be interpreted as the group of symmetries of an equilateral triangle.
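The symmetric group on 3 letters is small enough to check by brute force. The sketch below stores a reordering as a tuple of positions (an encoding chosen for this example) and verifies the group axioms directly.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation that applies q first and then p."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    """Return the permutation that undoes p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

S3 = list(permutations(range(3)))
identity = (0, 1, 2)

# The six reorderings of the letters ABC.
reorderings = ["".join("ABC"[i] for i in p) for p in S3]

order = len(S3)
closed = all(compose(p, q) in S3 for p in S3 for q in S3)
associative = all(
    compose(compose(p, q), r) == compose(p, compose(q, r))
    for p in S3 for q in S3 for r in S3
)
inverses_ok = all(compose(p, inverse(p)) == identity for p in S3)

# Composition depends on the order of the factors, so the group is not abelian.
nonabelian = any(compose(p, q) != compose(q, p) for p in S3 for q in S3)
```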

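The order of an element $a$ in a group $G$ is the least positive integer $n$ such that $a^n = e$, where $e$ is the identity element and $a^n$ represents $a \cdots a$ with $n$ factors, that is, the group operation applied to $n$ copies of $a$.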

More sophisticated counting techniques, for example, counting cosets, yield more precise statements about finite groups: Lagrange's Theorem states that for a finite group $G$ the order of any finite subgroup $H$ divides the order of $G$. The Sylow theorems give a partial converse.

The dihedral group $\mathrm{D}_4$ of symmetries of a square is a finite group of order 8. In this group, the order of $r_1$ is 4, as is the order of the subgroup $R$ that this element generates. The order of the reflection elements $f_{\mathrm{v}}$ etc. is 2. Both orders divide 8, as predicted by Lagrange's theorem. The groups $\mathbb{F}_p^\times$ of multiplication modulo a prime $p$ have order $p - 1$.
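The divisibility predicted by Lagrange's theorem is easy to observe in the group of nonzero residues modulo a prime. The following sketch assumes the prime 7 and a hypothetical helper `element_order`. It computes the order of every element and checks that each one divides the group order.

```python
def element_order(a, p):
    """Order of a in the multiplicative group of nonzero residues modulo a prime p.

    Assumes a is not divisible by p; otherwise no power of a equals 1.
    """
    power, n = a % p, 1
    while power != 1:
        power = (power * a) % p
        n += 1
    return n

p = 7
group_order = p - 1
orders = {a: element_order(a, p) for a in range(1, p)}

# Each element generates a cyclic subgroup, whose order divides the group order.
all_divide = all(group_order % n == 0 for n in orders.values())
```

For this prime the orders that occur are 1, 2, 3 and 6, which are exactly the divisors of 6.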

Finite abelian groups

Any finite abelian group is isomorphic to a product of finite cyclic groups; this statement is part of the fundamental theorem of finitely generated abelian groups.

Any group of prime order $p$ is isomorphic to the cyclic group $\mathrm{Z}_p$ (a consequence of Lagrange's theorem). Any group of order $p^2$ is abelian, isomorphic to $\mathrm{Z}_{p^2}$ or $\mathrm{Z}_p \times \mathrm{Z}_p$. But there exist nonabelian groups of order $p^3$; the dihedral group $\mathrm{D}_4$ of order $2^3$ above is an example.
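Products of cyclic groups can be told apart by the orders of their elements. The sketch below models an element of such a product as a tuple of residues with componentwise addition (names and encoding are choices made for this example). It checks that the two abelian groups of order 4 are genuinely different, while a product of cyclic groups of orders 2 and 3 is itself cyclic.

```python
from itertools import product

def order(g, moduli):
    """Order of the tuple g under componentwise addition modulo the given moduli."""
    zero = tuple(0 for _ in moduli)
    current, n = g, 1
    while current != zero:
        current = tuple((c + x) % m for c, x, m in zip(current, g, moduli))
        n += 1
    return n

def is_cyclic(moduli):
    """True if some element generates the whole product of cyclic groups."""
    size = 1
    for m in moduli:
        size *= m
    elements = product(*(range(m) for m in moduli))
    return any(order(g, moduli) == size for g in elements)

# Two groups of order 4: one is cyclic, the other is not, so they are not isomorphic.
z4_is_cyclic = is_cyclic((4,))
z2_z2_is_cyclic = is_cyclic((2, 2))

# A product of cyclic groups of coprime orders 2 and 3 is cyclic of order 6.
z2_z3_is_cyclic = is_cyclic((2, 3))
```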

Simple groups

When a group $G$ has a normal subgroup $N$ other than $\{1\}$ and $G$ itself, questions about $G$ can sometimes be reduced to questions about $N$ and $G/N$. A nontrivial group is called simple if it has no such normal subgroup. Finite simple groups are to finite groups as prime numbers are to positive integers: they serve as building blocks, in a sense made precise by the Jordan–Hölder theorem.
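Normality can be tested by brute force in a small group. The sketch below works in the group of all permutations of three objects, encoded as tuples (an assumption of this example). It checks by conjugation that the three rotations form a normal subgroup while a subgroup generated by a single swap does not. In particular, this group is not simple.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation that applies q first and then p."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    """Return the permutation that undoes p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def is_normal(subgroup, group):
    """True if g*h*g^-1 stays in the subgroup for every g in the group."""
    return all(
        compose(compose(g, h), inverse(g)) in subgroup
        for g in group for h in subgroup
    )

S3 = list(permutations(range(3)))
rotations = {(0, 1, 2), (1, 2, 0), (2, 0, 1)}  # identity and the two 3-cycles
single_swap = {(0, 1, 2), (1, 0, 2)}           # identity and one transposition

rotations_normal = is_normal(rotations, S3)
swap_normal = is_normal(single_swap, S3)
```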

Classification of finite simple groups

Computer algebra systems have been used to list all groups of order up to 2000, but classifying all finite groups is considered too hard a problem to be solved.

The classification of all finite simple groups was a major achievement in contemporary group theory. There are several infinite families of such groups, as well as 26 "sporadic groups" that do not belong to any of the families. The largest sporadic group is called the monster group. The monstrous moonshine conjectures, proved by Richard Borcherds, relate the monster group to certain modular functions.

The gap between the classification of simple groups and the classification of all groups lies in the extension problem.

Groups with additional structure

An equivalent definition of group consists of replacing the "there exist" part of the group axioms by operations whose result is the element that must exist. So, a group is a set equipped with a binary operation (the group operation), a unary operation (which provides the inverse) and a nullary operation, which has no operand and results in the identity element. Otherwise, the group axioms are exactly the same. This variant of the definition avoids existential quantifiers and is used in computing with groups and for computer-aided proofs.
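The operation-based definition maps directly onto a data structure. The following is a minimal sketch, assuming a finite carrier set so that the axioms can be checked exhaustively. The class name `Group` and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Tuple, TypeVar

T = TypeVar("T")

@dataclass(frozen=True)
class Group(Generic[T]):
    """A finite group given by three operations instead of existence claims."""
    elements: Tuple[T, ...]   # the underlying set
    op: Callable[[T, T], T]   # binary operation: the group law
    inv: Callable[[T], T]     # unary operation: the inverse
    identity: T               # nullary operation: a constant

    def satisfies_axioms(self) -> bool:
        """Check the axioms as plain equations, with no existential quantifier."""
        e = self.elements
        associative = all(
            self.op(self.op(a, b), c) == self.op(a, self.op(b, c))
            for a in e for b in e for c in e
        )
        neutral = all(
            self.op(self.identity, a) == a == self.op(a, self.identity)
            for a in e
        )
        invertible = all(
            self.op(a, self.inv(a)) == self.identity == self.op(self.inv(a), a)
            for a in e
        )
        return associative and neutral and invertible

# Integers modulo 5 under addition.
Z5 = Group(
    elements=tuple(range(5)),
    op=lambda a, b: (a + b) % 5,
    inv=lambda a: (-a) % 5,
    identity=0,
)
```

Because the inverse and the identity are supplied as data, a checker only has to evaluate equations. It never has to search for a witness, which is the property that makes this formulation convenient for computation.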

This way of defining groups lends itself to generalizations such as the notion of group object in a category. Briefly, this is an object with morphisms that mimic the group axioms.

Topological groups

Some topological spaces may be endowed with a group law. In order for the group law and the topology to interweave well, the group operations must be continuous functions; informally, $g \cdot h$ and $g^{-1}$ must not vary wildly if $g$ and $h$ vary only a little. Such groups are called topological groups, and they are the group objects in the category of topological spaces. The most basic examples are the group of real numbers under addition and the group of nonzero real numbers under multiplication. Similar examples can be formed from any other topological field, such as the field of complex numbers or the field of p-adic numbers. These examples are locally compact, so they have Haar measures and can be studied via harmonic analysis. Other locally compact topological groups include the group of points of an algebraic group over a local field or adele ring; these are basic to number theory. Galois groups of infinite algebraic field extensions are equipped with the Krull topology, which plays a role in infinite Galois theory. A generalization used in algebraic geometry is the étale fundamental group.

Lie groups

A Lie group is a group that also has the structure of a differentiable manifold; informally, this means that it looks locally like a Euclidean space of some fixed dimension. Again, the definition requires the additional structure, here the manifold structure, to be compatible: the multiplication and inverse maps are required to be smooth.

A standard example is the general linear group $\mathrm{GL}(n, \mathbb{R})$ introduced above: it is an open subset of the space of all $n$-by-$n$ matrices, because it is given by the inequality $\det(A) \neq 0$, where $A$ denotes an $n$-by-$n$ matrix.

Lie groups are of fundamental importance in modern physics: Noether's theorem links continuous symmetries to conserved quantities. Rotation, as well as translations in space and time, are basic symmetries of the laws of mechanics. They can, for instance, be used to construct simple models—imposing, say, axial symmetry on a situation will typically lead to significant simplification in the equations one needs to solve to provide a physical description. Another example is the group of Lorentz transformations, which relate measurements of time and velocity of two observers in motion relative to each other. They can be deduced in a purely group-theoretical way, by expressing the transformations as a rotational symmetry of Minkowski space. The latter serves—in the absence of significant gravitation—as a model of spacetime in special relativity. The full symmetry group of Minkowski space, i.e., including translations, is known as the Poincaré group. By the above, it plays a pivotal role in special relativity and, by implication, for quantum field theories. Symmetries that vary with location are central to the modern description of physical interactions with the help of gauge theory. An important example of a gauge theory is the Standard Model, which describes three of the four known fundamental forces and classifies all known elementary particles.
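The group structure of Lorentz transformations can be sketched in one space dimension. The example below assumes units in which the speed of light is 1 and parametrizes a boost by a hyperbolic angle called `rapidity` here. Both are conventions chosen for this illustration, not taken from the text. It checks that a boost preserves the Minkowski interval $t^2 - x^2$ and that composing two boosts gives another boost.

```python
import math

def boost(rapidity):
    """2-by-2 Lorentz boost acting on (t, x), in units where c = 1."""
    c, s = math.cosh(rapidity), math.sinh(rapidity)
    return [[c, -s], [-s, c]]

def apply(m, v):
    """Apply a 2-by-2 matrix to a vector (t, x)."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]

def matmul(a, b):
    """Multiply two 2-by-2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def interval(v):
    """Minkowski interval t^2 - x^2 of an event (t, x)."""
    t, x = v
    return t * t - x * x

event = [3.0, 1.0]
moved = apply(boost(0.5), event)

# The interval plays the role that length plays for ordinary rotations.
interval_preserved = math.isclose(interval(moved), interval(event), rel_tol=1e-9)

# Closure: two boosts in a row equal one boost with the summed parameter.
combined = matmul(boost(0.5), boost(0.25))
expected = boost(0.75)
boosts_compose = all(
    math.isclose(combined[i][j], expected[i][j], rel_tol=1e-9)
    for i in range(2) for j in range(2)
)
```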

Generalizations

Group-like structures

| Structure | Totality^α | Associativity | Identity | Division | Commutativity |
|---|---|---|---|---|---|
| Semigroupoid | Unneeded | Required | Unneeded | Unneeded | Unneeded |
| Small category | Unneeded | Required | Required | Unneeded | Unneeded |
| Groupoid | Unneeded | Required | Required | Required | Unneeded |
| Magma | Required | Unneeded | Unneeded | Unneeded | Unneeded |
| Quasigroup | Required | Unneeded | Unneeded | Required | Unneeded |
| Unital magma | Required | Unneeded | Required | Unneeded | Unneeded |
| Semigroup | Required | Required | Unneeded | Unneeded | Unneeded |
| Loop | Required | Unneeded | Required | Required | Unneeded |
| Monoid | Required | Required | Required | Unneeded | Unneeded |
| Group | Required | Required | Required | Required | Unneeded |
| Commutative monoid | Required | Required | Required | Unneeded | Required |
| Abelian group | Required | Required | Required | Required | Required |

^α The closure axiom, used by many sources and defined differently, is equivalent.

In abstract algebra, more general structures are defined by relaxing some of the axioms defining a group. For example, if the requirement that every element has an inverse is eliminated, the resulting algebraic structure is called a monoid. The natural numbers $\mathbb{N}$ (including zero) under addition form a monoid, as do the nonzero integers under multiplication $(\mathbb{Z} \smallsetminus \{0\}, \cdot)$, see above. There is a general method to formally add inverses to elements of any (abelian) monoid, much the same way as $(\mathbb{Q} \smallsetminus \{0\}, \cdot)$ is derived from $(\mathbb{Z} \smallsetminus \{0\}, \cdot)$, known as the Grothendieck group. Groupoids are similar to groups except that the composition $a \cdot b$ need not be defined for all $a$ and $b$. They arise in the study of more complicated forms of symmetry, often in topological and analytical structures, such as the fundamental groupoid or stacks. Finally, it is possible to generalize any of these concepts by replacing the binary operation with an arbitrary n-ary one (i.e., an operation taking n arguments). With the proper generalization of the group axioms this gives rise to an n-ary group. The table gives a list of several structures generalizing groups.
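The idea of formally adding inverses can be sketched for the monoid of natural numbers under addition, which yields the integers. This is a simpler instance than the multiplicative one mentioned above and was chosen for this example. A pair `(a, b)` stands for the formal difference of `a` and `b`. The equivalence test below is valid for cancellative commutative monoids such as this one. The general construction needs an extra term, which is omitted here.

```python
def equivalent(x, y):
    """(a, b) ~ (c, d) iff a + d == b + c, using only addition of naturals."""
    return x[0] + y[1] == x[1] + y[0]

def add(x, y):
    """Add two formal differences componentwise."""
    return (x[0] + y[0], x[1] + y[1])

def neg(x):
    """The formal inverse: swap the two components."""
    return (x[1], x[0])

def normalize(x):
    """Pick the representative in which at least one component is zero."""
    m = min(x)
    return (x[0] - m, x[1] - m)

zero = (0, 0)
three = (3, 0)   # the natural number 3
minus_three = neg(three)

# Every element now has an inverse, which the monoid of naturals lacked.
inverse_works = equivalent(add(three, minus_three), zero)

# (2, 5) stands for -3, so adding 4 gives 1.
sum_is_one = normalize(add((2, 5), (4, 0))) == (1, 0)
```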