| Third Industrial Revolution |
|---|
| 1947–present |

A laptop connects to the Internet to display information from Wikipedia; long-distance communication between computer systems is a hallmark of the Information Age.

The Information Age (also known as the Third Industrial Revolution, Computer Age, Digital Age, Silicon Age, New Media Age, Internet Age, or the Digital Revolution) is a historical period that began in the mid-20th century. It is characterized by a rapid shift from traditional industries, as established during the Industrial Revolution, to an economy centered on information technology. The onset of the Information Age has been linked to the development of the transistor in 1947 and the optical amplifier in 1957. These technological advances have had a significant impact on the way information is processed and transmitted.

According to the United Nations Public Administration Network, the Information Age was formed by capitalizing on advances in computer microminiaturization, which led to modernized information systems and to internet communications becoming the driving force of social evolution.

There is debate over whether, or when, the Third Industrial Revolution ended and the Fourth Industrial Revolution began; proposed dates range from 2000 to 2020.

History

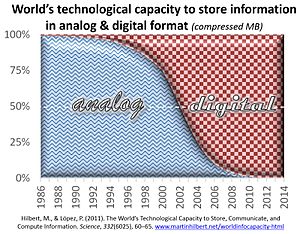

The digital revolution converted technology from analog format to digital format. By doing this, it became possible to make copies that were identical to the original. In digital communications, for example, repeating hardware was able to amplify the digital signal and pass it on with no loss of information in the signal. Of equal importance to the revolution was the ability to easily move the digital information between media, and to access or distribute it remotely.
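A toy simulation makes the contrast between analog amplification and digital regeneration concrete. It assumes a hypothetical link that adds bounded random noise (uniform, at most 0.3) on every hop, with bits sent as the levels 0.0 and 1.0 and a decision threshold of 0.5; real repeaters and channels are far more complex. Because each digital repeater decides 0 or 1 and forwards a clean level, the copy after 50 hops should match the original exactly, while the analog chain keeps accumulating noise.

```python
import random


def analog_chain(levels, hops, noise, rng):
    """Analog repeaters amplify whatever arrives, noise included, so noise accumulates."""
    out = list(levels)
    for _ in range(hops):
        out = [x + rng.uniform(-noise, noise) for x in out]
    return out


def digital_chain(bits, hops, noise, rng, threshold=0.5):
    """Digital repeaters decide 0 or 1 at every hop and re-emit a clean level."""
    out = [float(b) for b in bits]
    for _ in range(hops):
        received = [x + rng.uniform(-noise, noise) for x in out]
        out = [1.0 if x > threshold else 0.0 for x in received]
    return [int(x) for x in out]


rng = random.Random(42)
bits = [rng.randint(0, 1) for _ in range(1000)]
# Assumed per-link noise of at most 0.3 stays inside the 0.5 decision margin.
copied = digital_chain(bits, hops=50, noise=0.3, rng=rng)
drifted = analog_chain([float(b) for b in bits], hops=50, noise=0.3, rng=rng)
analog_error = max(abs(a - b) for a, b in zip(drifted, bits))
print("digital copy identical:", copied == bits)
print("worst analog deviation:", round(analog_error, 3))
```

The bounded noise model is chosen so that regeneration is guaranteed to succeed; with unbounded (for example Gaussian) noise, a digital link would occasionally flip a bit, which is why practical systems add error detection and correction.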

One turning point of the revolution was the change from analog to digitally recorded music. During the 1980s the digital format of optical compact discs gradually replaced analog formats, such as vinyl records and cassette tapes, as the popular medium of choice.

Previous inventions

Humans have manufactured tools for counting and calculating since ancient times, such as the abacus, astrolabe, equatorium, and mechanical timekeeping devices. More complicated devices started appearing in the 1600s, including the slide rule and mechanical calculators. By the early 1800s, the First Industrial Revolution had produced mass-market calculators like the arithmometer and the enabling technology of the punch card. Charles Babbage proposed a mechanical general-purpose computer called the Analytical Engine, but it was never successfully built, and was largely forgotten by the 20th century and unknown to most of the inventors of modern computers.

The Second Industrial Revolution in the last quarter of the 19th century developed useful electrical circuits and the telegraph. In the 1880s, Herman Hollerith developed electromechanical tabulating and calculating devices using punch cards and unit record equipment, which became widespread in business and government.

Meanwhile, various analog computer systems used electrical, mechanical, or hydraulic systems to model problems and calculate answers. These included an 1872 tide-predicting machine, differential analysers, perpetual calendar machines, the Deltar for water management in the Netherlands, network analyzers for electrical systems, and various machines for aiming military guns and bombs. The construction of problem-specific analog computers continued in the late 1940s and beyond, with FERMIAC for neutron transport, Project Cyclone for various military applications, and the Phillips Machine for economic modeling.

Building on the complexity of the Z1 and Z2, German inventor Konrad Zuse used electromechanical systems to complete the Z3 in 1941, the world's first working programmable, fully automatic digital computer. Also during World War II, Allied engineers constructed electromechanical bombes to break German Enigma machine encoding. The base-10 electromechanical Harvard Mark I was completed in 1944, and was to some degree inspired by Charles Babbage's designs.

1947–1969: Origins

In 1947, the first working transistor, the germanium-based point-contact transistor, was invented by John Bardeen and Walter Houser Brattain while working under William Shockley at Bell Labs. This led the way to more advanced digital computers. From the late 1940s, universities, military, and businesses developed computer systems to digitally replicate and automate previously manually performed mathematical calculations, with the LEO being the first commercially available general-purpose computer.

Digital communication became economical for widespread adoption after the invention of the personal computer in the 1970s. Claude Shannon, a Bell Labs mathematician, is credited with laying out the foundations of digitalization in his pioneering 1948 article, A Mathematical Theory of Communication.

Other important technological developments included the invention of the monolithic integrated circuit chip by Robert Noyce at Fairchild Semiconductor in 1959 (made possible by the planar process developed by Jean Hoerni), the first successful metal–oxide–semiconductor field-effect transistor (MOSFET, or MOS transistor) by Mohamed Atalla and Dawon Kahng at Bell Labs in 1959, and the development of the complementary MOS (CMOS) process by Frank Wanlass and Chih-Tang Sah at Fairchild in 1963.

In 1962 AT&T deployed the T-carrier for long-haul pulse-code modulation (PCM) digital voice transmission. The T1 format carried 24 pulse-code modulated, time-division multiplexed speech signals, each encoded as a 64 kbit/s stream, plus 8 kbit/s of framing information that facilitated synchronization and demultiplexing at the receiver, for a total line rate of 1.544 Mbit/s. Over the subsequent decades the digitisation of voice became the norm for all but the last mile (where analogue continued to be the norm right into the late 1990s).
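The T1 figures fit together through simple arithmetic. This sketch assumes the standard telephony PCM parameters of 8,000 samples per second and 8 bits per channel time slot, with one framing bit added to each frame; those constants are not stated in the text above. Each of the 24 channels then needs 64 kbit/s, and one framing bit per frame adds the 8 kbit/s of framing.

```python
# Standard PCM telephony parameters (assumed; not given in the text above).
SAMPLE_RATE_HZ = 8_000        # samples per second on each voice channel
BITS_PER_SAMPLE = 8           # bits in each channel's time slot
CHANNELS = 24                 # voice channels multiplexed into one T1 frame
FRAMING_BITS_PER_FRAME = 1    # framing bit appended to every frame

channel_rate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE                   # bit/s per channel
frame_bits = CHANNELS * BITS_PER_SAMPLE + FRAMING_BITS_PER_FRAME  # bits per frame
line_rate = frame_bits * SAMPLE_RATE_HZ                           # one frame per sample period
framing_rate = FRAMING_BITS_PER_FRAME * SAMPLE_RATE_HZ            # bit/s spent on framing

print(f"{channel_rate} bit/s per channel, {frame_bits}-bit frames")
print(f"{line_rate} bit/s total, of which {framing_rate} bit/s is framing")
```

Because one complete frame is sent per sampling interval, the line rate is simply the frame length multiplied by the sampling rate.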

Following the development of MOS integrated circuit chips in the early 1960s, MOS chips reached higher transistor density and lower manufacturing costs than bipolar integrated circuits by 1964. MOS chips further increased in complexity at a rate predicted by Moore's law, leading to large-scale integration (LSI) with hundreds of transistors on a single MOS chip by the late 1960s. The application of MOS LSI chips to computing was the basis for the first microprocessors, as engineers began recognizing that a complete computer processor could be contained on a single MOS LSI chip. In 1968, Fairchild engineer Federico Faggin improved MOS technology with his development of the silicon-gate MOS chip, which he later used to develop the Intel 4004, the first single-chip microprocessor. It was released by Intel in 1971, and laid the foundations for the microcomputer revolution that began in the 1970s.

MOS technology also led to the development of semiconductor image sensors suitable for digital cameras. The first such image sensor was the charge-coupled device, developed by Willard S. Boyle and George E. Smith at Bell Labs in 1969, based on MOS capacitor technology.

1969–1989: Invention of the internet, rise of home computers

The public was first introduced to the concepts that led to the Internet when a message was sent over the ARPANET in 1969. Packet-switched networks such as ARPANET, Mark I, CYCLADES, Merit Network, Tymnet, and Telenet were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, in which multiple separate networks could be joined into a network of networks.
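Packet switching, the idea shared by these networks, breaks a message into independently routed pieces that the receiver puts back in order. The toy sketch below shows only that general idea: its header fields (`msg_id`, `seq`, `total`) and function names are hypothetical and do not reproduce the packet format of ARPANET or any other network named above.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    msg_id: int    # hypothetical field: which message this piece belongs to
    seq: int       # hypothetical field: position of this piece in the message
    total: int     # hypothetical field: number of pieces in the message
    payload: bytes


def packetize(msg_id: int, data: bytes, size: int) -> list[Packet]:
    """Split data into fixed-size payloads, each carrying its own small header."""
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    return [Packet(msg_id, n, len(chunks), chunk) for n, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Restore the original order from sequence numbers; reject incomplete sets."""
    if not packets:
        raise ValueError("no packets received")
    ordered = sorted(packets, key=lambda p: p.seq)
    expected = list(range(ordered[0].total))
    if [p.seq for p in ordered] != expected:
        raise ValueError("missing or duplicate packets")
    return b"".join(p.payload for p in ordered)


message = b"a message travelling across a network of networks"
packets = packetize(msg_id=1, data=message, size=8)
random.Random(7).shuffle(packets)  # packets may take different routes and arrive out of order
received = reassemble(packets)
print(received == message)
```

Real networks add addressing, checksums, retransmission and routing on top of this; the sketch keeps only the split-and-reorder step.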

The Whole Earth movement of the 1960s advocated the use of new technology.

The 1970s saw the introduction of the home computer, time-sharing computers, the video game console, and the first coin-op video games, while the golden age of arcade video games began with Space Invaders. As digital technology proliferated and the switch from analog to digital record keeping became the new standard in business, a relatively new job description was popularized: the data entry clerk. Drawn from the ranks of secretaries and typists of earlier decades, the data entry clerk's job was to convert analog data (customer records, invoices, etc.) into digital data.

In developed nations, computers achieved semi-ubiquity during the 1980s as they made their way into schools, homes, businesses, and industry. Automated teller machines, industrial robots, CGI in film and television, electronic music, bulletin board systems, and video games all fueled what became the zeitgeist of the 1980s. Millions of people purchased home computers, making household names of early personal computer manufacturers such as Apple, Commodore, and Tandy. To this day the Commodore 64 is often cited as the best-selling computer of all time, having sold 17 million units (by some accounts) between 1982 and 1994.

In 1984, the U.S. Census Bureau began collecting data on computer and Internet use in the United States; its first survey showed that 8.2% of all U.S. households owned a personal computer in 1984, and that households with children under the age of 18 were nearly twice as likely to own one, at 15.3% (middle- and upper-middle-class households were the most likely to own one, at 22.9%). By 1989, 15% of all U.S. households owned a computer, and nearly 30% of households with children under the age of 18 owned one. By the late 1980s, many businesses were dependent on computers and digital technology.

Motorola created the first mobile phone, the Motorola DynaTAC, in 1983. However, this device used analog communication; digital cell phones were not sold commercially until 1991, when the 2G network started to be opened in Finland to accommodate the unexpected demand for cell phones that had become apparent in the late 1980s.

Compute! magazine predicted that CD-ROM would be the centerpiece of the revolution, with multiple household devices reading the discs.

The first true digital camera was created in 1988, and the first models were marketed in December 1989 in Japan and in 1990 in the United States. By the mid-2000s, digital cameras had eclipsed traditional film in popularity.

Digital ink and paint was also invented in the late 1980s. Disney's CAPS system (created 1988) was used for a scene in 1989's The Little Mermaid and for all of Disney's animated films between 1990's The Rescuers Down Under and 2004's Home on the Range.

1989–2005: Invention of the World Wide Web, mainstreaming of the Internet, Web 1.0

Tim Berners-Lee invented the World Wide Web in 1989.

The first public digital HDTV broadcast was of the 1990 World Cup that June; it was shown in 10 theaters in Spain and Italy. However, outside Japan, HDTV did not become a standard until the mid-2000s.

The World Wide Web, which had previously been available only to government and universities, became publicly accessible in 1991. In 1993 Marc Andreessen and Eric Bina introduced Mosaic, the first web browser capable of displaying inline images and the basis for later browsers such as Netscape Navigator and Internet Explorer. Stanford Federal Credit Union was the first financial institution to offer online internet banking services to all of its members, in October 1994. In 1996 OP Financial Group, also a cooperative bank, became the second online bank in the world and the first in Europe. The Internet expanded quickly, and by 1996 it was part of mass culture and many businesses listed websites in their ads. By 1999, almost every country had a connection, and nearly half of Americans and people in several other countries used the Internet on a regular basis. However, throughout the 1990s, "getting online" entailed complicated configuration, and dial-up was the only connection type affordable by individual users; the present-day mass Internet culture was not possible.

In 1989, about 15% of all households in the United States owned a personal computer. For households with children, nearly 30% owned a computer in 1989, and in 2000, 65% owned one.

Cell phones became as ubiquitous as computers by the early 2000s, with movie theaters beginning to show ads telling people to silence their phones. They also became much more advanced than phones of the 1990s, most of which only took calls or at most allowed simple games to be played.

Text messaging became widely used worldwide in the late 1990s, except in the United States, where it did not become commonplace until the early 2000s.

The digital revolution also became truly global in this period: after revolutionizing society in the developed world in the 1990s, it spread to the masses in the developing world in the 2000s.

By 2000, a majority of U.S. households had at least one personal computer, and a majority had internet access the following year. In 2002, a majority of U.S. survey respondents reported having a mobile phone.

2005–2020: Web 2.0, social media, smartphones, digital TV

In late 2005 the population of the Internet reached 1 billion, and 3 billion people worldwide used cell phones by the end of the decade. HDTV became the standard television broadcasting format in many countries by the end of the decade. In September and December 2006 respectively, Luxembourg and the Netherlands became the first countries to completely transition from analog to digital television. In September 2007, a majority of U.S. survey respondents reported having broadband internet at home. According to estimates from Nielsen Media Research, approximately 45.7 million U.S. households in 2006 (or approximately 40 percent of approximately 114.4 million) owned a dedicated home video game console, and by 2015, 51 percent of U.S. households owned one, according to an Entertainment Software Association annual industry report. By 2012, over 2 billion people used the Internet, twice the number using it in 2007. Cloud computing had entered the mainstream by the early 2010s. In January 2013, a majority of U.S. survey respondents reported owning a smartphone. By 2016, half of the world's population was connected, and as of 2020, that number had risen to 67%.

Rise in digital technology use of computers

In the late 1980s, less than 1% of the world's technologically stored information was in digital format, while it was 94% in 2007, with more than 99% by 2014.

It is estimated that the world's capacity to store information has increased from 2.6 (optimally compressed) exabytes in 1986, to some 5,000 exabytes in 2014 (5 zettabytes).

| Year | Cell phone subscribers | Internet users |
|---|---|---|
| 1990 | 12.5 million (0.25% of world population) | 2.8 million (0.05% of world population) |
| 2000 | 1.5 billion (19% of world population in 2002) | 631 million (11% of world population in 2002) |
| 2010 | 4 billion (68% of world population) | 1.8 billion (26.6% of world population) |
| 2020 | 4.78 billion (62% of world population) | 4.54 billion (59% of world population) |

Overview of early developments

Library expansion and Moore's law

In 1945, Fremont Rider calculated that library collections would double in capacity every 16 years, provided sufficient space was made available. He advocated replacing bulky, decaying printed works with miniaturized microform analog photographs, which could be duplicated on demand for library patrons and other institutions.

Rider did not foresee, however, the digital technology that would follow decades later to replace analog microform with digital imaging, storage, and transmission media, whereby vast increases in the rapidity of information growth would be made possible through automated, potentially lossless digital technologies. Moore's law, formulated around 1965, describes a comparable doubling process: the number of transistors in a dense integrated circuit doubles approximately every two years.
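A fixed doubling time converts directly into a constant annual growth rate, which makes Rider's 16-year figure and Moore's two-year figure easy to compare. The sketch below assumes idealized, uninterrupted exponential growth; the function names are illustrative only.

```python
def annual_growth_rate(doubling_years: float) -> float:
    """Constant yearly growth rate r such that (1 + r) ** doubling_years == 2."""
    return 2 ** (1 / doubling_years) - 1


def growth_factor(doubling_years: float, span_years: float) -> float:
    """How many times larger a quantity becomes over span_years at a fixed doubling time."""
    return 2 ** (span_years / doubling_years)


rider = annual_growth_rate(16)  # Rider's 1945 estimate for library collections
moore = annual_growth_rate(2)   # Moore's law with a two-year doubling time
print(f"Rider: {rider:.1%} per year; Moore: {moore:.1%} per year")
# Over the same 32 years, the two doubling times diverge enormously.
print(growth_factor(16, 32), growth_factor(2, 32))
```

A 16-year doubling corresponds to roughly 4.4% growth per year, whereas a two-year doubling corresponds to roughly 41% per year; over 32 years that is a factor of 4 versus a factor of 65,536.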

By the early 1980s, along with improvements in computing power, the proliferation of the smaller and less expensive personal computers allowed for immediate access to information and the ability to share and store it. Connectivity between computers within organizations enabled access to greater amounts of information.

Information storage and Kryder's law

The world's technological capacity to store information grew from 2.6 (optimally compressed) exabytes (EB) in 1986 to 15.8 EB in 1993, over 54.5 EB in 2000, and 295 (optimally compressed) EB in 2007. This is the informational equivalent of less than one 730-megabyte (MB) CD-ROM per person in 1986 (539 MB per person), roughly four CD-ROMs per person in 1993, twelve CD-ROMs per person in 2000, and almost sixty-one CD-ROMs per person in 2007. It is estimated that the world's capacity to store information had reached 5 zettabytes by 2014, the informational equivalent of 4,500 stacks of printed books from the earth to the sun.

The amount of digital data stored appears to be growing approximately exponentially, reminiscent of Moore's law. Kryder's law, the storage counterpart of Moore's law, likewise describes the amount of available storage space as growing approximately exponentially.

Information transmission

The world's technological capacity to receive information through one-way broadcast networks was 432 exabytes of (optimally compressed) information in 1986; 715 (optimally compressed) exabytes in 1993; 1.2 (optimally compressed) zettabytes in 2000; and 1.9 zettabytes in 2007, the information equivalent of 174 newspapers per person per day.

The world's effective capacity to exchange information through two-way telecommunication networks was 281 petabytes of (optimally compressed) information in 1986; 471 petabytes in 1993; 2.2 (optimally compressed) exabytes in 2000; and 65 (optimally compressed) exabytes in 2007, the information equivalent of six newspapers per person per day. In the 1990s, the spread of the Internet caused a sudden leap in access to and ability to share information in businesses and homes globally. A computer that cost $3000 in 1997 would cost $2000 two years later and $1000 the following year, due to the rapid advancement of technology.

Computation

The world's technological capacity to compute information with human-guided general-purpose computers grew from 3.0 × 10^8 MIPS in 1986 to 4.4 × 10^9 MIPS in 1993, 2.9 × 10^11 MIPS in 2000, and 6.4 × 10^12 MIPS in 2007. An article featured in the journal Trends in Ecology and Evolution in 2016 reported that:

Digital technology has vastly exceeded the cognitive capacity of any single human being and has done so a decade earlier than predicted. In terms of capacity, there are two measures of importance: the number of operations a system can perform and the amount of information that can be stored. The number of synaptic operations per second in a human brain has been estimated to lie between 10^15 and 10^17. While this number is impressive, even in 2007 humanity's general-purpose computers were capable of performing well over 10^18 instructions per second. Estimates suggest that the storage capacity of an individual human brain is about 10^12 bytes. On a per capita basis, this is matched by current digital storage (5x10^21 bytes per 7.2x10^9 people).
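The arithmetic behind this comparison can be checked from the figures quoted above. The only added assumption is the standard definition of 1 MIPS as one million instructions per second, used to convert the 2007 computing capacity into instructions per second.

```python
# Figures taken from the passage above.
storage_bytes_2014 = 5e21      # world digital storage, about 5 zettabytes
population = 7.2e9             # world population used in the quotation
brain_storage_bytes = 1e12     # estimated storage capacity of one human brain
synaptic_ops_high = 1e17       # upper estimate of synaptic operations per second
mips_2007 = 6.4e12             # general-purpose computing capacity in 2007

per_capita_bytes = storage_bytes_2014 / population
instructions_per_second = mips_2007 * 1e6   # 1 MIPS = one million instructions per second

print(f"storage per person: {per_capita_bytes:.2e} bytes (brain estimate {brain_storage_bytes:.0e})")
print(f"2007 computing: {instructions_per_second:.1e} instructions/s "
      f"({instructions_per_second / synaptic_ops_high:.0f}x the upper synaptic estimate)")
```

Storage per person comes out at roughly 7 × 10^11 bytes, the same order of magnitude as the 10^12-byte brain estimate, and 6.4 × 10^12 MIPS is 6.4 × 10^18 instructions per second, consistent with "well over 10^18".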

Genetic information

Genetic code may also be considered part of the information revolution. Now that sequencing has been computerized, genomes can be rendered and manipulated as data. This began with DNA sequencing, invented by Walter Gilbert and Allan Maxam in 1976–1977 and by Frederick Sanger in 1977; grew steadily with the Human Genome Project, initially conceived by Gilbert; and finally led to practical applications of sequencing, such as gene testing, after Myriad Genetics' discovery of the BRCA1 breast cancer gene mutation. Sequence data in GenBank has grown from the 606 genome sequences registered in December 1982 to 231 million genomes in August 2021. An additional 13 trillion incomplete sequences are registered in the Whole Genome Shotgun submission database as of August 2021. The information contained in these registered sequences has doubled every 18 months.

Different stage conceptualizations

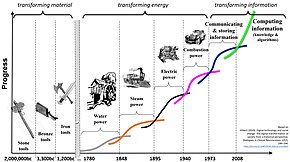

During rare times in human history, there have been periods of innovation that have transformed human life. The Neolithic Age, the Scientific Age and the Industrial Age all, ultimately, induced discontinuous and irreversible changes in the economic, social and cultural elements of the daily life of most people. Traditionally, these epochs have taken place over hundreds, or in the case of the Neolithic Revolution, thousands of years, whereas the Information Age swept to all parts of the globe in just a few years, as a result of the rapidly advancing speed of information exchange.

Between 7,000 and 10,000 years ago, during the Neolithic period, humans began to domesticate animals, to farm grains, and to replace stone tools with ones made of metal. These innovations allowed nomadic hunter-gatherers to settle down. Villages formed along the Yangtze River in China in 6,500 B.C., the Nile River region of Africa and in Mesopotamia (Iraq) in 6,000 B.C. Cities emerged between 6,000 B.C. and 3,500 B.C. The development of written communication (cuneiform in Sumeria and hieroglyphs in Egypt in 3,500 B.C. and writing in Egypt in 2,560 B.C. and in Minoa and China around 1,450 B.C.) enabled ideas to be preserved for extended periods and to spread extensively. In all, Neolithic developments, augmented by writing as an information tool, laid the groundwork for the advent of civilization.

The Scientific Age began in the period between Copernicus's 1543 proposal that the planets orbit the Sun and Newton's publication of the laws of motion and gravity in Principia in 1687. This age of discovery continued through the 18th century, accelerated by widespread use of Johannes Gutenberg's movable-type printing press.

The Industrial Age began in Great Britain in 1760 and continued into the mid-19th century. The invention of machines such as the mechanical textile weaver by Edmund Cartwright, the rotating-shaft steam engine by James Watt, and the cotton gin by Eli Whitney, along with processes for mass manufacturing, came to serve the needs of a growing global population. The Industrial Age harnessed steam and waterpower to reduce the dependence on animal and human physical labor as the primary means of production. Thus, the core of the Industrial Revolution was the generation and distribution of energy from coal and water to produce steam and, later in the 20th century, electricity.

The Information Age also requires electricity to power the global networks of computers that process and store data. However, what dramatically accelerated the pace of the Information Age's adoption, compared with previous ages, was the speed with which knowledge could be transferred, pervading the entire human family in a few short decades. This acceleration came about with the adoption of a new form of power: beginning in 1972, engineers devised ways to harness light to convey data through fiber-optic cable. Today, light-based optical networking systems at the heart of telecom networks and the Internet span the globe and carry most of the information traffic to and from users and data storage systems.

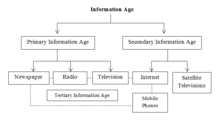

There are different conceptualizations of the Information Age. Some focus on the evolution of information over the ages, distinguishing between the Primary, Secondary, and Tertiary Information Ages. Information in the Primary Information Age was handled by newspapers, radio and television. The Secondary Information Age was driven by the Internet, satellite television and mobile phones. The Tertiary Information Age emerged as media of the Primary Information Age became interconnected with media of the Secondary Information Age, as is presently experienced.

Others classify it in terms of the well-established Schumpeterian long waves or Kondratiev waves. Here authors distinguish three different long-term metaparadigms, each with different long waves. The first focused on the transformation of material, including stone, bronze, and iron. The second, often referred to as industrial revolution, was dedicated to the transformation of energy, including water, steam, electric, and combustion power. Finally, the most recent metaparadigm aims at transforming information. It started out with the proliferation of communication and stored data and has now entered the age of algorithms, which aims at creating automated processes to convert the existing information into actionable knowledge.

Information in social and economic activities

The main feature of the information revolution is the growing economic, social and technological role of information. Information-related activities did not originate with the Information Revolution. They existed, in one form or another, in all human societies, and eventually developed into institutions, such as the Platonic Academy, Aristotle's Peripatetic school in the Lyceum, the Musaeum and the Library of Alexandria, or the schools of Babylonian astronomy. The Agricultural Revolution and the Industrial Revolution arose when new informational inputs were produced by individual innovators, or by scientific and technical institutions. During the Information Revolution all these activities are experiencing continuous growth, while other information-oriented activities are emerging.

Information is the central theme of several new sciences which emerged in the 1940s, including Shannon's (1949) Information Theory and Wiener's (1948) Cybernetics. Wiener stated: "information is information, not matter or energy". This aphorism suggests that information should be considered along with matter and energy as the third constituent part of the Universe; information is carried by matter or by energy. By the 1990s some writers believed that changes implied by the Information Revolution would lead not only to a fiscal crisis for governments but also to the disintegration of all "large structures".

The theory of information revolution

The term information revolution may relate to, or contrast with, such widely used terms as Industrial Revolution and Agricultural Revolution. Note, however, that one may prefer a mentalist to a materialist paradigm. The following fundamental aspects of the theory of information revolution can be given:

- The object of economic activities can be conceptualized according to the fundamental distinction between matter, energy, and information. These apply both to the object of each economic activity, as well as within each economic activity or enterprise. For instance, an industry may process matter (e.g. iron) using energy and information (production and process technologies, management, etc.).

- Information is a factor of production (along with capital, labor, and land), as well as a product sold in the market, that is, a commodity. As such, it acquires use value and exchange value, and therefore a price.

- All products have use value, exchange value, and informational value. The latter can be measured by the information content of the product, in terms of innovation, design, etc.

- Industries develop information-generating activities, the so-called Research and Development (R&D) functions.

- Enterprises, and society at large, develop the information control and processing functions, in the form of management structures; these are also called "white-collar workers", "bureaucracy", "managerial functions", etc.

- Labor can be classified according to the object of labor, into information labor and non-information labor.

- Information activities constitute a large, new economic sector, the information sector, along with the traditional primary sector, secondary sector, and tertiary sector, according to the three-sector hypothesis. These should be restated because they are based on the ambiguous definitions made by Colin Clark (1940), who included in the tertiary sector all activities that have not been included in the primary (agriculture, forestry, etc.) and secondary (manufacturing) sectors. The quaternary sector and the quinary sector of the economy attempt to classify these new activities, but their definitions are not based on a clear conceptual scheme, although the latter is considered by some as equivalent to the information sector.

- From a strategic point of view, sectors can be defined as information sector, means of production, and means of consumption, thus extending the classical Ricardo-Marx model of the Capitalist mode of production (see Influences on Karl Marx). Marx stressed on many occasions the role of the "intellectual element" in production, but failed to find a place for it in his model.

- Innovations are the result of the production of new information, as new products, new methods of production, patents, etc. Diffusion of innovations manifests saturation effects (related term: market saturation), following certain cyclical patterns and creating "economic waves", also referred to as "business cycles". There are various types of waves, such as the Kondratiev wave (54 years), Kuznets swing (18 years), Juglar cycle (9 years) and Kitchin cycle (about 4 years; see also Joseph Schumpeter), distinguished by their nature, duration, and, thus, economic impact.

- Diffusion of innovations causes structural-sectoral shifts in the economy, which can be smooth or can create crisis and renewal, a process which Joseph Schumpeter vividly called "creative destruction".

From a different perspective, Irving E. Fang (1997) identified six 'Information Revolutions': writing, printing, mass media, entertainment, the 'tool shed' (which we call 'home' now), and the information highway. In this work the term 'information revolution' is used in a narrow sense, to describe trends in communication media.

Measuring and modeling the information revolution

Porat (1976) measured the information sector in the US using the input-output analysis; OECD has included statistics on the information sector in the economic reports of its member countries. Veneris (1984, 1990) explored the theoretical, economic and regional aspects of the informational revolution and developed a systems dynamics simulation computer model.

These works can be seen as following the path originated by Fritz Machlup, who, in his 1962 book "The Production and Distribution of Knowledge in the United States", claimed that the "knowledge industry represented 29% of the US gross national product", which he saw as evidence that the Information Age had begun. He defines knowledge as a commodity and attempts to measure the magnitude of the production and distribution of this commodity within a modern economy. Machlup divided information use into three classes: instrumental, intellectual, and pastime knowledge. He also identified five types of knowledge: practical knowledge; intellectual knowledge, that is, general culture and the satisfying of intellectual curiosity; pastime knowledge, that is, knowledge satisfying non-intellectual curiosity or the desire for light entertainment and emotional stimulation; spiritual or religious knowledge; and unwanted knowledge, accidentally acquired and aimlessly retained.

More recent estimates have reached the following results:

- the world's technological capacity to receive information through one-way broadcast networks grew at a sustained compound annual growth rate of 7% between 1986 and 2007;

- the world's technological capacity to store information grew at a sustained compound annual growth rate of 25% between 1986 and 2007;

- the world's effective capacity to exchange information through two-way telecommunication networks grew at a sustained compound annual growth rate of 30% during the same two decades;

- the world's technological capacity to compute information with the help of humanly guided general-purpose computers grew at a sustained compound annual growth rate of 61% during the same period.
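These rates can be reproduced from the 1986 and 2007 endpoints given in the transmission, storage and computation sections, under the assumption (not stated in the source) that each figure is a simple compound annual growth rate over those 21 years. The sketch computes r = (end / start)^(1/21) - 1 for each series; the series labels are illustrative.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate r, so that start * (1 + r) ** years == end."""
    return (end / start) ** (1 / years) - 1


YEARS = 2007 - 1986  # 21 years

# (1986 value, 2007 value) from the figures given earlier in the article;
# both values in each pair use the same unit, so the unit cancels out.
series = {
    "broadcast (EB)": (432, 1_900),    # 432 EB -> 1.9 ZB
    "storage (EB)": (2.6, 295),
    "telecom (PB)": (281, 65_000),     # 281 PB -> 65 EB
    "compute (MIPS)": (3.0e8, 6.4e12),
}

rates = {name: cagr(start, end, YEARS) for name, (start, end) in series.items()}
for name, rate in rates.items():
    print(f"{name:<15} {rate:.1%} per year")
```

Rounded to whole percentages, the results match the 7%, 25%, 30% and 61% quoted above, which suggests the published rates were derived from the same endpoints.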

Economics

Eventually, information and communication technology (ICT), including computers, computerized machinery, fiber optics, communication satellites, the Internet, and other ICT tools, became a significant part of the world economy, as the development of optical networking and microcomputers greatly changed many businesses and industries. Nicholas Negroponte captured the essence of these changes in his 1995 book, Being Digital, in which he discusses the similarities and differences between products made of atoms and products made of bits.

Jobs and income distribution

The Information Age has affected the workforce in several ways, such as compelling workers to compete in a global job market. One of the most evident concerns is the replacement of human labor by computers that can do the same jobs faster and more effectively. As a result, individuals who perform tasks that can easily be automated are forced to find employment where their labor is less easily replaced. This is an especially acute problem in industrial cities, where proposed solutions typically involve reducing working hours, a measure that is often strongly resisted. Individuals who lose their jobs may therefore be pressed to move into less replaceable professions (e.g. engineers, doctors, lawyers, teachers, professors, scientists, executives, journalists, consultants), whose members are able to compete successfully in the world market and receive (relatively) high wages.

Along with automation, jobs traditionally associated with the middle class (e.g. assembly line, data processing, management, and supervision) have also begun to disappear as a result of outsourcing. Unable to compete with those in developing countries, production and service workers in post-industrial (i.e. developed) societies either lose their jobs through outsourcing, accept wage cuts, or settle for low-skill, low-wage service jobs. In the past, the economic fate of individuals was tied to that of their nation. For example, workers in the United States were once well paid in comparison to those in other countries. With the advent of the Information Age and improvements in communication, this is no longer the case: workers must now compete in a global job market, in which wages depend less on the success or failure of individual economies.

In creating a globalized workforce, the internet has also increased opportunity in developing countries, making it possible for workers there to provide services remotely and therefore to compete directly with their counterparts in other nations. This competitive advantage translates into more opportunities and higher wages for those workers.

Automation, productivity, and job gain

The Information Age has affected the workforce in that automation and computerization have resulted in higher productivity coupled with net job loss in manufacturing. In the United States, for example, from January 1972 to August 2010, the number of people employed in manufacturing jobs fell from 17,500,000 to 11,500,000 while manufacturing value rose 270%. Although it initially appeared that job loss in the industrial sector might be partially offset by the rapid growth of jobs in information technology, the recession of March 2001 foreshadowed a sharp drop in the number of jobs in that sector. This decline continued until 2003. Overall, however, data has shown that technology creates more jobs than it destroys, even in the short run.
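The US manufacturing figures above can be combined into a rough change in output per worker. The worked arithmetic below is a sketch that assumes "rose 270%" means the 2010 value was 3.7 times the 1972 value (an increase of 270%) and ignores inflation adjustment; if the figure instead means value reached 270% of its 1972 level, the same calculation gives a ratio of about 4.1.

```latex
\begin{aligned}
\frac{E_{2010} - E_{1972}}{E_{1972}} &= \frac{11.5 - 17.5}{17.5} \approx -34.3\% \\[4pt]
\frac{V_{2010}/E_{2010}}{V_{1972}/E_{1972}} &= 3.7 \times \frac{17.5}{11.5} \approx 5.6
\end{aligned}
```

Here $E$ is manufacturing employment in millions and $V$ is manufacturing value (both symbols introduced only for this illustration). Under that reading, output per manufacturing worker rose more than fivefold while employment fell by about a third.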

Information-intensive industry

Industry has become more information-intensive while less labor- and capital-intensive. This has important implications for the workforce: workers have become increasingly productive even as the value of their labor decreases. For the system of capitalism itself, as the value of labor decreases, the value of capital increases.

In the classical model, investments in human and financial capital are important predictors of the performance of a new venture. However, as demonstrated by Mark Zuckerberg and Facebook, it now seems possible for a group of relatively inexperienced people with limited capital to succeed on a large scale.

Innovations

The Information Age was enabled by technology developed in the Digital Revolution, which was itself enabled by building on the developments of the Technological Revolution.

Transistors

The onset of the Information Age can be associated with the development of transistor technology. The concept of a field-effect transistor was first theorized by Julius Edgar Lilienfeld in 1925. The first practical transistor was the point-contact transistor, invented by the engineers Walter Houser Brattain and John Bardeen while working for William Shockley at Bell Labs in 1947. This was a breakthrough that laid the foundations for modern technology. Shockley's research team also invented the bipolar junction transistor in 1952. The most widely used type of transistor is the metal–oxide–semiconductor field-effect transistor (MOSFET), invented by Mohamed M. Atalla and Dawon Kahng at Bell Labs in 1959 and first presented in 1960. The complementary MOS (CMOS) fabrication process was developed by Frank Wanlass and Chih-Tang Sah in 1963.

Computers

Before the advent of electronics, mechanical computers such as the Analytical Engine, proposed in 1837, were designed to provide routine mathematical calculation and simple decision-making capabilities. Military needs during World War II drove the development of early computers, including the electromechanical, relay-based Z3 and the first electronic computers based on vacuum tubes, such as the Atanasoff–Berry Computer, the Colossus computer, and ENIAC.

The invention of the transistor enabled the era of mainframe computers (1950s–1970s), typified by the IBM System/360. These large, room-sized computers provided data calculation and manipulation that was much faster than humanly possible, but they were expensive to buy and maintain, so they were initially limited to a few scientific institutions, large corporations, and government agencies.

The germanium integrated circuit (IC) was invented by Jack Kilby at Texas Instruments in 1958. The silicon integrated circuit was then invented in 1959 by Robert Noyce at Fairchild Semiconductor, using the planar process developed by Jean Hoerni, who was in turn building on Mohamed Atalla's silicon surface passivation method developed at Bell Labs in 1957. Following the invention of the MOS transistor by Mohamed Atalla and Dawon Kahng at Bell Labs in 1959, the MOS integrated circuit was developed by Fred Heiman and Steven Hofstein at RCA in 1962. The silicon-gate MOS IC was later developed by Federico Faggin at Fairchild Semiconductor in 1968. With the advent of the MOS transistor and the MOS IC, transistor technology rapidly improved, and the ratio of computing power to size increased dramatically, putting computers within direct reach of ever smaller groups of people.

The first commercial single-chip microprocessor, the Intel 4004, launched in 1971. It was developed by Federico Faggin using his silicon-gate MOS IC technology, along with Marcian Hoff, Masatoshi Shima and Stan Mazor.

Along with electronic arcade machines and home video game consoles pioneered by Nolan Bushnell in the 1970s, the development of personal computers like the Commodore PET and Apple II (both in 1977) gave individuals access to computers. However, data sharing between individual computers was either non-existent or largely manual, at first using punched cards and magnetic tape, and later floppy disks.

Data

The earliest developments in data storage were based on photography, starting with microphotography in 1851 and then microform in the 1920s, which made it possible to store documents on film in a much more compact form. Early information theory and Hamming codes were developed around 1950, but awaited technical innovations in data transmission and storage to be put to full use.

Magnetic-core memory was developed from the research of Frederick W. Viehe in 1947 and An Wang at Harvard University in 1949. With the advent of the MOS transistor, MOS semiconductor memory was developed by John Schmidt at Fairchild Semiconductor in 1964. In 1967, Dawon Kahng and Simon Sze at Bell Labs described how the floating gate of an MOS semiconductor device could be used for the cell of a reprogrammable ROM. Following the invention of flash memory by Fujio Masuoka at Toshiba in 1980, Toshiba commercialized NAND flash memory in 1987.

Copper wire cables carrying digital data between computer terminals, peripherals and mainframes, along with special message-sharing systems that led to email, were first developed in the 1960s. Independent computer-to-computer networking began with ARPANET in 1969. This expanded to become the Internet (a term coined in 1974). Access to the Internet improved with the invention of the World Wide Web in 1991. In the mid-1990s, the capacity expansion from dense wavelength-division multiplexing, optical amplification and optical networking led to record data transfer rates. By 2018, optical networks routinely delivered 30.4 terabits/s over a fiber optic pair, the data equivalent of 1.2 million simultaneous 4K HD video streams.
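The two 2018 figures above imply a bit rate per video stream. The division below is only a consistency check on those quoted numbers and assumes every stream uses the same bit rate.

```latex
\frac{30.4\ \text{Tbit/s}}{1.2 \times 10^{6}\ \text{streams}}
  = \frac{30.4 \times 10^{12}\ \text{bit/s}}{1.2 \times 10^{6}}
  \approx 2.53 \times 10^{7}\ \text{bit/s}
  \approx 25\ \text{Mbit/s per stream}
```

In other words, the comparison treats each 4K stream as occupying roughly 25 Mbit/s of the fiber pair's capacity.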

MOSFET scaling, the rapid miniaturization of MOSFETs at a rate predicted by Moore's law, led to computers becoming smaller and more powerful, to the point where they could be carried. During the 1980s–1990s, laptops were developed as a form of portable computer, and personal digital assistants (PDAs) could be used while standing or walking. Pagers, widely used by the 1980s, were largely replaced by mobile phones beginning in the late 1990s, providing mobile networking features to some computers. Now commonplace, this technology has been extended to digital cameras and other wearable devices. Starting in the late 1990s, tablets and then smartphones combined and extended these abilities of computing, mobility, and information sharing. Metal–oxide–semiconductor (MOS) image sensors, which first appeared in the late 1960s, led to the transition from analog to digital imaging, and from analog to digital cameras, during the 1980s–1990s. The most common image sensors are the charge-coupled device (CCD) sensor and the CMOS active-pixel sensor (CMOS sensor).

Electronic paper, which has origins in the 1970s, allows digital information to appear as paper documents.

Personal computers

By 1976, there were several firms racing to introduce the first truly successful commercial personal computers. Three machines, the Apple II, the Commodore PET 2001 and the TRS-80, were all released in 1977 and had become the most popular by late 1978. Byte magazine later referred to Commodore, Apple, and Tandy as the "1977 Trinity". Also in 1977, Sord Computer Corporation released the Sord M200 Smart Home Computer in Japan.

Apple II

Steve Wozniak (known as "Woz"), a regular visitor to Homebrew Computer Club meetings, designed the single-board Apple I computer and first demonstrated it there. With specifications in hand and an order for 100 machines at US$500 each from the Byte Shop, Woz and his friend Steve Jobs founded Apple Computer.

About 200 of the machines sold before the company announced the Apple II as a complete computer. It had color graphics, a full QWERTY keyboard, and internal expansion slots, all housed in a high-quality, streamlined plastic case. The monitor and I/O devices were sold separately. The original Apple II operating system consisted only of the BASIC interpreter built into ROM. Apple DOS was added to support the diskette drive; the last version was "Apple DOS 3.3".

Its higher price and lack of floating-point BASIC, along with a lack of retail distribution sites, caused it to lag in sales behind the other Trinity machines until 1979, when it surpassed the PET. It was pushed back into fourth place when Atari, Inc. introduced its Atari 8-bit computers.

Despite slow initial sales, the Apple II series remained in production about eight years longer than the other machines, and so it accumulated the highest total sales. By 1985, 2.1 million had been sold, and more than 4 million Apple IIs had been shipped by the end of production in 1993.

Optical networking

Optical communication plays a crucial role in communication networks: it provides the transmission backbone for the telecommunications and computer networks that underlie the Internet, the foundation for the Digital Revolution and Information Age.

The two core technologies are the optical fiber and light amplification (the optical amplifier). In 1953, Bram van Heel demonstrated image transmission through bundles of optical fibers with a transparent cladding. The same year, Harold Hopkins and Narinder Singh Kapany at Imperial College succeeded in making image-transmitting bundles with over 10,000 optical fibers, and subsequently achieved image transmission through a 75 cm long bundle which combined several thousand fibers.

Gordon Gould invented the optical amplifier and the laser, and also established the first optical telecommunications company, Optelecom, to design communication systems. The firm was a co-founder of Ciena Corp., the venture that popularized the optical amplifier with the introduction of the first dense wavelength-division multiplexing system. This massive-scale communication technology has emerged as the common basis of all telecommunication networks and, thus, a foundation of the Information Age.

Economy, society and culture

Manuel Castells captures the significance of the Information Age in The Information Age: Economy, Society and Culture when he writes of our global interdependence and the new relationships between economy, state and society, what he calls "a new society-in-the-making." He cautions that the fact that humans have dominated the material world does not mean that the Information Age is the end of history:

"It is in fact, quite the opposite: history is just beginning, if by history we understand the moment when, after millennia of a prehistoric battle with Nature, first to survive, then to conquer it, our species has reached the level of knowledge and social organization that will allow us to live in a predominantly social world. It is the beginning of a new existence, and indeed the beginning of a new age, The Information Age, marked by the autonomy of culture vis-à-vis the material basis of our existence."

Thomas Chatterton Williams wrote about the dangers of anti-intellectualism in the Information Age in a piece for The Atlantic. Although access to information has never been greater, most information is irrelevant or insubstantial. The Information Age's emphasis on speed over expertise contributes to a "superficial culture in which even the elite will openly disparage as pointless our main repositories for the very best that has been thought."