Self-replication is any behavior of a dynamical system that yields the construction of an identical copy of itself. Biological cells, given suitable environments, reproduce by cell division. During cell division, DNA is replicated and can be transmitted to offspring during reproduction. Biological viruses can replicate, but only by commandeering the reproductive machinery of cells through a process of infection. Harmful prion proteins can replicate by converting normal proteins into rogue forms. Computer viruses reproduce using the hardware and software already present on computers. Self-replication in robotics has been an area of research and a subject of interest in science fiction. Any self-replicating mechanism which does not make a perfect copy will experience genetic variation and will create variants of itself. These variants will be subject to natural selection, since some will be better at surviving in their current environment than others and will outbreed them.

Overview

Theory

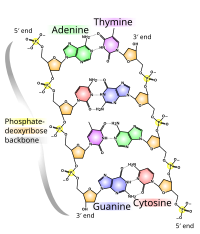

Early research by John von Neumann[2] established that replicators have several parts:

- A coded representation of the replicator

- A mechanism to copy the coded representation

- A mechanism for effecting construction within the host environment of the replicator

However, the simplest possible case is one in which only a genome exists. Without some specification of the self-reproducing steps, a genome-only system is probably better characterized as something like a crystal.
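The three parts listed above can be illustrated with a toy model. This is a minimal sketch under invented assumptions, not von Neumann's cellular-automaton construction: a "machine" is just a tuple of part names, the environment is a hypothetical bin of spare parts, and the names `Machine`, `construct`, `copy_tape` and `replicate` are made up for illustration. The point it shows is that the coded representation (the tape) is used twice: once interpreted by the constructor to build a body, and once copied uninterpreted so the child carries its own description.

```python
from dataclasses import dataclass

# Hypothetical toy model: a machine's "body" is just the tuple of parts it is built from.
BLUEPRINT = ("constructor", "copier", "controller")

@dataclass
class Machine:
    parts: tuple       # the physical body
    tape: tuple = ()   # (1) the coded representation the machine carries

def construct(tape, environment):
    """(3) Constructor: interpret the tape, taking each listed part from the environment."""
    for part in tape:
        environment.remove(part)  # raises ValueError if a needed part is missing
    return Machine(parts=tuple(tape))

def copy_tape(tape):
    """(2) Copier: duplicate the tape without interpreting it."""
    return tuple(tape)

def replicate(parent, environment):
    """Build a child from the parent's description, then give the child a copy of it."""
    child = construct(parent.tape, environment)
    child.tape = copy_tape(parent.tape)
    return child

parts_bin = list(BLUEPRINT) * 2                 # enough spare parts for two copies
parent = Machine(parts=BLUEPRINT, tape=BLUEPRINT)
child = replicate(parent, parts_bin)
print(child == parent)                          # True
```

Calling `copy_tape` on its own duplicates the description but builds no body, which corresponds to the genome-only case that the paragraph above compares to a crystal.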

Classes of self-replication

Recent research[3] has begun to categorize replicators, often based on the amount of support they require.

- Natural replicators have all or most of their design from nonhuman sources. Such systems include natural life forms.

- Autotrophic replicators can reproduce themselves "in the wild". They mine their own materials. It is conjectured that non-biological autotrophic replicators could be designed by humans, and could easily accept specifications for human products.

- Self-reproductive systems are conjectured systems which would produce copies of themselves from industrial feedstocks such as metal bar and wire.

- Self-assembling systems assemble copies of themselves from finished, delivered parts. Simple examples of such systems have been demonstrated at the macro scale.

A self-replicating computer program

In computer science, a quine is a self-reproducing computer program that, when executed, outputs its own code. For example, a quine in the Python programming language (Python 3 syntax) is:

a='a=%r;print(a%%a)';print(a%a)

In many programming languages an empty program is legal, and executes without producing errors or other output. The output is thus the same as the source code, so the program is trivially self-reproducing.
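Whether a program is a quine can be checked mechanically by running it and comparing its output with its source. The sketch below assumes the Python 3 spelling of the quine, `a='a=%r;print(a%%a)';print(a%a)`, in which `print` is a function; the helper names `run_and_capture` and `is_quine` are hypothetical. Because `print` appends a newline, the check ignores one trailing newline in the output, and it needs Python 3.9 or later for `str.removesuffix`.

```python
import contextlib
import io

QUINE = "a='a=%r;print(a%%a)';print(a%a)"

def run_and_capture(source: str) -> str:
    """Execute Python source in a fresh namespace and return everything it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()

def is_quine(source: str) -> bool:
    """True if running `source` prints exactly `source` (ignoring one trailing newline)."""
    return run_and_capture(source).removesuffix("\n") == source

print(is_quine(QUINE))               # True
print(is_quine(""))                  # True: the trivial empty program
print(is_quine("print('hello')"))    # False
```

The empty string passes the same test as the real quine, which is exactly the trivial case described above.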

Self-replicating tiling

In geometry, a self-replicating tiling is a tiling pattern in which several congruent tiles may be joined together to form a larger tile that is similar to the original. This is an aspect of the field of study known as tessellation. The "sphinx" hexiamond is the only known self-replicating pentagon.[5] Four such concave pentagons can be joined together to make one with twice the dimensions.[6] Solomon W. Golomb coined the term rep-tiles for self-replicating tilings.

In 2012, Lee Sallows identified rep-tiles as a special instance of a self-tiling tile set, or setiset. A setiset of order n is a set of n shapes that can be assembled in n different ways so as to form larger replicas of themselves. Setisets in which every shape is distinct are called 'perfect'. A rep-n rep-tile is simply a setiset composed of n identical pieces.

Four 'sphinx' hexiamonds can be put together to form another sphinx.

A perfect setiset of order 4
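The rep-tile property can be verified by search. The sphinx is awkward to encode on a square grid, so this sketch swaps in a simpler, well-known rep-4 tile: the L-tromino (three unit squares in an L shape). It doubles the tile's size and uses a small backtracking search to cover the enlarged shape exactly with rotated or reflected copies of the original. The function names are hypothetical, and the search is a plain exact-cover backtracker, not a method taken from the sources cited above.

```python
from itertools import product

# The L-tromino: three unit squares forming an "L".
L_TROMINO = {(0, 0), (1, 0), (0, 1)}

def scale(cells, k):
    """Enlarge a polyomino by an integer factor k (each cell becomes a k-by-k block)."""
    return {(k * x + dx, k * y + dy)
            for (x, y) in cells for dx, dy in product(range(k), repeat=2)}

def orientations(cells):
    """All rotations and reflections of a polyomino, shifted so min x and min y are 0."""
    shapes = set()
    pts = list(cells)
    for _ in range(4):
        pts = [(y, -x) for x, y in pts]                      # rotate by 90 degrees
        for variant in (pts, [(-x, y) for x, y in pts]):     # with and without mirroring
            mx = min(x for x, _ in variant)
            my = min(y for _, y in variant)
            shapes.add(frozenset((x - mx, y - my) for x, y in variant))
    return shapes

def tile(region, shapes):
    """Backtracking exact cover of `region` by copies of `shapes`; None if impossible."""
    if not region:
        return []
    target = min(region)          # the smallest uncovered cell must be covered by some piece
    for shape in shapes:
        for ax, ay in shape:      # try each cell of the shape as the one landing on target
            piece = {(x - ax + target[0], y - ay + target[1]) for x, y in shape}
            if piece <= region:
                rest = tile(region - piece, shapes)
                if rest is not None:
                    return [piece] + rest
    return None

big = scale(L_TROMINO, 2)                    # the tromino at twice the size (12 cells)
pieces = tile(big, orientations(L_TROMINO))
print(len(pieces))                           # 4 copies tile the doubled shape: rep-4
```

Always covering the smallest uncovered cell keeps the search from trying the same tiling in different orders, which is enough for shapes this small.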

Applications

It is a long-term goal of some engineering sciences to achieve a clanking replicator, a material device that can self-replicate. The usual reason is to achieve a low cost per item while retaining the utility of a manufactured good. Many authorities argue that, in the limit, the cost of self-replicating items should approach the cost per unit weight of wood or other biological substances, because self-replication avoids the labor, capital and distribution costs of conventional manufactured goods.

A fully novel artificial replicator is a reasonable near-term goal. A NASA study placed the complexity of a clanking replicator at approximately that of Intel's Pentium 4 CPU.[7] That is, the technology would be achievable by a relatively small engineering group on a reasonable commercial time-scale at a reasonable cost.
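The cost argument above rests on exponential growth. A deliberately simplified model makes the arithmetic concrete: assume every existing unit builds one copy per cycle, that each copy costs only its raw material, and that the seed replicator's cost is paid once. The function names and the seed and material prices are hypothetical placeholders, not figures from any study.

```python
def cycles_to_reach(target_units: int, copies_per_cycle: int = 1) -> int:
    """Replication cycles for one seed to grow to at least target_units,
    if every existing unit builds `copies_per_cycle` new units per cycle."""
    units, cycles = 1, 0
    while units < target_units:
        units *= 1 + copies_per_cycle
        cycles += 1
    return cycles

def average_unit_cost(seed_cost: float, material_cost: float, units: int) -> float:
    """Average cost per unit: the seed is paid for once, every copy costs only its material."""
    return (seed_cost + (units - 1) * material_cost) / units

print(cycles_to_reach(1_000_000))                       # 20
print(average_unit_cost(1_000_000, 50, 2**20))          # about 50.95
```

With these invented prices, a million units take 20 doubling cycles, and the average cost per unit is already within about one unit of currency of the material cost alone. Real replicators would also pay for energy, failed copies and maintenance, which this model ignores.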

Given the currently keen interest in biotechnology and the high levels of funding in that field, attempts to exploit the replicative ability of existing cells are timely, and may easily lead to significant insights and advances.

A variation of self-replication is of practical relevance in compiler construction, where a bootstrapping problem similar to that of natural self-replication occurs. A compiler (phenotype) can be applied to the compiler's own source code (genotype), producing the compiler itself. During compiler development, a modified (mutated) source is used to create the next generation of the compiler. This process differs from natural self-replication in that it is directed by an engineer, not by the subject itself.
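The genotype/phenotype loop can be shown with a toy. This sketch is not a real compiler: the "source language" is Python, and "compiling" merely strips full-line comments and blank lines. `COMPILER_SOURCE` plays the genotype, `load` (which executes source to obtain a callable) plays the step that turns it into a working phenotype, and all names are hypothetical. The final comparison mirrors the stage-comparison check that real compiler bootstraps commonly perform: compiling the source with the compiler's own output should give the same result again.

```python
# Toy model, not a real compiler: "compiling" strips full-line comments and blank lines.
COMPILER_SOURCE = '''\
# Toy compiler: drops full-line comments and blank lines.
def compile_src(text):
    # Keep only the lines that carry code.
    kept = [ln for ln in text.splitlines()
            if ln.strip() and not ln.lstrip().startswith("#")]
    return "\\n".join(kept) + "\\n"
'''

def load(compiled_source):
    """Phenotype: turn (compiled) compiler source into a callable compiler."""
    namespace = {}
    exec(compiled_source, namespace)
    return namespace["compile_src"]

def bootstrap(source):
    """Compile `source` with itself, then with the result; report whether it is stable."""
    stage1_compiler = load(source)            # stage 0: run the hand-written source
    stage1_output = stage1_compiler(source)   # the compiler applied to its own source
    stage2_compiler = load(stage1_output)     # next generation, built by the compiler
    stage2_output = stage2_compiler(source)
    return stage1_output, stage1_output == stage2_output

compiled, stable = bootstrap(COMPILER_SOURCE)
print(stable)                                 # True: the bootstrap reached a fixed point
```

Editing `COMPILER_SOURCE` and bootstrapping again corresponds to the "mutated" source that produces the next generation of the compiler.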

Mechanical self-replication

One area of robotics research is the self-replication of machines. Since all robots (at least in modern times) share a fair number of the same features, a self-replicating robot (or possibly a hive of robots) would need to do the following:

- Obtain construction materials

- Manufacture new parts including its smallest parts and thinking apparatus

- Provide a consistent power source

- Program the new members

- Correct any errors in the offspring
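The last two steps, programming the new members and correcting errors in the offspring, can be combined in software. One assumed strategy, not one prescribed by the text, is to copy the parent's program over a noisy channel and retry until the copy's SHA-256 digest matches the parent's. The noise model (random bit flips at a hypothetical `error_rate`) and the function names are illustrative assumptions.

```python
import hashlib
import random

def noisy_copy(program: bytes, error_rate: float, rng: random.Random) -> bytes:
    """Copy `program` byte by byte; each byte has probability `error_rate` of one bit flip."""
    copy = bytearray(program)
    for i in range(len(copy)):
        if rng.random() < error_rate:
            copy[i] ^= 1 << rng.randrange(8)
    return bytes(copy)

def program_offspring(parent_program: bytes, error_rate: float,
                      rng: random.Random, max_attempts: int = 100):
    """Load the parent's program into an offspring, retrying until the copy's
    SHA-256 digest matches the parent's. Returns (program, attempts used)."""
    expected = hashlib.sha256(parent_program).digest()
    for attempt in range(1, max_attempts + 1):
        candidate = noisy_copy(parent_program, error_rate, rng)
        if hashlib.sha256(candidate).digest() == expected:
            return candidate, attempt
    raise RuntimeError("no verified copy within max_attempts")

rng = random.Random(42)
parent = bytes(range(64))
child, attempts = program_offspring(parent, error_rate=0.01, rng=rng)
print(child == parent)    # True: only verified copies are accepted
```

Retrying is the simplest form of correction; a real design might instead use error-correcting codes so that a damaged copy can be repaired rather than discarded.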

The Foresight Institute has published guidelines for researchers in mechanical self-replication.[8] The guidelines recommend that researchers use several specific techniques for preventing mechanical replicators from getting out of control, such as using a broadcast architecture.
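In a broadcast architecture, as the term is generally described in this context, replicators do not store their own complete instructions; they receive them from a central transmitter, so switching the transmitter off halts replication. The sketch below models only that control property. The classes `Broadcaster` and `Replicator`, the doubling-per-cycle schedule, and `run_cycles` are hypothetical simplifications, not the Foresight guidelines' own specification.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Broadcaster:
    """Central transmitter holding the only copy of the build instructions (hypothetical)."""
    instructions: str
    transmitting: bool = True

    def receive(self) -> Optional[str]:
        return self.instructions if self.transmitting else None

class Replicator:
    """A unit that stores no instructions of its own."""

    def replicate(self, channel: Broadcaster) -> Optional["Replicator"]:
        if channel.receive() is None:
            return None        # nothing received, so nothing can be built
        return Replicator()    # a real unit would follow the received instructions here

def run_cycles(population, channel, cycles):
    """Let every unit attempt one replication per cycle."""
    for _ in range(cycles):
        offspring = [r.replicate(channel) for r in population]
        population = population + [child for child in offspring if child is not None]
    return population

channel = Broadcaster("build plan")
units = run_cycles([Replicator()], channel, 3)
print(len(units))                                 # 8
channel.transmitting = False
print(len(run_cycles(units, channel, 5)))         # still 8: growth stops with the broadcast
```

Because no unit carries the plan, a unit that escapes the transmitter's range cannot found a new population on its own.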

For a detailed article on mechanical reproduction as it relates to the industrial age see mass production.

Fields

Research has occurred in the following areas:

- Biology studies natural replication and replicators, and their interaction. These can be an important guide to avoiding design difficulties in self-replicating machinery.

- In chemistry, self-replication studies typically examine how a specific set of molecules can act together to replicate each other within the set,[9] often as part of the field of systems chemistry.

- Memetics studies ideas and how they propagate in human culture. Memes require only small amounts of material, and therefore have theoretical similarities to viruses and are often described as viral.

- Nanotechnology, or more precisely molecular nanotechnology, is concerned with making nanoscale assemblers. Without self-replication, the capital and assembly costs of molecular machines become impossibly large.

- Space resources: NASA has sponsored a number of design studies to develop self-replicating mechanisms to mine space resources. Most of these designs include computer-controlled machinery that copies itself.

- Computer security: Many computer security problems are caused by self-reproducing computer programs that infect computers — computer worms and computer viruses.

- In parallel computing, manually loading a new program on every node of a large computer cluster or distributed computing system takes a long time. Loading new programs automatically using mobile agents can save the system administrator a lot of time and give users their results much more quickly, as long as the agents do not get out of control.
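The parallel-computing case can be sketched as a self-copying agent: each node that receives the program forwards a copy to its neighbours, and a membership check keeps the agent from spreading to machines outside the cluster. The binary-tree topology, the `neighbors` callback and the cluster-membership safeguard are illustrative assumptions, not a description of any particular mobile-agent system.

```python
from collections import deque

def distribute(cluster, start, program, neighbors):
    """Spread `program` from `start` to every reachable node of `cluster`.

    Each newly loaded node forwards a copy to its neighbours. Nodes outside
    `cluster` are never loaded, which keeps the agent from getting out of control.
    """
    installed = {start: program}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbors(node):
            if nxt in cluster and nxt not in installed:
                installed[nxt] = installed[node]   # the agent copies itself onward
                queue.append(nxt)
    return installed

# Hypothetical 15-node cluster wired as a binary tree: node i links to 2i+1 and 2i+2.
cluster = set(range(15))
loaded = distribute(cluster, 0, "job-v2", lambda i: [2 * i + 1, 2 * i + 2])
print(sorted(loaded) == sorted(cluster))   # True: every node received the program
```

The administrator loads one node by hand and the copies do the rest; the `nxt in cluster` test is the simplest possible guard against runaway spread.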

In industry

Space exploration and manufacturing

The goal of self-replication in space systems is to exploit large amounts of matter with a low launch mass. For example, an autotrophic self-replicating machine could cover a moon or planet with solar cells, and beam the power to the Earth using microwaves. Once in place, the same machinery that built itself could also produce raw materials or manufactured objects, including transportation systems to ship the products. Another model of self-replicating machine would copy itself through the galaxy and universe, sending information back.

In general, since these systems are autotrophic, they are the most difficult and complex known replicators. They are also thought to be the most hazardous, because they do not require any inputs from human beings in order to reproduce.

A classic theoretical study of replicators in space is the 1980 NASA study of autotrophic clanking replicators, edited by Robert Freitas.[10]

Much of the design study was concerned with a simple, flexible chemical system for processing lunar regolith, and with the mismatch between the ratios of elements needed by the replicator and the ratios available in regolith. The limiting element was chlorine, an essential element for extracting aluminium from regolith. Chlorine is very rare in lunar regolith, and a substantially faster rate of reproduction could be assured by importing modest amounts.
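The idea of a limiting element can be expressed as a short calculation: for each element, divide the amount available by the amount one replica consumes, and the smallest quotient bounds how many replicas can be built. The element list and all masses below are invented placeholders chosen only to show the effect, not figures from the NASA study, and treating chlorine as consumed per replica (for example through reagent losses) is an assumption of the model.

```python
def limiting_element(needed_per_replica, available):
    """Return (element, replicas) for the element that supports the fewest replicas.

    needed_per_replica: kg of each element consumed to build one replica
    available: kg of each element the system can obtain
    """
    supportable = {
        element: available.get(element, 0.0) / kg
        for element, kg in needed_per_replica.items()
        if kg > 0
    }
    element = min(supportable, key=supportable.get)
    return element, supportable[element]

# Hypothetical numbers for illustration only.
needed = {"Al": 100.0, "Fe": 50.0, "Cl": 2.0}
local = {"Al": 10_000.0, "Fe": 8_000.0, "Cl": 20.0}
print(limiting_element(needed, local))                     # ('Cl', 10.0)

# Importing a modest 200 kg of chlorine moves the bottleneck to aluminium.
print(limiting_element(needed, {**local, "Cl": 220.0}))    # ('Al', 100.0)
```

In this toy case a small chlorine import raises the number of buildable replicas tenfold, which is the kind of effect the design study describes.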

The reference design specified small computer-controlled electric carts running on rails. Each cart could have a simple hand or a small bulldozer shovel, forming a basic robot.

Power would be provided by a "canopy" of solar cells supported on pillars. The other machinery could run under the canopy.

A "casting robot" would use a robotic arm with a few sculpting tools to make plaster molds. Plaster molds are easy to make, and make precise parts with good surface finishes. The robot would then cast most of the parts either from non-conductive molten rock (basalt) or purified metals. An electric oven would melt the materials.

A speculative, more complex "chip factory" was specified to produce the computer and electronic systems, but the designers also said that it might prove practical to ship the chips from Earth as if they were "vitamins".

Molecular manufacturing

Nanotechnologists in particular believe that their work will likely fail to reach a state of maturity until human beings design a self-replicating assembler of nanometer dimensions [1].

These systems are substantially simpler than autotrophic systems, because they are provided with purified feedstocks and energy; they do not have to produce these inputs themselves. This distinction is at the root of some of the controversy about whether molecular manufacturing is possible. Many authorities who find it impossible are clearly citing sources on complex autotrophic self-replicating systems, while many of those who find it possible are clearly citing sources on much simpler self-assembling systems, which have been demonstrated. In the meantime, a Lego-built autonomous robot able to follow a pre-set track and assemble an exact copy of itself, starting from four externally provided components, was demonstrated experimentally in 2003 [2].

Merely exploiting the replicative abilities of existing cells is insufficient, because of limitations in the process of protein biosynthesis (also see the listing for RNA). What is required is the rational design of an entirely novel replicator with a much wider range of synthesis capabilities.

In 2011, New York University scientists developed artificial structures that can self-replicate, a process that has the potential to yield new types of materials. They demonstrated that it is possible to replicate not just molecules like cellular DNA or RNA, but discrete structures that could in principle assume many different shapes, have many different functional features, and be associated with many different types of chemical species.[11][12]

For a discussion of other chemical bases for hypothetical self-replicating systems, see alternative biochemistry.