| Transcranial magnetic stimulation | |

|---|---|

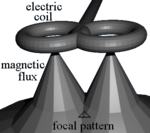

Transcranial magnetic stimulation (schematic diagram)

|

|

| MeSH | D050781 |

TMS is used diagnostically to measure the connection between the central nervous system and skeletal muscle to evaluate damage in a wide variety of disease states, including stroke, multiple sclerosis, amyotrophic lateral sclerosis, movement disorders, and motor neuron diseases.[3]

Evidence suggests it is useful for neuropathic pain[4] and treatment-resistant major depressive disorder.[4][5] A 2015 Cochrane review found that there was not enough evidence to determine its effectiveness in treating schizophrenia.[6] For negative symptoms another review found possible efficacy.[4] As of 2014, all other investigated uses of repetitive TMS have only possible or no clinical efficacy.[4]

Matching the discomfort of TMS to distinguish true effects from placebo is an important and challenging issue that influences the results of clinical trials.[4][7][8][9] Adverse effects of TMS are uncommon, and include fainting and rarely seizure.[7] Other adverse effects of TMS include discomfort or pain, hypomania, cognitive changes, hearing loss, and inadvertent current induction in implanted devices such as pacemakers or defibrillators.[7]

Medical uses

The use of TMS can be divided into diagnostic and therapeutic uses.

Diagnosis

TMS can be used clinically to measure activity and function of specific brain circuits in humans.[3] The most robust and widely accepted use is in measuring the connection between the primary motor cortex and a muscle to evaluate damage from stroke, multiple sclerosis, amyotrophic lateral sclerosis, movement disorders, motor neuron disease, and injuries and other disorders affecting the facial and other cranial nerves and the spinal cord.[3][10][11][12] TMS has been suggested as a means of assessing short-interval intracortical inhibition (SICI), which measures the internal pathways of the motor cortex, but this use has not yet been validated.[13]

Treatment

For neuropathic pain, for which there is little effective treatment, high-frequency (HF) repetitive TMS (rTMS) appears effective.[4] For treatment-resistant major depressive disorder (MDD), HF-rTMS of the left dorsolateral prefrontal cortex (DLPFC) appears effective and low-frequency (LF) rTMS of the right DLPFC has probable efficacy.[4][5] The Royal Australian and New Zealand College of Psychiatrists has endorsed rTMS for treatment-resistant MDD.[14] In October 2008, the US Food and Drug Administration authorized the use of rTMS as an effective treatment for clinical depression.[15]

Adverse effects

Although TMS is generally regarded as safe, risks increase for therapeutic rTMS compared to single or paired TMS for diagnostic purposes.[16] In the field of therapeutic TMS, risks increase with higher frequencies.[7] The greatest immediate risk is the rare occurrence of syncope (fainting) and, even less commonly, induced seizures.[7][17]

Other adverse short-term effects of TMS include discomfort or pain, transient induction of hypomania, transient cognitive changes, transient hearing loss, transient impairment of working memory, and induced currents in electrical circuits in implanted devices.[7]

Devices and procedure

During a transcranial magnetic stimulation (TMS) procedure, a magnetic field generator, or "coil", is placed near the head of the person receiving the treatment.[1]:3 The coil produces small electric currents in the region of the brain just under the coil via electromagnetic induction. The coil is positioned by finding anatomical landmarks on the skull including, but not limited to, the inion or the nasion.[18] The coil is connected to a pulse generator, or stimulator, that delivers electric current to the coil.[2]

Most devices provide a shallow magnetic field that affects neurons mostly on the surface of the brain, delivered with a coil shaped like the number eight. Some devices can provide magnetic fields that penetrate deeper and are used for "deep TMS"; these have different types of coils, including the H-coil, the C-core coil, and the circular crown coil. As of 2013, the H-coil used in devices made by Brainsway was the most developed.[19]

Theta-burst stimulation

Theta-burst stimulation (TBS) is a popular protocol in transcranial magnetic stimulation, in contrast to stimulation patterns based on other neural oscillations (e.g. alpha-burst). It was originally described by Huang in 2005.[20] The protocol has been used clinically for multiple types of disorders. One example, for major depressive disorder with stimulation of both the right and left dorsolateral prefrontal cortex (DLPFC), is as follows: the left DLPFC is stimulated intermittently (iTBS) while the right is inhibited via continuous stimulation (cTBS). In the theta-burst stimulation pattern, 3 pulses are administered at 50 Hz, every 200 ms. In the intermittent theta-burst stimulation pattern (iTBS), a 2-second train of TBS is repeated every 10 s for a total of 190 s (600 pulses). In the continuous theta-burst stimulation paradigm (cTBS), a 40 s train of uninterrupted TBS is given (600 pulses).

In a March 2015 publication, Bakker[21] reported that, among 185 patients evenly divided between the standard 10 Hz protocol (30 min) and theta-burst stimulation, the outcome (reduction of Ham-D and BDI scores) was the same.
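The pulse counts quoted for the iTBS and cTBS patterns can be checked with a short calculation. The following is a minimal sketch in Python; the function names are hypothetical, and it assumes that the 190 s figure refers to the onset time of the last iTBS train, counted from the start of the first.

```python
PULSES_PER_BURST = 3       # each burst: 3 pulses delivered at 50 Hz
BURST_INTERVAL_MS = 200    # a new burst starts every 200 ms


def pulses_in_train(train_ms: int) -> int:
    """Pulses delivered by an uninterrupted TBS train of the given length."""
    bursts = train_ms // BURST_INTERVAL_MS
    return bursts * PULSES_PER_BURST


def itbs_schedule(total_pulses: int = 600,
                  train_ms: int = 2000,
                  cycle_ms: int = 10_000) -> tuple[int, float]:
    """Return (number of trains, onset of the last train in seconds).

    Assumption: trains start at 0 s, 10 s, 20 s, ... until the pulse
    total is reached.
    """
    per_train = pulses_in_train(train_ms)
    n_trains = total_pulses // per_train
    last_onset_s = (n_trains - 1) * cycle_ms / 1000
    return n_trains, last_onset_s


if __name__ == "__main__":
    print(pulses_in_train(2000))    # pulses in one 2 s iTBS train
    print(itbs_schedule())          # trains needed for 600 pulses, last onset
    print(pulses_in_train(40_000))  # pulses in one 40 s cTBS train
```

Under these assumptions, one 2 s train holds 10 bursts (30 pulses), so 20 trains give 600 pulses with the last train starting at 190 s, and a single 40 s cTBS train holds 200 bursts (600 pulses).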

Society and culture

Regulatory approvals

Neurosurgery planning

Nexstim obtained 510(k) FDA clearance for the assessment of the primary motor cortex for pre-procedural planning in December 2009[22] and for neurosurgical planning in June 2011.[23]

Depression

A number of deep TMS devices have received FDA 510(k) clearance to market for use in adults with treatment-resistant major depressive disorder.[24][25][26][27][28]

Migraine

The use of single-pulse TMS was approved by the FDA for treatment of migraines in December 2013.[29] It is approved as a Class II medical device under the "de novo pathway".[30][31]

Others

In the European Economic Area, various versions of Deep TMS H-coils have CE marking for Alzheimer's disease,[32] autism,[32] bipolar disorder,[33] epilepsy,[34] chronic pain,[33] major depressive disorder,[33] Parkinson's disease,[33][35] posttraumatic stress disorder (PTSD),[33] schizophrenia (negative symptoms),[33] and to aid smoking cessation.[32] One review found tentative benefit for cognitive enhancement in healthy people.[36]

Health insurance

United States

Commercial health insurance

In 2013, several commercial health insurance plans in the United States, including Anthem, Health Net, and Blue Cross Blue Shield of Nebraska and of Rhode Island, covered TMS for the treatment of depression for the first time.[37] In contrast, UnitedHealthcare issued a medical policy for TMS in 2013 that stated there is insufficient evidence that the procedure is beneficial for health outcomes in patients with depression. UnitedHealthcare noted that methodological concerns raised about the scientific evidence studying TMS for depression include small sample size, lack of a validated sham comparison in randomized controlled studies, and variable uses of outcome measures.[38] Other commercial insurance plans whose 2013 medical coverage policies stated that the role of TMS in the treatment of depression and other disorders had not been clearly established or remained investigational included Aetna, Cigna and Regence.[39]

Medicare

Policies for Medicare coverage vary among local jurisdictions within the Medicare system,[40] and Medicare coverage for TMS has varied among jurisdictions and with time. For example:

- In early 2012 in New England, Medicare covered TMS for the first time in the United States.[41] However, that jurisdiction later decided to end coverage after October 2013.[42]

- In August 2012, the jurisdiction covering Arkansas, Louisiana, Mississippi, Colorado, Texas, Oklahoma, and New Mexico determined that there was insufficient evidence to cover the treatment,[43] but the same jurisdiction subsequently determined that Medicare would cover TMS for the treatment of depression after December 2013.[44]

United Kingdom's National Health Service

The United Kingdom's National Institute for Health and Care Excellence (NICE) issues guidance to the National Health Service (NHS) in England, Wales, Scotland and Northern Ireland. NICE guidance does not cover whether or not the NHS should fund a procedure. Local NHS bodies (primary care trusts and hospital trusts) make decisions about funding after considering the clinical effectiveness of the procedure and whether the procedure represents value for money for the NHS.[45]

NICE evaluated TMS for severe depression (IPG 242) in 2007, and subsequently considered TMS for reassessment in January 2011 but did not change its evaluation.[46] The Institute found that TMS is safe, but there is insufficient evidence for its efficacy.[46]

In January 2014, NICE reported the results of an evaluation of TMS for treating and preventing migraine (IPG 477). NICE found that short-term TMS is safe but there is insufficient evidence to evaluate safety for long-term and frequent uses. It found that evidence on the efficacy of TMS for the treatment of migraine is limited in quantity, and that evidence for the prevention of migraine is limited in both quality and quantity.[47]

Technical information

TMS – Butterfly Coils

TMS uses electromagnetic induction to generate an electric current across the scalp and skull without physical contact.[48] A plastic-enclosed coil of wire is held next to the skull and when activated, produces a magnetic field oriented orthogonally to the plane of the coil. The magnetic field passes unimpeded through the skin and skull, inducing an oppositely directed current in the brain that activates nearby nerve cells in much the same way as currents applied directly to the cortical surface.[49]

The path of this current is difficult to model because the brain is irregularly shaped and electricity and magnetism are not conducted uniformly throughout its tissues. The magnetic field is about the same strength as that of an MRI scanner, and the pulse generally reaches no more than 5 centimeters into the brain unless the deep transcranial magnetic stimulation variant of TMS is used.[50] Deep TMS can reach up to 6 cm into the brain to stimulate deeper layers of the motor cortex, such as that which controls leg motion.[51]

Mechanism of action

It has been shown that a current through a wire generates a magnetic field around that wire. Transcranial magnetic stimulation is achieved by quickly discharging current from a large capacitor into a coil to produce pulsed magnetic fields between 2 and 3 T.[52] By directing the magnetic field pulse at a targeted area of the brain, one can either depolarize or hyperpolarize neurons in the brain. The magnetic flux density pulse generated by the current pulse through the coil causes an electric field as explained by the Maxwell-Faraday equation,

$$\nabla \times \mathbf{E} = -\frac{\partial \mathbf{B}}{\partial t}.$$

This electric field causes a change in the transmembrane current of the neuron, which leads to the depolarization or hyperpolarization of the neuron and the firing of an action potential.[52]
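As a rough illustration of this induction relation, the integral form of Faraday's law can be applied to a circular path of radius $r$ in a spatially uniform field changing at rate $dB/dt$. The uniform-field geometry and the numbers used here (a 2 T pulse rising in roughly 100 µs, a path radius of 2 cm) are illustrative assumptions, not values taken from the cited sources, and real coil fields are far from uniform:

$$\oint \mathbf{E}\cdot d\boldsymbol{\ell} = -\frac{d}{dt}\int \mathbf{B}\cdot d\mathbf{A} \;\;\Rightarrow\;\; 2\pi r\,|E| = \pi r^{2}\left|\frac{dB}{dt}\right| \;\;\Rightarrow\;\; |E| = \frac{r}{2}\left|\frac{dB}{dt}\right|$$

$$|E| \approx \frac{0.02\ \text{m}}{2}\times\frac{2\ \text{T}}{100\times10^{-6}\ \text{s}} = 200\ \text{V/m}$$

Under these assumptions the estimate shows why a rapid capacitor discharge is needed: the induced field scales with the rate of change of the magnetic field, not with its peak value alone.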

The exact details of how TMS functions are still being explored. The effects of TMS can be divided into two types depending on the mode of stimulation:

- Single or paired pulse TMS causes neurons in the neocortex under the site of stimulation to depolarize and discharge an action potential. If used in the primary motor cortex, it produces muscle activity referred to as a motor evoked potential (MEP) which can be recorded on electromyography. If used on the occipital cortex, 'phosphenes' (flashes of light) might be perceived by the subject. In most other areas of the cortex, the participant does not consciously experience any effect, but his or her behaviour may be slightly altered (e.g., slower reaction time on a cognitive task), or changes in brain activity may be detected using sensing equipment.[53]

- Repetitive TMS produces longer-lasting effects which persist past the initial period of stimulation. rTMS can increase or decrease the excitability of the corticospinal tract depending on the intensity of stimulation, coil orientation, and frequency. The mechanism of these effects is not clear, though it is widely believed to reflect changes in synaptic efficacy akin to long-term potentiation (LTP) and long-term depression (LTD).[54]

Coil types

The design of transcranial magnetic stimulation coils used in either treatment or diagnostic/experimental studies may differ in a variety of ways. These differences should be considered in the interpretation of any study result, and the type of coil used should be specified in the study methods for any published reports.

The most important considerations include:

- the type of material used to construct the core of the coil

- the geometry of the coil configuration

- the biophysical characteristics of the pulse produced by the coil.

A number of different types of coils exist, each of which produces a different magnetic field pattern. Some examples:

- round coil: the original type of TMS coil

- figure-eight coil (i.e., butterfly coil): results in a more focal pattern of activation

- double-cone coil: conforms to shape of head, useful for deeper stimulation

- four-leaf coil: for focal stimulation of peripheral nerves[59]

- H-coil: for deep transcranial magnetic stimulation

History

Luigi Galvani did pioneering research on the effects of electricity on the body in the late 1700s, and laid the foundations for the field of electrophysiology.[60] In the 1800s Michael Faraday discovered that an electrical current had a corresponding magnetic field, and that changing one could change the other.[61] Work to directly stimulate the human brain with electricity started in the late 1800s, and by the 1930s electroconvulsive therapy (ECT) had been developed by Italian physicians Cerletti and Bini.[60] ECT became widely used to treat mental illness and became overused as it began to be seen as a "psychiatric panacea", and a backlash against it grew in the 1970s.[60] Around that time Anthony T. Barker began exploring the use of magnetic fields to alter electrical signalling in the brain, and the first stable TMS devices were developed around 1985.[60][61] They were originally intended as diagnostic and research devices, and only later were therapeutic uses explored.[60][61] The first TMS devices were approved by the FDA in October 2008.[60]

Research

TMS research in animal studies is limited due to early FDA approval of TMS treatment of drug-resistant depression. Because of this, no coils have been designed specifically for animal models, and only a limited number of TMS coils can be used for animal studies.[62] Some attempts in the literature describe new coil designs for mice with an improved stimulation profile.[63]

Areas of research include:

- rehabilitation of aphasia and motor disability after stroke,[4][7][11][12][64]

- tinnitus,[4][65]

- anxiety disorders,[4] including panic disorder[66] and obsessive-compulsive disorder.[4] The most promising areas to target for OCD appear to be the orbitofrontal cortex and the supplementary motor area.[67] Older protocols that targeted the prefrontal dorsal cortex were less successful in treating OCD.[68]

- amyotrophic lateral sclerosis,[4][69]

- multiple sclerosis,[4]

- epilepsy,[4][70]

- Alzheimer's disease,[4]

- Parkinson's disease,[71]

- schizophrenia,[4][6]

- substance abuse,[4] addiction,[4][72] and posttraumatic stress disorder (PTSD),[4]

- autism,[73]

- brain death, coma, and other persistent vegetative states,[4]

- functional connectivity between the cerebellum and other areas of the brain,[74]

- traumatic brain injury,[75]

- stroke.[75]

Study blinding

It is difficult to establish a convincing form of "sham" TMS to test for placebo effects during controlled trials in conscious individuals, due to the neck pain, headache and twitching in the scalp or upper face associated with the intervention.[4][7] "Sham" TMS manipulations can affect cerebral glucose metabolism and MEPs, which may confound results.[76] This problem is exacerbated when using subjective measures of improvement.[7] Placebo responses in trials of rTMS in major depression are negatively associated with refractoriness to treatment, vary among studies and can influence results.[77]

A 2011 review found that only 13.5% of 96 randomized controlled studies of rTMS to the dorsolateral prefrontal cortex had reported blinding success and that, in those studies, people in real rTMS groups were significantly more likely to think that they had received real TMS, compared with those in sham rTMS groups.[78] Depending on the research question asked and the experimental design, matching the discomfort of rTMS to distinguish true effects from placebo can be an important and challenging issue.