Neuroeconomics is an interdisciplinary field that seeks to explain human decision-making, the ability to process multiple alternatives and to follow through on a plan of action. It studies how economic behavior can shape our understanding of the brain, and how neuroscientific discoveries can guide models of economics.

It combines research from neuroscience, experimental and behavioral economics, and cognitive and social psychology. As research into decision-making behavior becomes increasingly computational, it has also incorporated new approaches from theoretical biology, computer science, and mathematics. Neuroeconomics studies decision-making by using a combination of tools from these fields so as to avoid the shortcomings that arise from a single-perspective approach. In mainstream economics, expected utility (EU) and the concept of rational agents are still being used. Neuroscience has the potential to reduce the reliance on the flawed assumption of rationality by inferring which emotions, habits, biases, heuristics and environmental factors contribute to individual and societal preferences. Economists can thereby make more accurate predictions of human behavior in their models.

Behavioral economics was the first subfield to emerge to account for these anomalies by integrating social and cognitive factors in understanding economic decisions. Neuroeconomics adds another layer by using neuroscience and psychology to understand the root of decision-making. This involves researching what occurs within the brain when making economic decisions. The economic decisions researched can cover diverse circumstances such as buying a first home, voting in an election, choosing to marry a partner or go on a diet. Using tools from various fields, neuroeconomics works toward an integrated account of economic decision-making.

History

In 1989, Paul Glimcher joined the Center for Neural Science at NYU. Initial forays into neuroeconomic topics occurred in the late 1990s thanks, in part, to the rising prevalence of cognitive neuroscience research. Improvements in brain imaging technology suddenly allowed for crossover between behavioral and neurobiological enquiry. At the same time, critical tension was building between neoclassical and behavioral schools of economics seeking to produce superior predictive models of human behavior. Behavioral economists, in particular, sought to challenge neo-classicists by looking for alternative computational and psychological processes that validated their counter-findings of irrational choice. These converging trends set the stage for the sub-discipline of neuroeconomics to emerge, with varying and complementary motivations from each parent discipline.

Behavioral economists and cognitive psychologists looked towards functional brain imaging to experiment and develop their alternative theories of decision-making, while groups of physiologists and neuroscientists looked towards economics to develop their algorithmic models of neural hardware pertaining to choice. This split approach characterised the formation of neuroeconomics as an academic pursuit, though not without criticism. Numerous neurobiologists claimed that attempting to synchronise complex models of economics to real human and animal behavior would be futile. Neoclassical economists also argued that this merger would be unlikely to improve the predictive power of the existing revealed preference theory.

Despite the early criticisms, neuroeconomics grew rapidly from its inception in the late 1990s through to the 2000s, leading many more scholars from the parent fields of economics, neuroscience and psychology to take notice of the possibilities of such interdisciplinary collaboration. Meetings between scholars and early researchers in neuroeconomics began to take place through the early 2000s. Important among them was a meeting that took place during 2002 at Princeton University. Organized by neuroscientist Jonathan Cohen and economist Christina Paxson, the Princeton meeting gained significant traction for the field and is often credited as the formative beginning of the present-day Society for Neuroeconomics.

The momentum continued throughout the 2000s, during which research steadily increased and the number of publications containing the words "decision making" and "brain" rose impressively. A critical point was reached in 2008, when the first edition of Neuroeconomics: Decision Making and the Brain was published. This marked a watershed moment for the field, as it gathered the growing wealth of research into a widely accessible textbook. The success of this publication sharply increased the visibility of neuroeconomics and helped affirm its place in economic teachings worldwide.

Major research areas

The field of decision-making is largely concerned with the processes by which individuals make a single choice from among many options. These processes are generally assumed to proceed in a logical manner such that the decision itself is largely independent of context. Different options are first translated into a common currency, such as monetary value, and are then compared to one another; the option with the largest overall utility value is the one that should be chosen. While there has been support for this economic view of decision-making, there are also situations where the assumptions of optimal decision-making seem to be violated.

The field of neuroeconomics arose out of this controversy. By determining which brain areas are active in which types of decision processes, neuroeconomists hope to better understand the nature of what seem to be suboptimal and illogical decisions. While most of these scientists are using human subjects in this research, others are using animal models where studies can be more tightly controlled and the assumptions of the economic model can be tested directly.

For example, Padoa-Schioppa & Assad tracked the firing rates of individual neurons in the monkey orbitofrontal cortex while the animals chose between two kinds of juice. The firing rate of the neurons was directly correlated with the utility of the food items and did not differ when other types of food were offered. This suggests that, in accordance with the economic theory of decision-making, neurons are directly comparing some form of utility across different options and choosing the one with the highest value. Similarly, a common measure of prefrontal cortex dysfunction, the FrSBe, is correlated with multiple different measures of economic attitudes and behavior, supporting the idea that brain activation can display important aspects of the decision process.

Neuroeconomics studies the neurobiological along with the computational bases of decision-making. A framework of basic computations which may be applied to neuroeconomic studies was proposed by A. Rangel, C. Camerer, and P. R. Montague. It divides the process of decision-making into five stages implemented by a subject. First, a representation of the problem is formed. This includes analysis of internal states, external states and potential courses of action. Second, values are assigned to potential actions. Third, based on the valuations, one of the actions is selected. Fourth, the subject evaluates how desirable the outcome is. The final stage, learning, includes updating all of the above processes in order to improve future decisions.
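
The five stages can be illustrated with a toy agent. This is only a sketch under stated assumptions: the two options, their reward values, the greedy selection rule and the simple error-driven update are all hypothetical choices made for illustration, not part of the framework as published.

```python
# Hypothetical desirability of each outcome (assumed values).
REWARDS = {"juice_a": 1.0, "juice_b": 2.0}


def run_trials(n_trials=20, learning_rate=0.5, initial_value=5.0):
    """Toy walk through the five stages on repeated two-option choices."""
    # Stage 1: representation. The problem is reduced to a set of actions.
    actions = list(REWARDS)
    # Stage 2: valuation. Each action carries a current value estimate
    # (optimistic starting values make the greedy agent try both options).
    values = {action: initial_value for action in actions}
    history = []
    for _ in range(n_trials):
        # Stage 3: action selection. Pick the highest-valued action.
        choice = max(actions, key=values.get)
        # Stage 4: outcome evaluation. Observe how desirable the result was.
        outcome = REWARDS[choice]
        # Stage 5: learning. Move the estimate toward the observed outcome
        # (an assumed error-driven update rule).
        values[choice] += learning_rate * (outcome - values[choice])
        history.append(choice)
    return values, history


values, history = run_trials()
print(values, history[-1])
```

With these assumed numbers the agent samples both options early on and then settles on the option with the larger reward.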

Decision-making under risk and ambiguity

Most of our decisions are made under some form of uncertainty. Decision sciences such as psychology and economics usually define risk as the uncertainty about several possible outcomes when the probability of each is known. When the probabilities are unknown, uncertainty takes the form of ambiguity. Utility maximization, first proposed by Daniel Bernoulli in 1738, is used to explain decision-making under risk. The theory assumes that humans are rational and will assess options based on the expected utility they will gain from each.
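
As a minimal sketch of expected-utility evaluation, the snippet below compares a sure amount with a gamble. The logarithmic utility function follows Bernoulli's classic suggestion; the wealth level, the payoffs and the function names are illustrative assumptions rather than values taken from any study.

```python
import math


def expected_utility(lottery, utility):
    """Probability-weighted average of the utility of each outcome."""
    return sum(p * utility(x) for p, x in lottery)


def log_utility(wealth):
    # Assumed concave utility: each extra unit of wealth adds less utility.
    return math.log(wealth)


# Hypothetical choice for an agent holding 100 units of wealth:
# a fair coin flip for +50 / -50, versus keeping the 100 for sure.
gamble = [(0.5, 150.0), (0.5, 50.0)]
sure_thing = [(1.0, 100.0)]

eu_gamble = expected_utility(gamble, log_utility)
eu_sure = expected_utility(sure_thing, log_utility)

# An expected-utility maximizer picks the option with the larger value.
choice = "sure thing" if eu_sure > eu_gamble else "gamble"
print(choice)
```

Both options have the same expected monetary value, but under the concave utility assumed here the sure amount has the higher expected utility, which is how the theory represents risk aversion.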

Research and experience uncovered a wide range of expected utility anomalies and common patterns of behavior that are inconsistent with the principle of utility maximization – for example, the tendency to overweight small probabilities and underweight large ones. Daniel Kahneman and Amos Tversky proposed prospect theory to encompass these observations and offer an alternative model.
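
The overweighting of small probabilities can be illustrated with a probability weighting function. The sketch below assumes the one-parameter form and the parameter value (gamma = 0.61) from Tversky and Kahneman's later cumulative version of prospect theory; other functional forms exist, and the function name is an illustrative choice.

```python
def decision_weight(p, gamma=0.61):
    """Inverse-S-shaped weighting of a stated probability p (0 <= p <= 1)."""
    numerator = p ** gamma
    return numerator / (numerator + (1 - p) ** gamma) ** (1 / gamma)


# Small probabilities receive more weight than p, large ones less.
for p in (0.01, 0.10, 0.50, 0.90, 0.99):
    print(f"stated probability {p:.2f} -> decision weight {decision_weight(p):.3f}")
```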

There seem to be multiple brain areas involved in dealing with situations of uncertainty. In tasks requiring individuals to make predictions when there is some degree of uncertainty about the outcome, there is an increase in activity in area BA8 of the frontomedian cortex as well as a more generalized increase in activity of the mesial prefrontal cortex and the frontoparietal cortex. The prefrontal cortex is generally involved in all reasoning and understanding, so these particular areas may be specifically involved in determining the best course of action when not all relevant information is available.

The Iowa Gambling Task, developed in 1994, involves picking from four decks of cards, two of which are riskier, containing higher payoffs accompanied by much heftier penalties. Most individuals realise after a few rounds of picking cards that the less risky decks have higher payoffs in the long run because their losses are small. However, individuals with damage to the ventromedial prefrontal cortex continue picking from the riskier decks. These results suggest that the ventromedial prefrontal region of the brain is strongly associated with recognising the long-term consequences of risky behavior, as patients with damage to the region struggled to make decisions that prioritised the future over the potential for immediate gain.
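
The long-run logic of the task can be sketched with simple arithmetic. The deck names and payoff numbers below are assumptions chosen only to reproduce the structure described above (larger gains with much larger penalties in the risky decks), not the exact schedule of the original task.

```python
# Assumed schedule: gain on every card, and total penalties per 10 cards.
DECKS = {
    "risky_1": {"gain_per_card": 100, "losses_per_10_cards": 1250},
    "risky_2": {"gain_per_card": 100, "losses_per_10_cards": 1250},
    "safe_1": {"gain_per_card": 50, "losses_per_10_cards": 250},
    "safe_2": {"gain_per_card": 50, "losses_per_10_cards": 250},
}


def net_per_10_cards(deck):
    """Net outcome of drawing 10 cards from a single deck."""
    return 10 * deck["gain_per_card"] - deck["losses_per_10_cards"]


long_run = {name: net_per_10_cards(deck) for name, deck in DECKS.items()}
best_deck = max(long_run, key=long_run.get)

for name, net in long_run.items():
    print(f"{name}: {net:+d} per 10 cards")
print("best long-run deck:", best_deck)
```

Under these assumed numbers the risky decks pay twice as much per card yet lose money over any block of ten cards, while the safe decks come out ahead.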

In situations that involve known risk rather than ambiguity, the insular cortex seems to be highly active. For example, when subjects played a 'double or nothing' game in which they could either stop the game and keep accumulated winnings or take a risky option resulting in either a complete loss or doubling of winnings, activation of the right insula increased when individuals took the gamble. It is hypothesized that the main role of the insular cortex in risky decision-making is to simulate potential negative consequences of taking a gamble. Neuroscience research has found that the insula is activated when thinking about or experiencing something uncomfortable or painful.

In addition to the importance of specific brain areas to the decision process, there is also evidence that the neurotransmitter dopamine may transmit information about uncertainty throughout the cortex. Dopaminergic neurons are strongly involved in the reward process and become highly active after an unexpected reward occurs. In monkeys, the level of dopaminergic activity is highly correlated with the level of uncertainty such that the activity increases with uncertainty. Furthermore, rats with lesions to the nucleus accumbens, which is an important part of the dopamine reward pathway through the brain, are far more risk averse than normal rats. This suggests that dopamine may be an important mediator of risky behavior.

Individual levels of risk aversion among humans are influenced by testosterone concentration. Studies have shown a correlation between the choice of a risky career (financial trading, business) and testosterone exposure. In addition, the daily achievements of traders with a lower digit ratio are more sensitive to circulating testosterone. A long-term study of risk aversion and risky career choice was conducted on a representative group of MBA students. It revealed that females are on average more risk averse, but that the difference between genders vanishes at low organizational and activational testosterone exposure, which leads to risk-averse behavior. Students with high salivary testosterone concentration and a low digit ratio, regardless of gender, tend to choose a risky career in finance (e.g. trading or investment banking).

Serial and functionally localized model vs distributed, hierarchical model

In March 2017, Laurence T. Hunt and Benjamin Y. Hayden argued for an alternative mechanistic model of how we evaluate options and choose the best course of action. Many accounts of reward-based choice argue for distinct component processes that are serial and functionally localized. The component processes typically include the evaluation of options, the comparison of option values in the absence of any other factors, the selection of an appropriate action plan and the monitoring of the outcome of the choice. Hunt and Hayden instead emphasized how several features of neuroanatomy may support a distributed, hierarchical implementation of choice, including mutual inhibition in recurrent neural networks and the hierarchical organization of timescales for information processing across the cortex.

Loss aversion

One aspect of human decision-making is a strong aversion to potential loss. Under loss aversion, the perceived cost of a loss is experienced more intensely than an equivalent gain. For example, if there were a 50/50 chance of either winning $100 or losing $100, and a loss occurred, the accompanying reaction would emulate losing $200; that is, the sum of both losing $100 and forgoing the possibility of winning $100. Loss aversion was first described in prospect theory by Daniel Kahneman and Amos Tversky.
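
A common way to formalize loss aversion is an asymmetric value function. The sketch below assumes the power-function form and the parameter estimates (alpha = 0.88, lambda = 2.25) reported by Tversky and Kahneman for cumulative prospect theory; the function names are illustrative, and probability weighting is deliberately left out to isolate the effect of loss aversion.

```python
def subjective_value(x, alpha=0.88, loss_aversion=2.25):
    """Value of a gain or loss x relative to a reference point of zero."""
    if x >= 0:
        return x ** alpha
    # Losses are scaled up by the loss-aversion coefficient.
    return -loss_aversion * (-x) ** alpha


gain = subjective_value(100)
loss = subjective_value(-100)

# A 50/50 gamble to win or lose $100, using unweighted probabilities.
gamble_value = 0.5 * gain + 0.5 * loss

print(f"value of +$100: {gain:.1f}")
print(f"value of -$100: {loss:.1f}")
print(f"value of the 50/50 gamble: {gamble_value:.1f}")
```

Although the gamble has an expected monetary value of zero, its subjective value under these assumed parameters is negative, so a loss-averse agent would decline it.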

One of the main controversies in understanding loss aversion is whether the phenomenon manifests in the brain, perhaps as increased attention and arousal with losses. Another area of research is whether loss aversion is evident in subcortical structures, such as the limbic system, thereby involving emotional arousal.

A basic controversy in loss aversion research is whether losses are actually experienced more negatively than equivalent gains or merely predicted to be more painful but actually experienced equivalently. Neuroeconomic research has attempted to distinguish between these hypotheses by measuring different physiological changes in response to both loss and gain. Studies have found that skin conductance, pupil dilation and heart rate are all higher in response to monetary loss than to equivalent gain. All three measures are involved in stress responses, so one might argue that losing a particular amount of money is experienced more strongly than gaining the same amount. On the other hand, in some of these studies, there were no physiological signals of loss aversion. That may suggest that the response to losses is merely a matter of attention (what is known as loss attention); such attentional orienting responses also lead to increased autonomic signals.

Brain studies initially suggested that there is an increased rapid response in the medial prefrontal and anterior cingulate cortex following losses compared to gains, which was interpreted as a neural signature of loss aversion. However, subsequent reviews have noted that in this paradigm individuals do not actually show behavioral loss aversion, casting doubt on the interpretability of these findings. With respect to fMRI studies, one study found no evidence for an increase in activation in areas related to negative emotional reactions in response to loss aversion, while another found that individuals with damaged amygdalas had a lack of loss aversion even though they had normal levels of general risk aversion, suggesting that the behavior was specific to potential losses. These conflicting studies suggest that more research needs to be done to determine whether the brain's response to losses is due to loss aversion or merely to an alerting or orienting aspect of losses, as well as to examine whether there are areas in the brain that respond specifically to potential losses.

Intertemporal choice

In addition to risk preference, another central concept in economics is intertemporal choice: decisions that involve costs and benefits distributed over time. Intertemporal choice research studies the expected utility that humans assign to events occurring at different times. The dominant model in economics that explains it is discounted utility (DU). DU assumes that humans have consistent time preferences and will discount the value of events at the same rate regardless of when they occur. Similar to EU in explaining risky decision-making, DU is inadequate in explaining intertemporal choice.

For example, DU assumes that people who value 1 bar of candy today more than 2 bars tomorrow will also value 1 bar received 100 days from now more than 2 bars received after 101 days. There is strong evidence against this last part in both humans and animals, and hyperbolic discounting has been proposed as an alternative model. Under this model, valuations fall very rapidly for small delay periods, but then fall slowly for longer delay periods. This better explains why most people who would choose 1 candy bar now over 2 candy bars tomorrow would, in fact, choose 2 candy bars received after 101 days rather than 1 candy bar received after 100 days, contrary to what DU assumes.
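
The candy-bar example can be checked numerically. In this sketch the exponential form stands in for DU and the hyperbolic form for the alternative model; the daily discount factor (0.4) and the hyperbolic parameter (k = 1.5) are assumed values picked so that both agents prefer 1 bar today over 2 bars tomorrow.

```python
def exponential_value(amount, delay_days, delta=0.4):
    """Discounted utility: value shrinks by the same factor every day."""
    return amount * delta ** delay_days


def hyperbolic_value(amount, delay_days, k=1.5):
    """Hyperbolic discounting: steep early decline, shallow later decline."""
    return amount / (1 + k * delay_days)


def prefers_smaller_sooner(value_fn, start_day):
    """True if 1 bar at start_day is valued above 2 bars one day later."""
    return value_fn(1, start_day) > value_fn(2, start_day + 1)


for name, fn in (("exponential (DU)", exponential_value),
                 ("hyperbolic", hyperbolic_value)):
    today = prefers_smaller_sooner(fn, 0)
    in_100_days = prefers_smaller_sooner(fn, 100)
    print(f"{name}: picks 1 bar today? {today}; picks 1 bar at day 100? {in_100_days}")
```

The exponential agent makes the same choice at both horizons, while the hyperbolic agent switches to the larger, later reward once both options are far away, which is the preference reversal described above.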

Neuroeconomic research in intertemporal choice is largely aimed at understanding what mediates observed behaviors such as future discounting and impulsively choosing smaller, sooner rewards rather than larger, later ones. The process of choosing between immediate and delayed rewards seems to be mediated by an interaction between two brain areas. In choices involving both primary (fruit juice) and secondary rewards (money), the limbic system is highly active when choosing the immediate reward, while the lateral prefrontal cortex is equally active when making either choice. Furthermore, the ratio of limbic to cortex activity decreases as a function of the amount of time until reward. This suggests that the limbic system, which forms part of the dopamine reward pathway, is most involved in making impulsive decisions while the cortex is responsible for the more general aspects of the intertemporal decision process.

The neurotransmitter serotonin seems to play an important role in modulating future discounting. In rats, reducing serotonin levels increases future discounting while not affecting decision-making under uncertainty. It seems, then, that while the dopamine system is involved in probabilistic uncertainty, serotonin may be responsible for temporal uncertainty, since delayed reward involves a potentially uncertain future. In addition to neurotransmitters, intertemporal choice is also modulated by hormones. In humans, a reduction in cortisol, which is released by the adrenal glands in response to stress, is correlated with a higher degree of impulsivity in intertemporal choice tasks. Drug addicts tend to have lower levels of cortisol than the general population, which may explain why they seem to discount the future negative effects of taking drugs and opt for the immediate positive reward.

Social decision-making

While most research on decision-making tends to focus on individuals making choices outside of a social context, it is also important to consider decisions that involve social interactions. The types of behavior that decision theorists study are as diverse as altruism, cooperation, punishment, and retribution. One of the most frequently utilized tasks in social decision-making is the prisoner's dilemma.

In this situation, the payoff for a particular choice is dependent not only on the decision of the individual but also on that of another individual playing the game. An individual can choose to either cooperate with their partner or defect against the partner. Over the course of a typical game, individuals tend to prefer mutual cooperation even though defection would lead to a higher individual payout. This suggests that individuals are motivated not only by monetary gains but also by some reward derived from cooperating in social situations.
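
The incentive structure of the game can be sketched with a payoff table. The numbers below are a conventional illustrative choice (assumed here, not taken from any particular experiment); what matters is their ordering.

```python
MOVES = ("cooperate", "defect")

# Payoffs as (own payoff, partner payoff); values are illustrative assumptions.
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}


def best_response(partner_move):
    """Move that maximizes one's own payoff against a fixed partner move."""
    return max(MOVES, key=lambda move: PAYOFFS[(move, partner_move)][0])


def joint_payoff(own_move, partner_move):
    """Combined payoff of both players."""
    return sum(PAYOFFS[(own_move, partner_move)])


for partner_move in MOVES:
    print(f"partner plays {partner_move}: best response is {best_response(partner_move)}")
print("joint payoff, mutual cooperation:", joint_payoff("cooperate", "cooperate"))
print("joint payoff, mutual defection:", joint_payoff("defect", "defect"))
```

Defecting is the payoff-maximizing reply whatever the partner does, yet mutual cooperation leaves both players better off than mutual defection, which is why observed cooperation is taken as evidence of a non-monetary motive.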

This idea is supported by neural imaging studies demonstrating a high degree of activation in the ventral striatum when individuals cooperate with another person, but not when they play the same prisoner's dilemma against a computer. The ventral striatum is part of the reward pathway, so this research suggests that there may be areas of the reward system that are activated specifically when cooperating in social situations. Further support for this idea comes from research demonstrating that the striatum and the ventral tegmental area show similar patterns of activation when receiving money and when donating money to charity. In both cases, the level of activation increases as the amount of money increases, suggesting that both giving and receiving money result in neural reward.

An important aspect of social interactions such as the prisoner's dilemma is trust. The likelihood of one individual cooperating with another is directly related to how much the first individual trusts the second to cooperate; if the other individual is expected to defect, there is no reason to cooperate with them. Trust behavior may be related to the presence of oxytocin, a hormone involved in maternal behavior and pair bonding in many species. When oxytocin levels were increased in humans, they were more trusting of other individuals than a control group even though their overall levels of risk-taking were unaffected, suggesting that oxytocin is specifically implicated in the social aspects of risk-taking. However, this research has recently been questioned.

Another important paradigm for neuroeconomic studies is the ultimatum game. In this game, Player 1 receives a sum of money and decides how much to offer Player 2. Player 2 either accepts or rejects the offer. If Player 2 accepts, both players receive the amounts proposed by Player 1; if Player 2 rejects, nobody gets anything. The rational strategy for Player 2 would be to accept any non-zero offer, because it has more value than nothing. However, it has been shown that people often reject offers that they consider unfair. Neuroimaging studies have indicated several brain regions that are activated in response to unfairness in the ultimatum game. They include the bilateral mid-anterior insula, anterior cingulate cortex (ACC), medial supplementary motor area (SMA), cerebellum and right dorsolateral prefrontal cortex (DLPFC). It has been shown that low-frequency repetitive transcranial magnetic stimulation of the DLPFC increases the likelihood of accepting unfair offers in the ultimatum game.
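
The rules of the game, and the contrast between a purely payoff-maximizing responder and one who rejects unfair offers, can be sketched as follows. The function names and the 30% fairness threshold are hypothetical choices for illustration, not empirical estimates.

```python
def ultimatum_payoffs(total, offer, accepted):
    """Return (Player 1 payoff, Player 2 payoff) for one round."""
    if not 0 <= offer <= total:
        raise ValueError("offer must lie between 0 and the total")
    return (total - offer, offer) if accepted else (0, 0)


def payoff_maximizing_responder(offer):
    # Accepts any offer that is worth more than nothing.
    return offer > 0


def fairness_sensitive_responder(offer, total, min_share=0.3):
    # Hypothetical rule: reject offers below an assumed share of the total.
    return offer >= min_share * total


total, low_offer = 10, 2
print(ultimatum_payoffs(total, low_offer, payoff_maximizing_responder(low_offer)))
print(ultimatum_payoffs(total, low_offer, fairness_sensitive_responder(low_offer, total)))
```

Under the assumed threshold, the low offer is accepted by the payoff-maximizing responder and rejected by the fairness-sensitive one, who gives up a positive amount in order to punish the proposer.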

Another issue in the field of neuroeconomics is the role of reputation acquisition in social decision-making. Social exchange theory claims that prosocial behavior originates from the intention to maximize social rewards and minimize social costs. In this case, approval from others may be viewed as a significant positive reinforcer, i.e., a reward. Neuroimaging studies have provided evidence supporting this idea: it was shown that processing of social rewards activates the striatum, especially the left putamen and left caudate nucleus, in the same fashion as these areas are activated during the processing of monetary rewards. These findings also support the so-called "common neural currency" idea, which assumes the existence of a shared neural basis for processing different types of reward.

Sexual decision-making

Regarding the choice of sexual partner, research studies have been conducted on humans and on nonhuman primates. Notably, Cheney & Seyfarth 1990, Deaner et al. 2005, and Hayden et al. 2007 suggest a persistent willingness to accept fewer physical goods or higher prices in return for access to socially high-ranking individuals, including physically attractive individuals, whereas increasingly high rewards are demanded if asked to relate to low-ranking individuals.

Cordelia Fine is best known for her research on gendered minds and sexual decision-making. In her book Testosterone Rex she critiques claims about sex differences in the brain and goes into detail on the economic costs and benefits of finding a partner, as interpreted and analysed by our brains. Her work showcases an interesting sub-topic of neuroeconomics.

The neurobiological basis for this preference includes neurons of the lateral intraparietal cortex (LIP), which is related to eye movement, and which is operative in situations of two-alternative forced choices.

Methodology

Behavioral economics experiments record the subject's decisions over various design parameters and use the data to generate formal models that predict performance. Neuroeconomics extends this approach by adding states of the nervous system to the set of explanatory variables. The goal of neuroeconomics is to help explain decisions and to enrich the data sets available for testing predictions.

Furthermore, neuroeconomic research is being used to understand and explain aspects of human behavior that do not conform to traditional economic models. While these behavior patterns are generally dismissed as 'fallacious' or 'illogical' by economists, neuroeconomic researchers are trying to determine the biological reasons for these behaviors. By using this approach, researchers may be able to find explanations for why people often act sub-optimally. Richard Thaler provides a prime example in his book Misbehaving, detailing a scenario where an appetiser is served before a meal and guests accidentally fill up on it. Most people need the appetiser to be completely hidden in order to resist the temptation, whereas a rational agent would simply stop and wait for the meal. Temptation is just one of the many irrationalities that have been ignored because of the difficulty of studying them.

Neurobiological research techniques

There are several different techniques that can be utilized to understand the biological basis of economic behavior. Neural imaging is used in human subjects to determine which areas of the brain are most active during particular tasks. Some of these techniques, such as fMRI or PET, are best suited to giving detailed pictures of the brain, which can give information about specific structures involved in a task. Other techniques, such as ERP (event-related potentials) and measures of oscillatory brain activity, are used to gain detailed knowledge of the time course of events within a more general area of the brain. If a specific region of the brain is suspected to be involved in a type of economic decision-making, researchers may use Transcranial Magnetic Stimulation (TMS) to temporarily disrupt that region, and compare the results to when the brain was allowed to function normally. More recently, there has been interest in the role that brain structure, such as white matter connectivity between brain areas, plays in determining individual differences in reward-based decision-making.

Neuroscience does not always involve observing the brain directly, as brain activity can also be interpreted through physiological measurements such as skin conductance, heart rate, hormone levels, pupil dilation and muscle contraction (measured by electromyography), especially of the face, which can reveal emotions linked to decisions.

Neuroeconomics of addiction

In addition to studying areas of the brain, some studies are aimed at understanding the functions of different brain chemicals in relation to behavior. This can be done by either correlating existing chemical levels with different behavior patterns or by changing the amount of the chemical in the brain and noting any resulting behavioral changes. For example, the neurotransmitter serotonin seems to be involved in making decisions involving intertemporal choice while dopamine is utilized when individuals make judgments involving uncertainty. Furthermore, artificially increasing oxytocin levels increases trust behavior in humans while individuals with lower cortisol levels tend to be more impulsive and exhibit more future discounting.

In addition to studying the behavior of neurologically normal individuals in decision-making tasks, some research involves comparing that behavior to that of individuals with damage to areas of the brain expected to be involved in decision-making. In humans, this means finding individuals with specific types of neural impairment. These case studies may involve, for example, amygdala damage, which leads to a decrease in loss aversion compared to controls. Also, scores from a survey measuring prefrontal cortex dysfunction are correlated with general economic attitudes, like risk preferences.

Previous studies have investigated the behavioral patterns of patients with psychiatric disorders such as schizophrenia, autism and depression, as well as the neuroeconomics of addiction, to gain insight into their pathophysiology. In animal studies, highly controlled experiments can yield more specific information about the importance of brain areas to economic behavior. This can involve either lesioning entire brain areas and measuring resulting behavior changes or using electrodes to measure the firing of individual neurons in response to particular stimuli.

Experiments

As explained in Methodology above, in a typical behavioral economics experiment, a subject is asked to make a series of economic decisions. For example, a subject may be asked whether they prefer to have 45 cents or a gamble with a 50% chance to win one dollar. Many experiments involve the participant completing games where they make either one-off or repeated decisions, and psychological responses and reaction times are measured. For example, it is common to test people's relationship with the future, known as future discounting, by asking them questions such as "would you prefer $10 today, or $50 in a year from today?" The experimenter will then measure different variables in order to determine what is going on in the subject's brain as they make the decision. Some authors have demonstrated that neuroeconomics may be useful to describe not only experiments involving rewards but also common psychiatric syndromes involving addiction or delusion.
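
The two example questions can be read as simple measurements. The sketch below is an illustration under assumed definitions: a choice is labeled risk averse when a sure amount below the gamble's expected value is preferred, and the discounting question is summarized by the one-year discount factor at which a subject would be indifferent. The function names are hypothetical.

```python
def expected_value(lottery):
    """Probability-weighted average payoff of a list of (p, amount) pairs."""
    return sum(p * amount for p, amount in lottery)


def risk_attitude(sure_amount, lottery, chose_sure):
    """Classify a single choice between a sure amount and a gamble."""
    ev = expected_value(lottery)
    if chose_sure and sure_amount < ev:
        return "risk averse"
    if not chose_sure and sure_amount > ev:
        return "risk seeking"
    return "consistent with risk neutrality"


def indifference_discount_factor(amount_now, amount_later):
    """Discount factor at which the later amount is worth the sooner one."""
    return amount_now / amount_later


# 45 cents for sure versus a 50% chance of 100 cents (amounts in cents).
coin_flip = [(0.5, 100), (0.5, 0)]
print(expected_value(coin_flip))
print(risk_attitude(45, coin_flip, chose_sure=True))

# $10 today versus $50 in a year: preferring $10 implies the subject values
# money one year away at less than this fraction of its face value.
print(indifference_discount_factor(10, 50))
```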

Criticisms

From the beginnings of neuroeconomics and throughout its swift academic rise, criticisms have been voiced over the field's validity and usefulness. Glenn W. Harrison and Emanuel Donchin have both criticized the emerging field, with the former publishing his concerns in 2008 in the paper 'Neuroeconomics: A Critical Reconsideration'. Harrison contends that many of the neuroscience-assisted insights into economic modelling are "academic marketing hype" and that the true substance of the field is yet to present itself and needs to be seriously reconsidered. He also argues that, methodologically, many of the studies in neuroeconomics are flawed by their small sample sizes and limited applicability.

A review of the findings of neuroeconomics, published in 2016 by Arkady Konovalov, shared the sentiment that the field suffers from experimental shortcomings, primary among them a lack of analogous ties between specific brain regions and some psychological constructs such as "value". The review mentions that although early neuroeconomic fMRI studies assumed that specific brain regions were singularly responsible for one function in the decision-making process, those regions have subsequently been shown to be recruited in multiple different functions. The practice of reverse inference has therefore seen much less use, which has hurt the field. The review argues that fMRI should not be a standalone methodology, but should rather be collected alongside and connected to self-reports and behavioral data. The validity of using functional neuroimaging in consumer neuroscience can be improved by carefully designing studies, conducting meta-analyses, and connecting psychometric and behavioral data with data from neuroimaging.

Ariel Rubinstein, an economist at Tel Aviv University, spoke about neuroeconomic research, saying "standard experiments provide little information about the procedures of choice as it is difficult to extrapolate from a few choice observations to the entire choice function. If we want to know more about human procedures of choice we need to look somewhere else". These comments echo a salient and consistent argument of traditional economists against the neuroeconomic approach, namely that non-choice data, such as response times, eye-tracking and the neural signals that people generate during decision-making, should be excluded from any economic analysis.

Other critiques include claims that neuroeconomics is "a field that oversells itself" and that neuroeconomic studies "misunderstand and underestimate traditional economic models".

Applications

The real-world applications and predictions of neuroeconomics remain unknown or under-developed as the field continues to grow. Critics have argued that the accumulated research has so far produced little in the way of pertinent recommendations for economic policy-makers. Many neuroeconomists nevertheless insist that the field's potential to enhance our understanding of how the brain makes decisions may prove highly influential in the future.

In particular, the findings of specific neurological markers of individual preferences may have important implications for well-known economic models and paradigms. An example of this is the finding that an increase in computational capacity (likely related to increased gray matter volume) could lead to higher risk tolerance by loosening the constraints that govern subjective representations of probabilities and rewards in lottery tasks.

Economists are also looking to neuroeconomics to help explain aggregate group behavior that has market-level implications. For example, many researchers anticipate that neurobiological data may be used to detect when individuals or groups of individuals are likely to exhibit economically problematic behavior. This may be applied to the concept of market bubbles. Such events have major consequences in modern society, and regulators could gain substantial insights into how they form and why they are so rarely predicted or prevented.

Neuroeconomic work has also seen a close relationship with academic investigations of addiction. Researchers acknowledged, in the 2010 publication 'Advances in the Neuroscience of Addiction: 2nd Edition', that the neuroeconomic approach serves as a "powerful new conceptual method that is likely to be critical for progress in understanding addictive behavior".

German neuroscientist Tania Singer spoke at the World Economic Forum in 2015 about her research in compassion training. While economics and neuroscience are largely split, her research is an example of how they can meld together. Her study revealed a preference change toward prosocial behavior after three months of compassion training. She also demonstrated a structural change in the grey matter of the brain, indicating that new neural connections had formed as a result of the mental training. She showed that if economists utilised predictors other than consumption, they could model and predict a more diverse range of economic behaviors. She also argued that neuroeconomics could vastly improve policymaking, since it allows the creation of contexts that predictably lead to positive behavioral outcomes, such as prosocial behavior when caring emotions are primed. Her research demonstrates the impact neuroeconomics could have on our individual psyches, our societal norms and political landscapes at large.

Neuromarketing is a distinct, applied discipline closely related to neuroeconomics. While broader neuroeconomics has more academic aims, since it studies the basic mechanisms of decision-making, neuromarketing is an applied sub-field which uses neuroimaging tools for market investigations. Insights derived from brain imaging technologies such as fMRI are typically used to analyse the brain's response to particular marketing stimuli.

Another neuroscientist, Emily Falk, contributed to the neuroeconomics and neuromarketing fields by researching how the brain reacts to marketing aimed at evoking behavioral change. Specifically, her paper on anti-smoking advertisements highlighted the disparity between the advertisements we believe will be convincing and those the brain responds to most positively. The advertisement that the experts and trial audience agreed was the most effective anti-smoking campaign actually elicited very little behavioral change in smokers. Meanwhile, the campaign that the experts and audience ranked least likely to be effective generated the strongest neural response in the medial prefrontal cortex and resulted in the largest number of people deciding to quit smoking. This revealed that our brains may often know better than we do which motivators lead to behavioral change. It also emphasises the importance of integrating neuroscience into mainstream and behavioral economics to generate more holistic models and more accurate predictions. This research could have impacts in promoting healthier diets and more exercise, or in encouraging people to make behavioral changes that benefit the environment and mitigate climate change.

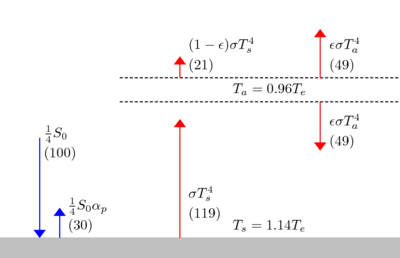
