| |

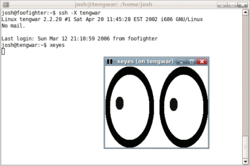

twm, the default X11 window manager |

The X Window System (X11, or simply X; stylized 𝕏) is a windowing system for bitmap displays, common on Unix-like operating systems.

X originated as part of Project Athena at the Massachusetts Institute of Technology (MIT) in 1984. The X protocol has been at version 11 (hence "X11") since September 1987. The X.Org Foundation leads the X project, with the current reference implementation, X.Org Server, available as free and open-source software under the MIT License and similar permissive licenses.

Purpose and abilities

X is an architecture-independent system for remote graphical user interfaces and input device capabilities. Each person using a networked terminal has the ability to interact with the display with any type of user input device.

In its standard distribution it is a complete, albeit simple, display and interface solution which delivers a standard toolkit and protocol stack for building graphical user interfaces on most Unix-like operating systems and OpenVMS, and has been ported to many other contemporary general purpose operating systems.

X provides the basic framework, or primitives, for building such GUI environments: drawing and moving windows on the display and interacting with a mouse, keyboard or touchscreen. X does not mandate the user interface; individual client programs handle this. Programs may use X's graphical abilities with no user interface. As such, the visual styling of X-based environments varies greatly; different programs may present radically different interfaces.

Unlike most earlier display protocols, X was specifically designed to be used over network connections rather than on an integral or attached display device. X features network transparency, which means an X program running on a computer somewhere on a network (such as the Internet) can display its user interface on an X server running on some other computer on the network. The X server is typically the provider of graphics resources and keyboard/mouse events to X clients, meaning that the X server is usually running on the computer in front of a human user, while the X client applications run anywhere on the network and communicate with the user's computer to request the rendering of graphics content and receive events from input devices including keyboards and mice.

The fact that the term "server" is applied to the software in front of the user is often surprising to users accustomed to their programs being clients to services on remote computers. Here, rather than a remote database being the resource for a local app, the user's graphic display and input devices become resources made available by the local X server to both local and remotely hosted X client programs that need to share the user's graphics and input devices to communicate with the user.

X's network protocol is based on X command primitives. This approach allows both 2D and (through extensions like GLX) 3D operations by an X client application which might be running on a different computer to still be fully accelerated on the X server's display. For example, in classic OpenGL (before version 3.0), display lists containing large numbers of objects could be constructed and stored entirely in the X server by a remote X client program, and each then rendered by sending a single glCallList(which) across the network.

X provides no native support for audio; several projects exist to fill this niche, some also providing transparent network support.

Software architecture

X uses a client–server model: an X server communicates with various client programs. The server accepts requests for graphical output (windows) and sends back user input (from keyboard, mouse, or touchscreen). The server may function as:

- an application displaying to a window of another display system

- a system program controlling the video output of a PC

- a dedicated piece of hardware

This client–server terminology – the user's terminal being the server and the applications being the clients – often confuses new X users, because the terms appear reversed. But X takes the perspective of the application, rather than that of the end-user: X provides display and I/O services to applications, so it is a server; applications use these services, thus they are clients.

The communication protocol between server and client operates network-transparently: the client and server may run on the same machine or on different ones, possibly with different architectures and operating systems. A client and server can even communicate securely over the Internet by tunneling the connection over an encrypted network session.
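As a rough illustration of how the protocol copes with clients and servers of different architectures, the sketch below builds the fixed 12-byte header that an X11 client sends when it opens a connection: a byte-order marker, the protocol version (11.0), and the lengths of the optional authorization name and data. The function names `pad4` and `build_setup_request` are illustrative only and do not belong to any X library; the layout follows the published X11 protocol encoding, and the code only builds the bytes without contacting a server.

```python
import struct


def pad4(data: bytes) -> bytes:
    """Pad data with zero bytes to a multiple of 4, as X11 encoding requires."""
    return data + b"\x00" * (-len(data) % 4)


def build_setup_request(auth_name: bytes = b"", auth_data: bytes = b"",
                        little_endian: bool = True) -> bytes:
    """Build an X11 connection setup request (illustrative helper).

    The first byte tells the server which byte order the client uses:
    'l' (0x6C) for least-significant byte first, 'B' (0x42) for
    most-significant byte first. The server then interprets all later
    16- and 32-bit values from this client accordingly.
    """
    order = b"l" if little_endian else b"B"
    prefix = "<" if little_endian else ">"
    # byte-order, 1 unused byte, major version, minor version,
    # auth name length, auth data length, 2 unused bytes
    header = struct.pack(prefix + "cxHHHHxx", order, 11, 0,
                         len(auth_name), len(auth_data))
    return header + pad4(auth_name) + pad4(auth_data)


if __name__ == "__main__":
    request = build_setup_request()
    print(len(request), request.hex())
```

Because the client declares its own byte order up front, neither side needs to know the other's hardware in advance.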

An X client itself may emulate an X server by providing display services to other clients. This is known as "X nesting". Open-source clients such as Xnest and Xephyr support such X nesting.

Remote desktop

To run an X client application on a remote machine, the user may do the following:

- on the local machine, open a terminal window

- use the ssh -X command to connect to the remote machine

- request a local display/input service (e.g., export DISPLAY=[user's machine]:0 if not using SSH with X forwarding enabled)

The remote X client application will then make a connection to the user's local X server, providing display and input to the user.
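The DISPLAY value used in the steps above conventionally has the form host:display.screen. The following sketch, which is not taken from any X library, shows how a client might interpret such a value. It assumes the common conventions that an empty host means a local Unix domain socket (the path /tmp/.X11-unix/X<n> is typical on Linux but is an assumption here) and that TCP display n listens on port 6000 + n. The names `Display`, `parse_display` and `endpoint` are hypothetical, and IPv6 literals and other address families are deliberately not handled.

```python
import re
from typing import NamedTuple


class Display(NamedTuple):
    host: str     # empty string means "this machine"
    number: int   # display number (one X server instance)
    screen: int   # screen within that display, default 0


_DISPLAY_RE = re.compile(
    r"^(?P<host>[^:]*):(?P<number>\d+)(?:\.(?P<screen>\d+))?$"
)


def parse_display(value: str) -> Display:
    """Parse a DISPLAY string such as ':0', 'localhost:10.0' or 'ws1:0'."""
    match = _DISPLAY_RE.match(value)
    if match is None:
        raise ValueError(f"unrecognized DISPLAY value: {value!r}")
    return Display(match["host"], int(match["number"]),
                   int(match["screen"] or 0))


def endpoint(display: Display) -> str:
    """Describe where a client would connect (assumed conventions)."""
    if display.host == "":
        return f"unix:/tmp/.X11-unix/X{display.number}"
    return f"tcp:{display.host}:{6000 + display.number}"


if __name__ == "__main__":
    for value in (":0", "localhost:10.0", "ws1:0.1"):
        print(value, "->", endpoint(parse_display(value)))
```

With SSH X forwarding, the remote shell usually sees a value like localhost:10.0, which points at a listener that SSH creates on the remote side and tunnels back to the user's local X server.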

Alternatively, the local machine may run a small program that connects to the remote machine and starts the client application.

Practical examples of remote clients include:

- administering a remote machine graphically (similar to using remote desktop, but with single windows)

- using a client application to join with large numbers of other terminal users in collaborative workgroups

- running a computationally intensive simulation on a remote machine and displaying the results on a local desktop machine

- running graphical software on several machines at once, controlled by a single display, keyboard and mouse

User interfaces

X primarily defines protocol and graphics primitives – it deliberately contains no specification for application user-interface design, such as button, menu, or window title-bar styles. Instead, application software – such as window managers, GUI widget toolkits and desktop environments, or application-specific graphical user interfaces – defines and provides such details. As a result, there is no typical X interface and several different desktop environments have become popular among users.

A window manager controls the placement and appearance of application windows. The result may be a desktop interface reminiscent of Microsoft Windows or of the Apple Macintosh (examples include GNOME 2, KDE Plasma, Xfce) or one with radically different controls (such as a tiling window manager, like wmii or Ratpoison). Some interfaces such as Sugar or ChromeOS eschew the desktop metaphor altogether, simplifying their interfaces for specialized applications. Window managers range in sophistication and complexity from the bare-bones (e.g., twm, the basic window manager supplied with X, or evilwm, an extremely light window manager) to the more comprehensive desktop environments such as Enlightenment and even to application-specific window managers for vertical markets such as point-of-sale.

Many users use X with a desktop environment, which, aside from the window manager, includes various applications using a consistent user interface. Popular desktop environments include GNOME, KDE Plasma and Xfce. The UNIX 98 standard environment is the Common Desktop Environment (CDE). The freedesktop.org initiative addresses interoperability between desktops and the components needed for a competitive X desktop.

Implementations

The X.Org implementation is the canonical implementation of X. Owing to liberal licensing, a number of variations, both free and open source and proprietary, have appeared. Commercial Unix vendors have tended to take the reference implementation and adapt it for their hardware, usually customizing it and adding proprietary extensions.

Until 2004, XFree86 provided the most common X variant on free Unix-like systems. XFree86 started as a port of X to 386-compatible PCs and, by the end of the 1990s, had become the greatest source of technical innovation in X and the de facto standard of X development. Since 2004, however, the X.Org Server, a fork of XFree86, has become predominant.

While it is common to associate X with Unix, X servers also exist natively within other graphical environments. VMS Software Inc.'s OpenVMS operating system includes a version of X with Common Desktop Environment (CDE), known as DECwindows, as its standard desktop environment. Apple originally ported X to macOS in the form of X11.app, but that has been deprecated in favor of the XQuartz implementation. Third-party servers under Apple's older operating systems in the 1990s, System 7, and Mac OS 8 and 9, included Apple's MacX and White Pine Software's eXodus.

Microsoft Windows is not shipped with support for X, but many third-party implementations exist, as free and open source software such as Cygwin/X, and proprietary products such as Exceed, MKS X/Server, Reflection X, X-Win32 and Xming.

There are also Java implementations of X servers. WeirdX runs on any platform supporting Swing 1.1, and will run as an applet within most browsers. The Android X Server is an open source Java implementation that runs on Android devices.

When an operating system with a native windowing system hosts X in addition, the X system can either use its own normal desktop in a separate host window or it can run rootless, meaning the X desktop is hidden and the host windowing environment manages the geometry and appearance of the hosted X windows within the host screen.

X terminals

An X terminal is a thin client that only runs an X server. This architecture became popular for building inexpensive terminal parks for many users to simultaneously use the same large computer server to execute application programs as clients of each user's X terminal. This use is very much aligned with the original intention of the MIT project.

X terminals explore the network (the local broadcast domain) using the X Display Manager Control Protocol to generate a list of available hosts that are allowed as clients. One of the client hosts should run an X display manager.

A limitation of X terminals and most thin clients is that they are not capable of any input or output other than the keyboard, mouse, and display. All relevant data is assumed to exist solely on the remote server, and the X terminal user has no methods available to save or load data from a local peripheral device.

Dedicated (hardware) X terminals have fallen out of use; a PC or modern thin client with an X server typically provides the same functionality at the same, or lower, cost.

Limitations and criticism

The Unix-Haters Handbook (1994) devoted a full chapter to the problems of X. Why X Is Not Our Ideal Window System (1990) by Gajewska, Manasse and McCormack detailed problems in the protocol with recommendations for improvement.

User interface issues

The lack of design guidelines in X has resulted in several vastly different interfaces, and in applications that have not always worked well together. The Inter-Client Communication Conventions Manual (ICCCM), a specification for client interoperability, has a reputation for being difficult to implement correctly. Further standards efforts such as Motif and CDE did not alleviate problems. This has frustrated users and programmers. Graphics programmers now generally address consistency of application look and feel and communication by coding to a specific desktop environment or to a specific widget toolkit, which also avoids having to deal directly with the ICCCM.

X also lacks native support for user-defined stored procedures on the X server, in the manner of NeWS – there is no Turing-complete scripting facility. Various desktop environments may thus offer their own (usually mutually incompatible) facilities.

Computer accessibility related issues

Systems built upon X may have accessibility issues that make utilization of a computer difficult for disabled users, including right click, double click, middle click, mouse-over, and focus stealing. Some X11 clients deal with accessibility issues better than others, so persons with accessibility problems are not locked out of using X11. However, there is no accessibility standard or accessibility guidelines for X11. Within the X11 standards process there is no working group on accessibility; however, accessibility needs are being addressed by software projects to provide these features on top of X.

The Orca project adds accessibility support to the X Window System, including implementing an API (AT-SPI). This is coupled with GNOME's ATK to allow for accessibility features to be implemented in X programs using the GNOME/GTK APIs. KDE provides a different set of accessibility software, including a text-to-speech converter and a screen magnifier. The other major desktops (LXDE, Xfce and Enlightenment) attempt to be compatible with ATK.

Network

An X client cannot generally be detached from one server and reattached to another unless its code specifically provides for it (Emacs is one of the few common programs with this ability). As such, moving an entire session from one X server to another is generally not possible. However, approaches like Virtual Network Computing (VNC), NX and Xpra allow a virtual session to be reached from different X servers (in a manner similar to GNU Screen in relation to terminals), and other applications and toolkits provide related facilities. Workarounds like x11vnc (VNC :0 viewers), Xpra's shadow mode and NX's nxagent shadow mode also exist to make the current X-server screen available. This ability allows the user interface (mouse, keyboard, monitor) of a running application to be switched from one location to another without stopping and restarting the application.

Network traffic between an X server and remote X clients is not encrypted by default. An attacker with a packet sniffer can intercept it, making it possible to view anything displayed to or sent from the user's screen. The most common way to encrypt X traffic is to establish a Secure Shell (SSH) tunnel for communication.

As with all thin clients, bandwidth limitations can impede the use of bitmap-intensive applications when X is used across a network, particularly applications that require rapidly updating large portions of the screen with low latency, such as 3D animation or photo editing. Even a relatively small uncompressed 640×480×24 bit 30 fps video stream (~221 Mbit/s) can easily outstrip the bandwidth of a 100 Mbit/s network for a single client. In contrast, modern versions of X generally have extensions such as Mesa allowing local display of a local program's graphics to be optimized to bypass the network model and directly control the video card, for use of full-screen video, rendered 3D applications, and other such applications.
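The bandwidth figure for the uncompressed video stream can be checked with a short calculation. The sketch below multiplies width, height, bits per pixel and frame rate; the function name `raw_video_bitrate` is illustrative. The result is about 221 Mbit/s in decimal units, or about 211 Mibit/s in binary units, and either way exceeds a 100 Mbit/s link by more than a factor of two.

```python
def raw_video_bitrate(width: int, height: int,
                      bits_per_pixel: int, fps: int) -> int:
    """Return the bitrate in bits per second of an uncompressed stream."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    rate = raw_video_bitrate(640, 480, 24, 30)
    print(f"{rate} bit/s")
    print(f"{rate / 1e6:.1f} Mbit/s (decimal)")
    print(f"{rate / 2**20:.1f} Mibit/s (binary)")
    print(f"{rate / 100e6:.2f}x a 100 Mbit/s link")
```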

Client–server separation

X's design requires the clients and server to operate separately, and device independence and the separation of client and server incur overhead. Most of the overhead comes from network round-trip delay time between client and server (latency) rather than from the protocol itself: the best solutions to performance issues depend on efficient application design. A common criticism of X is that its network features result in excessive complexity and decreased performance if only used locally.
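To see why round-trip latency rather than protocol size tends to dominate, consider a client that waits for a reply after every request. The sketch below is a back-of-the-envelope model, not a measurement: the round-trip times and request counts are made-up figures chosen for illustration, and `total_wait_us` is a hypothetical helper.

```python
def total_wait_us(round_trips: int, rtt_us: int) -> int:
    """Time spent purely waiting on the network, in microseconds."""
    return round_trips * rtt_us


# Assumed figures, for illustration only.
LOCAL_RTT_US = 100        # same-host connection
REMOTE_RTT_US = 20_000    # wide-area connection (20 ms)
CHATTY_ROUND_TRIPS = 200  # client blocks on a reply after every request
BATCHED_ROUND_TRIPS = 5   # client sends requests in bulk, rarely waits

if __name__ == "__main__":
    for label, rtt in (("local", LOCAL_RTT_US), ("remote", REMOTE_RTT_US)):
        chatty = total_wait_us(CHATTY_ROUND_TRIPS, rtt)
        batched = total_wait_us(BATCHED_ROUND_TRIPS, rtt)
        print(f"{label}: chatty {chatty / 1000:.1f} ms, "
              f"batched {batched / 1000:.1f} ms")
```

Under these assumptions the chatty client spends 4 seconds waiting on the remote link while the batched one spends 0.1 seconds, which is why application design that avoids synchronous replies matters more than shaving bytes off individual requests.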

Modern X implementations use Unix domain sockets for efficient connections on the same host. Additionally shared memory (via the MIT-SHM extension) can be employed for faster client–server communication. However, the programmer must still explicitly activate and use the shared memory extension. It is also necessary to provide fallback paths in order to stay compatible with older implementations, and in order to communicate with non-local X servers.

Competitors

Some people have attempted writing alternatives to and replacements for X. Historical alternatives include Sun's NeWS and NeXT's Display PostScript, both PostScript-based systems supporting user-definable display-side procedures, which X lacked. Current alternatives include:

- macOS (and its mobile counterpart, iOS) implements its own windowing system, known as Quartz. When Apple Computer bought NeXT and used NeXTSTEP to construct Mac OS X, it replaced Display PostScript with Quartz. Mike Paquette, one of the authors of Quartz, explained that if Apple had added support for all the features it wanted to include into X11, the result would not bear much resemblance to X11 nor be compatible with other servers anyway.

- Wayland is being developed by several X.Org developers as a prospective replacement for X. It works directly with the GPU hardware, via DRI. Wayland can run an X server as a Wayland compositor, which can be rootless. The project reached version 1.0 in 2012. Like Android, Wayland is EGL-based.

- Mir was a project from Canonical Ltd. with goals similar to Wayland. Mir was intended to work with mobile devices using ARM chipsets (a stated goal was compatibility with Android device-drivers) as well as x86 desktops. Like Android, Mir/UnityNext were EGL-based. Backwards compatibility with X client-applications was accomplished via Xmir. The project has since moved to being a Wayland compositor instead of being an alternative display server.

- Other alternatives attempt to avoid the overhead of X by working directly with the hardware; such projects include DirectFB. The Direct Rendering Infrastructure (DRI) provides a kernel-level interface to the framebuffer.

Additional ways to achieve a functional form of the "network transparency" feature of X, via network transmissibility of graphical services, include:

- Virtual Network Computing (VNC), a very low-level system which sends compressed bitmaps across the network; the Unix implementation includes an X server

- Remote Desktop Protocol (RDP), which is similar to VNC in purpose, but originated on Microsoft Windows before being ported to Unix-like systems, e.g. NX

- Citrix XenApp, an X-like protocol and application stack for Microsoft Windows

- Tarantella, which provides a Java-based remote-gui-client for use in web browsers

History

Predecessors

Several bitmap display systems preceded X. From Xerox came the Alto (1973) and the Star (1981). From Apollo Computer came Display Manager (1981). From Apple came the Lisa (1983) and the Macintosh (1984). The Unix world had the Andrew Project (1982) and Rob Pike's Blit terminal (1982).

Carnegie Mellon University produced a remote-access application called Alto Terminal, which displayed overlapping windows on the Xerox Alto and made remote hosts (typically DEC VAX systems running Unix) responsible for handling window-exposure events and refreshing window contents as necessary.

X derives its name as a successor to a pre-1983 window system called W (the letter preceding X in the English alphabet). W ran under the V operating system. W used a network protocol supporting terminal and graphics windows, the server maintaining display lists.

Origin and early development

From: rws@mit-bold (Robert W. Scheifler)

To: window@athena

Subject: window system X

Date: 19 June 1984 0907-EDT (Tuesday)

I've spent the last couple weeks writing a window system for the VS100. I stole a fair amount of code from W, surrounded it with an asynchronous rather than a synchronous interface, and called it X. Overall performance appears to be about twice that of W. The code seems fairly solid at this point, although there are still some deficiencies to be fixed up.

We at LCS have stopped using W, and are now actively building applications on X. Anyone else using W should seriously consider switching. This is not the ultimate window system, but I believe it is a good starting point for experimentation. Right at the moment there is a CLU (and an Argus) interface to X; a C interface is in the works. The three existing applications are a text editor (TED), an Argus I/O interface, and a primitive window manager. There is no documentation yet; anyone crazy enough to volunteer? I may get around to it eventually.

Anyone interested in seeing a demo can drop by NE43-531, although you may want to call 3-1945 first. Anyone who wants the code can come by with a tape. Anyone interested in hacking deficiencies, feel free to get in touch.

The original idea of X emerged at MIT in 1984 as a collaboration between Jim Gettys (of Project Athena) and Bob Scheifler (of the MIT Laboratory for Computer Science). Scheifler needed a usable display environment for debugging the Argus system. Project Athena (a joint project between DEC, MIT and IBM to provide easy access to computing resources for all students) needed a platform-independent graphics system to link together its heterogeneous multiple-vendor systems; the window system then under development in Carnegie Mellon University's Andrew Project did not make licenses available, and no alternatives existed.

The project solved this by creating a protocol that could both run local applications and call on remote resources. In mid-1983 an initial port of W to Unix ran at one-fifth of its speed under V; in May 1984, Scheifler replaced the synchronous protocol of W with an asynchronous protocol and the display lists with immediate mode graphics to make X version 1. X became the first windowing system environment to offer true hardware independence and vendor independence.

Scheifler, Gettys and Ron Newman set to work and X progressed rapidly. They released Version 6 in January 1985. DEC, then preparing to release its first Ultrix workstation, judged X the only windowing system likely to become available in time. DEC engineers ported X6 to DEC's QVSS display on MicroVAX.

In the second quarter of 1985, X acquired color support to function in the DEC VAXstation-II/GPX, forming what became version 9.

A group at Brown University ported version 9 to the IBM RT PC, but problems with reading unaligned data on the RT forced an incompatible protocol change, leading to version 10 in late 1985. X10R1 was released in 1985. By 1986, outside organizations had begun asking for X. X10R2 was released in January 1986, then X10R3 in February 1986. Although MIT had licensed X6 to some outside groups for a fee, it decided at this time to license X10R3 and future versions under what became known as the MIT License, intending to popularize X further and, in return, hoping that many more applications would become available. X10R3 became the first version to achieve wide deployment, with both DEC and Hewlett-Packard releasing products based on it. Other groups ported X10 to Apollo and to Sun workstations and even to the IBM PC/AT. Demonstrations of the first commercial application for X (a mechanical computer-aided engineering system from Cognition Inc. that ran on VAXes and remotely displayed on PCs running an X server ported by Jim Fulton and Jan Hardenbergh) took place at the Autofact trade show at that time. The last version of X10, X10R4, appeared in December 1986. Attempts were made to enable X servers as real-time collaboration devices, much as Virtual Network Computing (VNC) would later allow a desktop to be shared. One such early effort was Philip J. Gust's SharedX tool.

Although X10 offered interesting and powerful functionality, it had become obvious that the X protocol could use a more hardware-neutral redesign before it became too widely deployed, but MIT alone would not have the resources available for such a complete redesign. As it happened, DEC's Western Software Laboratory found itself between projects with an experienced team. Smokey Wallace of DEC WSL and Jim Gettys proposed that DEC WSL build X11 and make it freely available under the same terms as X9 and X10. This process started in May 1986, with the protocol finalized in August. Alpha testing of the software started in February 1987, beta-testing in May; the release of X11 finally occurred on 15 September 1987.

The X11 protocol design, led by Scheifler, was extensively discussed on open mailing lists on the nascent Internet that were bridged to USENET newsgroups. Gettys moved to California to help lead the X11 development work at WSL from DEC's Systems Research Center, where Phil Karlton and Susan Angebrandt led the X11 sample server design and implementation. X therefore represents one of the first very large-scale distributed free and open source software projects.

The MIT X Consortium and the X Consortium, Inc.

By the late 1980s X was, Simson Garfinkel wrote in 1989, "Athena's most important single achievement to date". DEC reportedly believed that its development alone had made the company's donation to MIT worthwhile. Gettys joined the design team for the VAXstation 2000 to ensure that X—which DEC called DECwindows—would run on it, and the company assigned 1,200 employees to port X to both Ultrix and VMS. In 1987, with the success of X11 becoming apparent, MIT wished to relinquish the stewardship of X, but at a June 1987 meeting with nine vendors, the vendors told MIT that they believed in the need for a neutral party to keep X from fragmenting in the marketplace. In January 1988, the MIT X Consortium formed as a non-profit vendor group, with Scheifler as director, to direct the future development of X in a neutral atmosphere inclusive of commercial and educational interests.

Jim Fulton joined in January 1988 and Keith Packard in March 1988 as senior developers, with Jim focusing on Xlib, fonts, window managers, and utilities; and Keith re-implementing the server. Donna Converse, Chris D. Peterson, and Stephen Gildea joined later that year, focusing on toolkits and widget sets, working closely with Ralph Swick of MIT Project Athena. The MIT X Consortium produced several significant revisions to X11, the first (Release 2 – X11R2) in February 1988. Jay Hersh joined the staff in January 1991 to work on the PEX and X113D functionality. He was followed soon after by Ralph Mor (who also worked on PEX) and Dave Sternlicht. In 1993, as the MIT X Consortium prepared to depart from MIT, the staff were joined by R. Gary Cutbill, Kaleb Keithley, and David Wiggins.

In 1993, the X Consortium, Inc. (a non-profit corporation) formed as the successor to the MIT X Consortium. It released X11R6 on 16 May 1994. In 1995 it took on the development of the Motif toolkit and of the Common Desktop Environment for Unix systems. The X Consortium dissolved at the end of 1996, producing a final revision, X11R6.3, and a legacy of increasing commercial influence in the development.

The Open Group

In January 1997, the X Consortium passed stewardship of X to The Open Group, a vendor group formed in early 1996 by the merger of the Open Software Foundation and X/Open.

The Open Group released X11R6.4 in early 1998. Controversially, X11R6.4 departed from the traditional liberal licensing terms, as the Open Group sought to assure funding for the development of X, and specifically cited XFree86 as not significantly contributing to X. The new terms would have made X no longer free software: zero-cost for noncommercial use, but a fee otherwise. After XFree86 seemed poised to fork, the Open Group relicensed X11R6.4 under the traditional license in September 1998. The Open Group's last release came as X11R6.4 patch 3.

X.Org and XFree86

XFree86 originated in 1992 from the X386 server for IBM PC compatibles included with X11R5 in 1991, written by Thomas Roell and Mark W. Snitily and donated to the MIT X Consortium by Snitily Graphics Consulting Services (SGCS). XFree86 evolved over time from just one port of X to the leading and most popular implementation and the de facto standard of X's development.

In May 1999, The Open Group formed X.Org. X.Org supervised the release of versions X11R6.5.1 onward. X development at this time had become moribund; most technical innovation since the X Consortium had dissolved had taken place in the XFree86 project. In 1999, the XFree86 team joined X.Org as an honorary (non-paying) member, encouraged by various hardware companies interested in using XFree86 with Linux and in its status as the most popular version of X.

By 2003, while the popularity of Linux (and hence the installed base of X) surged, X.Org remained inactive, and active development took place largely within XFree86. However, considerable dissent developed within XFree86. The XFree86 project suffered from a perception of a far too cathedral-like development model; developers could not get CVS commit access and vendors had to maintain extensive patch sets. In March 2003, the XFree86 organization expelled Keith Packard, who had joined XFree86 after the end of the original MIT X Consortium, with considerable ill feeling.

X.Org and XFree86 began discussing a reorganisation suited to properly nurturing the development of X. Jim Gettys had been pushing strongly for an open development model since at least 2000. Gettys, Packard and several others began discussing in detail the requirements for the effective governance of X with open development.

Finally, in an echo of the X11R6.4 licensing dispute, XFree86 released version 4.4 in February 2004 under a more restrictive license which many projects relying on X found unacceptable. The added clause to the license was based on the original BSD license's advertising clause, which was viewed by the Free Software Foundation and Debian as incompatible with the GNU General Public License. Other groups saw it as against the spirit of the original X. Theo de Raadt of OpenBSD, for instance, threatened to fork XFree86 citing license concerns. The license issue, combined with the difficulties in getting changes in, left many feeling the time was ripe for a fork.

The X.Org Foundation

In early 2004, various people from X.Org and freedesktop.org formed the X.Org Foundation, and the Open Group gave it control of the x.org domain name.

This marked a radical change in the governance of X. Whereas the stewards of X since 1988 (including the prior X.Org) had been vendor organizations, the Foundation was led by software developers and used community development based on the bazaar model, which relies on outside involvement. Membership was opened to individuals, with corporate membership being in the form of sponsorship. Several major corporations such as Hewlett-Packard currently support the X.Org Foundation.

The Foundation takes an oversight role over X development: technical decisions are made on their merits by achieving rough consensus among community members. Technical decisions are not made by the board of directors; in this sense, it is strongly modelled on the technically non-interventionist GNOME Foundation. The Foundation employs no developers. The Foundation released X11R6.7, the X.Org Server, in April 2004, based on XFree86 4.4RC2 with X11R6.6 changes merged. Gettys and Packard had taken the last version of XFree86 under the old license and, by making a point of an open development model and retaining GPL compatibility, brought many of the old XFree86 developers on board.

While X11 had received extensions such as OpenGL support during the 1990s, its architecture had remained fundamentally unchanged during the decade. In the early part of the 2000s, however, it was overhauled to resolve a number of problems that had surfaced over the years, including a "flawed" font architecture, a 2D graphics system "which had always been intended to be augmented and/or replaced", and latency issues. X11R6.8 came out in September 2004. It added significant new features, including preliminary support for translucent windows and other sophisticated visual effects, screen magnifiers and thumbnailers, and facilities to integrate with 3D immersive display systems such as Sun's Project Looking Glass and the Croquet project. External applications called compositing window managers provide policy for the visual appearance.

On 21 December 2005, X.Org released X11R6.9, the monolithic source tree for legacy users, and X11R7.0, the same source code separated into independent modules, each maintainable in separate projects. The Foundation released X11R7.1 on 22 May 2006, about five months after 7.0, with considerable feature improvements.

XFree86 development continued for a few more years, 4.8.0 being released on 15 December 2008.

Nomenclature

The proper names for the system are listed in the manual page as X; X Window System; X Version 11; X Window System, Version 11; or X11.

The term "X-Windows" (in the manner of the subsequently released "Microsoft Windows") is not officially endorsed – with X Consortium release manager Matt Landau stating in 1993, "There is no such thing as 'X Windows' or 'X Window', despite the repeated misuse of the forms by the trade rags" – though it has been in common informal use since early in the history of X and has been used deliberately for provocative effect, for example in the Unix-Haters Handbook.

Key terms

The X Window System has nuanced usage of a number of terms when compared to common usage, particularly "display" and "screen", a subset of which is given here for convenience:

- device
  - A graphics device such as a computer graphics card or a computer motherboard's integrated graphics chipset.
- monitor
  - A physical device such as a CRT or a flat screen computer display.
- screen
  - An area into which graphics may be rendered, either through software alone into system memory as with VNC, or within a graphics device, some of which can render into more than one screen simultaneously, either viewable simultaneously or interchangeably. Interchangeable screens are often set up to be notionally left and right from one another, flipping from one to the next as the mouse pointer reaches the edge of the monitor.
- virtual screen
  - Two different meanings are associated with this term:
    - A technique allowing panning a monitor around a screen running at a larger resolution than the monitor is currently displaying.
    - An effect simulated by a window manager by maintaining window position information in a larger coordinate system than the screen and allowing panning by simply moving the windows in response to the user.
- display
  - A collection of screens, often involving multiple monitors, generally configured to allow the mouse to move the pointer to any position within them. Linux-based workstations are usually capable of having multiple displays, among which the user can switch with a special keyboard combination such as control-alt-function-key, simultaneously flipping all the monitors from showing the screens of one display to the screens in another.

The term "display" should not be confused with the more specialized jargon "Zaphod display". The latter is a rare configuration allowing multiple users of a single computer to each have an independent set of display, mouse, and keyboard, as though they were using separate computers, but at a lower per-seat cost.
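The distinction between a display and a screen also shows up in X display names, which are conventionally written `hostname:displaynumber.screennumber` (for example in the `DISPLAY` environment variable). The following is a minimal Python sketch of how such a name splits into those parts. The `DisplayName` type and `parse_display_name` function are hypothetical names introduced here for illustration and are not part of any X library, and the sketch assumes a simplified form of the syntax: it does not handle host parts that themselves contain colons, such as IPv6 literals or DECnet-style names.

```python
import re
from typing import NamedTuple


class DisplayName(NamedTuple):
    """Illustrative container; not part of any X library."""
    host: str     # empty string means the local machine
    display: int  # which display (a collection of screens) on that host
    screen: int   # which screen within that display; defaults to 0


# Simplified pattern: optional host, a colon, the display number,
# and an optional ".screen" suffix.
_DISPLAY_RE = re.compile(r"^(?P<host>[^:]*):(?P<display>\d+)(?:\.(?P<screen>\d+))?$")


def parse_display_name(name: str) -> DisplayName:
    """Split a name of the form host:display[.screen] into its parts."""
    match = _DISPLAY_RE.match(name)
    if match is None:
        raise ValueError(f"not a host:display[.screen] name: {name!r}")
    # A missing screen number is treated as screen 0.
    screen = int(match["screen"] or 0)
    return DisplayName(match["host"], int(match["display"]), screen)
```

Under these assumptions, `parse_display_name(":0")` refers to screen 0 of display 0 on the local machine, while `parse_display_name("remote.example.org:10.1")` refers to screen 1 of display 10 on the hypothetical host `remote.example.org`.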

Release history

| Version | Release date | Most important changes |

|---|---|---|

| X1 | June 1984 | First use of the name "X"; fundamental changes distinguishing the product from W. |

| X6 | January 1985 | First version licensed to a handful of outside companies. |

| X9 | September 1985 | Color. First release under MIT License. |

| X10 | November 1985 | IBM RT PC, AT (running DOS), and others. |

| X10R2 | January 1986 | |

| X10R3 | February 1986 | First freely redistributable X release. Earlier releases required a BSD source license to cover code changes to init/getty to support login. uwm made standard window manager. |

| X10R4 | December 1986 | Last version of X10. |

| X11 | 15 September 1987 | First release of the current protocol. |

| X11R2 | February 1988 | First X Consortium release. |

| X11R3 | 25 October 1988 | XDM. |

| X11R4 | 22 December 1989 | XDMCP, twm (from the beginning known as Tom's window manager) brought in as standard window manager, application improvements, shape extension, new fonts. |

| X11R5 | 5 September 1991 | X386 1.2, PEX, Xcms (color management), font server, X video extension. |

| X11R6 | 16 May 1994 | ICCCM v2.0; Inter-Client Exchange; X Session Management; X Synchronization extension; X Image extension; XTEST extension; X Input; X Big Requests; XC-MISC; XFree86 changes. |

| X11R6.1 | 14 March 1996 | X Double Buffer extension; X keyboard extension; X Record extension. |

| X11R6.2 X11R6.3 | 23 December 1996 | Web functionality, LBX. Last X Consortium release. X11R6.2 is the tag for a subset of X11R6.3 (Broadway) with the only new features over R6.1 being XPrint and the Xlib implementation of vertical writing and user-defined character support. Broadway was the code name for running X applications on a web browser via a browser plugin and Low Bandwidth X. |

| X11R6.4 | 31 March 1998 | Xinerama. |

| X11R6.5 | 2000 | Internal X.org release; not made publicly available. |

| X11R6.5.1 | 20 August 2000 | |

| X11R6.6 | 4 April 2001 | Bug fixes, XFree86 changes. |

| X11R6.7.0 | 6 April 2004 | First X.Org Foundation release, incorporating XFree86 4.4rc2. Full end-user distribution. Removal of XIE, PEX and libxml2. |

| X11R6.8.0 | 8 September 2004 | Window translucency, XDamage, Distributed Multihead X, XFixes, Composite, XEvIE. |

| X11R6.8.1 | 17 September 2004 | Security fix in libxpm. |

| X11R6.8.2 | 10 February 2005 | Bug fixes, driver updates. |

| X11R6.9 X11R7.0 | 21 December 2005 | XServer 1.0.1, EXA, major source code refactoring. From the same source-code base, the modular autotooled version became 7.0 and the monolithic imake version was frozen at 6.9. |

| X11R7.1 | 22 May 2006 | XServer 1.1.0, EXA enhancements, KDrive integrated, AIGLX, OS and platform support enhancements. |

| X11R7.2 | 15 February 2007 | XServer 1.2.0, Removal of LBX and the built-in keyboard driver, X-ACE, XCB, autoconfig improvements, cleanups. |

| X11R7.3 | 6 September 2007 | XServer 1.4.0, Input hotplug, output hotplug (RandR 1.2), DTrace probes, PCI domain support. |

| X11R7.4 | 23 September 2008 | XServer 1.5.1, XACE, PCI-rework, EXA speed-ups, _X_EXPORT, GLX 1.4, faster startup and shutdown. |

| X11R7.5 | 26 October 2009 | XServer 1.7.1, Xi 2, XGE, E-EDID support, RandR 1.3, MPX, predictable pointer acceleration, DRI2 memory manager, SELinux security module, further removal of obsolete libraries and extensions. |

| X11R7.6 | 20 December 2010 | X Server 1.9.3, XCB requirement. |

| X11R7.7 | 6 June 2012 | X Server 1.12.2; Sync extension 3.1: adds Fence object support; Xi 2.2 multitouch support; XFixes 5.0: Pointer Barriers. |


On the prospect of future versions, the X.org website states:

X.Org continues to develop and release the X Window System software components.

These are released individually as each component is ready, without waiting for a overall X Window System "katamari" release schedule - see the individual X.Org releases directory for downloads, and the xorg-announce archives or git repositories for details on included changes.

No release plan for a X11R7.8 rollup katamari release has been proposed.