Up to 10% of invasive cancers are related to radiation exposure, including both ionizing radiation and non-ionizing radiation. Additionally, the vast majority of non-invasive cancers are non-melanoma skin cancers caused by non-ionizing ultraviolet radiation.

Ultraviolet's position on the electromagnetic spectrum is on the boundary between ionizing and non-ionizing radiation. Non-ionizing radio frequency radiation from mobile phones, electric power transmission, and other similar sources has been described as a possible carcinogen by the World Health Organization's International Agency for Research on Cancer, but the link remains unproven.

Exposure to ionizing radiation is known to increase the future incidence of cancer, particularly leukemia. The mechanism by which this occurs is well understood, but quantitative models predicting the level of risk remain controversial. The most widely accepted model posits that the incidence of cancers due to ionizing radiation increases linearly with effective radiation dose at a rate of 5.5% per sievert. If the linear model is correct, then natural background radiation is the most hazardous source of radiation to general public health, followed by medical imaging as a close second.

Causes

According to the prevalent model, any radiation exposure can increase the risk of cancer. Typical contributors to such risk include natural background radiation, medical procedures, occupational exposures, nuclear accidents, and many others. Some major contributors are discussed below.

Radon

Radon is responsible for the worldwide majority of the mean public exposure to ionizing radiation. It is often the single largest contributor to an individual's background radiation dose, and is the most variable from location to location. Radon gas from natural sources can accumulate in buildings, especially in confined areas such as attics and basements. It can also be found in some spring waters and hot springs.

Epidemiological evidence shows a clear link between lung cancer and high concentrations of radon, with 21,000 radon-induced U.S. lung cancer deaths per year, second only to cigarette smoking, according to the United States Environmental Protection Agency. Thus, in geographic areas where radon is present in heightened concentrations, radon is considered a significant indoor air contaminant.

Residential exposure to radon gas carries cancer risks similar to those of passive smoking. Radiation is a more potent source of cancer when it is combined with other cancer-causing agents, such as radon gas exposure plus tobacco smoking.

Medical

In industrialized countries, medical imaging contributes almost as much radiation dose to the public as natural background radiation. Collective dose to Americans from medical imaging grew by a factor of six from 1990 to 2006, mostly due to growing use of 3D scans that impart much more dose per procedure than traditional radiographs. CT scans alone, which account for half the medical imaging dose to the public, are estimated to be responsible for 0.4% of current cancers in the United States, and this may increase to as high as 1.5–2% with 2007 rates of CT usage; however, this estimate is disputed. Nuclear medicine techniques involve the injection of radioactive pharmaceuticals directly into the bloodstream, and radiotherapy treatments deliberately deliver lethal doses (on a cellular level) to tumors and surrounding tissues.

It has been estimated that CT scans performed in the US in 2007 alone will result in 29,000 new cancer cases in future years. This estimate is criticized by the American College of Radiology (ACR), which maintains that the life expectancy of CT-scanned patients is not that of the general population and that the model used to calculate cancer risk is based on total-body radiation exposure and is thus faulty.

Occupational

In accordance with ICRP recommendations, most regulators permit nuclear energy workers to receive up to 20 times more radiation dose than is permitted for the general public. Higher doses are usually permitted when responding to an emergency. The majority of workers are routinely kept well within regulatory limits, while a few essential technicians will routinely approach their maximum each year. Accidental overexposures beyond regulatory limits happen globally several times a year. Astronauts on long missions are at higher risk of cancer (see cancer and spaceflight).

Some occupations are exposed to radiation without being classed as nuclear energy workers. Airline crews receive occupational exposures from cosmic radiation because of reduced atmospheric shielding at altitude. Mine workers receive occupational exposures to radon, especially in uranium mines. Anyone working in a granite building, such as the US Capitol, is likely to receive a dose from natural uranium in the granite.

Accidental

Chernobyl radiation map from 1996

Nuclear accidents can have dramatic consequences to their

surroundings, but their global impact on cancer is less than that of

natural and medical exposures.

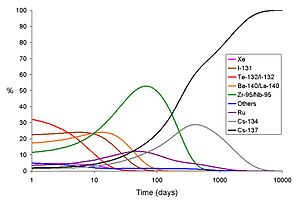

The most severe nuclear accident is probably the Chernobyl disaster. In addition to conventional fatalities and acute radiation syndrome fatalities, nine children died of thyroid cancer, and it is estimated that there may be up to 4,000 excess cancer deaths among the approximately 600,000 most highly exposed people. Of the 100 million curies (4 exabecquerels) of radioactive material that Chernobyl released, the short-lived radioactive isotopes such as 131I were initially the most dangerous. Because of their short half-lives of 5 and 8 days, they have now decayed, leaving the longer-lived 137Cs (with a half-life of 30.07 years) and 90Sr (with a half-life of 28.78 years) as the main dangers.
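The decay argument above follows from the standard exponential decay law, in which the fraction remaining after time t is 0.5 raised to the power t divided by the half-life. The following is a minimal Python sketch of that relation, not a dosimetry tool. The 137Cs and 90Sr half-lives are the ones quoted in the text; the roughly 8-day value for 131I is an assumption here, since the text gives "5 and 8 days" without assigning them to isotopes. The function name `remaining_fraction` is illustrative.

```python
def remaining_fraction(elapsed: float, half_life: float) -> float:
    """Fraction of a radionuclide left after `elapsed` time.

    `elapsed` and `half_life` must be in the same units.
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    if elapsed < 0:
        raise ValueError("elapsed must be non-negative")
    return 0.5 ** (elapsed / half_life)


# Half-lives in years. Cs-137 and Sr-90 are the values quoted in the text;
# the I-131 value (about 8 days) is an assumption made for this sketch.
HALF_LIVES_YEARS = {
    "I-131": 8.0 / 365.25,
    "Cs-137": 30.07,
    "Sr-90": 28.78,
}

if __name__ == "__main__":
    for years in (1.0, 30.0):
        for nuclide, t_half in HALF_LIVES_YEARS.items():
            left = remaining_fraction(years, t_half)
            print(f"{nuclide}: {left:.3g} of initial activity after {years:g} years")
```

After one half-life exactly half of the activity remains, so 137Cs is still at about 50% after roughly 30 years, whereas an isotope with a half-life of days is effectively gone within a year.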

In March 2011, an earthquake and tsunami caused damage that led to explosions and partial meltdowns at the Fukushima I Nuclear Power Plant in Japan. Significant release of radioactive material took place following hydrogen explosions at three reactors, as technicians tried to pump in seawater to keep the uranium fuel rods cool, and bled radioactive gas from the reactors in order to make room for the seawater.

Concerns about the large-scale release of radioactivity resulted in a 20 km exclusion zone being set up around the power plant and people within the 20–30 km zone being advised to stay indoors. On March 24, 2011, Japanese officials announced that "radioactive iodine-131 exceeding safety limits for infants had been detected at 18 water-purification plants in Tokyo and five other prefectures".

Other serious radiation accidents include the Kyshtym disaster (an estimated 49 to 55 cancer deaths) and the Windscale fire (an estimated 33 cancer deaths).

Mechanism

Cancer is a stochastic effect of radiation, meaning that the probability of occurrence increases with effective radiation dose, but the severity of the cancer is independent of dose. The speed at which cancer advances, the prognosis, the degree of pain, and every other feature of the disease are not functions of the radiation dose to which the person is exposed. This contrasts with the deterministic effects of acute radiation syndrome, which increase in severity with dose above a threshold. Cancer starts with a single cell whose operation is disrupted. Normal cell operation is controlled by the chemical structure of DNA molecules, which are packaged into chromosomes.

When radiation deposits enough energy in organic tissue to cause ionization, this tends to break molecular bonds, and thus alter the molecular structure of the irradiated molecules. Less energetic radiation, such as visible light, causes only excitation, not ionization; the absorbed energy is usually dissipated as heat with relatively little chemical damage. Ultraviolet light is usually categorized as non-ionizing, but it is actually in an intermediate range that produces some ionization and chemical damage. Hence the carcinogenic mechanism of ultraviolet radiation is similar to that of ionizing radiation.

Unlike chemical or physical triggers for cancer, penetrating radiation hits molecules within cells randomly. Molecules broken by radiation can become highly reactive free radicals that cause further chemical damage. Some of this direct and indirect damage will eventually impact chromosomes and epigenetic factors that control the expression of genes. Cellular mechanisms will repair some of this damage, but some repairs will be incorrect and some chromosome abnormalities will turn out to be irreversible.

DNA double-strand breaks (DSBs) are generally accepted to be the most biologically significant lesion by which ionizing radiation causes cancer. In vitro experiments show that ionizing radiation causes DSBs at a rate of 35 DSBs per cell per gray and removes a portion of the epigenetic markers of the DNA, which regulate gene expression. Most of the induced DSBs are repaired within 24 hours of exposure; however, 25% of the repaired strands are repaired incorrectly, and about 20% of fibroblast cells that were exposed to 200 mGy died within 4 days of exposure.
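To give a feel for the scale of these figures, the sketch below multiplies them out for a single cell. It is only an illustration under stated assumptions: it treats the quoted in vitro rate of 35 DSBs per cell per gray as scaling linearly with dose, and it applies the 25% misrepair figure to whatever fraction of breaks is assumed to be repaired (the `repaired_fraction` parameter is hypothetical, since the text says only that "most" breaks are repaired). The function names are illustrative.

```python
DSB_PER_CELL_PER_GRAY = 35.0  # in vitro rate quoted in the text
MISREPAIR_FRACTION = 0.25     # share of repaired breaks repaired incorrectly (text)


def expected_dsbs(dose_gray: float) -> float:
    """Mean number of DSBs per cell, assuming linear scaling with dose."""
    if dose_gray < 0:
        raise ValueError("dose_gray must be non-negative")
    return DSB_PER_CELL_PER_GRAY * dose_gray


def expected_misrepairs(dose_gray: float, repaired_fraction: float = 1.0) -> float:
    """Mean number of incorrectly repaired DSBs per cell.

    `repaired_fraction` is a hypothetical parameter: the share of breaks
    that get repaired at all (the text only says "most").
    """
    if not 0.0 <= repaired_fraction <= 1.0:
        raise ValueError("repaired_fraction must be between 0 and 1")
    return expected_dsbs(dose_gray) * repaired_fraction * MISREPAIR_FRACTION


if __name__ == "__main__":
    dose = 0.2  # 200 mGy, the dose used in the fibroblast example
    print(f"Expected DSBs per cell: {expected_dsbs(dose):.2f}")
    print(f"Expected misrepairs per cell (upper bound): {expected_misrepairs(dose):.2f}")
```

Under these assumptions, a 200 mGy exposure corresponds to about 7 breaks per cell, of which fewer than 2 would be misrepaired.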

A portion of the population possesses a flawed DNA repair mechanism, and thus suffers a greater insult due to exposure to radiation.

Major damage normally results in the cell dying or being unable to reproduce. This effect is responsible for acute radiation syndrome, but these heavily damaged cells cannot become cancerous. Lighter damage may leave a stable, partly functional cell that may be capable of proliferating and eventually developing into cancer, especially if tumor suppressor genes are damaged.

The latest research suggests that mutagenic events do not occur immediately after irradiation. Instead, surviving cells appear to have acquired a genomic instability which causes an increased rate of mutations in future generations. The cell will then progress through multiple stages of neoplastic transformation that may culminate in a tumor after years of incubation. The neoplastic transformation can be divided into three major independent stages: morphological changes to the cell, acquisition of cellular immortality (losing normal, life-limiting cell regulatory processes), and adaptations that favor formation of a tumor.

In some cases, a small radiation dose reduces the impact of a subsequent, larger radiation dose. This has been termed an 'adaptive response' and is related to hypothetical mechanisms of hormesis.

A latent period of decades may elapse between radiation exposure and the detection of cancer. Those cancers that may develop as a result of radiation exposure are indistinguishable from those that occur naturally or as a result of exposure to other carcinogens. Furthermore, National Cancer Institute literature indicates that chemical and physical hazards and lifestyle factors, such as smoking, alcohol consumption, and diet, significantly contribute to many of these same diseases. Evidence from uranium miners suggests that smoking may have a multiplicative, rather than additive, interaction with radiation. Evaluations of radiation's contribution to cancer incidence can only be done through large epidemiological studies with thorough data about all other confounding risk factors.

Skin cancer

Prolonged exposure to ultraviolet radiation from the sun can lead to melanoma and other skin malignancies. Clear evidence establishes ultraviolet radiation, especially the non-ionizing medium wave UVB, as the cause of most non-melanoma skin cancers, which are the most common forms of cancer in the world.

Skin cancer may occur after ionizing radiation exposure, following a latent period averaging 20 to 40 years. Chronic radiation keratosis is a precancerous keratotic skin lesion that may arise on the skin many years after exposure to ionizing radiation. Various malignancies may develop, most frequently basal-cell carcinoma followed by squamous-cell carcinoma. Elevated risk is confined to the site of radiation exposure. Several studies have also suggested the possibility of a causal relationship between melanoma and ionizing radiation exposure.

The degree of carcinogenic risk arising from low levels of exposure is more contentious, but the available evidence points to an increased risk that is approximately proportional to the dose received. Radiologists and radiographers were among the earliest occupational groups exposed to radiation. It was the observation of the earliest radiologists that led, in 1902, to the recognition of radiation-induced skin cancer, the first solid cancer linked to radiation.

While the incidence of skin cancer secondary to medical ionizing radiation was higher in the past, there is also some evidence that risks of certain cancers, notably skin cancer, may be increased among more recent medical radiation workers, and this may be related to specific or changing radiologic practices. Available evidence indicates that the excess risk of skin cancer lasts for 45 years or more following irradiation.

Epidemiology

Cancer is a stochastic effect of radiation, meaning that it only has a probability of occurrence, as opposed to deterministic effects, which always happen over a certain dose threshold. The consensus of the nuclear industry, nuclear regulators, and governments is that the incidence of cancers due to ionizing radiation can be modeled as increasing linearly with effective radiation dose at a rate of 5.5% per sievert.

Individual studies, alternate models, and earlier versions of the industry consensus have produced other risk estimates scattered around this consensus model. There is general agreement that the risk is much higher for infants and fetuses than adults, higher for the middle-aged than for seniors, and higher for women than for men, though there is no quantitative consensus about this.

This model is widely accepted for external radiation, but its application to internal contamination is disputed. For example, the model fails to account for the low rates of cancer in early workers at Los Alamos National Laboratory who were exposed to plutonium dust, and the high rates of thyroid cancer in children following the Chernobyl accident, both of which were internal exposure events. The European Committee on Radiation Risk calls the ICRP model "fatally flawed" when it comes to internal exposure.

Radiation can cause cancer in most parts of the body, in all animals, and at any age, although radiation-induced solid tumors usually take 10–15 years, and can take up to 40 years, to become clinically manifest, and radiation-induced leukemias typically require 2–9 years to appear. Some people, such as those with nevoid basal cell carcinoma syndrome or retinoblastoma, are more susceptible than average to developing cancer from radiation exposure. Children and adolescents are twice as likely to develop radiation-induced leukemia as adults; radiation exposure before birth has ten times the effect.

Radiation exposure can cause cancer in any living tissue, but high-dose whole-body external exposure is most closely associated with leukemia, reflecting the high radiosensitivity of bone marrow. Internal exposures tend to cause cancer in the organs where the radioactive material concentrates, so that radon predominantly causes lung cancer and iodine-131 predominantly causes thyroid cancer.

Data sources

Increased Risk of Solid Cancer with Dose for A-bomb survivors

The associations between ionizing radiation exposure and the development of cancer are based primarily on the "LSS cohort" of Japanese atomic bomb survivors, the largest human population ever exposed to high levels of ionizing radiation. However, this cohort was also exposed to high heat, both from the initial nuclear flash of infrared light and, following the blast, from the firestorm and general fires that developed in both cities, so the survivors also underwent hyperthermia to various degrees. Hyperthermia, or heat exposure following irradiation, is well known in the field of radiation therapy to markedly increase the severity of free-radical insults to cells. At present, however, no attempt has been made to account for this confounding factor; it is not included or corrected for in the dose-response curves for this group.

Additional data has been collected from recipients of selected medical procedures and the 1986 Chernobyl disaster. There is a clear link (see the UNSCEAR 2000 Report, Volume 2: Effects) between the Chernobyl accident and the unusually large number, approximately 1,800, of thyroid cancers reported in contaminated areas, mostly in children.

For low levels of radiation, the biological effects are so small they may not be detected in epidemiological studies. Although radiation may cause cancer at high doses and high dose rates, public health data regarding lower levels of exposure, below about 10 mSv (1,000 mrem), are harder to interpret. To assess the health impacts of lower radiation doses, researchers rely on models of the process by which radiation causes cancer; several models that predict differing levels of risk have emerged.

Studies of occupational workers exposed to chronic low levels of radiation, above normal background, have provided mixed evidence regarding cancer and transgenerational effects. Cancer results, although uncertain, are consistent with estimates of risk based on atomic bomb survivors and suggest that these workers do face a small increase in the probability of developing leukemia and other cancers. One of the most recent and extensive studies of workers was published by Cardis et al. in 2005. There is evidence that low-level, brief radiation exposures are not harmful.

Modelling

Alternative assumptions for the extrapolation of the cancer risk vs. radiation dose to low-dose levels, given a known risk at a high dose: supra-linearity (A), linear (B), linear-quadratic (C) and hormesis (D).

The linear dose-response model suggests that any increase in dose, no matter how small, results in an incremental increase in risk. The linear no-threshold model (LNT) hypothesis is accepted by the International Commission on Radiological Protection (ICRP) and regulators around the world. According to this model, about 1% of the global population develops cancer as a result of natural background radiation at some point in their lifetime. For comparison, 13% of deaths in 2008 were attributed to cancer, so background radiation could plausibly be a small contributor.

Many parties have criticized the ICRP's adoption of the linear no-threshold model for exaggerating the effects of low radiation doses. The most frequently cited alternatives are the “linear quadratic” model and the “hormesis” model. The linear quadratic model is widely viewed in radiotherapy as the best model of cellular survival, and it is the best fit to leukemia data from the LSS cohort.

| Model | Risk function |
| --- | --- |
| Linear no-threshold | F(D) = α⋅D |
| Linear quadratic | F(D) = α⋅D + β⋅D² |
| Hormesis | F(D) = α⋅[D − β] |
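The three dose-response forms in the table can be compared numerically. The sketch below implements each formula exactly as written there; it is not a fitted model. The slope `ALPHA` reuses the 5.5% per sievert consensus figure quoted elsewhere in this article, while both `BETA_LQ` and `BETA_HORMESIS` are hypothetical values chosen only to make the shapes visible. In practice each model would have its own regressed parameters.

```python
def linear_no_threshold(dose_sv: float, alpha: float) -> float:
    """F(D) = alpha * D"""
    return alpha * dose_sv


def linear_quadratic(dose_sv: float, alpha: float, beta: float) -> float:
    """F(D) = alpha * D + beta * D**2"""
    return alpha * dose_sv + beta * dose_sv ** 2


def hormesis(dose_sv: float, alpha: float, beta: float) -> float:
    """F(D) = alpha * (D - beta); negative (net benefit) for doses below beta."""
    return alpha * (dose_sv - beta)


ALPHA = 0.055         # excess risk per Sv (consensus slope quoted in the article)
BETA_LQ = 0.01        # per Sv^2, hypothetical value for illustration only
BETA_HORMESIS = 0.05  # Sv, hypothetical dose below which risk is reduced

if __name__ == "__main__":
    print(f"{'dose (Sv)':>10} {'LNT':>10} {'lin-quad':>10} {'hormesis':>10}")
    for dose in (0.01, 0.1, 1.0):
        lnt = linear_no_threshold(dose, ALPHA)
        lq = linear_quadratic(dose, ALPHA, BETA_LQ)
        hz = hormesis(dose, ALPHA, BETA_HORMESIS)
        print(f"{dose:>10.2f} {lnt:>10.5f} {lq:>10.5f} {hz:>10.5f}")
```

With these illustrative parameters the three curves nearly coincide at 1 Sv but diverge at low doses, where the hormesis form turns negative below the dose β. That low-dose region is the one in which human data are too sparse to choose between the models.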

In all three cases, the values of alpha and beta must be determined by regression from human exposure data. Laboratory experiments on animals and tissue samples are of limited value. Most of the high-quality human data available is from high-dose individuals, above 0.1 Sv, so any use of the models at low doses is an extrapolation that might be under-conservative or over-conservative. There is not enough human data available to settle decisively which of these models might be most accurate at low doses. The consensus has been to assume linear no-threshold because it is the simplest and most conservative of the three.

Radiation hormesis is the conjecture that a low level of ionizing radiation (i.e., near the level of Earth's natural background radiation) helps "immunize" cells against DNA damage from other causes (such as free radicals or larger doses of ionizing radiation), and decreases the risk of cancer. The theory proposes that such low levels activate the body's DNA repair mechanisms, causing higher levels of cellular DNA-repair proteins to be present in the body, improving the body's ability to repair DNA damage. This assertion is very difficult to prove in humans (using, for example, statistical cancer studies) because the effects of very low ionizing radiation levels are too small to be statistically measured amid the "noise" of normal cancer rates.

The idea of radiation hormesis is considered unproven by regulatory bodies. If the hormesis model turns out to be accurate, it is conceivable that current regulations based on the LNT model will prevent or limit the hormetic effect, and thus have a negative impact on health.

Other non-linear effects have been observed, particularly for internal doses. For example, iodine-131 is notable in that high doses of the isotope are sometimes less dangerous than low doses, since they tend to kill thyroid tissues that would otherwise become cancerous as a result of the radiation. Most studies of very-high-dose I-131 for treatment of Graves' disease have failed to find any increase in thyroid cancer, even though there is a linear increase in thyroid cancer risk with I-131 absorption at moderate doses.

Public safety

Low-dose exposures, such as living near a nuclear power plant or a coal-fired power plant, which has higher emissions than nuclear plants, are generally believed to have no or very little effect on cancer development, barring accidents. Greater concerns include radon in buildings and overuse of medical imaging.

The International Commission on Radiological Protection (ICRP) recommends limiting artificial irradiation of the public to an average of 1 mSv (0.001 Sv) of effective dose per year, not including medical and occupational exposures.

For comparison, radiation levels inside the US Capitol building are 0.85 mSv/yr, close to the regulatory limit, because of the uranium content of the granite structure.

According to the ICRP model, someone who spent 20 years inside the Capitol building would have an extra one in a thousand chance of getting cancer, over and above any other existing risk (20 yr × 0.85 mSv/yr × 0.001 Sv/mSv × 5.5%/Sv ≈ 0.1%). That "existing risk" is much higher; an average American would have a one in ten chance of getting cancer during this same 20-year period, even without any exposure to artificial radiation.
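The Capitol building arithmetic above can be reproduced in a few lines. This is a minimal sketch of the linear model only, using the 5.5% per sievert slope and the 0.85 mSv/yr dose rate quoted in the text. The function name `excess_cancer_risk` is illustrative, and the calculation ignores age, sex, and dose-rate effects that the article notes are not quantitatively settled.

```python
RISK_PER_SIEVERT = 0.055  # 5.5% excess cancer risk per Sv (linear model, from the text)
MSV_PER_SV = 1000.0


def excess_cancer_risk(dose_rate_msv_per_year: float, years: float,
                       risk_per_sv: float = RISK_PER_SIEVERT) -> float:
    """Excess lifetime cancer risk (as a fraction) under the linear model."""
    if dose_rate_msv_per_year < 0 or years < 0:
        raise ValueError("dose rate and duration must be non-negative")
    cumulative_dose_sv = dose_rate_msv_per_year * years / MSV_PER_SV
    return cumulative_dose_sv * risk_per_sv


if __name__ == "__main__":
    risk = excess_cancer_risk(dose_rate_msv_per_year=0.85, years=20)
    print(f"Cumulative dose: {0.85 * 20:.1f} mSv")
    print(f"Excess risk: {risk:.2%} (about 1 in {round(1 / risk):,})")
```

The cumulative dose is 17 mSv, which gives an excess risk of roughly 0.09%, consistent with the "one in a thousand" figure in the text.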

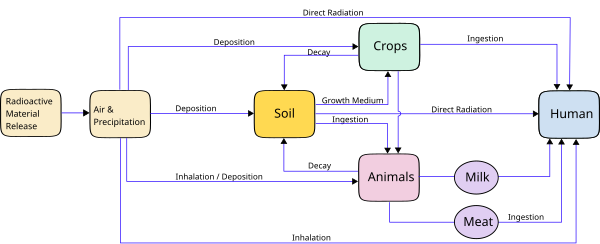

Internal contamination due to ingestion, inhalation, injection, or absorption is a particular concern because the radioactive material may stay in the body for an extended period of time, "committing" the subject to accumulating dose long after the initial exposure has ceased, albeit at low dose rates. Over a hundred people, including Eben Byers and the radium girls, received committed doses in excess of 10 Gy and went on to die of cancer or natural causes, whereas the same amount of acute external dose would invariably cause an earlier death by acute radiation syndrome.

Internal exposure of the public is controlled by regulatory limits on the radioactive content of food and water. These limits are typically expressed in becquerel/kilogram, with different limits set for each contaminant.

History

Although radiation was discovered in the late 19th century, the dangers of radioactivity and of radiation were not immediately recognized. Acute effects of radiation were first observed in the use of X-rays when Wilhelm Röntgen intentionally subjected his fingers to X-rays in 1895. He published his observations concerning the burns that developed, though he attributed them to ozone rather than to X-rays. His injuries later healed.

The genetic effects of radiation, including the effects on cancer risk, were recognized much later. In 1927 Hermann Joseph Muller published research showing genetic effects, and in 1946 was awarded the Nobel prize for his findings. Radiation was soon linked to bone cancer in the radium dial painters, but this was not confirmed until large-scale animal studies after World War II. The risk was then quantified through long-term studies of atomic bomb survivors.

Before the biological effects of radiation were known, many physicians and corporations had begun marketing radioactive substances as patent medicines, a practice now described as radioactive quackery. Examples were radium enema treatments and radium-containing waters to be drunk as tonics. Marie Curie spoke out against this sort of treatment, warning that the effects of radiation on the human body were not well understood. Curie later died of aplastic anemia, not cancer. Eben Byers, a famous American socialite, died of multiple cancers in 1932 after consuming large quantities of radium over several years; his death drew public attention to the dangers of radiation. By the 1930s, after a number of cases of bone necrosis and death in enthusiasts, radium-containing medical products had nearly vanished from the market.

In the United States, the experience of the so-called Radium Girls, in which thousands of radium-dial painters contracted oral cancers, popularized warnings about the occupational health hazards of radiation. Robley D. Evans, at MIT, developed the first standard for permissible body burden of radium, a key step in the establishment of nuclear medicine as a field of study. With the development of nuclear reactors and nuclear weapons in the 1940s, heightened scientific attention was given to the study of all manner of radiation effects.