From Wikipedia, the free encyclopedia

A theory of everything (TOE or ToE), final theory, ultimate theory, or master theory is a hypothetical single, all-encompassing, coherent theoretical framework of physics that fully explains and links together all physical aspects of the universe. Finding a TOE is one of the major unsolved problems in physics. String theory and M-theory have been proposed as theories of everything. Over the past few centuries, two theoretical frameworks have been developed that, together, most closely resemble a TOE. These two theories, on which all modern physics rests, are general relativity and quantum mechanics. General relativity focuses only on gravity, describing the universe in regions of both large scale and high mass: stars, galaxies, clusters of galaxies, etc. Quantum mechanics, on the other hand, focuses only on the three non-gravitational forces, describing the universe in regions of both small scale and low mass: sub-atomic particles, atoms, molecules, etc. Quantum mechanics underlies the Standard Model, which describes the three non-gravitational forces – strong nuclear, weak nuclear, and electromagnetic – as well as all observed elementary particles.

General relativity and quantum mechanics have been thoroughly confirmed in their separate fields of relevance. Since the usual domains of applicability of general relativity and quantum mechanics are so different, most situations require that only one of the two theories be used. However, the two theories are considered incompatible in regions of extremely small scale – the Planck scale – such as those that exist within a black hole or during the beginning stages of the universe (i.e., the moment immediately following the Big Bang). To resolve the incompatibility, a theoretical framework revealing a deeper underlying reality, unifying gravity with the other three interactions, must be discovered to integrate the realms of general relativity and quantum mechanics into a seamless whole: the TOE is a single theory that, in principle, is capable of describing all phenomena in the universe.

In pursuit of this goal, quantum gravity has become one area of active research. One example is string theory, which evolved into a candidate for the TOE, but not without drawbacks (most notably, its lack of currently testable predictions) and controversy. String theory posits that at the beginning of the universe (up to 10⁻⁴³ seconds after the Big Bang), the four fundamental forces were a single fundamental force. According to string theory, every particle in the universe, at its most microscopic level (the Planck length), consists of varying combinations of vibrating strings (or strands) with preferred patterns of vibration. String theory further claims that it is through these specific oscillatory patterns of strings that a particle of unique mass and force charge is created (that is to say, the electron is a type of string that vibrates one way, while the up quark is a type of string vibrating another way, and so forth).
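
The Planck scale mentioned above can be made concrete with a short calculation. The following is a minimal sketch, assuming the standard textbook definitions of the Planck length, time, and energy in terms of ħ, G, and c, with CODATA 2018 values; the function name `planck_units` and the dictionary keys are illustrative choices, not part of any library.

```python
import math

# CODATA 2018 values in SI units (assumed inputs for this sketch)
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
G = 6.67430e-11                   # gravitational constant, m^3 kg^-1 s^-2
C = 299792458.0                   # speed of light, m/s
JOULES_PER_GEV = 1.602176634e-10  # 1 GeV expressed in joules


def planck_units():
    """Return the Planck length (m), time (s), and energy (GeV)."""
    length = math.sqrt(HBAR * G / C**3)
    time = math.sqrt(HBAR * G / C**5)
    energy_joules = math.sqrt(HBAR * C**5 / G)
    return {
        "length_m": length,
        "time_s": time,
        "energy_GeV": energy_joules / JOULES_PER_GEV,
    }


if __name__ == "__main__":
    for name, value in planck_units().items():
        print(f"{name}: {value:.3e}")
```

The resulting time is a few times 10⁻⁴⁴ s and the energy is of order 10¹⁹ GeV, consistent with the orders of magnitude usually quoted for the Planck scale.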

Name

Initially, the term theory of everything was used with an ironic reference to various overgeneralized theories. For example, a grandfather of Ijon Tichy – a character from a cycle of Stanisław Lem's science fiction stories of the 1960s – was known to work on the "General Theory of Everything". Physicist Harald Fritzsch used the term in his 1977 lectures in Varenna. Physicist John Ellis claims to have introduced the term into the technical literature in an article in Nature in 1986. Over time, the term stuck in popularizations of theoretical physics research.

Historical antecedents

Antiquity to 19th century

Ancient Babylonian astronomers studied the pattern of the Seven Classical Planets against the background of stars. Their interest was to relate celestial movement to human events (astrology), and their goal was to predict events by recording them against a time measure and then looking for recurrent patterns. The debate over whether the universe had a beginning or undergoes eternal cycles can be traced back to ancient Babylonia.

The natural philosophy of atomism appeared in several ancient traditions. In ancient Greek philosophy, the pre-Socratic philosophers speculated that the apparent diversity of observed phenomena was due to a single type of interaction, namely the motions and collisions of atoms. The concept of 'atom' proposed by Democritus was an early philosophical attempt to unify phenomena observed in nature. The concept of 'atom' also appeared in the Nyaya-Vaisheshika school of ancient Indian philosophy.

Archimedes was possibly the first philosopher to have described nature with axioms (or principles) and then deduced new results from them. Any "theory of everything" is similarly expected to be based on axioms and to deduce all observable phenomena from them.

Following earlier atomistic thought, the mechanical philosophy of the 17th century posited that all forces could be ultimately reduced to contact forces between the atoms, then imagined as tiny solid particles.

In the late 17th century, Isaac Newton's description of the long-distance force of gravity implied that not all forces in nature result from things coming into contact. Newton's work in his Mathematical Principles of Natural Philosophy dealt with this in a further example of unification, in this case unifying Galileo's work on terrestrial gravity, Kepler's laws of planetary motion and the phenomenon of tides by explaining these apparent actions at a distance under one single law: the law of universal gravitation.

In 1814, building on these results, Laplace famously suggested that a sufficiently powerful intellect could, if it knew the position and velocity of every particle at a given time, along with the laws of nature, calculate the position of any particle at any other time:

An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.

— Essai philosophique sur les probabilités, Introduction. 1814

Laplace thus envisaged a combination of gravitation and mechanics as a theory of everything. Modern quantum mechanics implies that uncertainty is inescapable, and thus that Laplace's vision has to be amended: a theory of everything must include gravitation and quantum mechanics. Even ignoring quantum mechanics, chaos theory is sufficient to guarantee that the future of any sufficiently complex mechanical or astronomical system is unpredictable.
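
The sensitivity to initial conditions behind this unpredictability can be illustrated numerically. The sketch below uses the logistic map with parameter r = 4 as a stand-in for a chaotic system; this is an assumption made for brevity, not a model of any mechanical or astronomical system, and the function names are hypothetical.

```python
def logistic_orbit(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x) starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def separation(x0=0.2, delta=1e-10, steps=50):
    """Distance at each step between two orbits that start delta apart."""
    a = logistic_orbit(x0, steps=steps)
    b = logistic_orbit(x0 + delta, steps=steps)
    return [abs(p - q) for p, q in zip(a, b)]


if __name__ == "__main__":
    seps = separation()
    for n in (0, 10, 20, 30, 40):
        print(n, seps[n])
```

Two starting values that agree to about ten decimal places end up on unrelated trajectories within a few dozen iterations, even though the rule itself is fully deterministic.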

In 1820, Hans Christian Ørsted discovered a connection between electricity and magnetism, triggering decades of work that culminated in 1865, in James Clerk Maxwell's theory of electromagnetism. During the 19th and early 20th centuries, it gradually became apparent that many common examples of forces – contact forces, elasticity, viscosity, friction, and pressure – result from electrical interactions between the smallest particles of matter.

In his experiments of 1849–50, Michael Faraday was the first to search for a unification of gravity with electricity and magnetism. However, he found no connection.

In 1900, David Hilbert published a famous list of mathematical problems. In Hilbert's sixth problem, he challenged researchers to find an axiomatic basis to all of physics. In this problem he thus asked for what today would be called a theory of everything.

Early 20th century

In the late 1920s, the new quantum mechanics showed that the chemical bonds between atoms were examples of (quantum) electrical forces, justifying Dirac's boast that "the underlying physical laws necessary for the mathematical theory of a large part of physics and the whole of chemistry are thus completely known".

After 1915, when Albert Einstein published his theory of gravity (general relativity), the search for a unified field theory combining gravity with electromagnetism began with renewed interest. In Einstein's day, the strong and the weak forces had not yet been discovered, yet he found the potential existence of two other distinct forces – gravity and electromagnetism – far more alluring. This launched his thirty-year voyage in search of the so-called "unified field theory" that he hoped would show that these two forces are really manifestations of one grand underlying principle. During these last few decades of his life, this quixotic quest isolated Einstein from the mainstream of physics.

Understandably, the mainstream was instead far more excited about the newly emerging framework of quantum mechanics. Einstein wrote to a friend in the early 1940s, "I have become a lonely old chap who is mainly known because he doesn't wear socks and who is exhibited as a curiosity on special occasions." Prominent contributors to the search for a unified field theory were Gunnar Nordström, Hermann Weyl, Arthur Eddington, David Hilbert, Theodor Kaluza, Oskar Klein (see Kaluza–Klein theory), and most notably, Albert Einstein and his collaborators. Einstein intensely searched for, but ultimately failed to find, a unifying theory.

More than half a century later, Einstein's dream of discovering a unified theory has become the Holy Grail of modern physics.

Late 20th century and the nuclear interactions

In the twentieth century, the search for a unifying theory was interrupted by the discovery of the strong and weak nuclear forces (or interactions), which differ both from gravity and from electromagnetism. A further hurdle was the acceptance that in a TOE, quantum mechanics had to be incorporated from the start, rather than emerging as a consequence of a deterministic unified theory, as Einstein had hoped.

Gravity and electromagnetism could always peacefully coexist as entries in a list of classical forces, but for many years it seemed that gravity could not even be incorporated into the quantum framework, let alone unified with the other fundamental forces. For this reason, work on unification, for much of the twentieth century, focused on understanding the three "quantum" forces: electromagnetism and the weak and strong forces. The first two were combined in 1967–68 by Sheldon Glashow, Steven Weinberg, and Abdus Salam into the "electroweak" force.

Electroweak unification is a broken symmetry: the electromagnetic and weak forces appear distinct at low energies because the particles carrying the weak force, the W and Z bosons, have non-zero masses of 80.4 GeV/c² and 91.2 GeV/c², whereas the photon, which carries the electromagnetic force, is massless. At higher energies W and Z bosons can be created easily and the unified nature of the force becomes apparent.

While the strong and electroweak forces peacefully coexist in the Standard Model of particle physics, they remain distinct. So far, the quest for a theory of everything is thus unsuccessful on two points: neither a unification of the strong and electroweak forces – which Laplace would have called 'contact forces' – nor a unification of these forces with gravitation has been achieved.

Modern physics

Conventional sequence of theories

A theory of everything would unify all the fundamental interactions of nature: gravitation, strong interaction, weak interaction, and electromagnetism. Because the weak interaction can transform elementary particles from one kind into another, the TOE should also yield a deep understanding of the various different kinds of possible particles. The usual assumed path of theories is given in the following graph, where each unification step leads one level up:

In this graph, electroweak unification occurs at around 100 GeV, grand unification is predicted to occur at 10¹⁶ GeV, and unification of the GUT force with gravity is expected at the Planck energy, roughly 10¹⁹ GeV.

Several Grand Unified Theories (GUTs) have been proposed to unify electromagnetism and the weak and strong forces. Grand unification would imply the existence of an electronuclear force; it is expected to set in at energies of the order of 10¹⁶ GeV, far greater than could be reached by any possible Earth-based particle accelerator. Although the simplest GUTs have been experimentally ruled out, the general idea, especially when linked with supersymmetry, remains a favorite candidate in the theoretical physics community. Supersymmetric GUTs seem plausible not only for their theoretical "beauty", but because they naturally produce large quantities of dark matter, and because the inflationary force may be related to GUT physics (although it does not seem to form an inevitable part of the theory). Yet GUTs are clearly not the final answer; both the current standard model and all proposed GUTs are quantum field theories which require the problematic technique of renormalization to yield sensible answers. This is usually regarded as a sign that these are only effective field theories, omitting crucial phenomena relevant only at very high energies.

The final step in the graph requires resolving the separation between quantum mechanics and gravitation, often equated with general relativity. Numerous researchers concentrate their efforts on this specific step; nevertheless, no accepted theory of quantum gravity – and thus no accepted theory of everything – has emerged yet. It is usually assumed that the TOE will also solve the remaining problems of GUTs.

In addition to explaining the forces listed in the graph, a TOE may also explain the status of at least two candidate forces suggested by modern cosmology: an inflationary force and dark energy. Furthermore, cosmological experiments also suggest the existence of dark matter, supposedly composed of fundamental particles outside the scheme of the standard model. However, the existence of these forces and particles has not been proven.

String theory and M-theory

Since the 1990s, some physicists, such as Edward Witten, have believed that 11-dimensional M-theory, which is described in some limits by one of the five perturbative superstring theories and in another by the maximally supersymmetric 11-dimensional supergravity, is the theory of everything. However, there is no widespread consensus on this issue.

A surprising property of string/M-theory is that extra dimensions are required for the theory's consistency. In this regard, string theory can be seen as building on the insights of the Kaluza–Klein theory, in which it was realized that applying general relativity to a five-dimensional universe (with one of the dimensions small and curled up) looks from the four-dimensional perspective like the usual general relativity together with Maxwell's electrodynamics. This lent credence to the idea of unifying gauge and gravity interactions, and to extra dimensions, but did not address the detailed experimental requirements. Another important property of string theory is its supersymmetry; together with extra dimensions, it is one of the two main proposals for resolving the hierarchy problem of the standard model, which is (roughly) the question of why gravity is so much weaker than any other force. The extra-dimensional solution involves allowing gravity to propagate into the other dimensions while keeping other forces confined to a four-dimensional spacetime, an idea that has been realized with explicit stringy mechanisms.

Research into string theory has been encouraged by a variety of theoretical and experimental factors. On the experimental side, the particle content of the standard model supplemented with neutrino masses fits into a spinor representation of SO(10), a subgroup of E8 that routinely emerges in string theory, such as in heterotic string theory or (sometimes equivalently) in F-theory. String theory has mechanisms that may explain why fermions come in three hierarchical generations, and explain the mixing rates between quark generations. On the theoretical side, it has begun to address some of the key questions in quantum gravity, such as resolving the black hole information paradox, counting the correct entropy of black holes and allowing for topology-changing processes. It has also led to many insights in pure mathematics and in ordinary, strongly-coupled gauge theory due to the Gauge/String duality.

In the late 1990s, it was noted that one major hurdle in this endeavor is that the number of possible four-dimensional universes is incredibly large. The small, "curled up" extra dimensions can be compactified in an enormous number of different ways (one estimate is 10⁵⁰⁰), each of which leads to different properties for the low-energy particles and forces. This array of models is known as the string theory landscape.

One proposed solution is that many or all of these possibilities are realised in one or another of a huge number of universes, but that only a small number of them are habitable. Hence what we normally conceive as the fundamental constants of the universe are ultimately the result of the anthropic principle rather than dictated by theory. This has led critics of string theory to argue that it cannot make useful (i.e., original, falsifiable, and verifiable) predictions and to regard it as a pseudoscience. Others disagree, and string theory remains an active topic of investigation in theoretical physics.

Loop quantum gravity

Current research on loop quantum gravity may eventually play a fundamental role in a TOE, but that is not its primary aim. Loop quantum gravity also introduces a lower bound on the possible length scales.

There have been recent claims that loop quantum gravity may be able to reproduce features resembling the Standard Model. So far only the first generation of fermions (leptons and quarks) with correct parity properties has been modelled by Sundance Bilson-Thompson using preons constituted of braids of spacetime as the building blocks. However, there is no derivation of the Lagrangian that would describe the interactions of such particles, nor is it possible to show that such particles are fermions, nor that the gauge groups or interactions of the Standard Model are realised. Utilization of quantum computing concepts made it possible to demonstrate that the particles are able to survive quantum fluctuations.

This model leads to an interpretation of electric and colour charge as topological quantities (electric as number and chirality of twists carried on the individual ribbons and colour as variants of such twisting for fixed electric charge).

Bilson-Thompson's original paper suggested that the higher-generation fermions could be represented by more complicated braidings, although explicit constructions of these structures were not given. The electric charge, colour, and parity properties of such fermions would arise in the same way as for the first generation. The model was expressly generalized for an infinite number of generations and for the weak force bosons (but not for photons or gluons) in a 2008 paper by Bilson-Thompson, Hackett, Kauffman and Smolin.

Other attempts

Among other attempts to develop a theory of everything is the theory of causal fermion systems, giving the two current physical theories (general relativity and quantum field theory) as limiting cases.

Another theory is called Causal Sets. As with some of the approaches mentioned above, its direct goal is not necessarily to achieve a TOE but primarily a working theory of quantum gravity, which might eventually include the standard model and become a candidate for a TOE. Its founding principle is that spacetime is fundamentally discrete and that the spacetime events are related by a partial order. This partial order has the physical meaning of the causality relations between relative past and future distinguishing spacetime events.
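
To illustrate what "events related by a partial order" means, the sketch below scatters random events in a flat 1+1-dimensional spacetime and checks that the "lies in the causal future of" relation is a strict partial order. This is only a toy under stated assumptions (flat spacetime, units with c = 1, uniform random points, a strict rather than reflexive order); the names `precedes`, `scatter_events`, and `is_strict_partial_order` are hypothetical and do not come from the causal set literature.

```python
import itertools
import random


def precedes(a, b):
    """True if event b = (t, x) lies in the causal future of event a (c = 1)."""
    dt = b[0] - a[0]
    dx = b[1] - a[1]
    return dt > 0 and dt >= abs(dx)


def scatter_events(n, seed=0):
    """Return n random (t, x) events in the unit square."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(n)]


def is_strict_partial_order(events):
    """Check irreflexivity, antisymmetry, and transitivity of `precedes`."""
    if any(precedes(a, a) for a in events):
        return False
    for a, b in itertools.permutations(events, 2):
        if precedes(a, b) and precedes(b, a):
            return False
    for a, b, c in itertools.permutations(events, 3):
        if precedes(a, b) and precedes(b, c) and not precedes(a, c):
            return False
    return True


if __name__ == "__main__":
    events = scatter_events(30)
    print(is_strict_partial_order(events))
```

Pairs of events for which neither precedes the other are spacelike separated, which is why the order is only partial rather than total.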

Outside the previously mentioned attempts there is Garrett Lisi's E8 proposal. This theory attempts to construct general relativity and the standard model within the Lie group E8. The theory does not provide a novel quantization procedure, and the author suggests its quantization might follow the loop quantum gravity approach mentioned above.

Causal dynamical triangulation does not assume any pre-existing arena (dimensional space), but rather attempts to show how the spacetime fabric itself evolves.

Christoph Schiller's Strand Model attempts to account for the gauge symmetry of the Standard Model of particle physics, U(1)×SU(2)×SU(3), with the three Reidemeister moves of knot theory by equating each elementary particle to a different tangle of one, two, or three strands (selectively a long prime knot or unknotted curve, a rational tangle, or a braided tangle respectively).

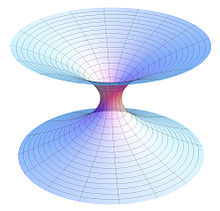

Another attempt may be related to ER=EPR, a conjecture in physics stating that entangled particles are connected by a wormhole (or Einstein–Rosen bridge).

Present status

At present, there is no candidate theory of everything that includes the standard model of particle physics and general relativity and that, at the same time, is able to calculate the fine structure constant or the mass of the electron. Most particle physicists expect that the outcome of the ongoing experiments – the search for new particles at the large particle accelerators and for dark matter – is needed in order to provide further input for a TOE.

Arguments against

In parallel to the intense search for a TOE, various scholars have seriously debated the possibility of its discovery.

Gödel's incompleteness theorem

A number of scholars claim that Gödel's incompleteness theorem suggests that any attempt to construct a TOE is bound to fail. Gödel's theorem, informally stated, asserts that any formal theory sufficient to express elementary arithmetical facts and strong enough for them to be proved is either inconsistent (both a statement and its denial can be derived from its axioms) or incomplete, in the sense that there is a true statement that can't be derived in the formal theory.

Stanley Jaki, in his 1966 book The Relevance of Physics, pointed out that, because any "theory of everything" will certainly be a consistent non-trivial mathematical theory, it must be incomplete. He claims that this dooms searches for a deterministic theory of everything.

Freeman Dyson has stated that "Gödel's theorem implies that pure mathematics is inexhaustible. No matter how many problems we solve, there will always be other problems that cannot be solved within the existing rules. […] Because of Gödel's theorem, physics is inexhaustible too. The laws of physics are a finite set of rules, and include the rules for doing mathematics, so that Gödel's theorem applies to them."

Stephen Hawking was originally a believer in the Theory of Everything, but after considering Gödel's Theorem, he concluded that one was not obtainable. "Some people will be very disappointed if there is not an ultimate theory that can be formulated as a finite number of principles. I used to belong to that camp, but I have changed my mind."

Jürgen Schmidhuber (1997) has argued against this view; he points out that Gödel's theorems are irrelevant for computable physics. In 2000, Schmidhuber explicitly constructed limit-computable, deterministic universes whose pseudo-randomness based on undecidable, Gödel-like halting problems is extremely hard to detect but does not at all prevent formal TOEs describable by very few bits of information.

Related critique was offered by Solomon Feferman, among others. Douglas S. Robertson offers Conway's game of life as an example:

The underlying rules are simple and complete, but there are formally undecidable questions about the game's behaviors. Analogously, it may (or may not) be possible to completely state the underlying rules of physics with a finite number of well-defined laws, but there is little doubt that there are questions about the behavior of physical systems which are formally undecidable on the basis of those underlying laws.
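
How simple and complete the rules of Conway's Game of Life are can be seen from how little code is needed to state them. The sketch below is one common way to write a single generation on an unbounded grid; representing the board as a set of live (x, y) cells and the function name `step` are illustrative choices.

```python
from collections import Counter


def step(live):
    """Advance a set of live (x, y) cells by one generation."""
    # Count, for every cell, how many of its eight neighbours are alive.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next if it has exactly 3 live neighbours,
    # or if it has exactly 2 and is already alive.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


if __name__ == "__main__":
    blinker = {(0, 1), (1, 1), (2, 1)}
    print(sorted(step(blinker)))
```

The whole rule set fits in one function, yet general questions about long-run behavior, such as whether a given pattern ever dies out, are the kind of question the passage above describes as formally undecidable.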

Since most physicists would consider the statement of the underlying rules to suffice as the definition of a "theory of everything", most physicists argue that Gödel's Theorem does not mean that a TOE cannot exist. On the other hand, the scholars invoking Gödel's Theorem appear, at least in some cases, to be referring not to the underlying rules, but to the understandability of the behavior of all physical systems, as when Hawking mentions arranging blocks into rectangles, turning the computation of prime numbers into a physical question. This definitional discrepancy may explain some of the disagreement among researchers.

Fundamental limits in accuracy

No physical theory to date is believed to be precisely accurate. Instead, physics has proceeded by a series of "successive approximations" allowing more and more accurate predictions over a wider and wider range of phenomena. Some physicists believe that it is therefore a mistake to confuse theoretical models with the true nature of reality, and hold that the series of approximations will never terminate in the "truth". Einstein himself expressed this view on occasion. Following this view, we may reasonably hope for a theory of everything which self-consistently incorporates all currently known forces, but we should not expect it to be the final answer.

On the other hand, it is often claimed that, despite the apparently ever-increasing complexity of the mathematics of each new theory, in a deep sense associated with their underlying gauge symmetry and the number of dimensionless physical constants, the theories are becoming simpler. If this is the case, the process of simplification cannot continue indefinitely.

Lack of fundamental laws

There is a philosophical debate within the physics community as to whether a theory of everything deserves to be called the fundamental law of the universe. One view is the hard reductionist position that the TOE is the fundamental law and that all other theories that apply within the universe are a consequence of the TOE.

Another view is that emergent laws, which govern the behavior of complex systems, should be seen as equally fundamental. Examples of emergent laws are the second law of thermodynamics and the theory of natural selection.

The advocates of emergence argue that emergent laws, especially those describing complex or living systems, are independent of the low-level, microscopic laws. In this view, emergent laws are as fundamental as a TOE.

The debates do not make the point at issue clear. Possibly the only issue at stake is the right to apply the high-status term "fundamental" to the respective subjects of research. A well-known debate over this took place between Steven Weinberg and Philip Anderson.

Impossibility of being "of everything"

Although the name "theory of everything" suggests the determinism of Laplace's quotation, this gives a very misleading impression. Determinism is frustrated by the probabilistic nature of quantum mechanical predictions, by the extreme sensitivity to initial conditions that leads to mathematical chaos, by the limitations due to event horizons, and by the extreme mathematical difficulty of applying the theory. Thus, although the current standard model of particle physics "in principle" predicts almost all known non-gravitational phenomena, in practice only a few quantitative results have been derived from the full theory (e.g., the masses of some of the simplest hadrons), and these results (especially the particle masses which are most relevant for low-energy physics) are less accurate than existing experimental measurements. The TOE would almost certainly be even harder to apply for the prediction of experimental results, and thus might be of limited use.

A motive for seeking a TOE, apart from the pure intellectual satisfaction of completing a centuries-long quest, is that prior examples of unification have predicted new phenomena, some of which (e.g., electrical generators) have proved of great practical importance. As in these prior examples of unification, the TOE would probably allow us to confidently define the domain of validity and residual error of low-energy approximations to the full theory.

The theories generally do not account for the apparent phenomena of consciousness or free will, which are instead often the subject of philosophy and religion.

Infinite number of onion layers

Frank Close regularly argues that the layers of nature may be like the layers of an onion, and that the number of layers might be infinite. This would imply an infinite sequence of physical theories.

Impossibility of calculation

Weinberg points out that calculating the precise motion of an actual projectile in the Earth's atmosphere is impossible. So how can we know we have an adequate theory for describing the motion of projectiles? Weinberg suggests that we know principles (Newton's laws of motion and gravitation) that work "well enough" for simple examples, like the motion of planets in empty space. These principles have worked so well on simple examples that we can be reasonably confident they will work for more complex examples. For example, although general relativity includes equations that do not have exact solutions, it is widely accepted as a valid theory because all of its equations with exact solutions have been experimentally verified. Likewise, a TOE must work for a wide range of simple examples in such a way that we can be reasonably confident it will work for every situation in physics.