In calculus, the differential represents the principal part of the change in a function $y = f(x)$ with respect to changes in the independent variable. The differential $dy$ is defined by

$$dy = f'(x)\,dx,$$

where $f'(x)$ is the derivative of $f$ with respect to $x$, and $dx$ is an additional real variable (so that $dy$ is a function of $x$ and $dx$). The notation is such that the equation

$$dy = \frac{dy}{dx}\,dx$$

holds, where the derivative is represented in the Leibniz notation $dy/dx$, and this is consistent with regarding the derivative as the quotient of the differentials. One also writes

$$df(x) = f'(x)\,dx.$$

The precise meaning of the variables $dy$ and $dx$ depends on the context of the application and the required level of mathematical rigor. The domain of these variables may take on a particular geometrical significance if the differential is regarded as a particular differential form, or analytical significance if the differential is regarded as a linear approximation to the increment of a function. Traditionally, the variables $dx$ and $dy$ are considered to be very small (infinitesimal), and this interpretation is made rigorous in non-standard analysis.

History and usage

The differential was first introduced via an intuitive or heuristic definition by Isaac Newton and furthered by Gottfried Leibniz, who thought of the differential dy as an infinitely small (or infinitesimal) change in the value y of the function, corresponding to an infinitely small change dx in the function's argument x. For that reason, the instantaneous rate of change of y with respect to x, which is the value of the derivative of the function, is denoted by the fraction $\frac{dy}{dx}$, in what is called the Leibniz notation for derivatives.

The use of infinitesimals in this form was widely criticized, for instance in the famous pamphlet The Analyst by Bishop Berkeley. Augustin-Louis Cauchy (1823) defined the differential without appeal to the atomism of Leibniz's infinitesimals. Instead, Cauchy, following d'Alembert, inverted the logical order of Leibniz and his successors: the derivative itself became the fundamental object, defined as a limit of difference quotients, and the differentials were then defined in terms of it. That is, one was free to define the differential $dy$ by an expression

$$dy = f'(x)\,dx$$

in which $dy$ and $dx$ are simply new variables taking finite real values, not fixed infinitesimals as they had been for Leibniz.

According to Boyer (1959, p. 12), Cauchy's approach was a significant logical improvement over the infinitesimal approach of Leibniz because, instead of invoking the metaphysical notion of infinitesimals, the quantities dy and dx could now be manipulated in a meaningful way, exactly like any other real quantities. Cauchy's overall conceptual approach to differentials remains the standard one in modern analytical treatments, although the final word on rigor, a fully modern notion of the limit, was ultimately due to Karl Weierstrass.

In physical treatments, such as those applied to the theory of thermodynamics, the infinitesimal view still prevails. Courant & John (1999, p. 184) reconcile the physical use of infinitesimal differentials with their mathematical impossibility as follows: the differentials represent finite non-zero values that are smaller than the degree of accuracy required for the particular purpose for which they are intended. Thus "physical infinitesimals" need not appeal to a corresponding mathematical infinitesimal in order to have a precise sense.

Following twentieth-century developments in mathematical analysis and differential geometry, it became clear that the notion of the differential of a function could be extended in a variety of ways. In real analysis, it is more desirable to deal directly with the differential as the principal part of the increment of a function. This leads directly to the notion that the differential of a function at a point is a linear functional of an increment $\Delta x$. This approach allows the differential (as a linear map) to be developed for a variety of more sophisticated spaces, ultimately giving rise to such notions as the Fréchet or Gateaux derivative. Likewise, in differential geometry, the differential of a function at a point is a linear function of a tangent vector (an "infinitely small displacement"), which exhibits it as a kind of one-form: the exterior derivative of the function. In non-standard calculus, differentials are regarded as infinitesimals, which can themselves be put on a rigorous footing (see differential (infinitesimal)).

Definition

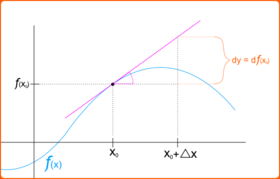

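The differential is defined in modern treatments of differential calculus as follows. The differential of a function $f(x)$ of a single real variable $x$ is the function $df$ of two independent real variables $x$ and $\Delta x$ given by

$$df(x, \Delta x) \overset{\text{def}}{=} f'(x)\,\Delta x.$$

One or both of the arguments may be suppressed, i.e., one may see $df(x)$ or simply $df$. If $y = f(x)$, the differential may also be written as $dy$. Since $dx(x, \Delta x) = \Delta x$, it is conventional to write $dx = \Delta x$ so that the following equality holds:

$$df(x) = f'(x)\,dx.$$

This notion of differential is broadly applicable when a linear approximation to a function is sought, in which the value of the increment $\Delta x$ is small enough. More precisely, if $f$ is a differentiable function at $x$, then the difference in $y$-values

$$\Delta y \overset{\text{def}}{=} f(x + \Delta x) - f(x)$$

satisfies

$$\Delta y = f'(x)\,\Delta x + \varepsilon = df(x) + \varepsilon,$$

where the error $\varepsilon$ in the approximation satisfies $\varepsilon / \Delta x \to 0$ as $\Delta x \to 0$. In other words, one has the approximate identity

$$\Delta y \approx dy,$$

in which the error can be made as small as desired relative to $\Delta x$ by constraining $\Delta x$ to be sufficiently small; that is to say,

$$\frac{\Delta y - dy}{\Delta x} \to 0 \quad \text{as } \Delta x \to 0.$$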
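The limit statement above can be checked numerically. The following is a minimal sketch, assuming the example function f(x) = sin x (not taken from the text) and hypothetical helper names `differential` and `increment`; it compares dy = f'(x)Δx with the true increment Δy and prints the ratio (Δy − dy)/Δx, which should shrink roughly in proportion to Δx.

```python
import math

def differential(fprime, x, dx):
    """df(x, dx) = f'(x) * dx: the linear (principal) part of the change in f."""
    return fprime(x) * dx

def increment(f, x, dx):
    """Delta y = f(x + dx) - f(x): the actual change in f."""
    return f(x + dx) - f(x)

# Illustrative choice: f(x) = sin(x), so f'(x) = cos(x)
f, fprime = math.sin, math.cos
x = 1.0

for dx in (1e-1, 1e-2, 1e-3, 1e-4):
    dy = differential(fprime, x, dx)
    delta_y = increment(f, x, dx)
    # (Delta y - dy) / dx should shrink roughly in proportion to dx
    print(f"dx={dx:.0e}  dy={dy:.10f}  Delta_y={delta_y:.10f}  "
          f"(Delta_y - dy)/dx={(delta_y - dy) / dx:.3e}")
```

For this f the ratio behaves like −(sin x / 2)Δx, which is the second-order term that the differential deliberately discards.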

Differentials in several variables

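| Operator / Function | $f(x)$ | $f(x, y, u(x, y), v(x, y))$ |
|---|---|---|
| Differential | 1: $df \equiv f'_x\,dx$ | 2: $d_x f \equiv f'_x\,dx$; 3: $df \equiv f'_x\,dx + f'_y\,dy + f'_u\,du + f'_v\,dv$ |
| Partial derivative | $f'_x \equiv \frac{df}{dx}$ (from 1) | $f'_x \equiv \frac{d_x f}{dx} = \frac{\partial f}{\partial x}$ (from 2) |
| Total derivative | $\frac{df}{dx} = f'_x$ (from 1) | $\frac{df}{dx} = f'_x + f'_u\frac{du}{dx} + f'_v\frac{dv}{dx}$ (from 3, taking $\frac{dy}{dx} = 0$) |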

Following Goursat (1904, I, §15), for functions of more than one independent variable,

$$y = f(x_1, \dots, x_n),$$

the partial differential of $y$ with respect to any one of the variables $x_1$ is the principal part of the change in $y$ resulting from a change $dx_1$ in that one variable. The partial differential is therefore

$$\frac{\partial y}{\partial x_1}\,dx_1,$$

involving the partial derivative of $y$ with respect to $x_1$. The sum of the partial differentials with respect to all of the independent variables is the total differential

$$dy = \frac{\partial y}{\partial x_1}\,dx_1 + \cdots + \frac{\partial y}{\partial x_n}\,dx_n,$$

which is the principal part of the change in $y$ resulting from changes in the independent variables $x_i$.

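More precisely, in the context of multivariable calculus, following Courant (1937b), if $f$ is a differentiable function, then by the definition of differentiability, the increment

$$\begin{aligned} \Delta y &\overset{\text{def}}{=} f(x_1 + \Delta x_1, \dots, x_n + \Delta x_n) - f(x_1, \dots, x_n)\\ &= \frac{\partial y}{\partial x_1}\Delta x_1 + \cdots + \frac{\partial y}{\partial x_n}\Delta x_n + \varepsilon_1\Delta x_1 + \cdots + \varepsilon_n\Delta x_n, \end{aligned}$$

where the error terms $\varepsilon_i$ tend to zero as the increments $\Delta x_i$ jointly tend to zero. The total differential is then rigorously defined as

$$dy = \frac{\partial y}{\partial x_1}\Delta x_1 + \cdots + \frac{\partial y}{\partial x_n}\Delta x_n.$$

Since, with this definition, $dx_i(\Delta x_1, \dots, \Delta x_n) = \Delta x_i$, one has

$$dy = \frac{\partial y}{\partial x_1}\,dx_1 + \cdots + \frac{\partial y}{\partial x_n}\,dx_n.$$

As in the case of one variable, the approximate identity

$$dy \approx \Delta y$$

holds, in which the total error can be made as small as desired relative to $\sqrt{\Delta x_1^2 + \cdots + \Delta x_n^2}$ by confining attention to sufficiently small increments.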
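A numerical check of the several-variable statement is sketched below. The function y = x1² x2 + sin x2, its hand-computed partial derivatives, the base point (1, 2), and the helper names are all illustrative assumptions; the script shrinks both increments together and prints |Δy − dy| divided by the length of the increment vector, which should tend to zero.

```python
import math

# Illustrative choice (not from the text): y = f(x1, x2) = x1**2 * x2 + sin(x2)
def f(x1, x2):
    return x1**2 * x2 + math.sin(x2)

def partials(x1, x2):
    # Hand-computed partial derivatives (dy/dx1, dy/dx2)
    return 2 * x1 * x2, x1**2 + math.cos(x2)

def total_differential(x1, x2, dx1, dx2):
    """dy = (dy/dx1) dx1 + (dy/dx2) dx2."""
    p1, p2 = partials(x1, x2)
    return p1 * dx1 + p2 * dx2

def scaled_error(x1, x2, dx1, dx2):
    """|Delta y - dy| / sqrt(dx1**2 + dx2**2), which should tend to 0."""
    delta_y = f(x1 + dx1, x2 + dx2) - f(x1, x2)
    return abs(delta_y - total_differential(x1, x2, dx1, dx2)) / math.hypot(dx1, dx2)

for h in (1e-1, 1e-2, 1e-3):
    # Shrink both increments together along the direction (1, -2)
    print(h, scaled_error(1.0, 2.0, h, -2 * h))
```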

Application of the total differential to error estimation

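In measurement, the total differential is used in estimating the error $\Delta f$ of a function $f$ based on the errors $\Delta x, \Delta y, \dots$ of the parameters $x, y, \dots$. Assuming that the interval is short enough for the change to be approximately linear:

$$\Delta f(x) = f'(x)\,\Delta x$$

and that all variables are independent, then for all variables,

$$\Delta f = |f_x|\,\Delta x + |f_y|\,\Delta y + \cdots$$

This is because the derivative $f_x$ with respect to the particular parameter $x$ gives the sensitivity of the function $f$ to a change in $x$, in particular to the error $\Delta x$. As the variables are assumed to be independent, the analysis describes the worst-case scenario. The absolute values of the component errors are used because a derivative may be negative, and in the worst case errors of opposite sign are not allowed to cancel. From this principle the error rules of summation, multiplication, etc. are derived. For example, let $f(a, b) = ab$. Then

$$\Delta f = |f_a|\,\Delta a + |f_b|\,\Delta b = |b|\,\Delta a + |a|\,\Delta b,$$

and dividing by $|f| = |ab|$ gives

$$\frac{\Delta f}{|f|} = \frac{\Delta a}{|a|} + \frac{\Delta b}{|b|}.$$

That is to say, in multiplication, the total relative error is the sum of the relative errors of the parameters.

To illustrate how this depends on the function considered, consider the case where the function is $f(a, b) = a \ln b$ instead. Then, it can be computed that the error estimate is

$$\frac{\Delta f}{|f|} = \frac{\Delta a}{|a|} + \frac{\Delta b}{|b|\,|\ln b|}.$$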
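The two error rules can be reproduced with a short worst-case propagation routine. This is a sketch under stated assumptions: the helper name `worst_case_error` is hypothetical, and the measured values a = 3, b = 5 with absolute errors 0.03 and 0.1 are made up for illustration. It sums |∂f/∂xᵢ|·Δxᵢ and compares the resulting relative error with the closed-form rules for a·b and a·ln b.

```python
import math

def worst_case_error(partials, errors):
    """Linear worst-case estimate: Delta f = sum_i |df/dx_i| * Delta x_i."""
    return sum(abs(p) * e for p, e in zip(partials, errors))

# Hypothetical measured values and absolute errors
a, b = 3.0, 5.0
da, db = 0.03, 0.1

# f(a, b) = a * b, with partial derivatives (f_a, f_b) = (b, a)
df_prod = worst_case_error((b, a), (da, db))
rel_prod = df_prod / abs(a * b)
print(rel_prod, da / abs(a) + db / abs(b))   # equal up to rounding

# f(a, b) = a * ln(b), with partial derivatives (f_a, f_b) = (ln b, a / b)
df_log = worst_case_error((math.log(b), a / b), (da, db))
rel_log = df_log / abs(a * math.log(b))
print(rel_log, da / abs(a) + db / (abs(b) * abs(math.log(b))))
```

Passing the partial derivatives in explicitly keeps the routine independent of any particular f; in practice they would come from the same hand differentiation used in the formulas above.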

Higher-order differentials

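Higher-order differentials of a function y = f(x) of a single variable x can be defined via:

$$d^2y = d(dy) = d\big(f'(x)\,dx\big) = \big(df'(x)\big)\,dx = f''(x)\,(dx)^2,$$

and, in general,

$$d^ny = f^{(n)}(x)\,(dx)^n.$$

When the independent variable x itself is permitted to depend on other variables, the expression becomes more complicated, as it must also include higher-order differentials of x itself. Thus, for instance,

$$\begin{aligned} d^2y &= f''(x)\,(dx)^2 + f'(x)\,d^2x\\ d^3y &= f'''(x)\,(dx)^3 + 3f''(x)\,dx\,d^2x + f'(x)\,d^3x \end{aligned}$$

and so forth.

Similar considerations apply to defining higher order differentials of functions of several variables. For example, if f is a function of two variables x and y, then

$$d^nf = \sum_{k=0}^{n} \binom{n}{k} \frac{\partial^n f}{\partial x^k\,\partial y^{n-k}}\,(dx)^k\,(dy)^{n-k},$$

where $\binom{n}{k}$ is a binomial coefficient. In more variables, an analogous expression holds, with a multinomial rather than a binomial expansion.

Higher order differentials in several variables also become more complicated when the independent variables are themselves allowed to depend on other variables. For instance, for a function f of x and y which are allowed to depend on auxiliary variables, one has

$$d^2f = \left(\frac{\partial^2 f}{\partial x^2}(dx)^2 + 2\frac{\partial^2 f}{\partial x\,\partial y}\,dx\,dy + \frac{\partial^2 f}{\partial y^2}(dy)^2\right) + \frac{\partial f}{\partial x}\,d^2x + \frac{\partial f}{\partial y}\,d^2y.$$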

Because of this notational awkwardness, the use of higher order differentials was roundly criticized by Hadamard (1935), who concluded:

Enfin, que signifie ou que représente l'égalité $d^2z = r\,dx^2 + 2s\,dx\,dy + t\,dy^2$ ?

A mon avis, rien du tout.

That is: Finally, what is meant, or represented, by the equality [...]? In my opinion, nothing at all. In spite of this skepticism, higher order differentials did emerge as an important tool in analysis.

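In these contexts, the n-th order differential of the function f applied to an increment Δx is defined by

$$d^nf(x, \Delta x) = \left.\frac{d^n}{dt^n} f(x + t\,\Delta x)\right|_{t=0}$$

or an equivalent expression, such as

$$\lim_{t \to 0} \frac{\Delta^n_{t\Delta x} f(x)}{t^n},$$

where $\Delta^n_{t\Delta x} f$ is the n-th forward difference with increment $t\,\Delta x$.

This definition makes sense as well if f is a function of several variables (for simplicity taken here as a vector argument). Then the n-th differential defined in this way is a homogeneous function of degree n in the vector increment Δx. Furthermore, the Taylor series of f at the point x is given by

$$f(x + \Delta x) \sim f(x) + df(x, \Delta x) + \frac{1}{2}\,d^2f(x, \Delta x) + \cdots + \frac{1}{n!}\,d^nf(x, \Delta x) + \cdots$$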
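The definition of dⁿf through the scalar parameter t, and the Taylor expansion built from it, can be tried out numerically. The sketch below assumes an example function f(x1, x2) = e^{x1} sin x2 and hypothetical helper names; it approximates df and d²f with central finite differences in t (an approximation to the exact derivative, valid here for n = 1, 2 only), compares d²f with the quadratic form obtained from hand-computed second partial derivatives, and checks that the second-order Taylor polynomial lies close to f(x + Δx).

```python
import math

def f(v):
    # Illustrative function of a vector argument: f(x1, x2) = exp(x1) * sin(x2)
    x1, x2 = v
    return math.exp(x1) * math.sin(x2)

def nth_differential_fd(f, x, dx, n, t=1e-3):
    """Approximate d^n f(x, dx) = d^n/dt^n f(x + t*dx) at t = 0
    by a central finite difference in the scalar parameter t."""
    g = lambda s: f([xi + s * di for xi, di in zip(x, dx)])
    if n == 1:
        return (g(t) - g(-t)) / (2 * t)
    if n == 2:
        return (g(t) - 2 * g(0.0) + g(-t)) / t**2
    raise ValueError("this sketch only handles n = 1 or n = 2")

def second_differential_exact(x, dx):
    # Quadratic form (homogeneous of degree 2 in dx) from the hand-computed
    # second partials: f_11 = e^x1 sin x2, f_12 = e^x1 cos x2, f_22 = -e^x1 sin x2
    x1, x2 = x
    d1, d2 = dx
    e = math.exp(x1)
    return e * (math.sin(x2) * d1**2 + 2 * math.cos(x2) * d1 * d2 - math.sin(x2) * d2**2)

x, dx = [0.5, 1.0], [0.1, -0.2]
d1f = nth_differential_fd(f, x, dx, 1)
d2f = nth_differential_fd(f, x, dx, 2)
taylor2 = f(x) + d1f + d2f / 2                 # second-order Taylor polynomial
actual = f([x[0] + dx[0], x[1] + dx[1]])
print(d2f, second_differential_exact(x, dx))
print(taylor2, actual)
```

Doubling the increment multiplies `second_differential_exact` by four, which is the degree-2 homogeneity mentioned in the text.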

Properties

A number of properties of the differential follow in a straightforward manner from the corresponding properties of the derivative, partial derivative, and total derivative. These include:

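- Linearity: For constants a and b and differentiable functions f and g, $d(af + bg) = a\,df + b\,dg$.

- Product rule: For two differentiable functions f and g, $d(fg) = f\,dg + g\,df$.

An operation d with these two properties is known in abstract algebra as a derivation. They imply the power rule $d(f^n) = n f^{n-1}\,df$. In addition, various forms of the chain rule hold, in increasing level of generality:

- If y = f(u) is a differentiable function of the variable u and u = g(x) is a differentiable function of x, then $dy = f'(u)\,du = f'(g(x))\,g'(x)\,dx$.

- If y = f(x1, ..., xn) and all of the variables x1, ..., xn depend on another variable t, then by the chain rule for partial derivatives, one has $dy = \frac{dy}{dt}\,dt = \frac{\partial y}{\partial x_1}dx_1 + \cdots + \frac{\partial y}{\partial x_n}dx_n = \frac{\partial y}{\partial x_1}\frac{dx_1}{dt}\,dt + \cdots + \frac{\partial y}{\partial x_n}\frac{dx_n}{dt}\,dt.$ Heuristically, the chain rule for several variables can itself be understood by dividing through both sides of this equation by the infinitely small quantity dt.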

- More general analogous expressions hold, in which the intermediate variables xi depend on more than one variable.
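The several-variable chain rule for differentials can be checked against a direct computation. In this sketch the function y = x1·x2², the path x1(t) = cos t, x2(t) = t², and all helper names are illustrative assumptions; it forms dy = Σ (∂y/∂xᵢ)(dxᵢ/dt) dt and compares it with a central-difference estimate of (d/dt) f(x(t)) · dt.

```python
import math

# Illustrative choices: y = f(x1, x2) = x1 * x2**2, with x1(t) = cos t, x2(t) = t**2
def f(x1, x2):
    return x1 * x2**2

def partials(x1, x2):
    return x2**2, 2 * x1 * x2          # (dy/dx1, dy/dx2)

def path(t):
    return math.cos(t), t**2

def path_velocity(t):
    return -math.sin(t), 2 * t         # (dx1/dt, dx2/dt)

def dy_chain_rule(t, dt):
    """dy = sum_i (dy/dx_i) (dx_i/dt) dt."""
    p = partials(*path(t))
    v = path_velocity(t)
    return sum(pi * vi for pi, vi in zip(p, v)) * dt

def dy_direct(t, dt):
    """Central-difference estimate of (d/dt f(x(t))) * dt, for comparison."""
    h = 1e-6
    return (f(*path(t + h)) - f(*path(t - h))) / (2 * h) * dt

t, dt = 0.7, 0.01
print(dy_chain_rule(t, dt), dy_direct(t, dt))
```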

General formulation

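A consistent notion of differential can be developed for a function $f : \mathbb{R}^n \to \mathbb{R}^m$ between two Euclidean spaces. Let $x, \Delta x \in \mathbb{R}^n$ be a pair of Euclidean vectors. The increment in the function $f$ is

$$\Delta f = f(x + \Delta x) - f(x).$$

If there exists an $m \times n$ matrix $A$ such that

$$\Delta f = A\,\Delta x + \|\Delta x\|\,\varepsilon,$$

in which the vector $\varepsilon \to 0$ as $\Delta x \to 0$, then $f$ is by definition differentiable at the point $x$. The matrix $A$ is the Jacobian matrix of $f$ at $x$, and the linear map $\Delta x \mapsto A\,\Delta x$ is the differential $df(x)$ of $f$ at $x$; this is the Fréchet derivative in the finite-dimensional setting.

Another fruitful point of view is to define the differential directly as a kind of directional derivative:

$$df(x, h) = \lim_{t \to 0} \frac{f(x + th) - f(x)}{t} = \left.\frac{d}{dt} f(x + th)\right|_{t=0}.$$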
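For a differentiable map the Jacobian view and the directional-derivative view give the same linear map. The sketch below assumes an illustrative map f(x, y) = (x² − y, xy) from R² to R², with a hand-computed Jacobian and hypothetical helper names; it computes A·h and shows the difference quotient (f(x + th) − f(x))/t approaching it as t shrinks.

```python
def f(v):
    # Illustrative map R^2 -> R^2: f(x, y) = (x**2 - y, x*y)
    x, y = v
    return [x**2 - y, x * y]

def jacobian(v):
    # Hand-computed Jacobian matrix A of f at v
    x, y = v
    return [[2 * x, -1.0],
            [y, x]]

def matvec(A, h):
    return [sum(a * hj for a, hj in zip(row, h)) for row in A]

def directional_quotient(f, x, h, t):
    """(f(x + t h) - f(x)) / t, whose limit as t -> 0 is df(x, h)."""
    xt = [xi + t * hi for xi, hi in zip(x, h)]
    return [(a - b) / t for a, b in zip(f(xt), f(x))]

x, h = [1.0, 2.0], [0.5, -1.0]
print("A h =", matvec(jacobian(x), h))   # [2.0, 0.0]
for t in (1e-1, 1e-3, 1e-5):
    print(t, directional_quotient(f, x, h, t))
```

For this particular f the quotient works out to (2 + 0.25t, −0.5t), so the convergence to A·h is linear in t.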

Other approaches

Although the notion of having an infinitesimal increment dx is not well-defined in modern mathematical analysis, a variety of techniques exist for defining the infinitesimal differential so that the differential of a function can be handled in a manner that does not clash with the Leibniz notation. These include:

- Defining the differential as a kind of differential form, specifically the exterior derivative of a function. The infinitesimal increments are then identified with vectors in the tangent space at a point. This approach is popular in differential geometry and related fields, because it readily generalizes to mappings between differentiable manifolds.

- Differentials as nilpotent elements of commutative rings. This approach is popular in algebraic geometry.

- Differentials in smooth models of set theory. This approach is known as synthetic differential geometry or smooth infinitesimal analysis and is closely related to the algebraic geometric approach, except that ideas from topos theory are used to hide the mechanisms by which nilpotent infinitesimals are introduced.

- Differentials as infinitesimals in hyperreal number systems, which are extensions of the real numbers which contain invertible infinitesimals and infinitely large numbers. This is the approach of nonstandard analysis pioneered by Abraham Robinson.
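To make the nilpotent approach concrete, the sketch below uses dual numbers a + bε with ε² = 0, the simplest commutative ring containing a nonzero nilpotent element. This is an illustration under that assumption, not the algebraic-geometry construction itself, and the class and function names are hypothetical. For the operations defined here, evaluating f at x + Δx·ε yields f(x) + f'(x)Δx·ε, so the ε-coefficient is the differential df(x, Δx); the multiplication rule is exactly the product rule. The same idea underlies forward-mode automatic differentiation.

```python
import math

class Dual:
    """Dual number a + b*eps with eps**2 = 0 (eps is a nilpotent element)."""

    def __init__(self, a, b=0.0):
        self.a = float(a)  # standard part
        self.b = float(b)  # coefficient of eps

    @staticmethod
    def lift(x):
        # Treat a plain real number c as c + 0*eps
        return x if isinstance(x, Dual) else Dual(x, 0.0)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.a + other.a, self.b + other.b)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps, since eps**2 = 0
        return Dual(self.a * other.a, self.a * other.b + self.b * other.a)

    __rmul__ = __mul__

def dual_sin(x):
    # sin(a + b eps) = sin(a) + cos(a) * b eps
    return Dual(math.sin(x.a), math.cos(x.a) * x.b)

def differential(f, x, dx):
    """df(x, dx): the eps-coefficient of f(x + dx*eps)."""
    return f(Dual(x, dx)).b

def f(x):
    # Illustrative function: f(x) = x**3 + 2 sin(x), so f'(x) = 3x**2 + 2 cos(x)
    return x * x * x + 2 * dual_sin(x)

print(differential(f, 1.0, 0.1))
```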

Examples and applications

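Differentials may be effectively used in numerical analysis to study the propagation of experimental errors in a calculation, and thus the overall numerical stability of a problem (Courant 1937a). Suppose that the variable x represents the outcome of an experiment and y = f(x) is the result of a numerical computation applied to x. The question is to what extent errors in the measurement of x influence the outcome of the computation of y. If x is known to within Δx of its true value (and f is twice differentiable), then Taylor's theorem gives the following estimate on the error Δy in the computation of y:

$$\Delta y = f'(x)\,\Delta x + \frac{f''(\xi)}{2}\,(\Delta x)^2,$$

where $\xi = x + \theta\,\Delta x$ for some $0 < \theta < 1$. If Δx is small, the second-order term is negligible, so that Δy is, for practical purposes, well approximated by $dy = f'(x)\,\Delta x$.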
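The Taylor estimate can be turned into a concrete bound. The sketch below assumes y = √x, a hypothetical measurement x = 4 known to within 0.01, and a hypothetical helper name; it computes dy, the actual Δy, and the bound max|f''|·(Δx)²/2 on their difference, taking the maximum of |f''| over the interval between x − |Δx| and x + |Δx| (which requires x − |Δx| > 0).

```python
import math

def sqrt_error_estimate(x, dx):
    """For y = sqrt(x): return (dy, Delta y, bound on |Delta y - dy|).
    Assumes x - |dx| > 0."""
    dy = dx / (2 * math.sqrt(x))                  # dy = f'(x) dx
    delta_y = math.sqrt(x + dx) - math.sqrt(x)    # actual change Delta y
    # |f''(xi)| = 1 / (4 * xi**1.5) is decreasing in xi, so on the interval
    # [x - |dx|, x + |dx|] it is largest at the left endpoint
    max_f2 = 1 / (4 * (x - abs(dx)) ** 1.5)
    bound = max_f2 / 2 * dx**2                    # max|f''| / 2 * (dx)^2
    return dy, delta_y, bound

# Hypothetical measurement: x = 4.0 known to within 0.01
dy, delta_y, bound = sqrt_error_estimate(4.0, 0.01)
print(dy, delta_y, abs(delta_y - dy), bound)
```

Here the discrepancy between Δy and dy is of order 10⁻⁶, roughly three orders of magnitude below dy itself, which is the sense in which the differential is "well approximated" for small Δx.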

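The differential is often useful to rewrite a differential equation

$$\frac{dy}{dx} = g(x)$$

in the form

$$dy = g(x)\,dx,$$

in particular when one wants to separate the variables.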

