Atheism

From Wikipedia, the free encyclopedia

Atheism is, in a broad sense, the rejection of belief in the existence of deities.[1][2] In a narrower sense, atheism is specifically the position that there are no deities.[3][4][5] Most inclusively, atheism is the absence of belief that any deities exist.[4][5][6][7] Atheism is contrasted with theism,[8][9] which in its most general form is the belief that at least one deity exists.[9][10]

The term atheism originated from the Greek ἄθεος (atheos), meaning "without god(s)", used as a pejorative term applied to those thought to reject the gods worshiped by the larger society. With the spread of freethought, skeptical inquiry, and subsequent increase in criticism of religion, application of the term narrowed in scope. The first individuals to identify themselves using the word "atheist" lived in the 18th century.[11] Some ancient and modern religions are referred to as atheistic, as they either have no concepts of deities or deny a creator deity, yet still revere other god-like entities.

Arguments for atheism range from the philosophical to social and historical approaches. Rationales for not believing in any supernatural deity include the lack of empirical evidence,[12][13] the problem of evil, the argument from inconsistent revelations, rejection of concepts which cannot be falsified, and the argument from nonbelief.[12][14] Although some atheists have adopted secular philosophies,[15][16] there is no one ideology or set of behaviors to which all atheists adhere.[17] Many atheists hold that atheism is a more parsimonious worldview than theism, and therefore the burden of proof lies not on the atheist to disprove the existence of God, but on the theist to provide a rationale for theism.[18]

Since conceptions of atheism vary, determining how many atheists exist in the world today is difficult.[19] According to one 2007 estimate, atheists make up about 2.3% of the world's population, while a further 11.9% are nonreligious.[20] According to a 2012 global poll conducted by WIN/GIA, 13% of the participants said they were atheists.[21] According to other studies, rates of atheism are among the highest in Western nations, though they vary considerably from country to country: for example, 4% in the United States[22] and 28% in Canada.[23] A 2010 Eurobarometer survey in the European Union (EU) reported that 20% of the EU population do not believe in "any sort of spirit, God or life force".[24]

Writers disagree how best to define and classify atheism,[25] contesting what supernatural entities it applies to, whether it is an assertion in its own right or merely the absence of one, and whether it requires a conscious, explicit rejection. Atheism has been regarded as compatible with agnosticism,[26][27][28][29][30][31][32] and has also been contrasted with it.[33][34][35] A variety of categories have been used to distinguish the different forms of atheism.

With respect to the range of phenomena being rejected, atheism may counter anything from the existence of a deity, to the existence of any spiritual, supernatural, or transcendental concepts, such as those of Buddhism, Hinduism, Jainism and Taoism.[37]

While Martin, for example, asserts that agnosticism entails negative atheism,[29] many agnostics see their view as distinct from atheism,[43][44] which they may consider no more justified than theism or requiring an equal conviction.[43] The assertion of unattainability of knowledge for or against the existence of gods is sometimes seen as indication that atheism requires a leap of faith.[45][46] Common atheist responses to this argument include that unproven religious propositions deserve as much disbelief as all other unproven propositions,[47] and that the unprovability of a god's existence does not imply equal probability of either possibility.[48] Scottish philosopher J. J. C. Smart even argues that "sometimes a person who is really an atheist may describe herself, even passionately, as an agnostic because of unreasonable generalised philosophical skepticism which would preclude us from saying that we know anything whatever, except perhaps the truths of mathematics and formal logic."[49] Consequently, some atheist authors such as Richard Dawkins prefer distinguishing theist, agnostic and atheist positions along a spectrum of theistic probability—the likelihood that each assigns to the statement "God exists".[50]

There is also a position claiming that atheists are quick to believe in God in times of crisis, that atheists make deathbed conversions, or that "there are no atheists in foxholes".[52] There have, however, been examples to the contrary, among them literal "atheists in foxholes".[53]

Some atheists have doubted the very need for the term "atheism". In his book Letter to a Christian Nation, Sam Harris wrote:

The broadest demarcation of atheistic rationale is between practical and theoretical atheism.

Practical atheism can take various forms:

Other arguments for atheism that can be classified as epistemological or ontological, including logical positivism and ignosticism, assert the meaninglessness or unintelligibility of basic terms such as "God" and statements such as "God is all-powerful." Theological noncognitivism holds that the statement "God exists" does not express a proposition, but is nonsensical or cognitively meaningless. Whether such individuals should be classified as atheists or as agnostics has been argued both ways. Philosophers A. J. Ayer and Theodore M. Drange reject both categories, stating that both camps accept "God exists" as a proposition; they instead place noncognitivism in its own category.[60][61]

Theodicean atheists believe that the world as they experience it cannot be reconciled with the qualities commonly ascribed to God and gods by theologians. They argue that an omniscient, omnipotent, and omnibenevolent God is not compatible with a world where there is evil and suffering, and where divine love is hidden from many people.[14] A similar argument is attributed to Siddhartha Gautama, the founder of Buddhism.[64]

French philosopher Jean-Paul Sartre identified himself as a representative of an "atheist existentialism"[78] concerned less with denying the existence of God than with establishing that "man needs ... to find himself again and to understand that nothing can save him from himself, not even a valid proof of the existence of God."[79] Sartre said a corollary of his atheism was that "if God does not exist, there is at least one being in whom existence precedes essence, a being who exists before he can be defined by any concept, and ... this being is man."[78] The practical consequence of this atheism was described by Sartre as meaning that there are no a priori rules or absolute values that can be invoked to govern human conduct, and that humans are "condemned" to invent these for themselves, making "man" absolutely "responsible for everything he does".[80]

People who self-identify as atheists are often assumed to be irreligious, but some sects within major religions reject the existence of a personal, creator deity.[86] In recent years, certain religious denominations have accumulated a number of openly atheistic followers, such as atheistic or humanistic Judaism[87][88] and Christian atheists.[89][90][91]

The strictest sense of positive atheism does not entail any specific beliefs outside of disbelief in any deity; as such, atheists can hold any number of spiritual beliefs. For the same reason, atheists can hold a wide variety of ethical beliefs, ranging from the moral universalism of humanism, which holds that a moral code should be applied consistently to all humans, to moral nihilism, which holds that morality is meaningless.[92]

Philosophers such as Georges Bataille, Slavoj Žižek,[93] Alain de Botton,[94] and Alexander Bard and Jan Söderqvist[95] have all argued that atheists should reclaim religion as an act of defiance against theism, precisely so as not to leave religion an unwarranted monopoly of theists.

Several normative ethical systems do not require principles and rules to be given by a deity; these include virtue ethics, social contract theory, Kantian ethics, utilitarianism, and Objectivism. Sam Harris has proposed that moral prescription (ethical rule making) is not just an issue to be explored by philosophy, but that we can meaningfully practice a science of morality. Any such scientific system must, nevertheless, respond to the criticism embodied in the naturalistic fallacy.[103]

Philosophers Susan Neiman[104] and Julian Baggini[105] (among others) assert that behaving ethically only because of divine mandate is not true ethical behavior but merely blind obedience. Baggini argues that atheism is a superior basis for ethics, claiming that a moral basis external to religious imperatives is necessary to evaluate the morality of the imperatives themselves—to be able to discern, for example, that "thou shalt steal" is immoral even if one's religion instructs it—and that atheists, therefore, have the advantage of being more inclined to make such evaluations.[106] The contemporary British political philosopher Martin Cohen has offered the more historically telling example of Biblical injunctions in favour of torture and slavery as evidence of how religious injunctions follow political and social customs, rather than vice versa, but also noted that the same tendency seems to be true of supposedly dispassionate and objective philosophers.[107] Cohen extends this argument in more detail in Political Philosophy from Plato to Mao, where he argues that the Qur'an played a role in perpetuating social codes from the early 7th century despite changes in secular society.[108]

The 19th-century German political theorist and sociologist Karl Marx criticised religion as "the sigh of the oppressed creature, the heart of a heartless world, and the soul of soulless conditions. It is the opium of the people". He went on to say, "The abolition of religion as the illusory happiness of the people is the demand for their real happiness. To call on them to give up their illusions about their condition is to call on them to give up a condition that requires illusions. The criticism of religion is, therefore, in embryo, the criticism of that vale of tears of which religion is the halo."[110] Lenin said that every religious idea and every idea of God "is unutterable vileness ... of the most dangerous kind, 'contagion' of the most abominable kind. Millions of sins, filthy deeds, acts of violence and physical contagions ... are far less dangerous than the subtle, spiritual idea of God decked out in the smartest ideological costumes ..."[111]

Sam Harris criticises Western religion's reliance on divine authority as lending itself to authoritarianism and dogmatism.[112] There is a correlation between religious fundamentalism and extrinsic religion (when religion is held because it serves ulterior interests)[113] and authoritarianism, dogmatism, and prejudice.[114] These arguments, combined with historical events that are argued to demonstrate the dangers of religion, such as the Crusades, inquisitions, witch trials, and terrorist attacks, have been used in response to claims of beneficial effects of belief in religion.[115] Believers counter-argue that some regimes that espouse atheism, such as in Soviet Russia, have also been guilty of mass murder.[116][117] In response to those claims, atheists such as Sam Harris and Richard Dawkins have stated that Stalin's atrocities were influenced not by atheism but by dogmatic Marxism, and that while Stalin and Mao happened to be atheists, they did not do their deeds in the name of atheism.[118][119]

In early ancient Greek, the adjective átheos (ἄθεος, from the privative ἀ- + θεός "god") meant "godless". It was first used as a term of censure roughly meaning "ungodly" or "impious". In the 5th century BCE, the word began to indicate more deliberate and active godlessness in the sense of "severing relations with the gods" or "denying the gods". The term ἀσεβής (asebēs) then came to be applied against those who impiously denied or disrespected the local gods, even if they believed in other gods. Modern translations of classical texts sometimes render átheos as "atheistic". As an abstract noun, there was also ἀθεότης (atheotēs), "atheism". Cicero transliterated the Greek word into the Latin átheos. The term found frequent use in the debate between early Christians and Hellenists, with each side attributing it, in the pejorative sense, to the other.[121]

The term atheist (from Fr. athée), in the sense of "one who ... denies the existence of God or gods",[122] predates atheism in English, being first found as early as 1566,[123] and again in 1571.[124] Atheist as a label of practical godlessness was used at least as early as 1577.[125] The term atheism was derived from the French athéisme,[126] and appears in English about 1587.[127] An earlier work, from about 1534, used the term atheonism.[128][129] Related words emerged later: deist in 1621,[130] theist in 1662,[131] deism in 1675,[132] and theism in 1678.[133] At that time "deist" and "deism" already carried their modern meaning. The term theism came to be contrasted with deism.

Karen Armstrong writes that "During the sixteenth and seventeenth centuries, the word 'atheist' was still reserved exclusively for polemic ... The term 'atheist' was an insult. Nobody would have dreamed of calling himself an atheist."[11]

Atheism was first used to describe a self-avowed belief in late 18th-century Europe, specifically denoting disbelief in the monotheistic Abrahamic god.[134][135] In the 20th century, globalization contributed to the expansion of the term to refer to disbelief in all deities, though it remains common in Western society to describe atheism as simply "disbelief in God".[36]

Western atheism has its roots in pre-Socratic Greek philosophy, but did not emerge as a distinct world-view until the late Enlightenment.[141] The 5th-century BCE Greek philosopher Diagoras is known as the "first atheist",[142] and is cited as such by Cicero in his De Natura Deorum.[143] Atomists such as Democritus attempted to explain the world in a purely materialistic way, without reference to the spiritual or mystical. Critias viewed religion as a human invention used to frighten people into following moral order,[144] and Prodicus also appears to have made clear atheistic statements in his work. Philodemus reports that Prodicus believed that "the gods of popular belief do not exist nor do they know, but primitive man, [out of admiration, deified] the fruits of the earth and virtually everything that contributed to his existence". Protagoras has sometimes been taken to be an atheist but rather espoused agnostic views, commenting that "Concerning the gods I am unable to discover whether they exist or not, or what they are like in form; for there are many hindrances to knowledge, the obscurity of the subject and the brevity of human life."[145] In the 3rd century BCE, the Greek philosophers Theodorus Cyrenaicus[143][146] and Strato of Lampsacus[147] did not believe gods exist.

Socrates (c. 470–399 BCE) was associated in the Athenian public mind with the trends in pre-Socratic philosophy towards naturalistic inquiry and the rejection of divine explanations for phenomena. Although such an interpretation misrepresents his thought, he was portrayed in such a way in Aristophanes' comic play Clouds and was later tried and executed for impiety and corrupting the young. At his trial, Socrates is reported as vehemently denying that he was an atheist, and contemporary scholarship provides little reason to doubt this claim.[148][149]

Euhemerus (c. 300 BCE) published his view that the gods were only the deified rulers, conquerors and founders of the past, and that their cults and religions were in essence the continuation of vanished kingdoms and earlier political structures.[150] Although not strictly an atheist, Euhemerus was later criticized for having "spread atheism over the whole inhabited earth by obliterating the gods".[151]

Also important in the history of atheism was Epicurus (c. 300 BCE). Drawing on the ideas of Democritus and the Atomists, he espoused a materialistic philosophy according to which the universe was governed by the laws of chance without the need for divine intervention (see scientific determinism). Although he stated that deities existed, he believed that they were uninterested in human existence. The aim of the Epicureans was to attain peace of mind and one important way of doing this was by exposing fear of divine wrath as irrational. The Epicureans also denied the existence of an afterlife and the need to fear divine punishment after death.[152]

The Roman philosopher Sextus Empiricus held that one should suspend judgment about virtually all beliefs—a form of skepticism known as Pyrrhonism—that nothing was inherently evil, and that ataraxia ("peace of mind") is attainable by withholding one's judgment. His relatively large volume of surviving works had a lasting influence on later philosophers.[153]

The meaning of "atheist" changed over the course of classical antiquity. The early Christians were labeled atheists by non-Christians because of their disbelief in pagan gods.[154] During the Roman Empire, Christians were executed for their rejection of the Roman gods in general and Emperor-worship in particular. When Christianity became the state religion of Rome under Theodosius I in 381, heresy became a punishable offense.[155]

In Europe, the espousal of atheistic views was rare during the Early Middle Ages and Middle Ages (see Medieval Inquisition); metaphysics and theology were the dominant interests pertaining to religion.[159] There were, however, movements within this period that furthered heterodox conceptions of the Christian god, including differing views of the nature, transcendence, and knowability of God. Individuals and groups such as Johannes Scotus Eriugena, David of Dinant, Amalric of Bena, and the Brethren of the Free Spirit maintained Christian viewpoints with pantheistic tendencies. Nicholas of Cusa held to a form of fideism he called docta ignorantia ("learned ignorance"), asserting that God is beyond human categorization, and thus our knowledge of him is limited to conjecture. William of Ockham inspired anti-metaphysical tendencies with his nominalistic limitation of human knowledge to singular objects, and asserted that the divine essence could not be intuitively or rationally apprehended by human intellect. Followers of Ockham, such as John of Mirecourt and Nicholas of Autrecourt, furthered this view. The resulting division between faith and reason influenced later radical and reformist theologians such as John Wycliffe, Jan Hus, and Martin Luther.[159]

The Renaissance did much to expand the scope of free thought and skeptical inquiry. Individuals such as Leonardo da Vinci sought experimentation as a means of explanation, and opposed arguments from religious authority. Other critics of religion and the Church during this time included Niccolò Machiavelli, Bonaventure des Périers, and François Rabelais.[153]

Criticism of Christianity became increasingly frequent in the 17th and 18th centuries, especially in France and England, where there appears to have been a religious malaise, according to contemporary sources. Some Protestant thinkers, such as Thomas Hobbes, espoused a materialist philosophy and skepticism toward supernatural occurrences, while the Jewish-Dutch philosopher Spinoza rejected divine providence in favour of a panentheistic naturalism. By the late 17th century, deism came to be openly espoused by intellectuals such as John Toland, who coined the term "pantheist".[162]

The first known explicit atheist was the German critic of religion Matthias Knutzen in his three writings of 1674.[163] He was followed by two other explicit atheist writers, the Polish ex-Jesuit philosopher Kazimierz Łyszczyński and, in the 1720s, the French priest Jean Meslier.[164] In the course of the 18th century, other openly atheistic thinkers followed, such as Baron d'Holbach, Jacques-André Naigeon, and other French materialists.[165] John Locke, in contrast, though an advocate of tolerance, urged authorities not to tolerate atheism, believing that the denial of God's existence would undermine the social order and lead to chaos.[166]

The philosopher David Hume developed a skeptical epistemology grounded in empiricism, and Immanuel Kant's philosophy strongly questioned the very possibility of metaphysical knowledge. Both philosophers undermined the metaphysical basis of natural theology and criticized classical arguments for the existence of God.

Blainey notes that, although Voltaire is widely considered to have strongly contributed to atheistic thinking during the Revolution, he also considered fear of God to have discouraged further disorder, having said "If God did not exist, it would be necessary to invent him."[167] In Reflections on the Revolution in France (1790), the philosopher Edmund Burke denounced atheism, writing of a "literary cabal" who had "some years ago formed something like a regular plan for the destruction of the Christian religion. This object they pursued with a degree of zeal which hitherto had been discovered only in the propagators of some system of piety ... These atheistical fathers have a bigotry of their own ...". But, Burke asserted, "man is by his constitution a religious animal" and "atheism is against, not only our reason, but our instincts; and ... it cannot prevail long".[168]

Baron d'Holbach was a prominent figure in the French Enlightenment who is best known for his atheism and for his voluminous writings against religion, the most famous of them being The System of Nature (1770) but also Christianity Unveiled. One goal of the French Revolution was a restructuring and subordination of the clergy with respect to the state through the Civil Constitution of the Clergy. Attempts to enforce it led to anti-clerical violence and the expulsion of many clergy from France, lasting until the Thermidorian Reaction. The radical Jacobins seized power in 1793, ushering in the Reign of Terror. The Jacobins were deists and introduced the Cult of the Supreme Being as a new French state religion. Some atheists surrounding Jacques Hébert instead sought to establish a Cult of Reason, a form of atheistic pseudo-religion with a goddess personifying reason.

The Napoleonic era further institutionalized the secularization of French society, and exported the revolution to northern Italy, in the hopes of creating pliable republics.

In the latter half of the 19th century, atheism rose to prominence under the influence of rationalistic and freethinking philosophers. Many prominent German philosophers of this era denied the existence of deities and were critical of religion, including Ludwig Feuerbach, Arthur Schopenhauer, Max Stirner, Karl Marx, and Friedrich Nietzsche.[169]

Atheism in the 20th century, particularly in the form of practical atheism, advanced in many societies. Atheistic thought found recognition in a wide variety of other, broader philosophies, such as existentialism, objectivism, secular humanism, nihilism, anarchism, logical positivism, Marxism, feminism,[170] and the general scientific and rationalist movement. In addition, state atheism emerged in Eastern Europe and Asia during that period, particularly in the Soviet Union under Vladimir Lenin and Joseph Stalin, and in Communist China under Mao Zedong. Atheist and anti-religious policies in the Soviet Union included numerous legislative acts, the outlawing of religious instruction in the schools, and the emergence of the League of Militant Atheists.[171][172]

Blainey wrote that during the twentieth century, atheists in Western societies became more active and even militant, though they often "relied essentially on arguments used by numerous radical Christians since at least the eighteenth century". They rejected the idea of an interventionist God, and said that Christianity promoted war and violence, though "the most ruthless leaders in the Second World War were atheists and secularists who were intensely hostile to both Judaism and Christianity" and "Later massive atrocities were committed in the East by those ardent atheists, Pol Pot and Mao Zedong". Some scientists were meanwhile articulating a view that as the world becomes more educated, religion would be superseded.[173]

Logical positivism and scientism paved the way for neopositivism, analytical philosophy, structuralism, and naturalism. Neopositivism and analytical philosophy discarded classical rationalism and metaphysics in favor of strict empiricism and epistemological nominalism.

Proponents such as Bertrand Russell emphatically rejected belief in God. In his early work, Ludwig Wittgenstein attempted to separate metaphysical and supernatural language from rational discourse. A. J. Ayer asserted the unverifiability and meaninglessness of religious statements, citing his adherence to the empirical sciences. Relatedly, the applied structuralism of Lévi-Strauss traced religious language to the human subconscious, denying it any transcendental meaning. J. N. Findlay and J. J. C. Smart argued that the existence of God is not logically necessary. Naturalists and materialistic monists such as John Dewey considered the natural world to be the basis of everything, denying the existence of God or immortality.[49][174]

Other leaders like E. V. Ramasami Naicker (Periyar), a prominent atheist leader of India, fought against Hinduism and Brahmins for discriminating and dividing people in the name of caste and religion.[175] This was highlighted in 1956 when he arranged for the erection of a statue depicting a Hindu god in a humble representation and made antitheistic statements.[176]

Atheist Vashti McCollum was the plaintiff in a landmark 1948 Supreme Court case that struck down religious education in US public schools.[177] Madalyn Murray O'Hair was perhaps one of the most influential American atheists; she brought forth the 1963 Supreme Court case Murray v. Curlett which banned compulsory prayer in public schools.[178] In 1966, Time magazine asked "Is God Dead?"[179] in response to the Death of God theological movement, citing the estimation that nearly half of all people in the world lived under an anti-religious power, and millions more in Africa, Asia, and South America seemed to lack knowledge of the Christian view of theology.[180] The Freedom From Religion Foundation was co-founded by Anne Nicol Gaylor and her daughter, Annie Laurie Gaylor, in 1976 in the United States, and incorporated nationally in 1978. It promotes the separation of church and state.[181][182]

Since the fall of the Berlin Wall, the number of actively anti-religious regimes has reduced considerably. In 2006, Timothy Shah of the Pew Forum noted "a worldwide trend across all major religious groups, in which God-based and faith-based movements in general are experiencing increasing confidence and influence vis-à-vis secular movements and ideologies."[183] However, Gregory S. Paul and Phil Zuckerman consider this a myth and suggest that the actual situation is much more complex and nuanced.[184]

A 2010 survey found that those identifying themselves as atheists or agnostics are on average more knowledgeable about religion than followers of major faiths. Nonbelievers scored better on questions about tenets central to Protestant and Catholic faiths. Only Mormon and Jewish faithful scored as well as atheists and agnostics.[185]

In 2012, the first "Women in Secularism" conference was held in Arlington, Virginia.[186] Secular Woman was organized in 2012 as a national organization focused on nonreligious women.[187] The atheist feminist movement has also become increasingly focused on fighting sexism and sexual harassment within the atheist movement itself.[188] In August 2012, Jennifer McCreight (the organizer of Boobquake) founded a movement within atheism known as Atheism Plus, or A+, that "applies skepticism to everything, including social issues like sexism, racism, politics, poverty, and crime".[189][190][191]

In 2013 the first atheist monument on American government property was unveiled at the Bradford County Courthouse in Florida: a 1,500-pound granite bench and plinth inscribed with quotes by Thomas Jefferson, Benjamin Franklin, and Madalyn Murray O'Hair.[192][193]

These atheists generally seek to disassociate themselves from the mass political atheism that gained ascendancy in various nations in the 20th century. In best-selling books, the religiously motivated terrorist events of 9/11 and the partially successful attempts of the Discovery Institute to change the American science curriculum to include creationist ideas, together with support for those ideas from George W. Bush in 2005, have been cited by authors such as Harris, Dennett, Dawkins, Stenger and Hitchens as evidence of a need to move society towards atheism.[197]

It is difficult to quantify the number of atheists in the world. Respondents to religious-belief polls may define "atheism" differently or draw different distinctions between atheism, non-religious beliefs, and non-theistic religious and spiritual beliefs.[199] A Hindu atheist, for example, may declare himself or herself a Hindu while also being an atheist.[200] A 2010 survey published in Encyclopædia Britannica found that the non-religious made up about 9.6% of the world's population, and atheists about 2.0%, with a very large majority based in Asia. This figure did not include those who follow atheistic religions, such as some Buddhists.[201] The average annual change for atheism from 2000 to 2010 was −0.17%.[201] A broad figure estimates the number of atheists and agnostics on Earth at 1.1 billion.[202]

The 2012 Gallup Global Index of Religiosity and Atheism asked respondents whether they viewed themselves as "a religious person, not a religious person or a convinced atheist"; 13% reported being "convinced atheists".[203] The top ten countries with people who viewed themselves as "convinced atheists" were China (47%), Japan (31%), the Czech Republic (30%), France (29%), South Korea (15%), Germany (15%), Netherlands (14%), Austria (10%), Iceland (10%), Australia (10%) and the Republic of Ireland (10%). In contrast, the top ten countries with people who described themselves as "a religious person" were Ghana (96%), Nigeria (93%), Armenia (92%), Fiji (92%), Macedonia (90%), Romania (89%), Iraq (88%), Kenya (88%), Peru (86%), and Brazil (85%).[204]

According to the 2010 Eurobarometer Poll, the percentages of the population that agreed with the statement "You don't believe there is any sort of spirit, God or life force" ranged from France (40%), Czech Republic (37%), Sweden (34%), Netherlands (30%), and Estonia (29%), down to Poland (5%), Greece (4%), Cyprus (3%), Malta (2%), and Romania (1%), with the European Union as a whole at 20%.[24] According to the Australian Bureau of Statistics, 22% of Australians have "no religion", a category that includes atheists.[205]

In the United States, self-reported atheism rose from 1% to 5% between 2005 and 2012, while the share of those who self-identified as "religious" dropped by a larger 13 percentage points, from 73% to 60%.[206]

According to a 2012 report by the Pew Research Center, 2.4% of the US adult population identify as atheist, up from 1.6% in 2007, and within the religiously unaffiliated (or "no religion") demographic, atheists made up 12%.[207]

A study noted positive correlations between levels of education and secularity, including atheism, in America.[83]

According to evolutionary psychologist Nigel Barber, atheism blossoms in places where most people feel economically secure, particularly in the social democracies of Europe, as there is less uncertainty about the future with extensive social safety nets and better health care resulting in a greater quality of life and higher life expectancy. By contrast, in underdeveloped countries, there are virtually no atheists.[208]

A letter published in Nature in 1998 reported a survey suggesting that belief in a personal god or afterlife was at an all-time low among members of the U.S. National Academy of Sciences: 7.0% of them believed in a personal god, compared with more than 85% of the general U.S. population.[209] Rodney Stark and Roger Finke have criticized the study for its definition of belief in God, which was "I believe in a God to whom one may pray in the expectation of receiving an answer".[210]

An article in The University of Chicago Chronicle discussing the above study stated that 76% of physicians in the United States believe in God, more than the 7% of scientists in that study but still less than the 85% of the general population.[211] Another study assessing religiosity among scientists who are members of the American Association for the Advancement of Science found that "just over half of scientists (51%) believe in some form of deity or higher power; specifically, 33% of scientists say they believe in God, while 18% believe in a universal spirit or higher power."[212]

Frank Sulloway of the Massachusetts Institute of Technology and Michael Shermer of California State University conducted a study which found that, in their polling sample of "credentialed" U.S. adults (12% had PhDs and 62% were college graduates), 64% believed in God, and that there was a correlation indicating that religious conviction diminished with education level.[213]

In 1958, Professor Michael Argyle of the University of Oxford analyzed seven research studies that had investigated the correlation between attitude to religion and measured intelligence among school and college students from the U.S. Although a clear negative correlation was found, the analysis did not identify causality, noting that factors such as authoritarian family background and social class may also have played a part.[214] Sociologist Philip Schwadel found that higher levels of education are associated with increased religious participation and religious practice in daily life, but also correlate with greater tolerance for atheists' public opposition to religion and greater skepticism of "exclusivist religious viewpoints and biblical literalism".[215] Other studies have also examined the relationship between religiosity and intelligence; in a meta-analysis, 53 of 63 studies found that analytical intelligence correlated negatively with religiosity, with 35 of the 53 reaching statistical significance, while 10 studies found a positive correlation, 2 of which reached significance.[216]

Definitions and distinctions

A diagram showing the relationship between the definitions of weak/strong and implicit/explicit atheism.

Explicit strong/positive/hard atheists (in purple on the right) assert that "at least one deity exists" is a false statement.

Explicit weak/negative/soft atheists (in blue on the right) reject or eschew belief that any deities exist without actually asserting that "at least one deity exists" is a false statement.

Implicit weak/negative atheists (in blue on the left) would include people (such as young children and some agnostics) who do not believe in a deity, but have not explicitly rejected such belief.

(Sizes in the diagram are not meant to indicate relative sizes within a population.)

Writers disagree on how best to define and classify atheism,[25] contesting what supernatural entities it applies to, whether it is an assertion in its own right or merely the absence of one, and whether it requires a conscious, explicit rejection. Atheism has been regarded as compatible with agnosticism,[26][27][28][29][30][31][32] and has also been contrasted with it.[33][34][35] A variety of categories have been used to distinguish the different forms of atheism.

Range

Some of the ambiguity and controversy involved in defining atheism arises from difficulty in reaching a consensus for the definitions of words like deity and god. The plurality of wildly different conceptions of God and deities leads to differing ideas regarding atheism's applicability. The ancient Romans accused Christians of being atheists for not worshiping the pagan deities. Gradually, this view fell into disfavor as theism came to be understood as encompassing belief in any divinity.[36]

With respect to the range of phenomena being rejected, atheism may counter anything from the existence of a deity, to the existence of any spiritual, supernatural, or transcendental concepts, such as those of Buddhism, Hinduism, Jainism and Taoism.[37]

Implicit vs. explicit

Definitions of atheism also vary in the degree of consideration a person must put to the idea of gods to be considered an atheist. Atheism has sometimes been defined to include the simple absence of belief that any deities exist. This broad definition would include newborns and other people who have not been exposed to theistic ideas. As far back as 1772, Baron d'Holbach said that "All children are born Atheists; they have no idea of God."[38] Similarly, George H. Smith (1979) suggested: "The man who is unacquainted with theism is an atheist because he does not believe in a god. This category would also include the child with the conceptual capacity to grasp the issues involved, but who is still unaware of those issues. The fact that this child does not believe in god qualifies him as an atheist."[39] Smith coined the term implicit atheism to refer to "the absence of theistic belief without a conscious rejection of it" and explicit atheism to refer to the more common definition of conscious disbelief. Ernest Nagel contradicts Smith's definition of atheism as merely "absence of theism", acknowledging only explicit atheism as true "atheism".[40]

Positive vs. negative

Philosophers such as Antony Flew[41] and Michael Martin[36] have contrasted positive (strong/hard) atheism with negative (weak/soft) atheism. Positive atheism is the explicit affirmation that gods do not exist. Negative atheism includes all other forms of non-theism. According to this categorization, anyone who is not a theist is either a negative or a positive atheist. The terms weak and strong are relatively recent, while the terms negative and positive atheism are of older origin, having been used (in slightly different ways) in the philosophical literature[41] and in Catholic apologetics.[42] Under this demarcation of atheism, most agnostics qualify as negative atheists.

While Martin, for example, asserts that agnosticism entails negative atheism,[29] many agnostics see their view as distinct from atheism,[43][44] which they may consider no more justified than theism or requiring an equal conviction.[43] The assertion that knowledge for or against the existence of gods is unattainable is sometimes seen as an indication that atheism requires a leap of faith.[45][46] Common atheist responses to this argument include that unproven religious propositions deserve as much disbelief as all other unproven propositions,[47] and that the unprovability of a god's existence does not imply equal probability of either possibility.[48] Scottish philosopher J. J. C. Smart even argues that "sometimes a person who is really an atheist may describe herself, even passionately, as an agnostic because of unreasonable generalised philosophical skepticism which would preclude us from saying that we know anything whatever, except perhaps the truths of mathematics and formal logic."[49] Consequently, some atheist authors such as Richard Dawkins prefer distinguishing theist, agnostic and atheist positions along a spectrum of theistic probability, that is, the likelihood that each assigns to the statement "God exists".[50]

Definition as impossible or impermanent

Before the 18th century, the existence of God was so universally accepted in the western world that even the possibility of true atheism was questioned. This is called theistic innatism: the notion that all people believe in God from birth, which carried the implication that atheists are simply in denial.[51]

There is also a position claiming that atheists are quick to believe in God in times of crisis, that atheists make deathbed conversions, or that "there are no atheists in foxholes".[52] There have, however, been examples to the contrary, among them examples of literal "atheists in foxholes".[53]

Some atheists have doubted the very need for the term "atheism". In his book Letter to a Christian Nation, Sam Harris wrote:

In fact, "atheism" is a term that should not even exist. No one ever needs to identify himself as a "non-astrologer" or a "non-alchemist". We do not have words for people who doubt that Elvis is still alive or that aliens have traversed the galaxy only to molest ranchers and their cattle. Atheism is nothing more than the noises reasonable people make in the presence of unjustified religious beliefs.[54]

Concepts

Paul Henri Thiry, Baron d'Holbach, an 18th-century advocate of atheism.

The source of man's unhappiness is his ignorance of Nature. The pertinacity with which he clings to blind opinions imbibed in his infancy, which interweave themselves with his existence, the consequent prejudice that warps his mind, that prevents its expansion, that renders him the slave of fiction, appears to doom him to continual error.

—d'Holbach, The System of Nature[55]

Practical atheism

In practical or pragmatic atheism, also known as apatheism, individuals live as if there are no gods and explain natural phenomena without reference to any deities. The existence of gods is not rejected, but may be designated unnecessary or useless; gods neither provide purpose to life, nor influence everyday life, according to this view.[56] A form of practical atheism with implications for the scientific community is methodological naturalism, the "tacit adoption or assumption of philosophical naturalism within scientific method with or without fully accepting or believing it."[57]

Practical atheism can take various forms:

- Absence of religious motivation—belief in gods does not motivate moral action, religious action, or any other form of action;

- Active exclusion of the problem of gods and religion from intellectual pursuit and practical action;

- Indifference—the absence of any interest in the problems of gods and religion; or

- Unawareness of the concept of a deity.[58]

Theoretical atheism

Ontological arguments

Theoretical (or theoric) atheism explicitly posits arguments against the existence of gods, responding to common theistic arguments such as the argument from design or Pascal's Wager. Theoretical atheism is mainly an ontology, more precisely a physical ontology.

Epistemological arguments

Epistemological atheism argues that people cannot know a God or determine the existence of a God. The foundation of epistemological atheism is agnosticism, which takes a variety of forms. In the philosophy of immanence, divinity is inseparable from the world itself, including a person's mind, and each person's consciousness is locked in the subject. According to this form of agnosticism, this limitation in perspective prevents any objective inference from belief in a god to assertions of its existence. The rationalistic agnosticism of Kant and the Enlightenment only accepts knowledge deduced with human rationality; this form of atheism holds that gods are not discernible as a matter of principle, and therefore cannot be known to exist. Skepticism, based on the ideas of Hume, asserts that certainty about anything is impossible, so one can never know for sure whether or not a god exists. Hume, however, held that such unobservable metaphysical concepts should be rejected as "sophistry and illusion".[59] The allocation of agnosticism to atheism is disputed; it can also be regarded as an independent, basic worldview.[56]

Other arguments for atheism that can be classified as epistemological or ontological, including logical positivism and ignosticism, assert the meaninglessness or unintelligibility of basic terms such as "God" and statements such as "God is all-powerful." Theological noncognitivism holds that the statement "God exists" does not express a proposition, but is nonsensical or cognitively meaningless. It has been argued both ways as to whether such individuals can be classified into some form of atheism or agnosticism. Philosophers A. J. Ayer and Theodore M. Drange reject both categories, stating that both camps accept "God exists" as a proposition; they instead place noncognitivism in its own category.[60][61]

Metaphysical arguments

One author writes: "Metaphysical atheism ... includes all doctrines that hold to metaphysical monism (the homogeneity of reality). Metaphysical atheism may be either: a) absolute — an explicit denial of God's existence associated with materialistic monism (all materialistic trends, both in ancient and modern times); b) relative — the implicit denial of God in all philosophies that, while they accept the existence of an absolute, conceive of the absolute as not possessing any of the attributes proper to God: transcendence, a personal character or unity. Relative atheism is associated with idealistic monism (pantheism, panentheism, deism)."[62]

Epicurus is credited with first expounding the problem of evil. David Hume in his Dialogues concerning Natural Religion (1779) cited Epicurus in stating the argument as a series of questions:[63] "Is God willing to prevent evil, but not able? Then he is impotent. Is he able, but not willing? Then he is malevolent. Is he both able and willing? Then whence cometh evil? Is he neither able nor willing? Then why call him God?"

Logical arguments

Logical atheism holds that the various conceptions of gods, such as the personal god of Christianity, are ascribed logically inconsistent qualities. Such atheists present deductive arguments against the existence of God, which assert the incompatibility between certain traits, such as perfection, creator-status, immutability, omniscience, omnipresence, omnipotence, omnibenevolence, transcendence, personhood (a personal being), nonphysicality, justice, and mercy.[12]

Theodicean atheists believe that the world as they experience it cannot be reconciled with the qualities commonly ascribed to God and gods by theologians. They argue that an omniscient, omnipotent, and omnibenevolent God is not compatible with a world where there is evil and suffering, and where divine love is hidden from many people.[14] A similar argument is attributed to Siddhartha Gautama, the founder of Buddhism.[64]

Reductionary accounts of religion

Philosopher Ludwig Feuerbach[65] and psychoanalyst Sigmund Freud have argued that God and other religious beliefs are human inventions, created to fulfill various psychological and emotional wants or needs. This is also a view of many Buddhists.[66] Karl Marx and Friedrich Engels, influenced by the work of Feuerbach, argued that belief in God and religion are social functions, used by those in power to oppress the working class. According to Mikhail Bakunin, "the idea of God implies the abdication of human reason and justice; it is the most decisive negation of human liberty, and necessarily ends in the enslavement of mankind, in theory and practice." He reversed Voltaire's famous aphorism that if God did not exist, it would be necessary to invent him, writing instead that "if God really existed, it would be necessary to abolish him."[67]

Atheism within religions

Atheism is acceptable within some religious and spiritual belief systems, including Hinduism, Jainism, Buddhism, Raelism,[68] and Neopagan movements[69] such as Wicca.[70] Āstika schools in Hinduism hold atheism to be a valid path to moksha, but an extremely difficult one, for the atheist cannot expect any help from the divine on their journey.[71] Jainism holds that the universe is eternal and has no need for a creator deity; however, it reveres Tirthankaras, who are held to transcend space and time[72] and to have more power than the god Indra.[73] Secular Buddhism does not advocate belief in gods. Early Buddhism was atheistic, as Gautama Buddha's path involved no mention of gods. Later conceptions of Buddhism consider Buddha himself a god, suggest adherents can attain godhood, and revere Bodhisattvas[74] and Eternal Buddhas.

Atheist philosophies

Axiological, or constructive, atheism rejects the existence of gods in favor of a "higher absolute", such as humanity. This form of atheism favors humanity as the absolute source of ethics and values, and permits individuals to resolve moral problems without resorting to God. Marx and Freud used this argument to convey messages of liberation, full-development, and unfettered happiness.[56] One of the most common criticisms of atheism has been to the contrary: that denying the existence of a god leads to moral relativism, leaving one with no moral or ethical foundation,[75] or renders life meaningless and miserable.[76] Blaise Pascal argued this view in his Pensées.[77]

French philosopher Jean-Paul Sartre identified himself as a representative of an "atheist existentialism"[78] concerned less with denying the existence of God than with establishing that "man needs ... to find himself again and to understand that nothing can save him from himself, not even a valid proof of the existence of God."[79] Sartre said a corollary of his atheism was that "if God does not exist, there is at least one being in whom existence precedes essence, a being who exists before he can be defined by any concept, and ... this being is man."[78] The practical consequence of this atheism was described by Sartre as meaning that there are no a priori rules or absolute values that can be invoked to govern human conduct, and that humans are "condemned" to invent these for themselves, making "man" absolutely "responsible for everything he does".[80]

Atheism, religion, and morality

Association with world views and social behaviors

Sociologist Phil Zuckerman analyzed previous social science research on secularity and non-belief, and concluded that societal well-being is positively correlated with irreligion. He found that there are much lower concentrations of atheism and secularity in poorer, less developed nations (particularly in Africa and South America) than in the richer industrialized democracies.[81][82] His findings relating specifically to atheism in the US were that, compared to religious people in the US, "atheists and secular people" are less nationalistic, prejudiced, antisemitic, racist, dogmatic, ethnocentric, closed-minded, and authoritarian, and that in US states with the highest percentages of atheists, the murder rate is lower than average. In the most religious states, the murder rate is higher than average.[83][84]

Atheism and irreligion

Buddhism is sometimes described as nontheistic because of the absence of a creator god, but that can be too simplistic a view.[85]

People who self-identify as atheists are often assumed to be irreligious, but some sects within major religions reject the existence of a personal, creator deity.[86] In recent years, certain religious denominations have accumulated a number of openly atheistic followers, such as atheistic or humanistic Judaism[87][88] and Christian atheists.[89][90][91]

The strictest sense of positive atheism does not entail any specific beliefs outside of disbelief in any deity; as such, atheists can hold any number of spiritual beliefs. For the same reason, atheists can hold a wide variety of ethical beliefs, ranging from the moral universalism of humanism, which holds that a moral code should be applied consistently to all humans, to moral nihilism, which holds that morality is meaningless.[92]

Philosophers such as Georges Bataille, Slavoj Žižek,[93] Alain de Botton,[94] and Alexander Bard and Jan Söderqvist,[95] have all argued that atheists should reclaim religion as an act of defiance against theism, precisely so as not to leave religion an unwarranted monopoly of theists.

Divine command vs. ethics

According to Plato's Euthyphro dilemma, the role of the gods in determining right from wrong is either unnecessary or arbitrary. The argument that morality must be derived from God, and cannot exist without a wise creator, has been a persistent feature of political if not so much philosophical debate.[96][97][98] Moral precepts such as "murder is wrong" are seen as divine laws, requiring a divine lawmaker and judge. However, many atheists argue that treating morality legalistically involves a false analogy, and that morality does not depend on a lawmaker in the same way that laws do.[99] Friedrich Nietzsche believed in a morality independent of theistic belief, and stated that morality based upon God "has truth only if God is truth—it stands or falls with faith in God."[100][101][102]

There exist normative ethical systems that do not require principles and rules to be given by a deity. These include virtue ethics, social contract, Kantian ethics, utilitarianism, and Objectivism. Sam Harris has proposed that moral prescription (ethical rule making) is not just an issue to be explored by philosophy, but that we can meaningfully practice a science of morality. Any such scientific system must, nevertheless, respond to the criticism embodied in the naturalistic fallacy.[103]

Philosophers Susan Neiman[104] and Julian Baggini[105] (among others) assert that behaving ethically only because of divine mandate is not true ethical behavior but merely blind obedience. Baggini argues that atheism is a superior basis for ethics, claiming that a moral basis external to religious imperatives is necessary to evaluate the morality of the imperatives themselves—to be able to discern, for example, that "thou shalt steal" is immoral even if one's religion instructs it—and that atheists, therefore, have the advantage of being more inclined to make such evaluations.[106] The contemporary British political philosopher Martin Cohen has offered the more historically telling example of Biblical injunctions in favour of torture and slavery as evidence of how religious injunctions follow political and social customs, rather than vice versa, but also noted that the same tendency seems to be true of supposedly dispassionate and objective philosophers.[107] Cohen extends this argument in more detail in Political Philosophy from Plato to Mao, where he argues that the Qur'an played a role in perpetuating social codes from the early 7th century despite changes in secular society.[108]

Dangers of religions

Some prominent atheists (most recently Christopher Hitchens, Daniel Dennett, Sam Harris and Richard Dawkins, and following such thinkers as Bertrand Russell, Robert G. Ingersoll, Voltaire, and novelist José Saramago) have criticized religions, citing harmful aspects of religious practices and doctrines.[109]

The 19th-century German political theorist and sociologist Karl Marx criticised religion as "the sigh of the oppressed creature, the heart of a heartless world, and the soul of soulless conditions. It is the opium of the people". He goes on to say, "The abolition of religion as the illusory happiness of the people is the demand for their real happiness. To call on them to give up their illusions about their condition is to call on them to give up a condition that requires illusions. The criticism of religion is, therefore, in embryo, the criticism of that vale of tears of which religion is the halo."[110] Lenin said that every religious idea and every idea of God "is unutterable vileness ... of the most dangerous kind, 'contagion' of the most abominable kind. Millions of sins, filthy deeds, acts of violence and physical contagions ... are far less dangerous than the subtle, spiritual idea of God decked out in the smartest ideological costumes ..."[111]

Sam Harris criticises Western religion's reliance on divine authority as lending itself to authoritarianism and dogmatism.[112] There is a correlation between religious fundamentalism and extrinsic religion (when religion is held because it serves ulterior interests)[113] and authoritarianism, dogmatism, and prejudice.[114] These arguments, combined with historical events that are argued to demonstrate the dangers of religion (such as the Crusades, inquisitions, witch trials, and terrorist attacks), have been used in response to claims of beneficial effects of belief in religion.[115] Believers counter that some regimes that espouse atheism, such as that of Soviet Russia, have also been guilty of mass murder.[116][117] In response to those claims, atheists such as Sam Harris and Richard Dawkins have stated that Stalin's atrocities were influenced not by atheism but by dogmatic Marxism, and that while Stalin and Mao happened to be atheists, they did not do their deeds in the name of atheism.[118][119]

Etymology

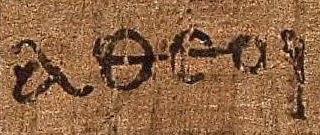

The Greek word αθεοι (atheoi), as it appears in the Epistle to the Ephesians (2:12) on the early 3rd-century Papyrus 46. It is usually translated into English as "[those who are] without God".[120]

In early ancient Greek, the adjective átheos (ἄθεος, from the privative ἀ- + θεός "god") meant "godless". It was first used as a term of censure roughly meaning "ungodly" or "impious". In the 5th century BCE, the word began to indicate more deliberate and active godlessness in the sense of "severing relations with the gods" or "denying the gods". The term ἀσεβής (asebēs) then came to be applied against those who impiously denied or disrespected the local gods, even if they believed in other gods. Modern translations of classical texts sometimes render átheos as "atheistic". As an abstract noun, there was also ἀθεότης (atheotēs), "atheism". Cicero transliterated the Greek word into the Latin átheos. The term found frequent use in the debate between early Christians and Hellenists, with each side attributing it, in the pejorative sense, to the other.[121]

The term atheist (from Fr. athée), in the sense of "one who ... denies the existence of God or gods",[122] predates atheism in English, being first found as early as 1566,[123] and again in 1571.[124] Atheist as a label of practical godlessness was used at least as early as 1577.[125] The term atheism was derived from the French athéisme,[126] and appears in English about 1587.[127] An earlier work, from about 1534, used the term atheonism.[128][129] Related words emerged later: deist in 1621,[130] theist in 1662,[131] deism in 1675,[132] and theism in 1678.[133] At that time "deist" and "deism" already carried their modern meaning. The term theism came to be contrasted with deism.

Karen Armstrong writes that "During the sixteenth and seventeenth centuries, the word 'atheist' was still reserved exclusively for polemic ... The term 'atheist' was an insult. Nobody would have dreamed of calling himself an atheist."[11]

Atheism was first used to describe a self-avowed belief in late 18th-century Europe, specifically denoting disbelief in the monotheistic Abrahamic god.[134][135] In the 20th century, globalization contributed to the expansion of the term to refer to disbelief in all deities, though it remains common in Western society to describe atheism as simply "disbelief in God".[36]

History

While the earliest-found usage of the term atheism is in 16th-century France,[126][127] ideas that would be recognized today as atheistic are documented from the Vedic period and from classical antiquity.

Early Indic religion

Atheistic schools are found in early Indian thought and have existed from the times of the historical Vedic religion.[136] Among the six orthodox schools of Hindu philosophy, Samkhya, the oldest philosophical school of thought, does not accept God, and the early Mimamsa also rejected the notion of God.[137] The thoroughly materialistic and anti-theistic philosophical Cārvāka (also called Nastika or Lokaiata) school that originated in India around the 6th century BCE is probably the most explicitly atheistic school of philosophy in India, similar to the Greek Cyrenaic school. This branch of Indian philosophy is classified as heterodox due to its rejection of the authority of Vedas and hence is not considered part of the six orthodox schools of Hinduism, but it is noteworthy as evidence of a materialistic movement within Hinduism.[138] Chatterjee and Datta explain that our understanding of Cārvāka philosophy is fragmentary, based largely on criticism of the ideas by other schools, and that it is not a living tradition: "Though materialism in some form or other has always been present in India, and occasional references are found in the Vedas, the Buddhistic literature, the Epics, as well as in the later philosophical works we do not find any systematic work on materialism, nor any organized school of followers as the other philosophical schools possess. But almost every work of the other schools states, for refutation, the materialistic views. Our knowledge of Indian materialism is chiefly based on these."[139]

Other Indian philosophies generally regarded as atheistic include Classical Samkhya and Purva Mimamsa. The rejection of a personal creator God is also seen in Jainism and Buddhism in India.[140]

Classical antiquity

Western atheism has its roots in pre-Socratic Greek philosophy, but did not emerge as a distinct world-view until the late Enlightenment.[141] The 5th-century BCE Greek philosopher Diagoras is known as the "first atheist",[142] and is cited as such by Cicero in his De Natura Deorum.[143] Atomists such as Democritus attempted to explain the world in a purely materialistic way, without reference to the spiritual or mystical. Critias viewed religion as a human invention used to frighten people into following a moral order,[144] and Prodicus also appears to have made clear atheistic statements in his work. Philodemus reports that Prodicus believed that "the gods of popular belief do not exist nor do they know, but primitive man, [out of admiration, deified] the fruits of the earth and virtually everything that contributed to his existence". Protagoras has sometimes been taken to be an atheist but in fact espoused agnostic views, commenting that "Concerning the gods I am unable to discover whether they exist or not, or what they are like in form; for there are many hindrances to knowledge, the obscurity of the subject and the brevity of human life."[145] In the 3rd century BCE the Greek philosophers Theodorus Cyrenaicus[143][146] and Strato of Lampsacus[147] did not believe gods exist.

Socrates (c. 470–399 BCE) was associated in the Athenian public mind with the trends in pre-Socratic philosophy towards naturalistic inquiry and the rejection of divine explanations for phenomena. Although such an interpretation misrepresents his thought, he was portrayed in this way in Aristophanes' comic play Clouds, and he was later tried and executed for impiety and corrupting the young. At his trial Socrates is reported as vehemently denying that he was an atheist, and contemporary scholarship provides little reason to doubt this claim.[148][149]

Euhemerus (c. 300 BCE) published his view that the gods were only the deified rulers, conquerors and founders of the past, and that their cults and religions were in essence the continuation of vanished kingdoms and earlier political structures.[150] Although not strictly an atheist, Euhemerus was later criticized for having "spread atheism over the whole inhabited earth by obliterating the gods".[151]

Also important in the history of atheism was Epicurus (c. 300 BCE). Drawing on the ideas of Democritus and the Atomists, he espoused a materialistic philosophy according to which the universe was governed by the laws of chance without the need for divine intervention (see scientific determinism). Although he stated that deities existed, he believed that they were uninterested in human existence. The aim of the Epicureans was to attain peace of mind and one important way of doing this was by exposing fear of divine wrath as irrational. The Epicureans also denied the existence of an afterlife and the need to fear divine punishment after death.[152]

The Roman philosopher Sextus Empiricus held that one should suspend judgment about virtually all beliefs (a form of skepticism known as Pyrrhonism), that nothing was inherently evil, and that ataraxia ("peace of mind") is attainable by withholding one's judgment. His relatively large body of surviving works had a lasting influence on later philosophers.[153]

The meaning of "atheist" changed over the course of classical antiquity. The early Christians were labeled atheists by non-Christians because of their disbelief in pagan gods.[154] During the Roman Empire, Christians were executed for their rejection of the Roman gods in general and Emperor-worship in particular. When Christianity became the state religion of Rome under Theodosius I in 381, heresy became a punishable offense.[155]

Early Middle Ages to the Renaissance

During the Early Middle Ages, the Islamic world underwent a Golden Age. With the associated advances in science and philosophy, Arab and Persian lands produced outspoken rationalists and atheists, including Muhammad al Warraq (fl. 9th century), Ibn al-Rawandi (827–911), Al-Razi (854–925) and Al-Maʿarri (973–1058). Al-Maʿarri wrote and taught that religion itself was a "fable invented by the ancients"[156] and that humans were "of two sorts: those with brains, but no religion, and those with religion, but no brains."[157] Although these were relatively prolific writers, almost none of their writing survives to the modern day; most of what little remains is preserved through quotations and excerpts in later works by Muslim apologists attempting to refute them.[158] Other prominent Golden Age scholars have been associated with rationalist thought and atheism as well, although the current intellectual atmosphere in the Islamic world, and the scant evidence that survives from the era, make this point a contentious one today.

In Europe, the espousal of atheistic views was rare during the Early Middle Ages and Middle Ages (see Medieval Inquisition); metaphysics and theology were the dominant interests pertaining to religion.[159] There were, however, movements within this period that furthered heterodox conceptions of the Christian god, including differing views of the nature, transcendence, and knowability of God. Individuals and groups such as Johannes Scotus Eriugena, David of Dinant, Amalric of Bena, and the Brethren of the Free Spirit maintained Christian viewpoints with pantheistic tendencies. Nicholas of Cusa held to a form of fideism he called docta ignorantia ("learned ignorance"), asserting that God is beyond human categorization, and thus our knowledge of him is limited to conjecture. William of Ockham inspired anti-metaphysical tendencies with his nominalistic limitation of human knowledge to singular objects, and asserted that the divine essence could not be intuitively or rationally apprehended by human intellect. Followers of Ockham, such as John of Mirecourt and Nicholas of Autrecourt, furthered this view. The resulting division between faith and reason influenced later radical and reformist theologians such as John Wycliffe, Jan Hus, and Martin Luther.[159]

The Renaissance did much to expand the scope of free thought and skeptical inquiry. Individuals such as Leonardo da Vinci sought experimentation as a means of explanation, and opposed arguments from religious authority. Other critics of religion and the Church during this time included Niccolò Machiavelli, Bonaventure des Périers, and François Rabelais.[153]

Early modern period

Historian Geoffrey Blainey wrote that the Reformation had paved the way for atheists by attacking the authority of the Catholic Church, which in turn "quietly inspired other thinkers to attack the authority of the new Protestant churches".[160] Deism gained influence in France, Prussia, and England. The Dutch philosopher Baruch Spinoza was "probably the first well known 'semi-atheist' to announce himself in a Christian land in the modern era", according to Blainey. Spinoza believed that natural laws explained the workings of the universe. In 1661 he published his Short Treatise on God.[161]

Criticism of Christianity became increasingly frequent in the 17th and 18th centuries, especially in France and England, where there appears to have been a religious malaise, according to contemporary sources. Some Protestant thinkers, such as Thomas Hobbes, espoused a materialist philosophy and skepticism toward supernatural occurrences, while the Jewish-Dutch philosopher Spinoza rejected divine providence in favour of a panentheistic naturalism. By the late 17th century, deism came to be openly espoused by intellectuals such as John Toland, who coined the term "pantheist".[162]

The first known explicit atheist was the German critic of religion Matthias Knutzen, in three writings of 1674.[163] He was followed by two other explicit atheist writers: the Polish ex-Jesuit philosopher Kazimierz Łyszczyński and, in the 1720s, the French priest Jean Meslier.[164] In the course of the 18th century, other openly atheistic thinkers followed, such as Baron d'Holbach, Jacques-André Naigeon, and other French materialists.[165] John Locke, in contrast, though an advocate of tolerance, urged authorities not to tolerate atheism, believing that the denial of God's existence would undermine the social order and lead to chaos.[166]

The philosopher David Hume developed a skeptical epistemology grounded in empiricism, and Immanuel Kant's philosophy strongly questioned the very possibility of metaphysical knowledge. Both philosophers undermined the metaphysical basis of natural theology and criticized classical arguments for the existence of God.

Ludwig Feuerbach's The Essence of Christianity (1841) would greatly influence philosophers such as Engels, Marx, David Strauss, Nietzsche, and Max Stirner. He considered God to be a human invention and religious activities to be wish-fulfillment. For this reason he is considered the founding father of the modern anthropology of religion.

Blainey notes that, although Voltaire is widely considered to have contributed strongly to the atheistic thinking of the Revolution, he also considered fear of God to discourage further disorder, having said "If God did not exist, it would be necessary to invent him."[167] In Reflections on the Revolution in France (1790), the philosopher Edmund Burke denounced atheism, writing of a "literary cabal" who had "some years ago formed something like a regular plan for the destruction of the Christian religion. This object they pursued with a degree of zeal which hitherto had been discovered only in the propagators of some system of piety ... These atheistical fathers have a bigotry of their own ...". But, Burke asserted, "man is by his constitution a religious animal" and "atheism is against, not only our reason, but our instincts; and ... it cannot prevail long".[168]

Baron d'Holbach was a prominent figure in the French Enlightenment who is best known for his atheism and for his voluminous writings against religion, the most famous of them being The System of Nature (1770) and Christianity Unveiled. One goal of the French Revolution was a restructuring and subordination of the clergy with respect to the state through the Civil Constitution of the Clergy. Attempts to enforce it led to anti-clerical violence and the expulsion of many clergy from France, lasting until the Thermidorian Reaction. The radical Jacobins seized power in 1793, ushering in the Reign of Terror. The Jacobins were deists and introduced the Cult of the Supreme Being as a new French state religion. Some atheists surrounding Jacques Hébert instead sought to establish a Cult of Reason, a form of atheistic pseudo-religion with a goddess personifying reason.

The Napoleonic era further institutionalized the secularization of French society and exported the revolution to northern Italy in the hope of creating pliable republics.

In the latter half of the 19th century, atheism rose to prominence under the influence of rationalistic and freethinking philosophers. Many prominent German philosophers of this era denied the existence of deities and were critical of religion, including Ludwig Feuerbach, Arthur Schopenhauer, Max Stirner, Karl Marx, and Friedrich Nietzsche.[169]

Since 1900

Vladimir Lenin, the first leader of the Soviet Union. Marxist–Leninist atheism was influential in the 20th century.

Atheism in the 20th century, particularly in the form of practical atheism, advanced in many societies. Atheistic thought found recognition in a wide variety of other, broader philosophies, such as existentialism, objectivism, secular humanism, nihilism, anarchism, logical positivism, Marxism, feminism,[170] and the general scientific and rationalist movement. In addition, state atheism emerged in Eastern Europe and Asia during that period, particularly in the Soviet Union under Vladimir Lenin and Joseph Stalin, and in Communist China under Mao Zedong. Atheist and anti-religious policies in the Soviet Union included numerous legislative acts, the outlawing of religious instruction in the schools, and the emergence of the League of Militant Atheists.[171][172]

1929 cover of the USSR League of Militant Atheists magazine, showing the gods of the Abrahamic religions being crushed by the Communist 5-year plan

Blainey wrote that during the twentieth century, atheists in Western societies became more active and even militant, though they often "relied essentially on arguments used by numerous radical Christians since at least the eighteenth century". They rejected the idea of an interventionist God, and said that Christianity promoted war and violence, though "the most ruthless leaders in the Second World War were atheists and secularists who were intensely hostile to both Judaism and Christianity" and "Later massive atrocities were committed in the East by those ardent atheists, Pol Pot and Mao Zedong". Some scientists were meanwhile articulating a view that as the world becomes more educated, religion would be superseded.[173]

Logical positivism and scientism paved the way for neopositivism, analytical philosophy, structuralism, and naturalism. Neopositivism and analytical philosophy discarded classical rationalism and metaphysics in favor of strict empiricism and epistemological nominalism.

Proponents such as Bertrand Russell emphatically rejected belief in God. In his early work, Ludwig Wittgenstein attempted to separate metaphysical and supernatural language from rational discourse. A. J. Ayer asserted the unverifiability and meaninglessness of religious statements, citing his adherence to the empirical sciences. Relatedly, the applied structuralism of Lévi-Strauss traced religious language to the human subconscious, denying it any transcendental meaning. J. N. Findlay and J. J. C. Smart argued that the existence of God is not logically necessary. Naturalists and materialistic monists such as John Dewey considered the natural world to be the basis of everything, denying the existence of God or immortality.[49][174]

Other developments

The British philosopher Bertrand Russell

Other leaders, such as E. V. Ramasami Naicker (Periyar), a prominent atheist leader in India, fought against Hinduism and the Brahmins for discriminating against and dividing people in the name of caste and religion.[175] This was highlighted in 1956, when he arranged for the erection of a statue depicting a Hindu god in a humble representation and made antitheistic statements.[176]