From Wikipedia, the free encyclopedia

Dark matter is invisible. Based on the effect of gravitational lensing, a ring of dark matter has been detected in this image of a galaxy cluster (CL0024+17) and is represented in blue.[1]

Dark matter is a hypothetical kind of matter that cannot be seen with telescopes but accounts for most of the matter in the Universe. The existence and properties of dark matter are inferred from its gravitational effects on visible matter, radiation, and the large-scale structure of the Universe. It has not been detected directly, making it one of the greatest mysteries in modern astrophysics.

Dark matter neither emits nor absorbs light or any other electromagnetic radiation at any significant level. According to the Planck mission team, and based on the standard model of cosmology, the total mass–energy of the known universe contains 4.9% ordinary matter, 26.8% dark matter and 68.3% dark energy.[2][3] Thus, dark matter is estimated to constitute 84.5% of the total matter in the Universe, while dark energy plus dark matter constitute 95.1% of the total mass–energy content of the Universe.[4][5]

Astrophysicists hypothesized dark matter because of discrepancies between the mass of large astronomical objects determined from their gravitational effects and the mass calculated from the observable matter (stars, gas, and dust) that they can be seen to contain. Dark matter was postulated by Jan Oort in 1932, albeit based upon flawed or inadequate evidence, to account for the orbital velocities of stars in the Milky Way and by Fritz Zwicky in 1933 to account for evidence of "missing mass" in the orbital velocities of galaxies in clusters. Adequate evidence from galaxy rotation curves was discovered by Horace W. Babcock in 1939, but was not attributed to dark matter. The first to postulate dark matter based upon robust evidence was Vera Rubin in the 1960s–1970s, using galaxy rotation curves.[6][7] Subsequently many other observations have indicated the presence of dark matter in the Universe, including gravitational lensing of background objects by galaxy clusters such as the Bullet Cluster, the temperature distribution of hot gas in galaxies and clusters of galaxies and, more recently, the pattern of anisotropies in the cosmic microwave background. According to consensus among cosmologists, dark matter is composed primarily of a not yet characterized type of subatomic particle.[8][9] The search for this particle, by a variety of means, is one of the major efforts in particle physics today.[10]

Although the existence of dark matter is generally accepted by the mainstream scientific community, some alternative theories of gravity have been proposed, such as MOND and TeVeS, which try to account for the anomalous observations without requiring additional matter.

Overview

Estimated distribution of matter and energy in the universe, today (top) and when the CMB was released (bottom)

Dark matter's existence is inferred from gravitational effects on visible matter and gravitational lensing of background radiation, and was originally hypothesized to account for discrepancies between calculations of the mass of galaxies, clusters of galaxies and the entire universe made through dynamical and general relativistic means, and calculations based on the mass of the visible "luminous" matter these objects contain: stars and the gas and dust of the interstellar and intergalactic medium.[11]

The most widely accepted explanation for these phenomena is that dark matter exists and that it is most probably[8] composed of weakly interacting massive particles (WIMPs) that interact only through gravity and the weak force. Alternative explanations have been proposed, and there is not yet sufficient experimental evidence to determine whether any of them are correct. Many experiments to detect proposed dark matter particles through non-gravitational means are under way.[10]

One other theory suggests the existence of a “Hidden Valley”, a parallel world made of dark matter having very little in common with matter we know,[12] and that could only interact with our visible universe through gravity.[13][14]

According to observations of structures larger than star systems, as well as Big Bang cosmology interpreted under the Friedmann equations and the Friedmann–Lemaître–Robertson–Walker metric, dark matter accounts for 26.8% of the mass-energy content of the observable universe. In comparison, ordinary (baryonic) matter accounts for only 4.9% of the mass-energy content of the observable universe, with the remainder being attributable to dark energy.[3] From these figures, matter accounts for 31.7% of the mass-energy content of the Universe, and 84.5% of the matter is dark matter.
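The derived percentages follow from simple arithmetic on the three quoted fractions. The short sketch below reproduces them; the function and variable names are illustrative only and do not come from the Planck analysis.

```python
# Mass-energy fractions quoted in the text (percent of the total).
ORDINARY_MATTER = 4.9
DARK_MATTER = 26.8
DARK_ENERGY = 68.3


def matter_budget(ordinary, dark, dark_energy):
    """Return (total matter %, dark share of matter %, dark sector %)."""
    total_matter = ordinary + dark
    dark_share_of_matter = 100.0 * dark / total_matter
    dark_sector = dark + dark_energy
    return total_matter, dark_share_of_matter, dark_sector


total_matter, dark_share, dark_sector = matter_budget(
    ORDINARY_MATTER, DARK_MATTER, DARK_ENERGY
)
print(f"matter: {total_matter:.1f}% of mass-energy")
print(f"dark share of matter: {dark_share:.1f}%")
print(f"dark matter + dark energy: {dark_sector:.1f}%")
```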

Dark matter plays a central role in state-of-the-art modeling of cosmic structure formation and of galaxy formation and evolution, and has measurable effects on the anisotropies observed in the cosmic microwave background. All these lines of evidence suggest that galaxies, clusters of galaxies, and the Universe as a whole contain far more matter than that which is easily visible with electromagnetic radiation.[13]

Important as dark matter is thought to be in the cosmos, direct evidence of its existence and a concrete understanding of its nature have remained elusive. Though the theory of dark matter remains the most widely accepted theory to explain the anomalies in observed galactic rotation, some alternative theoretical approaches have been developed which broadly fall into the categories of modified gravitational laws and quantum gravitational laws.[15]

Baryonic and nonbaryonic dark matter

Fermi-LAT observations of dwarf galaxies provide new insights on dark matter.

There are three separate lines of evidence that the majority of dark matter is not made of baryons (ordinary matter including protons and neutrons):

- The theory of Big Bang nucleosynthesis, which very accurately predicts the observed abundance of the chemical elements,[16] predicts that baryonic matter accounts for around 4–5 percent of the critical density of the Universe. In contrast, evidence from large-scale structure and other observations indicates that the total matter density is about 30% of the critical density.

- Large astronomical searches for gravitational microlensing, including the MACHO, EROS and OGLE projects, have shown that only a small fraction of the dark matter in the Milky Way can be hiding in dark compact objects; the excluded range covers objects above half the Earth's mass up to 30 solar masses, excluding nearly all the plausible candidates.

- Detailed analysis of the small irregularities (anisotropies) in the cosmic microwave background observed by WMAP and Planck shows that around five-sixths of the total matter is in a form which does not interact significantly with ordinary matter or photons except through gravitational effects.

Candidates for nonbaryonic dark matter are hypothetical particles such as axions, or supersymmetric particles; neutrinos can only form a small fraction of the dark matter, due to limits from large-scale structure and high-redshift galaxies. Unlike baryonic dark matter, nonbaryonic dark matter does not contribute to the formation of the elements in the early universe ("Big Bang nucleosynthesis")[8] and so its presence is revealed only via its gravitational attraction. In addition, if the particles of which it is composed are supersymmetric, they can undergo annihilation interactions with themselves, possibly resulting in observable by-products such as gamma rays and neutrinos ("indirect detection").[18]

Nonbaryonic dark matter is classified in terms of the mass of the particle(s) that is assumed to make it up, and/or the typical velocity dispersion of those particles (since more massive particles move more slowly). There are three prominent hypotheses on nonbaryonic dark matter, called cold dark matter (CDM), warm dark matter (WDM), and hot dark matter (HDM); some combination of these is also possible. The most widely discussed models for nonbaryonic dark matter are based on the cold dark matter hypothesis, and the corresponding particle is most commonly assumed to be a weakly interacting massive particle (WIMP). Hot dark matter may include (massive) neutrinos, but observations imply that only a small fraction of dark matter can be hot. Cold dark matter leads to a "bottom-up" formation of structure in the Universe while hot dark matter would result in a "top-down" formation scenario; since the late 1990s, the latter has been ruled out by observations of high-redshift galaxies such as the Hubble Ultra-Deep Field.[10]

Observational evidence

This artist’s impression shows the expected distribution of dark matter in the Milky Way galaxy as a blue halo of material surrounding the galaxy.[19]

The first person to interpret evidence and infer the presence of dark matter was Dutch astronomer Jan Oort, a pioneer in radio astronomy, in 1932.[20] Oort was studying stellar motions in the local galactic neighbourhood and found that the mass in the galactic plane must be more than the material that could be seen, but this measurement was later determined to be essentially erroneous.[21] In 1933, the Swiss astrophysicist Fritz Zwicky, who studied clusters of galaxies while working at the California Institute of Technology, made a similar inference.[22][23] Zwicky applied the virial theorem to the Coma cluster of galaxies and obtained evidence of unseen mass. Zwicky estimated the cluster's total mass based on the motions of galaxies near its edge and compared that estimate to one based on the number of galaxies and total brightness of the cluster. He found that there was about 400 times more estimated mass than was visually observable. The gravity of the visible galaxies in the cluster would be far too small for such fast orbits, so something extra was required. This is known as the "missing mass problem". Based on these conclusions, Zwicky inferred that there must be some non-visible form of matter which would provide enough of the mass and gravity to hold the cluster together. Zwicky's estimates are off by more than an order of magnitude. Had he erred in the opposite direction by as much, he would have had to try to explain the opposite – why there was too much visible matter relative to the gravitational observations – and his observations would have indicated dark energy rather than dark matter.[24]

Much of the evidence for dark matter comes from the study of the motions of galaxies.[25] Many of these appear to be fairly uniform, so by the virial theorem, the total kinetic energy should be half the total gravitational binding energy of the galaxies. Observationally, however, the total kinetic energy is found to be much greater: in particular, assuming the gravitational mass is due to only the visible matter of the galaxy, stars far from the center of galaxies have much higher velocities than predicted by the virial theorem. Galactic rotation curves, which illustrate the velocity of rotation versus the distance from the galactic center, show the well known phenomenology that cannot be explained by only the visible matter. Assuming that the visible material makes up only a small part of the cluster is the most straightforward way of accounting for this. Galaxies show signs of being composed largely of a roughly spherically symmetric, centrally concentrated halo of dark matter with the visible matter concentrated in a disc at the center. Low surface brightness dwarf galaxies are important sources of information for studying dark matter, as they have an uncommonly low ratio of visible matter to dark matter, and have few bright stars at the center which would otherwise impair observations of the rotation curve of outlying stars.
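The rotation-curve argument can be made concrete with Newtonian circular orbits. The sketch below is a minimal illustration and not a fit to any real galaxy. It assumes spherical symmetry, treats all visible mass as lying inside the orbit, and uses made-up values (a visible mass of 10^11 solar masses and a flat 220 km/s curve). If only the visible mass were present, the orbital speed would fall as the inverse square root of the radius, whereas a flat curve requires the enclosed mass to grow in proportion to radius.

```python
import math

G = 4.30091e-6  # gravitational constant in kpc (km/s)^2 / Msun


def keplerian_speed(enclosed_mass_msun, radius_kpc):
    """Circular speed (km/s) if all the mass sits inside the orbit."""
    return math.sqrt(G * enclosed_mass_msun / radius_kpc)


def enclosed_mass(speed_kms, radius_kpc):
    """Mass (Msun) needed inside radius_kpc to sustain a circular speed."""
    return speed_kms ** 2 * radius_kpc / G


# Illustrative, made-up numbers (assumptions, not measurements).
VISIBLE_MASS = 1.0e11  # Msun
FLAT_SPEED = 220.0     # km/s

for r in (10.0, 20.0, 40.0):
    v_visible = keplerian_speed(VISIBLE_MASS, r)
    m_needed = enclosed_mass(FLAT_SPEED, r)
    print(f"r={r:5.1f} kpc  visible-only v={v_visible:6.1f} km/s  "
          f"mass for flat curve={m_needed:.2e} Msun")
```

The gap between the mass needed for a flat curve and the visible mass widens with radius, which is the discrepancy that a dark halo is invoked to explain.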

Gravitational lensing observations of galaxy clusters allow direct estimates of the gravitational mass based on its effect on light from background galaxies, since large collections of matter (dark or otherwise) will gravitationally deflect light. In clusters such as Abell 1689, lensing observations confirm the presence of considerably more mass than is indicated by the clusters' light alone. In the Bullet Cluster, lensing observations show that much of the lensing mass is separated from the X-ray-emitting baryonic mass. In July 2012, lensing observations were used to identify a "filament" of dark matter between two clusters of galaxies, as cosmological simulations have predicted.[26]

Galaxy rotation curves

Rotation curve of a typical spiral galaxy: predicted (A) and observed (B). Dark matter can explain the 'flat' appearance of the velocity curve out to a large radius

The first robust indications that the mass to light ratio was anything other than unity came from measurements of galaxy rotation curves. In 1939, Horace W. Babcock reported in his PhD thesis measurements of the rotation curve for the Andromeda nebula which suggested that the mass-to-luminosity ratio increases radially.[27] He, however, attributed it to either absorption of light within the galaxy or modified dynamics in the outer portions of the spiral and not to any form of missing matter.

In the late 1960s and early 1970s, Vera Rubin at the Department of Terrestrial Magnetism at the Carnegie Institution of Washington was the first to both make robust measurements indicating the existence of dark matter and attribute them to dark matter. Rubin worked with a new sensitive spectrograph that could measure the velocity curve of edge-on spiral galaxies to a greater degree of accuracy than had ever before been achieved.[7] Together with fellow staff-member Kent Ford, Rubin announced at a 1975 meeting of the American Astronomical Society the discovery that most stars in spiral galaxies orbit at roughly the same speed, which implied that the mass densities of the galaxies were uniform well beyond the regions containing most of the stars (the galactic bulge), a result independently found in 1978.[28] An influential paper presented Rubin's results in 1980.[29] Rubin's observations and calculations showed that most galaxies must contain about six times as much “dark” mass as can be accounted for by the visible stars. Eventually other astronomers began to corroborate her work and it soon became well-established that most galaxies were dominated by "dark matter":

- Low Surface Brightness (LSB) galaxies.[30] LSBs are probably everywhere dark matter-dominated, with the observed stellar populations making only a small contribution to rotation curves. Such a property is extremely important because it allows one to avoid the difficulties associated with the deprojection and disentanglement of the dark and visible contributions to the rotation curves.[10]

- Spiral Galaxies.[31] Rotation curves of both low and high surface luminosity galaxies appear to suggest a universal rotation curve, which can be expressed as the sum of an exponential thin stellar disk, and a spherical dark matter halo with a flat core of radius $r_0$ and density $\rho_0 = 4.5 \times 10^{-2}\,(r_0/\mathrm{kpc})^{-2/3}\,M_\odot\,\mathrm{pc}^{-3}$.

- Elliptical galaxies. Some elliptical galaxies show evidence for dark matter via strong gravitational lensing.[32] X-ray evidence reveals the presence of extended atmospheres of hot gas that fill the dark haloes of isolated ellipticals and whose hydrostatic support provides evidence for dark matter. Other ellipticals have low velocities in their outskirts (tracked for example by planetary nebulae) and were interpreted as not having dark matter haloes.[10] However, simulations of disk-galaxy mergers indicate that stars were torn by tidal forces from their original galaxies during the first close passage and put on outgoing trajectories, explaining the low velocities even with a DM halo.[33] More research is needed to clarify this situation.
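The core-density scaling quoted for spiral galaxies in the list above, a central density of 4.5 × 10^-2 (r0/kpc)^(-2/3) solar masses per cubic parsec, can be evaluated directly. The sketch below only evaluates that relation. The function name and the sample core radii are illustrative assumptions.

```python
def core_density(r0_kpc):
    """Central halo density in Msun / pc^3 from the scaling quoted in the text."""
    return 4.5e-2 * r0_kpc ** (-2.0 / 3.0)


# Sample core radii in kpc, chosen only for illustration.
for r0 in (1.0, 8.0, 27.0):
    print(f"r0 = {r0:5.1f} kpc  ->  rho0 = {core_density(r0):.5f} Msun/pc^3")
```

Under this relation, haloes with larger cores have lower central densities.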

Exceptions to this general picture of dark matter haloes for galaxies appear to be galaxies with mass-to-light ratios close to that of stars.[citation needed] Subsequent to this, numerous observations have been made that do indicate the presence of dark matter in various parts of the cosmos, such as observations of the cosmic microwave background, of supernovas used as distance measures, of gravitational lensing at various scales, and many types of sky survey. Starting with Rubin's findings for spiral galaxies, the robust observational evidence for dark matter has been collecting over the decades to the point that by the 1980s most astrophysicists accepted its existence.[35] As a unifying concept, dark matter is one of the dominant features considered in the analysis of structures on the order of galactic scale and larger.

Velocity dispersions of galaxies

In astronomy, the velocity dispersion σ is the range of velocities about the mean velocity for a group of objects, such as a cluster of stars about a galaxy. Rubin's pioneering work has stood the test of time. Measurements of velocity curves in spiral galaxies were soon followed up with velocity dispersions of elliptical galaxies.[36] While sometimes appearing with lower mass-to-light ratios, measurements of ellipticals still indicate a relatively high dark matter content. Likewise, measurements of the diffuse interstellar gas found at the edge of galaxies indicate not only dark matter distributions that extend beyond the visible limit of the galaxies, but also that the galaxies are virialized (i.e. gravitationally bound with velocities which appear to disproportionately correspond to predicted orbital velocities of general relativity) up to ten times their visible radii.[37] This has the effect of pushing up the dark matter as a fraction of the total amount of gravitating matter from 50% measured by Rubin to the now accepted value of nearly 95%.
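In practice the velocity dispersion is usually computed as the standard deviation of measured line-of-sight velocities, and the virial theorem then gives an order-of-magnitude dynamical mass. The sketch below is a minimal illustration with made-up velocities. The prefactor of 5 is an assumption that corresponds to a uniform sphere, and real analyses use estimators matched to the actual mass distribution.

```python
import statistics

G = 4.30091e-6  # gravitational constant in kpc (km/s)^2 / Msun


def velocity_dispersion(velocities_kms):
    """Sample standard deviation of line-of-sight velocities (km/s)."""
    return statistics.stdev(velocities_kms)


def virial_mass(sigma_kms, radius_kpc, prefactor=5.0):
    """Order-of-magnitude virial mass (Msun): prefactor * sigma^2 * R / G."""
    return prefactor * sigma_kms ** 2 * radius_kpc / G


# Made-up line-of-sight velocities (km/s) for a handful of cluster galaxies.
velocities = [6200.0, 7400.0, 6900.0, 8100.0, 5600.0, 7000.0, 7700.0, 6300.0]
sigma = velocity_dispersion(velocities)
mass = virial_mass(sigma, radius_kpc=1000.0)  # assumed 1 Mpc radius
print(f"sigma = {sigma:.0f} km/s, virial mass ~ {mass:.2e} Msun")
```

Because the mass scales with the square of the dispersion, a modest error in the measured velocities produces a much larger error in the inferred mass.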

There are places where dark matter seems to be a small component or totally absent. Globular clusters show little evidence that they contain dark matter,[38] though their orbital interactions with galaxies do show evidence for galactic dark matter.[citation needed] For some time, measurements of the velocity profile of stars seemed to indicate a concentration of dark matter in the disk of the Milky Way. It now appears, however, that the high concentration of baryonic matter in the disk of the galaxy (especially in the interstellar medium) can account for this motion. Galaxy mass profiles are thought to look very different from the light profiles. The typical model for dark matter galaxies is a smooth, spherical distribution in virialized halos. Such would have to be the case to avoid small-scale (stellar) dynamical effects. Research reported in January 2006 from the University of Massachusetts Amherst would explain the previously mysterious warp in the disk of the Milky Way by the interaction of the Large and Small Magellanic Clouds and the predicted 20-fold increase in mass of the Milky Way taking into account dark matter.[39]

In 2005, astronomers from Cardiff University claimed to have discovered a galaxy made almost entirely of dark matter, 50 million light years away in the Virgo Cluster, which was named VIRGOHI21.[40] Unusually, VIRGOHI21 does not appear to contain any visible stars: it was seen with radio frequency observations of hydrogen. Based on rotation profiles, the scientists estimate that this object contains approximately 1000 times more dark matter than hydrogen and has a total mass of about 1/10 that of the Milky Way. For comparison, the Milky Way is estimated to have roughly 10 times as much dark matter as ordinary matter. Models of the Big Bang and structure formation have suggested that such dark galaxies should be very common in the Universe[citation needed], but none had previously been detected. If the existence of this dark galaxy is confirmed, it provides strong evidence for the theory of galaxy formation and poses problems for alternative explanations of dark matter.

There are some galaxies whose velocity profile indicates an absence of dark matter, such as NGC 3379.[41]

Galaxy clusters and gravitational lensing

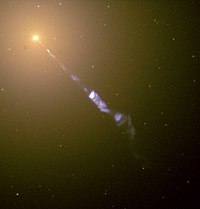

Strong gravitational lensing as observed by the Hubble Space Telescope in Abell 1689 indicates the presence of dark matter; the lensing arcs are visible in the full-size image.

Galaxy clusters are especially important for dark matter studies since their masses can be estimated in three independent ways:

- From the scatter in radial velocities of the galaxies within them (as in Zwicky's early observations, but with accurate measurements and much larger samples).

- From X-rays emitted by very hot gas within the clusters. The temperature and density of the gas can be estimated from the energy and flux of the X-rays, hence the gas pressure; assuming pressure and gravity balance, this enables the mass profile of the cluster to be derived. Many of the experiments of the Chandra X-ray Observatory use this technique to independently determine the mass of clusters. These observations generally indicate a ratio of baryonic to total mass approximately 12–15 percent, in reasonable agreement with the Planck spacecraft cosmic average of 15.5–16 percent.[42]

- From their gravitational lensing effects on background objects, usually more distant galaxies. This is observed as "strong lensing" (multiple images) near the cluster core, and weak lensing (shape distortions) in the outer parts. Several large Hubble projects have used this method to measure cluster masses.
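The X-ray method in the list above rests on hydrostatic equilibrium. As a sketch, assuming a spherically symmetric cluster and an ideal gas with mean molecular weight $\mu$ (symbols introduced here for illustration, not taken from the source), balancing the gas pressure gradient against gravity gives the mass enclosed within radius $r$:

```latex
\frac{dP}{dr} = -\frac{G\,M(<r)\,\rho_g(r)}{r^{2}},
\qquad
P = \frac{\rho_g\,k_B T}{\mu m_p}
\quad\Longrightarrow\quad
M(<r) = -\frac{k_B T(r)\,r}{G\,\mu m_p}
\left( \frac{d\ln \rho_g}{d\ln r} + \frac{d\ln T}{d\ln r} \right)
```

Here $\rho_g$ is the gas density and $T$ the gas temperature, both estimated from the X-ray flux and spectrum. Since both logarithmic slopes are typically negative in cluster outskirts, the inferred mass is positive.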

A gravitational lens is formed when the light from a more distant source (such as a quasar) is "bent" around a massive object (such as a cluster of galaxies) between the source object and the observer. The process is known as gravitational lensing.

The galaxy cluster Abell 2029 is composed of thousands of galaxies enveloped in a cloud of hot gas, and an amount of dark matter equivalent to more than $10^{14}\,M_\odot$. At the center of this cluster is an enormous, elliptically shaped galaxy that is thought to have been formed from the mergers of many smaller galaxies.[43] The measured orbital velocities of galaxies within galactic clusters have been found to be consistent with dark matter observations.

Another important tool for future dark matter observations is gravitational lensing. Lensing relies on the effects of general relativity to predict masses without relying on dynamics, and so is a completely independent means of measuring the dark matter. Strong lensing, the observed distortion of background galaxies into arcs when the light passes through a gravitational lens, has been observed around a few distant clusters including Abell 1689 (pictured).[44] By measuring the distortion geometry, the mass of the cluster causing the phenomena can be obtained. In the dozens of cases where this has been done, the mass-to-light ratios obtained correspond to the dynamical dark matter measurements of clusters.[45]
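The link between distortion geometry and mass can be illustrated with the simplest case in general relativity, the deflection of light by a point mass, where the bending angle is 4GM/(c²b) for impact parameter b. The sketch below is a toy model and not the method used for real clusters, which require extended mass models. The function names are illustrative, and the solar limb is used only as a familiar check.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0    # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg
R_SUN = 6.957e8      # solar radius, m
RAD_TO_ARCSEC = 180.0 * 3600.0 / math.pi


def deflection_angle(mass_kg, impact_parameter_m):
    """GR light-deflection angle (radians) for a point mass: 4GM / (c^2 b)."""
    return 4.0 * G * mass_kg / (C ** 2 * impact_parameter_m)


def lens_mass(angle_rad, impact_parameter_m):
    """Invert the point-mass formula: mass (kg) producing a given deflection."""
    return angle_rad * C ** 2 * impact_parameter_m / (4.0 * G)


sun_limb = deflection_angle(M_SUN, R_SUN) * RAD_TO_ARCSEC
print(f"deflection at the solar limb: {sun_limb:.2f} arcsec")
```

Inverting the same relation, as `lens_mass` does, is the idea behind reading a mass off a measured deflection without any reference to the motions of the lensing matter.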

Weak gravitational lensing looks at minute distortions of galaxies observed in vast galaxy surveys due to foreground objects through statistical analyses. By examining the apparent shear deformation of the adjacent background galaxies, astrophysicists can characterize the mean distribution of dark matter by statistical means and have found mass-to-light ratios that correspond to dark matter densities predicted by other large-scale structure measurements.[46] The correspondence of the two gravitational lens techniques to other dark matter measurements has convinced almost all astrophysicists that dark matter actually exists as a major component of the Universe's composition.

The Bullet Cluster: HST image with overlays. The total projected mass distribution reconstructed from strong and weak gravitational lensing is shown in blue, while the X-ray emitting hot gas observed with Chandra is shown in red.

The most direct observational evidence to date for dark matter is in a system known as the Bullet Cluster. In most regions of the Universe, dark matter and visible material are found together,[47] as expected because of their mutual gravitational attraction. In the Bullet Cluster, a collision between two galaxy clusters appears to have caused a separation of dark matter and baryonic matter. X-ray observations show that much of the baryonic matter (in the form of $10^{7}$–$10^{8}$ kelvin[48] gas or plasma) in the system is concentrated in the center of the system. Electromagnetic interactions between passing gas particles caused them to slow down and settle near the point of impact. However, weak gravitational lensing observations of the same system show that much of the mass resides outside of the central region of baryonic gas. Because dark matter does not interact by electromagnetic forces, it would not have been slowed in the same way as the X-ray visible gas, so the dark matter components of the two clusters passed through each other without slowing down substantially. This accounts for the separation. Unlike the galactic rotation curves, this evidence for dark matter is independent of the details of Newtonian gravity, so it is claimed to be direct evidence of the existence of dark matter.[48]

Another galaxy cluster, known as the Train Wreck Cluster/Abell 520, initially appeared to have an unusually massive and dark core containing few of the cluster's galaxies, which presented problems for standard dark matter models.[49] However, more precise observations since this time have shown that the earlier observations were misleading, and that the distribution of dark matter and its ratio to normal matter are very similar to those in galaxies in general, making novel explanations unnecessary.[48]

The observed behavior of dark matter in clusters constrains whether and how much dark matter scatters off other dark matter particles, quantified as its self-interaction cross section. More simply, the question is whether the dark matter has pressure, and thus can be described as a perfect fluid.[50] The distribution of mass (and thus dark matter) in galaxy clusters has been used to argue both for[51] and against[52] the existence of significant self-interaction in dark matter. Specifically, the distribution of dark matter in merging clusters such as the Bullet Cluster shows that dark matter scatters off other dark matter particles only very weakly if at all.[53]

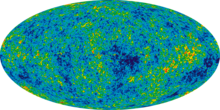

Cosmic microwave background

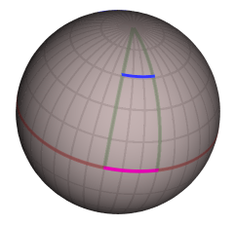

Angular fluctuations in the cosmic microwave background (CMB) spectrum provide evidence for dark matter. Since the 1964 discovery and confirmation of the CMB radiation,[54] many measurements of the CMB have supported and constrained this theory. The NASA Cosmic Background Explorer (COBE) found that the CMB spectrum is a blackbody spectrum with a temperature of 2.726 K. In 1992, COBE detected fluctuations (anisotropies) in the CMB spectrum, at a level of about one part in $10^{5}$.[55] During the following decade, CMB anisotropies were further investigated by a large number of ground-based and balloon experiments. The primary goal of these experiments was to measure the angular scale of the first acoustic peak of the power spectrum of the anisotropies, for which COBE did not have sufficient resolution. In 2000–2001, several experiments, most notably BOOMERanG,[56] found the Universe to be almost spatially flat by measuring the typical angular size (the size on the sky) of the anisotropies. During the 1990s, the first peak was measured with increasing sensitivity and by 2000 the BOOMERanG experiment reported that the highest power fluctuations occur at scales of approximately one degree. These measurements were able to rule out cosmic strings as the leading theory of cosmic structure formation, and suggested cosmic inflation was the right theory.
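Angular scales on the sky are often quoted as multipole moments of the power spectrum. As a rough rule of thumb (an approximation added here for orientation, not a result from the cited experiments), an angular scale $\theta$ corresponds to a multipole $\ell$ of about:

```latex
\ell \approx \frac{180^\circ}{\theta},
\qquad
\theta \approx 1^\circ \;\Longrightarrow\; \ell \approx 180
```

A peak at roughly one degree therefore sits at a multipole of order 200. The precise position depends on the full power-spectrum analysis.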

A number of ground-based interferometers provided measurements of the fluctuations with higher accuracy over the next three years, including the Very Small Array, the Degree Angular Scale Interferometer (DASI) and the Cosmic Background Imager (CBI). DASI made the first detection of the polarization of the CMB,[57][58] and the CBI provided the first E-mode polarization spectrum with compelling evidence that it is out of phase with the T-mode spectrum.[59] COBE's successor, the Wilkinson Microwave Anisotropy Probe (WMAP), has provided the most detailed measurements of (large-scale) anisotropies in the CMB as of 2009, with ESA's Planck spacecraft returning more detailed results in 2012–2014.[60] WMAP's measurements played the key role in establishing the current Standard Model of Cosmology, namely the Lambda-CDM model, a flat universe dominated by dark energy, supplemented by dark matter and atoms, with density fluctuations seeded by a Gaussian, adiabatic, nearly scale-invariant process. The basic properties of this universe are determined by five numbers: the density of matter, the density of atoms, the age of the Universe (or equivalently, the Hubble constant today), the amplitude of the initial fluctuations, and their scale dependence.

A successful Big Bang cosmology theory must fit with all available astronomical observations, including the CMB. In cosmology, the CMB is explained as relic radiation from shortly after the big bang. The anisotropies in the CMB are explained as acoustic oscillations in the photon-baryon plasma (prior to the emission of the CMB after the photons decouple from the baryons at 379,000 years after the Big Bang) whose restoring force is gravity.[61] Ordinary (baryonic) matter interacts strongly with radiation whereas, by definition, dark matter does not. Both affect the oscillations by their gravity, so the two forms of matter will have different effects. The typical angular scales of the oscillations in the CMB, measured as the power spectrum of the CMB anisotropies, thus reveal the different effects of baryonic matter and dark matter. The CMB power spectrum shows a large first peak and smaller successive peaks, with three peaks resolved as of 2009.[60] The first peak tells mostly about the density of baryonic matter and the third peak mostly about the density of dark matter, measuring the density of matter and the density of atoms in the Universe.

Sky surveys and baryon acoustic oscillations

The acoustic oscillations in the early universe (see the previous section) leave their imprint in the visible matter by Baryon Acoustic Oscillation (BAO) clustering, in a way that can be measured with sky surveys such as the Sloan Digital Sky Survey and the 2dF Galaxy Redshift Survey.[62] These measurements are consistent with those of the CMB derived from the WMAP spacecraft and further constrain the Lambda CDM model and dark matter. Note that the CMB data and the BAO data measure the acoustic oscillations at very different distance scales.[61]

Type Ia supernovae distance measurements

Type Ia supernovae can be used as "standard candles" to measure extragalactic distances, and extensive data sets of these supernovae can be used to constrain cosmological models.[63] They constrain the dark energy density ΩΛ ≈ 0.713 for a flat, Lambda CDM Universe and the parameter w for a quintessence model. Once again, the values obtained are roughly consistent with those derived from the WMAP observations and further constrain the Lambda CDM model and (indirectly) dark matter.[61]

Lyman-alpha forest

In astronomical spectroscopy, the Lyman-alpha forest is the sum of absorption lines arising from the Lyman-alpha transition of the neutral hydrogen in the spectra of distant galaxies and quasars.

Observations of the Lyman-alpha forest can also be used to constrain cosmological models.[64] These constraints are again in agreement with those obtained from WMAP data.

Dark matter is crucial to the Big Bang model of cosmology as a component which corresponds directly to measurements of the parameters associated with Friedmann cosmology solutions to general relativity. In particular, measurements of the cosmic microwave background anisotropies correspond to a cosmology where much of the matter interacts with photons more weakly than the known forces that couple light interactions to baryonic matter. Likewise, a significant amount of non-baryonic, cold matter is necessary to explain the large-scale structure of the universe.

Although dark matter had historically been inferred from many astronomical observations, its composition long remained speculative. Early theories of dark matter concentrated on hidden heavy normal objects (such as black holes, neutron stars, faint old white dwarfs, and brown dwarfs) as the possible candidates for dark matter, collectively known as massive compact halo objects or MACHOs. Astronomical surveys for gravitational microlensing, including the MACHO, EROS and OGLE projects, along with Hubble Space Telescope searches for ultra-faint stars, have not found enough of these hidden MACHOs.[69][70][71] Some hard-to-detect baryonic matter, such as MACHOs and some forms of gas, was additionally speculated to contribute to the overall dark matter content, but evidence indicated that such matter would constitute only a small portion.[72][73][74]

The majority of present experiments use one of two detector technologies. Cryogenic detectors, operating at temperatures below 100 mK, detect the heat produced when a particle hits an atom in a crystal absorber such as germanium. Noble liquid detectors detect the flash of scintillation light produced by a particle collision in liquid xenon or argon. Cryogenic detector experiments include CDMS, CRESST, EDELWEISS, and EURECA. Noble liquid experiments include ZEPLIN, XENON, DEAP, ArDM, WARP, DarkSide, PandaX, and LUX (the Large Underground Xenon detector). Both of these detector techniques are capable of distinguishing background particles, which scatter off electrons, from dark matter particles, which scatter off nuclei. Other experiments include SIMPLE and PICASSO.

The DAMA/NaI and DAMA/LIBRA experiments have detected an annual modulation in the event rate,[91] which they claim is due to dark matter particles. (As the Earth orbits the Sun, the velocity of the detector relative to the dark matter halo varies by a small amount depending on the time of year.) This claim is so far unconfirmed and is difficult to reconcile with the negative results of other experiments, assuming that the WIMP scenario is correct.[92]
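
The size of this seasonal velocity change can be sketched numerically. The following is a minimal illustration, not the analysis used by any experiment: the Sun's speed through the halo (232 km/s), the Earth's orbital speed (30 km/s), the projection factor of about 0.5 for the tilt of the Earth's orbit, and the peak date near 2 June are commonly quoted round values introduced here as assumptions, and the function name is hypothetical.

```python
import math

# Assumed round values (not taken from the article):
V_SUN_KM_S = 232.0       # Sun's speed relative to the dark matter halo
V_EARTH_KM_S = 30.0      # Earth's orbital speed around the Sun
COS_INCLINATION = 0.5    # projection of Earth's orbit onto the Sun's direction of motion
PEAK_DAY = 152.5         # day of year (about 2 June) when the two velocities add up most
YEAR_DAYS = 365.25


def detector_speed(day_of_year: float) -> float:
    """Approximate speed of an Earth-bound detector relative to the halo, in km/s."""
    phase = 2.0 * math.pi * (day_of_year - PEAK_DAY) / YEAR_DAYS
    return V_SUN_KM_S + V_EARTH_KM_S * COS_INCLINATION * math.cos(phase)


june = detector_speed(PEAK_DAY)
december = detector_speed(PEAK_DAY + YEAR_DAYS / 2.0)
print(f"early June:     {june:.0f} km/s")
print(f"early December: {december:.0f} km/s")
print(f"relative swing: {(june - december) / (2.0 * V_SUN_KM_S):.1%}")
```

Under these assumptions the detector speed changes by only a few percent over the year, which is why a modulation search needs a long exposure and very stable running conditions.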

Directional detection of dark matter is a search strategy based on the motion of the Solar System around the galactic center.[93][94][95][96]

By using a low-pressure time projection chamber (TPC), it is possible to access information on recoiling tracks (with 3D reconstruction where possible) and to constrain the WIMP-nucleus kinematics. WIMPs coming from the direction in which the Sun is travelling (roughly in the direction of the Cygnus constellation) may then be separated from background noise, which should be isotropic. Directional dark matter experiments include DMTPC, DRIFT, Newage and MIMAC.

On 17 December 2009 CDMS researchers reported two possible WIMP candidate events. They estimate that the probability that these events are due to a known background (neutrons or misidentified beta or gamma events) is 23%, and conclude "this analysis cannot be interpreted as significant evidence for WIMP interactions, but we cannot reject either event as signal."[97]

More recently, on 4 September 2011, researchers using the CRESST detectors presented evidence[98] of 67 collisions occurring in detector crystals from sub-atomic particles, calculating there is a less than 1 in 10,000 chance that all were caused by known sources of interference or contamination. It is quite possible then that many of these collisions were caused by WIMPs, and/or other unknown particles.

The EGRET gamma ray telescope observed more gamma rays than expected from the Milky Way, but scientists concluded that this was most likely due to a mis-estimation of the telescope's sensitivity.[99]

The Fermi Gamma-ray Space Telescope, launched 11 June 2008, is searching for gamma rays from dark matter annihilation and decay.[100] In April 2012, an analysis[101] of previously available data from its Large Area Telescope instrument produced strong statistical evidence of a 130 GeV line in the gamma radiation coming from the center of the Milky Way. At the time, WIMP annihilation was the most probable explanation for that line.[102]

At higher energies, ground-based gamma-ray telescopes have set limits on the annihilation of dark matter in dwarf spheroidal galaxies[103] and in clusters of galaxies.[104]

The PAMELA experiment (launched 2006) has detected a larger number of positrons than expected. These extra positrons could be produced by dark matter annihilation, but may also come from pulsars. No excess of anti-protons has been observed.[105] The Alpha Magnetic Spectrometer on the International Space Station is designed to directly measure the fraction of cosmic rays which are positrons. The first results, published in April 2013, indicate an excess of high-energy cosmic rays which could potentially be due to annihilation of dark matter.[106][107][108][109][110][111]

A few of the WIMPs passing through the Sun or Earth may scatter off atoms and lose energy. This way a large population of WIMPs may accumulate at the center of these bodies, increasing the chance that two will collide and annihilate. This could produce a distinctive signal in the form of high-energy neutrinos originating from the center of the Sun or Earth.[112] It is generally considered that the detection of such a signal would be the strongest indirect proof of WIMP dark matter.[10] High-energy neutrino telescopes such as AMANDA, IceCube and ANTARES are searching for this signal.

WIMP annihilation from the Milky Way Galaxy as a whole may also be detected in the form of various annihilation products.[113] The Galactic center is a particularly good place to look because the density of dark matter may be very high there.[114]

In 2014, two independent groups, one led by Harvard astrophysicist Esra Bulbul and the other by Leiden astrophysicist Alexey Boyarsky, reported an unidentified X-ray emission line around 3.5 keV in the spectra of clusters of galaxies. It is possible that this line is an indirect signal from dark matter, and that the dark matter is a new particle: a sterile neutrino with mass.[115]

In theories with extra dimensions, dark matter would be a natural candidate for matter that exists in other dimensions and that can interact with the matter in our dimensions only through gravity. Dark matter located in different dimensions could potentially aggregate in the same way as the matter in our visible universe does, forming exotic galaxies.[13]

The earliest modified gravity model to emerge was Mordehai Milgrom's Modified Newtonian Dynamics (MOND) in 1983, which adjusts Newton's laws to create a stronger gravitational field when gravitational acceleration levels become tiny (such as near the rim of a galaxy). It had some success explaining galactic-scale features, such as rotational velocity curves of elliptical galaxies and dwarf elliptical galaxies, but did not successfully explain galaxy cluster gravitational lensing. In addition, MOND was not relativistic, since it was a direct adjustment of the older Newtonian account of gravitation, not of the newer account in Einstein's general relativity. Soon after 1983, attempts were made to bring MOND into conformity with general relativity; this is an ongoing process, and many competing hypotheses have emerged based around the original MOND model, including TeVeS, MOG or STV gravity, and the phenomenological covariant approach,[117] among others.
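
The effect of such a low-acceleration modification on a rotation curve can be sketched as follows. This is a rough illustration under stated assumptions, not Milgrom's full formulation: it uses the commonly quoted deep-MOND limit, in which the rotation speed approaches (G M a0)^(1/4), with an acceleration scale a0 of about 1.2e-10 m/s². Neither the formula nor the value of a0 appears in the text above, and the galaxy mass and radius are hypothetical.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN_KG = 1.989e30      # solar mass, kg
KPC_M = 3.0857e19        # one kiloparsec in metres
A0 = 1.2e-10             # assumed MOND acceleration scale, m/s^2


def newtonian_speed(mass_kg: float, radius_m: float) -> float:
    """Circular speed around a point mass under Newtonian gravity (falls as 1/sqrt(r))."""
    return math.sqrt(G * mass_kg / radius_m)


def deep_mond_speed(mass_kg: float) -> float:
    """Asymptotic circular speed in the assumed deep-MOND limit (independent of radius)."""
    return (G * mass_kg * A0) ** 0.25


galaxy_mass = 1.0e11 * M_SUN_KG   # hypothetical visible mass of a large galaxy
rim = 50.0 * KPC_M                # hypothetical radius near the rim

print(f"Newtonian speed at 50 kpc: {newtonian_speed(galaxy_mass, rim) / 1e3:.0f} km/s")
print(f"deep-MOND flat speed:      {deep_mond_speed(galaxy_mass) / 1e3:.0f} km/s")
```

With these inputs the Newtonian speed keeps falling with radius, while the modified law levels off at a constant value, which is the qualitative behaviour the model was designed to reproduce without dark matter.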

In 2007, John W. Moffat proposed a modified gravity hypothesis based on the nonsymmetric gravitational theory (NGT) that claims to account for the behavior of colliding galaxies.[118] This model requires the presence of non-relativistic neutrinos, or other candidates for (cold) dark matter, to work.

Another proposal uses a gravitational backreaction in an emerging theoretical field that seeks to explain gravity between objects as an action, a reaction, and then a back-reaction. Simply, an object A affects an object B, and the object B then re-affects object A, and so on: creating a sort of feedback loop that strengthens gravity.[119]

Recently, another group has proposed a modification of large-scale gravity in a hypothesis named "dark fluid". In this formulation, the attractive gravitational effects attributed to dark matter are instead a side-effect of dark energy. Dark fluid combines dark matter and dark energy in a single energy field that produces different effects at different scales. This treatment is a simplified approach to a previous fluid-like model called the generalized Chaplygin gas model where the whole of spacetime is a compressible gas.[120] Dark fluid can be compared to an atmospheric system. Atmospheric pressure causes air to expand, but part of the air can collapse to form clouds. In the same way, the dark fluid might generally expand, but it also could collect around galaxies to help hold them together.[120]

Another set of proposals is based on the possibility of a double metric tensor for space-time.[121] It has been argued that time-reversed solutions in general relativity require such a double metric for consistency, and that both dark matter and dark energy can be understood in terms of time-reversed solutions of general relativity.[122]

Structure formation

3D map of the large-scale distribution of dark matter, reconstructed from measurements of weak gravitational lensing with the Hubble Space Telescope.[65]

Observations suggest that structure formation in the Universe proceeds hierarchically, with the smallest structures collapsing first, followed by galaxies and then clusters of galaxies. As the structures collapse in the evolving universe, they begin to "light up" as the baryonic matter heats up through gravitational contraction and the object approaches hydrostatic pressure balance. Ordinary baryonic matter had too high a temperature and too much pressure left over from the Big Bang to collapse and form smaller structures, such as stars, via the Jeans instability. Dark matter acts as a compactor of structure. This model not only corresponds with statistical surveying of the visible structure in the Universe but also corresponds precisely to the dark matter predictions of the cosmic microwave background.

This bottom-up model of structure formation requires something like cold dark matter to succeed. Large computer simulations of billions of dark matter particles have been used[66] to confirm that the cold dark matter model of structure formation is consistent with the structures observed in the Universe through galaxy surveys, such as the Sloan Digital Sky Survey and 2dF Galaxy Redshift Survey, as well as observations of the Lyman-alpha forest. These studies have been crucial in constructing the Lambda-CDM model, which is used to measure the cosmological parameters, including the fraction of the Universe made up of baryons and dark matter.

There are, however, several points of tension between observation and simulations of structure formation driven by dark matter. There is evidence that there are 10 to 100 times fewer small galaxies than the dark matter theory of galaxy formation predicts.[67][68] This is known as the dwarf galaxy problem. In addition, the simulations predict dark matter distributions with a very dense cusp near the centers of galaxies, but the observed halos are smoother than predicted.

History of the search for its composition

What is dark matter? How is it generated? Is it related to supersymmetry?

Data from several lines of evidence, including galaxy rotation curves, gravitational lensing, structure formation, and the fraction of baryons in clusters and the cluster abundance combined with independent evidence for the baryon density, indicated that 85–90% of the mass in the Universe does not interact with the electromagnetic force. This "nonbaryonic dark matter" is evident through its gravitational effect. Consequently, the most commonly held view was that dark matter is primarily non-baryonic, made of one or more elementary particles other than the usual electrons, protons, neutrons, and known neutrinos. The most commonly proposed particles then became WIMPs (Weakly Interacting Massive Particles, including neutralinos), axions, or sterile neutrinos, though many other possible candidates have been proposed.

The dark matter component has much more mass than the "visible" component of the universe.[75] Only about 4.6% of the mass-energy of the Universe is ordinary matter. About 23% is thought to be composed of dark matter. The remaining 72% is thought to consist of dark energy, an even stranger component, distributed almost uniformly in space and with energy density non-evolving or slowly evolving with time.[76] Determining the nature of this dark matter is one of the most important problems in modern cosmology and particle physics. It has been noted that the names "dark matter" and "dark energy" serve mainly as expressions of human ignorance, much like the marking of early maps with "terra incognita".[76]

Dark matter candidates can be approximately divided into three classes, called cold, warm and hot dark matter.[77]

These categories do not correspond to an actual temperature, but instead refer to how fast the particles were moving, and thus how far they moved due to random motions in the early universe before they slowed down due to the expansion of the Universe. This important distance is called the "free-streaming length". Primordial density fluctuations smaller than this free-streaming length get washed out as particles move from overdense to underdense regions, while fluctuations larger than the free-streaming length are unaffected; therefore this free-streaming length sets a minimum scale for structure formation.

- Cold dark matter – objects with a free-streaming length much smaller than a protogalaxy.[78]

- Warm dark matter – particles with a free-streaming length similar to a protogalaxy.

- Hot dark matter – particles with a free-streaming length much larger than a protogalaxy.[79]

As an example, Davis et al. wrote in 1985:

Candidate particles can be grouped into three categories on the basis of their effect on the fluctuation spectrum (Bond et al. 1983). If the dark matter is composed of abundant light particles which remain relativistic until shortly before recombination, then it may be termed "hot". The best candidate for hot dark matter is a neutrino ... A second possibility is for the dark matter particles to interact more weakly than neutrinos, to be less abundant, and to have a mass of order 1 keV. Such particles are termed "warm dark matter", because they have lower thermal velocities than massive neutrinos ... there are at present few candidate particles which fit this description. Gravitinos and photinos have been suggested (Pagels and Primack 1982; Bond, Szalay and Turner 1982) ... Any particles which became nonrelativistic very early, and so were able to diffuse a negligible distance, are termed "cold" dark matter (CDM). There are many candidates for CDM including supersymmetric particles.[80]

The full calculations are quite technical, but an approximate dividing line is that "warm" dark matter particles became non-relativistic when the Universe was approximately 1 year old and 1 millionth of its present size; standard hot big bang theory implies the Universe was then in the radiation-dominated era (photons and neutrinos), with a photon temperature of 2.7 million K. Standard physical cosmology gives the particle horizon size as 2ct in the radiation-dominated era, thus 2 light-years, and a region of this size would expand to 2 million light-years today (if there were no structure formation). The actual free-streaming length is roughly 5 times larger than the above length, since the free-streaming length continues to grow slowly as particle velocities decrease inversely with the scale factor after they become non-relativistic; therefore, in this example the free-streaming length would correspond to 10 million light-years or 3 Mpc today, which is around the size containing on average the mass of a large galaxy.
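
The arithmetic in this estimate can be retraced in a few lines. The sketch below only repeats the round numbers quoted above; the variable names and the conversion factor of about 3.26 million light-years per megaparsec are additions made here for illustration.

```python
# Retrace of the order-of-magnitude estimate, using the round numbers from the text.
LY_PER_MPC = 3.2616e6      # light-years per megaparsec (standard conversion, added here)

age_years = 1.0            # age of the Universe when warm particles became non-relativistic
expansion_since = 1.0e6    # the Universe was then ~1 millionth of its present size
later_growth = 5.0         # slow growth of the free-streaming length afterwards

horizon_ly = 2.0 * age_years                 # particle horizon 2ct, with c = 1 ly per year
stretched_ly = horizon_ly * expansion_since  # the same region expanded to today
free_streaming_ly = stretched_ly * later_growth
free_streaming_mpc = free_streaming_ly / LY_PER_MPC

print(f"horizon at 1 year:     {horizon_ly:.0f} light-years")
print(f"same region today:     {stretched_ly:.1e} light-years")
print(f"free-streaming length: {free_streaming_ly:.1e} light-years, "
      f"about {free_streaming_mpc:.1f} Mpc")
```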

The above temperature of 2.7 million K gives a typical photon energy of 250 electron-volts, so this sets a typical mass scale for "warm" dark matter: particles much more massive than this, such as GeV to TeV mass WIMPs, would become non-relativistic much earlier than 1 year after the Big Bang, and thus have a free-streaming length which is much smaller than a proto-galaxy and effectively negligible (thus cold dark matter). Conversely, much lighter particles (e.g. neutrinos of mass ~ few eV) have a free-streaming length much larger than a proto-galaxy (thus hot dark matter).
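
As a rough check on this mass scale, the thermal energy k_B T at 2.7 million K can be computed directly, and candidate masses can be sorted against it. This is a minimal sketch: the function names are hypothetical, and the band edges of 300 eV and 3000 eV used to separate hot, warm and cold are an illustrative assumption rather than sharp physical boundaries.

```python
K_B_EV_PER_K = 8.617333262e-5   # Boltzmann constant in eV per kelvin


def thermal_energy_ev(temperature_k: float) -> float:
    """Characteristic thermal energy k_B * T in electron-volts."""
    return K_B_EV_PER_K * temperature_k


def classify(mass_ev: float, warm_min_ev: float = 300.0, warm_max_ev: float = 3000.0) -> str:
    """Crude hot/warm/cold label from particle mass; the band edges are assumptions."""
    if mass_ev < warm_min_ev:
        return "hot"
    if mass_ev <= warm_max_ev:
        return "warm"
    return "cold"


print(f"k_B T at 2.7 million K: {thermal_energy_ev(2.7e6):.0f} eV")
for label, mass_ev in [("few-eV neutrino", 3.0), ("1 keV particle", 1.0e3), ("100 GeV WIMP", 1.0e11)]:
    print(f"{label}: {classify(mass_ev)}")
```

The computed k_B T is a little over 230 eV, the same order as the 250 eV quoted above; the exact "typical" photon energy depends on which average over the thermal spectrum is taken.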

Cold dark matter

Today, cold dark matter is the simplest explanation for most cosmological observations. "Cold" dark matter is dark matter composed of constituents with a free-streaming length much smaller than the ancestor of a galaxy-scale perturbation. This is currently the area of greatest interest for dark matter research, as hot dark matter does not seem to be viable for galaxy and galaxy cluster formation, and most particle candidates become non-relativistic at very early times, hence are classified as cold.

The composition of the constituents of cold dark matter is currently unknown. Possibilities range from large objects like MACHOs (such as black holes[81]) or RAMBOs, to new particles like WIMPs and axions. Possibilities involving normal baryonic matter include brown dwarfs, other stellar remnants such as white dwarfs, or perhaps small, dense chunks of heavy elements.

Studies of big bang nucleosynthesis and gravitational lensing have convinced most scientists[10][82][83][84][85][86] that MACHOs of any type cannot be more than a small fraction of the total dark matter.[8][82] Black holes of nearly any mass are ruled out as a primary dark matter constituent by a variety of searches and constraints.[82][84] According to A. Peter: "...the only really plausible dark-matter candidates are new particles."[83]

The DAMA/NaI experiment and its successor DAMA/LIBRA have claimed to directly detect dark matter particles passing through the Earth, but many scientists remain skeptical, as negative results from similar experiments seem incompatible with the DAMA results.

Many supersymmetric models naturally give rise to stable dark matter candidates in the form of the Lightest Supersymmetric Particle (LSP). Separately, heavy sterile neutrinos exist in non-supersymmetric extensions to the standard model that explain the small neutrino mass through the seesaw mechanism.

Warm dark matter

Warm dark matter refers to particles with a free-streaming length comparable to the size of a region which subsequently evolved into a dwarf galaxy. This leads to predictions which are very similar to cold dark matter on large scales, including the CMB, galaxy clustering and large galaxy rotation curves, but with fewer small-scale density perturbations. This reduces the predicted abundance of dwarf galaxies and may lead to lower density of dark matter in the central parts of large galaxies; some researchers consider this may be a better fit to observations. A challenge for this model is that there are no very well-motivated particle physics candidates with the required mass of roughly 300 eV to 3000 eV.

There have been no particles discovered so far that can be categorized as warm dark matter. One postulated candidate is the sterile neutrino: a heavier, slower form of neutrino which, unlike regular neutrinos, does not even interact through the weak force. Interestingly, some modified gravity theories, such as scalar-tensor-vector gravity, also require that warm dark matter exist to make their equations work out.

Hot dark matter

Hot dark matter consists of particles that have a free-streaming length much larger than that of a proto-galaxy.

An example of hot dark matter is already known: the neutrino. Neutrinos were discovered quite separately from the search for dark matter, and long before it seriously began: they were first postulated in 1930, and first detected in 1956. Neutrinos have a very small mass: at least 100,000 times less massive than an electron. Other than gravity, neutrinos only interact with normal matter via the weak force, making them very difficult to detect (the weak force only works over a small distance, thus a neutrino will only trigger a weak force event if it hits a nucleus directly head-on). This would make them 'weakly interacting light particles' (WILPs), as opposed to cold dark matter's theoretical candidates, the weakly interacting massive particles (WIMPs).

There are three known flavors of neutrinos (the electron, muon, and tau neutrinos), and their masses are slightly different. The resolution of the solar neutrino problem demonstrated that these three types of neutrinos change and oscillate from one flavor to the others and back while in flight. It is hard to determine an exact upper bound on the collective average mass of the three neutrinos (let alone a mass for any of the three individually). For example, if the average neutrino mass were over 50 eV/c² (which is still less than 1/10,000th of the mass of an electron), the sheer number of neutrinos in the Universe would cause it to collapse due to their mass. Other observations have therefore served to estimate an upper bound for the neutrino mass. Using cosmic microwave background data and other methods, the current conclusion is that their average mass probably does not exceed 0.3 eV/c². Thus, the normal forms of neutrinos cannot be responsible for the measured dark matter component from cosmology.[87]
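
The two mass figures above can be put on a common scale with a short calculation. This sketch relies on a standard cosmological approximation that does not appear in the text: the neutrino density parameter satisfies Ω_ν h² ≈ Σm_ν / (93.14 eV), where Σm_ν is the summed mass of the three flavors. The reduced Hubble constant h = 0.7 and the function name are likewise assumptions made for illustration.

```python
EV_PER_UNIT_OMEGA_H2 = 93.14      # assumed approximation: Omega_nu * h^2 = sum(m_nu) / 93.14 eV
ELECTRON_MASS_EV = 510_998.95     # electron rest mass in eV/c^2


def neutrino_density_parameter(avg_mass_ev: float, n_flavors: int = 3, h: float = 0.7) -> float:
    """Approximate fraction of the critical density contributed by relic neutrinos."""
    summed_mass_ev = n_flavors * avg_mass_ev
    return summed_mass_ev / EV_PER_UNIT_OMEGA_H2 / h**2


heavy = neutrino_density_parameter(50.0)   # the 50 eV/c^2 example from the text
light = neutrino_density_parameter(0.3)    # the 0.3 eV/c^2 upper estimate from the text

print(f"50 eV as a fraction of the electron mass: {50.0 / ELECTRON_MASS_EV:.1e}")
print(f"Omega_nu for an average mass of 50 eV:  {heavy:.2f}")
print(f"Omega_nu for an average mass of 0.3 eV: {light:.3f}")
```

Under these assumptions, a 50 eV average mass gives a density several times the critical value, while a 0.3 eV average mass gives about two percent of it, far below the dark matter fraction inferred from cosmology.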

Hot dark matter was popular for a time in the early 1980s, but it suffers from a severe problem: because all galaxy-size density fluctuations get washed out by free-streaming, the first objects that can form are huge supercluster-size pancakes, which then were theorised somehow to fragment into galaxies. Deep-field observations clearly show that galaxies formed at early times, with clusters and superclusters forming later as galaxies clump together, so any model dominated by hot dark matter is seriously in conflict with observations.

Mixed dark matter

Mixed dark matter is a now-obsolete model with a specifically chosen mass ratio of 80% cold dark matter and 20% hot dark matter (neutrinos). Though it is presumable that hot dark matter coexists with cold dark matter in any case, there was a very specific reason for choosing this particular ratio of hot to cold dark matter in this model. During the early 1990s it became increasingly clear that a Universe with a critical density of cold dark matter did not fit the COBE and large-scale galaxy clustering observations; either the 80/20 mixed dark matter model or LambdaCDM was able to reconcile these. With the discovery of the accelerating universe from supernovae, and more accurate measurements of CMB anisotropy and galaxy clustering, the mixed dark matter model was essentially ruled out while the concordance LambdaCDM model remained a good fit.

Detection

If the dark matter within our galaxy is made up of Weakly Interacting Massive Particles (WIMPs), then millions, possibly billions, of WIMPs must pass through every square centimeter of the Earth each second.[88][89] There are many experiments currently running, or planned, aiming to test this hypothesis by searching for WIMPs. Although WIMPs are the historically more popular dark matter candidate for searches,[10] there are experiments searching for other particle candidates; the Axion Dark Matter eXperiment (ADMX) is currently searching for the dark matter axion, a well-motivated and constrained dark matter source. It is also possible that dark matter consists of very heavy hidden sector particles which only interact with ordinary matter via gravity.

These experiments can be divided into two classes: direct detection experiments, which search for the scattering of dark matter particles off atomic nuclei within a detector; and indirect detection experiments, which look for the products of WIMP annihilations.[18]
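The flux estimate at the start of this section follows from multiplying the number density of WIMPs by their typical speed. The sketch below assumes a local halo density of 0.3 GeV/cm³ and a typical speed of 220 km/s; neither value is given in the article, and both are commonly used round numbers rather than measurements of WIMPs themselves. Because the number density is the mass density divided by the particle mass, the resulting flux is inversely proportional to the assumed WIMP mass.

```python
# Order-of-magnitude WIMP flux through a surface: flux = (rho / m) * v.
# Assumptions (not from the article): local halo density 0.3 GeV/cm^3
# and a typical WIMP speed of 220 km/s relative to the Earth.

LOCAL_DENSITY_GEV_CM3 = 0.3   # assumed local dark matter density
TYPICAL_SPEED_CM_S = 220e5    # 220 km/s expressed in cm/s


def wimp_flux(mass_gev):
    """Particles per cm^2 per second for a WIMP of the given mass (GeV/c^2)."""
    number_density = LOCAL_DENSITY_GEV_CM3 / mass_gev  # particles per cm^3
    return number_density * TYPICAL_SPEED_CM_S


for mass in (1.0, 10.0, 100.0, 1000.0):
    print(f"m = {mass:6.0f} GeV/c^2: flux ~ {wimp_flux(mass):.1e} per cm^2 per s")
```

The hypothetical masses in the loop are chosen only to show the scaling; the actual WIMP mass, if WIMPs exist, is unknown.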

An alternative approach to the detection of WIMPs in nature is to produce them in the laboratory. Experiments with the Large Hadron Collider (LHC) may be able to detect WIMPs produced in collisions of the LHC proton beams. Because a WIMP has negligible interactions with matter, it may be detected indirectly as (large amounts of) missing energy and momentum which escape the LHC detectors, provided all the other (non-negligible) collision products are detected.[90] These experiments could show that WIMPs can be created, but it would still require a direct detection experiment to show that they exist in sufficient numbers in the galaxy to account for dark matter.
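The missing-momentum idea can be illustrated with a minimal sketch. In proton collisions the momentum of the colliding constituents along the beam axis is not known, so in practice the balance is checked in the plane transverse to the beam. The function name and the event below are hypothetical, and real analyses involve calibration and detector effects that are ignored here.

```python
import math


def missing_transverse_momentum(visible):
    """Return (magnitude, azimuth) of the momentum needed to balance an event.

    visible: list of (px, py) transverse momentum components, in GeV,
    one pair for every detected particle.
    """
    sum_px = sum(px for px, _ in visible)
    sum_py = sum(py for _, py in visible)
    # Whatever escaped undetected must carry the opposite of the visible sum.
    miss_px, miss_py = -sum_px, -sum_py
    return math.hypot(miss_px, miss_py), math.atan2(miss_py, miss_px)


# Hypothetical event: three detected particles that do not balance.
event = [(120.0, 10.0), (-30.0, 25.0), (-15.0, -20.0)]
magnitude, azimuth = missing_transverse_momentum(event)
print(f"missing transverse momentum: {magnitude:.1f} GeV at {azimuth:.2f} rad")
```

A large imbalance is only suggestive: neutrinos also escape detection, and a mismeasured particle produces the same signature, which is why all other collision products must be accounted for.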

Direct detection experiments

Direct detection experiments usually operate in deep underground laboratories to reduce the background from cosmic rays. These include: the Soudan mine; the SNOLAB underground laboratory at Sudbury, Ontario (Canada); the Gran Sasso National Laboratory (Italy); the Canfranc Underground Laboratory (Spain); the Boulby Underground Laboratory (UK); the Deep Underground Science and Engineering Laboratory, South Dakota (US); and the Particle and Astrophysical Xenon Detector (China).

The majority of present experiments use one of two detector technologies. Cryogenic detectors, operating at temperatures below 100 mK, detect the heat produced when a particle hits an atom in a crystal absorber such as germanium. Noble liquid detectors detect the flash of scintillation light produced by a particle collision in liquid xenon or argon. Cryogenic detector experiments include CDMS, CRESST, EDELWEISS, and EURECA. Noble liquid experiments include ZEPLIN, XENON, DEAP, ArDM, WARP, DarkSide, PandaX, and LUX, the Large Underground Xenon Detector. Both of these detector techniques are capable of distinguishing background particles, which scatter off electrons, from dark matter particles, which scatter off nuclei. Other experiments include SIMPLE and PICASSO.

The DAMA/NaI and DAMA/LIBRA experiments have detected an annual modulation in the event rate,[91] which they claim is due to dark matter particles. (As the Earth orbits the Sun, the velocity of the detector relative to the dark matter halo varies by a small amount depending on the time of year.) This claim is so far unconfirmed and is difficult to reconcile with the negative results of other experiments, assuming that the WIMP scenario is correct.[92]
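The size of the expected velocity variation can be sketched with a simple cosine model. All numerical values below are assumptions for illustration and do not come from the article: a Solar speed of 232 km/s relative to the halo, an Earth orbital speed of 30 km/s, an orbital plane inclined at about 60° to the Sun's direction of motion, and a peak near 2 June.

```python
import math

# All constants are illustrative assumptions, not values from the article.
V_SUN = 232.0            # km/s, Sun's speed relative to the dark matter halo
V_ORBIT = 30.0           # km/s, Earth's orbital speed around the Sun
COS_INCLINATION = 0.5    # cos(60 deg): orbit plane vs. Sun's direction of motion
PEAK_DAY = 152.5         # day of year of maximum speed (about 2 June)
DAYS_PER_YEAR = 365.25


def detector_speed(day_of_year):
    """Approximate detector speed relative to the halo, in km/s."""
    phase = 2.0 * math.pi * (day_of_year - PEAK_DAY) / DAYS_PER_YEAR
    return V_SUN + V_ORBIT * COS_INCLINATION * math.cos(phase)


for label, day in (("early June", PEAK_DAY),
                   ("early December", PEAK_DAY + DAYS_PER_YEAR / 2)):
    print(f"{label}: {detector_speed(day):.0f} km/s")
```

Under these assumptions the speed changes by only a few percent over the year, which is why any resulting modulation in the event rate is expected to be small.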

Directional detection of dark matter is a search strategy based on the motion of the Solar System around the galactic center.[93][94][95][96]

By using a low-pressure time projection chamber (TPC), it is possible to access information on recoiling tracks (3D reconstruction if possible) and to constrain the WIMP-nucleus kinematics. WIMPs coming from the direction in which the Sun is travelling (roughly in the direction of the Cygnus constellation) may then be separated from background noise, which should be isotropic. Directional dark matter experiments include DMTPC, DRIFT, Newage and MIMAC.

On 17 December 2009 CDMS researchers reported two possible WIMP candidate events. They estimate that the probability that these events are due to a known background (neutrons or misidentified beta or gamma events) is 23%, and conclude "this analysis cannot be interpreted as significant evidence for WIMP interactions, but we cannot reject either event as signal."[97]
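A probability of this kind can be obtained from Poisson statistics: given an expected number of background events, it is the chance of seeing at least as many events as were observed. The sketch below assumes an expected background of 0.9 events, a value chosen here for illustration and not stated in the article; the function name is likewise hypothetical.

```python
import math


def prob_at_least(n_observed, expected_background):
    """Poisson probability that background alone yields n_observed or more events."""
    prob_fewer = sum(
        math.exp(-expected_background) * expected_background**k / math.factorial(k)
        for k in range(n_observed)
    )
    return 1.0 - prob_fewer


EXPECTED_BACKGROUND = 0.9  # assumed for illustration, not from the article
p = prob_at_least(2, EXPECTED_BACKGROUND)
print(f"P(2 or more background events) = {p:.1%}")
```

With that assumed background, two or more events arise by chance roughly a quarter of the time, which illustrates why two candidate events cannot be treated as significant evidence.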

More recently, on 4 September 2011, researchers using the CRESST detectors presented evidence[98] of 67 collisions occurring in detector crystals from sub-atomic particles, calculating there is a less than 1 in 10,000 chance that all were caused by known sources of interference or contamination. It is quite possible then that many of these collisions were caused by WIMPs, and/or other unknown particles.

Indirect detection experiments

Indirect detection experiments search for the products of WIMP annihilation or decay. If WIMPs are Majorana particles (that is, if they are their own antiparticles), then two WIMPs could annihilate to produce gamma rays or Standard Model particle-antiparticle pairs. Additionally, if the WIMP is unstable, WIMPs could decay into Standard Model particles. These processes could be detected indirectly through an excess of gamma rays, antiprotons or positrons emanating from regions of high dark matter density. The detection of such a signal is not conclusive evidence for dark matter, as the production of gamma rays from other sources is not fully understood.[10][18]

The EGRET gamma ray telescope observed more gamma rays than expected from the Milky Way, but scientists concluded that this was most likely due to a mis-estimation of the telescope's sensitivity.[99]

The Fermi Gamma-ray Space Telescope, launched 11 June 2008, is searching for gamma rays from dark matter annihilation and decay.[100] In April 2012, an analysis[101] of previously available data from its Large Area Telescope instrument produced strong statistical evidence of a 130 GeV line in the gamma radiation coming from the center of the Milky Way. At the time, WIMP annihilation was the most probable explanation for that line.[102]

At higher energies, ground-based gamma-ray telescopes have set limits on the annihilation of dark matter in dwarf spheroidal galaxies[103] and in clusters of galaxies.[104]

The PAMELA experiment (launched 2006) has detected a larger number of positrons than expected. These extra positrons could be produced by dark matter annihilation, but may also come from pulsars. No excess of antiprotons has been observed.[105] The Alpha Magnetic Spectrometer on the International Space Station is designed to directly measure the fraction of cosmic rays which are positrons. The first results, published in April 2013, indicate an excess of high-energy positrons in cosmic rays which could potentially be due to annihilation of dark matter.[106][107][108][109][110][111]

A few of the WIMPs passing through the Sun or Earth may scatter off atoms and lose energy. This way a large population of WIMPs may accumulate at the center of these bodies, increasing the chance that two will collide and annihilate. This could produce a distinctive signal in the form of high-energy neutrinos originating from the center of the Sun or Earth.[112] It is generally considered that the detection of such a signal would be the strongest indirect proof of WIMP dark matter.[10] High-energy neutrino telescopes such as AMANDA, IceCube and ANTARES are searching for this signal.

WIMP annihilation from the Milky Way Galaxy as a whole may also be detected in the form of various annihilation products.[113] The Galactic center is a particularly good place to look because the density of dark matter may be very high there.[114]

In 2014, two independent groups, one led by Harvard astrophysicist Esra Bulbul and the other by Leiden astrophysicist Alexey Boyarsky, reported an unidentified X-ray emission line around 3.5 keV in the spectra of clusters of galaxies. This could be an indirect signal from dark matter, possibly from a new particle: a sterile neutrino with mass.[115]

Alternative theories

Mass in extra dimensions

In some multidimensional theories, gravity is the only force able to have an effect across all the various extra dimensions,[14] which would explain the relative weakness of gravity compared to the other known forces of nature that would not be able to cross into extra dimensions: electromagnetism, strong interaction, and weak interaction.

In that case, dark matter would be a perfect candidate for matter that would exist in other dimensions and that could only interact with the matter in our dimensions through gravity. Dark matter located in different dimensions could potentially aggregate in the same way as the matter in our visible universe does, forming exotic galaxies.[13]

Topological defects

Dark matter could consist of primordial defects (defects originating with the birth of the Universe) in the topology of quantum fields, which would contain energy and therefore gravitate. This possibility may be investigated by the use of an orbital network of atomic clocks, which would register the passage of topological defects by monitoring the synchronization of the clocks. The Global Positioning System may be able to operate as such a network.[116]

Modified gravity

Numerous alternative theories have been proposed to explain these observations without the need for a large amount of undetected matter. Most of these theories modify the laws of gravity established by Newton and Einstein in some way.

The earliest modified gravity model to emerge was Mordehai Milgrom's Modified Newtonian Dynamics (MOND) in 1983, which adjusts Newton's laws to create a stronger gravitational field when gravitational acceleration levels become tiny (such as near the rim of a galaxy). It had some success explaining galactic-scale features, such as rotational velocity curves of elliptical galaxies, and dwarf elliptical galaxies, but did not successfully explain galaxy cluster gravitational lensing. However, MOND was not relativistic, since it was just a straight adjustment of the older Newtonian account of gravitation, not of the newer account in Einstein's general relativity. Soon after 1983, attempts were made to bring MOND into conformity with general relativity; this is an ongoing process, and many competing hypotheses have emerged based around the original MOND model, including TeVeS, MOG or STV gravity, and the phenomenological covariant approach,[117] among others.
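How a MOND-style adjustment strengthens gravity at tiny accelerations can be sketched for a point mass. The example assumes an acceleration scale a0 = 1.2 × 10⁻¹⁰ m/s² and the "simple" interpolating function μ(x) = x / (1 + x); both are illustrative choices not specified in the article, and the galaxy mass is hypothetical. The true acceleration a is obtained by solving a·μ(a/a0) = a_N, where a_N is the Newtonian value.

```python
import math

G = 6.674e-11           # m^3 kg^-1 s^-2, gravitational constant
A0 = 1.2e-10            # m/s^2, assumed MOND acceleration scale
SOLAR_MASS = 1.989e30   # kg
KPC = 3.086e19          # m


def newtonian_speed(mass_kg, radius_m):
    """Circular orbital speed around a point mass under Newtonian gravity."""
    return math.sqrt(G * mass_kg / radius_m)


def mond_speed(mass_kg, radius_m, a0=A0):
    """Circular speed with the assumed interpolating function mu(x) = x / (1 + x)."""
    a_newton = G * mass_kg / radius_m**2
    # a * mu(a / a0) = a_newton  =>  a^2 - a_newton * a - a_newton * a0 = 0
    a = 0.5 * (a_newton + math.sqrt(a_newton**2 + 4.0 * a_newton * a0))
    return math.sqrt(a * radius_m)


galaxy_mass = 1e11 * SOLAR_MASS  # hypothetical galaxy treated as a point mass
for r_kpc in (5, 20, 80, 320):
    r = r_kpc * KPC
    print(f"r = {r_kpc:3d} kpc: Newton {newtonian_speed(galaxy_mass, r) / 1e3:6.1f} km/s, "
          f"MOND {mond_speed(galaxy_mass, r) / 1e3:6.1f} km/s")
```

In this sketch the Newtonian speed keeps falling with radius, while the modified speed levels off toward (G·M·a0)^(1/4), which is the flat rotation curve that the adjustment was designed to produce.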

In 2007, John W. Moffat proposed a modified gravity hypothesis based on the nonsymmetric gravitational theory (NGT) that claims to account for the behavior of colliding galaxies.[118] This model requires the presence of non-relativistic neutrinos, or other candidates for (cold) dark matter, to work.

Another proposal uses a gravitational backreaction in an emerging theoretical field that seeks to explain gravity between objects as an action, a reaction, and then a back-reaction. Simply, an object A affects an object B, and the object B then re-affects object A, and so on: creating a sort of feedback loop that strengthens gravity.[119]

Recently, another group has proposed a modification of large-scale gravity in a hypothesis named "dark fluid". In this formulation, the attractive gravitational effects attributed to dark matter are instead a side-effect of dark energy. Dark fluid combines dark matter and dark energy in a single energy field that produces different effects at different scales. This treatment is a simplified approach to a previous fluid-like model called the generalized Chaplygin gas model where the whole of spacetime is a compressible gas.[120] Dark fluid can be compared to an atmospheric system. Atmospheric pressure causes air to expand, but part of the air can collapse to form clouds. In the same way, the dark fluid might generally expand, but it also could collect around galaxies to help hold them together.[120]

Another set of proposals is based on the possibility of a double metric tensor for space-time.[121] It has been argued that time-reversed solutions in general relativity require such a double metric for consistency, and that both dark matter and dark energy can be understood in terms of time-reversed solutions of general relativity.[122]