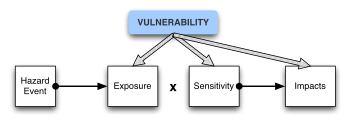

Different assumptions on the extrapolation of the cancer risk vs. radiation dose to low-dose levels, given a known risk at a high dose: (A) supra-linearity, (B) linear, (C) linear-quadratic, (D) hormesis

The linear no-threshold model (LNT) is a dose-response model used in radiation protection to estimate stochastic health effects such as radiation-induced cancer, genetic mutations and teratogenic effects on the human body due to exposure to ionizing radiation.

Stochastic health effects are those that occur by chance, and whose probability is proportional to the dose, but whose severity is independent of the dose.

The LNT model assumes there is no lower threshold at which stochastic effects start, and assumes a linear relationship between dose and the stochastic health risk. In other words, LNT assumes that radiation has the potential to cause harm at any dose level, and that the sum of several very small exposures is just as likely to cause a stochastic health effect as a single larger exposure of equal dose value.

In contrast, deterministic health effects are radiation-induced effects such as acute radiation syndrome, which are caused by tissue damage. Deterministic effects reliably occur above a threshold dose and their severity increases with dose. Because of these inherent differences, LNT is not a model for deterministic effects, which are instead characterized by other types of dose-response relationships.
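The additivity assumption described above can be illustrated with a minimal Python sketch. It compares a purely linear excess-risk function, r(D) = αD, with a linear-quadratic one, r(D) = αD + βD², when a fixed total dose is split into equal fractions. Under the linear function the summed risk does not depend on how the dose is divided; under the linear-quadratic function, fractionation lowers the predicted total. The coefficients `ALPHA` and `BETA` are arbitrary hypothetical values chosen only to show the shapes, not published risk coefficients.

```python
from typing import Callable

# Hypothetical coefficients for illustration only, not published risk values.
ALPHA = 0.05  # linear term, excess risk per Sv
BETA = 0.05   # quadratic term, excess risk per Sv^2


def lnt_risk(dose_sv: float) -> float:
    """Linear no-threshold: r(D) = ALPHA * D."""
    return ALPHA * dose_sv


def linear_quadratic_risk(dose_sv: float) -> float:
    """Linear-quadratic: r(D) = ALPHA * D + BETA * D**2."""
    return ALPHA * dose_sv + BETA * dose_sv ** 2


def fractionated_risk(model: Callable[[float], float],
                      total_dose_sv: float, fractions: int) -> float:
    """Sum a model's predicted risk over `fractions` equal exposures."""
    per_fraction = total_dose_sv / fractions
    return sum(model(per_fraction) for _ in range(fractions))


for n in (1, 10, 1000):
    lnt = fractionated_risk(lnt_risk, 1.0, n)
    lq = fractionated_risk(linear_quadratic_risk, 1.0, n)
    print(f"{n:>4} fractions of 1 Sv: LNT={lnt:.4f}  linear-quadratic={lq:.4f}")
```

Splitting 1 Sv into 10 equal exposures leaves the linear prediction unchanged but divides the quadratic term by 10, which is why the choice of model matters when many small exposures are summed.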

LNT is a common model for calculating the probability of radiation-induced cancer, both at high doses, where epidemiological studies support its application, and, controversially, at low doses, a dose region with lower predictive statistical confidence. Nonetheless, regulatory bodies commonly use LNT as a basis for regulatory dose limits to protect against stochastic health effects, as found in many public health policies.

As of 2016, three challenges to the LNT model were being considered by the US Nuclear Regulatory Commission. One was filed by nuclear medicine professor Carol Marcus of UCLA, who calls the LNT model scientific "baloney".

Whether the model describes the reality of small-dose exposures is disputed. Two competing schools of thought oppose it: the threshold model, which assumes that very small exposures are harmless, and the radiation hormesis model, which claims that radiation at very small doses can be beneficial. Because the current data are inconclusive, scientists disagree on which model should be used. Pending any definitive answer to these questions, and in keeping with the precautionary principle, the model is sometimes used to quantify the cancerous effect of collective doses of low-level radioactive contamination, even though it estimates a positive number of excess deaths at levels that would have had zero deaths, or saved lives, in the two other models. Such practice has been condemned by the International Commission on Radiological Protection.
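The contrast drawn above between LNT, the threshold model and the hormesis model can be sketched in Python by forcing three hypothetical curves through the same known excess risk at a high reference dose and then comparing them at low doses. The anchor point (`D_REF`, `R_REF`), the threshold and the hormetic zero-crossing are all illustrative assumptions, and the J-shaped hormesis curve is just one simple functional form chosen for this sketch.

```python
# Hypothetical anchor: all three curves pass through the same excess risk
# at a high reference dose. Every value here is illustrative only.
D_REF = 1.0          # Sv, high dose where the risk is taken as "known"
R_REF = 0.05         # excess risk at D_REF
THRESHOLD = 0.1      # Sv, assumed threshold for the threshold model
HORMESIS_ZERO = 0.1  # Sv, assumed dose where the hormesis curve crosses zero


def lnt(dose: float) -> float:
    """Linear no-threshold: excess risk proportional to dose, down to zero."""
    return R_REF * dose / D_REF


def threshold(dose: float) -> float:
    """Threshold model: no excess risk below THRESHOLD, linear above it."""
    if dose <= THRESHOLD:
        return 0.0
    return R_REF * (dose - THRESHOLD) / (D_REF - THRESHOLD)


def hormesis(dose: float) -> float:
    """J-shaped sketch: negative excess risk (a benefit) below HORMESIS_ZERO."""
    return R_REF * dose * (dose - HORMESIS_ZERO) / (D_REF * (D_REF - HORMESIS_ZERO))


for d in (0.01, 0.05, 1.0):
    print(f"{d:5.2f} Sv  LNT={lnt(d):+.5f}  "
          f"threshold={threshold(d):+.5f}  hormesis={hormesis(d):+.5f}")
```

At 0.05 Sv in this sketch, LNT predicts a small positive excess risk, the threshold model predicts none, and the hormesis curve predicts a small benefit, mirroring the three outcomes described in the text.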

The UNSCEAR, one of the organizations that establish recommendations on radiation protection guidelines internationally, recommended policies in 2014 that do not agree with the LNT model at exposure levels below background levels. The recommendation states "the Scientific Committee does not recommend multiplying very low doses by large numbers of individuals to estimate numbers of radiation-induced health effects within a population exposed to incremental doses at levels equivalent to or lower than natural background levels." This is a reversal from previous recommendations by the same organization.

The LNT model is sometimes applied to other cancer hazards such as polychlorinated biphenyls in drinking water.

Origins

Increased risk of solid cancer with dose for A-bomb survivors, from the BEIR report. Notably, this exposure occurred as essentially a massive spike or pulse of radiation, the result of the brief instant in which the bomb exploded. While this is somewhat similar to the environment of a CT scan, it is wholly unlike the low dose rate of living in a contaminated area such as Chernobyl, where the dose rate is orders of magnitude smaller. However, LNT does not consider dose rate and is an unsubstantiated one-size-fits-all approach based solely on total absorbed dose, even though the two environments and their cellular effects are vastly different. It has also been pointed out that bomb survivors inhaled carcinogenic benzopyrene from the burning cities, yet this is not factored in.

The association of exposure to radiation with cancer had been observed as early as 1902, six years after the discovery of X-rays by Wilhelm Röntgen and of radioactivity by Henri Becquerel. In 1927, Hermann Muller demonstrated that radiation may cause genetic mutation. He also suggested mutation as a cause of cancer. Muller, who received the 1946 Nobel Prize for his work on the mutagenic effect of radiation, asserted in his Nobel Lecture, "The Production of Mutation", that mutation frequency is "directly and simply proportional to the dose of irradiation applied" and that there is "no threshold dose".

The early studies were based on relatively high levels of radiation, which made it hard to establish the safety of low levels of radiation, and many scientists at that time believed that there may be a tolerance level, and that low doses of radiation may not be harmful. A later study in 1955 on mice exposed to low doses of radiation suggested that they may outlive control animals.

The interest in the effect of radiation intensified after the dropping of atomic bombs on Hiroshima and Nagasaki, and studies were conducted on the survivors. Although compelling evidence on the effect of low doses of radiation was hard to come by, by the late 1940s the idea of LNT had become more popular due to its mathematical simplicity. In 1954, the National Council on Radiation Protection and Measurements (NCRP) introduced the concept of maximum permissible dose. In 1958, the United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) assessed the LNT model and a threshold model, but noted the difficulty in acquiring "reliable information about the correlation between small doses and their effects either in individuals or in large populations". The United States Congress Joint Committee on Atomic Energy (JCAE) similarly could not establish whether there is a threshold or "safe" level for exposure; nevertheless, it introduced the concept of "As Low As Reasonably Achievable" (ALARA). ALARA would become a fundamental principle in radiation protection policy that implicitly accepts the validity of LNT. In 1959, the United States Federal Radiation Council (FRC) supported the concept of LNT extrapolation down to the low-dose region in its first report.

By the 1970s, the LNT model had become accepted as the standard in radiation protection practice by a number of bodies. In 1972, the first report of the National Academy of Sciences (NAS) Biological Effects of Ionizing Radiation (BEIR) committee, an expert panel that reviewed the available peer-reviewed literature, supported the LNT model on pragmatic grounds, noting that while the "dose-effect relationship for x rays and gamma rays may not be a linear function", the "use of linear extrapolation . . . may be justified on pragmatic grounds as a basis for risk estimation." In its seventh report, of 2006, NAS BEIR VII writes, "the committee concludes that the preponderance of information indicates that there will be some risk, even at low doses".

Radiation precautions and public policy

Radiation precautions have led to sunlight being listed as a carcinogen at all sun exposure rates, due to the ultraviolet component of sunlight, with no safe level of sunlight exposure being suggested, following the precautionary LNT model. According to a 2007 study submitted by the University of Ottawa to the Department of Health and Human Services in Washington, D.C., there is not enough information to determine a safe level of sun exposure at this time.

If a particular dose of radiation is found to produce one extra case of a type of cancer in every thousand people exposed, LNT projects that one thousandth of this dose will produce one extra case in every million people so exposed, and that one millionth of the original dose will produce one extra case in every billion people exposed. The conclusion is that any given dose equivalent of radiation will produce the same number of cancers, no matter how thinly it is spread. This allows the summation by dosimeters of all radiation exposure, without taking into consideration dose levels or dose rates.
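The worked example above can be checked with exact rational arithmetic. This minimal sketch assumes only the linear scaling the text describes: expected extra cases equal the base rate (one case per thousand people at the reference dose) multiplied by the fraction of that dose each person receives and by the number of people exposed. The function name `lnt_extra_cases` is hypothetical.

```python
from fractions import Fraction

# Starting point from the example: the reference dose gives
# one extra case per 1,000 people exposed.
CASES_PER_PERSON_AT_REF_DOSE = Fraction(1, 1000)


def lnt_extra_cases(dose_fraction: Fraction, people: int) -> Fraction:
    """Expected extra cases when `people` each receive `dose_fraction`
    of the reference dose; under LNT the risk scales linearly with dose."""
    return CASES_PER_PERSON_AT_REF_DOSE * dose_fraction * people


print(lnt_extra_cases(Fraction(1), 1_000))                     # 1
print(lnt_extra_cases(Fraction(1, 1_000), 1_000_000))          # 1
print(lnt_extra_cases(Fraction(1, 1_000_000), 1_000_000_000))  # 1
```

Using `fractions.Fraction` avoids floating-point rounding, so each scenario evaluates to exactly one case: the product of dose fraction and population is the same in all three.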

The model is simple to apply: a quantity of radiation can be translated into a number of deaths without any adjustment for the distribution of exposure, including the distribution of exposure within a single exposed individual. For example, a hot particle embedded in an organ (such as the lung) results in a very high dose in the cells directly adjacent to the hot particle, but a much lower whole-organ and whole-body dose. Thus, even if a safe low-dose threshold were found to exist at the cellular level for radiation-induced mutagenesis, the threshold would not exist for environmental pollution with hot particles, and could not be safely assumed to exist when the distribution of dose is unknown.
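The hot-particle argument can be illustrated with a small sketch that compares a per-cell dose distribution with its whole-organ average. All numbers (cell counts, doses and the per-cell threshold) are hypothetical values chosen for illustration, not measurements; the point is only that an average dose below a cellular threshold does not guarantee that every cell is below it.

```python
# All values are hypothetical, chosen only to illustrate the argument.
CELLS_IN_ORGAN = 1_000_000
CELLS_NEAR_PARTICLE = 1_000
LOCAL_DOSE_SV = 10.0       # dose to each cell adjacent to the hot particle
ELSEWHERE_DOSE_SV = 0.001  # dose to every other cell in the organ
CELL_THRESHOLD_SV = 0.1    # assumed per-cell threshold for mutagenesis

# Per-cell dose distribution: a small cluster of very high doses, the rest low.
cell_doses = ([LOCAL_DOSE_SV] * CELLS_NEAR_PARTICLE
              + [ELSEWHERE_DOSE_SV] * (CELLS_IN_ORGAN - CELLS_NEAR_PARTICLE))

mean_organ_dose = sum(cell_doses) / len(cell_doses)
cells_over_threshold = sum(1 for d in cell_doses if d > CELL_THRESHOLD_SV)

print(f"whole-organ mean dose: {mean_organ_dose:.4f} Sv")
print(f"mean below per-cell threshold: {mean_organ_dose < CELL_THRESHOLD_SV}")
print(f"cells above per-cell threshold: {cells_over_threshold}")
```

With these assumed values, the organ-averaged dose is about 0.011 Sv, well under the 0.1 Sv per-cell threshold, yet 1000 cells still exceed it.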

The linear no-threshold model is used to extrapolate the expected number of extra deaths caused by exposure to environmental radiation, and it therefore has a great impact on public policy. The model is used to translate any radiation release, like that from a "dirty bomb", into a number of lives lost, while any reduction in radiation exposure, for example as a consequence of radon detection, is translated into a number of lives saved. When the doses are very low, at natural background levels, the model predicts by extrapolation, in the absence of direct evidence, new cancers in only a very small fraction of the population; but for a large population, the number of lives affected is extrapolated into hundreds or thousands, and this can sway public policy.
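The extrapolation described above amounts to multiplying a collective dose (individual dose times number of people) by a linear risk coefficient. In the sketch below, `RISK_PER_SV` is an assumed round number used purely for illustration, not an official coefficient, and the scenario (1 mSv to each of 10 million people) is hypothetical. It shows how a negligible individual risk becomes hundreds of projected deaths, and how a dose reduction of the same size is counted as the same number of lives saved.

```python
RISK_PER_SV = 0.05  # assumed excess fatal cancers per person-sievert (illustrative)


def collective_dose(dose_per_person_sv: float, population: int) -> float:
    """Collective dose in person-sieverts."""
    return dose_per_person_sv * population


def lnt_projected_deaths(dose_per_person_sv: float, population: int) -> float:
    """LNT projection: collective dose times a linear risk coefficient.
    A negative dose change gives a negative result, read as lives saved."""
    return RISK_PER_SV * collective_dose(dose_per_person_sv, population)


DOSE_SV = 0.001          # hypothetical 1 mSv per person
POPULATION = 10_000_000  # hypothetical exposed population

individual_risk = RISK_PER_SV * DOSE_SV
projected_deaths = lnt_projected_deaths(DOSE_SV, POPULATION)
lives_saved = -lnt_projected_deaths(-DOSE_SV, POPULATION)

print(f"individual excess risk: {individual_risk:.0e}")
print(f"projected extra deaths: {projected_deaths:.0f}")
print(f"lives 'saved' by an equal reduction: {lives_saved:.0f}")
```

The UNSCEAR 2014 recommendation quoted earlier advises against exactly this multiplication when the individual doses are at or below natural background levels.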

A linear model has long been used in health physics to set maximum acceptable radiation exposures.

The United States-based National Council on Radiation Protection and Measurements (NCRP), a body commissioned by the United States Congress, recently released a report written by national experts in the field which states that radiation's effects should be considered to be proportional to the dose an individual receives, regardless of how small the dose is.

A 1958 analysis of two decades of research on the mutation rate of 1 million lab mice showed that six major hypotheses about ionizing radiation and gene mutation were not supported by the data. Its data were used in 1972 by the Biological Effects of Ionizing Radiation I committee to support the LNT model. However, it has been claimed that the data contained a fundamental error that was not revealed to the committee, and that the data would not in fact support the LNT model on the issue of mutations and may suggest a threshold dose rate below which radiation does not produce any mutations. The acceptance of the LNT model has been challenged by a number of scientists; see the Controversy section below.

Fieldwork

The LNT model and the alternatives to it each have plausible mechanisms that could bring them about, but definitive conclusions are hard to make given the difficulty of doing longitudinal studies involving large cohorts over long periods.

A 2003 review of the various studies, published in the authoritative Proceedings of the National Academy of Sciences, concludes that "given our current state of knowledge, the most reasonable assumption is that the cancer risks from low doses of x- or gamma-rays decrease linearly with decreasing dose."

A 2005 study of Ramsar, Iran (a region with very high levels of natural background radiation) showed that lung cancer incidence was lower in the high-radiation area than in seven surrounding regions with lower levels of natural background radiation. A fuller epidemiological study of the same region showed no difference in mortality for males, and a statistically insignificant increase for females.

A 2009 study of Swedish children exposed to fallout from Chernobyl while they were fetuses between 8 and 25 weeks of gestation concluded that the reduction in IQ at very low doses was greater than expected from a simple LNT model for radiation damage, indicating that the LNT model may underestimate the risk when it comes to neurological damage. However, studies in medical journals report that in Sweden in 1986, the year of the Chernobyl accident, the birth rate both increased and shifted toward births at "higher maternal age". More advanced maternal age in Swedish mothers was linked with a reduction in offspring IQ in a paper published in 2013. Neurological damage also has a different biology than cancer.

In a 2009 study, cancer rates among UK radiation workers were found to increase with higher recorded occupational radiation doses. The doses examined varied between 0 and 500 mSv received over the workers' working lives. With a confidence level of 90%, these results exclude both the possibility of no increase in risk and the possibility that the risk is 2-3 times that for A-bomb survivors. The cancer risk for these radiation workers was still lower than the average for persons in the UK, owing to the healthy worker effect.

A 2009 study focusing on the naturally high background radiation region of Karunagappalli, India concluded: "our cancer incidence study, together with previously reported cancer mortality studies in the HBR area of Yangjiang, China, suggests it is unlikely that estimates of risk at low doses are substantially greater than currently believed."

A 2011 meta-analysis further concluded that the "Total whole body radiation doses received over 70 years from the natural environment high background radiation areas in Kerala, India and Yanjiang, China are much smaller than [the non-tumour dose, "defined as the highest dose of radiation at which no statistically significant tumour increase was observed above the control level"] for the respective dose-rates in each district."

In 2011, an in vitro time-lapse study of the cellular response to low doses of radiation showed a strongly non-linear response of certain cellular repair mechanisms called radiation-induced foci (RIF). The study found that low doses of radiation prompted higher rates of RIF formation than high doses, and that after low-dose exposure, RIF continued to form after the radiation had ended.

In 2012, a historical cohort study of more than 175 000 patients without previous cancer who had been examined with head CT scans in the UK between 1985 and 2002 was published. The study, which investigated leukaemia and brain cancer, indicated a linear dose response in the low-dose region and gave qualitative estimates of risk that were in agreement with the Life Span Study (epidemiology data for low-linear energy transfer radiation).

In 2013, a data linkage study of 11 million Australians, including more than 680 000 people exposed to CT scans between 1985 and 2005, was published. The study confirmed the results of the 2012 UK study for leukaemia and brain cancer but also investigated other cancer types. The authors concluded that their results were generally consistent with the linear no-threshold theory.

Controversy

The LNT model has been contested by a number of scientists. It has been claimed that Hermann Joseph Muller, an early proponent of the model, intentionally ignored an early study that did not support the LNT model when he gave his 1946 Nobel Prize address advocating the model.

It is also argued that the LNT model has caused an irrational fear of radiation. In the wake of the 1986 Chernobyl accident in Ukraine, Europe-wide anxieties were fomented in pregnant mothers over the perception, reinforced by the LNT model, that their children would be born with a higher rate of mutations. As far afield as Denmark, hundreds of excess induced abortions of healthy fetuses were performed out of this no-threshold fear. Following the accident, however, studies of data sets approaching a million births in the EUROCAT database, divided into "exposed" and control groups, were assessed in 1999. As no Chernobyl impacts were detected, the researchers concluded that "in retrospect the widespread fear in the population about the possible effects of exposure on the unborn was not justified".

Despite studies from Germany and Turkey, the only robust evidence of negative pregnancy outcomes after the accident was these indirect effects of elective abortion, in Greece, Denmark, Italy and elsewhere, due to the anxieties created.

It was known at the time that very high doses, as in radiation therapy, can cause a physiological increase in the rate of pregnancy anomalies; however, human exposure data and animal testing suggest that the "malformation of organs appears to be a deterministic effect with a threshold dose" below which no rate increase is observed. A 1999 review of the link between the Chernobyl accident and teratology (birth defects) concludes that "there is no substantive proof regarding radiation‐induced teratogenic effects from the Chernobyl accident". It is also argued that the human body has defense mechanisms, such as DNA repair and programmed cell death, that would protect it against carcinogenesis due to low-dose exposures to carcinogens.

Ramsar, in Iran, is often quoted as a counterexample to LNT. Based on preliminary results, it was considered to have the highest natural background radiation levels on Earth, several times higher than the ICRP-recommended dose limits for radiation workers, while the local population did not seem to suffer any ill effects. However, the population of the high-radiation districts is small (about 1800 inhabitants) and receives an average of only 6 millisieverts per year, so cancer epidemiology data are too imprecise to draw any conclusions. On the other hand, there may be non-cancer effects from the background radiation, such as chromosomal aberrations or female infertility.

A 2011 study of cellular repair mechanisms supports the evidence against the linear no-threshold model. According to its authors, this study, published in the Proceedings of the National Academy of Sciences of the United States of America, "casts considerable doubt on the general assumption that risk to ionizing radiation is proportional to dose".

However, a 2011 review of studies addressing childhood leukaemia following exposure to ionizing radiation, including both diagnostic exposure and natural background exposure, concluded that existing risk factors, expressed as excess relative risk per sievert (ERR/Sv), are "broadly applicable" to low-dose or low-dose-rate exposure.
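An excess relative risk per sievert can be applied through a linear relative-risk model, RR(D) = 1 + (ERR/Sv) × D, in which the risk in an exposed group equals the baseline risk multiplied by RR. The sketch below assumes hypothetical values for `ERR_PER_SV` and `BASELINE_RISK`; they are placeholders for illustration, not figures from the review.

```python
# Hypothetical placeholder values, not results from the cited review.
ERR_PER_SV = 2.0        # assumed excess relative risk per sievert
BASELINE_RISK = 0.0005  # assumed risk of the outcome without exposure


def relative_risk(dose_sv: float) -> float:
    """Linear excess-relative-risk model: RR(D) = 1 + ERR/Sv * D."""
    return 1.0 + ERR_PER_SV * dose_sv


def exposed_risk(dose_sv: float) -> float:
    """Risk in an exposed group: baseline risk multiplied by RR(D)."""
    return BASELINE_RISK * relative_risk(dose_sv)


for dose in (0.0, 0.01, 0.1):  # Sv
    print(f"{dose:.2f} Sv: RR={relative_risk(dose):.3f}, "
          f"risk={exposed_risk(dose):.6f}")
```

Because the excess term is linear in dose, a coefficient estimated at higher doses transfers to low doses by simple proportion; whether that transfer is valid is exactly the question the review addresses.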

Several expert scientific panels have been convened on the accuracy of the LNT model at low dosage, and various organizations and bodies have stated their positions on this topic:

- Support

- In 2004, the United States National Research Council (part of the National Academy of Sciences) supported the linear no-threshold model and stated regarding radiation hormesis:

The assumption that any stimulatory hormetic effects from low doses of ionizing radiation will have a significant health benefit to humans that exceeds potential detrimental effects from the radiation exposure is unwarranted at this time.

- In 2005 the United States National Academies' National Research Council published its comprehensive meta-analysis of low-dose radiation research BEIR VII, Phase 2. In its press release the Academies stated:

The scientific research base shows that there is no threshold of exposure below which low levels of ionizing radiation can be demonstrated to be harmless or beneficial.

- The National Council on Radiation Protection and Measurements (a body commissioned by the United States Congress) endorsed the LNT model in a 2001 report that attempted to survey existing literature critical of the model.

- The United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) wrote in its 2000 report:

Until the [...] uncertainties on low-dose response are resolved, the Committee believes that an increase in the risk of tumour induction proportionate to the radiation dose is consistent with developing knowledge and that it remains, accordingly, the most scientifically defensible approximation of low-dose response. However, a strictly linear dose response should not be expected in all circumstances.

- The United States Environmental Protection Agency also endorses the LNT model in its 2011 report on radiogenic cancer risk:

Underlying the risk models is a large body of epidemiological and radiobiological data. In general, results from both lines of research are consistent with a linear, no-threshold dose (LNT) response model in which the risk of inducing a cancer in an irradiated tissue by low doses of radiation is proportional to the dose to that tissue.

- Oppose

A number of organisations disagree with using the linear no-threshold model to estimate risk from environmental and occupational low-level radiation exposure:

- The French Academy of Sciences (Académie des Sciences) and the National Academy of Medicine (Académie Nationale de Médecine) published a report in 2005 (at the same time as the BEIR VII report in the United States) that rejected the linear no-threshold model in favor of a threshold dose response and a significantly reduced risk at low radiation exposure:

In conclusion, this report raises doubts on the validity of using LNT for evaluating the carcinogenic risk of low doses (< 100 mSv) and even more for very low doses (< 10 mSv). The LNT concept can be a useful pragmatic tool for assessing rules in radioprotection for doses above 10 mSv; however since it is not based on biological concepts of our current knowledge, it should not be used without precaution for assessing by extrapolation the risks associated with low and even more so, with very low doses (< 10 mSv), especially for benefit-risk assessments imposed on radiologists by the European directive 97-43.

- The Health Physics Society's position statement first adopted in January 1996, as revised in July 2010, states:

In accordance with current knowledge of radiation health risks, the Health Physics Society recommends against quantitative estimation of health risks below an individual dose of 5 rem (50 mSv) in one year or a lifetime dose of 10 rem (100 mSv) above that received from natural sources. Doses from natural background radiation in the United States average about 0.3 rem (3 mSv) per year. A dose of 5 rem (50 mSv) will be accumulated in the first 17 years of life and about 25 rem (250 mSv) in a lifetime of 80 years. Estimation of health risk associated with radiation doses that are of similar magnitude as those received from natural sources should be strictly qualitative and encompass a range of hypothetical health outcomes, including the possibility of no adverse health effects at such low levels.

- The American Nuclear Society recommended further research on the Linear No Threshold Hypothesis before making adjustments to current radiation protection guidelines, concurring with the Health Physics Society's position that:

There is substantial and convincing scientific evidence for health risks at high dose. Below 10 rem or 100 mSv (which includes occupational and environmental exposures) risks of health effects are either too small to be observed or are non-existent.

- Intermediate

The US Nuclear Regulatory Commission takes an intermediate position: it "accepts the LNT hypothesis as a conservative model for estimating radiation risk", while noting that "public health data do not absolutely establish the occurrence of cancer following exposure to low doses and dose rates — below about 10,000 mrem (100 mSv). Studies of occupational workers who are chronically exposed to low levels of radiation above normal background have shown no adverse biological effects."
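The figures in the Health Physics Society and NRC statements above mix legacy and SI units (1 rem = 10 mSv, so 1 mrem = 0.01 mSv). A short Python sketch can check the conversions and the HPS arithmetic for accumulated natural background, assuming the statement's US average of 3 mSv per year. The helper names are hypothetical.

```python
MSV_PER_REM = 10.0             # 1 rem = 10 mSv
BACKGROUND_MSV_PER_YEAR = 3.0  # US average natural background, per the HPS statement


def rem_to_msv(rem: float) -> float:
    """Convert rem to millisieverts."""
    return rem * MSV_PER_REM


def mrem_to_msv(mrem: float) -> float:
    """Convert millirem to millisieverts (1 rem = 1000 mrem)."""
    return rem_to_msv(mrem / 1000.0)


def accumulated_background_msv(years: float) -> float:
    """Natural background dose accumulated at a constant annual rate."""
    return BACKGROUND_MSV_PER_YEAR * years


print(rem_to_msv(5), rem_to_msv(10), rem_to_msv(0.3))  # HPS: 50, 100 and 3 mSv
print(mrem_to_msv(10_000))                              # NRC: 100 mSv
print(accumulated_background_msv(17))                   # 51.0, roughly the 50 mSv cited
print(accumulated_background_msv(80))                   # 240.0, close to "about 250 mSv"
```

The small gaps (51 vs. 50 mSv, 240 vs. about 250 mSv) come from the rounding in the statement itself.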

Mental health effects

The consequences of low-level radiation are often more psychological than radiological. Because damage from very-low-level radiation cannot be detected, people exposed to it are left in anguished uncertainty about what will happen to them. Many believe they have been fundamentally contaminated for life and may refuse to have children for fear of birth defects. They may be shunned by others in their community who fear a sort of mysterious contagion.

Forced evacuation from a radiation or nuclear accident may lead to social isolation, anxiety, depression, psychosomatic medical problems, reckless behavior, and even suicide. Such was the outcome of the 1986 Chernobyl nuclear disaster in Ukraine. A comprehensive 2005 study concluded that "the mental health impact of Chernobyl is the largest public health problem unleashed by the accident to date". Frank N. von Hippel, a U.S. scientist, commented on the 2011 Fukushima nuclear disaster, saying that "fear of ionizing radiation could have long-term psychological effects on a large portion of the population in the contaminated areas".

Such great psychological danger does not accompany other materials that put people at risk of cancer and other deadly illnesses. Visceral fear is not widely aroused by, for example, the daily emissions from coal burning, although, as a National Academy of Sciences study found, these emissions cause 10,000 premature deaths a year in the US. It is "only nuclear radiation that bears a huge psychological burden — for it carries a unique historical legacy".