Deepfakes (a portmanteau of 'deep learning' and 'fake') are images, videos, or audio that have been edited or generated using artificial intelligence tools, and which may depict real or non-existent people. They are a type of synthetic media and a modern form of media prank.

While the act of creating fake content is not new, deepfakes uniquely leverage the technological tools and techniques of machine learning and artificial intelligence, including facial recognition algorithms and artificial neural networks such as variational autoencoders (VAEs) and generative adversarial networks (GANs).

In turn the field of image forensics develops techniques to detect manipulated images. Deepfakes have garnered widespread attention for their potential use in creating child sexual abuse material, celebrity pornographic videos, revenge porn, fake news, hoaxes, bullying, and financial fraud.

Academics have raised concerns about the potential for deepfakes to be used to promote disinformation and hate speech, and to interfere with elections. The information technology industry and governments have responded with recommendations to detect and limit their use.

From traditional entertainment to gaming, deepfake technology has evolved to be increasingly convincing and available to the public, allowing for the disruption of the entertainment and media industries.

History

Photo manipulation was developed in the 19th century and soon applied to motion pictures. Technology steadily improved during the 20th century, and more quickly with the advent of digital video.

Deepfake technology has been developed by researchers at academic institutions beginning in the 1990s, and later by amateurs in online communities. More recently the methods have been adopted by industry.

Academic research

Academic research related to deepfakes is split between the field of computer vision, a sub-field of computer science, which develops techniques for creating and identifying deepfakes, and humanities and social science approaches that study the social, ethical and aesthetic implications of deepfakes.

Social science and humanities approaches to deepfakes

In cinema studies, deepfakes demonstrate how "the human face is emerging as a central object of ambivalence in the digital age". Video artists have used deepfakes to "playfully rewrite film history by retrofitting canonical cinema with new star performers". Film scholar Christopher Holliday analyses how switching out the gender and race of performers in familiar movie scenes destabilizes gender classifications and categories. The idea of "queering" deepfakes is also discussed in Oliver M. Gingrich's discussion of media artworks that use deepfakes to reframe gender, including British artist Jake Elwes' Zizi: Queering the Dataset, an artwork that uses deepfakes of drag queens to intentionally play with gender. The aesthetic potentials of deepfakes are also beginning to be explored. Theatre historian John Fletcher notes that early demonstrations of deepfakes are presented as performances, and situates these in the context of theater, discussing "some of the more troubling paradigm shifts" that deepfakes represent as a performance genre.

Philosophers and media scholars have discussed the ethics of deepfakes, especially in relation to pornography. Media scholar Emily van der Nagel draws upon research in photography studies on manipulated images to discuss verification systems that allow women to consent to uses of their images.

Beyond pornography, deepfakes have been framed by philosophers as an "epistemic threat" to knowledge and thus to society. There are several other suggestions for how to deal with the risks that deepfakes give rise to, not only in pornography but also for corporations, politicians and others, of "exploitation, intimidation, and personal sabotage", and there are several scholarly discussions of potential legal and regulatory responses in both legal studies and media studies. In psychology and media studies, scholars discuss the effects of disinformation that uses deepfakes, and the social impact of deepfakes.

While most English-language academic studies of deepfakes focus on the Western anxieties about disinformation and pornography, digital anthropologist Gabriele de Seta has analyzed the Chinese reception of deepfakes, which are known as huanlian, which translates to "changing faces". The Chinese term does not contain the "fake" of the English deepfake, and de Seta argues that this cultural context may explain why the Chinese response has been more about practical regulatory responses to "fraud risks, image rights, economic profit, and ethical imbalances".

Computer science research on deepfakes

An early landmark project was the Video Rewrite program, published in 1997. The program modified existing video footage of a person speaking to depict that person mouthing the words contained in a different audio track. It was the first system to fully automate this kind of facial reanimation, and it did so using machine learning techniques to make connections between the sounds produced by a video's subject and the shape of the subject's face.

Contemporary academic projects have focused on creating more realistic videos and on improving techniques. The "Synthesizing Obama" program, published in 2017, modifies video footage of former president Barack Obama to depict him mouthing the words contained in a separate audio track. The project lists as a main research contribution its photorealistic technique for synthesizing mouth shapes from audio. The Face2Face program, published in 2016, modifies video footage of a person's face to depict them mimicking the facial expressions of another person in real time. The project lists as a main research contribution the first method for re-enacting facial expressions in real time using a camera that does not capture depth, making it possible for the technique to be performed using common consumer cameras.

In August 2018, researchers at the University of California, Berkeley published a paper introducing a fake dancing app that can create the impression of masterful dancing ability using AI. This project expands the application of deepfakes to the entire body; previous works focused on the head or parts of the face.

Researchers have also shown that deepfakes are expanding into other domains such as tampering with medical imagery. In this work, it was shown how an attacker can automatically inject or remove lung cancer in a patient's 3D CT scan. The result was so convincing that it fooled three radiologists and a state-of-the-art lung cancer detection AI. To demonstrate the threat, the authors successfully performed the attack on a hospital in a White hat penetration test.

A survey of deepfakes, published in May 2020, provides a timeline of how the creation and detection of deepfakes have advanced over the last few years. The survey identifies that researchers have been focusing on resolving the following challenges of deepfake creation:

- Generalization. High-quality deepfakes are often achieved by training on hours of footage of the target. This challenge is to minimize the amount of training data and the time to train the model required to produce quality images and to enable the execution of trained models on new identities (unseen during training).

- Paired Training. Training a supervised model can produce high-quality results, but requires data pairing. This is the process of finding examples of inputs and their desired outputs for the model to learn from. Data pairing is laborious and impractical when training on multiple identities and facial behaviors. Some solutions include self-supervised training (using frames from the same video), the use of unpaired networks such as Cycle-GAN, or the manipulation of network embeddings.

- Identity leakage. This is where the identity of the driver (i.e., the actor controlling the face in a reenactment) is partially transferred to the generated face. Some solutions proposed include attention mechanisms, few-shot learning, disentanglement, boundary conversions, and skip connections.

- Occlusions. When part of the face is obstructed with a hand, hair, glasses, or any other item then artifacts can occur. A common occlusion is a closed mouth which hides the inside of the mouth and the teeth. Some solutions include image segmentation during training and in-painting.

- Temporal coherence. In videos containing deepfakes, artifacts such as flickering and jitter can occur because the network has no context of the preceding frames. Some researchers provide this context or use novel temporal coherence losses to help improve realism. As the technology improves, the interference is diminishing.
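The temporal coherence challenge in the list above can be illustrated with a small sketch. It is not taken from the survey: the function name `temporal_coherence_loss` and the representation of frames as flat lists of pixel values are assumptions made for illustration. The idea is to penalize frame-to-frame changes in the generated video that do not also occur in a reference video, which is one simple way to discourage flicker.

```python
def temporal_coherence_loss(generated, reference):
    """Mean squared mismatch between the frame-to-frame changes of two videos.

    Both arguments are lists of frames; each frame is a flat list of pixel
    values. This is a hypothetical, simplified loss for illustration only.
    """
    if len(generated) != len(reference) or len(generated) < 2:
        raise ValueError("need two videos with the same number of frames (>= 2)")

    total, count = 0.0, 0
    for t in range(1, len(generated)):
        frames = zip(generated[t - 1], generated[t], reference[t - 1], reference[t])
        for g_prev, g_cur, r_prev, r_cur in frames:
            # Compare how much each pixel changed in the generated video
            # with how much it changed in the reference video.
            mismatch = (g_cur - g_prev) - (r_cur - r_prev)
            total += mismatch * mismatch
            count += 1
    return total / count
```

A generated video that changes in step with the reference scores zero even if its pixel values differ by a constant offset, while a video whose pixels jump independently of the reference scores higher.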

Overall, deepfakes are expected to have several implications in media and society, media production, media representations, media audiences, gender, law, and regulation, and politics.

Amateur development

The term deepfakes originated around the end of 2017 from a Reddit user named "deepfakes". He, as well as others in the Reddit community r/deepfakes, shared deepfakes they created; many videos involved celebrities' faces swapped onto the bodies of actors in pornographic videos, while non-pornographic content included many videos with actor Nicolas Cage's face swapped into various movies.

Other online communities remain, including Reddit communities that do not share pornography, such as r/SFWdeepfakes (short for "safe for work deepfakes"), in which community members share deepfakes depicting celebrities, politicians, and others in non-pornographic scenarios. Other online communities continue to share pornography on platforms that have not banned deepfake pornography.

Commercial development

In January 2018, a proprietary desktop application called FakeApp was launched. This app allows users to easily create and share videos with their faces swapped with each other. As of 2019, FakeApp has been superseded by open-source alternatives such as Faceswap, command line-based DeepFaceLab, and web-based apps such as DeepfakesWeb.com.

Larger companies started to use deepfakes. Corporate training videos can be created using deepfaked avatars and their voices, for example Synthesia, which uses deepfake technology with avatars to create personalized videos. The mobile app Momo created the application Zao which allows users to superimpose their face on television and movie clips with a single picture. As of 2019 the Japanese AI company DataGrid made a full body deepfake that could create a person from scratch.

As of 2020, audio deepfakes and AI software capable of detecting deepfakes and cloning human voices after 5 seconds of listening time also exist. A mobile deepfake app, Impressions, was launched in March 2020. It was the first app for the creation of celebrity deepfake videos from mobile phones.

Resurrection

Deepfake technology's ability to fabricate messages and actions of others can include deceased individuals. On 29 October 2020, Kim Kardashian posted a video featuring a hologram of her late father Robert Kardashian created by the company Kaleida, which used a combination of performance, motion tracking, SFX, VFX and DeepFake technologies to create the illusion.

In 2020, a deepfake video of Joaquin Oliver, a victim of the Parkland shooting, was created as part of a gun safety campaign. Oliver's parents partnered with nonprofit Change the Ref and McCann Health to produce a video in which Oliver encourages people to support gun safety legislation and politicians who do so as well.

In 2022, a deepfake video of Elvis Presley was used on the program America's Got Talent 17.

A TV commercial used a deepfake video of Beatles member John Lennon, who was murdered in 1980.

Techniques

Deepfakes rely on a type of neural network called an autoencoder. An autoencoder consists of an encoder, which reduces an image to a lower-dimensional latent space, and a decoder, which reconstructs the image from the latent representation. Deepfakes utilize this architecture by having a universal encoder which encodes a person into the latent space. The latent representation contains key information about their facial features and body posture. This can then be decoded with a model trained specifically for the target. This means the target's detailed information will be superimposed on the underlying facial and body features of the original video, represented in the latent space.
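The data flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a working deepfake system: the class name `FaceSwapAutoencoder` and its methods are hypothetical, the encoder and decoders are single untrained linear layers with random weights, and images are flat lists of numbers. Real systems use deep convolutional networks trained on many frames of each identity.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    """Small random weights; stands in for trained parameters (assumption)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class FaceSwapAutoencoder:
    """One shared encoder plus one decoder per identity (hypothetical sketch)."""

    def __init__(self, image_dim, latent_dim, identities, seed=0):
        rng = random.Random(seed)
        # The universal encoder maps any face to the shared latent space.
        self.encoder = random_matrix(latent_dim, image_dim, rng)
        # Each identity gets its own decoder, trained only on that person.
        self.decoders = {
            name: random_matrix(image_dim, latent_dim, rng) for name in identities
        }

    def encode(self, image):
        return matvec(self.encoder, image)

    def decode(self, latent, identity):
        return matvec(self.decoders[identity], latent)

    def swap(self, source_image, target_identity):
        # Encode the source frame, then decode with the target's decoder,
        # so the target's appearance is rendered on the source's pose.
        return self.decode(self.encode(source_image), target_identity)
```

Calling `swap(frame, "target")` on a frame of the source person routes the shared latent code through the target's decoder, which is the step that produces the swapped face once the weights have been trained.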

A popular upgrade to this architecture attaches a generative adversarial network to the decoder. A GAN trains a generator, in this case the decoder, and a discriminator in an adversarial relationship. The generator creates new images from the latent representation of the source material, while the discriminator attempts to determine whether or not the image is generated. This causes the generator to create images that mimic reality extremely well, as any defects would be caught by the discriminator. Both algorithms improve constantly in a zero-sum game. This makes deepfakes difficult to combat as they are constantly evolving; any time a defect is identified, it can be corrected.
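The adversarial relationship can be made concrete with a deliberately tiny example. The sketch below is an assumption-laden toy, not the training procedure of any deepfake tool: the "images" are single numbers, the generator is the line G(z) = a*z + b, the discriminator is a logistic unit D(x) = sigmoid(w*x + c), and the names `TinyGAN` and `train` are hypothetical. It uses the standard binary cross-entropy losses with hand-derived gradients.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class TinyGAN:
    """1-D toy GAN: generator G(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        """Estimated probability that x is a real sample."""
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, x_real, z, lr):
        # Minimise -log D(x_real) - log(1 - D(G(z))).
        x_fake = self.generate(z)
        d_real = self.discriminate(x_real)
        d_fake = self.discriminate(x_fake)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        self.w -= lr * grad_w
        self.c -= lr * grad_c

    def generator_step(self, z, lr):
        # Minimise -log D(G(z)): push fakes towards what D calls real.
        x_fake = self.generate(z)
        d_fake = self.discriminate(x_fake)
        grad_x = (d_fake - 1.0) * self.w
        self.a -= lr * grad_x * z
        self.b -= lr * grad_x


def train(gan, steps=200, lr=0.05, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on random samples."""
    rng = random.Random(seed)
    for _ in range(steps):
        x_real = rng.gauss(real_mean, 0.5)
        gan.discriminator_step(x_real, rng.gauss(0.0, 1.0), lr)
        gan.generator_step(rng.gauss(0.0, 1.0), lr)
    return gan
```

Each discriminator update makes real and generated samples easier to tell apart, and each generator update moves the generated samples in the direction the discriminator currently rates as more real. Toy setups like this one can oscillate rather than settle, so the sketch shows the mechanics of the alternating updates, not a guarantee of convergence.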

Applications

Acting

Digital clones of professional actors have appeared in films before, and progress in deepfake technology is expected to further the accessibility and effectiveness of such clones. The use of AI technology was a major issue in the 2023 SAG-AFTRA strike, as new techniques enabled the capability of generating and storing a digital likeness to use in place of actors.

Disney has improved their visual effects using high-resolution deepfake face swapping technology. Disney improved their technology through progressive training programmed to identify facial expressions, implementing a face-swapping feature, and iterating in order to stabilize and refine the output. This high-resolution deepfake technology saves significant operational and production costs. Disney's deepfake generation model can produce AI-generated media at a 1024 x 1024 resolution, as opposed to common models that produce media at a 256 x 256 resolution. The technology allows Disney to de-age characters or revive deceased actors. Similar technology was initially used by fans to unofficially insert faces into existing media, such as overlaying Harrison Ford's young face onto Han Solo's face in Solo: A Star Wars Story. Disney used deepfakes for the characters of Princess Leia and Grand Moff Tarkin in Rogue One.

The 2020 documentary Welcome to Chechnya used deepfake technology to obscure the identity of the people interviewed, so as to protect them from retaliation.

Creative Artists Agency has developed a facility to capture the likeness of an actor "in a single day", to develop a digital clone of the actor, which would be controlled by the actor or their estate alongside other personality rights.

Companies which have used digital clones of professional actors in advertisements include Puma, Nike and Procter & Gamble.

Deepfake technology allowed David Beckham to appear in a campaign, speaking in nearly nine languages, to raise awareness of the fight against malaria.

In the 2024 Indian Tamil science fiction action thriller The Greatest of All Time, the teenage version of Vijay's character Jeevan is portrayed by Ayaz Khan. Vijay's teenage face was then created using AI deepfake technology.

Art

In March 2018 the multidisciplinary artist Joseph Ayerle published the video artwork Un'emozione per sempre 2.0 (English title: The Italian Game). The artist worked with deepfake technology to create an AI actor, a synthetic version of 80s movie star Ornella Muti, traveling in time from 1978 to 2018. The Massachusetts Institute of Technology referred to this artwork in the study "Collective Wisdom". The artist used Ornella Muti's time travel to explore generational reflections, while also investigating questions about the role of provocation in the world of art. For the technical realization Ayerle used scenes of photo model Kendall Jenner. The program replaced Jenner's face with an AI-calculated face of Ornella Muti. As a result, the AI actor has the face of the Italian actor Ornella Muti and the body of Kendall Jenner.

Deepfakes are also being used in education and media to create realistic videos and interactive content, which offer new ways to engage audiences. However, they also bring risks, especially for spreading false information, which has led to calls for responsible use and clear rules.

Deepfakes have been widely used in satire or to parody celebrities and politicians. The 2020 webseries Sassy Justice, created by Trey Parker and Matt Stone, heavily features the use of deepfaked public figures to satirize current events and raise awareness of deepfake technology.

Blackmail

Deepfakes can be used to generate blackmail materials that falsely incriminate a victim. A report by the American Congressional Research Service warned that deepfakes could be used to blackmail elected officials or those with access to classified information for espionage or influence purposes.

Alternatively, since the fakes cannot reliably be distinguished from genuine materials, victims of actual blackmail can now claim that the true artifacts are fakes, granting them plausible deniability. The effect is to void credibility of existing blackmail materials, which erases loyalty to blackmailers and destroys the blackmailer's control. This phenomenon can be termed "blackmail inflation", since it "devalues" real blackmail, rendering it worthless. It is possible to utilize commodity GPU hardware with a small software program to generate this blackmail content for any number of subjects in huge quantities, driving up the supply of fake blackmail content limitlessly and in highly scalable fashion.

Entertainment

On June 8, 2022, Daniel Emmet, a former AGT contestant, teamed up with the AI startup Metaphysic AI to create a hyperrealistic deepfake of Simon Cowell. The deepfake made it appear that Cowell, notoriously known for severely critiquing contestants, was on stage performing "You're The Inspiration" by Chicago. Emmet sang on stage as an image of Simon Cowell emerged on the screen behind him in flawless synchronicity.

On August 30, 2022, Metaphysic AI had 'deep-fake' Simon Cowell, Howie Mandel and Terry Crews singing opera on stage.

On September 13, 2022, Metaphysic AI performed with a synthetic version of Elvis Presley for the finals of America's Got Talent.

The MIT artificial intelligence project 15.ai has been used for content creation for multiple Internet fandoms, particularly on social media.

In 2023 the bands ABBA and KISS partnered with Industrial Light & Magic and Pophouse Entertainment to develop deepfake avatars capable of performing virtual concerts.

Fraud and scams

Fraudsters and scammers make use of deepfakes to trick people into fake investment schemes, financial fraud, cryptocurrency schemes, sending money, and following endorsements. The likenesses of celebrities and politicians have been used for large-scale scams, as well as those of private individuals, which are used in spearphishing attacks. According to the Better Business Bureau, deepfake scams are becoming more prevalent. These scams are responsible for an estimated $12 billion in fraud losses globally. According to a recent report, these numbers are expected to reach $40 billion over the next three years.

Fake endorsements have misused the identities of celebrities like Taylor Swift, Tom Hanks, Oprah Winfrey, and Elon Musk; news anchors like Gayle King and Sally Bundock; and politicians like Lee Hsien Loong and Jim Chalmers. Videos of them have appeared in online advertisements on YouTube, Facebook, and TikTok, which have policies against synthetic and manipulated media. Ads running these videos are seen by millions of people. A single Medicare fraud campaign had been viewed more than 195 million times across thousands of videos. Deepfakes have been used for: a fake giveaway of Le Creuset cookware for a "shipping fee", in which victims never received the products but incurred hidden monthly charges; weight-loss gummies that charge significantly more than what was said; a fake iPhone giveaway; and fraudulent get-rich-quick, investment, and cryptocurrency schemes.

Many ads pair AI voice cloning with "decontextualized video of the celebrity" to mimic authenticity. Others use a whole clip from a celebrity before moving to a different actor or voice. Some scams may involve real-time deepfakes.

Celebrities have been warning people of these fake endorsements, and to be more vigilant against them. Celebrities are unlikely to file lawsuits against every person operating deepfake scams, as "finding and suing anonymous social media users is resource intensive," though cease and desist letters to social media companies work in getting videos and ads taken down.

Audio deepfakes have been used as part of social engineering scams, fooling people into thinking they are receiving instructions from a trusted individual. In 2019, a U.K.-based energy firm's CEO was scammed over the phone when he was ordered to transfer €220,000 into a Hungarian bank account by an individual who reportedly used audio deepfake technology to impersonate the voice of the firm's parent company's chief executive.

As of 2023, the combination of advances in deepfake technology, which could clone an individual's voice from a recording of a few seconds to a minute, and new text generation tools enabled automated impersonation scams, targeting victims using a convincing digital clone of a friend or relative.

Identity masking

Audio deepfakes can be used to mask a user's real identity. In online gaming, for example, a player may want to choose a voice that sounds like their in-game character when speaking to other players. Those who are subject to harassment, such as women, children, and transgender people, can use these "voice skins" to hide their gender or age.

Memes

In 2020, an internet meme emerged utilizing deepfakes to generate videos of people singing the chorus of "Baka Mitai" (ばかみたい), a song from the game Yakuza 0 in the video game series Like a Dragon. In the series, the melancholic song is sung by the player in a karaoke minigame. Most iterations of this meme use a 2017 video uploaded by user Dobbsyrules, who lip syncs the song, as a template.

Politics

Deepfakes have been used to misrepresent well-known politicians in videos.

- In February 2018, in separate videos, the face of the Argentine President Mauricio Macri was replaced by the face of Adolf Hitler, and Angela Merkel's face was replaced with Donald Trump's.

- In April 2018, Jordan Peele collaborated with Buzzfeed to create a deepfake of Barack Obama with Peele's voice; it served as a public service announcement to increase awareness of deepfakes.

- In January 2019, Fox affiliate KCPQ aired a deepfake of Trump during his Oval Office address, mocking his appearance and skin colour. The employee found responsible for the video was subsequently fired.

- In June 2019, the United States House Intelligence Committee held hearings on the potential malicious use of deepfakes to sway elections.

- In April 2020, the Belgian branch of Extinction Rebellion published a deepfake video of Belgian Prime Minister Sophie Wilmès on Facebook. The video promoted a possible link between deforestation and COVID-19. It had more than 100,000 views within 24 hours and received many comments. On the Facebook page where the video appeared, many users interpreted the deepfake video as genuine.

- During the 2020 US presidential campaign, many deepfakes surfaced purporting to show Joe Biden in cognitive decline (falling asleep during an interview, getting lost, and misspeaking), all bolstering rumors of his decline.

- During the 2020 Delhi Legislative Assembly election campaign, the Delhi Bharatiya Janata Party used similar technology to distribute a version of an English-language campaign advertisement by its leader, Manoj Tiwari, translated into Haryanvi to target Haryana voters. A voiceover was provided by an actor, and AI trained using video of Tiwari speeches was used to lip-sync the video to the new voiceover. A party staff member described it as a "positive" use of deepfake technology, which allowed them to "convincingly approach the target audience even if the candidate didn't speak the language of the voter."

- In 2020, Bruno Sartori produced deepfakes parodying politicians like Jair Bolsonaro and Donald Trump.

- In April 2021, politicians in a number of European countries were approached by pranksters Vovan and Lexus, who are accused by critics of working for the Russian state. They impersonated Leonid Volkov, a Russian opposition politician and chief of staff of the Russian opposition leader Alexei Navalny's campaign, allegedly through deepfake technology. However, the pair told The Verge that they did not use deepfakes, and just used a look-alike.

- In May 2023, a deepfake video of Vice President Kamala Harris supposedly slurring her words and speaking nonsensically about today, tomorrow and yesterday went viral on social media.

- In June 2023, in the United States, Ron DeSantis's presidential campaign used a deepfake to misrepresent Donald Trump.

- In March 2024, during India's state assembly elections, deepfake technology was widely employed by political candidates to reach out to voters. Many politicians used AI-generated deepfakes created by an Indian startup, The Indian Deepfaker, founded by Divyendra Singh Jadoun, to translate their speeches into multiple regional languages, allowing them to engage with diverse linguistic communities across the country. This surge in the use of deepfakes for political campaigns marked a significant shift in electioneering tactics in India.

Pornography

In 2017, deepfake pornography prominently surfaced on the Internet, particularly on Reddit. As of 2019, many deepfakes on the internet feature pornography of female celebrities whose likeness is typically used without their consent. A report published in October 2019 by Dutch cybersecurity startup Deeptrace estimated that 96% of all deepfakes online were pornographic. In 2018, a Daisy Ridley deepfake, among others, first captured attention. As of October 2019, most of the deepfake subjects on the internet were British and American actors. However, around a quarter of the subjects were South Korean, the majority of whom were K-pop stars.

In June 2019, a downloadable Windows and Linux application called DeepNude was released that used neural networks, specifically generative adversarial networks, to remove clothing from images of women. The app had both a paid and unpaid version, the paid version costing $50. On 27 June the creators removed the application and refunded consumers.

Female celebrities are often a main target when it comes to deepfake pornography. In 2023, deepfake porn videos appeared online of Emma Watson and Scarlett Johansson in a face swapping app. In 2024, deepfake porn images circulated online of Taylor Swift.

Academic studies have reported that women, LGBT people and people of colour (particularly activists, politicians and those questioning power) are at higher risk of being targets of promulgation of deepfake pornography.

Social media

Deepfakes have begun to see use in popular social media platforms, notably through Zao, a Chinese deepfake app that allows users to substitute their own faces onto those of characters in scenes from films and television shows such as Romeo + Juliet and Game of Thrones. The app originally faced scrutiny over its invasive user data and privacy policy, after which the company put out a statement claiming it would revise the policy. In January 2020 Facebook announced that it was introducing new measures to counter this on its platforms.

The Congressional Research Service cited unspecified evidence as showing that foreign intelligence operatives used deepfakes to create social media accounts with the purposes of recruiting individuals with access to classified information.

In 2021, realistic deepfake videos of actor Tom Cruise were released on TikTok, which went viral and garnered tens of millions of views. The deepfake videos featured an "artificial intelligence-generated doppelganger" of Cruise doing various activities such as teeing off at the golf course, showing off a coin trick, and biting into a lollipop. The creator of the clips, Belgian VFX artist Chris Umé, said he first got interested in deepfakes in 2018 and saw the "creative potential" of them.

Sockpuppets

Deepfake photographs can be used to create sockpuppets, non-existent people, who are active both online and in traditional media. A deepfake photograph appears to have been generated together with a legend for an apparently non-existent person named Oliver Taylor, whose identity was described as a university student in the United Kingdom. The Oliver Taylor persona submitted opinion pieces in several newspapers and was active in online media attacking a British legal academic and his wife, as "terrorist sympathizers." The academic had drawn international attention in 2018 when he commenced a lawsuit in Israel against NSO, a surveillance company, on behalf of people in Mexico who alleged they were victims of NSO's phone hacking technology. Reuters could find only scant records for Oliver Taylor and "his" university had no records for him. Many experts agreed that the profile photo is a deepfake. Several newspapers have not retracted articles attributed to him or removed them from their websites. It is feared that such techniques are a new battleground in disinformation.

Collections of deepfake photographs of non-existent people on social networks have also been deployed as part of Israeli partisan propaganda. The Facebook page "Zionist Spring" featured photos of non-existent persons along with their "testimonies" purporting to explain why they have abandoned their left-leaning politics to embrace right-wing politics, and the page also contained large numbers of posts from Prime Minister of Israel Benjamin Netanyahu and his son and from other Israeli right wing sources. The photographs appear to have been generated by "human image synthesis" technology, computer software that takes data from photos of real people to produce a realistic composite image of a non-existent person. In much of the "testimonies," the reason given for embracing the political right was the shock of learning of alleged incitement to violence against the prime minister. Right wing Israeli television broadcasters then broadcast the "testimonies" of these non-existent people based on the fact that they were being "shared" online. The broadcasters aired these "testimonies" despite being unable to find such people, explaining "Why does the origin matter?" Other Facebook fake profiles—profiles of fictitious individuals—contained material that allegedly contained such incitement against the right wing prime minister, in response to which the prime minister complained that there was a plot to murder him.

Concerns and countermeasures

Though fake photos have long been plentiful, faking motion pictures has been more difficult, and the presence of deepfakes increases the difficulty of classifying videos as genuine or not. AI researcher Alex Champandard has said people should know how fast things can be corrupted with deepfake technology, and that the problem is not a technical one, but rather one to be solved by trust in information and journalism. Computer science associate professor Hao Li of the University of Southern California states that deepfakes created for malicious use, such as fake news, will be even more harmful if nothing is done to spread awareness of deepfake technology. Li predicted that genuine videos and deepfakes would become indistinguishable in as soon as half a year, as of October 2019, due to rapid advancement in artificial intelligence and computer graphics. Former Google fraud czar Shuman Ghosemajumder has called deepfakes an area of "societal concern" and said that they will inevitably evolve to a point at which they can be generated automatically, and an individual could use that technology to produce millions of deepfake videos.

Credibility of information

A primary pitfall is that humanity could fall into an age in which it can no longer be determined whether a medium's content corresponds to the truth. Deepfakes are one of a number of tools for disinformation attacks, creating doubt, and undermining trust. They have the potential to interfere with democratic functions in societies, such as identifying collective agendas, debating issues, informing decisions, and solving problems through the exercise of political will. People may also start to dismiss real events as fake.

Defamation

Deepfakes can severely damage the individuals or entities they target. They are often aimed at one individual, or at that individual's relationships with others, in the hope of creating a narrative powerful enough to influence public opinion or beliefs. This can be done through deepfake voice phishing, which manipulates audio to create fake phone calls or conversations. Another method is fabricated private remarks, in which media is manipulated to show individuals voicing damaging comments. The quality of a damaging video or audio clip does not need to be high: as long as someone's likeness and actions are recognizable, a deepfake can hurt their reputation.

In September 2020, Microsoft announced that it was developing a deepfake detection software tool.

Detection

Audio

Detecting fake audio is a highly complex task that requires careful attention to the audio signal in order to achieve good performance. In deep learning approaches, feature-design preprocessing and masking augmentation have proven effective in improving performance.
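As an illustration of what masking augmentation can look like, the following minimal Python sketch blanks one random band of time frames and one random band of frequency bins in a spectrogram, in the style of SpecAugment. The source does not specify which masking scheme is meant, so the function name `mask_spectrogram`, its parameters, and the list-of-lists spectrogram layout are assumptions made purely for illustration.

```python
import random


def mask_spectrogram(spec, max_time_width, max_freq_width, rng, fill=0.0):
    """Return a copy of `spec` with one time band and one frequency band masked.

    `spec` is a list of frames, each frame a list of frequency-bin values
    (a hypothetical layout chosen for this sketch).
    """
    n_frames = len(spec)
    n_bins = len(spec[0])
    out = [row[:] for row in spec]  # copy so the input is left untouched

    # Time mask: blank a run of consecutive frames.
    t_width = rng.randint(0, min(max_time_width, n_frames))
    t_start = rng.randint(0, n_frames - t_width)
    for t in range(t_start, t_start + t_width):
        out[t] = [fill] * n_bins

    # Frequency mask: blank the same run of bins in every frame.
    f_width = rng.randint(0, min(max_freq_width, n_bins))
    f_start = rng.randint(0, n_bins - f_width)
    for row in out:
        for f in range(f_start, f_start + f_width):
            row[f] = fill
    return out


# Toy spectrogram: 10 frames of 8 bins, all ones.
spec = [[1.0] * 8 for _ in range(10)]
augmented = mask_spectrogram(
    spec, max_time_width=3, max_freq_width=2, rng=random.Random(0)
)
```

During training, a fresh mask would typically be drawn for every example, so that a detector cannot rely on any single time span or frequency band.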

Video

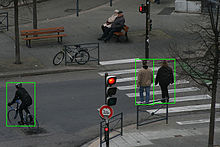

Most of the academic research surrounding deepfakes focuses on the detection of deepfake videos. One approach to deepfake detection is to use algorithms to recognize patterns and pick up subtle inconsistencies that arise in deepfake videos. For example, researchers have developed automatic systems that examine videos for errors such as irregular blinking patterns or lighting. This approach has been criticized because deepfake detection is characterized by a "moving goal post" where the production of deepfakes continues to change and improve as algorithms to detect deepfakes improve. In order to assess the most effective algorithms for detecting deepfakes, a coalition of leading technology companies hosted the Deepfake Detection Challenge to accelerate the technology for identifying manipulated content. The winning model of the Deepfake Detection Challenge was 65% accurate on the holdout set of 4,000 videos. A team at Massachusetts Institute of Technology published a paper in December 2021 demonstrating that ordinary humans are 69–72% accurate at identifying a random sample of 50 of these videos.
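The blink-pattern idea mentioned above can be sketched in a few lines of Python. This is only a toy illustration, not any published detector: it assumes a per-frame "eye openness" value is already available (in practice this would come from a face-landmark tracker, which is not shown), and the thresholds and the plausible blink-rate range are hypothetical values chosen for the example.

```python
def count_blinks(eye_openness, closed_threshold=0.2, min_closed_frames=2):
    """Count blinks in a per-frame eye-openness series (higher = more open).

    A blink is a run of at least `min_closed_frames` consecutive frames
    whose openness falls below `closed_threshold` (both hypothetical values).
    """
    blinks = 0
    closed_run = 0
    for value in eye_openness:
        if value < closed_threshold:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_closed_frames:  # series ended while the eye was closed
        blinks += 1
    return blinks


def blink_rate_is_irregular(eye_openness, fps,
                            min_per_minute=5.0, max_per_minute=40.0):
    """Flag a clip whose blink rate falls outside an assumed plausible range."""
    if not eye_openness:
        raise ValueError("empty eye-openness series")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute


# One minute at 30 fps with a 3-frame blink every 4 seconds.
natural = [0.3] * 1800
for start in range(60, 1800, 120):
    natural[start:start + 3] = [0.1] * 3

# One minute in which the eyes never close.
unblinking = [0.3] * 1800
```

With these inputs, `blink_rate_is_irregular(natural, fps=30)` is false and `blink_rate_is_irregular(unblinking, fps=30)` is true. A single hand-tuned cue like this is exactly the kind of signal that generators can learn to avoid, which is the "moving goal post" criticism.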

A team at the University at Buffalo published a paper in October 2020 outlining a technique that uses reflections of light in the eyes of those depicted to spot deepfakes with a high rate of success, even without the use of an AI detection tool, at least for the time being.

In the case of well-documented individuals such as political leaders, algorithms have been developed to distinguish identity-based features such as patterns of facial, gestural, and vocal mannerisms and detect deep-fake impersonators.

Another team, led by Wael AbdAlmageed of the Visual Intelligence and Multimedia Analytics Laboratory (VIMAL) of the Information Sciences Institute at the University of Southern California, developed two generations of deepfake detectors based on convolutional neural networks. The first generation used recurrent neural networks to spot spatio-temporal inconsistencies to identify visual artifacts left by the deepfake generation process. The algorithm achieved 96% accuracy on FaceForensics++, the only large-scale deepfake benchmark available at that time. The second generation used end-to-end deep networks to differentiate between artifacts and high-level semantic facial information using two-branch networks. The first branch propagates colour information while the other branch suppresses facial content and amplifies low-level frequencies using Laplacian of Gaussian (LoG). Further, they included a new loss function that learns a compact representation of bona fide faces, while dispersing the representations (i.e. features) of deepfakes. VIMAL's approach showed state-of-the-art performance on the FaceForensics++ and Celeb-DF benchmarks, and on March 16, 2022 (the same day it was released), was used to identify the deepfake of Volodymyr Zelenskyy out-of-the-box without any retraining or knowledge of the algorithm with which the deepfake was created.
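To make the Laplacian of Gaussian step more concrete, the following Python sketch builds a small LoG kernel and applies it to a grayscale image stored as a list of rows. It illustrates only the filter itself, not VIMAL's network: the kernel size, the value of sigma, the zero-mean normalization, and the function names are assumptions made for this example. The kernel follows the usual definition

LoG(x, y) = -(1 / (πσ⁴)) · (1 - (x² + y²) / (2σ²)) · exp(-(x² + y²) / (2σ²)).

```python
import math


def log_kernel(size, sigma):
    """Build a size x size Laplacian-of-Gaussian kernel (size must be odd).

    The sampled kernel is shifted to sum to zero so that perfectly flat
    image regions produce no response (a normalization chosen for this sketch).
    """
    half = size // 2
    two_sigma_sq = 2.0 * sigma * sigma
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            r2 = x * x + y * y
            value = (-(1.0 / (math.pi * sigma ** 4))
                     * (1.0 - r2 / two_sigma_sq)
                     * math.exp(-r2 / two_sigma_sq))
            row.append(value)
        kernel.append(row)
    mean = sum(map(sum, kernel)) / (size * size)
    return [[v - mean for v in row] for row in kernel]


def filter_valid(image, kernel):
    """Slide the kernel over the image, keeping only fully covered positions."""
    k = len(kernel)
    height, width = len(image), len(image[0])
    out = []
    for i in range(height - k + 1):
        out_row = []
        for j in range(width - k + 1):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    acc += image[i + a][j + b] * kernel[a][b]
            out_row.append(acc)
        out.append(out_row)
    return out


kernel = log_kernel(size=5, sigma=1.0)
flat = [[0.5] * 9 for _ in range(9)]                               # uniform patch
edge = [[0.0 if col < 5 else 1.0 for col in range(9)] for _ in range(9)]  # step edge
flat_response = filter_valid(flat, kernel)
edge_response = filter_valid(edge, kernel)
```

The uniform patch yields a response that is zero up to floating-point error, while the step edge yields clearly non-zero values near the boundary. This is the sense in which such a filter suppresses smooth image content and emphasizes fine detail, where generation artifacts may be found.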

Other techniques suggest that blockchain could be used to verify the source of the media. For instance, a video might have to be verified through the ledger before it is shown on social media platforms. With this technology, only videos from trusted sources would be approved, decreasing the spread of possibly harmful deepfake media.
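A minimal Python sketch of the ledger idea follows. It is a single-process hash chain rather than a real blockchain (there is no network, consensus, or access control), and the class name `MediaLedger`, its methods, and the example source name are hypothetical. Each entry stores the SHA-256 fingerprint of a media file together with its source, and links to the previous entry so that later tampering with the ledger is detectable.

```python
import hashlib

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class MediaLedger:
    """Append-only list of (media_hash, source, entry_hash) records."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(prev_hash, media_hash, source):
        payload = (prev_hash + media_hash + source).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def register(self, media_bytes, source):
        """Record a fingerprint of the media under the given source name."""
        media_hash = hashlib.sha256(media_bytes).hexdigest()
        prev_hash = self.entries[-1][2] if self.entries else GENESIS
        entry_hash = self._entry_hash(prev_hash, media_hash, source)
        self.entries.append((media_hash, source, entry_hash))
        return media_hash

    def chain_is_intact(self):
        """Recompute every link; any edited entry breaks the chain."""
        prev_hash = GENESIS
        for media_hash, source, entry_hash in self.entries:
            if self._entry_hash(prev_hash, media_hash, source) != entry_hash:
                return False
            prev_hash = entry_hash
        return True

    def lookup_source(self, media_bytes):
        """Return the registered source for this exact file, or None."""
        media_hash = hashlib.sha256(media_bytes).hexdigest()
        for stored_hash, source, _ in self.entries:
            if stored_hash == media_hash:
                return source
        return None


ledger = MediaLedger()
original = b"raw bytes of a video file"  # stand-in for real video data
ledger.register(original, "Example News Agency")
```

Here `ledger.lookup_source(original)` returns the registered source, while any altered copy returns `None`. Because the fingerprint is an exact cryptographic hash, a legitimately re-encoded or resized copy would also fail the lookup, which is one practical limitation of this simple form of the scheme.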

Digital signing of all video and imagery by cameras and video cameras, including smartphone cameras, has been suggested as a way to fight deepfakes. This would allow every photograph or video to be traced back to its original owner, a capability that could also be used to pursue dissidents.
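The signing idea can be illustrated with a Lamport one-time signature, chosen here only because it can be written with Python's standard library; the source does not say which scheme a camera would use, and a real device would more plausibly rely on a standard public-key scheme held in secure hardware (an assumption). All function names are hypothetical. A Lamport key pair may safely sign only one message, so this is a sketch of the principle rather than a deployable design.

```python
import hashlib
import secrets


def _h(data):
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32))
               for _ in range(256)]
    public = [(_h(zero), _h(one)) for zero, one in private]
    return private, public


def _digest_bits(message):
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(message, private):
    """Reveal, for each digest bit, the secret matching that bit's value."""
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(message, signature, public):
    """Check that each revealed secret hashes to the published value."""
    bits = _digest_bits(message)
    return len(signature) == 256 and all(
        _h(signature[i]) == public[i][bit] for i, bit in enumerate(bits)
    )


camera_private, camera_public = generate_keypair()
frame = b"image bytes captured by the camera"  # stand-in for real image data
signature = sign(frame, camera_private)
```

Anyone holding `camera_public` can confirm that `verify(frame, signature, camera_public)` is true, and that verification fails for any modified copy of the image. The same property that proves authenticity also links the image to a specific key holder, which is the traceability concern raised above.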

One easy way to uncover deepfake video calls is to ask the caller to turn sideways.

Prevention

Henry Ajder, who works for Deeptrace, a company that detects deepfakes, says there are several ways to protect against deepfakes in the workplace. Semantic passwords or secret questions can be used when holding important conversations. Voice authentication and other biometric security features should be kept up to date. Employees should also be educated about deepfakes.

Controversies

In March 2024, a video clip was released from Buckingham Palace disclosing that Kate Middleton had cancer and was undergoing chemotherapy. However, the clip fuelled rumours that the woman in it was an AI deepfake. UCLA's race director Johnathan Perkins doubted that she had cancer, and further speculated that she could be in critical condition or dead.

Example events

- Barack Obama

- On April 17, 2018, American actor Jordan Peele, BuzzFeed, and Monkeypaw Productions posted a deepfake of Barack Obama to YouTube, which depicted Barack Obama cursing and calling Donald Trump names. In this deepfake, Peele's voice and face were transformed and manipulated into those of Obama. The intent of this video was to portray the dangerous consequences and power of deepfakes, and how deepfakes can make anyone say anything.

- Donald Trump

- On May 5, 2019, Derpfakes posted a deepfake of Donald Trump to YouTube, based on a skit Jimmy Fallon performed on The Tonight Show. In the original skit (aired May 4, 2016), Jimmy Fallon dressed as Donald Trump and pretended to participate in a phone call with Barack Obama, conversing in a manner that presented him to be bragging about his primary win in Indiana. In the deepfake, Jimmy Fallon's face was transformed into Donald Trump's face, with the audio remaining the same. This deepfake video was produced by Derpfakes with a comedic intent. In March 2023, a series of images appeared to show New York Police Department officers restraining Trump. The images, created using Midjourney, were initially posted on Twitter by Eliot Higgins but were later re-shared without context, leading some viewers to believe they were real photographs.

- Nancy Pelosi

- In 2019, a clip from Nancy Pelosi's speech at the Center for American Progress (given on May 22, 2019) was widely distributed on social media; the video had been slowed down and the pitch of the audio altered to make it seem as if she were drunk. Critics argue that this was not a deepfake, but a shallowfake, a less sophisticated form of video manipulation.

- Mark Zuckerberg

- In May 2019, two artists collaborating with the company CannyAI created a deepfake video of Facebook founder Mark Zuckerberg talking about harvesting and controlling data from billions of people. The video was part of an exhibit to educate the public about the dangers of artificial intelligence.

- Kim Jong-un and Vladimir Putin

- On September 29, 2020, deepfakes of North Korean leader Kim Jong-un and Russian President Vladimir Putin were uploaded to YouTube, created by a nonpartisan advocacy group RepresentUs. The deepfakes of Kim and Putin were meant to air publicly as commercials to relay the notion that interference by these leaders in US elections would be detrimental to the United States' democracy. The commercials also aimed to shock Americans to realize how fragile democracy is, and how media and news can significantly influence the country's path regardless of credibility. However, while the commercials included an ending comment detailing that the footage was not real, they ultimately did not air due to fears and sensitivity regarding how Americans may react. On June 5, 2023, an unknown source broadcast a reported deepfake of Vladimir Putin on multiple radio and television networks. In the clip, Putin appears to deliver a speech announcing the invasion of Russia and calling for a general mobilization of the army.

- Volodymyr Zelenskyy

- On March 16, 2022, a one-minute long deepfake video depicting Ukraine's president Volodymyr Zelenskyy seemingly telling his soldiers to lay down their arms and surrender during the 2022 Russian invasion of Ukraine was circulated on social media. Russian social media boosted it, but after it was debunked, Facebook and YouTube removed it. Twitter allowed the video in tweets where it was exposed as a fake, but said it would be taken down if posted to deceive people. Hackers inserted the disinformation into a live scrolling-text news crawl on TV station Ukraine 24, and the video appeared briefly on the station's website in addition to false claims that Zelenskyy had fled his country's capital, Kyiv. It was not immediately clear who created the deepfake, to which Zelenskyy responded with his own video, saying, "We don't plan to lay down any arms. Until our victory."

- Wolf News

- In late 2022, pro-China propagandists started spreading deepfake videos purporting to be from "Wolf News" that used synthetic actors. The technology was developed by a London company called Synthesia, which markets it as a cheap alternative to live actors for training and HR videos.

- Pope Francis

- In March 2023, an anonymous construction worker from Chicago used Midjourney to create a fake image of Pope Francis in a white Balenciaga puffer jacket. The image went viral, receiving over twenty million views. Writer Ryan Broderick dubbed it "the first real mass-level AI misinformation case". Experts consulted by Slate characterized the image as unsophisticated: "you could have made it on Photoshop five years ago".

- Keir Starmer

- In October 2023, a deepfake audio clip purporting to capture the UK Labour Party leader Keir Starmer abusing his staffers was released on the first day of a Labour Party conference.

- Rashmika Mandanna

- In early November 2023, the South Indian actor Rashmika Mandanna became the target of a deepfake when a morphed video of the British-Indian influencer Zara Patel, with Mandanna's face superimposed, began circulating on social media. Patel has said that she was not involved in its creation.

- Bongbong Marcos

- In April 2024, a deepfake video misrepresenting Philippine President Bongbong Marcos was released. It is a slideshow accompanied by deepfake audio of Marcos purportedly ordering the Armed Forces of the Philippines and a special task force to act "however appropriate" should China attack the Philippines. The video was released amidst tensions related to the South China Sea dispute. The Presidential Communications Office has said that there is no such directive from the president and that a foreign actor might be behind the fabricated media. Criminal charges have been filed by the Kapisanan ng mga Brodkaster ng Pilipinas in relation to the deepfake media. On July 22, 2024, a video of Marcos purportedly snorting illegal drugs was released by Claire Contreras, a former supporter of Marcos. The video was dubbed the polvoron video, and the media noted its consistency with the insinuation of Marcos' predecessor, Rodrigo Duterte, that Marcos is a drug addict; the video was also shown at a Hakbang ng Maisug rally organized by people aligned with Duterte. Two days later, the Philippine National Police and the National Bureau of Investigation, based on their own findings, concluded that the video was created using AI; they further pointed out inconsistencies between the person in the video and Marcos, such as details of the two people's ears.

- Joe Biden

- Prior to the 2024 United States presidential election, phone calls imitating the voice of the incumbent Joe Biden were made to dissuade people from voting for him. The person responsible for the calls was charged with voter suppression and impersonating a candidate. The FCC proposed to fine him US$6 million and Lingo Telecom, the company that allegedly relayed the calls, $2 million.

Responses

Social media platforms

Twitter (later X) is taking active measures to handle synthetic and manipulated media on its platform. In order to prevent disinformation from spreading, Twitter is placing a notice on tweets that contain manipulated media and/or deepfakes that signals to viewers that the media is manipulated. There will also be a warning that appears to users who plan on retweeting, liking, or engaging with the tweet. Twitter will also work to provide users with a link, next to the tweet containing manipulated or synthetic media, to a Twitter Moment or credible news article on the related topic as a debunking action. Twitter also has the ability to remove any tweets containing deepfakes or manipulated media that may pose a harm to users' safety. In order to improve Twitter's detection of deepfakes and manipulated media, Twitter asked users who are interested in partnering with it to work on deepfake detection solutions to fill out a form.

In August 2024, the secretaries of state of Minnesota, Pennsylvania, Washington, Michigan and New Mexico penned an open letter to X owner Elon Musk urging modifications to the new text-to-video generator of its AI chatbot Grok, added that month, stating that it had disseminated election misinformation.

Facebook has made efforts to encourage the creation of deepfakes in order to develop state-of-the-art deepfake detection software. Facebook was the prominent partner in hosting the Deepfake Detection Challenge (DFDC), held in December 2019, which drew 2,114 participants who generated more than 35,000 models. The top performing models with the highest detection accuracy were analyzed for similarities and differences; these findings are areas of interest in further research to improve and refine deepfake detection models. Facebook has also detailed that the platform will be taking down media generated with artificial intelligence used to alter an individual's speech. However, media that has been edited to alter the order or context of words in one's message would remain on the site but be labeled as false, since it was not generated by artificial intelligence.

On 31 January 2018, Gfycat began removing all deepfakes from its site. On Reddit, the r/deepfakes subreddit was banned on 7 February 2018, due to the policy violation of "involuntary pornography". In the same month, representatives from Twitter stated that they would suspend accounts suspected of posting non-consensual deepfake content. Chat site Discord has taken action against deepfakes in the past, and has taken a general stance against deepfakes. In September 2018, Google added "involuntary synthetic pornographic imagery" to its ban list, allowing anyone to request the block of results showing their fake nudes.

In February 2018, Pornhub said that it would ban deepfake videos on its website because it is considered "non consensual content" which violates their terms of service. They also stated previously to Mashable that they will take down content flagged as deepfakes. Writers from Motherboard reported that searching "deepfakes" on Pornhub still returned multiple recent deepfake videos.

Facebook has previously stated that it would not remove deepfakes from its platforms. The videos will instead be flagged as fake by third parties and then given a lower priority in users' feeds. This response was prompted in June 2019 after a deepfake featuring a 2016 video of Mark Zuckerberg circulated on Facebook and Instagram.

In May 2022, Google officially changed the terms of service for its Jupyter Notebook-based Colab service, banning its use for the purpose of creating deepfakes. This came a few days after a VICE article had been published claiming that "most deepfakes are non-consensual porn" and that the main use of the popular deepfake software DeepFaceLab (DFL), "the most important technology powering the vast majority of this generation of deepfakes", which was often used in combination with Google Colab, was to create non-consensual pornography. The article pointed out that, among many other well-known examples of third-party DFL implementations (such as deepfakes commissioned by The Walt Disney Company, official music videos, and the web series Sassy Justice by the creators of South Park), DFL's GitHub page also links to the deepfake porn website Mr.Deepfakes, and that participants of the DFL Discord server also participate on Mr.Deepfakes.

Legislation

In the United States, there have been some responses to the problems posed by deepfakes. In 2018, the Malicious Deep Fake Prohibition Act was introduced to the US Senate; in 2019, the Deepfakes Accountability Act was introduced in the 116th United States Congress by U.S. representative for New York's 9th congressional district Yvette Clarke. Several states have also introduced legislation regarding deepfakes, including Virginia, Texas, California, and New York; charges as varied as identity theft, cyberstalking, and revenge porn have been pursued, while more comprehensive statutes are urged.

Among U.S. legislative efforts, on 3 October 2019, California governor Gavin Newsom signed into law Assembly Bills No. 602 and No. 730. Assembly Bill No. 602 provides individuals targeted by sexually explicit deepfake content made without their consent with a cause of action against the content's creator. Assembly Bill No. 730 prohibits the distribution of malicious deepfake audio or visual media targeting a candidate running for public office within 60 days of their election. U.S. representative Yvette Clarke introduced H.R. 5586: Deepfakes Accountability Act into the 118th United States Congress on September 20, 2023 in an effort to protect national security from threats posed by deepfake technology. U.S. representative María Salazar introduced H.R. 6943: No AI Fraud Act into the 118th United States Congress on January 10, 2024, to establish specific property rights of individual physicality, including voice.

In November 2019, China announced that deepfakes and other synthetically faked footage should bear a clear notice about their fakeness starting in 2020. Failure to comply could be considered a crime, the Cyberspace Administration of China stated on its website. The Chinese government seems to be reserving the right to prosecute both users and online video platforms failing to abide by the rules. The Cyberspace Administration of China, the Ministry of Industry and Information Technology, and the Ministry of Public Security jointly issued the Provision on the Administration of Deep Synthesis Internet Information Service in November 2022. China's updated Deep Synthesis Provisions (Administrative Provisions on Deep Synthesis in Internet-Based Information Services) went into effect in January 2023.

In the United Kingdom, producers of deepfake material could be prosecuted for harassment, but deepfake production was not a specific crime until 2023, when the Online Safety Act was passed, which made deepfakes illegal; the UK plans to expand the Act's scope to criminalize deepfakes created with "intention to cause distress" in 2024.

In Canada, in 2019, the Communications Security Establishment released a report which said that deepfakes could be used to interfere in Canadian politics, particularly to discredit politicians and influence voters. As a result, there are multiple ways for citizens in Canada to deal with deepfakes if they are targeted by them. In February 2024, bill C-63 was tabled in the 44th Canadian Parliament in order to enact the Online Harms Act, which would amend the Criminal Code and other Acts. An earlier version of the Bill, C-36, was ended by the dissolution of the 43rd Canadian Parliament in September 2021.

In India, there are no direct laws or regulation on AI or deepfakes, but there are provisions under the Indian Penal Code and Information Technology Act 2000/2008, which can be looked at for legal remedies, and the new proposed Digital India Act will have a chapter on AI and deepfakes in particular, as per the MoS Rajeev Chandrasekhar.

In Europe, the European Union's 2024 Artificial Intelligence Act (AI Act) takes a risk-based approach to regulating AI systems, including deepfakes. It establishes categories of "unacceptable risk," "high risk," "specific/limited or transparency risk", and "minimal risk" to determine the level of regulatory obligations for AI providers and users. However, the lack of clear definitions for these risk categories in the context of deepfakes creates potential challenges for effective implementation. Legal scholars have raised concerns about the classification of deepfakes intended for political misinformation or the creation of non-consensual intimate imagery. Debate exists over whether such uses should always be considered "high-risk" AI systems, which would lead to stricter regulatory requirements.

In August 2024, the Irish Data Protection Commission (DPC) launched court proceedings against X for its unlawful use of the personal data of over 60 million EU/EEA users, in order to train its AI technologies, such as its chatbot Grok.

Response from DARPA

In 2016, the Defense Advanced Research Projects Agency (DARPA) launched the Media Forensics (MediFor) program, which was funded through 2020. MediFor aimed at automatically spotting digital manipulation in images and videos, including deepfakes. In the summer of 2018, MediFor held an event where individuals competed to create AI-generated videos, audio, and images as well as automated tools to detect these deepfakes. According to the MediFor program, it established a framework of three tiers of information (digital integrity, physical integrity and semantic integrity) to generate one integrity score in an effort to enable accurate detection of manipulated media.

In 2019, DARPA hosted a "proposers day" for the Semantic Forensics (SemaFor) program, where researchers were driven to prevent viral spread of AI-manipulated media. DARPA and the Semantic Forensics Program were also working together to detect AI-manipulated media through efforts in training computers to utilize common-sense and logical reasoning. Built on MediFor's technologies, SemaFor's attribution algorithms infer whether digital media originates from a particular organization or individual, while characterization algorithms determine whether media was generated or manipulated for malicious purposes. In March 2024, SemaFor published an analytic catalog that offers the public access to open-source resources developed under SemaFor.

International Panel on the Information Environment

The International Panel on the Information Environment was launched in 2023 as a consortium of over 250 scientists working to develop effective countermeasures to deepfakes and other problems created by perverse incentives in organizations disseminating information via the Internet.

In popular culture

- The 1986 mid-December issue of Analog magazine published the novelette "Picaper" by Jack Wodhams. Its plot revolves around digitally enhanced or digitally generated videos produced by skilled hackers serving unscrupulous lawyers and political figures.

- The 1987 film The Running Man starring Arnold Schwarzenegger depicts an autocratic government using computers to digitally replace the faces of actors with those of wanted fugitives to make it appear the fugitives had been neutralized.

- In the 1992 techno-thriller A Philosophical Investigation by Philip Kerr, "Wittgenstein", the main character and a serial killer, makes use of both a software similar to deepfake and a virtual reality suit for having sex with an avatar of Isadora "Jake" Jakowicz, the female police lieutenant assigned to catch him.

- The 1993 film Rising Sun starring Sean Connery and Wesley Snipes features a character, Jingo Asakuma, who reveals that footage on a computer disc has been digitally altered to change personal identities and implicate a competitor.

- Deepfake technology is part of the plot of the 2019 BBC One TV series The Capture. The first series follows former British Army sergeant Shaun Emery, who is accused of assaulting and abducting his barrister. Expertly doctored CCTV footage is revealed to have framed him and misled the police investigating the case. The second series follows politician Isaac Turner, who discovers that another deepfake is tarnishing his reputation until the "correction" is eventually exposed to the public.

- In June 2020, YouTube deepfake artist Shamook created a deepfake of the 1994 film Forrest Gump by replacing the face of beloved actor Tom Hanks with John Travolta's. He created this piece using 6,000 high-quality still images of John Travolta's face from several of his films released around the same time as Forrest Gump. Shamook then created a 180-degree facial profile that he fed into a piece of machine learning software (DeepFaceLab), along with Tom Hanks' face from Forrest Gump. The humor and irony of this deepfake trace back to 2007, when John Travolta revealed he had turned down the chance to play the lead role in Forrest Gump because he had said yes to Pulp Fiction instead.

- Al Davis vs. the NFL: The narrative structure of this 2021 documentary, part of ESPN's 30 for 30 documentary series, uses deepfake versions of the film's two central characters, both deceased—Al Davis, who owned the Las Vegas Raiders during the team's tenure in Oakland and Los Angeles, and Pete Rozelle, the NFL commissioner who frequently clashed with Davis.

- Deepfake technology is featured in "Impawster Syndrome", the 57th episode of the Canadian police series Hudson & Rex, first broadcast on 6 January 2022, in which a member of the St. John's police team is investigated on suspicion of robbery and assault due to doctored CCTV footage using his likeness.

- Using deepfake technology in his music video for his 2022 single, "The Heart Part 5", musician Kendrick Lamar transformed into figures resembling Nipsey Hussle, O.J. Simpson, and Kanye West, among others. The deepfake technology in the video was created by Deep Voodoo, a studio led by Trey Parker and Matt Stone, who created South Park.

- Aloe Blacc honored his long-time collaborator Avicii four years after his death by performing their song "Wake Me Up" in English, Spanish, and Mandarin, using deepfake technologies.

- In January 2023, ITVX released the series Deep Fake Neighbour Wars, in which various celebrities were played by actors experiencing inane conflicts, the celebrity's face deepfaked onto them.

- In October 2023, Tom Hanks shared a photo of an apparent deepfake likeness depicting him promoting "some dental plan" to his Instagram page. Hanks warned his fans, "BEWARE . . . I have nothing to do with it."