Acute and late radiation damage to the central nervous system (CNS) may lead to changes in motor function and behavior or neurological disorders. Radiation and synergistic effects of radiation with other space flight factors may affect neural tissues, which in turn may lead to changes in function or behavior. Data specific to the spaceflight environment must be compiled to quantify the magnitude of this risk. If this is identified as a risk of high enough magnitude, then appropriate protection strategies should be employed. A vigorous ground-based cellular and animal model research program will help quantify the risk to the CNS from space radiation exposure on future long-distance space missions and promote the development of optimized countermeasures.

— Human Research Program Requirements Document, HRP-47052, Rev. C, dated Jan 2009.

Possible acute and late risks to the CNS from galactic cosmic rays (GCRs) and solar proton events (SPEs) are a documented concern for human exploration of our solar system. In the past, the risks to the CNS of adults who were exposed to low to moderate doses of ionizing radiation (0 to 2 gray [Gy]; 1 Gy = 100 rad) have not been a major consideration. However, the heavy ion component of space radiation presents distinct biophysical challenges to cells and tissues compared with the physical challenges presented by terrestrial forms of radiation. Soon after the discovery of cosmic rays, concern about CNS risks arose from the prediction of the light flash phenomenon from single traversals of the retina by high-charge, high-energy (HZE) nuclei; this phenomenon was confirmed by the Apollo astronauts in 1970 and 1973. HZE nuclei are capable of producing a column of heavily damaged cells, or a microlesion, along their path through tissues, thereby raising concern over serious impacts on the CNS. In recent years, other concerns have arisen with the discovery of adult neurogenesis and its disruption by HZE nuclei, which has been observed in experimental models of the CNS.

Human epidemiology is used as a basis for risk estimation for cancer, acute radiation risks, and cataracts. This approach is not viable for estimating CNS risks from space radiation, however. Detrimental CNS changes in humans are documented at doses above a few Gy, in patients who are treated with radiation (e.g., gamma rays and protons) for cancer. Treatment doses of 50 Gy are typical, which is well above the exposures expected in space even if a large SPE were to occur. Thus, of the four categories of space radiation risks (cancer, CNS, degenerative, and acute radiation syndromes), the CNS risk relies most extensively on experimental data with animals for its evidence base. Understanding and mitigating CNS risks requires a vigorous research program. That program should draw on the basic understanding gained from cellular and animal models and should develop approaches to extrapolate risks, and the potential benefits of countermeasures, to astronauts.

Several experimental studies that use heavy ion beams simulating space radiation provide supporting evidence of the CNS risks from space radiation. First, exposure to HZE nuclei at low doses (less than 50 cGy) significantly induces neurocognitive deficits in mice and rats, such as learning and behavioral changes as well as altered operant responding. Exposures to equal or higher doses of low linear energy transfer (LET) radiation (e.g., gamma or X rays) do not show similar effects. The threshold of performance deficit following exposure to HZE nuclei depends on both the physical characteristics of the particles, such as LET, and the animal age at exposure. A performance deficit has been shown to occur at doses similar to those expected on a Mars mission (less than 0.5 Gy). The neurocognitive deficits involving the dopaminergic nervous system resemble those of aging and appear to be unique to space radiation. Second, exposure to HZE nuclei disrupts neurogenesis in mice at low doses (less than 1 Gy), producing a significant dose-related reduction of new neurons and oligodendrocytes in the subgranular zone (SGZ) of the hippocampal dentate gyrus. Third, reactive oxygen species (ROS) in neuronal precursor cells arise following exposure to HZE nuclei and protons at low dose and can persist for several months; antioxidants and anti-inflammatory agents may reduce these changes. Fourth, neuroinflammation arises in the CNS following exposure to HZE nuclei and protons. In addition, age-related genetic changes increase the sensitivity of the CNS to radiation.

Research with animal models that are irradiated with HZE nuclei has shown that important changes to the CNS occur at the dose levels that are of concern to NASA. However, the significance of these results for morbidity in astronauts has not been elucidated. One model of late tissue effects suggests that significant effects will occur at lower doses, but with increased latency. The studies conducted to date have used relatively small numbers of animals (less than 10 per dose group); therefore, dose threshold effects at lower doses (less than 0.5 Gy) have not yet been tested sufficiently. Extrapolating space radiation effects in animals to humans will be a challenge for space radiation research, and such research could become limited by the population sizes used in animal studies. Furthermore, the role of dose protraction has not been studied to date. No approach has yet been developed to extrapolate existing observations to possible cognitive changes, performance degradation, or late CNS effects in astronauts. New approaches in systems biology offer a promising tool to tackle this challenge. Recently, eight gaps were identified for projecting CNS risks. Research on new approaches to risk assessment may be needed to provide the data and knowledge necessary to develop risk projection models of the CNS from space radiation.
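A standard sample-size calculation makes concrete why fewer than 10 animals per dose group limits threshold testing. The sketch below is an illustration only and is not taken from the cited studies. It assumes a two-sided comparison of one irradiated group against controls and uses the normal approximation to the two-sample t-test. It treats the standardized effect size d (difference in group means divided by the common standard deviation) as a hypothetical input. The function name `animals_per_group` is ours.

```python
from math import ceil
from statistics import NormalDist  # Python 3.8+


def animals_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate animals needed per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_{1 - alpha/2} + z_{power})**2 / d**2,
    where d is the standardized effect size (hypothetical input).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


for d in (2.0, 1.0, 0.5):
    print(f"d = {d}: about {animals_per_group(d)} animals per group")
```

Under these assumptions, a large effect (d = 2) needs only about 4 animals per group, but a moderate effect (d = 0.5) needs about 63. The normal approximation slightly understates n for small groups. Designs with several dose groups would also need a multiple-comparison correction.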

Introduction

Both GCRs and SPEs are of concern for CNS risks. The major GCRs are composed of protons, α-particles, and HZE nuclei with broad energy spectra ranging from a few tens to above 10,000 MeV/u. In interplanetary space, GCR organ doses of more than 0.2 Gy per year and dose-equivalents of more than 0.6 Sv per year are expected. The high energies of GCRs allow them to penetrate hundreds of centimeters of any material, which precludes radiation shielding as a plausible mitigation measure for GCR risks to the CNS. For SPEs, an absorbed dose of over 1 Gy is possible if crew members are in a thinly shielded spacecraft or performing a spacewalk. The energies of SPEs, although substantial (tens to hundreds of MeV), do not preclude radiation shielding as a potential countermeasure. However, the cost of shielding against the largest events may be high.

The fluence of charged particles hitting the brain of an astronaut has been estimated several times in the past. One estimate covers a 3-year mission to Mars at solar minimum, assuming the 1972 GCR spectrum. It finds that 20 million out of 43 million hippocampus cells and 230 thousand out of 1.3 million thalamus cell nuclei will be directly hit by one or more particles with charge Z > 15. These numbers do not include additional cell hits by energetic electrons (delta rays) produced along the track of HZE nuclei, or correlated cellular damage. Delta rays from GCR increase the number of damaged cells two- to three-fold over estimates from the primary track alone. Correlated cellular damage raises the possibility of heterogeneously damaged regions. The importance of such additional damage is poorly understood.
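A few derived quantities follow from the hit estimates and annual dose figures above. The sketch below computes the fraction of cells hit in each region. It then makes an added assumption (ours, not the source's) that primary traversals per cell follow a Poisson distribution, and derives the implied mean number of traversals per cell. It also takes the ratio of the quoted annual dose-equivalent to dose as an indicative mean quality factor. The function names are hypothetical.

```python
from math import log

# Figures quoted in the text: 3-year Mars mission at solar minimum,
# primary particles with Z > 15 only (delta rays not included).
REGIONS = {
    "hippocampus": (20e6, 43e6),             # (cells hit, total cells)
    "thalamus cell nuclei": (230e3, 1.3e6),
}


def hit_fraction(cells_hit: float, total_cells: float) -> float:
    """Fraction of cells traversed by at least one primary particle."""
    return cells_hit / total_cells


def mean_traversals(fraction_hit: float) -> float:
    """Mean traversals per cell, ASSUMING Poisson statistics: P(>=1) = 1 - exp(-mean)."""
    return -log(1.0 - fraction_hit)


for region, (hit, total) in REGIONS.items():
    f = hit_fraction(hit, total)
    print(f"{region}: {f:.1%} hit, ~{mean_traversals(f):.2f} traversals per cell")

# Ratio of the quoted annual GCR dose-equivalent (0.6 Sv) to dose (0.2 Gy)
print(f"indicative mean quality factor: {0.6 / 0.2:.1f}")
```

The hippocampal figure corresponds to roughly 47% of cells hit, and the thalamic figure to roughly 18%. The Poisson step is only a convenient idealization of particle arrival statistics.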

At this time, the possible detrimental effects to an astronaut’s CNS from the HZE component of GCR have yet to be identified. This is largely due to the lack of a human epidemiological basis with which to estimate risks and the relatively small number of published experimental studies with animals. For cancer, relative biological effectiveness (RBE) factors are combined with human data from low-LET radiation exposure to estimate risks. Since this approach is not possible for CNS risks, new approaches to risk estimation will be needed. Thus, biological research is required to establish risk levels and risk projection models and, if the risk levels are found to be significant, to design countermeasures.

Description of central nervous system risks of concern to NASA

Acute and late CNS risks from space radiation are of concern for Exploration missions to the moon or Mars. Acute CNS risks include altered cognitive function, reduced motor function, and behavioral changes, all of which may affect performance and human health. Late CNS risks are possible neurological disorders such as Alzheimer’s disease, dementia, or premature aging. The effect of protracted exposure of the CNS to low dose rates (< 50 mGy/h) of protons, HZE particles, and neutrons of the relevant energies, for doses up to 2 Gy, is of concern.

Current NASA permissible exposure limits

Permissible exposure limits (PELs) for short-term and career astronaut exposure to space radiation have been approved by the NASA Chief Health and Medical Officer. The PELs set requirements and standards for mission design and crew selection as recommended in NASA-STD-3001, Volume 1. NASA has used dose limits for cancer risks and for the non-cancer risks to the blood-forming organs (BFOs), skin, and lens since 1970. For Exploration mission planning, preliminary dose limits for the CNS risks are based largely on experimental results with animal models. Further research is needed, however, to validate and quantify these risks and to refine the values for dose limits. The CNS PELs correspond to the doses at the region of the brain called the hippocampus. They are set at 500 mGy-Eq for 30 days, 1,000 mGy-Eq for 1 year, and 1,500 mGy-Eq for a career. Although the unit mGy-Eq is used, the RBE for CNS effects is largely unknown; therefore, the use of the quality factor function for cancer risk estimates is advocated. For particles with charge Z > 10, an additional PEL requirement limits the physical dose (mGy) for 1 year and the career to 100 and 250 mGy, respectively. NASA uses computerized anatomical geometry models to estimate the body self-shielding at the hippocampus.

Evidence
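The check of a hippocampal exposure against the CNS PELs can be sketched as follows. The limits are the ones stated above. The text does not specify which quality factor function is meant, so the sketch substitutes the ICRP Publication 60 Q(L) relation as an assumed stand-in; NASA's own cancer-risk quality factor may differ. The function names, the (dose, LET, Z) input format, and the example exposure are hypothetical, and particle transport and body self-shielding are not modeled.

```python
from math import sqrt

# CNS PELs from the text, in mGy-Eq at the hippocampus
CNS_PEL_MGY_EQ = {"30 days": 500.0, "1 year": 1000.0, "career": 1500.0}
# Additional physical-dose limits (mGy) for particles with Z > 10
Z_GT_10_PEL_MGY = {"1 year": 100.0, "career": 250.0}


def quality_factor(let_kev_per_um: float) -> float:
    """ICRP Publication 60 Q(L), used here as an ASSUMED stand-in for NASA's function."""
    if let_kev_per_um < 10.0:
        return 1.0
    if let_kev_per_um <= 100.0:
        return 0.32 * let_kev_per_um - 2.2
    return 300.0 / sqrt(let_kev_per_um)


def check_cns_pel(components, period):
    """Check hippocampal dose components against the CNS PELs for one period.

    components: iterable of (physical dose in mGy, LET in keV/um, charge Z).
    Returns (dose-equivalent in mGy-Eq, Z > 10 physical dose in mGy, within_limits).
    """
    components = list(components)
    dose_eq = sum(d * quality_factor(let) for d, let, _ in components)
    heavy_dose = sum(d for d, _, z in components if z > 10)
    # The text gives no separate Z > 10 limit for 30 days, so none is applied.
    heavy_limit = Z_GT_10_PEL_MGY.get(period, float("inf"))
    within = dose_eq <= CNS_PEL_MGY_EQ[period] and heavy_dose <= heavy_limit
    return dose_eq, heavy_dose, within


# Hypothetical 1-year hippocampal exposure, for illustration only
example = [(150.0, 0.5, 1), (30.0, 150.0, 26)]
print(check_cns_pel(example, "1 year"))
```

In the hypothetical example, 30 mGy of iron-like particles at 150 keV/µm contributes about 735 mGy-Eq, for a total of about 885 mGy-Eq. That is under the 1-year limit, and the 30 mGy of Z > 10 dose is under its 100 mGy limit. A real assessment would sum over the particle spectrum computed at the hippocampus.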

Review of human data

Evidence of the effects of terrestrial forms of ionizing radiation on the CNS has been documented from radiotherapy patients, although these patients receive higher doses than astronauts would experience in the space environment. CNS behavioral changes such as chronic fatigue and depression occur in patients who are undergoing irradiation for cancer therapy. Neurocognitive effects, especially in children, are observed at lower radiation doses. A recent review examined intelligence and academic achievement in children after treatment for brain tumors. It indicates that radiation exposure is related to a decline in intelligence quotient (IQ) scores, verbal abilities, and performance IQ; in academic achievement in reading, spelling, and mathematics; and in attention functioning. Mental retardation was observed in the children of the atomic-bomb survivors in Japan who were exposed to radiation prenatally at moderate doses (<2 Gy) at 8 to 15 weeks post-conception, but not at earlier or later prenatal times.

Radiotherapy for the treatment of several tumors with protons and other charged particle beams provides ancillary data for considering radiation effects on the CNS. NCRP Report No. 153 notes charged-particle usage “for treatment of pituitary tumors, hormone-responsive metastatic mammary carcinoma, brain tumors, and intracranial arteriovenous malformations and other cerebrovascular diseases.” These studies found associations with neurological complications, including impairments in cognitive functioning, language acquisition, visual spatial ability, and memory and executive functioning, as well as changes in social behaviors. Similar effects did not appear in patients who were treated with chemotherapy. In all of these examples, the patients were treated with extremely high doses that were below the threshold for necrosis.
Since cognitive functioning and memory are closely associated with the cerebral white matter volume of the prefrontal/frontal lobe and cingulate gyrus, defects in neurogenesis may play a critical role in neurocognitive problems in irradiated patients.

Review of space flight issues

The first proposal concerning the effect of space radiation on the CNS was made by Cornelius Tobias in his 1952 description of the light flash phenomenon caused by single HZE nuclei traversals of the retina. Light flashes like those Tobias described were observed by astronauts during the early Apollo missions. They were also seen in dedicated experiments subsequently performed on Apollo and Skylab missions. More recently, studies of light flashes were made on the Russian Mir space station and the ISS. A 1973 report by the National Academy of Sciences (NAS) considered these effects in detail. This phenomenon, which is known as a phosphene, is the visual perception of flickering light. It is considered a subjective sensation of light since it can be caused simply by applying pressure on the eyeball. The traversal of a single, highly charged particle through the occipital cortex or the retina was estimated to be able to cause a light flash. Possible mechanisms for HZE-induced light flashes include direct ionization and Cerenkov radiation within the retina.

The observation of light flashes by the astronauts brought attention to the possible effects of HZE nuclei on brain function. The microlesion concept originated at this time; it considered the effects of the column of damaged cells surrounding the path of an HZE nucleus through critical regions of the brain. An important task that still remains is to determine whether and to what extent such particle traversals contribute to functional degradation within the CNS.
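The Cerenkov mechanism mentioned above has a simple kinematic threshold. A charged particle emits Cerenkov light only when its speed exceeds c/n, that is, β > 1/n. The sketch below converts that condition into a kinetic energy per nucleon. It assumes a refractive index of 1.336, a water-like value for the vitreous humor; the source gives no value for the retina. It also approximates the mass per nucleon by the atomic mass unit. The function name is hypothetical.

```python
from math import sqrt

AMU_MEV = 931.494  # rest energy per nucleon, approximated by the atomic mass unit (MeV)


def cherenkov_threshold_mev_per_u(n: float) -> float:
    """Kinetic energy per nucleon above which a nucleus moves faster than c/n."""
    if n <= 1.0:
        raise ValueError("refractive index must exceed 1")
    beta = 1.0 / n                       # Cerenkov condition: beta > 1/n
    gamma = 1.0 / sqrt(1.0 - beta ** 2)  # Lorentz factor at threshold
    return (gamma - 1.0) * AMU_MEV


# ASSUMED refractive index for ocular media (water-like)
print(f"threshold: {cherenkov_threshold_mev_per_u(1.336):.0f} MeV/u")
```

Because the condition depends only on speed, the threshold (roughly 470 MeV/u for this assumed index) is the same for every nucleus regardless of charge. The charge instead sets how much light is produced above threshold.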

Observation of CNS effects in astronauts who participated in past NASA missions is highly unlikely for several reasons. First, past missions were relatively short and the astronaut population is small. Second, astronauts traveling in LEO are partially protected by the magnetic field and the solid body of the Earth. Together, these reduce the GCR dose rate by about two-thirds from its free-space value. Furthermore, the GCR in LEO has lower-LET components than the GCR that will be encountered in transit to Mars or on the lunar surface. This is because the Earth's magnetic field repels nuclei with energies below about 1,000 MeV/u, which have higher LET. For these reasons, the CNS risks are a greater concern for long-duration lunar missions or for a Mars mission than for missions on the ISS.
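The Bethe stopping-power formula explains why nuclei below about 1,000 MeV/u have higher LET: energy loss scales with Z²/β², so slower nuclei deposit more energy per unit path. The sketch below evaluates the leading-order Bethe formula for a bare nucleus in liquid water. It assumes Z/A = 0.5551, a mean excitation energy I = 75 eV, and unit density. It omits shell, density-effect, and effective-charge corrections, so it shows the trend but is not meant for dosimetry. The function name is hypothetical.

```python
from math import log

K = 0.307075           # MeV cm^2 / mol, the standard Bethe-formula constant
ME_C2_EV = 0.510999e6  # electron rest energy (eV)
AMU_MEV = 931.494      # rest energy per nucleon (MeV), approximation


def let_water_kev_per_um(z: int, t_mev_per_u: float,
                         z_over_a: float = 0.5551, i_ev: float = 75.0) -> float:
    """Leading-order Bethe stopping power of a bare nucleus in liquid water (keV/um)."""
    gamma = 1.0 + t_mev_per_u / AMU_MEV
    beta2 = 1.0 - 1.0 / gamma ** 2
    bg2 = gamma ** 2 - 1.0  # beta^2 * gamma^2
    # For ions much heavier than electrons, T_max ~ 2 m_e c^2 beta^2 gamma^2,
    # which reduces the bracket to ln(2 m_e c^2 beta^2 gamma^2 / I) - beta^2.
    bracket = log(2.0 * ME_C2_EV * bg2 / i_ev) - beta2
    dedx_mev_per_cm = K * z ** 2 * z_over_a / beta2 * bracket  # density 1 g/cm^3
    return dedx_mev_per_cm * 0.1  # 1 MeV/cm = 0.1 keV/um


for energy in (100.0, 300.0, 1000.0):
    print(f"56Fe at {energy:6.0f} MeV/u: {let_water_kev_per_um(26, energy):5.0f} keV/um")
```

For iron, the estimate falls from about 490 keV/µm at 100 MeV/u to about 150 keV/µm at 1,000 MeV/u. The geomagnetic field filters out exactly these lower-energy, higher-LET nuclei.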

Radiobiology studies of central nervous system risks for protons, neutrons, and high-Z high-energy nuclei

Both GCR and SPE could possibly contribute to acute and late CNS risks to astronaut health and performance. This section describes the studies that have been performed on the effects of space radiation in cell, tissue, and animal models.

Effects in neuronal cells and the central nervous system

Neurogenesis

The CNS consists of neurons, astrocytes, and oligodendrocytes that are generated from multipotent stem cells. NCRP Report No. 153 provides the following concise introduction to the composition and cell types of interest for radiation studies of the CNS: “The CNS consists of neurons differing markedly in size and number per unit area. There are several nuclei or centers that consist of closely packed neuron cell bodies (e.g., the respiratory and cardiac centers in the floor of the fourth ventricle). In the cerebral cortex the large neuron cell bodies, such as Betz cells, are separated by a considerable distance. Of additional importance are the neuroglia which are the supporting cells and consist of astrocytes, oligodendroglia, and microglia. These cells permeate and support the nervous tissue of the CNS, binding it together like a scaffold that also supports the vasculature. The most numerous of the neuroglia are Type I astrocytes, which make up about half the brain, greatly outnumbering the neurons. Neuroglia retain the capability of cell division in contrast to neurons and, therefore, the responses to radiation differ between the cell types. A third type of tissue in the brain is the vasculature which exhibits a comparable vulnerability for radiation damage to that found elsewhere in the body. Radiation-induced damage to oligodendrocytes and endothelial cells of the vasculature accounts for major aspects of the pathogenesis of brain damage that can occur after high doses of low-LET radiation.” Based on studies with low-LET radiation, the CNS is considered a radioresistant tissue. For example, in radiotherapy, early brain complications in adults usually do not develop if daily fractions of 2 Gy or less are administered with a total dose of up to 50 Gy.
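The radiotherapy tolerance quoted above is tied to a fraction size of 2 Gy or less. The linear-quadratic (LQ) model, a standard radiotherapy tool that the source does not itself invoke, shows why fraction size matters. Its biologically effective dose is BED = n·d·(1 + d/(α/β)). The sketch below compares the same 50 Gy total delivered in 2 Gy and in 5 Gy fractions. It assumes α/β = 2 Gy, a value commonly used for late-responding CNS tissue. LQ parameters come from low-LET radiotherapy and should not be carried over to high-LET or low-dose-rate space exposures. The function names are hypothetical.

```python
def bed_gy(n_fractions: int, dose_per_fraction_gy: float, alpha_beta_gy: float) -> float:
    """Biologically effective dose under the LQ model: BED = n d (1 + d / (alpha/beta))."""
    return n_fractions * dose_per_fraction_gy * (1.0 + dose_per_fraction_gy / alpha_beta_gy)


def eqd2_gy(n_fractions: int, dose_per_fraction_gy: float, alpha_beta_gy: float) -> float:
    """Total dose in 2 Gy fractions giving the same BED (equivalent dose in 2 Gy fractions)."""
    return bed_gy(n_fractions, dose_per_fraction_gy, alpha_beta_gy) / (1.0 + 2.0 / alpha_beta_gy)


ALPHA_BETA_CNS_GY = 2.0  # ASSUMED late-responding-tissue value

print(bed_gy(25, 2.0, ALPHA_BETA_CNS_GY))   # 50 Gy as 25 x 2 Gy
print(bed_gy(10, 5.0, ALPHA_BETA_CNS_GY))   # 50 Gy as 10 x 5 Gy
print(eqd2_gy(10, 5.0, ALPHA_BETA_CNS_GY))
```

Under the assumed α/β, 50 Gy in 5 Gy fractions is equivalent to about 87.5 Gy in 2 Gy fractions. The same total dose is therefore considerably more damaging to late-responding tissue when given in larger fractions.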
The tolerance dose in the CNS, as with other tissues, depends on the volume and the specific anatomical location in the human brain that is irradiated.

In recent years, studies with stem cells revealed that neurogenesis still occurs in the adult hippocampus, a region critical for memory and learning. This discovery provides a way to understand the mechanisms behind the CNS risk of space radiation. Accumulating data indicate that radiation affects not only differentiated neural cells but also the proliferation and differentiation of neuronal precursor cells and even adult stem cells. Recent evidence indicates that neuronal progenitor cells are sensitive to radiation. Studies on low-LET radiation show that radiation inhibits not only the generation of neuronal progenitor cells but also their differentiation into neurons and other neural cells. NCRP Report No. 153 notes that cells in the SGZ of the dentate gyrus undergo dose-dependent apoptosis above 2 Gy of X-ray irradiation. It also notes that the production of new neurons in young adult male mice is significantly reduced by relatively low (>2 Gy) doses of X rays. NCRP Report No. 153 also notes that: “These changes are observed to be dose dependent. In contrast there were no apparent effects on the production of new astrocytes or oligodendrocytes. Measurements of activated microglia indicated that changes in neurogenesis were associated with a significant dose-dependent inflammatory response even 2 months after irradiation. This suggests that the pathogenesis of long-recognized radiation-induced cognitive injury may involve loss of neural precursor cells from the SGZ of the hippocampal dentate gyrus and alterations in neurogenesis.”

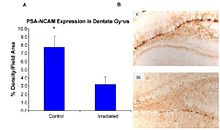

Recent studies provide evidence of the pathogenesis of HZE nuclei in the CNS. The authors of one of these studies were the first to suggest that HZE nuclei can cause neurodegeneration, as shown in figure 6-1(a). These studies demonstrate that HZE radiation led to the progressive, dose-dependent loss of neuronal progenitor cells in the SGZ at doses of 1 to 3 Gy. NCRP Report No. 153 notes that “Mice were irradiated with 1 to 3 Gy of 12C or 56Fe-ions and 9 months later proliferating cells and immature neurons in the dentate SGZ were quantified. The results showed that reductions in these cells were dependent on the dose and LET. Loss of precursor cells was also associated with altered neurogenesis and a robust inflammatory response, as shown in figures 6-1(a) and 6-1(b). These results indicate that high-LET radiation has a significant and long-lasting effect on the neurogenic population in the hippocampus that involves cell loss and changes in the microenvironment. The work has been confirmed by other studies. These investigators noted that these changes are consistent with those found in aged subjects, indicating that heavy-particle irradiation is a possible model for the study of aging.”

Figure 6-1(a). (Panel A) Expression of the polysialic acid form of neural cell adhesion molecule (PSA-NCAM) in the hippocampus of rats irradiated (IR) with 2.5 Gy of 56Fe high-energy radiation and of control subjects, measured as % density per field area. (Panel B) PSA-NCAM staining in the dentate gyrus of representative irradiated (IR) and control (C) subjects at 5x magnification.

Figure 6-1(b). Numbers of proliferating cells (left panel) and immature neurons (right panel) in the dentate SGZ are significantly decreased 48 hours after irradiation. Antibodies against Ki-67 and doublecortin (Dcx) were used to detect proliferating cells and immature neurons, respectively. Doses from 2 to 10 Gy significantly (p < 0.05) reduced the numbers of proliferating cells. Immature neurons were also reduced in a dose-dependent fashion (p < 0.001). Each bar represents the average of four animals; error bars represent the standard error.

Oxidative damage

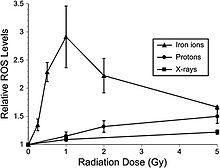

Recent studies indicate that adult rat neural precursor cells from the hippocampus show an acute, dose-dependent apoptotic response accompanied by an increase in ROS. Low-LET protons are also used in clinical proton beam radiation therapy, at an RBE of 1.1 relative to megavoltage X rays at high doses. NCRP Report No. 153 notes that: “Relative ROS levels were increased at nearly all doses (1 to 10 Gy) of Bragg-peak 250 MeV protons at post-irradiation times (6 to 24 hours) compared to unirradiated controls. The increase in ROS after proton irradiation was more rapid than that observed with X rays and showed a well-defined dose response at 6 and 24 hours, increasing about 10-fold over controls at a rate of 3% per Gy. However, by 48 hours post-irradiation, ROS levels fell below controls and coincided with minor reductions in mitochondrial content. Use of the antioxidant alpha-lipoic acid (before or after irradiation) was shown to eliminate the radiation-induced rise in ROS levels. These results corroborate the earlier studies using X rays and provide further evidence that elevated ROS are integral to the radioresponse of neural precursor cells.” Furthermore, at lower doses (≤1 Gy), high-LET radiation led to significantly higher levels of oxidative stress in hippocampal precursor cells than lower-LET radiations (X rays, protons) (figure 6-2). The antioxidant lipoic acid reduced ROS levels below background levels when added before or after 56Fe-ion irradiation. These results show that low doses of 56Fe-ions can elicit significant levels of oxidative stress in neural precursor cells.

Figure 6-2. Dose response for oxidative stress after 56Fe-ion irradiation. Hippocampal precursors subjected to 56Fe-ion irradiation were analyzed for oxidative stress 6 hours after exposure. At doses ≤1 Gy, a linear dose response for the induction of oxidative stress was observed. At higher 56Fe doses, oxidative stress fell to values that were found using lower-LET irradiations (X rays, protons). Experiments, which represent a minimum of three independent measurements (±SE), were normalized against unirradiated controls set to unity. ROS levels induced after 56Fe irradiation were significantly (P < 0.05) higher than controls.

Neuroinflammation

Neuroinflammation, a fundamental reaction to brain injury, is characterized by the activation of resident microglia and astrocytes and local expression of a wide range of inflammatory mediators. Acute and chronic neuroinflammation have been studied in the mouse brain following exposure to HZE nuclei. The acute effect of HZE nuclei is detectable at 6 and 9 Gy; no studies are available at lower doses. Myeloid cell recruitment appears by 6 months following exposure. The estimated RBE of HZE irradiation for induction of an acute neuroinflammatory response is 3 relative to gamma irradiation. COX-2 pathways are implicated in neuroinflammatory processes caused by low-LET radiation. COX-2 up-regulation in irradiated microglia leads to prostaglandin E2 production. This appears to be responsible for radiation-induced gliosis (overproliferation of astrocytes in damaged areas of the CNS).

Behavioral effects
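An RBE such as the value of 3 quoted for acute neuroinflammation is a ratio of doses. It is the reference-radiation (here gamma-ray) dose divided by the test-radiation dose that produces the same effect. The sketch below encodes that definition and its inverse use. The function names are hypothetical. Treating the RBE as a single constant is a simplification, since RBE generally varies with dose, dose rate, and endpoint.

```python
def rbe(reference_dose_gy: float, test_dose_gy: float) -> float:
    """RBE = reference (e.g., gamma-ray) dose / test-radiation dose producing the same effect."""
    if reference_dose_gy <= 0 or test_dose_gy <= 0:
        raise ValueError("doses must be positive")
    return reference_dose_gy / test_dose_gy


def isoeffective_reference_dose(test_dose_gy: float, rbe_value: float) -> float:
    """Gamma-ray dose expected to match a test dose, IF the RBE were dose-independent."""
    return test_dose_gy * rbe_value


# Using the RBE of 3 quoted for acute neuroinflammation
for hze_dose in (6.0, 9.0):
    print(f"{hze_dose} Gy HZE ~ {isoeffective_reference_dose(hze_dose, 3.0)} Gy gamma")
```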

Because behavioral effects are difficult to quantify, they are one of the most uncertain of the space radiation risks. NCRP Report No. 153 notes that: “The behavioral neurosciences literature is replete with examples of major differences in behavioral outcome depending on the animal species, strain, or measurement method used. For example, compared to unirradiated controls, X-irradiated mice show hippocampal-dependent spatial learning and memory impairments in the Barnes maze, but not in the Morris water maze which, however, can be used to demonstrate deficits in rats. Particle radiation studies of behavior have been accomplished with rats and mice, but with some differences in the outcome depending on the endpoint measured.”

The following studies provide evidence that space radiation affects the CNS behavior of animals in a somewhat dose- and LET-dependent manner.

Sensorimotor effects

Sensorimotor deficits and neurochemical changes were observed in rats that were exposed to low doses of 56Fe-ions. Doses below 1 Gy reduce performance, as tested by the wire suspension test. Behavioral changes were observed as early as 3 days after radiation exposure and lasted up to 8 months. Biochemical studies showed that the K+-evoked release of dopamine was significantly reduced in the irradiated group, together with an alteration of the nerve signaling pathways. Pecaut et al. reported a negative result. They saw no behavioral effects in female C57BL/6 mice over a 2- to 8-week period following exposure to 0, 0.1, 0.5, or 2 Gy of accelerated 56Fe-ions (1 GeV/u 56Fe). Behavior was measured by open-field, rotarod, or acoustic startle habituation tests.

Radiation-induced changes in conditioned taste aversion

There is evidence that deficits in conditioned taste aversion (CTA) are induced by low doses of heavy ions. The CTA test is a classical conditioning paradigm that assesses the avoidance behavior that occurs when the ingestion of a normally acceptable food item is associated with illness. It is considered a standard behavioral test of drug toxicity. NCRP Report No. 153 notes that: “The role of the dopaminergic system in radiation-induced changes in CTA is suggested by the fact that amphetamine-induced CTA, which depends on the dopaminergic system, is affected by radiation, whereas lithium chloride-induced CTA, which does not involve the dopaminergic system, is not affected by radiation. It was established that the degree of CTA due to radiation is LET-dependent ([figure 6-3]) and that 56Fe-ions are the most effective of the various low and high LET radiation types that have been tested. Doses as low as ~0.2 Gy of 56Fe-ions appear to have an effect on CTA.”

The RBE of different types of heavy particles on CNS function and cognitive/behavioral performance was studied in Sprague-Dawley rats. The thresholds for HZE particle-induced disruption of amphetamine-induced CTA learning are shown in figure 6-4, and those for the disruption of operant responding are shown in figure 6-5. These figures show a similar pattern of responsiveness to the disruptive effects of exposure to either 56Fe or 28Si particles on both CTA learning and operant responding. These results suggest that the RBE of different particles for neurobehavioral dysfunction cannot be predicted solely on the basis of the LET of the specific particle.

Figure 6-3. ED50 for CTA as a function of LET for the following radiation sources: 40Ar = argon ions, 60Co = cobalt-60 gamma rays, e− = electrons, 56Fe = iron ions, 4He = helium ions, n0 = neutrons, 20Ne = neon ions.

Figure 6-4. Radiation-induced disruption in CTA. This figure shows the relationship between exposure to different energies of 56Fe and 28Si particles and the threshold dose for the disruption of amphetamine-induced CTA learning. Only a single energy of 48Ti particles was tested. The threshold dose (cGy) for the disruption of the response is plotted against particle LET (keV/μm).

Figure 6-5. High-LET radiation effects on operant response. This figure shows the relationship between exposure to different energies of 56Fe and 28Si particles and the threshold dose for the disruption of performance on a food-reinforced operant response. Only a single energy of 48Ti particles was tested. The threshold dose (cGy) for the disruption of the response is plotted against particle LET (keV/μm).

Radiation effects on operant conditioning

Operant conditioning uses the consequences of a voluntary behavior, such as food reinforcement, to modify that behavior. Recent studies by Rabin et al. have examined the ability of rats to perform an operant response to obtain food reinforcement on an ascending fixed-ratio (FR) schedule. They found that 56Fe-ion doses above 2 Gy affect the appropriate responses of rats to increasing work requirements. NCRP Report No. 153 notes that "The disruption of operant response in rats was tested 5 and 8 months after exposure, but maintaining the rats on a diet containing strawberry, but not blueberry, extract were shown to prevent the disruption. When tested 13 and 18 months after irradiation, there were no differences in performance between the irradiated rats maintained on control, strawberry or blueberry diets. These observations suggest that the beneficial effects of antioxidant diets may be age dependent."

Spatial learning and memory
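Under a fixed-ratio schedule FR-n, one food reinforcer is delivered for every n completed responses. An ascending schedule raises n from session to session, so the work required per reinforcer keeps increasing. The sketch below computes reinforcers earned per session. The ratio sequence and response counts are hypothetical and are not the values used by Rabin et al. The function name is ours.

```python
def session_reinforcers(responses_per_session, ratios):
    """Reinforcers earned in each session of a fixed-ratio (FR) schedule.

    Under FR-n, one reinforcer is delivered for every n completed responses;
    an ascending schedule raises n from one session to the next.
    """
    if len(responses_per_session) != len(ratios):
        raise ValueError("need one ratio per session")
    if any(n < 1 for n in ratios):
        raise ValueError("ratios must be at least 1")
    return [r // n for r, n in zip(responses_per_session, ratios)]


ASCENDING_FR = [1, 2, 4, 8, 16]  # hypothetical ratio sequence
responses = [50, 60, 80, 100, 120]  # hypothetical responses per session
print(session_reinforcers(responses, ASCENDING_FR))
```

An animal that keeps up with the rising ratio increases its response count across sessions. The disruption reported above would appear as a failure to scale responding to the increasing work requirement.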

The effects of exposure to HZE nuclei on spatial learning, memory behavior, and neuronal signaling have been tested, and threshold doses have also been considered for such effects. Understanding the mechanisms involved in these deficits will be important for extrapolating the results to other dose regimes, particle types, and, eventually, astronauts. Studies on rats were performed using the Morris water maze test 1 month after whole-body irradiation with 1.5 Gy of 1 GeV/u 56Fe-ions. Irradiated rats demonstrated cognitive impairment similar to that seen in aged rats. This raises the possibility that an increase in the amount of ROS may be responsible for the induction of both radiation- and age-related cognitive deficits.

NCRP Report No. 153 notes that: “Denisova et al. exposed rats to 1.5 Gy of 1 GeV/u 56Fe-ions and tested their spatial memory in an eight-arm radial maze. Radiation exposure impaired the rats’ cognitive behavior, since they committed more errors than control rats in the radial maze and were unable to adopt a spatial strategy to solve the maze. To determine whether these findings related to brain-region specific alterations in sensitivity to oxidative stress, inflammation or neuronal plasticity, three regions of the brain, the striatum, hippocampus and frontal cortex that are linked to behavior, were isolated and compared to controls. Those that were irradiated were adversely affected as reflected through the levels of dichlorofluorescein, heat shock, and synaptic proteins (for example, synaptobrevin and synaptophysin). Changes in these factors consequently altered cellular signaling (for example, calcium-dependent protein kinase C and protein kinase A). These changes in brain responses significantly correlated with working memory errors in the radial maze. The results show differential brain-region-specific sensitivity induced by 56Fe irradiation ([figure 6-6]).
These findings are similar to those seen in aged rats, suggesting that increased oxidative stress and inflammation may be responsible for the induction of both radiation and age-related cognitive deficits.”

Figure 6-6. Brain-region-specific calcium-dependent protein kinase C expression was assessed in control and irradiated rats using standard Western blotting procedures. Values are means ± SEM (standard error of the mean).
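The error bars in figure 6-6 use the standard error of the mean (SEM), which is the sample standard deviation divided by the square root of the number of measurements. A minimal Python sketch of that calculation follows; the readings are hypothetical values chosen for illustration, not data from the study.

```python
import math
from statistics import mean, stdev


def mean_sem(values):
    """Return (mean, SEM), where SEM = sample standard deviation / sqrt(n)."""
    if len(values) < 2:
        raise ValueError("SEM needs at least two measurements")
    return mean(values), stdev(values) / math.sqrt(len(values))


# Hypothetical densitometry readings (arbitrary units) for one brain region;
# illustrative numbers only, not results from the irradiated or control rats.
control = [1.00, 0.92, 1.08, 0.97, 1.03]
m, sem = mean_sem(control)
print(f"{m:.3f} ± {sem:.3f}")  # prints 1.000 ± 0.027
```

Because SEM shrinks with the square root of the sample size, it describes the precision of the group mean rather than the spread between individual animals.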

Acute central nervous system risks

In addition to the possible in-flight changes in performance and motor skills described above, the immediate CNS effects (i.e., within 24 hours of exposure to low-LET radiation) include anorexia, nausea, and emesis. These prodromal effects are dose-dependent and can therefore serve as an indicator of the exposure dose. Estimates are ED50 = 1.08 Gy for anorexia, ED50 = 1.58 Gy for nausea, and ED50 = 2.40 Gy for emesis, where ED50 is the dose that produces the effect in 50% of exposed individuals. The relative effectiveness of different radiation types in producing emesis was studied in ferrets and is illustrated in figure 6-7. At doses below 0.5 Gy, high-LET radiation shows a greater relative biological effectiveness (RBE) than low-LET radiation. The acute effects on the CNS, which are associated with increases in cytokines and chemokines, may disrupt the proliferation of stem cells or cause memory loss, which may contribute to other degenerative diseases.
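Each ED50 can be read as the midpoint of a sigmoid dose-response curve. The sketch below assumes a probit (log-normal) curve shape and a hypothetical log-dose spread `SIGMA_LN`; neither is given in the text, which supplies only the three ED50 values. It shows how the prodromal endpoints would be ordered by dose under those assumptions.

```python
import math

# ED50 values (Gy, low-LET radiation) quoted in the text.
ED50_GY = {"anorexia": 1.08, "nausea": 1.58, "emesis": 2.40}

# ASSUMPTION: log-normal (probit) curve shape with a hypothetical log-dose
# spread; the text gives only the ED50 values, not the slopes of the curves.
SIGMA_LN = 0.4


def response_probability(dose_gy, ed50_gy, sigma_ln=SIGMA_LN):
    """P(response) = Phi(ln(dose / ED50) / sigma), Phi = standard normal CDF."""
    if dose_gy <= 0:
        return 0.0
    z = math.log(dose_gy / ed50_gy) / sigma_ln
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


for dose in (0.5, 1.0, 2.0, 3.0):
    probs = {name: round(response_probability(dose, ed50), 2)
             for name, ed50 in ED50_GY.items()}
    print(f"{dose:.1f} Gy: {probs}")
```

Because the three curves share the same assumed spread, anorexia is always at least as likely as nausea, and nausea at least as likely as emesis, at any dose. That ordering is what lets the combination of symptoms act as a rough dose indicator; a real dosimetric use would need fitted slopes for each endpoint.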

Figure 6-7. LET dependence of the RBE of radiation in producing emesis or retching in ferrets. B = bremsstrahlung; e− = electrons; P = protons; 60Co = cobalt-60 gamma rays; n0 = neutrons; and 56Fe = iron ions.
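RBE compares doses that produce the same biological effect. A minimal sketch of the standard iso-effect definition follows. It assumes 60Co gamma rays as the reference radiation, which the caption does not state, and uses hypothetical dose values rather than numbers read from figure 6-7.

```python
def rbe(reference_dose_gy, test_dose_gy):
    """Iso-effect RBE: dose of the reference radiation divided by the dose of
    the test radiation that produces the same biological effect."""
    if reference_dose_gy <= 0 or test_dose_gy <= 0:
        raise ValueError("doses must be positive")
    return reference_dose_gy / test_dose_gy


# Hypothetical iso-effect doses for one endpoint (e.g. 50% incidence of emesis);
# illustrative numbers only, not values read from figure 6-7.
gamma_dose = 1.0  # Gy of 60Co gamma rays (assumed reference radiation)
iron_dose = 0.25  # Gy of 56Fe ions producing the same effect
print(rbe(gamma_dose, iron_dose))  # prints 4.0
```

An RBE value applies only to the endpoint, effect level, and dose at which it was measured, which is why the figure plots it against LET rather than quoting a single number.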

Computer models and systems biology analysis of central nervous system risks

Because human epidemiological and experimental data on CNS risks from space radiation are limited, mammalian models are essential tools for understanding the uncertainties in human risk. Cellular, tissue, and genetic animal models have been used in biological studies of the CNS with simulated space radiation. New technologies, such as three-dimensional cell cultures, microarrays, proteomics, and brain imaging, are used in systematic studies of CNS risks from different radiation types. Based on such biological data, mathematical models can be used to estimate the risks from space radiation.

Systems biology approaches to Alzheimer’s disease that consider the biochemical pathways important in CNS disease evolution have been developed in research funded outside NASA. Figure 6-8 shows a schematic of the biochemical pathways that are important in the development of Alzheimer’s disease. Describing how space radiation interacts with these pathways would be one approach to developing predictive models of space radiation risk. For example, if the pathways studied in animal models could be correlated with studies of humans suffering from Alzheimer’s disease, an approach that describes risk in terms of biochemical degrees of freedom could be pursued. Edelstein-Keshet and Spiros have developed an in silico model of the senile plaques associated with Alzheimer’s disease. In this model, the biochemical interactions among TNF, IL-1β, and IL-6 are described within several important cell populations, including astrocytes, microglia, and neurons. In addition, soluble amyloid in this model causes microglial chemotaxis and activates IL-1β secretion. Figure 6-9 shows the results of the Edelstein-Keshet and Spiros model simulating plaque formation and neuronal death. Establishing links between space radiation-induced changes and the changes described in this approach could lead to an in silico model of Alzheimer’s disease resulting from space radiation.
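To make the structure of such a spatial model concrete, the following is a toy one-dimensional reaction-diffusion sketch in the spirit of the glia-free scenario of figure 6-9. It is not the Edelstein-Keshet and Spiros model, which tracks TNF, IL-1β, IL-6, and several cell populations. The grid, every rate constant, and the `clearance` term (a crude stand-in for microglial removal of amyloid) are hypothetical assumptions chosen only for illustration.

```python
def simulate_plaque(n=41, steps=200, dt=0.2, diff=1.0, k_fib=0.05,
                    k_death=0.05, source=1.0, clearance=0.0):
    """Toy 1-D reaction-diffusion sketch (hypothetical rates, grid spacing 1).

    s: soluble amyloid, released at the centre cell and diffusing;
    f: fibrous amyloid, formed from s at rate k_fib;
    h: neuronal health (1 = healthy, 0 = dead), decaying with local f;
    clearance: crude first-order removal of s and f standing in for microglia.
    """
    r = diff * dt  # D*dt/dx^2 with dx = 1
    # Keep every update coefficient non-negative (stable, positivity-preserving).
    if 2 * r + (k_fib + clearance) * dt > 1:
        raise ValueError("time step too large for explicit Euler")
    s, f, h = [0.0] * n, [0.0] * n, [1.0] * n
    centre = n // 2
    for _ in range(steps):
        new_s = []
        for i in range(n):
            # Mirror the neighbour at each edge, i.e. a zero-flux boundary.
            left = s[i - 1] if i > 0 else s[i + 1]
            right = s[i + 1] if i < n - 1 else s[i - 1]
            ds = r * (left - 2.0 * s[i] + right) - (k_fib + clearance) * s[i] * dt
            if i == centre:
                ds += source * dt
            new_s.append(s[i] + ds)
        for i in range(n):
            f[i] += (k_fib * s[i] - clearance * f[i]) * dt
            h[i] *= max(0.0, 1.0 - k_death * f[i] * dt)
        s = new_s
    return s, f, h


base_s, base_f, base_h = simulate_plaque()
_, _, cleared_h = simulate_plaque(clearance=0.05)
centre = len(base_h) // 2
# Health is lowest at the centre and falls off symmetrically (simple diffusion);
# adding clearance leaves the central neurons healthier.
print(round(base_h[centre], 3), round(base_h[0], 3), round(cleared_h[centre], 3))
```

Explicit Euler keeps the sketch short; the guard on the time step ensures every update coefficient stays non-negative, so concentrations never go negative and the symmetric profile produced by pure diffusion is preserved.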

Figure 6-8. Molecular pathways important in Alzheimer’s disease. From Kyoto Encyclopedia of Genes and Genomes. Copyrighted image located at http://www.genome.jp/kegg/pathway/hsa/hsa05010.html

Figure 6-9. Model of plaque formation and neuronal death in Alzheimer's disease.

From Edelstein-Keshet and Spiros, 2002. Top row: Formation of a plaque and death of neurons in the absence of glial cells, when fibrous amyloid is the only injurious influence. The simulation was run with no astrocytes or microglia, and the health of neurons was determined solely by the local fibrous amyloid. Shown above is a time sequence (left to right) of three stages in plaque development: early, intermediate, and advanced. Density of fibrous deposit is represented by small dots and neuronal health by shading from white (healthy) to black (dead). Note the radial symmetry due to simple diffusion.

Bottom row: Effect of microglial removal of amyloid on plaque morphology. Note that microglia (small star-like shapes) are seen approaching the plaque (via chemotaxis to soluble amyloid, not shown). At a later stage, they have congregated at the plaque center, where they adhere to fibers. As a result of the removal of soluble and fibrous amyloid, the microglia lead to irregular plaque morphology.

Size scale: in this figure, the distance between the small single dots (representing low-fiber deposits) is 10 μm. Similar results were obtained for a 10-fold scaling in the time scale of neuronal health dynamics.

Risks in the context of exploration mission operational scenarios

Projections for space missions

Reliable projections of CNS risks for space missions cannot be made from the available data. Animal behavior studies indicate that HZE radiation has a high RBE, but the data are inconsistent. Other uncertainties include age at exposure, radiation quality, dose-rate effects, and genetic susceptibility to CNS risk from space radiation exposure. More research is required before CNS risk can be estimated.

Potential for biological countermeasures

The goal of space radiation research is to estimate and reduce uncertainties in risk projection models and, if necessary, to develop countermeasures and technologies to monitor and treat adverse outcomes to human health and performance that are relevant to space radiation for short-term and career exposures, including acute or late CNS effects from radiation exposure. Whether countermeasures to CNS risks need to be developed depends on a better understanding of those risks, especially whether a dose threshold exists and, if so, which NASA missions would likely exceed it. Based on animal studies, antioxidant and anti-inflammatory agents are expected to be effective countermeasures for CNS risks from space radiation. Diets supplemented with blueberries or strawberries were shown to reduce CNS effects after heavy-ion exposure. Estimating the effects of diet and nutritional supplementation will be a primary goal of CNS countermeasure research.

A diet rich in fruit and vegetables significantly reduces the risk of several diseases. Retinoids and vitamins A, C, and E are probably the best-known and most-studied natural radioprotectors, but hormones (e.g., melatonin), glutathione, superoxide dismutase, phytochemicals from plant extracts (including green tea and cruciferous vegetables), and metals (especially selenium, zinc, and copper salts) are also under study as dietary supplements for individuals, including astronauts, who have been overexposed to radiation. Antioxidants should provide reduced or no protection against the initial damage from densely ionizing radiation such as HZE nuclei, because at high LET the direct effect is more important than free-radical-mediated indirect damage. However, some benefit is expected against persistent oxidative damage related to inflammation and immune responses.
Some recent experiments suggest that, at least for acute high-dose irradiation, efficient radioprotection by dietary supplements can be achieved, even for high-LET radiation. Although there is evidence that dietary antioxidants (especially strawberries) can protect the CNS from the deleterious effects of high doses of HZE particles, the mechanisms of biological effects at low dose rates differ from those of acute irradiation, so new studies of protracted exposures will be needed to understand the potential benefits of biological countermeasures.

Concern about the potential detrimental effects of antioxidants was raised by a recent meta-analysis of antioxidant supplements in the diets of normal subjects. The authors of this study did not find statistically significant evidence that antioxidant supplements have beneficial effects on mortality. On the contrary, they concluded that β-carotene, vitamin A, and vitamin E seem to increase the risk of death. One concern is that antioxidants may rescue cells that still carry DNA mutations or altered genomic methylation patterns after radiation damage to DNA, which can result in genomic instability. An approach that instead targets damaged cells for apoptosis may therefore be advantageous for chronic exposure to GCR.

$$r_{p}=\left|\left[e_{p}-\sum_{j=1,\,j\neq p}^{n}k_{pj}\left|r_{j}-r_{pj}^{o}\right|\right]\right|$$