Prediction can be further distinguished from earthquake warning systems, which, upon detection of an earthquake, provide a real-time warning of seconds to neighboring regions that might be affected.

In the 1970s, scientists were optimistic that a practical method for predicting earthquakes would soon be found, but by the 1990s continuing failure led many to question whether it was even possible. Demonstrably successful predictions of large earthquakes have not occurred, and the few claims of success are controversial. For example, the most famous claim of a successful prediction is that alleged for the 1975 Haicheng earthquake. A later study said that there was no valid short-term prediction. Extensive searches have reported many possible earthquake precursors, but, so far, such precursors have not been reliably identified across significant spatial and temporal scales. While part of the scientific community holds that, taking into account non-seismic precursors and given enough resources to study them extensively, prediction might be possible, most scientists are pessimistic and some maintain that earthquake prediction is inherently impossible.

Evaluating earthquake predictions

Predictions are deemed significant if they can be shown to be successful beyond random chance. Therefore, methods of statistical hypothesis testing are used to determine the probability that an earthquake such as is predicted would happen anyway (the null hypothesis). The predictions are then evaluated by testing whether they correlate with actual earthquakes better than the null hypothesis.
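As a minimal sketch of such a test, suppose the null hypothesis is a homogeneous Poisson process with a known background rate. This is an assumption made only for illustration, and the function names and numbers below are hypothetical rather than taken from any published evaluation.

```python
import math

def prob_event_by_chance(rate_per_year: float, window_years: float) -> float:
    """P(at least one qualifying earthquake in the alarm window) under a Poisson null."""
    return 1.0 - math.exp(-rate_per_year * window_years)

def binomial_tail(hits: int, alarms: int, p_chance: float) -> float:
    """P(at least `hits` successes in `alarms` independent alarms by chance alone)."""
    return sum(
        math.comb(alarms, k) * p_chance**k * (1.0 - p_chance) ** (alarms - k)
        for k in range(hits, alarms + 1)
    )

# Hypothetical inputs: background rate of 0.1 events per year, one-year alarms,
# and a method that scored 3 hits out of 10 alarms.
p0 = prob_event_by_chance(0.1, 1.0)
p_value = binomial_tail(hits=3, alarms=10, p_chance=p0)
print(f"chance of success per alarm: {p0:.3f}")
print(f"probability of 3 or more hits by chance: {p_value:.3f}")
```

A small tail probability would suggest the method does better than the assumed null, but only to the extent that the null itself is a fair description of background seismicity.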

In many instances, however, the statistical nature of earthquake occurrence is not simply homogeneous. Clustering occurs in both space and time. In southern California about 6% of M≥3.0 earthquakes are "followed by an earthquake of larger magnitude within 5 days and 10 km." In central Italy 9.5% of M≥3.0 earthquakes are followed by a larger event within 48 hours and 30 km. While such statistics are not satisfactory for purposes of prediction (giving ten to twenty false alarms for each successful prediction) they will skew the results of any analysis that assumes that earthquakes occur randomly in time, for example, as realized from a Poisson process. It has been shown that a "naive" method based solely on clustering can successfully predict about 5% of earthquakes; "far better than 'chance'".
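The "ten to twenty false alarms" figure follows directly from the quoted percentages. The sketch below assumes a naive rule in which every M≥3.0 earthquake triggers an alarm for a larger event nearby; the function name is illustrative.

```python
def false_alarms_per_hit(hit_fraction: float) -> float:
    """Number of failed alarms for each successful one, given the fraction that succeed."""
    return (1.0 - hit_fraction) / hit_fraction

# Fractions quoted in the text for M>=3.0 events followed by a larger one
for region, fraction in [("southern California", 0.06), ("central Italy", 0.095)]:
    ratio = false_alarms_per_hit(fraction)
    print(f"{region}: about {ratio:.1f} false alarms per successful alarm")
```

The two results, roughly 16 and roughly 10, bracket the range given above.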

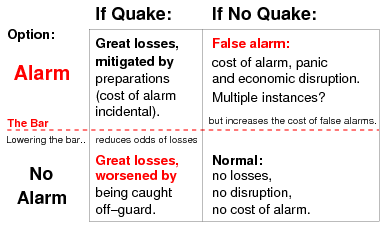

As the purpose of short-term prediction is to enable emergency measures to reduce death and destruction, failure to give warning of a major earthquake that does occur, or at least an adequate evaluation of the hazard, can result in legal liability, or even political purging. For example, it has been reported that members of the Chinese Academy of Sciences were purged for "having ignored scientific predictions of the disastrous Tangshan earthquake of summer 1976." Following the 2009 L'Aquila earthquake, seven scientists and technicians in Italy were convicted of manslaughter, but not so much for failing to predict the earthquake, where some 300 people died, as for giving undue assurance to the populace – one victim called it "anaesthetizing" – that there would not be a serious earthquake, and therefore no need to take precautions. But warning of an earthquake that does not occur also incurs a cost: not only the cost of the emergency measures themselves, but of civil and economic disruption. False alarms, including alarms that are canceled, also undermine the credibility, and thereby the effectiveness, of future warnings. In 1999 it was reported that China was introducing "tough regulations intended to stamp out 'false' earthquake warnings, in order to prevent panic and mass evacuation of cities triggered by forecasts of major tremors." This was prompted by "more than 30 unofficial earthquake warnings ... in the past three years, none of which has been accurate." The acceptable trade-off between missed quakes and false alarms depends on the societal valuation of these outcomes. The rate of occurrence of both must be considered when evaluating any prediction method.

In a 1997 study of the cost-benefit ratio of earthquake prediction research in Greece, Stathis Stiros suggested that even a (hypothetical) excellent prediction method would be of questionable social utility, because "organized evacuation of urban centers is unlikely to be successfully accomplished", while "panic and other undesirable side-effects can also be anticipated." He found that earthquakes kill fewer than ten people per year in Greece (on average), and that most of those fatalities occurred in large buildings with identifiable structural issues. Therefore, Stiros stated that it would be much more cost-effective to focus efforts on identifying and upgrading unsafe buildings. Since the death toll on Greek highways is more than 2300 per year on average, he argued that more lives would also be saved if Greece's entire budget for earthquake prediction were used for street and highway safety instead.

Prediction methods

Earthquake prediction is an immature science – it has not yet led to a successful prediction of an earthquake from first physical principles. Research into methods of prediction therefore focuses on empirical analysis, with two general approaches: either identifying distinctive precursors to earthquakes, or identifying some kind of geophysical trend or pattern in seismicity that might precede a large earthquake. Precursor methods are pursued largely because of their potential utility for short-term earthquake prediction or forecasting, while 'trend' methods are generally thought to be useful for forecasting, long-term prediction (on a time scale of 10 to 100 years) or intermediate-term prediction (on a time scale of 1 to 10 years).

Precursors

An earthquake precursor is an anomalous phenomenon that might give effective warning of an impending earthquake. Reports of these – though generally recognized as such only after the event – number in the thousands, some dating back to antiquity. There have been around 400 reports of possible precursors in scientific literature, of roughly twenty different types, running the gamut from aeronomy to zoology. None have been found to be reliable for the purposes of earthquake prediction.

In the early 1990s, the IASPEI solicited nominations for a Preliminary List of Significant Precursors. Forty nominations were made, of which five were selected as possible significant precursors, with two of those based on a single observation each.

After a critical review of the scientific literature, the International Commission on Earthquake Forecasting for Civil Protection (ICEF) concluded in 2011 there was "considerable room for methodological improvements in this type of research." In particular, many cases of reported precursors are contradictory, lack a measure of amplitude, or are generally unsuitable for a rigorous statistical evaluation. Published results are biased towards positive results, and so the rate of false negatives (earthquake but no precursory signal) is unclear.

Animal behavior

After an earthquake has already begun, pressure waves (P waves) travel roughly twice as fast as the more damaging shear waves (S waves). Humans typically do not notice the smaller vibrations that arrive a few to a few dozen seconds before the main shaking, but some animals may notice them and become alarmed or exhibit other unusual behavior. Seismometers can also detect P waves, and the timing difference is exploited by electronic earthquake warning systems to provide humans with a few seconds to move to a safer location.
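The size of this timing difference can be estimated from the two wave speeds. The sketch below assumes straight-line travel at constant speed and uses illustrative speeds of 6 km/s for P waves and 3 km/s for S waves (chosen to match the factor of two mentioned above; real crustal values vary). It ignores the time a warning system needs to detect the P wave and issue an alert.

```python
def p_to_s_lead_time(distance_km: float, vp_km_s: float = 6.0, vs_km_s: float = 3.0) -> float:
    """Seconds between P-wave arrival and S-wave arrival at a given distance."""
    if vp_km_s <= vs_km_s:
        raise ValueError("P waves are expected to be faster than S waves")
    return distance_km / vs_km_s - distance_km / vp_km_s

# Assumed distances from the source, in kilometers
for distance in (20.0, 60.0, 150.0):
    print(f"{distance:>5.0f} km: about {p_to_s_lead_time(distance):.1f} s between P and S arrival")
```

Under these assumptions the gap grows linearly with distance, which is why locations closest to the source get the least warning.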

A review of scientific studies available as of 2018 covering over 130 species found insufficient evidence to show that animals could provide warning of earthquakes hours, days, or weeks in advance. Statistical correlations suggest some reported unusual animal behavior is due to smaller earthquakes (foreshocks) that sometimes precede a large quake, which if small enough may go unnoticed by people. Foreshocks may also cause groundwater changes or release gases that can be detected by animals. Foreshocks are also detected by seismometers, and have long been studied as potential predictors, but without success (see Seismicity patterns). Seismologists have not found evidence of medium-term physical or chemical changes that predict earthquakes which animals might be sensing.

Anecdotal reports of strange animal behavior before earthquakes have been recorded for thousands of years. Some unusual animal behavior may be mistakenly attributed to a near-future earthquake. The flashbulb memory effect causes unremarkable details to become more memorable and more significant when associated with an emotionally powerful event such as an earthquake. Even the vast majority of scientific reports in the 2018 review did not include observations showing that animals did not act unusually when there was not an earthquake about to happen, meaning the behavior was not established to be predictive.

Most researchers investigating animal prediction of earthquakes are in China and Japan. Most scientific observations have come from the 2010 Canterbury earthquake in New Zealand, the 1984 Nagano earthquake in Japan, and the 2009 L'Aquila earthquake in Italy.

Animals known to be magnetoreceptive might be able to detect electromagnetic waves in the ultra low frequency and extremely low frequency ranges that reach the surface of the Earth before an earthquake, causing odd behavior. These electromagnetic waves could also cause air ionization, water oxidation and possible water toxification which other animals could detect.

Dilatancy–diffusion

In the 1970s the dilatancy–diffusion hypothesis was highly regarded as providing a physical basis for various phenomena seen as possible earthquake precursors. It was based on "solid and repeatable evidence" from laboratory experiments that highly stressed crystalline rock experienced a change in volume, or dilatancy, which causes changes in other characteristics, such as seismic velocity and electrical resistivity, and even large-scale uplifts of topography. It was believed this happened in a 'preparatory phase' just prior to the earthquake, and that suitable monitoring could therefore warn of an impending quake.

Detection of variations in the relative velocities of the primary and secondary seismic waves – expressed as Vp/Vs – as they passed through a certain zone was the basis for predicting the 1973 Blue Mountain Lake (NY) and 1974 Riverside (CA) quakes. Although these predictions were informal and even trivial, their apparent success was seen as confirmation of both dilatancy and the existence of a preparatory process, leading to what were subsequently called "wildly over-optimistic statements" that successful earthquake prediction "appears to be on the verge of practical reality."

However, many studies questioned these results, and the hypothesis eventually languished. Subsequent study showed it "failed for several reasons, largely associated with the validity of the assumptions on which it was based", including the assumption that laboratory results can be scaled up to the real world. Another factor was the bias of retrospective selection of criteria. Other studies have shown dilatancy to be so negligible that Main et al. 2012 concluded: "The concept of a large-scale 'preparation zone' indicating the likely magnitude of a future event, remains as ethereal as the ether that went undetected in the Michelson–Morley experiment."

Changes in Vp/Vs

Vp is the symbol for the velocity of a seismic "P" (primary or pressure) wave passing through rock, while Vs is the symbol for the velocity of the "S" (secondary or shear) wave. Small-scale laboratory experiments have shown that the ratio of these two velocities – represented as Vp/Vs – changes when rock is near the point of fracturing. In the 1970s it was considered a likely breakthrough when Russian seismologists reported observing such changes (later discounted) in the region of a subsequent earthquake. This effect, as well as other possible precursors, has been attributed to dilatancy, where rock stressed to near its breaking point expands (dilates) slightly.
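For waves that follow the same path from a source with a known origin time, the ratio can be estimated from travel times alone, since both waves cover the same distance. The sketch below rests on that simplifying assumption; the function names and the sample travel times are hypothetical.

```python
def vp_vs_ratio(p_travel_time_s: float, s_travel_time_s: float) -> float:
    """Vp/Vs for a shared path: (d / tp) / (d / ts) simplifies to ts / tp."""
    if p_travel_time_s <= 0 or s_travel_time_s <= 0:
        raise ValueError("travel times must be positive")
    return s_travel_time_s / p_travel_time_s

def percent_change(ratio: float, baseline: float) -> float:
    """Change of a measured ratio relative to a baseline, in percent."""
    return 100.0 * (ratio - baseline) / baseline

# Hypothetical travel times (seconds) for repeated sources along one path
baseline = vp_vs_ratio(10.0, 17.5)
later = vp_vs_ratio(10.0, 16.8)
print(f"baseline Vp/Vs = {baseline:.2f}, later Vp/Vs = {later:.2f}")
print(f"change = {percent_change(later, baseline):.1f}%")
```

Repeatable sources matter here because errors in origin time or location translate directly into apparent changes in the ratio.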

Study of this phenomenon near Blue Mountain Lake in New York State led to a successful albeit informal prediction in 1973, and it was credited for predicting the 1974 Riverside (CA) quake. However, additional successes have not followed, and it has been suggested that these predictions were a fluke. A Vp/Vs anomaly was the basis of a 1976 prediction of a M 5.5 to 6.5 earthquake near Los Angeles, which failed to occur. Other studies relying on quarry blasts (more precise, and repeatable) found no such variations, while an analysis of two earthquakes in California found that the variations reported were more likely caused by other factors, including retrospective selection of data. Geller (1997) noted that reports of significant velocity changes have ceased since about 1980.

Radon emissions

Most rock contains small amounts of gases that can be isotopically distinguished from the normal atmospheric gases. There are reports of spikes in the concentrations of such gases prior to a major earthquake; this has been attributed to release due to pre-seismic stress or fracturing of the rock. One of these gases is radon, produced by radioactive decay of the trace amounts of uranium present in most rock. Radon is potentially useful as an earthquake predictor because it is radioactive and thus easily detected, and its short half-life (3.8 days) makes radon levels sensitive to short-term fluctuations.
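The effect of the short half-life can be made concrete with the standard exponential decay law. The sketch below assumes a fixed amount of radon with no fresh supply, and uses the 3.8-day half-life quoted above.

```python
RADON_HALF_LIFE_DAYS = 3.8  # value quoted in the text

def fraction_remaining(elapsed_days: float, half_life_days: float = RADON_HALF_LIFE_DAYS) -> float:
    """Fraction of an initial amount left after the elapsed time, with no resupply."""
    return 0.5 ** (elapsed_days / half_life_days)

for days in (1.0, 3.8, 7.6, 30.0):
    print(f"after {days:>4.1f} days: {100.0 * fraction_remaining(days):.1f}% remains")
```

Because so little of any single release survives for a month, a measured level mostly reflects emission over the preceding days.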

A 2009 compilation listed 125 reports of changes in radon emissions prior to 86 earthquakes since 1966. The International Commission on Earthquake Forecasting for Civil Protection (ICEF) however found in its 2011 critical review that the earthquakes with which these changes are supposedly linked were up to a thousand kilometers away, months later, and at all magnitudes. In some cases the anomalies were observed at a distant site, but not at closer sites. The ICEF found "no significant correlation".

Electromagnetic anomalies

Observations of electromagnetic disturbances and their attribution to the earthquake failure process go back as far as the Great Lisbon earthquake of 1755, but practically all such observations prior to the mid-1960s are invalid because the instruments used were sensitive to physical movement. Since then various anomalous electrical, electric-resistive, and magnetic phenomena have been attributed to precursory stress and strain changes that precede earthquakes, raising hopes for finding a reliable earthquake precursor. While a handful of researchers have gained much attention with either theories of how such phenomena might be generated or claims of having observed such phenomena prior to an earthquake, no such phenomenon has been shown to be an actual precursor.

A 2011 review by the International Commission on Earthquake Forecasting for Civil Protection (ICEF) found the "most convincing" electromagnetic precursors to be ultra low frequency magnetic anomalies, such as the Corralitos event (discussed below) recorded before the 1989 Loma Prieta earthquake. However, it is now believed that observation was a system malfunction. Study of the closely monitored 2004 Parkfield earthquake found no evidence of precursory electromagnetic signals of any type; further study showed that earthquakes with magnitudes less than 5 do not produce significant transient signals. The ICEF considered the search for useful precursors to have been unsuccessful.

VAN seismic electric signals

The most touted, and most criticized, claim of an electromagnetic precursor is the VAN method of physics professors Panayiotis Varotsos, Kessar Alexopoulos and Konstantine Nomicos (VAN) of the University of Athens. In a 1981 paper they claimed that by measuring geoelectric voltages – what they called "seismic electric signals" (SES) – they could predict earthquakes.

In 1984, they claimed there was a "one-to-one correspondence" between SES and earthquakes – that is, that "every sizable EQ is preceded by an SES and inversely every SES is always followed by an EQ the magnitude and the epicenter of which can be reliably predicted" – the SES appearing between 6 and 115 hours before the earthquake. As proof of their method they claimed a series of successful predictions.

Although their report was "saluted by some as a major breakthrough", among seismologists it was greeted by a "wave of generalized skepticism". In 1996, a paper VAN submitted to the journal Geophysical Research Letters was given an unprecedented public peer-review by a broad group of reviewers, with the paper and reviews published in a special issue; the majority of reviewers found the methods of VAN to be flawed. Additional criticism was raised the same year in a public debate between some of the principals.

A primary criticism was that the method is geophysically implausible and scientifically unsound. Additional objections included the demonstrable falsity of the claimed one-to-one relationship of earthquakes and SES, the unlikelihood of a precursory process generating signals stronger than any observed from the actual earthquakes, and the very strong likelihood that the signals were man-made. Further work in Greece has tracked SES-like "anomalous transient electric signals" back to specific human sources, and found that such signals are not excluded by the criteria used by VAN to identify SES. More recent work employing modern methods of statistical physics, namely detrended fluctuation analysis (DFA), multifractal DFA and the wavelet transform, found that SES are clearly distinguished from signals produced by man-made sources.

The validity of the VAN method, and therefore the predictive significance of SES, was based primarily on the empirical claim of demonstrated predictive success. Numerous weaknesses have been uncovered in the VAN methodology, and in 2011 the International Commission on Earthquake Forecasting for Civil Protection concluded that the prediction capability claimed by VAN could not be validated. Most seismologists consider VAN to have been "resoundingly debunked". On the other hand, the section "Earthquake Precursors and Prediction" of the Encyclopedia of Solid Earth Geophysics (part of the Encyclopedia of Earth Sciences Series, Springer 2011) ends as follows, just before its summary: "it has recently been shown that by analyzing time-series in a newly introduced time domain 'natural time', the approach to the critical state can be clearly identified [Sarlis et al. 2008]. This way, they appear to have succeeded in shortening the lead-time of VAN prediction to only a few days [Uyeda and Kamogawa 2008]. This means, seismic data may play an amazing role in short term precursor when combined with SES data".

Since 2001, the VAN group has introduced a concept they call "natural time", applied to the analysis of their precursors. Initially it is applied to SES to distinguish them from noise and relate them to a possible impending earthquake. If an SES is verified (classified as "SES activity"), natural time analysis is additionally applied to the general subsequent seismicity of the area associated with the SES activity, in order to improve the time parameter of the prediction. The method treats earthquake onset as a critical phenomenon. A review of the updated VAN method in 2020 says that it suffers from an abundance of false positives and is therefore not usable as a prediction protocol. The VAN group responded by pointing to what it described as misunderstandings in the specific reasoning.
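The article does not define natural time, so the following is only a sketch under an assumed definition commonly given in the natural-time literature: the k-th of N events is assigned the index k/N, each event is weighted by its share of the total energy, and the quantity of interest is the variance of the index under those weights. The function name and the sample inputs are hypothetical.

```python
def natural_time_variance(energies: list[float]) -> float:
    """Variance of natural time chi_k = k/N weighted by p_k = Q_k / sum(Q).

    Assumed definition: kappa_1 = <chi^2> - <chi>^2, where the averages
    use the normalized event energies as weights.
    """
    if not energies or any(q <= 0 for q in energies):
        raise ValueError("need at least one event, all with positive energy")
    n = len(energies)
    total = sum(energies)
    chi = [(k + 1) / n for k in range(n)]          # order index, not clock time
    weights = [q / total for q in energies]        # normalized energies
    mean_chi = sum(w * c for w, c in zip(weights, chi))
    mean_chi_sq = sum(w * c * c for w, c in zip(weights, chi))
    return mean_chi_sq - mean_chi**2

# Hypothetical sequences: equal-energy events, and one dominated by the last event
print(natural_time_variance([1.0, 1.0, 1.0, 1.0]))
print(natural_time_variance([1.0, 1.0, 1.0, 50.0]))
```

Note that only the order and relative size of events enter the calculation; the clock time between them plays no role.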

Corralitos anomaly

Probably the most celebrated seismo-electromagnetic event ever, and one of the most frequently cited examples of a possible earthquake precursor, is the 1989 Corralitos anomaly. In the month prior to the 1989 Loma Prieta earthquake, measurements of the Earth's magnetic field at ultra-low frequencies by a magnetometer in Corralitos, California, just 7 km from the epicenter of the impending earthquake, started showing anomalous increases in amplitude. Just three hours before the quake, the measurements soared to about thirty times greater than normal, with amplitudes tapering off after the quake. Such amplitudes had not been seen in two years of operation, nor in a similar instrument located 54 km away. To many people such apparent locality in time and space suggested an association with the earthquake.

Additional magnetometers were subsequently deployed across northern and southern California, but after ten years and several large earthquakes, similar signals have not been observed. More recent studies have cast doubt on the connection, attributing the Corralitos signals to either unrelated magnetic disturbance or, even more simply, to sensor-system malfunction.

Freund physics

In his investigations of crystalline physics, Friedemann Freund found that water molecules embedded in rock can dissociate into ions if the rock is under intense stress. The resulting charge carriers can generate battery currents under certain conditions. Freund suggested that perhaps these currents could be responsible for earthquake precursors such as electromagnetic radiation, earthquake lights and disturbances of the plasma in the ionosphere. The study of such currents and interactions is known as "Freund physics".

Most seismologists reject Freund's suggestion that stress-generated signals can be detected and put to use as precursors, for a number of reasons. First, it is believed that stress does not accumulate rapidly before a major earthquake, and thus there is no reason to expect large currents to be rapidly generated. Second, seismologists have extensively searched for statistically reliable electrical precursors, using sophisticated instrumentation, and have not identified any such precursors. Third, water in the Earth's crust would cause any generated currents to be absorbed before reaching the surface.

Disturbance of the daily cycle of the ionosphere

The ionosphere usually develops its lower D layer during the day, while at night this layer disappears as the plasma there turns to gas. During the night, the F layer of the ionosphere remains formed, at a higher altitude than the D layer. A waveguide for low HF radio frequencies up to 10 MHz is formed during the night (skywave propagation) as the F layer reflects these waves back to the Earth. The skywave is lost during the day, as the D layer absorbs these waves.

Tectonic stresses in the Earth's crust are claimed to cause waves of electric charges that travel to the surface of the Earth and affect the ionosphere. ULF recordings of the daily cycle of the ionosphere indicate that the usual cycle could be disturbed a few days before a shallow strong earthquake. When the disturbance occurs, it is observed that either the D layer is lost during the day, resulting in elevation of the ionosphere and skywave formation, or the D layer appears at night, resulting in a lowering of the ionosphere and hence the absence of skywave.

Science centers have developed a network of VLF transmitters and receivers on a global scale that detect changes in skywave. Each receiver also serves as a transmitter, in daisy-chain fashion, for distances of 1000–10,000 kilometers and operates at a different frequency within the network. The general area under excitation can be determined depending on the density of the network. On the other hand, it has been shown that global extreme events such as magnetic storms or solar flares, and local extreme events in the same VLF path such as another earthquake or a volcanic eruption, make it difficult or impossible to relate changes in skywave to the earthquake of interest when they occur close in time to it.

In 2017, an article in the Journal of Geophysical Research showed that the relationship between ionospheric anomalies and large seismic events (M≥6.0) occurring globally from 2000 to 2014 was based on the presence of solar weather. When the solar data are removed from the time series, the correlation is no longer statistically significant. A subsequent article in Physics of the Earth and Planetary Interiors in 2020 argued, based on this statistical relationship, that solar weather and ionospheric disturbances are a potential trigger of large earthquakes. The proposed mechanism is electromagnetic induction from the ionosphere to the fault zone. Fault fluids are conductive, and can produce telluric currents at depth. The resulting change in the local magnetic field in the fault triggers dissolution of minerals and weakens the rock, while also potentially changing the groundwater chemistry and level. After the seismic event, different minerals may be precipitated, thus changing groundwater chemistry and level again. This process of mineral dissolution and precipitation before and after an earthquake has been observed in Iceland. This model makes sense of the ionospheric, seismic and groundwater data.

Satellite observation of the expected ground temperature declination

One way of detecting the mobility of tectonic stresses is to detect locally elevated temperatures on the surface of the crust measured by satellites. During the evaluation process, the background of daily variation and noise due to atmospheric disturbances and human activities are removed before visualizing the concentration of trends in the wider area of a fault. This method has been experimentally applied since 1995.

In a newer approach to explain the phenomenon, NASA's Friedemann Freund has proposed that the infrared radiation captured by the satellites is not due to a real increase in the surface temperature of the crust. According to this version the emission is a result of the quantum excitation that occurs at the chemical re-bonding of positive charge carriers (holes) which are traveling from the deepest layers to the surface of the crust at a speed of 200 meters per second. The electric charge arises as a result of increasing tectonic stresses as the time of the earthquake approaches. This emission extends over an area of up to 500 × 500 kilometers at the surface for very large events and stops almost immediately after the earthquake.

Trends

Instead of watching for anomalous phenomena that might be precursory signs of an impending earthquake, other approaches to predicting earthquakes look for trends or patterns that lead to an earthquake. As these trends may be complex and involve many variables, advanced statistical techniques are often needed to understand them; these approaches are therefore sometimes called statistical methods. They also tend to be more probabilistic, and to cover longer time periods, and so merge into earthquake forecasting.

Nowcasting

Earthquake nowcasting, suggested in 2016, is the estimation of the current dynamic state of a seismological system, based on the natural time concept introduced in 2001. It differs from forecasting, which aims to estimate the probability of a future event, but it is also considered a potential basis for forecasting. Nowcasting calculations produce the "earthquake potential score", an estimate of the current level of seismic progress. Typical applications include great global earthquakes and tsunamis, aftershocks and induced seismicity, induced seismicity at gas fields, seismic risk to global megacities, and the study of clustering of large global earthquakes.
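The article does not say how the score is calculated. One formulation found in the nowcasting literature, assumed here purely for illustration, counts the small earthquakes that have occurred since the last large one and reports where that count falls in the empirical distribution of such counts between past large events. The function name and the sample data are hypothetical.

```python
def earthquake_potential_score(past_counts: list[int], current_count: int) -> float:
    """Empirical CDF of small-event counts between past large events, evaluated
    at the number of small events seen since the most recent large event."""
    if not past_counts:
        raise ValueError("need at least one completed interval between large events")
    not_exceeding = sum(1 for count in past_counts if count <= current_count)
    return not_exceeding / len(past_counts)

# Hypothetical catalogue: small-event counts in four past intervals between large quakes
history = [10, 20, 30, 40]
print(earthquake_potential_score(history, current_count=25))
```

A score near 1 would mean the current interval has already seen more small events than most past intervals did before a large event occurred; it describes the present state and is not by itself a probability of a future earthquake.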

Elastic rebound

Even the stiffest of rock is not perfectly rigid. Given a large force (such as between two immense tectonic plates moving past each other) the Earth's crust will bend or deform. According to the elastic rebound theory of Reid (1910), eventually the deformation (strain) becomes great enough that something breaks, usually at an existing fault. Slippage along the break (an earthquake) allows the rock on each side to rebound to a less deformed state. In the process energy is released in various forms, including seismic waves. The cycle of tectonic force being accumulated in elastic deformation and released in a sudden rebound is then repeated. As the displacement from a single earthquake ranges from less than a meter to around 10 meters (for an M 8 quake), the demonstrated existence of large strike-slip displacements of hundreds of miles shows the existence of a long-running earthquake cycle.
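The scale of that cycle can be illustrated with simple division. The sketch below assumes a total offset of 300 km (an arbitrary stand-in for "hundreds of miles") and assumes all slip occurs in earthquakes of a single size, which real faults need not do.

```python
def earthquakes_needed(total_offset_m: float, slip_per_event_m: float) -> float:
    """Number of equal-sized slip events required to build up a total fault offset."""
    if slip_per_event_m <= 0:
        raise ValueError("slip per event must be positive")
    return total_offset_m / slip_per_event_m

TOTAL_OFFSET_M = 300_000.0  # assumed 300 km cumulative strike-slip displacement

for slip in (1.0, 10.0):  # per-event displacement range quoted in the text, in meters
    count = earthquakes_needed(TOTAL_OFFSET_M, slip)
    print(f"{slip:>4.0f} m per event: about {count:,.0f} earthquakes")
```

Even with the largest slips, tens of thousands of repetitions are needed under these assumptions.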

Characteristic earthquakes

The most studied earthquake faults (such as the Nankai megathrust, the Wasatch Fault, and the San Andreas Fault) appear to have distinct segments. The characteristic earthquake model postulates that earthquakes are generally constrained within these segments. As the lengths and other properties of the segments are fixed, earthquakes that rupture the entire fault should have similar characteristics. These include the maximum magnitude (which is limited by the length of the rupture), and the amount of accumulated strain needed to rupture the fault segment. Since continuous plate motions cause the strain to accumulate steadily, seismic activity on a given segment should be dominated by earthquakes of similar characteristics that recur at somewhat regular intervals. For a given fault segment, identifying these characteristic earthquakes and timing their recurrence rate (or conversely return period) should therefore inform us about the next rupture; this is the approach generally used in forecasting seismic hazard. UCERF3 is a notable example of such a forecast, prepared for the state of California. Return periods are also used for forecasting other rare events, such as cyclones and floods, and assume that future frequency will be similar to observed frequency to date.
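A return period is often converted into a probability over a planning horizon. The sketch below uses the simplest time-independent assumption, a Poisson process with a rate equal to the inverse of the return period. That assumption is not the same as the quasi-periodic recurrence the characteristic model implies, and it is not the method used by UCERF3; the numbers are hypothetical.

```python
import math

def annual_rate(return_period_years: float) -> float:
    """Average number of events per year implied by a return period."""
    return 1.0 / return_period_years

def prob_at_least_one(return_period_years: float, horizon_years: float) -> float:
    """P(one or more events within the horizon) for a Poisson process."""
    return 1.0 - math.exp(-horizon_years * annual_rate(return_period_years))

# Hypothetical segment with a 150-year return period, viewed over 30 years
print(f"rate: {annual_rate(150.0):.4f} per year")
print(f"30-year probability: {prob_at_least_one(150.0, 30.0):.2f}")
```

Under this assumption the probability does not depend on how long ago the last rupture occurred, which is exactly the feature that recurrence-based models try to improve on.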

The idea of characteristic earthquakes was the basis of the Parkfield prediction: fairly similar earthquakes in 1857, 1881, 1901, 1922, 1934, and 1966 suggested a pattern of breaks every 21.9 years, with a standard deviation of ±3.1 years. Extrapolation from the 1966 event led to a prediction of an earthquake around 1988, or before 1993 at the latest (at the 95% confidence level). The appeal of such a method is that the prediction is derived entirely from the trend, which supposedly accounts for the unknown and possibly unknowable earthquake physics and fault parameters. However, in the Parkfield case the predicted earthquake did not occur until 2004, a decade late. This seriously undercuts the claim that earthquakes at Parkfield are quasi-periodic, and suggests the individual events differ sufficiently in other respects to question whether they have distinct characteristics in common.
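The arithmetic behind the extrapolation can be retraced from the figures above. The sketch uses whole-year dates, so its simple mean interval (21.8 years) differs slightly from the quoted 21.9; the quoted mean and standard deviation are taken from the text as given and not re-derived.

```python
PARKFIELD_YEARS = [1857, 1881, 1901, 1922, 1934, 1966]
QUOTED_MEAN_INTERVAL = 21.9   # years, as quoted in the text
QUOTED_STD_DEV = 3.1          # years, as quoted in the text
ACTUAL_EVENT_YEAR = 2004

# Gaps between consecutive events, from whole-year dates
intervals = [later - earlier for earlier, later in zip(PARKFIELD_YEARS, PARKFIELD_YEARS[1:])]
mean_interval = sum(intervals) / len(intervals)

# Extrapolate one quoted mean interval beyond the last event
expected_year = PARKFIELD_YEARS[-1] + QUOTED_MEAN_INTERVAL
lateness_in_std_devs = (ACTUAL_EVENT_YEAR - expected_year) / QUOTED_STD_DEV

print(f"intervals: {intervals}, simple mean: {mean_interval:.1f} years")
print(f"expected around {expected_year:.1f}")
print(f"2004 event arrived {lateness_in_std_devs:.1f} quoted standard deviations late")
```

Measured against the quoted spread, the 2004 event falls far outside the range the extrapolation allowed.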

The failure of the Parkfield prediction has raised doubt as to the validity of the characteristic earthquake model itself. Some studies have questioned the various assumptions, including the key one that earthquakes are constrained within segments, and suggested that the "characteristic earthquakes" may be an artifact of selection bias and the shortness of seismological records (relative to earthquake cycles). Other studies have considered whether other factors need to be considered, such as the age of the fault. Whether earthquake ruptures are more generally constrained within a segment (as is often seen), or break past segment boundaries (also seen), has a direct bearing on the degree of earthquake hazard: earthquakes are larger where multiple segments break, but in relieving more strain they will happen less often.

Seismic gaps

At the contact where two tectonic plates slip past each other, every section must eventually slip, as (in the long term) none get left behind. But they do not all slip at the same time; different sections will be at different stages in the cycle of strain (deformation) accumulation and sudden rebound. In the seismic gap model the "next big quake" should be expected not in the segments where recent seismicity has relieved the strain, but in the intervening gaps where the unrelieved strain is the greatest. This model has an intuitive appeal; it is used in long-term forecasting, and was the basis of a series of circum-Pacific (Pacific Rim) forecasts in 1979 and 1989–1991.

However, some underlying assumptions about seismic gaps are now known to be incorrect. A close examination suggests that "there may be no information in seismic gaps about the time of occurrence or the magnitude of the next large event in the region"; statistical tests of the circum-Pacific forecasts show that the seismic gap model "did not forecast large earthquakes well". Another study concluded that a long quiet period did not increase earthquake potential.

Seismicity patterns

Various heuristically derived algorithms have been developed for predicting earthquakes. Probably the most widely known is the M8 family of algorithms (including the RTP method) developed under the leadership of Vladimir Keilis-Borok. M8 issues a "Time of Increased Probability" (TIP) alarm for a large earthquake of a specified magnitude upon observing certain patterns of smaller earthquakes. TIPs generally cover large areas (up to a thousand kilometers across) for up to five years. Such large parameters have made M8 controversial, as it is hard to determine whether any hits that happened were skillfully predicted, or only the result of chance.

M8 gained considerable attention when the 2003 San Simeon and Hokkaido earthquakes occurred within a TIP. In 1999, Keilis-Borok's group published a claim to have achieved statistically significant intermediate-term results for large earthquakes worldwide using their M8 and MSc models. However, Geller et al. are skeptical of prediction claims over any period shorter than 30 years. A widely publicized TIP for an M 6.4 quake in Southern California in 2004 was not fulfilled, nor were two other lesser-known TIPs. An in-depth study of the RTP method in 2008 found that out of some twenty alarms only two could be considered hits (and one of those had a 60% chance of happening anyway). It concluded that "RTP is not significantly different from a naïve method of guessing based on the historical rates [of] seismicity."
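
One way to see why a handful of hits among many alarms may not demonstrate skill is to ask how often chance alone would produce at least that many hits. The sketch below is a deliberately simplified illustration, not the procedure of the 2008 study: it assumes every alarm has the same, independent chance probability of being fulfilled, and the value `p_chance = 0.1` is purely hypothetical. The function name `prob_at_least` is likewise illustrative.

```python
from math import comb

def prob_at_least(k, n, p):
    """Probability of k or more hits in n independent alarms,
    each fulfilled by chance with probability p (binomial tail)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

n_alarms = 20      # "some twenty alarms" in the text
n_hits = 2         # "only two could be considered hits"
p_chance = 0.1     # hypothetical per-alarm chance rate, assumed for illustration

p_value = prob_at_least(n_hits, n_alarms, p_chance)
print(f"P(at least {n_hits} hits by chance) = {p_value:.2f}")
```

Under these assumed numbers, two or more chance hits would be expected more often than not, so such a record would not be distinguishable from guessing. In practice the chance probability differs from alarm to alarm, and clustering of earthquakes violates the independence assumption.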

Accelerating moment release (AMR, "moment" being a measurement of seismic energy), also known as time-to-failure analysis, or accelerating seismic moment release (ASMR), is based on observations that foreshock activity prior to a major earthquake not only increased, but increased at an exponential rate. In other words, a plot of the cumulative number of foreshocks gets steeper just before the main shock.

Following formulation by Bowman et al. (1998) into a testable hypothesis, and a number of positive reports, AMR seemed promising despite several problems. Known issues included AMR not being detected for all locations and events, and the difficulty of projecting an accurate occurrence time when the tail end of the curve gets steep. But rigorous testing has shown that apparent AMR trends likely result from how data fitting is done, and from failing to account for spatiotemporal clustering of earthquakes. The AMR trends are therefore statistically insignificant. Interest in AMR (as judged by the number of peer-reviewed papers) has fallen off since 2004.

Machine learning

Rouet-Leduc et al. (2019) reported having successfully trained a regression random forest on acoustic time series data, capable of identifying a signal emitted from fault zones that forecasts fault failure. They suggested that the identified signal, previously assumed to be statistical noise, reflects the increasing emission of energy before its sudden release during a slip event, and further postulated that their approach could bound fault failure times and lead to the identification of other unknown signals. Because the most catastrophic earthquakes are rare, acquiring representative data remains problematic. In response, the authors conjectured that their model would not need to train on data from catastrophic earthquakes, since further research has shown the seismic patterns of interest to be similar in smaller earthquakes.

Deep learning has also been applied to earthquake prediction. Although Båth's law and Omori's law describe the magnitude of earthquake aftershocks and their time-varying properties, the prediction of the "spatial distribution of aftershocks" remains an open research problem. Using the Theano and TensorFlow software libraries, DeVries et al. (2018) trained a neural network that achieved higher accuracy in the prediction of spatial distributions of earthquake aftershocks than the previously established methodology of Coulomb failure stress change. Notably, they reported that their model made no "assumptions about receiver plane orientation or geometry" and heavily weighted the change in shear stress, the "sum of the absolute values of the independent components of the stress-change tensor," and the von Mises yield criterion. They postulated that the reliance of their model on these physical quantities indicated that these quantities might "control earthquake triggering during the most active part of the seismic cycle." For validation testing, they reserved 10% of positive training earthquake data samples and an equal quantity of randomly chosen negative samples.
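
Two of the stress quantities named above can be computed directly from a symmetric 3×3 stress-change tensor. The following is a minimal sketch using standard textbook definitions, not code from the cited study; the function names, the example tensors, and the choice of the upper triangle as the six independent components are assumptions made for illustration, and the exact normalization used by the authors is not stated here.

```python
from math import sqrt

def sum_abs_independent(t):
    """Sum of absolute values of the six independent components
    (upper triangle) of a symmetric 3x3 tensor."""
    return sum(abs(t[i][j]) for i in range(3) for j in range(i, 3))

def von_mises(t):
    """Von Mises equivalent stress, sqrt(3 * J2), where J2 is the second
    invariant of the deviatoric part of the tensor."""
    mean_normal = (t[0][0] + t[1][1] + t[2][2]) / 3
    # Deviatoric tensor: subtract the mean normal stress from the diagonal.
    s = [[t[i][j] - (mean_normal if i == j else 0.0) for j in range(3)]
         for i in range(3)]
    j2 = 0.5 * sum(s[i][j] ** 2 for i in range(3) for j in range(3))
    return sqrt(3 * j2)

# Hypothetical stress-change tensors (arbitrary units), for illustration only.
pure_shear = [[0.0, 1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 0.0]]
uniaxial = [[2.0, 0.0, 0.0],
            [0.0, 0.0, 0.0],
            [0.0, 0.0, 0.0]]

print(sum_abs_independent(pure_shear), von_mises(pure_shear))
print(sum_abs_independent(uniaxial), von_mises(uniaxial))
```

Neither quantity depends on the orientation of a receiver fault plane, which is consistent with the statement that the model made no assumptions about receiver plane geometry.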

Arnaud Mignan and Marco Broccardo have similarly analyzed the application of artificial neural networks to earthquake prediction. They found in a review of literature that earthquake prediction research utilizing artificial neural networks has gravitated towards more sophisticated models amidst increased interest in the area. They also found that neural networks utilized in earthquake prediction with notable success rates were matched in performance by simpler models. They further addressed the issues of acquiring appropriate data for training neural networks to predict earthquakes, writing that the "structured, tabulated nature of earthquake catalogues" makes transparent machine learning models more desirable than artificial neural networks.

EMP-induced seismicity

High-energy electromagnetic pulses can induce earthquakes within 2–6 days after the emission by EMP generators. It has been proposed that strong EM impacts could control seismicity, as the seismicity dynamics that follow appear to be much more regular than usual.

Notable predictions

These are predictions, or claims of predictions, that are notable either scientifically or because of public notoriety, and claim a scientific or quasi-scientific basis. As many predictions are held confidentially, or published in obscure locations, and become notable only when they are claimed, there may be a selection bias in that hits get more attention than misses. The predictions listed here are discussed in Hough's book and Geller's paper.

1975: Haicheng, China

The M 7.3 1975 Haicheng earthquake is the most widely cited "success" of earthquake prediction. The ostensible story is that study of seismic activity in the region led the Chinese authorities to issue a medium-term prediction in June 1974, and the political authorities therefore ordered various measures taken, including enforced evacuation of homes, construction of "simple outdoor structures", and showing of movies out-of-doors. The quake, striking at 19:36, was powerful enough to destroy or badly damage about half of the homes. However, the "effective preventative measures taken" were said to have kept the death toll under 300 in an area with a population of about 1.6 million, where otherwise tens of thousands of fatalities might have been expected.

However, although a major earthquake occurred, there has been some skepticism about the narrative of measures taken on the basis of a timely prediction. This event occurred during the Cultural Revolution, when "belief in earthquake prediction was made an element of ideological orthodoxy that distinguished the true party liners from right wing deviationists". Recordkeeping was disordered, making it difficult to verify details, including whether there was any ordered evacuation. The method used for either the medium-term or short-term predictions (other than "Chairman Mao's revolutionary line") has not been specified. The evacuation may have been spontaneous, following the strong (M 4.7) foreshock that occurred the day before.

A 2006 study that had access to an extensive range of records found that the predictions were flawed. "In particular, there was no official short-term prediction, although such a prediction was made by individual scientists." Also: "it was the foreshocks alone that triggered the final decisions of warning and evacuation". They estimated that 2,041 lives were lost. That more did not die was attributed to a number of fortuitous circumstances, including earthquake education in the previous months (prompted by elevated seismic activity), local initiative, timing (occurring when people were neither working nor asleep), and local style of construction. The authors conclude that, while unsatisfactory as a prediction, "it was an attempt to predict a major earthquake that for the first time did not end up with practical failure."

1981: Lima, Peru (Brady)

In 1976, Brian Brady, a physicist, then at the U.S. Bureau of Mines, where he had studied how rocks fracture, "concluded a series of four articles on the theory of earthquakes with the deduction that strain building in the subduction zone [off-shore of Peru] might result in an earthquake of large magnitude within a period of seven to fourteen years from mid November 1974." In an internal memo written in June 1978 he narrowed the time window to "October to November, 1981", with a main shock in the range of 9.2±0.2. In a 1980 memo he was reported as specifying "mid-September 1980". This was discussed at a scientific seminar in San Juan, Argentina, in October 1980, where Brady's colleague, W. Spence, presented a paper. Brady and Spence then met with government officials from the U.S. and Peru on 29 October, and "forecast a series of large magnitude earthquakes in the second half of 1981." This prediction became widely known in Peru, following what the U.S. embassy described as "sensational first page headlines carried in most Lima dailies" on January 26, 1981.

On 27 January 1981, after reviewing the Brady-Spence prediction, the U.S. National Earthquake Prediction Evaluation Council (NEPEC) announced it was "unconvinced of the scientific validity" of the prediction, and had been "shown nothing in the observed seismicity data, or in the theory insofar as presented, that lends substance to the predicted times, locations, and magnitudes of the earthquakes." It went on to say that while there was a probability of major earthquakes at the predicted times, that probability was low, and recommended that "the prediction not be given serious consideration."

Unfazed, Brady subsequently revised his forecast, stating there would be at least three earthquakes on or about July 6, August 18 and September 24, 1981, leading one USGS official to complain: "If he is allowed to continue to play this game ... he will eventually get a hit and his theories will be considered valid by many."

On June 28 (the date most widely taken as the date of the first predicted earthquake), it was reported that "the population of Lima passed a quiet Sunday". The headline in one Peruvian newspaper read: "NO PASÓ NADA" ("Nothing happened").

In July Brady formally withdrew his prediction on the grounds that prerequisite seismic activity had not occurred. Economic losses due to reduced tourism during this episode have been roughly estimated at one hundred million dollars.

1985–1993: Parkfield, U.S. (Bakun-Lindh)

The "Parkfield earthquake prediction experiment" was the most heralded scientific earthquake prediction ever. It was based on an observation that the Parkfield segment of the San Andreas Fault breaks regularly with a moderate earthquake of about M 6 every several decades: 1857, 1881, 1901, 1922, 1934, and 1966. More particularly, Bakun & Lindh (1985) pointed out that, if the 1934 quake is excluded, these occur every 22 years, ±4.3 years. Counting from 1966, they predicted a 95% chance that the next earthquake would hit around 1988, or 1993 at the latest. The National Earthquake Prediction Evaluation Council (NEPEC) evaluated this, and concurred. The U.S. Geological Survey and the State of California therefore established one of the "most sophisticated and densest nets of monitoring instruments in the world", in part to identify any precursors when the quake came. Confidence was high enough that detailed plans were made for alerting emergency authorities if there were signs an earthquake was imminent. In the words of The Economist: "never has an ambush been more carefully laid for such an event."

The year 1993 came and went without fulfillment. Eventually there was an M 6.0 earthquake on the Parkfield segment of the fault, on 28 September 2004, but without forewarning or obvious precursors. While the experiment in catching an earthquake is considered by many scientists to have been successful, the prediction was unsuccessful in that the eventual event was a decade late.

1983–1995: Greece (VAN)

In 1981, the "VAN" group, headed by Panayiotis Varotsos, said that they found a relationship between earthquakes and 'seismic electric signals' (SES). In 1984 they presented a table of 23 earthquakes from 19 January 1983 to 19 September 1983, of which they claimed to have successfully predicted 18 earthquakes. Other lists followed, such as their 1991 claim of predicting six out of seven earthquakes with Ms ≥ 5.5 in the period of 1 April 1987 through 10 August 1989, or five out of seven earthquakes with Ms ≥ 5.3 in the overlapping period of 15 May 1988 to 10 August 1989. In 1996 they published a "Summary of all Predictions issued from January 1st, 1987 to June 15, 1995", amounting to 94 predictions. Matching this against a list of "All earthquakes with MS(ATH)" and within geographical bounds including most of Greece, they came up with a list of 14 earthquakes they should have predicted. Here they claimed ten successes, for a success rate of 70%.

The VAN predictions have been criticized on various grounds, including being geophysically implausible, "vague and ambiguous", failing to satisfy prediction criteria, and retroactive adjustment of parameters. A critical review of 14 cases where VAN claimed 10 successes showed only one case where an earthquake occurred within the prediction parameters. The VAN predictions not only fail to do better than chance, but show "a much better association with the events which occurred before them", according to Mulargia and Gasperini. Other early reviews found that the VAN results, when evaluated by definite parameters, were statistically significant. Both positive and negative views on VAN predictions from this period were summarized in the 1996 book A Critical Review of VAN edited by Sir James Lighthill and in a debate issue presented by the journal Geophysical Research Letters that was focused on the statistical significance of the VAN method. VAN had the opportunity to reply to their critics in those review publications. In 2011, the ICEF reviewed the 1996 debate, and concluded that the optimistic SES prediction capability claimed by VAN could not be validated. In 2013, the SES activities were found to be coincident with the minima of the fluctuations of the order parameter of seismicity, which have been shown to be statistically significant precursors by employing the event coincidence analysis.

A crucial issue is the large and often indeterminate parameters of the predictions, such that some critics say these are not predictions, and should not be recognized as such. Much of the controversy with VAN arises from this failure to adequately specify these parameters. Some of their telegrams include predictions of two distinct earthquake events, such as (typically) one earthquake predicted at 300 km "NW" of Athens, and another at 240 km "W", "with magnitutes [sic] 5,3 and 5,8", with no time limit. Time parameter estimation was introduced into the VAN method in 2001 by means of natural time. VAN has disputed the 'pessimistic' conclusions of their critics, but the critics have not relented. It was suggested that VAN failed to account for clustering of earthquakes, or that they interpreted their data differently during periods of greater seismic activity.

VAN has been criticized on several occasions for causing public panic and widespread unrest. This has been exacerbated by the broadness of their predictions, which cover large areas of Greece (up to 240 kilometers across, and often pairs of areas), much larger than the areas actually affected by earthquakes of the magnitudes predicted (usually several tens of kilometers across). Magnitudes are similarly broad: a predicted magnitude of "6.0" represents a range from a benign magnitude 5.3 to a broadly destructive 6.7. Coupled with indeterminate time windows of a month or more, such predictions "cannot be practically utilized" to determine an appropriate level of preparedness, whether to curtail usual societal functioning, or even to issue public warnings.
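
The breadth of such a magnitude range can be made concrete with a short calculation. The sketch below assumes the commonly used scaling in which radiated seismic energy grows as 10^(1.5·M); that relation is not stated in the text above, and the function name `energy_ratio` is illustrative.

```python
def energy_ratio(m_low, m_high):
    """Ratio of radiated energy between two magnitudes, assuming
    energy scales as 10 ** (1.5 * M)."""
    return 10 ** (1.5 * (m_high - m_low))

# Range implied by a predicted magnitude of "6.0", as given in the text.
ratio = energy_ratio(5.3, 6.7)
print(f"An M 6.7 event releases roughly {ratio:.0f} times the energy of an M 5.3 event")
```

Under that assumption the two ends of the stated range differ by about two orders of magnitude in energy, which illustrates why a single prediction spanning both is hard to act on.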

2008: Greece (VAN)

VAN claim that since 2006 all alarms related to SES activity have been made public by posting at arxiv.org. Such SES activity is evaluated using a new method they call 'natural time'. One such report was posted on Feb. 1, 2008, two weeks before the strongest earthquake in Greece during the period 1983–2011. This earthquake occurred on February 14, 2008, with magnitude (Mw) 6.9. VAN's report was also described in an article in the newspaper Ethnos on Feb. 10, 2008. However, Gerassimos Papadopoulos commented that the VAN reports were confusing and ambiguous, and that "none of the claims for successful VAN predictions is justified." A reply to this comment, which insisted on the prediction's accuracy, was published in the same issue.

1989: Loma Prieta, U.S.

The 1989 Loma Prieta earthquake (epicenter in the Santa Cruz Mountains northwest of San Juan Bautista, California) caused significant damage in the San Francisco Bay Area of California. The United States Geological Survey (USGS) reportedly claimed, twelve hours after the event, that it had "forecast" this earthquake in a report the previous year. USGS staff subsequently claimed this quake had been "anticipated"; various other claims of prediction have also been made.

Ruth Harris (1998) reviewed 18 papers (with 26 forecasts) dating from 1910 "that variously offer or relate to scientific forecasts of the 1989 Loma Prieta earthquake." (In this case no distinction is made between a forecast, which is limited to a probabilistic estimate of an earthquake happening over some time period, and a more specific prediction.) None of these forecasts can be rigorously tested due to lack of specificity, and where a forecast does bracket the correct time and location, the window was so broad (e.g., covering the greater part of California for five years) as to lose any value as a prediction. Predictions that came close (but were given a probability of only 30%) had ten- or twenty-year windows.

One debated prediction came from the M8 algorithm used by Keilis-Borok and associates in four forecasts. The first of these forecasts missed both magnitude (M 7.5) and time (a five-year window from 1 January 1984, to 31 December 1988). They did get the location, by including most of California and half of Nevada. A subsequent revision, presented to the NEPEC, extended the time window to 1 July 1992, and reduced the location to only central California; the magnitude remained the same. A figure they presented had two more revisions, for M ≥ 7.0 quakes in central California. The five-year time window for one ended in July 1989, and so missed the Loma Prieta event; the second revision extended to 1990, and so included Loma Prieta.

When discussing success or failure of prediction for the Loma Prieta earthquake, some scientists argue that it did not occur on the San Andreas Fault (the focus of most of the forecasts), and involved dip-slip (vertical) movement rather than strike-slip (horizontal) movement, and so was not predicted.

Other scientists argue that it did occur in the San Andreas Fault zone, and released much of the strain accumulated since the 1906 San Francisco earthquake; therefore several of the forecasts were correct. Hough states that "most seismologists" do not believe this quake was predicted "per se". In a strict sense there were no predictions, only forecasts, which were only partially successful.

Iben Browning claimed to have predicted the Loma Prieta event, but (as will be seen in the next section) this claim has been rejected.

1990: New Madrid, U.S. (Browning)

Iben Browning (a scientist with a Ph.D. degree in zoology and training as a biophysicist, but no experience in geology, geophysics, or seismology) was an "independent business consultant" who forecast long-term climate trends for businesses. He supported the idea (scientifically unproven) that volcanoes and earthquakes are more likely to be triggered when the tidal forces of the Sun and the Moon coincide to exert maximum stress on the Earth's crust (syzygy). Having calculated when these tidal forces maximize, Browning then "projected" what areas were most at risk for a large earthquake. An area he mentioned frequently was the New Madrid seismic zone at the southeast corner of the state of Missouri, the site of three very large earthquakes in 1811–12, which he coupled with the date of 3 December 1990.

Browning's reputation and perceived credibility were boosted when he claimed in various promotional flyers and advertisements to have predicted (among various other events) the Loma Prieta earthquake of 17 October 1989. The National Earthquake Prediction Evaluation Council (NEPEC) formed an Ad Hoc Working Group (AHWG) to evaluate Browning's prediction. Its report (issued 18 October 1990) specifically rejected the claim of a successful prediction of the Loma Prieta earthquake. A transcript of his talk in San Francisco on 10 October showed he had said: "there will probably be several earthquakes around the world, Richter 6+, and there may be a volcano or two" – which, on a global scale, is about average for a week – with no mention of any earthquake in California.

Though the AHWG report disproved both Browning's claims of prior success and the basis of his "projection", it made little impact after a year of continued claims of a successful prediction. Browning's prediction received the support of geophysicist David Stewart, and the tacit endorsement of many public authorities in their preparations for a major disaster, all of which was amplified by massive exposure in the news media. Nothing happened on 3 December, and Browning died of a heart attack seven months later.

2004 and 2005: Southern California, U.S. (Keilis-Borok)

The M8 algorithm (developed under the leadership of Vladimir Keilis-Borok at UCLA) gained respect through the apparently successful predictions of the 2003 San Simeon and Hokkaido earthquakes. Great interest was therefore generated by the prediction in early 2004 of an M ≥ 6.4 earthquake to occur somewhere within an area of southern California of approximately 12,000 sq. miles, on or before 5 September 2004. In evaluating this prediction the California Earthquake Prediction Evaluation Council (CEPEC) noted that this method had not yet made enough predictions for statistical validation, and was sensitive to input assumptions. It therefore concluded that no "special public policy actions" were warranted, though it reminded all Californians "of the significant seismic hazards throughout the state." The predicted earthquake did not occur.

A very similar prediction was made for an earthquake on or before 14 August 2005, in approximately the same area of southern California. The CEPEC's evaluation and recommendation were essentially the same, this time noting that the previous prediction and two others had not been fulfilled. This prediction also failed.

2009: L'Aquila, Italy (Giuliani)

At 03:32 on 6 April 2009, the Abruzzo region of central Italy was rocked by an M 6.3 earthquake. In the city of L'Aquila and surrounding area around 60,000 buildings collapsed or were seriously damaged, resulting in 308 deaths and 67,500 people left homeless. Around the same time, it was reported that Giampaolo Giuliani had predicted the earthquake, had tried to warn the public, but had been muzzled by the Italian government.

Giampaolo Giuliani was a laboratory technician at the Laboratori Nazionali del Gran Sasso. As a hobby he had for some years been monitoring radon using instruments he had designed and built. Prior to the L'Aquila earthquake he was unknown to the scientific community, and had not published any scientific work. He had been interviewed on 24 March by an Italian-language blog, Donne Democratiche, about a swarm of low-level earthquakes in the Abruzzo region that had started the previous December. He said that this swarm was normal and would diminish by the end of March. On 30 March, L'Aquila was struck by a magnitude 4.0 temblor, the largest to date.

On 27 March Giuliani warned the mayor of L'Aquila there could be an earthquake within 24 hours, and an earthquake M~2.3 occurred. On 29 March he made a second prediction. He telephoned the mayor of the town of Sulmona, about 55 kilometers southeast of L'Aquila, telling him to expect a "damaging" – or even "catastrophic" – earthquake within 6 to 24 hours. Loudspeaker vans were used to warn the inhabitants of Sulmona to evacuate, with consequential panic. No quake ensued and Giuliani was cited for inciting public alarm and enjoined from making future public predictions.

After the L'Aquila event Giuliani claimed that he had found alarming rises in radon levels just hours before. He said he had warned relatives, friends and colleagues on the evening before the earthquake hit. He was subsequently interviewed by the International Commission on Earthquake Forecasting for Civil Protection, which found that Giuliani had not transmitted a valid prediction of the mainshock to the civil authorities before its occurrence.

Difficulty or impossibility

As the preceding examples show, the record of earthquake prediction has been disappointing. The optimism of the 1970s that routine prediction of earthquakes would come "soon", perhaps within ten years, was coming up disappointingly short by the 1990s, and many scientists began wondering why. By 1997 it was being positively stated that earthquakes cannot be predicted, which led to a notable debate in 1999 on whether prediction of individual earthquakes is a realistic scientific goal.

Earthquake prediction may have failed only because it is "fiendishly difficult" and still beyond the current competency of science. Despite the confident announcement four decades ago that seismology was "on the verge" of making reliable predictions, there may yet be an underestimation of the difficulties. As early as 1978 it was reported that earthquake rupture might be complicated by "heterogeneous distribution of mechanical properties along the fault", and in 1986 that geometrical irregularities in the fault surface "appear to exert major controls on the starting and stopping of ruptures". Another study attributed significant differences in fault behavior to the maturity of the fault. These kinds of complexities are not reflected in current prediction methods.

Seismology may even yet lack an adequate grasp of its most central concept, elastic rebound theory. A simulation that explored assumptions regarding the distribution of slip found results "not in agreement with the classical view of the elastic rebound theory". (This was attributed to details of fault heterogeneity not accounted for in the theory.)

Earthquake prediction may be intrinsically impossible. In 1997, it was argued that the Earth is in a state of self-organized criticality "where any small earthquake has some probability of cascading into a large event". It has also been argued on decision-theoretic grounds that "prediction of major earthquakes is, in any practical sense, impossible." In 2021, a large group of authors from a variety of universities and research institutes studying the China Seismo-Electromagnetic Satellite reported that claims based on self-organized criticality, namely that at any moment any small earthquake can eventually cascade into a large event, do not stand in view of the results obtained to date by natural time analysis.

That earthquake prediction might be intrinsically impossible has been strongly disputed, but the best disproof of impossibility – effective earthquake prediction – has yet to be demonstrated.