The behavioral analysis of child development originates from John B. Watson's behaviorism. Watson studied child development, looking specifically at development through conditioning. He helped bring a natural science perspective to child psychology by introducing objective research methods based on observable and measurable behavior. B.F. Skinner then extended this model to cover operant conditioning and verbal behavior. Skinner was then able to focus these research methods on feelings and how those emotions can be shaped by a subject's interaction with the environment. Sidney Bijou (1955) was the first to use this methodological approach extensively with children.

History

In 1948, Sidney Bijou took a position as associate professor of psychology at the University of Washington and served as director of the university's Institute of Child Development. Under his leadership, the Institute added a child development clinic, nursery school classrooms, and a research lab.

Bijou began working with Donald Baer in the Department of Human Development and Family Life at the University of Kansas, applying behavior analytic principles to child development in an area referred to as "Behavioral Development" or "Behavior Analysis of Child Development".

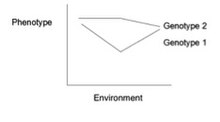

Skinner's behavioral approach and Kantor's interbehavioral approach were adopted in Bijou and Baer's model. They created a three-stage model of development (basic, foundational, and societal). Bijou and Baer looked at these socially determined stages, as opposed to organizing behavior into change points, or behavioral cusps.

In the behavioral model, development is considered a behavioral change. It is dependent on the kind of stimulus and the person's behavioral and learning function. Behavior analysis in child development takes a mechanistic, contextual, and pragmatic approach.

From its inception, the behavioral model has focused on prediction and control of the developmental process. The model focuses on the analysis of a behavior and then synthesizes the action to support the original behavior. The model was changed after Richard J. Herrnstein studied the matching law of choice behavior, developed by studying reinforcement in the natural environment. More recently, the model has focused more on behavior over time and the way that behavioral responses become repetitive. It has become concerned with how behavior is selected over time and forms into stable patterns of responding. A detailed history of this model was written by Pelaez.
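
In its strict form, Herrnstein's matching law states that the share of responses allocated to one alternative equals the share of reinforcement obtained from it: B1 / (B1 + B2) = R1 / (R1 + R2). The sketch below only illustrates that proportion. The function name and the reinforcement rates are hypothetical and are not drawn from any study cited in this article.

```python
def predicted_response_share(r1: float, r2: float) -> float:
    """Strict matching law: predicted share of responses on alternative 1.

    r1 and r2 are reinforcement rates (e.g., reinforcers per hour) obtained
    from two concurrently available alternatives.
    """
    if r1 < 0 or r2 < 0 or (r1 + r2) == 0:
        raise ValueError("rates must be non-negative and not both zero")
    return r1 / (r1 + r2)


# Hypothetical example (illustrative numbers only): a parent attends to
# 30 requests per hour made by talking and 10 per hour made by whining.
share_talking = predicted_response_share(30, 10)
print(f"Predicted share of talking: {share_talking:.2f}")
```

Under these assumed rates, strict matching predicts that three quarters of the child's requests would be made by talking. Real data often deviate from strict matching, which this sketch does not model.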

In 1995, Henry D. Schlinger, Jr. provided the first behavior analytic text since Bijou and Baer to show comprehensively how behavior analysis, a natural science approach to human behavior, could be used to understand existing research in child development. In addition, the quantitative behavioral developmental model by Commons and Miller is the first behavioral theory and research to address a notion similar to stage.

Research methods

The methods used to analyze behavior in child development are based on several types of measurements. Single-subject research with a longitudinal study follow-up is a commonly used approach. Current research is focused on integrating single-subject designs through meta-analysis to determine the effect sizes of behavioral factors in development. Lag sequential analysis has become popular for tracking the stream of behavior during observations. Group designs are increasingly being used. Model construction research involves latent growth modeling to determine developmental trajectories and structural equation modeling. Rasch analysis is now widely used to show sequentiality within a developmental trajectory.
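
In the dichotomous Rasch model, the probability that a child succeeds on an item depends only on the difference between the child's ability and the item's difficulty, both expressed on the same logit scale. Items ordered by difficulty then suggest one candidate developmental sequence. The sketch below shows only the model's success probability, not the estimation of difficulties from data. The item names and logit values are invented for illustration.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Dichotomous Rasch model: P(success) = 1 / (1 + exp(-(ability - difficulty)))."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Hypothetical item difficulties in logits (illustrative, not estimated from data).
item_difficulty = {"stacks blocks": -1.5, "sorts by color": 0.0, "counts to ten": 1.2}

# Ordering items from easiest to hardest gives the implied acquisition sequence.
sequence = sorted(item_difficulty, key=item_difficulty.get)

child_ability = 0.0  # assumed ability of one child, in logits
for item in sequence:
    p = rasch_probability(child_ability, item_difficulty[item])
    print(f"{item}: P(success) = {p:.2f}")
```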

A recent methodological change in behavior analytic theory is the use of observational methods combined with lag sequential analysis, which can identify reinforcement in the natural setting.
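
At its simplest, lag sequential analysis asks whether one coded behavior follows another more often than its base rate would predict. The sketch below compares a lag-1 conditional probability with the unconditional probability of the target code. The behavior codes and the observation stream are hypothetical, and a full analysis would add a significance test that is omitted here.

```python
from collections import Counter


def lag_probability(stream, given, target, lag=1):
    """Estimate P(target occurs `lag` events after `given`) from a coded stream."""
    pairs = list(zip(stream, stream[lag:]))
    given_count = sum(1 for first, _ in pairs if first == given)
    if given_count == 0:
        raise ValueError(f"code {given!r} never has a follower at lag {lag}")
    hits = sum(1 for first, second in pairs if first == given and second == target)
    return hits / given_count


def base_rate(stream, target):
    """Unconditional probability of the target code in the stream."""
    return Counter(stream)[target] / len(stream)


# Hypothetical codes: C = child cries, A = parent attends, P = child plays.
observed = list("CACAPPCAPCAPPCA")

conditional = lag_probability(observed, given="C", target="A")
unconditional = base_rate(observed, "A")
print(f"P(A | C at lag 1) = {conditional:.2f}, base rate of A = {unconditional:.2f}")
```

In this invented stream, parent attention follows crying far more often than its base rate, which is the kind of pattern an observer would then examine as a possible reinforcement contingency.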

Quantitative behavioral development

The model of hierarchical complexity is a quantitative analytic theory of development. This model offers an explanation for why certain tasks are acquired earlier than others through developmental sequences and gives an explanation of the biological, cultural, organizational, and individual principles of performance. It quantifies the order of hierarchical complexity of a task based on explicit and mathematical measurements of behavior.
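
One way to read the model is that a task's order is defined recursively: a higher-order task organizes two or more tasks of the next lower order. The sketch below encodes only that simplified rule. The `Task` class, the starting order of 0 for primitive actions, and the arithmetic example are illustrative assumptions, not the model's published scoring procedure.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    subtasks: List["Task"] = field(default_factory=list)


def order_of_complexity(task: Task) -> int:
    """Return a task's order under a simplified recursive rule.

    Primitive tasks (no subtasks) get order 0 by assumption. A composite
    task must coordinate at least two subtasks of the same order and sits
    exactly one order above them.
    """
    if not task.subtasks:
        return 0
    if len(task.subtasks) < 2:
        raise ValueError(f"{task.name!r} must coordinate two or more subtasks")
    orders = {order_of_complexity(sub) for sub in task.subtasks}
    if len(orders) != 1:
        raise ValueError(f"subtasks of {task.name!r} are not all of the same order")
    return orders.pop() + 1


# Hypothetical example: distributing a * (b + c) coordinates adding and multiplying.
add = Task("add", [Task("count first set"), Task("count second set")])
multiply = Task("multiply", [Task("count groups"), Task("count items per group")])
distribute = Task("distribute", [add, multiply])

print(order_of_complexity(distribute))
```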

Research

Contingencies, uncertainty, and attachment

The behavioral model of attachment recognizes the role of uncertainty in an infant and the child's limited communication abilities. Contingent relationships are instrumental in the behavior analytic theory, because much emphasis is put on those actions that produce parents’ responses.

The importance of contingency is also highlighted in other developmental theories, but the behavioral model recognizes that contingency must be determined by two factors: the efficiency of the action, and that efficiency compared to other tasks that the infant might perform at that point. Both infants and adults function in their environments by understanding these contingent relationships. Research has shown that contingent relationships lead to emotionally satisfying relationships.

Since 1961, behavioral research has shown that there is a relationship between the parents’ responses to separation from the infant and outcomes of a “stranger situation.” In a study done in 2000, six infants participated in a classic reversal design study that assessed infant approach rate to a stranger. If attention was made contingent on stranger avoidance, the infant avoided the stranger. If attention was made contingent on infant approach, the infant approached the stranger.

Recent meta-analytic studies of this model of attachment based on contingency found a moderate effect of contingency on attachment, which increased to a large effect size when the quality of reinforcement was considered. Other research on contingency highlights its effect on the development of both pro-social and anti-social behavior. These effects can also be furthered by training parents to become more sensitive to children's behaviors. Meta-analytic research supports the notion that attachment is operant-based learning.

An infant's sensitivity to contingencies can be affected by biological factors and environmental changes. Studies show that being placed in erratic environments with few contingencies may cause a child to have conduct problems and may lead to depression (see "Developmental depression with origins in childhood" below). Research continues to look at the effects of learning-based attachment on moral development.

Some studies have shown that erratic use of contingencies by parents early in life can produce devastating long-term effects for the child.

Motor development

Since Watson developed the theory of behaviorism, behavior analysts have held that motor development represents a conditioning process. On this view, the crawling, climbing, and walking displayed by infants represent conditioning of biologically innate reflexes.

In this case, the reflex of stepping is the respondent behavior, and these reflexes are environmentally conditioned through experience and practice. This position was criticized by maturation theorists, who believed that the stepping reflex for infants actually disappeared over time and was not "continuous". By working with a slightly different theoretical model, while still using operant conditioning, Esther Thelen was able to show that children's stepping reflex disappears as a function of increased physical weight. However, when infants were placed in water, that same stepping reflex returned. This offered a model for the continuity of the stepping reflex and the progressive stimulation model for behavior analysts.

Infants deprived of physical stimulation or the opportunity to respond were found to have delayed motor development. Under conditions of extra stimulation, the motor behavior of these children rapidly improved. Some research has shown that the use of a treadmill can be beneficial to children with motor delays including Down syndrome and cerebral palsy. Research on opportunity to respond and the building of motor development continues today.

The behavioral development model of motor activity has produced a number of techniques, including operant-based biofeedback, to facilitate development. Some of these stimulation methods have been applied successfully as treatment for children with cerebral palsy and even spinal injury. Brucker's group demonstrated that specific operant conditioning-based biofeedback procedures can be effective in establishing more efficient use of remaining and surviving central nervous system cells after injury or after birth complications (such as cerebral palsy). While such methods are not a cure and gains tend to be in the moderate range, they do show an ability to enhance functioning.

Imitation and verbal behavior

Behaviorists have studied verbal behavior since the 1920s. E.A. Esper (1920) studied associative models of language, which have evolved into the current language interventions of matrix training and recombinative generalization.

Skinner (1957) created a comprehensive taxonomy of language for speakers. For the listener, Zettle and Hayes (1989), along with Don Baer, provided a developmental analysis of rule-governed behavior.

According to Skinner, language learning depends on environmental variables, which can be mastered by a child through imitation, practice, and selective reinforcement, including automatic reinforcement.

B.F. Skinner was one of the first psychologists to treat imitation as a serious mechanism for the acquisition of verbal behavior. He identified echoic behavior as one of his basic verbal operants, postulating that verbal behavior was learned by an infant from a verbal community. Skinner's account takes verbal behavior beyond an intra-individual process to an inter-individual process. He defined verbal behavior as "behavior reinforced through the mediation of others". Noam Chomsky challenged Skinner's assumptions.

In the behavioral model, the child is prepared to contact the contingencies to "join" the listener and speaker. At the very core, verbal episodes involve the rotation of the roles as speaker and listener. These kinds of exchanges are called conversational units and have been the focus of research at Columbia's communication disorders department.

Conversational units are a measure of socialization because they consist of verbal interactions in which the exchange is reinforced by both the speaker and the listener. H.C. Chu (1998) demonstrated contextual conditions for inducing and expanding conversational units between children with autism and non-handicapped siblings in two separate experiments. In the Chu study, the acquisition of conversational units and the expansion of verbal behavior decreased incidences of physical "aggression", and several other reviews suggest similar effects.

The joining of the listener and speaker progresses from listener-speaker rotations with others, a likely precedent for the three major components of speaker-as-own-listener: say-do correspondence, self-talk conversational units, and naming.

Development of self

Robert Kohlenberg and Mavis Tsai (1991) created a behavior analytic model accounting for the development of one's “self”. Their model proposes that verbal processes can be used to form a stable sense of who we are through behavioral processes such as stimulus control. Kohlenberg and Tsai developed functional analytic psychotherapy to treat psychopathological disorders arising from the frequent invalidations of a child's statements such that “I” does not emerge.

Other behavior analytic models for personality disorders exist. They trace out the complex biological–environmental interaction for the development of avoidant and borderline personality disorders. They focus on reinforcement sensitivity theory, which states that some individuals are more or less sensitive to reinforcement than others. Nelson-Gray views problematic response classes as being maintained by reinforcing consequences or through rule governance.

Socialization

Over the last few decades, studies have supported the idea that contingent use of reinforcement and punishment over extended periods of time leads to the development of both pro-social and anti-social behaviors. However, research has shown that reinforcement is more effective than punishment when teaching behavior to a child. It has also been shown that modeling is more effective than “preaching” in developing pro-social behavior in children.

Rewards have also been closely studied in relation to the development of social behaviors in children. Research on the building of self-control, empathy, and cooperation has implicated rewards as a successful tactic, while sharing has been strongly linked with reinforcement.

The development of social skills in children is largely affected in the classroom setting by both teachers and peers. Reinforcement and punishment play major roles here as well. Peers frequently reinforce each other's behavior.

One of the major areas that teachers and peers influence is sex-typed behavior, while peers also largely influence modes of initiating interaction and aggression. Peers are more likely to punish cross-gender play while at the same time reinforcing play specific to gender. Some studies found that teachers were more likely to reinforce dependent behavior in females.

Behavioral principles have also been researched in emerging peer groups, focusing on status. Research shows that it takes different social skills to enter groups than it does to maintain or build one's status in groups. Research also suggests that neglected children are the least interactive and aversive, yet remain relatively unknown in groups.

Children suffering from social problems do see an improvement in social skills after behavior therapy and behavior modification (see applied behavior analysis). Modeling has been successfully used to increase participation by shy and withdrawn children. Shaping of socially desirable behavior through positive reinforcement seems to have some of the most positive effects in children experiencing social problems.

Anti-social behavior

Etiological models of anti-social behavior show considerable correlation with negative reinforcement and response matching. Escape conditioning, through the use of coercive behavior, has a powerful effect on the development and use of future anti-social tactics. The use of anti-social tactics during conflicts can be negatively reinforced and eventually seen as functional for the child in moment-to-moment interactions.

Anti-social behaviors will also develop in children when imitation is reinforced by social approval. If approval is not given by teachers or parents, it can often be given by peers. An example of this is swearing. Imitating a parent, brother, peer, or a character on TV, a child may engage in the anti-social behavior of swearing. Upon swearing, the child may be reinforced by those around them, which will lead to an increase in the anti-social behavior.

The role of stimulus control has also been extensively explored in the development of anti-social behavior. Recent behavioral study of anti-social behavior has focused on rule-governed behavior. While correspondence between saying and doing has long been an interest for behavior analysts in normal development and typical socialization, recent conceptualizations have been built around families that actively train children in anti-social rules, as well as children who fail to develop rule control.

Developmental depression with origins in childhood

A behavioral theory of depression was outlined by Charles Ferster. A later revision was provided by Peter Lewinsohn and Hyman Hops. Hops continued the work on the role of negative reinforcement in maintaining depression with Anthony Biglan. Additional factors, such as the role of loss of contingent relations through extinction and punishment, were taken from the early work of Martin Seligman. The most recent summary and conceptual revisions of the behavioral model were provided by Jonathan Kanter.

The standard model holds that depression can develop along multiple paths. It can be generated by five basic processes, including lack or loss of positive reinforcement, direct positive or negative reinforcement for depressive behavior, lack of rule-governed behavior or too much rule-governed behavior, and/or too much environmental punishment.

For children, some of these variables could set the pattern for lifelong problems. For example, a child whose depressive behavior is negatively reinforced because it stops fighting between parents could develop a lifelong pattern of depressive behavior in the case of conflicts. Two paths that are particularly important are (1) lack or loss of reinforcement because of missing necessary skills at a developmental cusp point and (2) the failure to develop adequate rule-governed behavior. For the latter, the child could develop a pattern of always choosing the short-term, small, immediate reward (e.g., escaping studying for a test) at the expense of the long-term larger reward (passing courses in middle school). The treatment approach that emerged from this research is called behavioral activation.

In addition, use of positive reinforcement has been shown to improve symptoms of depression in children. Reinforcement has also been shown to improve the self-concept in children with depression comorbid with learning difficulties.

Rawson and Tabb (1993) used reinforcement with 99 students (90 males and 9 females) aged from 8 to 12 with behavior disorders in a residential treatment program and showed significant reduction in depression symptoms compared to the control group.

Cognitive behavior

As children get older, direct control of contingencies is modified by the presence of rule-governed behavior. Rules serve as an establishing operation and set a motivational stage as well as a discriminative stage for behavior.

While the size of the effects on intellectual development is less clear, it appears that stimulation does have a facilitative effect on intellectual ability. However, it is important not to confuse the enhancing effect with the initial causal effect. Some data exist to show that children with developmental delays take more learning trials to acquire material.

Learned units and developmental retardation

Behavior analysts have spent considerable time measuring learning both in the classroom and at home. In these settings, the role of a lack of stimulation has often been evidenced in the development of mild and moderate mental retardation. Recent work has focused on a model of "developmental retardation", an area that emphasizes cumulative environmental effects and their role in developmental delays. To measure these developmental delays, subjects are given the opportunity to respond, defined as the instructional antecedent, and success is signified by the appropriate response and/or fluency in responses. Consequently, the learned unit is identified by the opportunity to respond in addition to given reinforcement.

One study employed this model by comparing students' time of instruction in affluent schools with time of instruction in lower-income schools. Results showed that lower-income schools displayed approximately 15 minutes less instruction than more affluent schools due to disruptions in classroom management and behavior management. Altogether, these disruptions added up to two years' worth of lost instructional time by grade 10.

The goal of behavior analytic research is to provide methods for reducing the overall number of children who fall into the retardation range of development by behavioral engineering.

Hart and Risley (1995, 1999) have completed extensive research on this topic as well. These researchers measured the rates of parent communication with children aged 2–4 years and correlated this information with the IQ scores of the children at age 9. Their analyses revealed that higher parental communication with younger children was positively correlated with higher IQ in older children, even after controlling for race, class, and socio-economic status. Additionally, they concluded that a significant change in IQ scores required intervention with at-risk children for approximately 40 hours per week.

Class formation

The formation of class-like behavior has also been a significant aspect in the behavioral analysis of development. This research has provided multiple explanations for the development and formation of class-like behavior, including primary stimulus generalization, an analysis of abstraction, relational frame theory, stimulus class analysis (sometimes referred to as recombinative generalization), stimulus equivalence, and response class analysis. Multiple processes for class-like formation provide behavior analysts with relatively pragmatic explanations for common issues of novelty and generalization.

Responses are organized based upon the particular form needed to fit the current environmental challenges as well as the functional consequences. An example of large response classes lies in contingency adduction, which is an area that needs much further research, especially with a focus on how large classes of concepts shift. For example, as Piaget observed, individuals at the pre-operational stage tend to have limits in their ability to preserve information (Piaget & Szeminska, 1952). While children's training in the development of conservation skills has been generally successful, complications have been noted. Behavior analysts argue that this is largely due to the number of tool skills that need to be developed and integrated. Contingency adduction offers a process by which such skills can be synthesized, which is why it deserves further attention, particularly from early childhood interventionists.

Autism

Ferster (1961) was the first researcher to posit a behavior analytic theory for autism. Ferster's model saw autism as a by-product of social interactions between parent and child. Ferster presented an analysis of how a variety of contingencies of reinforcement between parent and child during early childhood might establish and strengthen a repertoire of behaviors typically seen in children diagnosed with autism. A similar model was proposed by Drash and Tutor (1993), who developed the contingency-shaped or behavioral incompatibility theory of autism. They identified at least six reinforcement paradigms that may contribute to significant deficiencies in verbal behavior typically characteristic of children diagnosed as autistic. They proposed that each of these paradigms may also create a repertoire of avoidance responses that could contribute to the establishment of a repertoire of behavior that would be incompatible with the acquisition of age-appropriate verbal behavior.

More recent models attribute autism to neurological and sensory systems that are overworked and subsequently produce the autistic repertoire. Lovaas and Smith (1989) proposed that children with autism have a mismatch between their nervous systems and the environment, while Bijou and Ghezzi (1999) proposed a behavioral interference theory. However, both the environmental mismatch model and the interference model were recently reviewed, and new evidence shows support for the notion that the development of autistic behaviors is due to escape and avoidance of certain types of sensory stimuli. However, most behavioral models of autism remain largely speculative due to limited research efforts.

Role in education

One of the largest impacts of behavior analysis of child development is its role in the field of education. In 1968, Siegfried Engelmann used operant conditioning techniques in combination with rule learning to produce the direct instruction curriculum. In addition, Fred S. Keller used similar techniques to develop programmed instruction. B.F. Skinner developed a programmed instruction curriculum for teaching handwriting. One of Skinner's students, Ogden Lindsley, developed a standardized semilogarithmic chart, the "Standard Behavior Chart," now the "Standard Celeration Chart," used to record frequencies of behavior and to allow direct visual comparisons of both frequencies and changes in those frequencies (termed "celeration"). The use of this charting tool for analysis of instructional effects or other environmental variables through the direct measurement of learner performance has become known as precision teaching.
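
Celeration is a multiplicative change in frequency per unit of time, which is why steady growth appears as a straight line on a semilogarithmic chart. A rough way to estimate it is to fit a least-squares line to the base-10 logarithm of the recorded frequencies and convert the slope into a weekly multiplier. The data below are invented, and the fitting method is one simple choice rather than a description of how precision teachers draw celeration lines on paper charts.

```python
import math


def weekly_celeration(days, frequencies):
    """Estimate celeration as a times-per-week multiplier.

    Fits an ordinary least-squares line to log10(frequency) against day
    number, then converts the daily slope to a 7-day multiplier.
    Frequencies must be positive and days must not all be equal.
    """
    if len(days) != len(frequencies) or len(days) < 2:
        raise ValueError("need at least two paired observations")
    logs = [math.log10(f) for f in frequencies]
    mean_day = sum(days) / len(days)
    mean_log = sum(logs) / len(logs)
    numerator = sum((d - mean_day) * (y - mean_log) for d, y in zip(days, logs))
    denominator = sum((d - mean_day) ** 2 for d in days)
    slope_per_day = numerator / denominator
    return 10 ** (slope_per_day * 7)


# Hypothetical data: correct responses per minute that double every 7 days.
days = [0, 7, 14, 21]
correct_per_minute = [5, 10, 20, 40]
print(f"Celeration: x{weekly_celeration(days, correct_per_minute):.2f} per week")
```

A multiplier above 1 indicates accelerating performance, and a multiplier below 1 indicates decelerating performance.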

Behavior analysts with a focus on behavioral development form the basis of a movement called positive behavior support (PBS). PBS has focused on building safe schools.

In education, there are many different kinds of learning that are implemented to improve skills needed for interactions later in life. Examples of this differential learning include social and language skills. According to the NWREL (Northwest Regional Educational Laboratory), too much interaction with technology will hinder a child's social interactions with others due to its potential to become an addiction and subsequently lead to anti-social behavior. In terms of language development, children typically know about 5–20 different words by 18 months of age.

Critiques of behavioral approach and new developments

Behavior analytic theories have been criticized for their focus on the explanation of the acquisition of relatively simple behavior (e.g., the behavior of nonhuman species, of infants, and of individuals who are intellectually disabled or autistic) rather than of complex behavior (see Commons & Miller). Michael Commons continued behavior analysis's rejection of mentalism and the substitution of a task analysis of the particular skills to be learned. Commons has created a behavior analytic model of more complex behavior, called the model of hierarchical complexity, in line with more contemporary quantitative behavior analytic models. Commons constructed the model of hierarchical complexity of tasks and their corresponding stages of performance using just three main axioms.

In the study of development, recent work has been generated regarding the combination of behavior analytic views with dynamical systems theory. The added benefit of this approach is its portrayal of how small patterns of changes in behavior in terms of principles and mechanisms over time can produce substantial changes in development.

Current research in behavior analysis attempts to extend the patterns learned in childhood and to determine their impact on adult development.

Professional organizations

The Association for Behavior Analysis International has a special interest group for the behavior analysis of child development.

Doctoral-level behavior analysts who are psychologists belong to the American Psychological Association's Division 25: Behavior Analysis.

The World Association for Behavior Analysis has a certification in behavior therapy. The exam draws questions on behavioral theories of child development as well as behavioral theories of child psychopathology.