From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Superintelligence

A superintelligence is a hypothetical agent that possesses intelligence far surpassing that of the brightest and most gifted human minds. "Superintelligence" may also refer to a property of problem-solving systems (e.g., superintelligent language translators or engineering assistants) whether or not these high-level intellectual competencies are embodied in agents that act in the world. A superintelligence may or may not be created by an intelligence explosion, and may or may not be associated with a technological singularity.

University of Oxford philosopher Nick Bostrom defines superintelligence as "any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest". The program Fritz falls short of superintelligence—even though it is much better than humans at chess—because Fritz cannot outperform humans in other tasks. Following Hutter and Legg, Bostrom treats superintelligence as general dominance at goal-oriented behavior, leaving open whether an artificial or human superintelligence would possess capacities such as intentionality (cf. the Chinese room argument) or first-person consciousness (cf. the hard problem of consciousness).

Technological researchers disagree about how likely present-day human intelligence is to be surpassed. Some argue that advances in artificial intelligence (AI) will probably result in general reasoning systems that lack human cognitive limitations. Others believe that humans will evolve or directly modify their biology so as to achieve radically greater intelligence. A number of futures studies scenarios combine elements from both of these possibilities, suggesting that humans are likely to interface with computers, or upload their minds to computers, in a way that enables substantial intelligence amplification.

Some researchers believe that superintelligence will likely follow shortly after the development of artificial general intelligence. The first generally intelligent machines are likely to immediately hold an enormous advantage in at least some forms of mental capability, including the capacity for perfect recall, a vastly superior knowledge base, and the ability to multitask in ways not possible for biological entities. This may give them the opportunity, either as a single being or as a new species, to become much more powerful than humans and to displace them.

A number of scientists and forecasters argue for prioritizing early research into the possible benefits and risks of human and machine cognitive enhancement, because of the potential social impact of such technologies.

Feasibility of artificial superintelligence

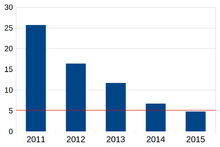

Error rate of AI by year. The red line shows the error rate of a trained human.

Philosopher David Chalmers argues that artificial general intelligence is a very likely path to superhuman intelligence. Chalmers breaks this claim down into an argument that AI can achieve equivalence to human intelligence, that it can be extended to surpass human intelligence, and that it can be further amplified to completely dominate humans across arbitrary tasks.

Concerning human-level equivalence, Chalmers argues that the human brain is a mechanical system, and therefore ought to be emulatable by synthetic materials. He also notes that human intelligence was able to biologically evolve, making it more likely that human engineers will be able to recapitulate this invention. Evolutionary algorithms in particular should be able to produce human-level AI. Concerning intelligence extension and amplification, Chalmers argues that new AI technologies can generally be improved on, and that this is particularly likely when the invention can assist in designing new technologies.

If research into strong AI produced sufficiently intelligent software, it would be able to reprogram and improve itself – a feature called "recursive self-improvement". It would then be even better at improving itself, and could continue doing so in a rapidly increasing cycle, leading to a superintelligence. This scenario is known as an intelligence explosion. Such an intelligence would not have the limitations of human intellect, and may be able to invent or discover almost anything.

Computer components already greatly surpass human performance in speed. Bostrom writes, "Biological neurons operate at a peak speed of about 200 Hz, a full seven orders of magnitude slower than a modern microprocessor (~2 GHz)." Moreover, neurons transmit spike signals across axons at no greater than 120 m/s, "whereas existing electronic processing cores can communicate optically at the speed of light". Thus, the simplest example of a superintelligence may be an emulated human mind run on much faster hardware than the brain. A human-like reasoner that could think millions of times faster than current humans would have a dominant advantage in most reasoning tasks, particularly ones that require haste or long strings of actions.
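The "seven orders of magnitude" figure in the quotation can be checked with simple arithmetic. The sketch below uses only the two frequencies quoted above (200 Hz and roughly 2 GHz). The helper names `orders_of_magnitude` and `subjective_years`, and the million-fold speedup passed to the latter, are illustrative assumptions rather than anything taken from Bostrom. The comparison is between raw switching rates and says nothing about how much useful reasoning is done per cycle.

```python
import math

NEURON_PEAK_HZ = 200.0   # peak neuron firing rate, as quoted above
CPU_CLOCK_HZ = 2e9       # "~2 GHz" microprocessor, as quoted above


def orders_of_magnitude(fast: float, slow: float) -> float:
    """Return log10 of the ratio fast/slow."""
    return math.log10(fast / slow)


def subjective_years(wall_clock_hours: float, speedup: float) -> float:
    """Years of subjective thinking time that fit into the given wall-clock
    hours for a mind running `speedup` times faster than a human.
    (Hypothetical helper; the speedup value is an assumption.)"""
    hours_per_year = 24 * 365.25
    return wall_clock_hours * speedup / hours_per_year


speed_ratio = CPU_CLOCK_HZ / NEURON_PEAK_HZ
print(f"clock ratio: {speed_ratio:.0e}")
print(f"orders of magnitude: {orders_of_magnitude(CPU_CLOCK_HZ, NEURON_PEAK_HZ):.1f}")

# Assumed scenario: an emulated mind running one million times faster.
print(f"subjective years per wall-clock hour: {subjective_years(1.0, 1e6):.0f}")
```

Under the assumed million-fold speedup, a single wall-clock hour corresponds to more than a century of subjective thinking time, which is the sense in which such a reasoner would have an advantage in tasks that require haste or long strings of actions.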

Another advantage of computers is modularity: their size or computational capacity can be increased. A non-human (or modified human) brain could, like many supercomputers, become much larger than a present-day human brain. Bostrom also raises the possibility of collective superintelligence: a large enough number of separate reasoning systems, if they communicated and coordinated well enough, could act in aggregate with far greater capabilities than any sub-agent.

There may also be ways to qualitatively improve on human reasoning and decision-making. Humans appear to differ from chimpanzees in the ways we think more than we differ in brain size or speed. Humans outperform non-human animals in large part because of new or enhanced reasoning capacities, such as long-term planning and language use. If there are other possible improvements to reasoning that would have a similarly large impact, this makes it likelier that an agent can be built that outperforms humans in the same fashion humans outperform chimpanzees.

All of the above advantages hold for artificial superintelligence, but it is not clear how many hold for biological superintelligence. Physiological constraints limit the speed and size of biological brains in many ways that are inapplicable to machine intelligence. As such, writers on superintelligence have devoted much more attention to superintelligent AI scenarios.

Feasibility of biological superintelligence

Carl Sagan suggested that the advent of Caesarean sections and in vitro fertilization may permit humans to evolve larger heads, resulting in improvements via natural selection in the heritable component of human intelligence. By contrast, Gerald Crabtree has argued that decreased selection pressure is resulting in a slow, centuries-long reduction in human intelligence, and that this process instead is likely to continue into the future. There is no scientific consensus concerning either possibility, and in both cases the biological change would be slow, especially relative to rates of cultural change.

Selective breeding, nootropics, NSI-189, MAOIs, epigenetic modulation, and genetic engineering could improve human intelligence more rapidly. Bostrom writes that if we come to understand the genetic component of intelligence, pre-implantation genetic diagnosis could be used to select for embryos with as much as 4 points of IQ gain (if one embryo is selected out of two), or with larger gains (e.g., up to 24.3 IQ points gained if one embryo is selected out of 1000). If this process is iterated over many generations, the gains could be an order of magnitude greater. Bostrom suggests that deriving new gametes from embryonic stem cells could be used to iterate the selection process very rapidly. A well-organized society of high-intelligence humans of this sort could potentially achieve collective superintelligence.
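One way to see where gains of this size could come from is to model selection as picking the best of n independent draws from a normal distribution. The sketch below is not presented as Bostrom's own calculation. It assumes that predicted IQ among candidate embryos is normally distributed with a standard deviation of 7.5 points, a value chosen here only because it approximately reproduces the figures quoted above. The constant `SIGMA_IQ` and all function names are hypothetical.

```python
import math

# ASSUMPTION: spread of predicted IQ among candidate embryos, in IQ points.
# Chosen for illustration because it roughly reproduces the quoted gains.
SIGMA_IQ = 7.5


def normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_max_standard_normal(n: int, lo: float = -10.0,
                                 hi: float = 10.0, steps: int = 20000) -> float:
    """Expected value of the largest of n independent standard normal draws.

    The maximum has density n * pdf(x) * cdf(x)**(n - 1); the expectation is
    computed by trapezoidal integration of x times that density over [lo, hi].
    """
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        density = n * normal_pdf(x) * normal_cdf(x) ** (n - 1)
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * x * density
    return total * h


def expected_iq_gain(n: int) -> float:
    """Expected gain (in IQ points) from selecting the best of n embryos."""
    return SIGMA_IQ * expected_max_standard_normal(n)


for n in (2, 1000):
    print(f"best of {n}: about {expected_iq_gain(n):.1f} IQ points")
```

Under these assumptions the expected gain grows only slowly with the number of embryos, since it tracks the expected maximum of n normal draws. That slow growth is why iterating selection over generations, rather than enlarging a single batch, is the route to much larger gains.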

Alternatively, collective intelligence might be constructible by better organizing humans at present levels of individual intelligence. A number of writers have suggested that human civilization, or some aspect of it (e.g., the Internet, or the economy), is coming to function like a global brain with capacities far exceeding its component agents. If this systems-based superintelligence relies heavily on artificial components, however, it may qualify as an AI rather than as a biology-based superorganism. A prediction market is sometimes considered an example of a working collective intelligence system consisting of humans only (assuming algorithms are not used to inform decisions).

A final method of intelligence amplification would be to directly enhance individual humans, as opposed to enhancing their social or reproductive dynamics. This could be achieved using nootropics, somatic gene therapy, or brain–computer interfaces. However, Bostrom expresses skepticism about the scalability of the first two approaches, and argues that designing a superintelligent cyborg interface is an AI-complete problem.

Forecasts

Most surveyed AI researchers expect machines to eventually be able to rival humans in intelligence, though there is little consensus on when this will likely happen. At the 2006 AI@50 conference, 18% of attendees reported expecting machines to be able "to simulate learning and every other aspect of human intelligence" by 2056; 41% of attendees expected this to happen sometime after 2056; and 41% expected machines to never reach that milestone.

In a survey of the 100 most cited authors in AI (as of May 2013, according to Microsoft Academic Search), respondents were asked for the year by which they expected machines "that can carry out most human professions at least as well as a typical human" (assuming no global catastrophe occurs). The median year given with 10% confidence was 2024 (mean 2034, st. dev. 33 years); with 50% confidence, 2050 (mean 2072, st. dev. 110 years); and with 90% confidence, 2070 (mean 2168, st. dev. 342 years). These estimates exclude the 1.2% of respondents who said no year would ever reach 10% confidence, the 4.1% who said "never" for 50% confidence, and the 16.5% who said "never" for 90% confidence. Respondents assigned a median 50% probability to the possibility that machine superintelligence will be invented within 30 years of the invention of approximately human-level machine intelligence.

Design considerations

Bostrom expressed concern about what values a superintelligence should be designed to have. He compared several proposals:

- The coherent extrapolated volition (CEV) proposal is that it should have the values upon which humans would converge.

- The moral rightness (MR) proposal is that it should value moral rightness.

- The moral permissibility (MP) proposal is that it should value staying within the bounds of moral permissibility (and otherwise have CEV values).

Bostrom clarifies these terms:

instead of implementing humanity's coherent extrapolated volition, one could try to build an AI with the goal of doing what is morally right, relying on the AI’s superior cognitive capacities to figure out just which actions fit that description. We can call this proposal “moral rightness” (MR) ... MR would also appear to have some disadvantages. It relies on the notion of “morally right,” a notoriously difficult concept, one with which philosophers have grappled since antiquity without yet attaining consensus as to its analysis. Picking an erroneous explication of “moral rightness” could result in outcomes that would be morally very wrong ... The path to endowing an AI with any of these [moral] concepts might involve giving it general linguistic ability (comparable, at least, to that of a normal human adult). Such a general ability to understand natural language could then be used to understand what is meant by “morally right.” If the AI could grasp the meaning, it could search for actions that fit ...

One might try to preserve the basic idea of the MR model while reducing its demandingness by focusing on moral permissibility: the idea being that we could let the AI pursue humanity’s CEV so long as it did not act in ways that are morally impermissible.

Responding to Bostrom, Santos-Lang raised concern that developers may attempt to start with a single kind of superintelligence.

Potential threat to humanity

It has been suggested that if AI systems rapidly become superintelligent, they may take unforeseen actions or out-compete humanity. Researchers have argued that, by way of an "intelligence explosion," a self-improving AI could become so powerful as to be unstoppable by humans.

Concerning human extinction scenarios, Bostrom (2002) identifies superintelligence as a possible cause:

When we create the first superintelligent entity, we might make a mistake and give it goals that lead it to annihilate humankind, assuming its enormous intellectual advantage gives it the power to do so. For example, we could mistakenly elevate a subgoal to the status of a supergoal. We tell it to solve a mathematical problem, and it complies by turning all the matter in the solar system into a giant calculating device, in the process killing the person who asked the question.

In theory, since a superintelligent AI would be able to bring about almost any possible outcome and to thwart any attempt to prevent the implementation of its goals, many uncontrolled, unintended consequences could arise. It could kill off all other agents, persuade them to change their behavior, or block their attempts at interference. Eliezer Yudkowsky illustrates such instrumental convergence as follows: "The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else."

This presents the AI control problem: how to build an intelligent agent that will aid its creators, while avoiding inadvertently building a superintelligence that will harm its creators. The danger of not designing control right "the first time" is that a superintelligence may be able to seize power over its environment and prevent humans from shutting it down. Potential AI control strategies include "capability control" (limiting an AI's ability to influence the world) and "motivational control" (building an AI whose goals are aligned with human values).

Bill Hibbard advocates for public education about superintelligence and public control over the development of superintelligence.