Differential geometry is a mathematical discipline that studies the geometry of smooth shapes and smooth spaces, otherwise known as smooth manifolds. It uses the techniques of single variable calculus, vector calculus, linear algebra and multilinear algebra. The field has its origins in the study of spherical geometry as far back as antiquity. It also relates to astronomy, the geodesy of the Earth, and later the study of hyperbolic geometry by Lobachevsky. The simplest examples of smooth spaces are the plane and space curves and surfaces in the three-dimensional Euclidean space, and the study of these shapes formed the basis for development of modern differential geometry during the 18th and 19th centuries.

Since the late 19th century, differential geometry has grown into a field concerned more generally with geometric structures on differentiable manifolds. A geometric structure is one which defines some notion of size, distance, shape, volume, or other rigidifying structure. For example, in Riemannian geometry distances and angles are specified, in symplectic geometry volumes may be computed, in conformal geometry only angles are specified, and in gauge theory certain fields are given over the space. Differential geometry is closely related to, and is sometimes taken to include, differential topology, which concerns itself with properties of differentiable manifolds that do not rely on any additional geometric structure (see that article for more discussion on the distinction between the two subjects). Differential geometry is also related to the geometric aspects of the theory of differential equations, otherwise known as geometric analysis.

Differential geometry finds applications throughout mathematics and the natural sciences. Most prominently the language of differential geometry was used by Albert Einstein in his theory of general relativity, and subsequently by physicists in the development of quantum field theory and the standard model of particle physics. Outside of physics, differential geometry finds applications in chemistry, economics, engineering, control theory, computer graphics and computer vision, and recently in machine learning.

History and development

The history and development of differential geometry as a subject begins at least as far back as classical antiquity. It is intimately linked to the development of geometry more generally, of the notion of space and shape, and of topology, especially the study of manifolds. In this section we focus primarily on the history of the application of infinitesimal methods to geometry, and later to the ideas of tangent spaces, and eventually the development of the modern formalism of the subject in terms of tensors and tensor fields.

Classical antiquity until the Renaissance (300 BC – 1600 AD)

The study of differential geometry, or at least the study of the geometry of smooth shapes, can be traced back at least to classical antiquity. In particular, much was known about the geometry of the Earth, a spherical geometry, in the time of the ancient Greek mathematicians. Famously, Eratosthenes calculated the circumference of the Earth around 200 BC, and around 150 AD Ptolemy in his Geography introduced the stereographic projection for the purposes of mapping the shape of the Earth. Implicitly throughout this time principles that form the foundation of differential geometry and calculus were used in geodesy, although in a much simplified form. Namely, as far back as Euclid's Elements it was understood that a straight line could be defined by its property of providing the shortest distance between two points, and applying this same principle to the surface of the Earth leads to the conclusion that great circles, which are only locally similar to straight lines in a flat plane, provide the shortest path between two points on the Earth's surface. Indeed, the measurements of distance along such geodesic paths by Eratosthenes and others can be considered a rudimentary measure of arclength of curves, a concept which did not see a rigorous definition in terms of calculus until the 1600s.

Around this time there were only minimal overt applications of the theory of infinitesimals to the study of geometry, a precursor to the modern calculus-based study of the subject. In Euclid's Elements the notion of tangency of a line to a circle is discussed, and Archimedes applied the method of exhaustion to compute the areas of smooth shapes such as the circle, and the volumes of smooth three-dimensional solids such as the sphere, cones, and cylinders.

There was little development in the theory of differential geometry between antiquity and the beginning of the Renaissance. Before the development of calculus by Newton and Leibniz, the most significant development in the understanding of differential geometry came from Gerardus Mercator's development of the Mercator projection as a way of mapping the Earth. Mercator had an understanding of the advantages and pitfalls of his map design, and in particular was aware of the conformal nature of his projection, as well as the difference between praga, the lines of shortest distance on the Earth, and the directio, the straight line paths on his map. Mercator noted that the praga were oblique curvatur in this projection. This fact reflects the lack of a metric-preserving map of the Earth's surface onto a flat plane, a consequence of the later Theorema Egregium of Gauss.

After calculus (1600–1800)

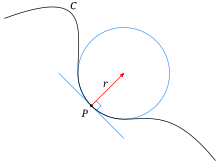

The first systematic or rigorous treatment of geometry using the theory of infinitesimals and notions from calculus began around the 1600s when calculus was first developed by Gottfried Leibniz and Isaac Newton. At this time, the recent work of René Descartes introducing analytic coordinates to geometry allowed geometric shapes of increasing complexity to be described rigorously. In particular around this time Pierre de Fermat, Newton, and Leibniz began the study of plane curves and the investigation of concepts such as points of inflection and circles of osculation, which aid in the measurement of curvature. Indeed, already in his first paper on the foundations of calculus, Leibniz notes that the infinitesimal condition $d^2 y = 0$ indicates the existence of an inflection point. Shortly after this time the Bernoulli brothers, Jacob and Johann, made important early contributions to the use of infinitesimals to study geometry. In lectures by Johann Bernoulli at the time, later collated by L'Hôpital into the first textbook on differential calculus, the tangents to plane curves of various types are computed using the condition $dy = 0$, and similarly points of inflection are calculated. At this same time the orthogonality between the osculating circles of a plane curve and the tangent directions is realised, and the first analytical formula for the radius of an osculating circle, essentially the first analytical formula for the notion of curvature, is written down.

In the wake of the development of analytic geometry and plane curves, Alexis Clairaut began the study of space curves at just the age of 16. In his book Clairaut introduced the notion of tangent and subtangent directions to space curves in relation to the directions which lie along a surface on which the space curve lies. Thus Clairaut demonstrated an implicit understanding of the tangent space of a surface and studied this idea using calculus for the first time. Importantly Clairaut introduced the terminology of curvature and double curvature, essentially the notion of principal curvatures later studied by Gauss and others.

Around this same time, Leonhard Euler, originally a student of Johann Bernoulli, provided many significant contributions not just to the development of geometry, but to mathematics more broadly. With regard to differential geometry, Euler studied the notion of a geodesic on a surface, deriving the first analytical geodesic equation, and later introduced the first set of intrinsic coordinate systems on a surface, beginning the theory of intrinsic geometry upon which modern geometric ideas are based. Around this time Euler's study of mechanics in the Mechanica led to the realization that a mass traveling along a surface not under the effect of any force would traverse a geodesic path, an early precursor to the important foundational ideas of Einstein's general relativity, and also to the Euler–Lagrange equations and the first theory of the calculus of variations, which underpins in modern differential geometry many techniques in symplectic geometry and geometric analysis. This theory was used by Lagrange, a co-developer of the calculus of variations, to derive the first differential equation describing a minimal surface in terms of the Euler–Lagrange equation. In 1760 Euler proved a theorem expressing the curvature of a space curve on a surface in terms of the principal curvatures, known as Euler's theorem.

Later in the 1700s, the new French school led by Gaspard Monge began to make contributions to differential geometry. Monge made important contributions to the theory of plane curves and surfaces, and studied surfaces of revolution and envelopes of plane curves and space curves. Several students of Monge made contributions to this same theory; for example, Charles Dupin provided a new interpretation of Euler's theorem in terms of the principal curvatures, which is the modern form of the equation.

Intrinsic geometry and non-Euclidean geometry (1800–1900)

The field of differential geometry became an area of study considered in its own right, distinct from the broader idea of analytic geometry, in the 1800s. This happened primarily through the foundational work of Carl Friedrich Gauss and Bernhard Riemann, together with the important contributions of Nikolai Lobachevsky on hyperbolic and non-Euclidean geometry and, throughout the same period, the development of projective geometry.

In 1827 Gauss produced the Disquisitiones generales circa superficies curvas, detailing the general theory of curved surfaces; it has been dubbed the single most important work in the history of differential geometry. On the strength of this work and his subsequent papers and unpublished notes on the theory of surfaces, Gauss has been dubbed the inventor of non-Euclidean geometry and the inventor of intrinsic differential geometry. In his fundamental paper Gauss introduced the Gauss map, Gaussian curvature, and the first and second fundamental forms, proved the Theorema Egregium showing the intrinsic nature of the Gaussian curvature, and studied geodesics, computing the area of a geodesic triangle in various non-Euclidean geometries on surfaces.

At this time Gauss was already of the opinion that the standard paradigm of Euclidean geometry should be discarded, and was in possession of private manuscripts on non-Euclidean geometry which informed his study of geodesic triangles. Around this same time János Bolyai and Lobachevsky independently discovered hyperbolic geometry and thus demonstrated the existence of consistent geometries outside Euclid's paradigm. Concrete models of hyperbolic geometry were produced by Eugenio Beltrami later in the 1860s, and Felix Klein coined the term non-Euclidean geometry in 1871, and through the Erlangen program put Euclidean and non-Euclidean geometries on the same footing. Implicitly, the spherical geometry of the Earth that had been studied since antiquity was a non-Euclidean geometry, an elliptic geometry.

The development of intrinsic differential geometry in the language of Gauss was spurred on by his student, Bernhard Riemann, in his Habilitationsschrift, On the hypotheses which lie at the foundation of geometry. In this work Riemann introduced the notion of a Riemannian metric and the Riemannian curvature tensor for the first time, and began the systematic study of differential geometry in higher dimensions. This intrinsic point of view in terms of the Riemannian metric, denoted by $ds^2$ by Riemann, was the development of an idea of Gauss's about the linear element $ds$ of a surface. At this time Riemann began to introduce the systematic use of linear algebra and multilinear algebra into the subject, making great use of the theory of quadratic forms in his investigation of metrics and curvature. Riemann did not yet develop the modern notion of a manifold, as even the notion of a topological space had not been encountered, but he did propose that it might be possible to investigate or measure the properties of the metric of spacetime through the analysis of masses within spacetime, linking with the earlier observation of Euler that masses under the effect of no forces would travel along geodesics on surfaces, and predicting Einstein's fundamental observation of the equivalence principle a full 60 years before it appeared in the scientific literature.

In the wake of Riemann's new description, the focus of techniques used to study differential geometry shifted from the ad hoc and extrinsic methods of the study of curves and surfaces to a more systematic approach in terms of tensor calculus and Klein's Erlangen program, and progress increased in the field. The notion of groups of transformations was developed by Sophus Lie and Jean Gaston Darboux, leading to important results in the theory of Lie groups and symplectic geometry. The notion of differential calculus on curved spaces was studied by Elwin Christoffel, who introduced the Christoffel symbols which describe the covariant derivative in 1868, and by others including Eugenio Beltrami who studied many analytic questions on manifolds. In 1899 Luigi Bianchi produced his Lectures on differential geometry which studied differential geometry from Riemann's perspective, and a year later Tullio Levi-Civita and Gregorio Ricci-Curbastro produced their textbook systematically developing the theory of absolute differential calculus and tensor calculus. It was in this language that differential geometry was used by Einstein in the development of general relativity and pseudo-Riemannian geometry.

Modern differential geometry (1900–2000)

The subject of modern differential geometry emerged from the early 1900s in response to the foundational contributions of many mathematicians, including importantly the work of Henri Poincaré on the foundations of topology. At the start of the 1900s there was a major movement within mathematics to formalise the foundational aspects of the subject to avoid crises of rigour and accuracy, known as Hilbert's program. As part of this broader movement, the notion of a topological space was distilled by Felix Hausdorff in 1914, and by 1942 there were many different notions of manifold of a combinatorial and differential-geometric nature.

Interest in the subject was also focused by the emergence of Einstein's theory of general relativity and the importance of the Einstein field equations. Einstein's theory popularised the tensor calculus of Ricci and Levi-Civita and introduced the notation $g$ for a Riemannian metric, and $\Gamma$ for the Christoffel symbols, both coming from G in Gravitation. Élie Cartan helped reformulate the foundations of the differential geometry of smooth manifolds in terms of exterior calculus and the theory of moving frames, leading in the world of physics to Einstein–Cartan theory.

Following this early development, many mathematicians contributed to the development of the modern theory, including Jean-Louis Koszul who introduced connections on vector bundles, Shiing-Shen Chern who introduced characteristic classes to the subject and began the study of complex manifolds, Sir William Vallance Douglas Hodge and Georges de Rham who expanded understanding of differential forms, Charles Ehresmann who introduced the theory of fibre bundles and Ehresmann connections, and others. Of particular importance was Hermann Weyl who made important contributions to the foundations of general relativity, introduced the Weyl tensor providing insight into conformal geometry, and first defined the notion of a gauge leading to the development of gauge theory in physics and mathematics.

In the middle and late 20th century differential geometry as a subject expanded in scope and developed links to other areas of mathematics and physics. The development of gauge theory and Yang–Mills theory in physics brought bundles and connections into focus, leading to developments in gauge theory. Many analytical results were investigated including the proof of the Atiyah–Singer index theorem. The development of complex geometry was spurred on by parallel results in algebraic geometry, and results in the geometry and global analysis of complex manifolds were proven by Shing-Tung Yau and others. In the latter half of the 20th century new analytic techniques were developed for curvature flows such as the Ricci flow, which culminated in Grigori Perelman's proof of the Poincaré conjecture. During this same period, primarily due to the influence of Michael Atiyah, new links between theoretical physics and differential geometry were formed. Techniques from the study of the Yang–Mills equations and gauge theory were used by mathematicians to develop new invariants of smooth manifolds. Physicists such as Edward Witten, the only physicist to be awarded a Fields Medal, made new impacts in mathematics by using topological quantum field theory and string theory to make predictions and provide frameworks for new rigorous mathematics, which has resulted for example in the conjectural mirror symmetry and the Seiberg–Witten invariants.

Branches

Riemannian geometry

Riemannian geometry studies Riemannian manifolds, smooth manifolds with a Riemannian metric. This is a concept of distance expressed by means of a smooth positive definite symmetric bilinear form defined on the tangent space at each point. Riemannian geometry generalizes Euclidean geometry to spaces that are not necessarily flat, though they still resemble Euclidean space at each point infinitesimally, i.e. in the first order of approximation. Various concepts based on length, such as the arc length of curves, area of plane regions, and volume of solids all possess natural analogues in Riemannian geometry. The notion of a directional derivative of a function from multivariable calculus is extended to the notion of a covariant derivative of a tensor. Many concepts of analysis and differential equations have been generalized to the setting of Riemannian manifolds.
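To make the link between a metric and arc length concrete, the following minimal sketch approximates the length of a curve as the integral of $\sqrt{g(c'(t), c'(t))}$. It assumes the round metric on the unit sphere in colatitude and longitude coordinates; the names `sphere_metric` and `curve_length` and the step count are illustrative choices, not part of any standard library.

```python
import math

def sphere_metric(theta, phi):
    """Round metric on the unit sphere in (theta, phi) coordinates: diag(1, sin^2 theta)."""
    return ((1.0, 0.0), (0.0, math.sin(theta) ** 2))

def curve_length(curve, velocity, metric, t0, t1, steps=2000):
    """Approximate the integral of sqrt(g(c'(t), c'(t))) over [t0, t1] by the midpoint rule."""
    h = (t1 - t0) / steps
    total = 0.0
    for i in range(steps):
        t = t0 + (i + 0.5) * h
        g = metric(*curve(t))          # metric coefficients at the point c(t)
        v = velocity(t)                # coordinate components of c'(t)
        speed_sq = sum(g[a][b] * v[a] * v[b] for a in range(2) for b in range(2))
        total += math.sqrt(speed_sq) * h
    return total

# Circle of latitude at colatitude theta0, traversed once in the phi direction.
theta0 = math.pi / 3
latitude = lambda t: (theta0, t)
latitude_velocity = lambda t: (0.0, 1.0)

length = curve_length(latitude, latitude_velocity, sphere_metric, 0.0, 2 * math.pi)
print(length, 2 * math.pi * math.sin(theta0))  # numerical value next to 2*pi*sin(theta0)
```

The same function works for any two-dimensional metric supplied as a 2×2 table of coefficients; only `sphere_metric` is specific to the sphere.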

A distance-preserving diffeomorphism between Riemannian manifolds is called an isometry. This notion can also be defined locally, i.e. for small neighborhoods of points. Any two regular curves are locally isometric. However, the Theorema Egregium of Carl Friedrich Gauss showed that for surfaces, the existence of a local isometry imposes that the Gaussian curvatures at the corresponding points must be the same. In higher dimensions, the Riemann curvature tensor is an important pointwise invariant associated with a Riemannian manifold that measures how close it is to being flat. An important class of Riemannian manifolds is the Riemannian symmetric spaces, whose curvature is not necessarily constant. These are the closest analogues to the "ordinary" plane and space considered in Euclidean and non-Euclidean geometry.
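Because Gaussian curvature depends only on the metric, it can be estimated from the metric coefficients alone. The sketch below is a rough numerical illustration, assuming orthogonal coordinates in which the metric reads $E\,du^2 + G\,dv^2$ and using the classical formula $K = -\frac{1}{2\sqrt{EG}}\left[\partial_u\!\left(\frac{G_u}{\sqrt{EG}}\right) + \partial_v\!\left(\frac{E_v}{\sqrt{EG}}\right)\right]$. The function name and the finite-difference step `h` are hypothetical choices.

```python
import math

def gaussian_curvature(E, G, u, v, h=1e-4):
    """Gaussian curvature of the metric E du^2 + G dv^2 (orthogonal coordinates, F = 0).

    Every derivative in the classical formula is replaced by a central finite difference.
    """
    def root(a, b):
        return math.sqrt(E(a, b) * G(a, b))

    def first_term(a, b):   # G_u / sqrt(EG)
        return (G(a + h, b) - G(a - h, b)) / (2 * h) / root(a, b)

    def second_term(a, b):  # E_v / sqrt(EG)
        return (E(a, b + h) - E(a, b - h)) / (2 * h) / root(a, b)

    d_first = (first_term(u + h, v) - first_term(u - h, v)) / (2 * h)
    d_second = (second_term(u, v + h) - second_term(u, v - h)) / (2 * h)
    return -(d_first + d_second) / (2 * root(u, v))

# Unit sphere: d(theta)^2 + sin^2(theta) d(phi)^2.
k_sphere = gaussian_curvature(lambda u, v: 1.0, lambda u, v: math.sin(u) ** 2, 1.0, 0.5)
# Flat plane in polar coordinates: dr^2 + r^2 d(phi)^2.
k_plane = gaussian_curvature(lambda u, v: 1.0, lambda u, v: u ** 2, 1.0, 0.5)
# Hyperbolic upper half-plane: (du^2 + dv^2) / v^2.
k_hyperbolic = gaussian_curvature(lambda u, v: 1.0 / v ** 2, lambda u, v: 1.0 / v ** 2, 0.3, 2.0)
print(k_sphere, k_plane, k_hyperbolic)
```

The three estimates come out close to 1, 0 and −1 respectively, so by the Theorema Egregium no two of these surfaces are locally isometric.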

Pseudo-Riemannian geometry

Pseudo-Riemannian geometry generalizes Riemannian geometry to the case in which the metric tensor need not be positive-definite. A special case of this is a Lorentzian manifold, which is the mathematical basis of Einstein's general relativity theory of gravity.
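A small sketch of what dropping positive-definiteness means in practice: in flat Lorentzian (Minkowski) space, a nonzero vector can have negative, zero, or positive squared length. The example assumes the signature convention (−, +, +, +) and units in which the speed of light is 1; the function names are illustrative.

```python
def minkowski(u, v):
    """Lorentzian inner product on R^4 with signature (-, +, +, +), in units where c = 1."""
    return -u[0] * v[0] + sum(a * b for a, b in zip(u[1:], v[1:]))

def causal_type(v):
    """Classify a nonzero vector by the sign of its squared length."""
    q = minkowski(v, v)
    if q < 0:
        return "timelike"
    if q > 0:
        return "spacelike"
    return "null"

print(causal_type((1, 0, 0, 0)))  # timelike
print(causal_type((0, 1, 0, 0)))  # spacelike
print(causal_type((1, 1, 0, 0)))  # null
```

With the opposite sign convention (+, −, −, −) the roles of the negative and positive cases are exchanged.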

Finsler geometry

Finsler geometry has Finsler manifolds as the main object of study. This is a differential manifold with a Finsler metric, that is, a Banach norm defined on each tangent space. Riemannian manifolds are special cases of the more general Finsler manifolds. A Finsler structure on a manifold $M$ is a function $F : TM \to [0, \infty)$ such that:

- $F(x, my) = m F(x, y)$ for all $(x, y)$ in $TM$ and all $m \geq 0$,

- $F$ is infinitely differentiable in $TM \setminus \{0\}$,

- The vertical Hessian of $F^2$ is positive definite.
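As a minimal illustration of the first condition, the sketch below evaluates a Randers-type function on the plane, $F(x, y) = |y| + b(y)$ with a constant 1-form $b$ of Euclidean norm less than 1, next to the Euclidean norm as the Riemannian special case. The coefficients in `B` are hypothetical, and the code checks only positive homogeneity and positivity, not the Hessian condition.

```python
import math

B = (0.3, 0.4)  # hypothetical constant 1-form; its Euclidean norm is 0.5 < 1

def randers(x, y):
    """Randers-type function F(x, y) = |y| + b(y); in this example it does not depend on x."""
    return math.hypot(y[0], y[1]) + B[0] * y[0] + B[1] * y[1]

def riemannian(x, y):
    """Norm induced by the Euclidean inner product, the Riemannian special case."""
    return math.hypot(y[0], y[1])

x = (0.0, 0.0)
y = (1.0, 2.0)
m = 2.5

# Positive homogeneity: F(x, m*y) equals m * F(x, y) for m >= 0.
scaled = randers(x, (m * y[0], m * y[1]))
print(scaled, m * randers(x, y))

# Unlike a Riemannian norm, a Finsler function need not be symmetric under y -> -y.
forward = randers(x, (1.0, 0.0))
backward = randers(x, (-1.0, 0.0))
print(forward, backward)
```

The asymmetry between `forward` and `backward` is the feature that distinguishes this example from a norm coming from an inner product.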

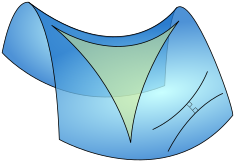

Symplectic geometry

Symplectic geometry is the study of symplectic manifolds. An almost symplectic manifold is a differentiable manifold equipped with a smoothly varying non-degenerate skew-symmetric bilinear form on each tangent space, i.e., a nondegenerate 2-form ω, called the symplectic form. A symplectic manifold is an almost symplectic manifold for which the symplectic form ω is closed: dω = 0.

A diffeomorphism between two symplectic manifolds which preserves the symplectic form is called a symplectomorphism. Non-degenerate skew-symmetric bilinear forms can only exist on even-dimensional vector spaces, so symplectic manifolds necessarily have even dimension. In dimension 2, a symplectic manifold is just a surface endowed with an area form and a symplectomorphism is an area-preserving diffeomorphism. The phase space of a mechanical system is a symplectic manifold and they made an implicit appearance already in the work of Joseph Louis Lagrange on analytical mechanics and later in Carl Gustav Jacobi's and William Rowan Hamilton's formulations of classical mechanics.
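The two-dimensional case can be checked by hand or in a few lines of code. The sketch below uses the standard area form on the plane and tests whether a linear map preserves it; the matrices `shear` and `scaling` and the helper names are illustrative.

```python
def omega(u, v):
    """Standard symplectic (area) form on R^2: omega(u, v) = u_x * v_y - u_y * v_x."""
    return u[0] * v[1] - u[1] * v[0]

def apply(A, v):
    """Apply a 2x2 matrix, given as a tuple of rows, to a vector."""
    return (A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1])

def preserves_omega(A, u, v, tol=1e-12):
    """Check omega(Au, Av) == omega(u, v) for one pair of vectors."""
    return abs(omega(apply(A, u), apply(A, v)) - omega(u, v)) < tol

shear = ((1.0, 2.0), (0.0, 1.0))     # determinant 1: area-preserving
scaling = ((2.0, 0.0), (0.0, 1.0))   # determinant 2: doubles areas

u, v = (1.0, 0.5), (-0.25, 3.0)
print(preserves_omega(shear, u, v))    # True
print(preserves_omega(scaling, u, v))  # False
```

For a linear map of the plane, `omega(Au, Av)` equals the determinant of `A` times `omega(u, v)`, which is why the shear passes and the scaling does not.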

By contrast with Riemannian geometry, where the curvature provides a local invariant of Riemannian manifolds, Darboux's theorem states that all symplectic manifolds are locally isomorphic. The only invariants of a symplectic manifold are global in nature and topological aspects play a prominent role in symplectic geometry. The first result in symplectic topology is probably the Poincaré–Birkhoff theorem, conjectured by Henri Poincaré and then proved by G.D. Birkhoff in 1912. It claims that if an area preserving map of an annulus twists each boundary component in opposite directions, then the map has at least two fixed points.

Contact geometry

Contact geometry deals with certain manifolds of odd dimension. It is close to symplectic geometry and like the latter, it originated in questions of classical mechanics. A contact structure on a (2n + 1)-dimensional manifold M is given by a smooth hyperplane field H in the tangent bundle that is as far as possible from being associated with the level sets of a differentiable function on M (the technical term is "completely nonintegrable tangent hyperplane distribution"). Near each point p, a hyperplane distribution is determined by a nowhere vanishing 1-form $\alpha$, which is unique up to multiplication by a nowhere vanishing function:

$$H_p = \ker \alpha_p \subset T_p M.$$

A local 1-form on M is a contact form if the restriction of its exterior derivative to H is a non-degenerate two-form and thus induces a symplectic structure on $H_p$ at each point. If the distribution H can be defined by a global one-form $\alpha$ then this form is contact if and only if the top-dimensional form

$$\alpha \wedge (d\alpha)^n$$

is a volume form on M, i.e. does not vanish anywhere. A contact analogue of the Darboux theorem holds: all contact structures on an odd-dimensional manifold are locally isomorphic and can be brought to a certain local normal form by a suitable choice of the coordinate system.
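As a worked example of the volume-form criterion, consider the commonly used standard contact form on three-dimensional space with coordinates $(x, y, z)$, so that $n = 1$. The particular sign convention $\alpha = dz - y\,dx$ is an assumption here; other references use $dz + x\,dy$ or similar, and the computation is analogous.

```latex
\begin{aligned}
\alpha &= dz - y\,dx, \\
d\alpha &= -\,dy \wedge dx = dx \wedge dy, \\
\alpha \wedge d\alpha &= (dz - y\,dx) \wedge dx \wedge dy
  = dz \wedge dx \wedge dy
  = dx \wedge dy \wedge dz .
\end{aligned}
```

The term containing $dx \wedge dx$ vanishes, and what remains is the standard volume form, which is nowhere zero. The hyperplane field $H = \ker \alpha$ is therefore a contact structure on $\mathbb{R}^3$.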

Complex and Kähler geometry

Complex differential geometry is the study of complex manifolds. An almost complex manifold is a real manifold $M$, endowed with a tensor of type (1, 1), i.e. a vector bundle endomorphism (called an almost complex structure)

$$J : TM \to TM, \quad \text{such that } J^2 = -1.$$

It follows from this definition that an almost complex manifold is even-dimensional.

An almost complex manifold is called complex if $N_J = 0$, where $N_J$ is a tensor of type (2, 1) related to $J$, called the Nijenhuis tensor (or sometimes the torsion). An almost complex manifold is complex if and only if it admits a holomorphic coordinate atlas. An almost Hermitian structure is given by an almost complex structure J, along with a Riemannian metric g, satisfying the compatibility condition

$$g(JX, JY) = g(X, Y).$$

An almost Hermitian structure defines naturally a differential two-form

$$\omega_{J,g}(X, Y) := g(JX, Y).$$

The following two conditions are equivalent:

- $N_J = 0$ and $d\omega_{J,g} = 0$,

- $\nabla J = 0$,

where $\nabla$ is the Levi-Civita connection of $g$. In this case, $(J, g)$ is called a Kähler structure, and a Kähler manifold is a manifold endowed with a Kähler structure. In particular, a Kähler manifold is both a complex and a symplectic manifold. A large class of Kähler manifolds (the class of Hodge manifolds) is given by all the smooth complex projective varieties.
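The algebraic conditions above can be verified pointwise in the simplest case, the plane regarded as the complex numbers. The sketch below assumes that J is multiplication by i, that g is the Euclidean inner product, and that the associated two-form is defined by ω(u, v) = g(Ju, v); the differential conditions (closedness, vanishing Nijenhuis tensor) hold trivially here because all coefficients are constant, and they are not tested by the code.

```python
def J(v):
    """Multiplication by i on R^2 = C: (x, y) -> (-y, x)."""
    return (-v[1], v[0])

def g(u, v):
    """Euclidean inner product on R^2."""
    return u[0] * v[0] + u[1] * v[1]

def omega(u, v):
    """Two-form built from the pair (J, g): omega(u, v) = g(J u, v)."""
    return g(J(u), v)

u, v = (1, 2), (3, -1)

print(J(J(u)))                  # (-1, -2), i.e. J^2 = -1
print(g(J(u), J(v)), g(u, v))   # equal: J is compatible with g
print(omega(u, v), omega(v, u)) # opposite signs: omega is skew-symmetric
```

With these choices `omega` is the standard area form on the plane, which ties this example to the symplectic structure that every Kähler manifold carries.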

CR geometry

CR geometry is the study of the intrinsic geometry of boundaries of domains in complex manifolds.

Conformal geometry

Conformal geometry is the study of the set of angle-preserving (conformal) transformations on a space.

Differential topology

Differential topology is the study of global geometric invariants without a metric or symplectic form.

Differential topology starts from natural operations such as the Lie derivative of natural vector bundles and the de Rham differential of forms. Besides Lie algebroids, Courant algebroids have also started to play a more important role.

Lie groups

A Lie group is a group in the category of smooth manifolds. Besides its algebraic properties, it also enjoys differential-geometric properties. The most obvious construction is that of a Lie algebra, which is the tangent space at the unit endowed with the Lie bracket between left-invariant vector fields. Besides the structure theory there is also the wide field of representation theory.
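For matrix groups the Lie bracket on the tangent space at the identity is the commutator AB − BA. The sketch below illustrates this for the rotation group SO(3), whose Lie algebra consists of skew-symmetric 3×3 matrices; the basis names `Lx`, `Ly`, `Lz` and the helper functions are illustrative choices.

```python
def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def bracket(A, B):
    """Matrix commutator [A, B] = AB - BA."""
    n = len(A)
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(n)] for i in range(n)]

# Infinitesimal rotations about the x, y and z axes.
Lx = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
Ly = [[0, 0, 1], [0, 0, 0], [-1, 0, 0]]
Lz = [[0, -1, 0], [1, 0, 0], [0, 0, 0]]

print(bracket(Lx, Ly) == Lz)  # True
print(bracket(Ly, Lz) == Lx)  # True
print(bracket(Lz, Lx) == Ly)  # True
```

These relations mirror the cross product of the standard basis vectors in three-dimensional space.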

Geometric analysis

Geometric analysis is a mathematical discipline where tools from differential equations, especially elliptic partial differential equations, are used to establish new results in differential geometry and differential topology.

Gauge theory

Gauge theory is the study of connections on vector bundles and principal bundles, and arises out of problems in mathematical physics and physical gauge theories which underpin the standard model of particle physics. Gauge theory is concerned with the study of differential equations for connections on bundles, and the resulting geometric moduli spaces of solutions to these equations as well as the invariants that may be derived from them. These equations often arise as the Euler–Lagrange equations describing the equations of motion of certain physical systems in quantum field theory, and so their study is of considerable interest in physics.

Bundles and connections

The apparatus of vector bundles, principal bundles, and connections on bundles plays an extraordinarily important role in modern differential geometry. A smooth manifold always carries a natural vector bundle, the tangent bundle. Loosely speaking, this structure by itself is sufficient only for developing analysis on the manifold, while doing geometry requires, in addition, some way to relate the tangent spaces at different points, i.e. a notion of parallel transport. An important example is provided by affine connections. For a surface in $\mathbb{R}^3$, tangent planes at different points can be identified using a natural path-wise parallelism induced by the ambient Euclidean space, which has a well-known standard definition of metric and parallelism. In Riemannian geometry, the Levi-Civita connection serves a similar purpose. More generally, differential geometers consider spaces with a vector bundle and an arbitrary affine connection which is not defined in terms of a metric. In physics, the manifold may be spacetime and the bundles and connections are related to various physical fields.
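Parallel transport can be made tangible on the unit sphere. The sketch below transports a tangent vector once around a circle of latitude using the Levi-Civita connection of the round metric, written in the orthonormal frame of the colatitude and longitude directions. The reduction of the transport equation to the two-component system in the docstring, the function name, and the choice of a Runge–Kutta integrator are assumptions made for this illustration.

```python
import math

def transport_around_latitude(theta0, steps=2000):
    """Parallel-transport a tangent vector once around a circle of latitude.

    The vector is stored in the orthonormal frame (e_theta, e_phi) on the unit
    sphere. For the Levi-Civita connection its components (a, b) obey
        da/dphi =  cos(theta0) * b,   db/dphi = -cos(theta0) * a,
    which is integrated with the classical fourth-order Runge-Kutta method.
    """
    c = math.cos(theta0)
    f = lambda a, b: (c * b, -c * a)
    a, b = 1.0, 0.0                      # start with the unit vector e_theta
    h = 2 * math.pi / steps
    for _ in range(steps):
        k1 = f(a, b)
        k2 = f(a + 0.5 * h * k1[0], b + 0.5 * h * k1[1])
        k3 = f(a + 0.5 * h * k2[0], b + 0.5 * h * k2[1])
        k4 = f(a + h * k3[0], b + h * k3[1])
        a += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        b += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return a, b

equator = transport_around_latitude(math.pi / 2)   # the equator is a geodesic
sixty = transport_around_latitude(math.pi / 3)     # colatitude of 60 degrees
print(equator, sixty)
```

Along the equator the vector returns essentially unchanged, while at colatitude 60 degrees it returns rotated by half a turn. The rotation angle is 2π·cos(θ₀) in this frame, an instance of holonomy caused by curvature.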

Intrinsic versus extrinsic

From the beginning and through the middle of the 19th century, differential geometry was studied from the extrinsic point of view: curves and surfaces were considered as lying in a Euclidean space of higher dimension (for example a surface in an ambient space of three dimensions). The simplest results are those in the differential geometry of curves and differential geometry of surfaces. Starting with the work of Riemann, the intrinsic point of view was developed, in which one cannot speak of moving "outside" the geometric object because it is considered to be given in a free-standing way. The fundamental result here is Gauss's Theorema Egregium, to the effect that Gaussian curvature is an intrinsic invariant.

The intrinsic point of view is more flexible. For example, it is useful in relativity where space-time cannot naturally be taken as extrinsic. However, there is a price to pay in technical complexity: the intrinsic definitions of curvature and connections become much less visually intuitive.

These two points of view can be reconciled, i.e. the extrinsic geometry can be considered as a structure additional to the intrinsic one. (See the Nash embedding theorem.) In the formalism of geometric calculus both extrinsic and intrinsic geometry of a manifold can be characterized by a single bivector-valued one-form called the shape operator.

Applications

Below are some examples of how differential geometry is applied to other fields of science and mathematics.

- In physics, differential geometry has many applications, including:

  - Differential geometry is the language in which Albert Einstein's general theory of relativity is expressed. According to the theory, the universe is a smooth manifold equipped with a pseudo-Riemannian metric, which describes the curvature of spacetime. Understanding this curvature is essential for the positioning of satellites into orbit around the Earth. Differential geometry is also indispensable in the study of gravitational lensing and black holes.

  - Differential forms are used in the study of electromagnetism.

  - Differential geometry has applications to both Lagrangian mechanics and Hamiltonian mechanics. Symplectic manifolds in particular can be used to study Hamiltonian systems.

  - Riemannian geometry and contact geometry have been used to construct the formalism of geometrothermodynamics which has found applications in classical equilibrium thermodynamics.

- In chemistry and biophysics, differential geometry is used when modelling cell membrane structure under varying pressure.

- In economics, differential geometry has applications to the field of econometrics.

- Geometric modeling (including computer graphics) and computer-aided geometric design draw on ideas from differential geometry.

- In engineering, differential geometry can be applied to solve problems in digital signal processing.

- In control theory, differential geometry can be used to analyze nonlinear controllers, particularly in geometric control.

- In probability, statistics, and information theory, one can interpret various structures as Riemannian manifolds, which yields the field of information geometry, particularly via the Fisher information metric.

- In structural geology, differential geometry is used to analyze and describe geologic structures.

- In computer vision, differential geometry is used to analyze shapes.

- In image processing, differential geometry is used to process and analyse data on non-flat surfaces.

- Grigori Perelman's proof of the Poincaré conjecture using the techniques of Ricci flows demonstrated the power of the differential-geometric approach to questions in topology and it highlighted the important role played by its analytic methods.

- In wireless communications, Grassmannian manifolds are used for beamforming techniques in multiple antenna systems.

- In geodesy, differential geometry is used for calculating distances and angles on the mean sea level surface of the Earth, modelled by an ellipsoid of revolution.
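The information-geometry item in the list above can be illustrated with the simplest statistical model. For the Bernoulli family with success probability p, the Fisher information is 1/(p(1 − p)), and treating it as a one-dimensional Riemannian metric gives a distance between two parameter values. The sketch below compares a numerical integral with the closed form obtained from the substitution p = sin²(t); the function names and step count are illustrative.

```python
import math

def fisher_information(p):
    """Fisher information of the Bernoulli family with success probability p."""
    return 1.0 / (p * (1.0 - p))

def fisher_distance_numeric(p, q, steps=10000):
    """Length of the segment from p to q in the metric ds^2 = I(p) dp^2 (midpoint rule)."""
    h = (q - p) / steps
    return sum(math.sqrt(fisher_information(p + (i + 0.5) * h)) * abs(h) for i in range(steps))

def fisher_distance_closed_form(p, q):
    """Closed form 2 * |asin(sqrt(q)) - asin(sqrt(p))| from the substitution p = sin^2(t)."""
    return 2.0 * abs(math.asin(math.sqrt(q)) - math.asin(math.sqrt(p)))

numeric = fisher_distance_numeric(0.2, 0.7)
exact = fisher_distance_closed_form(0.2, 0.7)
print(numeric, exact)
```

The two values agree to within the accuracy of the quadrature. The same parameter step costs more distance near p = 0 or p = 1 than near p = 1/2, reflecting that the Fisher information is larger near the endpoints.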