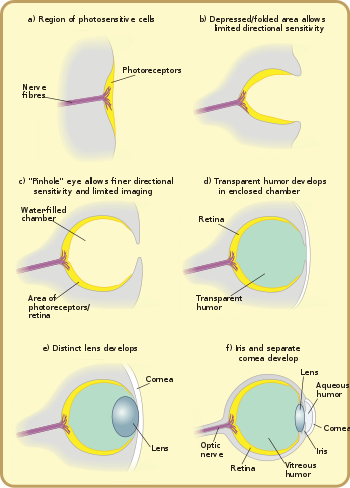

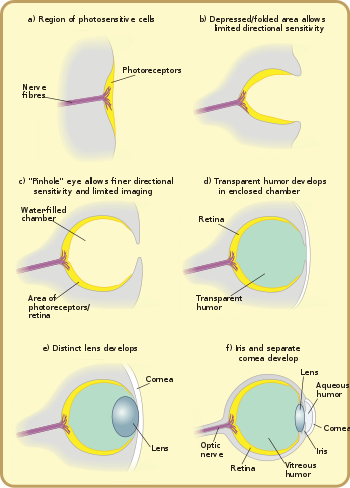

Major stages in the evolution of the eye.

The evolution of the eye has been a subject of significant study, as a distinctive example of a homologous organ present in a wide variety of taxa. Complex, image-forming eyes evolved independently some 50 to 100 times.[1]

Complex eyes appear to have first evolved within a few million years, in the rapid burst of evolution known as the Cambrian explosion. There is no evidence of eyes before the Cambrian, but a wide range of diversity is evident in the Middle Cambrian Burgess Shale and the slightly older Emu Bay Shale.[2] Eyes show a wide range of adaptations to meet the requirements of the organisms which bear them. Eyes vary in their visual acuity, the range of wavelengths they can detect, their sensitivity in low light levels, their ability to detect motion or resolve objects, and whether they can discriminate colours.

History of research

The human eye, demonstrating the iris

In 1802, philosopher William Paley called the eye a miracle of "design".

Charles Darwin himself wrote in his Origin of Species that the evolution of the eye by natural selection at first glance seemed "absurd in the highest possible degree". However, he went on to explain that, despite the difficulty in imagining it, this was perfectly feasible:

...if numerous gradations from a simple and imperfect eye to one complex and perfect can be shown to exist, each grade being useful to its possessor, as is certainly the case; if further, the eye ever varies and the variations be inherited, as is likewise certainly the case and if such variations should be useful to any animal under changing conditions of life, then the difficulty of believing that a perfect and complex eye could be formed by natural selection, though insuperable by our imagination, should not be considered as subversive of the theory.[3]

He suggested a gradation from "an optic nerve merely coated with pigment, and without any other mechanism" to "a moderately high stage of perfection", giving examples of extant intermediate grades of evolution.[3] Darwin's suggestions were soon shown to be correct, and current research is investigating the genetic mechanisms responsible for eye development and evolution.[4]

Modern researchers have continued to work on the topic. D. E. Nilsson has independently proposed four general stages in the evolution of a vertebrate eye from a patch of photoreceptors.[5] Nilsson and S. Pelger published a classic paper estimating how many generations are needed to evolve a complex eye in vertebrates.[6] Another researcher, G. C. Young, has used fossil evidence to draw evolutionary conclusions from the structure of eye orbits and of the openings in fossilized skulls through which blood vessels and nerves pass.[7] All this adds to the growing body of evidence that supports Darwin's theory.

Rate of evolution

The first fossils of eyes that have been found to date are from the lower Cambrian period (about 540 million years ago).[8] This period saw a burst of apparently rapid evolution, dubbed the "Cambrian explosion". One of the many hypotheses for "causes" of this diversification, the "Light Switch" theory of Andrew Parker, holds that the evolution of eyes initiated an arms race that led to a rapid spate of evolution.[9] Earlier than this, organisms may have had use for light sensitivity, but not for fast locomotion and navigation by vision.

It is difficult to estimate the rate of eye evolution because the fossil record, particularly of the Early Cambrian, is poor. The time needed for a circular patch of photoreceptor cells to evolve into a fully functional vertebrate eye has been estimated from rates of mutation, relative advantage to the organism, and natural selection. The estimate rests on pessimistic calculations that consistently overestimate the time required for each stage. It also assumes a generation time of one year, which is common in small animals. On that basis, it has been proposed that the vertebrate eye would take less than 364,000 years to evolve from a patch of photoreceptors.[10][note 1]
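The arithmetic behind such an estimate is compounding. The Python sketch below uses the inputs commonly quoted from Nilsson and Pelger's calculation, treated here as assumptions rather than derived. The transition from a flat patch to a camera eye is counted as 1,829 successive 1% changes, and selection is assumed to produce only a 0.005% change per generation.

```python
import math

# Assumed inputs (as commonly quoted for the Nilsson-Pelger calculation):
STEPS_OF_ONE_PERCENT = 1829      # successive 1% morphological changes
CHANGE_PER_STEP = 0.01           # each step alters the structure by 1%
CHANGE_PER_GENERATION = 0.00005  # deliberately pessimistic 0.005% per generation


def total_change_factor(steps: int, change: float) -> float:
    """Overall morphological change after `steps` compounded changes."""
    return (1.0 + change) ** steps


def generations_needed(total_factor: float, per_generation: float) -> float:
    """Generations of compounded change needed to reach `total_factor`."""
    return math.log(total_factor) / math.log(1.0 + per_generation)


factor = total_change_factor(STEPS_OF_ONE_PERCENT, CHANGE_PER_STEP)
generations = generations_needed(factor, CHANGE_PER_GENERATION)

# With one generation per year, generations and years coincide.
print(f"total change factor: {factor:,.0f}")
print(f"generations (= years at one generation per year): {generations:,.0f}")
```

The number of generations is a ratio of two logarithms. Halving the assumed per-generation change therefore roughly doubles the answer, and the one-year generation time converts generations directly into years.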

One origin or many?

Whether one considers the eye to have evolved once or multiple times depends somewhat on the definition of an eye. Much of the genetic machinery employed in eye development is common to all eyed organisms, which may suggest that their ancestor utilized some form of light-sensitive machinery – even if it lacked a dedicated optical organ. However, even photoreceptor cells may have evolved more than once from molecularly similar chemoreceptors, and photosensitive cells probably existed long before the Cambrian explosion.[11] Higher-level similarities – such as the use of the protein crystallin in the independently derived cephalopod and vertebrate lenses[12] – reflect the co-option of a protein from a more fundamental role to a new function within the eye.[13]

Shared traits common to all light-sensitive organs include the family of photoreceptive proteins called opsins. All seven sub-families of opsin were already present in the last common ancestor of animals. In addition, the genetic toolkit for positioning eyes is common to all animals: the PAX6 gene controls where the eye develops in organisms ranging from octopuses[14] to mice to fruit flies.[15][16][17] These high-level genes are, by implication, much older than many of the structures that they are today seen to control; they must originally have served a different purpose, before being co-opted for a new role in eye development.[13]

Sensory organs probably evolved before the brain did: there is no need for an information-processing organ (brain) before there is information to process.[18]

Stages of eye evolution

The stigma (2) of the euglena hides a light-sensitive spot.

The earliest predecessors of the eye were photoreceptor proteins that sense light. They are found even in unicellular organisms, in structures called "eyespots". Eyespots can only sense ambient brightness. They can distinguish light from dark, which is sufficient for photoperiodism and daily synchronization of circadian rhythms. They are insufficient for vision, however, as they cannot distinguish shapes or determine the direction light is coming from. Eyespots are found in nearly all major animal groups, and are common among unicellular organisms, including euglena. The euglena's eyespot, called a stigma, is located at its anterior end. It is a small splotch of red pigment which shades a collection of light-sensitive crystals. Together with the leading flagellum, the eyespot allows the organism to move in response to light, often toward the light to assist in photosynthesis,[19] and to predict day and night, the primary function of circadian rhythms. In more complex organisms, visual pigments are also located in the brain, where they are thought to have a role in synchronising spawning with lunar cycles. By detecting the subtle changes in night-time illumination, organisms could synchronise the release of sperm and eggs to maximise the probability of fertilisation.[citation needed]

Vision itself relies on a basic biochemistry which is common to all eyes. However, how this biochemical toolkit is used to interpret an organism's environment varies widely: eyes have a wide range of structures and forms, all of which have evolved quite late relative to the underlying proteins and molecules.[19]

At a cellular level, there appear to be two main "designs" of eyes, one possessed by the protostomes (molluscs, annelid worms and arthropods), the other by the deuterostomes (chordates and echinoderms).[19]

The functional unit of the eye is the receptor cell, which contains the opsin proteins and responds to light by initiating a nerve impulse. The light-sensitive opsins are borne on a hairy layer, to maximise the surface area. The nature of these "hairs" differs, with two basic forms underlying photoreceptor structure: microvilli and cilia.[20] In the protostomes, they are microvilli: extensions or protrusions of the cell membrane. In the deuterostomes, by contrast, they are derived from cilia, which are separate structures.[19] The actual derivation may be more complicated, as some microvilli contain traces of cilia, but other observations appear to support a fundamental difference between protostomes and deuterostomes.[19] These observations centre on how the cells respond to light. Some use sodium to generate the electrical signal that will form a nerve impulse, and others use potassium. Further, protostomes on the whole construct a signal by allowing more sodium to pass through their cell membranes, whereas deuterostomes allow less through.[19]

This suggests that when the two lineages diverged in the Precambrian, they had only very primitive light receptors, which developed into more complex eyes independently.

Early eyes

The basic light-processing unit of eyes is the photoreceptor cell, a specialized cell containing two types of molecules in a membrane. One is the opsin, a light-sensitive protein. It surrounds the other, the chromophore, a pigment that absorbs light; variations in the surrounding opsin tune which colors it responds to. Groups of such cells are termed "eyespots", and have evolved independently somewhere between 40 and 65 times. These eyespots permit animals to gain only a very basic sense of the direction and intensity of light, but not enough to discriminate an object from its surroundings.[19]

Developing an optical system that can discriminate the direction of light to within a few degrees is apparently much more difficult, and only six of the thirty-some phyla[note 2] possess such a system. However, these phyla account for 96% of living species.[19]

The planarian has "cup" eyespots that can slightly distinguish light direction.

These complex optical systems started out as the multicellular eyepatch gradually depressed into a cup. The cup first granted the ability to discriminate brightness by direction, and then to discriminate finer and finer directions as the pit deepened. Flat eyepatches were ineffective at determining the direction of light, because a beam of light would activate exactly the same patch of photosensitive cells regardless of its direction. The "cup" shape of pit eyes allowed limited directional differentiation by changing which cells the light would strike depending on its angle. Pit eyes, which had arisen by the Cambrian period, were seen in ancient snails,[clarification needed] and are found in some snails and other invertebrates living today, such as planaria. Planaria can slightly differentiate the direction and intensity of light because of their cup-shaped, heavily pigmented retina cells, which shield the light-sensitive cells from exposure in all directions except for the single opening for the light. However, this proto-eye is still much more useful for detecting the presence or absence of light than its direction. This gradually changes as the eye's pit deepens and the number of photoreceptive cells grows, allowing for increasingly precise visual information.[21]
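The gain from deepening a pit can be sketched with straight-line geometry. The Python example below is a simplification under several assumptions: a single receptor at the centre of the pit floor, a rim that casts sharp shadows, no diffraction, and a hypothetical opening 1 unit wide. The helper name `acceptance_angle_deg` is illustrative.

```python
import math


def acceptance_angle_deg(opening_width: float, depth: float) -> float:
    """Full angle (degrees) over which light can reach a receptor at the
    centre of a pit floor, assuming the rim casts sharp shadows."""
    if depth <= 0:
        return 180.0  # a flat patch is exposed to the whole hemisphere
    half_angle = math.atan((opening_width / 2) / depth)
    return math.degrees(2 * half_angle)


# Hypothetical pit with an opening 1 unit wide, progressively deepened.
depths = [0.0, 0.25, 0.5, 1.0, 2.0, 4.0]
angles = [acceptance_angle_deg(1.0, d) for d in depths]
for d, a in zip(depths, angles):
    print(f"depth {d:4.2f}: receptor sees ~{a:5.1f} degrees")
```

As the pit deepens, the cone of directions each receptor accepts narrows, which is the "finer and finer directions" described in the text. The narrower cone also means each receptor collects less light.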

When a photon is absorbed by the chromophore, a chemical reaction causes the photon's energy to be transduced into electrical energy and relayed, in higher animals, to the nervous system. These photoreceptor cells form part of the retina, a thin layer of cells that relays visual information,[22] including the light and day-length information needed by the circadian rhythm system, to the brain. However, some jellyfish, such as Cladonema, have elaborate eyes but no brain. Their eyes transmit a message directly to the muscles without the intermediate processing provided by a brain.[18]

During the Cambrian explosion, the development of the eye accelerated rapidly, with radical improvements in image-processing and detection of light direction.[23]

After the photosensitive cell region invaginated, there came a point when reducing the width of the light opening became more efficient at increasing visual resolution than continued deepening of the cup.[10] By reducing the size of the opening, organisms achieved true imaging, allowing for fine directional sensing and even some shape-sensing. Eyes of this nature are currently found in the nautilus. Lacking a cornea or lens, they provide poor resolution and dim imaging, but are still, for the purpose of vision, a major improvement over the early eyepatches.[24]
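Narrowing the opening trades light for resolution. This Python sketch treats the eye as an ideal pinhole of fixed, hypothetical depth and estimates its angular blur from the aperture width. It also assumes a circular opening, so the light admitted scales with the square of the width. All numbers are placeholders.

```python
import math


def blur_angle_deg(aperture: float, depth: float) -> float:
    """Approximate angular blur of an ideal pinhole eye (degrees): the range
    of directions that reach a single point on the retina."""
    return math.degrees(2 * math.atan(aperture / (2 * depth)))


def relative_light(aperture: float, reference_aperture: float) -> float:
    """Light admitted relative to a reference circular opening (scales with area)."""
    return (aperture / reference_aperture) ** 2


EYE_DEPTH = 10.0          # hypothetical, arbitrary units
REFERENCE_APERTURE = 4.0  # hypothetical starting opening

for aperture in [4.0, 2.0, 1.0, 0.5]:
    blur = blur_angle_deg(aperture, EYE_DEPTH)
    light = relative_light(aperture, REFERENCE_APERTURE)
    print(f"aperture {aperture:3.1f}: blur ~{blur:5.2f} deg, light x{light:.4f}")
```

Halving the aperture roughly halves the blur but cuts the light to a quarter. This is consistent with the dim images described for the nautilus eye.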

Overgrowths of transparent cells prevented contamination and parasitic infestation. The chamber contents, now segregated, could slowly specialize into a transparent humour, for optimizations such as colour filtering, higher refractive index, blocking of ultraviolet radiation, or the ability to operate in and out of water. The layer may, in certain classes,[which?] be related to the moulting of the organism's shell or skin. An example of this can be observed in onychophorans, where the cuticle of the body surface continues into the cornea. The cornea is composed of either one or two cuticular layers depending on how recently the animal has moulted.[25] Along with the lens and the two humours, the cornea is responsible for converging light and focusing it onto the retina. In the human eye, the cornea protects the eyeball while also accounting for approximately two-thirds of the eye's total refractive power.[26]

It is likely that eyes specialize in detecting a specific, narrow range of wavelengths on the electromagnetic spectrum, the visible spectrum, largely because the earliest species to develop photosensitivity were aquatic. Water strongly absorbs most wavelengths outside a narrow window, and within the visible range blue and green light penetrate farthest. This same light-filtering property of water also influenced the photosensitivity of plants.[27][28][29]
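This kind of filtering follows the Beer-Lambert law, under which each wavelength band decays exponentially with depth at its own rate. The coefficients in this Python sketch are hypothetical round numbers, not measured values. They were chosen only to reproduce the qualitative ordering in clear water, where red is absorbed much faster than green or blue.

```python
import math

# Hypothetical attenuation coefficients (per metre), chosen only to mimic the
# qualitative ordering in clear water: red is absorbed much faster than blue/green.
ATTENUATION_PER_M = {"blue": 0.02, "green": 0.06, "red": 0.35}


def fraction_remaining(k_per_m: float, depth_m: float) -> float:
    """Beer-Lambert law: fraction of surface light remaining at depth_m."""
    return math.exp(-k_per_m * depth_m)


for depth in [1, 10, 50]:
    row = ", ".join(
        f"{band} {fraction_remaining(k, depth):.3f}"
        for band, k in ATTENUATION_PER_M.items()
    )
    print(f"{depth:>3} m: {row}")
```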

Lens formation and diversification

Light from a distant object and a near object being focused by changing the curvature of the lens

In a lensless eye, light from a distant point enters and hits the back of the eye as a spot about the same size as the opening it came through. Adding a lens directs this incoming light onto a smaller surface area without losing any of it, so the stimulus on each illuminated receptor is stronger.[6] In an early lobopod with lens-containing simple eyes, the lens focused the image behind the retina. No part of the image could therefore be brought into focus, but the concentrated light allowed the organism to see in deeper (and therefore darker) waters.[25] A subsequent increase of the lens's refractive index probably resulted in an in-focus image being formed.[25]
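The effect of a higher refractive index can be sketched with the lensmaker's equation for a thin lens immersed in fluid. In this Python example, the lens radius, the lens-to-retina distance and the surrounding index of 1.33 are all hypothetical. A real eye lens is neither thin nor uniform, so only the trend is meaningful: as the lens index rises, the focal point for a distant object moves forward from behind the retina onto it.

```python
def focal_length_mm(n_lens: float, n_medium: float, radius_mm: float) -> float:
    """Lensmaker's equation for a thin, symmetric biconvex lens
    (R1 = +R, R2 = -R) immersed in a medium of index n_medium."""
    relative_index = n_lens / n_medium
    power_per_mm = (relative_index - 1.0) * (2.0 / radius_mm)
    return 1.0 / power_per_mm


N_WATER = 1.33            # surrounding fluid, assumed water-like
LENS_RADIUS_MM = 0.5      # hypothetical surface radius of curvature
RETINA_DISTANCE_MM = 2.0  # hypothetical lens-to-retina distance

for n_lens in [1.40, 1.45, 1.50, 1.55]:
    f = focal_length_mm(n_lens, N_WATER, LENS_RADIUS_MM)
    if abs(f - RETINA_DISTANCE_MM) < 0.1:
        where = "roughly on the retina"
    elif f > RETINA_DISTANCE_MM:
        where = "behind the retina"
    else:
        where = "in front of the retina"
    # A distant object images at the focal length of the lens.
    print(f"n = {n_lens:.2f}: f = {f:.2f} mm, image falls {where}")
```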

The development of the lens in camera-type eyes probably followed a different trajectory. The transparent cells over a pinhole eye's aperture split into two layers, with liquid in between.[citation needed] The liquid originally served as a circulatory fluid for oxygen, nutrients, wastes, and immune functions, allowing greater total thickness and higher mechanical protection. In addition, multiple interfaces between solids and liquids increase optical power, allowing wider viewing angles and greater imaging resolution. Again, the division of layers may have originated with the shedding of skin; fluid may fill the gap naturally, depending on layer depth.[citation needed]

This optical layout has not been found in fossils, nor is it expected to be.

Fossilization rarely preserves soft tissues. Even if it did, the new humour would almost certainly close as the remains desiccated, or as sediment overburden forced the layers together, making the fossilized eye resemble the previous layout.

Vertebrate lenses are composed of adapted epithelial cells which have high concentrations of the protein crystallin. These crystallins belong to two major families, the α-crystallins and the βγ-crystallins. Both were categories of proteins originally used for other functions in organisms, but were eventually adapted for vision in animal eyes.[30] In the embryo, the lens is living tissue, but its cellular machinery is not transparent, so it must be removed before the organism can see. As a result, the mature lens is composed of dead cells packed with crystallins. These crystallins are special because they have the unique characteristics required for transparency and function in the lens. These include tight packing, resistance to crystallization, and extreme longevity, as they must survive for the entirety of the organism's life.[30] The refractive index gradient which makes the lens useful is caused by the radial shift in crystallin concentration in different parts of the lens, rather than by the specific type of protein. It is not the presence of crystallin, but its relative distribution, that renders the lens useful.[31]
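The gradient comes from how much crystallin is present rather than which crystallin it is, so a linear concentration-to-index relation is enough to sketch the idea. The profile shape and the concentrations below are hypothetical. The refractive increment of about 0.19 mL/g is an approximate value typical of proteins, used here as an assumption.

```python
N_WATER = 1.333
DN_DC_ML_PER_G = 0.19  # approximate refractive increment typical of proteins (assumed)


def crystallin_concentration(r: float, c_centre: float, c_edge: float) -> float:
    """Hypothetical parabolic profile: concentration (g/mL) at normalised radius r in [0, 1]."""
    return c_centre - (c_centre - c_edge) * r ** 2


def refractive_index(concentration_g_per_ml: float) -> float:
    """Index rises linearly with dissolved protein concentration."""
    return N_WATER + DN_DC_ML_PER_G * concentration_g_per_ml


for r in [0.0, 0.25, 0.5, 0.75, 1.0]:
    c = crystallin_concentration(r, c_centre=0.45, c_edge=0.25)
    print(f"r = {r:.2f}: crystallin {c:.3f} g/mL -> n = {refractive_index(c):.4f}")
```

The same protein at different concentrations yields a higher index at the centre than at the edge. A uniform concentration would give no gradient at all.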

It is biologically difficult to maintain a transparent layer of cells. Deposition of transparent, nonliving material eased the need for nutrient supply and waste removal. Trilobites used calcite, a mineral rarely used for lenses by other organisms (the calcite lenses of some brittle stars are a notable exception). In other compound eyes[verification needed] and camera eyes, the material is crystallin. A gap between tissue layers naturally forms a biconvex shape, which is optically and mechanically ideal for substances of normal refractive index. A biconvex lens confers not only optical resolution but also a wider aperture and better low-light ability. This is because resolution is now decoupled from hole size, which slowly increases again, free from the circulatory constraints.

Independently, a transparent layer and a nontransparent layer may split forward from the lens: a separate cornea and iris. (These may happen before or after crystal deposition, or not at all.) Separation of the forward layer again forms a humour, the aqueous humour. This increases refractive power and again eases circulatory problems. Formation of a nontransparent ring allows more blood vessels, more circulation, and larger eye sizes. This flap around the perimeter of the lens also masks optical imperfections, which are more common at lens edges. The need to mask lens imperfections gradually increases with lens curvature and power, overall lens and eye size, and the resolution and aperture needs of the organism, driven by hunting or survival requirements. This type is now functionally identical to the eye of most vertebrates, including humans. Indeed, "the basic pattern of all vertebrate eyes is similar."[32]

Other developments

Color vision

Five classes of visual photopigments are found in vertebrates. All but one of these developed prior to the divergence of cyclostomes and fish.[33] Various adaptations within these five classes give rise to eyes suited to the spectrum encountered. As light travels through water, longer wavelengths, such as reds and yellows, are absorbed more quickly than the shorter wavelengths of the greens and blues. This creates a gradient in the spectral composition of light as water depth increases. The visual receptors in fish are more sensitive to the range of light present at their habitat depth. However, this phenomenon does not occur in land environments, so there is little variation in pigment sensitivities among terrestrial vertebrates. The homogeneous nature of these pigment sensitivities directly contributes to the significant presence of communication colors.[33] This presents distinct selective advantages, such as better recognition of predators, food, and mates. Indeed, it is thought that simple sensory-neural mechanisms may selectively control general behaviour patterns, such as escape, foraging, and hiding. Many examples of wavelength-specific behaviour patterns have been identified. They fall into two primary groups: below 450 nm, associated with natural light sources, and above 450 nm, associated with reflected light sources.[34]

As opsin molecules were subtly fine-tuned to detect different wavelengths of light, color vision eventually developed when photoreceptor cells developed multiple pigments.[22] As a chemical adaptation rather than a mechanical one, this may have occurred at any of the early stages of the eye's evolution. The capability may also have disappeared and reappeared as organisms became predator or prey. Similarly, night and day vision emerged when receptors differentiated into rods and cones, respectively.
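Why a second pigment is needed can be illustrated with the principle of univariance. A receptor with a single pigment reports only how many photons it absorbs. A dim light at its peak wavelength and a brighter light off-peak can therefore produce identical responses. In this Python sketch, the bell-shaped sensitivity curves, their peaks at 450 and 550 nm and their width are hypothetical.

```python
import math


def sensitivity(wavelength_nm: float, peak_nm: float, width_nm: float = 40.0) -> float:
    """Hypothetical bell-shaped absorption curve of a visual pigment (peak value 1)."""
    return math.exp(-(((wavelength_nm - peak_nm) / width_nm) ** 2))


def response(intensity: float, wavelength_nm: float, peak_nm: float) -> float:
    """A receptor reports only photons absorbed: intensity x sensitivity."""
    return intensity * sensitivity(wavelength_nm, peak_nm)


PEAK_A, PEAK_B = 450.0, 550.0  # two hypothetical pigments

# Light 1: unit intensity at pigment A's peak.
# Light 2: off-peak, made brighter so pigment A responds exactly as to light 1.
light1 = (1.0, 450.0)
light2 = (1.0 / sensitivity(500.0, PEAK_A), 500.0)

for name, (intensity, wl) in [("light 1", light1), ("light 2", light2)]:
    a = response(intensity, wl, PEAK_A)
    b = response(intensity, wl, PEAK_B)
    print(f"{name} ({wl:.0f} nm): pigment A {a:.3f}, pigment B {b:.3f}, B/A {b / a:.3f}")
```

Both lights give pigment A the same output, so pigment A alone cannot tell them apart. The ratio between the two pigments' outputs differs sharply, however, and comparisons of this kind are what color vision relies on.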

Polarization vision

As discussed earlier, the properties of light under water differ from those in air. One example of this is the polarization of light. Sunlight is initially unpolarized: its vibrations are oriented at random. Polarization is the ordering of such light so that it vibrates predominantly in one linear orientation. This occurs when light passes through slit-like filters, as well as when it is scattered or passes into a new medium. Sensitivity to polarized light is especially useful for organisms whose habitats are more than a few meters under water. In this environment, color vision is less dependable, and therefore a weaker selective factor. Most photoreceptors have the ability to distinguish partially polarized light. In terrestrial vertebrates, however, the photoreceptor membranes are oriented perpendicular to the incoming light, making them insensitive to its polarization.[35] Some fish can nonetheless discern polarized light, demonstrating that they possess some linear photoreceptors. Like color vision, sensitivity to polarization can aid an organism's ability to differentiate surrounding objects and individuals. Because of the marginal reflective interference of polarized light, it is often used for orientation and navigation, as well as for distinguishing concealed objects, such as disguised prey.[35]
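A polarization-sensitive receptor can be idealised as a linear analyzer. The Python sketch below assumes an ideal analyzer and uses the standard result for partially polarized light. If the light has degree of polarization p and its vibration direction makes angle θ with the receptor's preferred axis, the analyzer passes a fraction (1 + p·cos 2θ)/2 of its intensity. For fully polarized light this reduces to Malus's law, cos²θ. The 60% polarization is a hypothetical value. The "insensitive" receptor stands in for one whose membranes have no preferred axis relative to the light.

```python
import math


def analyzer_response(intensity: float, degree_of_polarization: float,
                      angle_deg: float) -> float:
    """Idealised polarization-sensitive receptor acting as a linear analyzer.

    Passes I/2 * (1 + p * cos(2 * theta)); with p = 1 this is Malus's law,
    I * cos^2(theta).
    """
    theta = math.radians(angle_deg)
    return 0.5 * intensity * (1.0 + degree_of_polarization * math.cos(2.0 * theta))


def insensitive_response(intensity: float) -> float:
    """A receptor with no preferred axis averages over orientations: always I/2."""
    return 0.5 * intensity


for angle in [0, 30, 45, 60, 90]:
    sensitive = analyzer_response(1.0, 0.6, angle)  # hypothetical 60% polarized light
    print(f"e-vector at {angle:2d} deg: analyzer {sensitive:.3f}, "
          f"insensitive {insensitive_response(1.0):.3f}")
```

Only the analyzer-like receptor's output changes as the light's vibration direction rotates, which is what makes polarization usable for orientation.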

Focusing mechanism

Using the iris sphincter muscle and the ciliary body, some species move the lens back and forth, while others stretch the lens flatter. Another mechanism regulates focusing chemically and independently of these two, by controlling growth of the eye and maintaining focal length. In addition, pupil shape can be used to predict the focal system in use. A slit pupil can indicate the common multifocal system, while a circular pupil usually indicates a monofocal system. A circular pupil constricts under bright light, increasing the f-number and hence the depth of focus. It dilates in the dark to admit more light, at the cost of a shallower depth of focus.[36] Note that a focusing mechanism is not a requirement. As photographers know, focal errors increase as aperture increases. Thus, countless organisms with small eyes are active in direct sunlight and survive with no focus mechanism at all. As a species grows larger, or transitions to dimmer environments, a means of focusing need only appear gradually.
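The photographers' rule follows from similar triangles. Rays from an aperture of diameter A that converge to a point at distance v reach a retina at distance d as a blur disc of diameter A·|d - v|/v. For a given focusing error, the blur therefore grows in proportion to the aperture. The Python sketch below uses hypothetical distances and ignores diffraction, which dominates in very small eyes.

```python
def blur_diameter(aperture: float, image_distance: float, retina_distance: float) -> float:
    """Geometric blur-disc diameter on the retina for a defocused point.

    Rays filling an aperture of diameter `aperture` converge to a point at
    `image_distance`; by similar triangles, at `retina_distance` they cover a
    disc of diameter aperture * |retina_distance - image_distance| / image_distance.
    """
    return aperture * abs(retina_distance - image_distance) / image_distance


RETINA_DISTANCE = 1.0  # hypothetical, arbitrary units
IMAGE_DISTANCE = 1.05  # image forms 5% behind the retina (fixed focusing error)

for aperture in [0.1, 0.2, 0.4, 0.8]:
    blur = blur_diameter(aperture, IMAGE_DISTANCE, RETINA_DISTANCE)
    print(f"aperture {aperture:.1f}: blur disc {blur:.4f}")
```

Each doubling of the aperture doubles the blur for the same focusing error. A small eye with a small pupil can therefore tolerate an error that would noticeably blur a large one.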

Location

Prey generally have eyes on the sides of their head, giving them a larger field of view with which to detect and avoid predators. Predators, however, have eyes in front of their head in order to have better depth perception.[37][38] Flatfish are predators which lie on their side on the bottom, and have eyes placed asymmetrically on the same side of the head. A transitional fossil between the common symmetric position and this asymmetric arrangement is Amphistium.
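The trade-off between a wide field of view and binocular overlap can be sketched with simple angular bookkeeping. In this Python example, each eye is assumed to see a fixed monocular field of at most 180 degrees. That field is centred on an optical axis turned a given angle away from straight ahead. The two configurations are hypothetical, loosely "predator-like" and "prey-like".

```python
def visual_fields(monocular_deg: float, eye_axis_deg: float) -> tuple:
    """Return (total field, frontal binocular overlap) in degrees.

    Each eye covers `monocular_deg` (assumed <= 180) centred on an axis turned
    `eye_axis_deg` to the left or right of straight ahead.
    """
    # Overlap in front: the inner edges of the two fields cross the midline.
    front_overlap = max(0.0, monocular_deg - 2 * eye_axis_deg)
    # Overlap behind the head: the outer edges meet at the back.
    back_overlap = max(0.0, monocular_deg + 2 * eye_axis_deg - 360.0)
    total = 2 * monocular_deg - front_overlap - back_overlap
    return total, front_overlap


# Hypothetical configurations: (label, monocular field, eye-axis angle)
for label, field, axis in [("predator-like", 150.0, 15.0), ("prey-like", 170.0, 75.0)]:
    total, overlap = visual_fields(field, axis)
    print(f"{label}: total field {total:.0f} deg, binocular overlap {overlap:.0f} deg")
```

Turning the eyes sideways gives up binocular overlap, which supports depth perception, in exchange for a larger total field of view.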

Evolutionary baggage

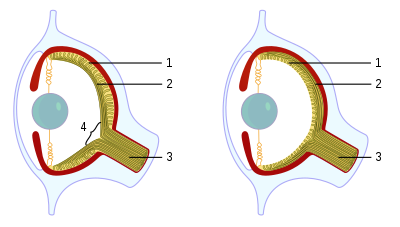

Vertebrates and octopuses developed the camera eye independently. In the vertebrate version the nerve fibers pass in front of the retina, and there is a blind spot where the nerves pass through the retina. In the vertebrate example, 4 represents the blind spot, which is notably absent from the octopus eye. In vertebrates, 1 represents the retina and 2 the nerve fibers, including the optic nerve (3), whereas in the octopus eye, 1 and 2 represent the nerve fibers and retina respectively.

The eyes of many taxa record their evolutionary history in their contemporary anatomy. The vertebrate eye, for instance, is built "backwards and upside down", requiring "photons of light to travel through the cornea, lens, aqueous fluid, blood vessels, ganglion cells, amacrine cells, horizontal cells, and bipolar cells before they reach the light-sensitive rods and cones that transduce the light signal into neural impulses, which are then sent to the visual cortex at the back of the brain for processing into meaningful patterns."[39] While such a construct has some drawbacks, it also allows the outer retina of vertebrates to sustain higher metabolic activity than the non-inverted design does.[40] It also allowed for the evolution of the choroid layer,