From Wikipedia, the free encyclopedia

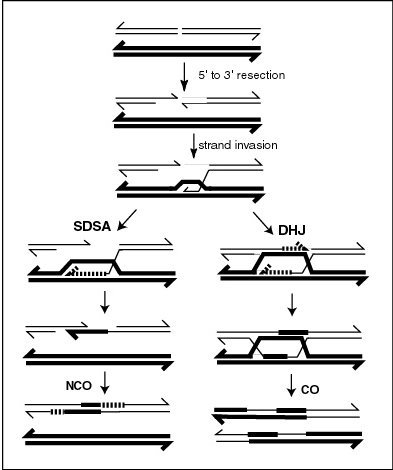

A current model of meiotic recombination, initiated by a double-strand break or gap, followed by pairing with a homologous chromosome and strand invasion to initiate the recombinational repair process. Repair of the gap can lead to crossover (CO) or non-crossover (NCO) of the flanking regions. CO recombination is thought to occur by the Double Holliday Junction (DHJ) model, illustrated on the right, above. NCO recombinants are thought to occur primarily by the Synthesis Dependent Strand Annealing (SDSA) model, illustrated on the left, above. Most recombination events appear to be of the SDSA type.

Genetic recombination is the production of offspring with combinations of traits that differ from those found in either parent. In eukaryotes, genetic recombination during meiosis can lead, through sexual reproduction, to a novel set of genetic information that can be passed on through heredity from the parents to the offspring. Most recombination is naturally occurring. During meiosis in eukaryotes, genetic recombination involves the pairing of homologous chromosomes. This may be followed by information exchange between the chromosomes. The information exchange may occur without physical exchange, in which a section of genetic material is copied from one chromosome to another without the donating chromosome being changed (see SDSA pathway in Figure), or by the breaking and rejoining of DNA strands, which forms new molecules of DNA (see DHJ pathway in Figure). Recombination may also occur during mitosis in eukaryotes, where it ordinarily involves the two sister chromosomes formed after chromosomal replication. In this case, new combinations of alleles are not produced, since the sister chromosomes are usually identical. In meiosis and mitosis, recombination occurs between homologous (that is, similar) molecules of DNA, known as homologs. In meiosis, non-sister homologous chromosomes pair with each other, so that recombination characteristically occurs between non-sister homologs. In both meiotic and mitotic cells, recombination between homologous chromosomes is a common mechanism used in DNA repair.

Genetic recombination and recombinational DNA repair also occur in bacteria and archaea, which use asexual reproduction.

Recombination can be artificially induced in laboratory (in vitro) settings, producing recombinant DNA for purposes including vaccine development.

V(D)J recombination in organisms with an adaptive immune system is a type of site-specific genetic recombination that helps immune cells rapidly diversify to recognize and adapt to new pathogens.

Synapsis

During meiosis, synapsis (the pairing of homologous chromosomes) ordinarily precedes genetic recombination.

Mechanism

Genetic recombination is catalyzed by many different enzymes. Recombinases are key enzymes that catalyze the strand transfer step during recombination. RecA, the chief recombinase found in Escherichia coli, is responsible for the repair of DNA double-strand breaks (DSBs). In yeast and other eukaryotic organisms, two recombinases are required for repairing DSBs. The RAD51 protein is required for mitotic and meiotic recombination, whereas the DNA repair protein DMC1 is specific to meiotic recombination. In the archaea, the ortholog of the bacterial RecA protein is RadA.

Chromosomal crossover

In eukaryotes, recombination during meiosis is facilitated by chromosomal crossover. The crossover process leads to offspring having different combinations of genes from those of their parents, and can occasionally produce new chimeric alleles. The shuffling of genes brought about by genetic recombination produces increased genetic variation. It also allows sexually reproducing organisms to avoid Muller's ratchet, in which the genomes of an asexual population accumulate genetic deletions in an irreversible manner.

Chromosomal crossover involves recombination between the paired chromosomes inherited from each of one's parents, generally occurring during meiosis. During prophase I (pachytene stage) the four available chromatids are in tight formation with one another. While in this formation, homologous sites on two chromatids can closely pair with one another, and may exchange genetic information.[1]

Because recombination can occur with small probability at any location along a chromosome, the frequency of recombination between two locations depends on the distance separating them. Therefore, for genes sufficiently distant on the same chromosome, the amount of crossover is high enough to destroy the correlation between alleles.
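The relationship between distance and recombination frequency can be illustrated with a short Python sketch. It uses Haldane's map function, which is not mentioned in the article and is brought in here only as one common idealization: it assumes that crossovers occur independently along the chromosome (no interference). The function name and the sample distances are illustrative choices.

```python
import math


def haldane_recombination_fraction(distance_morgans: float) -> float:
    """Expected recombination fraction between two loci under Haldane's model.

    Assumption (not from the article): crossovers fall independently along
    the chromosome, so the fraction is 0.5 * (1 - exp(-2 * d)), with the
    distance d measured in Morgans.
    """
    if distance_morgans < 0:
        raise ValueError("distance must be non-negative")
    return 0.5 * (1.0 - math.exp(-2.0 * distance_morgans))


if __name__ == "__main__":
    # Illustrative distances only: nearby loci recombine rarely, while
    # distant loci approach the 50% expected for unlinked genes.
    for d in (0.01, 0.1, 0.5, 1.0, 3.0):
        r = haldane_recombination_fraction(d)
        print(f"distance {d:>4} Morgans -> recombination fraction {r:.4f}")
```

Under this idealization the recombination fraction is roughly equal to the distance for closely spaced loci and levels off just below 0.5 for distant ones, which is the sense in which crossover "destroys the correlation between alleles".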

Tracking the movement of genes resulting from crossovers has proven quite useful to geneticists. Because two genes that are close together are less likely to become separated than genes that are farther apart, geneticists can deduce roughly how far apart two genes are on a chromosome if they know the frequency of the crossovers. Geneticists can also use this method to infer the presence of certain genes. Genes that typically stay together during recombination are said to be linked. One gene in a linked pair can sometimes be used as a marker to deduce the presence of another gene. This is typically used in order to detect the presence of a disease-causing gene.[2]
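As a rough illustration of how crossover frequency is turned into a distance estimate, the Python sketch below computes a recombination frequency from offspring counts and converts it to centimorgans. The function names and the offspring counts are hypothetical, and the conversion (1% recombination is about 1 centimorgan) is a standard approximation that holds only for closely spaced genes.

```python
def recombination_frequency(parental: int, recombinant: int) -> float:
    """Fraction of offspring that carry a recombinant allele combination."""
    if parental < 0 or recombinant < 0:
        raise ValueError("counts must be non-negative")
    total = parental + recombinant
    if total == 0:
        raise ValueError("at least one offspring must be scored")
    return recombinant / total


def map_distance_centimorgans(frequency: float) -> float:
    """Approximate map distance, treating 1% recombination as 1 centimorgan.

    This linear approximation is reasonable only for small frequencies;
    it underestimates the distance between genes that are far apart.
    """
    if not 0.0 <= frequency <= 0.5:
        raise ValueError("recombination frequency must lie between 0 and 0.5")
    return 100.0 * frequency


if __name__ == "__main__":
    # Hypothetical cross: 420 parental-type and 80 recombinant offspring.
    rf = recombination_frequency(parental=420, recombinant=80)
    print(f"recombination frequency: {rf:.2%}")
    print(f"approximate distance: {map_distance_centimorgans(rf):.1f} cM")
```

A low frequency computed this way indicates that the two genes are linked, which is why one of them can serve as a marker for the other.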

Gene conversion

In gene conversion, a section of genetic material is copied from one chromosome to another, without the donating chromosome being changed. Gene conversion occurs at high frequency at the actual site of the recombination event during meiosis. It is a process by which a DNA sequence is copied from one DNA helix (which remains unchanged) to another DNA helix, whose sequence is altered. Gene conversion has often been studied in fungal crosses[3] where the four products of individual meioses can be conveniently observed. Gene conversion events can be distinguished as deviations in an individual meiosis from the normal 2:2 segregation pattern (e.g. a 3:1 pattern).

Nonhomologous recombination

Recombination can occur between DNA sequences that contain no sequence homology. This can cause chromosomal translocations, sometimes leading to cancer.

In B cells

B cells of the immune system perform a form of genetic recombination called immunoglobulin class switching. It is a biological mechanism that changes an antibody from one class to another, for example, from an isotype called IgM to an isotype called IgG.

Genetic engineering

In genetic engineering, recombination can also refer to artificial and deliberate recombination of disparate pieces of DNA, often from different organisms, creating what is called recombinant DNA. A prime example of such a use of genetic recombination is gene targeting, which can be used to add, delete or otherwise change an organism's genes. This technique is important to biomedical researchers as it allows them to study the effects of specific genes. Techniques based on genetic recombination are also applied in protein engineering to develop new proteins of biological interest.

Recombinational repair

During both mitosis and meiosis, DNA damages caused by a variety of exogenous agents (e.g. UV light, X-rays, chemical cross-linking agents) can be repaired by homologous recombinational repair (HRR).[4] These findings suggest that DNA damages arising from natural processes, such as exposure to reactive oxygen species that are byproducts of normal metabolism, are also repaired by HRR. In humans and rodents, deficiencies in the gene products necessary for HRR during meiosis cause infertility.[4] In humans, deficiencies in gene products necessary for HRR, such as BRCA1 and BRCA2, increase the risk of cancer (see DNA repair-deficiency disorder).

In bacteria, transformation is a process of gene transfer that ordinarily occurs between individual cells of the same bacterial species. Transformation involves integration of donor DNA into the recipient chromosome by recombination. This process appears to be an adaptation for repairing DNA damages in the recipient chromosome by HRR.[5] Transformation may provide a benefit to pathogenic bacteria by allowing repair of DNA damage, particularly damages that occur in the inflammatory, oxidizing environment associated with infection of a host.

When two or more viruses, each containing lethal genomic damages, infect the same host cell, the virus genomes can often pair with each other and undergo HRR to produce viable progeny. This process, referred to as multiplicity reactivation, has been studied in bacteriophages T4 and lambda,[6] as well as in several pathogenic viruses. In the case of pathogenic viruses, multiplicity reactivation may be an adaptive benefit to the virus since it allows the repair of DNA damages caused by exposure to the oxidizing environment produced during host infection.[5]

Meiotic recombination

Molecular models of meiotic recombination have evolved over the years as relevant evidence accumulated. A major incentive for developing a fundamental understanding of the mechanism of meiotic recombination is that such understanding is crucial for solving the problem of the adaptive function of sex, a major unresolved issue in biology. A recent model that reflects current understanding was presented by Anderson and Sekelsky,[7] and is outlined in the first figure in this article. The figure shows that two of the four chromatids present early in meiosis (prophase I) are paired with each other and able to interact. Recombination, in this version of the model, is initiated by a double-strand break (or gap) shown in the DNA molecule (chromatid) at the top of the first figure in this article. However, other types of DNA damage may also initiate recombination. For instance, an inter-strand cross-link (caused by exposure to a cross-linking agent such as mitomycin C) can be repaired by HRR.

As indicated in the first figure, above, two types of recombinant product are produced. Indicated on the right side is a “crossover” (CO) type, where the flanking regions of the chromosomes are exchanged, and on the left side, a “non-crossover” (NCO) type where the flanking regions are not exchanged. The CO type of recombination involves the intermediate formation of two “Holliday junctions”, indicated in the lower right of the figure by two X-shaped structures, in each of which there is an exchange of single strands between the two participating chromatids. This pathway is labeled in the figure as the DHJ (double-Holliday junction) pathway.

The NCO recombinants (illustrated on the left in the figure) are produced by a process referred to as “synthesis dependent strand annealing” (SDSA). Recombination events of the NCO/SDSA type appear to be more common than the CO/DHJ type.[4] The NCO/SDSA pathway contributes little to genetic variation since the arms of the chromosomes flanking the recombination event remain in the parental configuration. Thus, explanations for the adaptive function of meiosis that focus exclusively on crossing-over are inadequate to explain the majority of recombination events.