The main goal of medical image computing (MIC) is to extract clinically relevant information or knowledge from medical images. While closely related to the field of medical imaging, MIC focuses on the computational analysis of the images, not their acquisition. The methods can be grouped into several broad categories: image segmentation, image registration, image-based physiological modeling, and others.

Data forms

Medical image computing typically operates on uniformly sampled data with regular x-y-z spatial spacing (images in 2D and volumes in 3D, generically referred to as images). At each sample point, data is commonly represented in integer form such as signed and unsigned short (16-bit), although forms from unsigned char (8-bit) to 32-bit float are not uncommon. The particular meaning of the data at the sample point depends on the modality: for example, a CT acquisition collects radiodensity values, while an MRI acquisition may collect T1- or T2-weighted images. Longitudinal, time-varying acquisitions may or may not acquire images with regular time steps. Fan-like images due to modalities such as curved-array ultrasound are also common and require different representational and algorithmic techniques to process. Other data forms include sheared images due to gantry tilt during acquisition, and unstructured meshes, such as hexahedral and tetrahedral forms, which are used in advanced biomechanical analysis (e.g., tissue deformation, vascular transport, bone implants).
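As a minimal sketch of what regular spatial sampling means in practice, the following Python snippet converts between a voxel index and a physical position. The function names, the spacing and the origin are illustrative assumptions, and the sketch assumes axes aligned with the scanner (no rotation and no gantry tilt), which real image formats do not guarantee.

```python
def voxel_to_physical(index, spacing, origin):
    """Map an integer voxel index (i, j, k) to a physical position in mm.

    Assumes an axis-aligned grid: position = origin + index * spacing.
    """
    return tuple(o + i * s for i, s, o in zip(index, spacing, origin))


def physical_to_voxel(point, spacing, origin):
    """Map a physical position back to the nearest voxel index."""
    return tuple(round((p - o) / s) for p, s, o in zip(point, spacing, origin))


# Hypothetical volume: 0.5 mm in-plane spacing, 2.0 mm slice spacing.
spacing = (0.5, 0.5, 2.0)
origin = (-100.0, -100.0, 0.0)

position = voxel_to_physical((10, 20, 3), spacing, origin)
index = physical_to_voxel(position, spacing, origin)
```

Sheared or rotated acquisitions would need a full affine matrix instead of a per-axis spacing, which is one reason such data require different handling.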

Segmentation

A T1-weighted MR image of the brain of a patient with a meningioma after injection of an MRI contrast agent (top left), and the same image with the result of an interactive segmentation overlaid in green (3D model of the segmentation on the top right, axial and coronal views at the bottom).

Segmentation is the process of partitioning an image into different meaningful segments. In medical imaging, these segments often correspond to different tissue classes, organs, pathologies, or other biologically relevant structures. Medical image segmentation is made difficult by low contrast, noise, and other imaging ambiguities. Although there are many computer vision techniques for image segmentation, some have been adapted specifically for medical image computing. Below is a sampling of techniques within this field; the implementation relies on the expertise that clinicians can provide.

- Atlas-Based Segmentation: For many applications, a clinical expert can manually label several images; segmenting unseen images is a matter of extrapolating from these manually labeled training images. Methods of this style are typically referred to as atlas-based segmentation methods. Parametric atlas methods typically combine these training images into a single atlas image, while nonparametric atlas methods typically use all of the training images separately. Atlas-based methods usually require the use of image registration in order to align the atlas image or images to a new, unseen image.

- Shape-Based Segmentation: Many methods parametrize a template shape for a given structure, often relying on control points along the boundary. The entire shape is then deformed to match a new image. Two of the most common shape-based techniques are Active Shape Models and Active Appearance Models. These methods have been very influential, and have given rise to similar models.

- Image-Based Segmentation: Some methods initialize a template and refine its shape according to the image data while minimizing integral error measures, like the active contour model and its variations.

- Interactive Segmentation: Interactive methods are useful when clinicians can provide some information, such as a seed region or rough outline of the region to segment. An algorithm can then iteratively refine such a segmentation, with or without guidance from the clinician. Manual segmentation, using tools such as a paint brush to explicitly define the tissue class of each pixel, remains the gold standard for many imaging applications. Recently, principles from feedback control theory have been incorporated into segmentation, which give the user much greater flexibility and allow for the automatic correction of errors.

- Subjective Surface Segmentation: This method is based on the evolution of a segmentation function that is governed by an advection-diffusion model. To segment an object, a segmentation seed is needed (that is, the starting point that determines the approximate position of the object in the image). An initial segmentation function is then constructed from this seed. The idea behind the subjective surface method is that the position of the seed is the main factor determining the form of this segmentation function.

There are other classifications of image segmentation methods that are similar to the categories above. In addition, methods that combine several of these approaches can be grouped together as “hybrid” methods.
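To make the role of a clinician-provided seed concrete, here is a minimal sketch of seeded region growing in Python. Region growing is not one of the methods listed above; it is used here only as a simple stand-in for seed-based interactive segmentation. The function name, the 4-connected neighborhood, the fixed intensity tolerance and the toy image are all assumptions made for illustration.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a region from `seed`, adding 4-connected pixels whose intensity
    differs from the seed intensity by at most `tolerance`.

    `image` is a list of rows; the result is a boolean mask of the same shape.
    """
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    mask = [[False] * cols for _ in range(rows)]
    mask[seed[0]][seed[1]] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]
                    and abs(image[nr][nc] - seed_value) <= tolerance):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask


# Toy image: a bright structure (values around 90-99) on a dark background.
image = [
    [10, 12, 11, 90],
    [11, 95, 97, 92],
    [12, 96, 99, 13],
    [10, 11, 12, 11],
]
mask = region_grow(image, seed=(1, 1), tolerance=10)
segmented_pixels = sum(sum(row) for row in mask)
```

In an interactive setting, the user could inspect the mask and then adjust the seed or tolerance, which corresponds to the refinement loop described for interactive methods.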

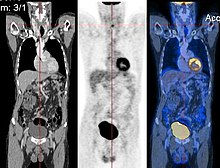

Registration

CT image (left), PET image (center) and overlay of both (right) after correct registration.

Image registration is a process that searches for the correct alignment of images. In the simplest case, two images are aligned. Typically, one image is treated as the target image and the other is treated as a source image; the source image is transformed to match the target image. The optimization procedure updates the transformation of the source image based on a similarity value that evaluates the current quality of the alignment. This iterative procedure is repeated until a (local) optimum is found. An example is the registration of CT and PET images to combine structural and metabolic information (see figure).
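The target/source/similarity loop described above can be sketched on a one-dimensional signal. This is a deliberately simplified illustration, not a practical registration method: the transformation is restricted to integer translations, the similarity value is the sum of squared differences, and an exhaustive search replaces the iterative optimizer. All function names and the example signals are assumptions.

```python
def ssd(a, b):
    """Sum of squared differences between two equally long signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def shift(signal, offset, fill=0.0):
    """Translate a signal by an integer offset (positive moves content right)."""
    n = len(signal)
    out = [fill] * n
    for i, value in enumerate(signal):
        j = i + offset
        if 0 <= j < n:
            out[j] = value
    return out


def register_translation(target, source, max_offset):
    """Try every offset in [-max_offset, max_offset] and keep the one that
    minimizes the SSD between the target and the transformed source."""
    best_offset, best_cost = 0, ssd(target, source)
    for offset in range(-max_offset, max_offset + 1):
        cost = ssd(target, shift(source, offset))
        if cost < best_cost:
            best_offset, best_cost = offset, cost
    return best_offset, best_cost


target = [0, 0, 0, 1, 4, 1, 0, 0, 0, 0]
source = [0, 1, 4, 1, 0, 0, 0, 0, 0, 0]
offset, cost = register_translation(target, source, max_offset=4)
```

With richer transformation models the search space becomes too large to enumerate, which is why iterative optimization that may stop in a local optimum is used in practice.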

Image registration is used in a variety of medical applications:

- Studying temporal changes. Longitudinal studies acquire images over several months or years to study long-term processes, such as disease progression. Time series correspond to images acquired within the same session (seconds or minutes). They can be used to study cognitive processes, heart deformations and respiration.

- Combining complementary information from different imaging modalities. An example is the fusion of anatomical and functional information. Since the size and shape of structures vary across modalities, it is more challenging to evaluate the alignment quality. This has led to the use of similarity measures such as mutual information.

- Characterizing a population of subjects. In contrast to intra-subject registration, a one-to-one mapping may not exist between subjects, depending on the structural variability of the organ of interest. Inter-subject registration is required for atlas construction in computational anatomy. Here, the objective is to statistically model the anatomy of organs across subjects.

- Computer-assisted surgery. In computer-assisted surgery, pre-operative images such as CT or MRI are registered to intra-operative images or tracking systems to facilitate image guidance or navigation.

There are several important considerations when performing image registration:

- The transformation model. Common choices are rigid, affine, and deformable transformation models. B-spline and thin plate spline models are commonly used for parameterized transformation fields. Non-parametric or dense deformation fields carry a displacement vector at every grid location; this necessitates additional regularization constraints. A specific class of deformation fields are diffeomorphisms, which are invertible transformations with a smooth inverse.

- The similarity metric. A distance or similarity function is used to quantify the registration quality. This similarity can be calculated either on the original images or on features extracted from the images. Common similarity measures are sum of squared differences (SSD), correlation coefficient, and mutual information. The choice of similarity measure depends on whether the images are from the same modality; the acquisition noise can also play a role in this decision. For example, SSD is the optimal similarity measure for images of the same modality with Gaussian noise. However, the image statistics in ultrasound are significantly different from Gaussian noise, leading to the introduction of ultrasound-specific similarity measures. Multi-modal registration requires a more sophisticated similarity measure; alternatively, a different image representation can be used, such as structural representations or registering adjacent anatomy.

- The optimization procedure. Either continuous or discrete optimization is performed. For continuous optimization, gradient-based optimization techniques are applied to improve the convergence speed.
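As an illustration of why mutual information suits multi-modal registration, the sketch below computes it from the joint histogram of two intensity sequences. It assumes the intensities have already been binned into a small number of discrete values; the function name and the toy "CT" and "PET" sequences are hypothetical.

```python
import math
from collections import Counter


def mutual_information(a, b):
    """Mutual information (in nats) of two equally long sequences of
    discrete intensity values, estimated from their joint histogram."""
    n = len(a)
    joint = Counter(zip(a, b))
    count_a = Counter(a)
    count_b = Counter(b)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # p_xy / (p_x * p_y) written with raw counts
        mi += p_xy * math.log(count * n / (count_a[x] * count_b[y]))
    return mi


# The same three tissue regions, shown with unrelated intensities per modality.
ct = [0, 0, 1, 1, 2, 2]
pet_aligned = [5, 5, 9, 9, 7, 7]
pet_misaligned = [5, 9, 7, 5, 9, 7]

mi_aligned = mutual_information(ct, pet_aligned)
mi_misaligned = mutual_information(ct, pet_misaligned)
```

The aligned pair scores higher even though the intensity values never match numerically, whereas SSD would be uninformative here because it compares the intensities directly.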

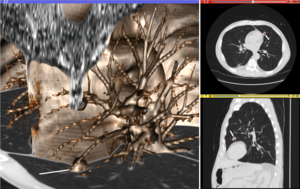

Visualization

Volume rendering (left), axial cross-section (right top), and sagittal cross-section (right bottom) of a CT image of a subject with multiple nodular lesions (white line) in the lung.

Visualization plays several key roles in medical image computing. Methods from scientific visualization are used to understand and communicate about medical images, which are inherently spatial-temporal. Data visualization and data analysis are used on unstructured data forms, for example when evaluating statistical measures derived during algorithmic processing. Direct interaction with data, a key feature of the visualization process, is used to perform visual queries about data, annotate images, guide segmentation and registration processes, and control the visual representation of data (by controlling lighting, rendering properties, and viewing parameters). Visualization is used both for initial exploration and for conveying intermediate and final results of analyses.

The figure "Visualization of Medical Imaging" illustrates several types of visualization: 1. the display of cross-sections as grayscale images; 2. reformatted views of grayscale images (the sagittal view in this example has a different orientation than the original direction of the image acquisition); and 3. a 3D volume rendering of the same data. The nodular lesion is clearly visible in the different presentations and has been annotated with a white line.
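The difference between displaying an acquired cross-section and a reformatted view can be sketched with plain nested lists. The indexing convention `volume[z][y][x]`, the function names and the tiny synthetic volume are assumptions for illustration only.

```python
def axial_slice(volume, z):
    """Return the acquired slice at depth z (rows are y, columns are x)."""
    return [row[:] for row in volume[z]]


def sagittal_slice(volume, x):
    """Reformat the volume into a sagittal view at column x
    (rows are z, columns are y)."""
    depth, height = len(volume), len(volume[0])
    return [[volume[z][y][x] for y in range(height)] for z in range(depth)]


# Synthetic 2 x 2 x 3 volume whose values encode their own (z, y, x) position.
volume = [[[z * 100 + y * 10 + x for x in range(3)] for y in range(2)]
          for z in range(2)]

axial = axial_slice(volume, 1)
sagittal = sagittal_slice(volume, 2)
```

The axial slice is read directly from the stored data, while the sagittal view gathers one column from every slice, which is why reformatted views depend on the slice spacing of the acquisition.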

Atlases

Medical images can vary significantly across individuals due to people having organs of different shapes and sizes. Therefore, representing medical images to account for this variability is crucial. A popular approach to represent medical images is through the use of one or more atlases. Here, an atlas refers to a specific model for a population of images with parameters that are learned from a training dataset.

The simplest example of an atlas is a mean intensity image, commonly referred to as a template. However, an atlas can also include richer information, such as local image statistics and the probability that a particular spatial location has a certain label. New medical images, which are not used during training, can be mapped to an atlas that has been tailored to the specific application, such as segmentation or group analysis. Mapping an image to an atlas usually involves registering the image and the atlas. The resulting deformation can be used to address variability in medical images.
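The two kinds of atlas content mentioned above, a mean intensity template and per-location label probabilities, can be sketched as follows. The sketch assumes the training images have already been registered to a common space and flattened to lists of equal length; the function names and the tiny data are hypothetical.

```python
def mean_template(images):
    """Voxel-wise mean intensity over a set of co-registered images."""
    n = len(images)
    return [sum(values) / n for values in zip(*images)]


def label_probability(label_maps, label):
    """Fraction of training label maps that assign `label` to each voxel."""
    n = len(label_maps)
    return [sum(1 for v in values if v == label) / n
            for values in zip(*label_maps)]


# Three training images with three voxels each, plus their manual labels.
images = [[10, 20, 30], [20, 20, 60], [30, 20, 30]]
label_maps = [[0, 1, 1], [0, 1, 0], [0, 0, 1]]

template = mean_template(images)
prob_label_1 = label_probability(label_maps, label=1)
```

A new image registered to the template can then use the probability map as a spatial prior, for example when deciding which label a voxel most likely carries.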

Single template

The simplest approach is to model medical images as deformed versions of a single template image. For example, anatomical MRI brain scans are often mapped to the MNI template so as to represent all the brain scans in common coordinates. The main drawback of a single-template approach is that if there are significant differences between the template and a given test image, then there may not be a good way to map one onto the other. For example, an anatomical MRI brain scan of a patient with severe brain abnormalities (e.g., a tumor or surgical procedure) may not easily map to the MNI template.

Multiple templates

Rather than relying on a single template, multiple templates can be used. The idea is to represent an image as a deformed version of one of the templates. For example, there could be one template for a healthy population and one template for a diseased population. However, in many applications, it is not clear how many templates are needed. A simple, albeit computationally expensive, way to deal with this is to have every image in a training dataset be a template image, so that every new image encountered is compared against every image in the training dataset. A more recent approach automatically finds the number of templates needed.

Statistical analysis

Statistical methods combine the medical imaging field with modern computer vision, machine learning and pattern recognition. Over the last decade, several large datasets have been made publicly available (see for example ADNI, 1000 Functional Connectomes Project), in part due to collaboration between various institutes and research centers. This increase in data size calls for new algorithms that can mine and detect subtle changes in the images to address clinical questions. Such clinical questions are very diverse and include group analysis, imaging biomarkers, disease phenotyping and longitudinal studies.

Group analysis

In group analysis, the objective is to detect and quantify abnormalities induced by a disease by comparing the images of two or more cohorts. Usually one of these cohorts consists of normal (control) subjects, and the other consists of patients. Variation caused by the disease can manifest itself as abnormal deformation of anatomy. For example, shrinkage of sub-cortical tissues such as the hippocampus in the brain may be linked to Alzheimer's disease. Additionally, changes in biochemical (functional) activity can be observed using imaging modalities such as positron emission tomography.

The comparison between groups is usually conducted on the voxel level. Hence, the most popular pre-processing pipeline, particularly in neuroimaging, transforms all of the images in a dataset to a common coordinate frame via medical image registration in order to maintain correspondence between voxels. Given this voxel-wise correspondence, the most common frequentist method is to extract a statistic for each voxel (for example, the mean voxel intensity for each group) and perform statistical hypothesis testing to evaluate whether a null hypothesis is or is not supported. The null hypothesis typically assumes that the two cohorts are drawn from the same distribution and hence should have the same statistical properties (for example, the mean values of two groups are equal for a particular voxel). Since medical images contain large numbers of voxels, the issue of multiple comparisons needs to be addressed. There are also Bayesian approaches to the group analysis problem.
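A minimal sketch of the voxel-wise frequentist procedure is given below. It assumes the images are already in a common coordinate frame and flattened to lists, uses Welch's t statistic as the per-voxel statistic, and uses the Bonferroni correction as one simple way to handle multiple comparisons. These choices, the function names and the toy data are assumptions; converting the statistic to a p-value would additionally need the t distribution (for example via `scipy.stats.ttest_ind` with `equal_var=False`).

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for two independent samples.

    Raises ZeroDivisionError if both samples have zero variance.
    """
    return (mean(a) - mean(b)) / math.sqrt(
        variance(a) / len(a) + variance(b) / len(b))


def voxelwise_t(group_a, group_b):
    """One t statistic per voxel; each group is a list of flattened images."""
    return [welch_t(va, vb)
            for va, vb in zip(zip(*group_a), zip(*group_b))]


def bonferroni_alpha(alpha, n_tests):
    """Per-voxel significance level that keeps the family-wise error
    rate at or below `alpha` over `n_tests` voxels."""
    return alpha / n_tests


# Four controls and four patients, two voxels each (hypothetical values).
controls = [[10.0, 5.0], [11.0, 6.0], [9.0, 5.5], [10.0, 5.5]]
patients = [[10.5, 3.0], [9.5, 2.5], [10.0, 3.5], [10.0, 3.0]]

t_map = voxelwise_t(controls, patients)
per_voxel_alpha = bonferroni_alpha(0.05, n_tests=len(t_map))
```

In this toy data only the second voxel differs between groups. With millions of voxels the Bonferroni threshold becomes very strict, which is one reason other corrections are often preferred.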

Classification

Although group analysis can quantify the general effects of a pathology on anatomy and function, it does not provide subject-level measures, and hence cannot be used as a biomarker for diagnosis (see Imaging Biomarkers). Clinicians, on the other hand, are often interested in early diagnosis of the pathology (i.e. classification) and in learning the progression of a disease (i.e. regression).

From a methodological point of view, current techniques vary from applying standard machine learning algorithms to medical imaging datasets (e.g. support vector machines), to developing new approaches adapted to the needs of the field. The main difficulties are as follows:

- Small sample size: a large medical imaging dataset contains hundreds to thousands of images, whereas the number of voxels in a typical volumetric image can easily run into the millions. A remedy to this problem is to reduce the number of features in an informative sense. Several unsupervised, semi-supervised, and supervised approaches have been proposed to address this issue.

- Interpretability: A good generalization accuracy is not always the primary objective, as clinicians would like to understand which parts of anatomy are affected by the disease. Therefore, interpretability of the results is very important; methods that ignore the image structure are not favored. Alternative methods based on feature selection have been proposed.
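The following sketch illustrates how feature selection can address both difficulties at once: it reduces the number of voxels and leaves a short, inspectable list of voxel indices. The separation score, the nearest-centroid classifier (used here instead of a support vector machine to stay within the standard library), the function names and the data are all assumptions for illustration.

```python
from statistics import mean, pstdev


def select_voxels(samples, labels, k):
    """Rank voxels by a simple two-class separation score and return the
    indices of the top k. Labels are assumed to be 0 or 1."""
    scores = []
    for j, column in enumerate(zip(*samples)):
        a = [v for v, y in zip(column, labels) if y == 0]
        b = [v for v, y in zip(column, labels) if y == 1]
        spread = pstdev(a) + pstdev(b) + 1e-9  # avoid division by zero
        scores.append((abs(mean(a) - mean(b)) / spread, j))
    return sorted(j for _, j in sorted(scores, reverse=True)[:k])


def nearest_centroid(train, labels, sample, voxels):
    """Classify `sample` by the closest class mean over the selected voxels."""
    centroids = {}
    for y in set(labels):
        rows = [[s[j] for j in voxels] for s, l in zip(train, labels) if l == y]
        centroids[y] = [mean(col) for col in zip(*rows)]
    x = [sample[j] for j in voxels]
    return min(centroids,
               key=lambda y: sum((p - q) ** 2 for p, q in zip(x, centroids[y])))


# Four training subjects with three voxels each; only voxel 0 is informative.
train = [[1.0, 5.0, 0.2], [1.2, 5.1, 0.9], [3.0, 5.0, 0.4], [3.1, 4.9, 0.8]]
labels = [0, 0, 1, 1]

voxels = select_voxels(train, labels, k=1)
prediction = nearest_centroid(train, labels, [2.9, 5.0, 0.5], voxels)
```

To keep accuracy estimates honest, the voxels would need to be selected using training data only, as done here, and never using the subjects held out for testing.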

Clustering

Image-based pattern classification methods typically assume that the neurological effects of a disease are distinct and well defined. This may not always be the case. For a number of medical conditions, the patient populations are highly heterogeneous, and further categorization into sub-conditions has not been established. Additionally, some diseases (e.g., autism spectrum disorder (ASD), schizophrenia, mild cognitive impairment (MCI)) can be characterized by a continuous or nearly continuous spectrum from mild cognitive impairment to very pronounced pathological changes. To facilitate image-based analysis of heterogeneous disorders, methodological alternatives to pattern classification have been developed. These techniques borrow ideas from high-dimensional clustering and high-dimensional pattern regression to cluster a given population into homogeneous sub-populations. The goal is to provide a better quantitative understanding of the disease within each sub-population.

Shape analysis

Shape analysis is the field of medical image computing that studies geometrical properties of structures obtained from different imaging modalities. Shape analysis has recently become of increasing interest to the medical community due to its potential to precisely locate morphological changes between different populations of structures, e.g. healthy vs. pathological, female vs. male, young vs. elderly. Shape analysis includes two main steps: shape correspondence and statistical analysis.

- Shape correspondence is the methodology that computes corresponding locations between geometric shapes represented by triangle meshes, contours, point sets or volumetric images. The definition of correspondence directly influences the analysis. Options for correspondence frameworks include anatomical correspondence, manual landmarks, functional correspondence (e.g., in brain morphometry, loci responsible for the same neuronal functionality), geometric correspondence, and (for image volumes) intensity similarity. Some approaches, e.g. spectral shape analysis, do not require correspondence but compare shape descriptors directly.

- Statistical analysis provides measurements of structural change at corresponding locations.

Longitudinal studies

In longitudinal studies, the same person is imaged repeatedly. This information can be incorporated into both the image analysis and the statistical modeling.

- In longitudinal image processing, segmentation and analysis methods of individual time points are informed and regularized with common information, usually from a within-subject template. This regularization is designed to reduce measurement noise and thus helps increase sensitivity and statistical power. At the same time, over-regularization needs to be avoided so that effect sizes remain stable. Intense regularization, for example, can lead to excellent test-retest reliability, but limits the ability to detect any true changes and differences across groups. Often a trade-off is needed that optimizes noise reduction at the cost of a limited loss in effect size. Another common challenge in longitudinal image processing is the often unintentional introduction of processing bias. When, for example, follow-up images are registered and resampled to the baseline image, interpolation artifacts are introduced to only the follow-up images and not the baseline. These artifacts can cause spurious effects (usually a bias towards overestimating longitudinal change and thus underestimating the required sample size). It is therefore essential that all time points are treated exactly the same to avoid any processing bias.

- Post-processing and statistical analysis of longitudinal data usually require dedicated statistical tools such as repeated measures ANOVA or the more powerful linear mixed effects models. Additionally, it is advantageous to consider the spatial distribution of the signal. For example, cortical thickness measurements will show a correlation within-subject across time and also within a neighborhood on the cortical surface, a fact that can be used to increase statistical power. Furthermore, time-to-event (also known as survival) analysis is frequently employed to analyze longitudinal data and determine significant predictors.

Image-based physiological modeling

Traditionally, medical image computing has sought to address the quantification and fusion of structural or functional information available at the point and time of image acquisition. In this regard, it can be seen as quantitative sensing of the underlying anatomical, physical or physiological processes. However, over the last few years, there has been a growing interest in the predictive assessment of disease or therapy course. Image-based modelling, be it of biomechanical or physiological nature, can therefore extend the possibilities of image computing from a descriptive to a predictive angle.

According to the STEP research roadmap, the Virtual Physiological Human (VPH) is a methodological and technological framework that, once established, will enable the investigation of the human body as a single complex system. Underlying the VPH concept, the International Union for Physiological Sciences (IUPS) has been sponsoring the IUPS Physiome Project for more than a decade. This is a worldwide public domain effort to provide a computational framework for understanding human physiology. It aims at developing integrative models at all levels of biological organization, from genes to whole organisms via gene regulatory networks, protein pathways, integrative cell functions, and tissue and whole organ structure/function relations. Such an approach aims at transforming current practice in medicine and underpins a new era of computational medicine.

In this context, medical imaging and image computing play an increasingly important role as they provide systems and methods to image, quantify and fuse both structural and functional information about the human being in vivo. These two broad research areas include the transformation of generic computational models to represent specific subjects, thus paving the way for personalized computational models. Individualization of generic computational models through imaging can be realized in three complementary directions:

- Definition of the subject-specific computational domain (anatomy) and related subdomains (tissue types);

- Definition of boundary and initial conditions from (dynamic and/or functional) imaging; and

- Characterization of structural and functional tissue properties.

In addition, imaging also plays a pivotal role in the evaluation and validation of such models both in humans and in animal models, and in the translation of models to the clinical setting with both diagnostic and therapeutic applications. In this specific context, molecular, biological, and pre-clinical imaging render additional data and understanding of basic structure and function in molecules, cells, tissues and animal models that may be transferred to human physiology where appropriate.

The applications of image-based VPH/Physiome models in basic and clinical domains are vast. Broadly speaking, they promise to become new virtual imaging techniques. In effect, more parameters, many of them not directly observable, will be imaged in silico based on the integration of observable but sometimes sparse and inconsistent multimodal images and physiological measurements. Computational models will serve to enable interpretation of the measurements in a way compliant with the underlying biophysical, biochemical or biological laws of the physiological or pathophysiological processes under investigation. Ultimately, such investigative tools and systems will help our understanding of disease processes, the natural history of disease evolution, and the influence of pharmacological and/or interventional therapeutic procedures on the course of a disease.

Cross-fertilization between imaging and modelling goes beyond interpretation of measurements in a way consistent with physiology. Image-based patient-specific modelling, combined with models of medical devices and pharmacological therapies, opens the way to predictive imaging whereby one will be able to understand, plan and optimize such interventions in silico.

Mathematical methods in medical imaging

A number of sophisticated mathematical methods have entered medical imaging, and have already been implemented in various software packages. These include approaches based on partial differential equations (PDEs) and curvature-driven flows for enhancement, segmentation, and registration. Since they employ PDEs, the methods are amenable to parallelization and implementation on GPGPUs. A number of these techniques have been inspired by ideas in optimal control. Accordingly, ideas from control have recently made their way into interactive methods, especially segmentation. Moreover, because of noise and the need for statistical estimation techniques for more dynamically changing imagery, the Kalman filter and particle filter have come into use. A survey of these methods with an extensive list of references is available in the literature.
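To give a flavor of the statistical estimation mentioned above, here is a minimal scalar Kalman filter in Python. It assumes a random-walk state model with a single measured quantity (for instance, one coordinate of a tracked structure across frames); the function name, the noise variances and the measurement sequence are illustrative assumptions, and practical trackers use vector-valued states.

```python
def kalman_1d(measurements, process_var, measurement_var,
              initial_estimate=0.0, initial_var=1.0):
    """Scalar Kalman filter for the model
        x_k = x_{k-1} + process noise,   z_k = x_k + measurement noise.
    Returns the filtered estimate after each measurement."""
    estimate, var = initial_estimate, initial_var
    estimates = []
    for z in measurements:
        # Predict: the state is carried over, its uncertainty grows.
        var += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = var / (var + measurement_var)
        estimate += gain * (z - estimate)
        var *= 1.0 - gain
        estimates.append(estimate)
    return estimates


# A constant true position of 5.0 observed 20 times, starting from a guess of 0.
estimates = kalman_1d([5.0] * 20, process_var=1e-4, measurement_var=1.0)
```

The gain shrinks as the filter accumulates evidence, so later measurements move the estimate less than early ones; a larger `process_var` would keep the filter more responsive to genuinely changing imagery.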

Modality specific computing

Some imaging modalities provide very specialized information. The resulting images cannot be treated as regular scalar images and give rise to new sub-areas of medical image computing. Examples include diffusion MRI, functional MRI and others.

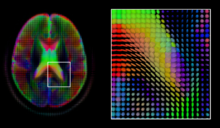

Diffusion MRI

A mid-axial slice of the ICBM diffusion tensor image template. Each voxel's value is a tensor represented here by an ellipsoid. Color denotes principal orientation: red = left-right, blue = inferior-superior, green = posterior-anterior.

Diffusion MRI is a structural magnetic resonance imaging modality that allows measurement of the diffusion process of molecules. Diffusion is measured by applying a gradient pulse to a magnetic field along a particular direction. In a typical acquisition, a set of uniformly distributed gradient directions is used to create a set of diffusion weighted volumes. In addition, an unweighted volume is acquired under the same magnetic field without application of a gradient pulse. As each acquisition is associated with multiple volumes, diffusion MRI has created a variety of unique challenges in medical image computing.

In medicine, there are two major computational goals in diffusion MRI:

- Estimation of local tissue properties, such as diffusivity;

- Estimation of local directions and global pathways of diffusion.

The diffusion tensor, a 3 × 3 symmetric positive-definite matrix, offers a straightforward solution to both of these goals. It is proportional to the covariance matrix of a normally distributed local diffusion profile and, thus, the dominant eigenvector of this matrix is the principal direction of local diffusion. Due to the simplicity of this model, a maximum likelihood estimate of the diffusion tensor can be found by simply solving a system of linear equations at each location independently. However, as the volume is assumed to contain contiguous tissue fibers, it may be preferable to estimate the volume of diffusion tensors in its entirety by imposing regularity conditions on the underlying field of tensors. Scalar values can be extracted from the diffusion tensor, such as the fractional anisotropy and the mean, axial and radial diffusivities, which indirectly measure tissue properties such as the dysmyelination of axonal fibers or the presence of edema.
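The scalar measures named above have standard closed-form definitions in terms of the three eigenvalues of the diffusion tensor. The sketch below assumes the eigenvalues have already been computed (for example with an eigenvalue routine such as `numpy.linalg.eigvalsh`) and are not all zero; the function name and the example eigenvalues are illustrative.

```python
import math


def tensor_scalars(eigenvalues):
    """Return (FA, MD, AD, RD) from the three eigenvalues of a diffusion tensor.

    MD = mean of the eigenvalues
    AD = largest eigenvalue (diffusivity along the principal direction)
    RD = mean of the two smaller eigenvalues
    FA = sqrt(3/2) * ||lambda - MD|| / ||lambda||
    """
    l1, l2, l3 = sorted(eigenvalues, reverse=True)
    md = (l1 + l2 + l3) / 3.0
    ad = l1
    rd = (l2 + l3) / 2.0
    deviation = (l1 - md) ** 2 + (l2 - md) ** 2 + (l3 - md) ** 2
    norm = l1 ** 2 + l2 ** 2 + l3 ** 2
    fa = math.sqrt(1.5 * deviation / norm)
    return fa, md, ad, rd


# Illustrative eigenvalues in mm^2/s for an elongated (fiber-like) tensor.
fa, md, ad, rd = tensor_scalars((1.7e-3, 0.3e-3, 0.3e-3))

fa_isotropic = tensor_scalars((1.0e-3, 1.0e-3, 1.0e-3))[0]
fa_linear = tensor_scalars((1.0e-3, 0.0, 0.0))[0]
```

FA ranges from 0 for isotropic diffusion to 1 for diffusion along a single direction, which makes it convenient as a scalar volume for the standard registration and segmentation methods.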

Standard scalar image computing methods, such as registration and segmentation, can be applied directly to volumes of such scalar values. However, to fully exploit the information in the diffusion tensor, these methods have been adapted to account for tensor-valued volumes when performing registration and segmentation.

Given the principal direction of diffusion at each location in the volume, it is possible to estimate the global pathways of diffusion through a process known as tractography. However, due to the relatively low resolution of diffusion MRI, many of these pathways may cross, kiss or fan at a single location. In this situation, the single principal direction of the diffusion tensor is not an appropriate model for the local diffusion distribution. The most common solution to this problem is to estimate multiple directions of local diffusion using more complex models. These include mixtures of diffusion tensors, Q-ball imaging, diffusion spectrum imaging and fiber orientation distribution functions, which typically require HARDI acquisition with a large number of gradient directions. As with the diffusion tensor, volumes valued with these complex models require special treatment when applying image computing methods, such as registration and segmentation.

Functional MRI

Functional magnetic resonance imaging (fMRI) is a medical imaging modality that indirectly measures neural activity by observing the local hemodynamics, or blood oxygen level dependent (BOLD) signal. fMRI data offers a range of insights, and can be roughly divided into two categories:

- Task-related fMRI is acquired as the subject is performing a sequence of timed experimental conditions. In block-design experiments, the conditions are present for short periods of time (e.g., 10 seconds) and are alternated with periods of rest. Event-related experiments rely on a random sequence of stimuli and use a single time point to denote each condition. The standard approach to analyze task-related fMRI is the general linear model (GLM).

- Resting state fMRI is acquired in the absence of any experimental task. Typically, the objective is to study the intrinsic network structure of the brain. Observations made during rest have also been linked to specific cognitive processes such as encoding or reflection. Most studies of resting state fMRI focus on low frequency fluctuations of the fMRI signal (LF-BOLD). Seminal discoveries include the default network, a comprehensive cortical parcellation, and the linking of network characteristics to behavioral parameters.
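For a single voxel and a single block-design condition, the general linear model reduces to an ordinary least squares fit of the time series against a task regressor. The sketch below makes several simplifying assumptions: the regressor is a plain boxcar that is not convolved with a hemodynamic response function, there is one regressor plus an intercept, and the function names and synthetic signal are hypothetical.

```python
from statistics import mean


def block_design(n_scans, block_len):
    """Boxcar regressor: alternating rest (0) and task (1) blocks,
    starting with rest."""
    return [float((t // block_len) % 2) for t in range(n_scans)]


def fit_glm(y, x):
    """Ordinary least squares fit of y = beta0 + beta1 * x + error
    for one voxel time series. Returns (beta0, beta1)."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    beta1 = sxy / sxx
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1


# Synthetic voxel: baseline 100, task effect 2, small alternating disturbance.
x = block_design(n_scans=20, block_len=5)
y = [100.0 + 2.0 * xi + (0.1 if t % 2 == 0 else -0.1)
     for t, xi in enumerate(x)]

beta0, beta1 = fit_glm(y, x)
```

Here `beta1` estimates the signal change between task and rest; repeating the fit at every voxel and testing whether `beta1` differs from zero yields a statistical map of task-related activity.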

There is a rich set of methodology used to analyze functional neuroimaging data, and there is often no consensus regarding the best method. Instead, researchers approach each problem independently and select a suitable model/algorithm. In this context there is a relatively active exchange among neuroscience, computational biology, statistics, and machine learning communities. Prominent approaches include:

- Mass-univariate approaches that probe individual voxels in the imaging data for a relationship to the experiment condition. The prime approach is the general linear model (GLM).

- Multivariate and classifier-based approaches, often referred to as multi-voxel pattern analysis or multivariate pattern analysis, probe the data for global and potentially distributed responses to an experimental condition. Early approaches used support vector machines (SVM) to study responses to visual stimuli. Recently, alternative pattern recognition algorithms have been explored, such as random forest based Gini contrast or sparse regression and dictionary learning.

- Functional connectivity analysis studies the intrinsic network structure of the brain, including the interactions between regions. The majority of such studies focus on resting state data to parcellate the brain or to find correlates to behavioral measures. Task-specific data can be used to study causal relationships among brain regions (e.g., dynamic causal modeling (DCM)).
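A common starting point for functional connectivity analysis is the matrix of pairwise correlations between regional time series. The sketch below uses the Pearson correlation coefficient; the function names and the three short synthetic time series are assumptions for illustration, and each series is assumed to be non-constant.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equally long time series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (norm_a * norm_b)


def connectivity_matrix(series):
    """Correlation between every pair of regional time series."""
    return [[pearson(a, b) for b in series] for a in series]


# Three hypothetical regions: the second follows the first,
# the third fluctuates in opposition to it.
region_1 = [1, 2, 3, 4, 5, 4, 3, 2]
region_2 = [2, 4, 6, 8, 10, 8, 6, 4]
region_3 = [5, 4, 3, 2, 1, 2, 3, 4]

matrix = connectivity_matrix([region_1, region_2, region_3])
```

Thresholding or clustering such a matrix is one way to group regions into networks, although correlation alone does not establish the causal relationships that models such as DCM aim to describe.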

When working with large cohorts of subjects, the normalization (registration) of individual subjects into a common reference frame is crucial. A body of work and tools exist to perform normalization based on anatomy (FSL, FreeSurfer, SPM). Alignment taking spatial variability across subjects into account is a more recent line of work. Examples are the alignment of the cortex based on fMRI signal correlation, the alignment based on the global functional connectivity structure in both task and resting state data, and the alignment based on stimulus-specific activation profiles of individual voxels.

Software

Software for medical image computing is a complex combination of systems providing IO, visualization and interaction, user interface, data management and computation. Typically, system architectures are layered to serve algorithm developers, application developers, and users. The bottom layers are often libraries and/or toolkits which provide base computational capabilities, while the top layers are specialized applications which address specific medical problems, diseases, or body systems.

Additional notes

Medical image computing is also related to the field of computer vision. An international society, the MICCAI Society, represents the field and organizes an annual conference and associated workshops. Proceedings for this conference are published by Springer in the Lecture Notes in Computer Science series. In 2000, N. Ayache and J. Duncan reviewed the state of the field.