History

While the analysis of variance reached fruition in the 20th century, its antecedents extend centuries into the past, according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testing in the 1770s. The development of least-squares methods by Laplace and Gauss circa 1800 provided an improved method of combining observations (over the existing practices then used in astronomy and geodesy). It also initiated much study of the contributions to sums of squares. Laplace knew how to estimate a variance from a residual (rather than a total) sum of squares. By 1827, Laplace was using least squares methods to address ANOVA problems regarding measurements of atmospheric tides.

Before 1800, astronomers had isolated observational errors resulting from reaction times (the "personal equation") and had developed methods of reducing the errors. The experimental methods used in the study of the personal equation were later accepted by the emerging field of psychology, which developed strong (full factorial) experimental methods to which randomization and blinding were soon added. An eloquent non-mathematical explanation of the additive effects model was available in 1885.

Ronald Fisher introduced the term variance and proposed its formal analysis in a 1918 article The Correlation Between Relatives on the Supposition of Mendelian Inheritance. His first application of the analysis of variance was published in 1921. Analysis of variance became widely known after being included in Fisher's 1925 book Statistical Methods for Research Workers.

Randomization models were developed by several researchers. The first was published in Polish by Jerzy Neyman in 1923.

One of the attributes of ANOVA that ensured its early popularity was computational elegance. The structure of the additive model allows solution for the additive coefficients by simple algebra rather than by matrix calculations. In the era of mechanical calculators this simplicity was critical. The determination of statistical significance also required access to tables of the F-distribution, which were supplied by early statistics texts.

Motivating example

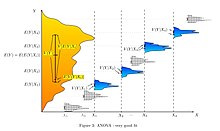

No fit.

Fair fit

Very good fit

The analysis of variance can be used as an exploratory tool to explain observations. A dog show provides an example. A dog show is not a random sampling of the breed: it is typically limited to dogs that are adult, pure-bred, and exemplary. A histogram of dog weights from a show might plausibly be rather complex, like the yellow-orange distribution shown in the illustrations. Suppose we wanted to predict the weight of a dog based on a certain set of characteristics of each dog. One way to do that is to explain the distribution of weights by dividing the dog population into groups based on those characteristics. A successful grouping will split dogs such that (a) each group has a low variance of dog weights (meaning the group is relatively homogeneous) and (b) the mean of each group is distinct (if two groups have the same mean, then it isn't reasonable to conclude that the groups are, in fact, separate in any meaningful way).

In the illustrations to the right, groups are identified as X1, X2, etc. In the first illustration, the dogs are divided according to the product (interaction) of two binary groupings: young vs old, and short-haired vs long-haired (e.g., group 1 is young, short-haired dogs, group 2 is young, long-haired dogs, etc.). Since the distributions of dog weight within each of the groups (shown in blue) have a relatively large variance, and since the means are very similar across groups, grouping dogs by these characteristics does not produce an effective way to explain the variation in dog weights: knowing which group a dog is in doesn't allow us to predict its weight much better than simply knowing the dog is in a dog show. Thus, this grouping fails to explain the variation in the overall distribution (yellow-orange).

An attempt to explain the weight distribution by grouping dogs as pet vs working breed and less athletic vs more athletic would probably be somewhat more successful (fair fit). The heaviest show dogs are likely to be big, strong, working breeds, while breeds kept as pets tend to be smaller and thus lighter. As shown by the second illustration, the distributions have variances that are considerably smaller than in the first case, and the means are more distinguishable. However, the significant overlap of distributions, for example, means that we cannot distinguish X1 and X2 reliably. Grouping dogs according to a coin flip might produce distributions that look similar.

An attempt to explain weight by breed is likely to produce a very good fit. All Chihuahuas are light and all St Bernards are heavy. The difference in weights between Setters and Pointers does not justify separate breeds. The analysis of variance provides the formal tools to justify these intuitive judgments. A common use of the method is the analysis of experimental data or the development of models. The method has some advantages over correlation: not all of the data must be numeric, and one result of the method is a judgment of the confidence in an explanatory relationship.
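The two criteria above, low variance within each group and distinct group means, can be checked by summarizing each group. The sketch below is illustrative only: the weights are invented, not taken from any real show, and `summarize_groups` is a hypothetical helper written for this example.

```python
from statistics import mean, variance

def summarize_groups(groups):
    """Return {group name: (mean, sample variance)} for each group of weights."""
    return {name: (mean(ws), variance(ws)) for name, ws in groups.items()}

# Invented weights (kg) for illustration only; they are not real show data.
coin_flip = {"heads": [3, 30, 8, 60, 25], "tails": [5, 55, 28, 2, 35]}
by_breed = {
    "Chihuahua": [2.0, 3.0, 2.5],
    "Setter": [27.0, 30.0, 28.0],
    "St Bernard": [70.0, 75.0, 80.0],
}

for label, grouping in [("coin flip", coin_flip), ("breed", by_breed)]:
    for name, (m, v) in summarize_groups(grouping).items():
        # A useful grouping shows small variances and clearly separated means.
        print(f"{label:9s} {name:10s} mean={m:6.1f} variance={v:7.1f}")
```

With the coin-flip grouping the two means are nearly equal and both variances are large; with the breed grouping the variances are small and the means far apart, which is the pattern the analysis of variance formalizes.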

Background and terminology

ANOVA is a form of statistical hypothesis testing heavily used in the analysis of experimental data. A test result (calculated from the null hypothesis and the sample) is called statistically significant if it is deemed unlikely to have occurred by chance, assuming the truth of the null hypothesis. A statistically significant result, when a probability (p-value) is less than a pre-specified threshold (significance level), justifies the rejection of the null hypothesis, but only if the a priori probability of the null hypothesis is not high.

In the typical application of ANOVA, the null hypothesis is that all groups are random samples from the same population. For example, when studying the effect of different treatments on similar samples of patients, the null hypothesis would be that all treatments have the same effect (perhaps none). Rejecting the null hypothesis is taken to mean that the differences in observed effects between treatment groups are unlikely to be due to random chance.

By construction, hypothesis testing limits the rate of Type I errors (false positives) to a significance level. Experimenters also wish to limit Type II errors (false negatives). The rate of Type II errors depends largely on sample size (the rate is larger for smaller samples), significance level (when the standard of proof is high, the chances of overlooking a discovery are also high) and effect size (a smaller effect size is more prone to Type II error).

The terminology of ANOVA is largely from the statistical design of experiments. The experimenter adjusts factors and measures responses in an attempt to determine an effect. Factors are assigned to experimental units by a combination of randomization and blocking to ensure the validity of the results. Blinding keeps the weighing impartial. Responses show a variability that is partially the result of the effect and is partially random error.

ANOVA is the synthesis of several ideas and it is used for multiple purposes. As a consequence, it is difficult to define concisely or precisely.

"Classical" ANOVA for balanced data does three things at once:

- As exploratory data analysis, an ANOVA employs an additive data decomposition, and its sums of squares indicate the variance of each component of the decomposition (or, equivalently, each set of terms of a linear model).

- Comparisons of mean squares, along with an F-test ... allow testing of a nested sequence of models.

- Closely related to the ANOVA is a linear model fit with coefficient estimates and standard errors.

In short, ANOVA is a statistical tool used in several ways to develop and confirm an explanation for the observed data.

Additionally:

- It is computationally elegant and relatively robust against violations of its assumptions.

- ANOVA provides strong (multiple sample comparison) statistical analysis.

- It has been adapted to the analysis of a variety of experimental designs.

As a result:

- ANOVA "has long enjoyed the status of being the most used (some would say abused) statistical technique in psychological research."

- ANOVA "is probably the most useful technique in the field of statistical inference."

ANOVA is difficult to teach, particularly for complex experiments, with split-plot designs being notorious. In some cases the proper application of the method is best determined by problem pattern recognition followed by the consultation of a classic authoritative text.

Design-of-experiments terms

(Condensed from the "NIST Engineering Statistics Handbook": Section 5.7. A Glossary of DOE Terminology.)

- Balanced design

- An experimental design where all cells (i.e. treatment combinations) have the same number of observations.

- Blocking

- A schedule for conducting treatment combinations in an experimental study such that any effects on the experimental results due to a known change in raw materials, operators, machines, etc., become concentrated in the levels of the blocking variable. The reason for blocking is to isolate a systematic effect and prevent it from obscuring the main effects. Blocking is achieved by restricting randomization.

- Design

- A set of experimental runs which allows the fit of a particular model and the estimate of effects.

- DOE

- Design of experiments. An approach to problem solving involving collection of data that will support valid, defensible, and supportable conclusions.

- Effect

- How changing the settings of a factor changes the response. The effect of a single factor is also called a main effect.

- Error

- Unexplained variation in a collection of observations. DOEs typically require understanding of both random error and lack-of-fit error.

- Experimental unit

- The entity to which a specific treatment combination is applied.

- Factors

- Process inputs that an investigator manipulates to cause a change in the output.

- Lack-of-fit error

- Error that occurs when the analysis omits one or more important terms or factors from the process model. Including replication in a DOE allows separation of experimental error into its components: lack of fit and random (pure) error.

- Model

- Mathematical relationship which relates changes in a given response to changes in one or more factors.

- Random error

- Error that occurs due to natural variation in the process. Random error is typically assumed to be normally distributed with zero mean and a constant variance. Random error is also called experimental error.

- Randomization

- A schedule for allocating treatment material and for conducting treatment combinations in a DOE such that the conditions in one run neither depend on the conditions of the previous run nor predict the conditions in the subsequent runs.

- Replication

- Performing the same treatment combination more than once. Including replication allows an estimate of the random error independent of any lack of fit error.

- Responses

- The output(s) of a process. Sometimes called dependent variable(s).

- Treatment

- A treatment is a specific combination of factor levels whose effect is to be compared with other treatments.

Classes of models

There are three classes of models used in the analysis of variance, and these are outlined here.

Fixed-effects models

The fixed-effects model (class I) of analysis of variance applies to situations in which the experimenter applies one or more treatments to the subjects of the experiment to see whether the response variable values change. This allows the experimenter to estimate the ranges of response variable values that the treatment would generate in the population as a whole.

Random-effects models

The random-effects model (class II) is used when the treatments are not fixed. This occurs when the various factor levels are sampled from a larger population. Because the levels themselves are random variables, some assumptions and the method of contrasting the treatments (a multi-variable generalization of simple differences) differ from the fixed-effects model.

Mixed-effects models

A mixed-effects model (class III) contains experimental factors of both fixed and random-effects types, with appropriately different interpretations and analysis for the two types.

Example:

Teaching experiments could be performed by a college or university department to find a good introductory textbook, with each text considered a treatment. The fixed-effects model would compare a list of candidate texts. The random-effects model would determine whether important differences exist among a list of randomly selected texts. The mixed-effects model would compare the (fixed) incumbent texts to randomly selected alternatives.

Defining fixed and random effects has proven elusive, with competing definitions arguably leading toward a linguistic quagmire.

Assumptions

The analysis of variance has been studied from several approaches, the most common of which uses a linear model that relates the response to the treatments and blocks. Note that the model is linear in parameters but may be nonlinear across factor levels. Interpretation is easy when data is balanced across factors but much deeper understanding is needed for unbalanced data.

Textbook analysis using a normal distribution

The analysis of variance can be presented in terms of a linear model, which makes the following assumptions about the probability distribution of the responses:

- Independence of observations – this is an assumption of the model that simplifies the statistical analysis.

- Normality – the distributions of the residuals are normal.

- Equality (or "homogeneity") of variances, called homoscedasticity — the variance of data in groups should be the same.

The separate assumptions of the textbook model imply that the errors are independently, identically, and normally distributed for fixed effects models, that is, that the errors ($\varepsilon$) are independent and $\varepsilon \sim N(0, \sigma^2)$.

Randomization-based analysis

In a randomized controlled experiment, the treatments are randomly assigned to experimental units, following the experimental protocol. This randomization is objective and declared before the experiment is carried out. The objective random assignment is used to test the significance of the null hypothesis, following the ideas of C. S. Peirce and Ronald Fisher. This design-based analysis was discussed and developed by Francis J. Anscombe at Rothamsted Experimental Station and by Oscar Kempthorne at Iowa State University. Kempthorne and his students make an assumption of unit treatment additivity, which is discussed in the books of Kempthorne and David R. Cox.

Unit-treatment additivity

In its simplest form, the assumption of unit-treatment additivity states that the observed response $y_{i,j}$ from experimental unit $i$ when receiving treatment $j$ can be written as the sum of the unit's response $y_i$ and the treatment-effect $t_j$, that is

$$y_{i,j} = y_i + t_j.$$

The assumption of unit-treatment additivity implies that, for every treatment $j$, the $j$th treatment has exactly the same effect $t_j$ on every experimental unit.

The assumption of unit treatment additivity usually cannot be directly falsified, according to Cox and Kempthorne. However, many consequences of treatment-unit additivity can be falsified. For a randomized experiment, the assumption of unit-treatment additivity implies that the variance is constant for all treatments. Therefore, by contraposition, a necessary condition for unit-treatment additivity is that the variance is constant.
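A toy sketch of the additive model $y_{i,j} = y_i + t_j$ shows both consequences described above: every unit gets the same treatment effect, and the variance across units is the same under every treatment. The unit responses and treatment effects are hypothetical, and population variance is used only for simplicity. In a real experiment each unit typically receives only one treatment, so only consequences such as constant variance can be checked, not the full table built here.

```python
from statistics import pvariance

def additive_responses(unit_responses, treatment_effects):
    """Build y[i][j] = y_i + t_j under unit-treatment additivity."""
    return [[y_i + t_j for t_j in treatment_effects] for y_i in unit_responses]

# Hypothetical unit responses y_i and treatment effects t_j.
units = [4.0, 7.0, 5.5, 9.0]
effects = [0.0, 1.5, -2.0]
y = additive_responses(units, effects)

# Treatment j versus treatment 0 differs by t_j - t_0 on every unit ...
diffs = [[row[j] - row[0] for row in y] for j in range(len(effects))]
# ... and the variance across units is identical under every treatment.
variances = [pvariance([row[j] for row in y]) for j in range(len(effects))]
print(diffs)
print(variances)
```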

The use of unit treatment additivity and randomization is similar to the design-based inference that is standard in finite-population survey sampling.

Derived linear model

Kempthorne uses the randomization-distribution and the assumption of unit treatment additivity to produce a derived linear model, very similar to the textbook model discussed previously.

The test statistics of this derived linear model are closely approximated by the test statistics of an appropriate normal linear model, according to approximation theorems and simulation studies. However, there are differences. For example, the randomization-based analysis results in a small but (strictly) negative correlation between the observations. In the randomization-based analysis, there is no assumption of a normal distribution and certainly no assumption of independence. On the contrary, the observations are dependent!

The randomization-based analysis has the disadvantage that its exposition involves tedious algebra and extensive time. Since the randomization-based analysis is complicated and is closely approximated by the approach using a normal linear model, most teachers emphasize the normal linear model approach. Few statisticians object to model-based analysis of balanced randomized experiments.

Statistical models for observational data

However, when applied to data from non-randomized experiments or observational studies, model-based analysis lacks the warrant of randomization. For observational data, the derivation of confidence intervals must use subjective models, as emphasized by Ronald Fisher and his followers. In practice, the estimates of treatment effects from observational studies are often inconsistent. "Statistical models" and observational data are useful for suggesting hypotheses that should be treated very cautiously by the public.

Summary of assumptions

The normal-model based ANOVA analysis assumes the independence, normality and homogeneity of variances of the residuals. The randomization-based analysis assumes only the homogeneity of the variances of the residuals (as a consequence of unit-treatment additivity) and uses the randomization procedure of the experiment. Both these analyses require homoscedasticity, as an assumption for the normal-model analysis and as a consequence of randomization and additivity for the randomization-based analysis.

However, studies of processes that change variances rather than means (called dispersion effects) have been successfully conducted using ANOVA. There are no necessary assumptions for ANOVA in its full generality, but the F-test used for ANOVA hypothesis testing has assumptions and practical limitations which are of continuing interest.

Problems which do not satisfy the assumptions of ANOVA can often be transformed to satisfy the assumptions.

The property of unit-treatment additivity is not invariant under a "change of scale", so statisticians often use transformations to achieve unit-treatment additivity. If the response variable is expected to follow a parametric family of probability distributions, then the statistician may specify (in the protocol for the experiment or observational study) that the responses be transformed to stabilize the variance.

Also, a statistician may specify that logarithmic transforms be applied to the responses, which are believed to follow a multiplicative model.

According to Cauchy's functional equation theorem, the logarithm is the only continuous transformation that transforms real multiplication to addition.
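A small numeric sketch of why the logarithm helps: under a hypothetical multiplicative model (response = unit level × treatment factor, with factor 1 for the control), the treated-minus-control difference grows with the unit level on the raw scale but is the constant log(factor) on the log scale, i.e. the effect becomes additive. The model, the unit levels and the factor of 3 are assumptions chosen for illustration.

```python
import math

def treatment_differences(unit_levels, factor):
    """Treated-minus-control differences on the raw and log scales.

    Assumes a hypothetical multiplicative model: response = unit level * treatment
    factor, with factor 1.0 for the control.
    """
    raw = [u * factor - u * 1.0 for u in unit_levels]
    logged = [math.log(u * factor) - math.log(u * 1.0) for u in unit_levels]
    return raw, logged

raw, logged = treatment_differences([2.0, 5.0, 10.0], factor=3.0)
print(raw)     # differences grow with the unit level: not additive
print(logged)  # every entry is log(3) up to rounding: additive on the log scale
```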

Characteristics

ANOVA is used in the analysis of comparative experiments, those in which only the difference in outcomes is of interest. The statistical significance of the experiment is determined by a ratio of two variances. This ratio is independent of several possible alterations to the experimental observations: adding a constant to all observations does not alter significance, and neither does multiplying all observations by a (nonzero) constant. So the ANOVA statistical significance result is independent of constant bias and scaling errors as well as the units used in expressing observations.

In the era of mechanical calculation it was common to subtract a constant from all observations (when equivalent to dropping leading digits) to simplify data entry. This is an example of data coding.
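The invariance claims above can be checked with a minimal one-way F computation. In this sketch `one_way_f` is a hypothetical helper, the readings are invented, and the scale factor 2.54 merely stands in for a change of units.

```python
import math

def one_way_f(groups):
    """One-way ANOVA F statistic, MS_treatments / MS_error."""
    all_obs = [y for g in groups for y in g]
    k, n_total = len(groups), len(all_obs)
    grand = sum(all_obs) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_treat = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    if ss_error == 0:
        return math.inf  # no within-group variation at all
    return (ss_treat / (k - 1)) / (ss_error / (n_total - k))

# Hypothetical readings that all share the leading digits "10".
groups = [[1023.0, 1025.0, 1024.0], [1027.0, 1028.0, 1026.0], [1021.0, 1022.0, 1023.0]]

f_raw = one_way_f(groups)
# Data coding: subtract 1000 so that 1023 is entered as 23.
f_coded = one_way_f([[y - 1000.0 for y in g] for g in groups])
# Change of units: multiply every observation by the same constant.
f_scaled = one_way_f([[2.54 * y for y in g] for g in groups])
print(f_raw, f_coded, f_scaled)  # equal up to floating-point rounding
```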

Logic

The calculations of ANOVA can be characterized as computing a number of means and variances, dividing two variances and comparing the ratio to a handbook value to determine statistical significance. Calculating a treatment effect is then trivial: "the effect of any treatment is estimated by taking the difference between the mean of the observations which receive the treatment and the general mean".
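The quoted rule translates directly into code. The sketch below uses hypothetical balanced data; `treatment_effects` is an illustrative helper, not a library function. In a balanced design such as this one the estimated effects sum to zero.

```python
def treatment_effects(groups):
    """Effect of each treatment: its mean minus the grand mean of all observations."""
    all_obs = [y for ys in groups.values() for y in ys]
    grand = sum(all_obs) / len(all_obs)
    return {name: sum(ys) / len(ys) - grand for name, ys in groups.items()}

# Hypothetical balanced data: three treatments with three observations each.
data = {"A": [5.0, 6.0, 7.0], "B": [8.0, 9.0, 10.0], "C": [2.0, 3.0, 4.0]}
effects = treatment_effects(data)
print(effects)  # {'A': 0.0, 'B': 3.0, 'C': -3.0}
```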

Partitioning of the sum of squares

ANOVA uses traditional standardized terminology. The definitional equation of sample variance is

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(y_i - \bar{y})^2,$$

where the divisor is called the degrees of freedom (DF), the summation is called the sum of squares (SS), the result is called the mean square (MS) and the squared terms are deviations from the sample mean. ANOVA estimates three sample variances: a total variance based on all the observation deviations from the grand mean, an error variance based on all the observation deviations from their appropriate treatment means, and a treatment variance. The treatment variance is based on the deviations of treatment means from the grand mean, the result being multiplied by the number of observations in each treatment to account for the difference between the variance of observations and the variance of means.

The fundamental technique is a partitioning of the total sum of squares SS into components related to the effects used in the model. For example, for a simplified ANOVA with one type of treatment at different levels, the partition is

$$SS_\text{Total} = SS_\text{Error} + SS_\text{Treatments}.$$

The number of degrees of freedom DF can be partitioned in a similar way: one of these components (that for error) specifies a chi-squared distribution which describes the associated sum of squares, while the same is true for "treatments" if there is no treatment effect.

$$DF_\text{Total} = DF_\text{Error} + DF_\text{Treatments}$$
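A minimal sketch of both partitions for a one-way layout, using a small set of illustrative numbers (three treatment levels, six observations each). `anova_partition` is a hypothetical helper; because the data are whole numbers, the sums of squares come out exact and the identities hold without rounding.

```python
def anova_partition(groups):
    """Sums of squares (total, treatments, error) and their degrees of freedom."""
    all_obs = [y for g in groups for y in g]
    n_total, k = len(all_obs), len(groups)
    grand = sum(all_obs) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_total = sum((y - grand) ** 2 for y in all_obs)
    ss_treat = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    df = (n_total - 1, k - 1, n_total - k)  # (total, treatments, error)
    return (ss_total, ss_treat, ss_error), df

# Illustrative data: three treatment levels, six observations each.
groups = [
    [6.0, 8.0, 4.0, 5.0, 3.0, 4.0],
    [8.0, 12.0, 9.0, 11.0, 6.0, 8.0],
    [13.0, 9.0, 11.0, 8.0, 7.0, 12.0],
]
(ss_total, ss_treat, ss_error), (df_total, df_treat, df_error) = anova_partition(groups)
print(ss_total, ss_treat, ss_error)  # 152.0 84.0 68.0
print(df_total, df_treat, df_error)  # 17 2 15
```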

The F-test

The F-test is used for comparing the factors of the total deviation. For example, in one-way, or single-factor, ANOVA, statistical significance is tested for by comparing the F test statistic

$$F = \frac{\text{variance between treatments}}{\text{variance within treatments}} = \frac{MS_\text{Treatments}}{MS_\text{Error}} = \frac{SS_\text{Treatments}/(I-1)}{SS_\text{Error}/(n_T-I)},$$

where MS is mean square, $I$ is the number of treatments and $n_T$ is the total number of cases, to the F-distribution with $I-1$ and $n_T-I$ degrees of freedom. The F-distribution is a natural candidate because the test statistic is the ratio of two scaled sums of squares, each of which follows a scaled chi-squared distribution.
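Given the sums of squares, the statistic is two divisions and a ratio. The sketch below plugs in illustrative sums of squares from a balanced design with $I = 3$ treatments and $n_T = 18$ cases; `f_statistic` is a hypothetical helper name.

```python
def f_statistic(ss_treat, ss_error, n_treatments, n_total):
    """F = MS_treatments / MS_error, with I - 1 and n_T - I degrees of freedom."""
    df_treat = n_treatments - 1          # I - 1
    df_error = n_total - n_treatments    # n_T - I
    ms_treat = ss_treat / df_treat
    ms_error = ss_error / df_error
    return ms_treat / ms_error, (df_treat, df_error)

# Illustrative sums of squares: I = 3 treatments, n_T = 18 cases.
f, (d1, d2) = f_statistic(ss_treat=84.0, ss_error=68.0, n_treatments=3, n_total=18)
print(f"F = {f:.4f} on ({d1}, {d2}) degrees of freedom")  # F = 9.2647 on (2, 15) degrees of freedom
```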

The expected value of F is approximately $1 + n\sigma^2_\text{Treatment}/\sigma^2_\text{Error}$ (where $n$ is the treatment sample size), which is 1 for no treatment effect. As values of F increase above 1, the evidence is increasingly inconsistent with the null hypothesis. Two apparent experimental methods of increasing F are increasing the sample size and reducing the error variance by tight experimental controls.

There are two methods of concluding the ANOVA hypothesis test, both of which produce the same result:

- The textbook method is to compare the observed value of F with the critical value of F determined from tables. The critical value of F is a function of the degrees of freedom of the numerator and the denominator and the significance level (α). If F ≥ FCritical, the null hypothesis is rejected.

- The computer method calculates the probability (p-value) of a value of F greater than or equal to the observed value. The null hypothesis is rejected if this probability is less than or equal to the significance level (α).
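Both decision rules can be sketched with the standard library alone. The F-distribution tail uses the identity $P(F \ge f) = I_{d_2/(d_2 + d_1 f)}(d_2/2,\, d_1/2)$ with the regularized incomplete beta function, evaluated here by the usual continued-fraction (modified Lentz) method; the critical value is found by bisection. All helper names are hypothetical, and in practice a statistics library such as SciPy (`scipy.stats.f.sf` and `scipy.stats.f.ppf`) would normally be used instead.

```python
import math

def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))  # even step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))  # odd step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b

def f_sf(f, d1, d2):
    """Upper tail P(F >= f) of the F(d1, d2) distribution."""
    if f <= 0:
        return 1.0
    return reg_inc_beta(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))

def f_critical(alpha, d1, d2):
    """Critical value with upper-tail probability alpha, found by bisection."""
    lo, hi = 0.0, 1.0
    while f_sf(hi, d1, d2) > alpha:
        hi *= 2.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if f_sf(mid, d1, d2) > alpha:
            lo = mid
        else:
            hi = mid
    return hi

# Illustrative one-way result: F = 630/68 on (2, 15) degrees of freedom, alpha = 0.05.
f_obs, d1, d2, alpha = 630 / 68, 2, 15, 0.05
f_crit = f_critical(alpha, d1, d2)
p_value = f_sf(f_obs, d1, d2)
print(f"F = {f_obs:.3f}, F_crit = {f_crit:.3f}, p = {p_value:.4f}")
print("textbook method rejects H0:", f_obs >= f_crit)
print("p-value method rejects H0:", p_value <= alpha)
```

Because `f_sf` is decreasing in F, "F at or above the critical value" and "p-value at or below alpha" always lead to the same decision.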

The ANOVA F-test is known to be nearly optimal in the sense of minimizing false negative errors for a fixed rate of false positive errors (i.e. maximizing power for a fixed significance level). For example, to test the hypothesis that various medical treatments have exactly the same effect, the F-test's p-values closely approximate the permutation test's p-values: the approximation is particularly close when the design is balanced. Such permutation tests characterize tests with maximum power against all alternative hypotheses, as observed by Rosenbaum. The ANOVA F-test (of the null hypothesis that all treatments have exactly the same effect) is recommended as a practical test, because of its robustness against many alternative distributions.
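For comparison with the F-test's p-value, here is a minimal Monte Carlo sketch of a permutation (randomization) test: the treatment labels are repeatedly shuffled over the pooled observations and the p-value is the share of relabelings whose F is at least the observed F. It samples random relabelings rather than enumerating all of them, uses a fixed seed so results are reproducible, and counts the observed labeling itself; the data and helper names are illustrative assumptions.

```python
import math
import random

def one_way_f(groups):
    """One-way ANOVA F statistic, MS_treatments / MS_error."""
    all_obs = [y for g in groups for y in g]
    k, n_total = len(groups), len(all_obs)
    grand = sum(all_obs) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_treat = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    if ss_error == 0:
        return math.inf  # every group is constant
    return (ss_treat / (k - 1)) / (ss_error / (n_total - k))

def permutation_p_value(groups, n_perm=2000, seed=0):
    """Share of random relabelings whose F is at least the observed F."""
    rng = random.Random(seed)
    sizes = [len(g) for g in groups]
    pooled = [y for g in groups for y in g]
    f_obs = one_way_f(groups)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        relabeled, start = [], 0
        for size in sizes:  # cut the shuffled pool back into groups of the original sizes
            relabeled.append(pooled[start:start + size])
            start += size
        if one_way_f(relabeled) >= f_obs * (1 - 1e-12):  # tolerate rounding ties
            hits += 1
    # Count the observed labeling itself so the estimate is never exactly zero.
    return (hits + 1) / (n_perm + 1)

groups = [
    [6.0, 8.0, 4.0, 5.0, 3.0, 4.0],
    [8.0, 12.0, 9.0, 11.0, 6.0, 8.0],
    [13.0, 9.0, 11.0, 8.0, 7.0, 12.0],
]
print(permutation_p_value(groups))
```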

Extended logic

ANOVA consists of separable parts; partitioning sources of variance and hypothesis testing can be used individually. ANOVA is used to support other statistical tools. Regression is first used to fit more complex models to data, then ANOVA is used to compare models with the objective of selecting simple(r) models that adequately describe the data. "Such models could be fit without any reference to ANOVA, but ANOVA tools could then be used to make some sense of the fitted models, and to test hypotheses about batches of coefficients."

"[W]e think of the analysis of variance as a way of understanding and structuring multilevel models—not as an alternative to regression but as a tool for summarizing complex high-dimensional inferences ..."
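One common way to compare a simpler model with a more complex one that contains it is the extra-sum-of-squares F statistic, sketched below. This formula is standard regression practice rather than something stated in the text, and `nested_f` is a hypothetical name. One-way ANOVA is the special case in which the reduced model is the grand mean alone and the full model fits one mean per group; the numbers are illustrative.

```python
def nested_f(rss_reduced, df_reduced, rss_full, df_full):
    """Extra-sum-of-squares F statistic for a reduced model nested in a full model.

    rss_*: residual sums of squares; df_*: residual degrees of freedom.
    """
    numerator = (rss_reduced - rss_full) / (df_reduced - df_full)
    denominator = rss_full / df_full
    return numerator / denominator

# One-way ANOVA as a model comparison (illustrative numbers):
#   reduced model = grand mean only    -> residual SS = SS_total = 152 on 17 df
#   full model    = one mean per group -> residual SS = SS_error = 68 on 15 df
print(nested_f(152.0, 17, 68.0, 15))  # same F as MS_treatments / MS_error
```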

For a single factor

The simplest experiment suitable for ANOVA analysis is the completely randomized experiment with a single factor. More complex experiments with a single factor involve constraints on randomization and include completely randomized blocks and Latin squares (and variants: Graeco-Latin squares, etc.). The more complex experiments share many of the complexities of multiple factors. A relatively complete discussion of the analysis (models, data summaries, ANOVA table) of the completely randomized experiment is available.

For multiple factors

ANOVA generalizes to the study of the effects of multiple factors. When the experiment includes observations at all combinations of levels of each factor, it is termed factorial. Factorial experiments are more efficient than a series of single-factor experiments, and the efficiency grows as the number of factors increases. Consequently, factorial designs are heavily used.

The use of ANOVA to study the effects of multiple factors has a complication. In a 3-way ANOVA with factors x, y and z, the ANOVA model includes terms for the main effects (x, y, z) and terms for interactions (xy, xz, yz, xyz).

All terms require hypothesis tests. The proliferation of interaction terms increases the risk that some hypothesis test will produce a false positive by chance. Fortunately, experience says that high order interactions are rare.
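The growth in the number of terms, and the rough effect on the chance of at least one false positive, can be sketched as follows. The error-rate formula assumes the tests are independent, which is a simplification: the F-tests in one ANOVA share the same error mean square, so treat the figure as a rough guide only. Function names are hypothetical.

```python
from itertools import combinations

def anova_terms(factors):
    """Main effects and all interaction terms of a full factorial ANOVA model."""
    return ["".join(c) for r in range(1, len(factors) + 1) for c in combinations(factors, r)]

def chance_of_false_positive(n_tests, alpha=0.05):
    """P(at least one Type I error) if n_tests independent tests each use level alpha."""
    return 1 - (1 - alpha) ** n_tests

for factors in (["x", "y"], ["x", "y", "z"], ["w", "x", "y", "z"]):
    terms = anova_terms(factors)
    print(len(terms), terms, round(chance_of_false_positive(len(terms)), 3))
```

With k factors there are 2^k - 1 terms, so the three-factor case gives the seven terms listed in the text.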

The ability to detect interactions is a major advantage of multiple factor ANOVA. Testing one factor at a time hides interactions, but produces apparently inconsistent experimental results.

Caution is advised when encountering interactions; test interaction terms first and expand the analysis beyond ANOVA if interactions are found. Texts vary in their recommendations regarding the continuation of the ANOVA procedure after encountering an interaction. Interactions complicate the interpretation of experimental data. Neither the calculations of significance nor the estimated treatment effects can be taken at face value. "A significant interaction will often mask the significance of main effects." Graphical methods are recommended to enhance understanding. Regression is often useful. A lengthy discussion of interactions is available in Cox (1958). Some interactions can be removed (by transformations) while others cannot.

A variety of techniques are used with multiple factor ANOVA to reduce expense. One technique used in factorial designs is to minimize replication (possibly no replication with support of analytical trickery) and to combine groups when effects are found to be statistically (or practically) insignificant. An experiment with many insignificant factors may collapse into one with a few factors supported by many replications.

Worked numeric examples

Numerous fully worked numerical examples are available in standard textbooks and online. A simple case uses one-way (a single factor) analysis.

Associated analysis

Some analysis is required in support of the design of the experiment, while other analysis is performed after changes in the factors are formally found to produce statistically significant changes in the responses. Because experimentation is iterative, the results of one experiment alter plans for following experiments.

Preparatory analysis

The number of experimental units

In the design of an experiment, the number of experimental units is planned to satisfy the goals of the experiment. Experimentation is often sequential.

Early experiments are often designed to provide mean-unbiased estimates of treatment effects and of experimental error. Later experiments are often designed to test a hypothesis that a treatment effect has an important magnitude; in this case, the number of experimental units is chosen so that the experiment is within budget and has adequate power, among other goals.

Reporting sample size analysis is generally required in psychology. "Provide information on sample size and the process that led to sample size decisions." The analysis, which is written in the experimental protocol before the experiment is conducted, is examined in grant applications and by administrative review boards.

Besides the power analysis, there are less formal methods for selecting the number of experimental units. These include graphical methods based on limiting the probability of false negative errors, graphical methods based on an expected variation increase (above the residuals) and methods based on achieving a desired confidence interval.

Power analysis

Power analysis is often applied in the context of ANOVA in order to assess the probability of successfully rejecting the null hypothesis if we assume a certain ANOVA design, effect size in the population, sample size and significance level. Power analysis can assist in study design by determining what sample size would be required in order to have a reasonable chance of rejecting the null hypothesis when the alternative hypothesis is true.
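Power can also be estimated by simulation, which makes the roles of design, effect size, sample size and significance level explicit. The sketch below is one possible setup, not a standard procedure: it assumes normal errors with SD 1, `k` groups whose means are 0, shift, 2 × shift, and so on, and it estimates the critical value from F values simulated under the null hypothesis instead of reading it from a table. All names and defaults are hypothetical.

```python
import math
import random

def one_way_f(groups):
    """One-way ANOVA F statistic, MS_treatments / MS_error."""
    all_obs = [y for g in groups for y in g]
    k, n_total = len(groups), len(all_obs)
    grand = sum(all_obs) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_treat = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    if ss_error == 0:
        return math.inf
    return (ss_treat / (k - 1)) / (ss_error / (n_total - k))

def simulated_power(shift, n_per_group=10, k=3, alpha=0.05, n_sim=2000, seed=1):
    """Monte Carlo estimate of the one-way F-test's power under the stated assumptions."""
    rng = random.Random(seed)

    def simulate_f(delta):
        # Group j has mean j * delta; errors are normal with SD 1.
        groups = [[rng.gauss(j * delta, 1.0) for _ in range(n_per_group)] for j in range(k)]
        return one_way_f(groups)

    null_fs = sorted(simulate_f(0.0) for _ in range(n_sim))
    f_crit = null_fs[int((1 - alpha) * n_sim)]  # empirical upper-alpha point
    rejections = sum(simulate_f(shift) >= f_crit for _ in range(n_sim))
    return rejections / n_sim

for shift in (0.0, 0.25, 0.5, 1.0):
    print(f"shift={shift:4.2f}  estimated power={simulated_power(shift):.3f}")
```

With no shift the rejection rate should sit near alpha (the Type I error rate); it then rises with the effect size and, for a fixed effect, with the number of units per group.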

Effect size

Several standardized measures of effect have been proposed for ANOVA to summarize the strength of the association between a predictor(s) and the dependent variable or the overall standardized difference of the complete model. Standardized effect-size estimates facilitate comparison of findings across studies and disciplines. However, while standardized effect sizes are commonly used in much of the professional literature, a non-standardized measure of effect size that has immediately "meaningful" units may be preferable for reporting purposes.
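As one concrete example of each kind of measure, the sketch below computes eta squared (SS_treatments / SS_total, a widely used standardized measure chosen here for illustration; the text does not name a specific one) alongside a non-standardized measure, the largest difference between group means in the response's own units. The data are illustrative.

```python
def eta_squared(groups):
    """Standardized effect size: eta^2 = SS_treatments / SS_total."""
    all_obs = [y for g in groups for y in g]
    grand = sum(all_obs) / len(all_obs)
    means = [sum(g) / len(g) for g in groups]
    ss_total = sum((y - grand) ** 2 for y in all_obs)
    ss_treat = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    return ss_treat / ss_total

# Illustrative data: three treatment levels, six observations each.
groups = [
    [6.0, 8.0, 4.0, 5.0, 3.0, 4.0],
    [8.0, 12.0, 9.0, 11.0, 6.0, 8.0],
    [13.0, 9.0, 11.0, 8.0, 7.0, 12.0],
]
eta2 = eta_squared(groups)
means = [sum(g) / len(g) for g in groups]
# A non-standardized alternative: the spread of group means in the response's own units.
largest_gap = max(means) - min(means)
print(f"eta^2 = {eta2:.3f}; largest difference in means = {largest_gap} units")
```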

Follow-up analysis

It is always appropriate to carefully consider outliers. They have a disproportionate impact on statistical conclusions and are often the result of errors.

Model confirmation

It is prudent to verify that the assumptions of ANOVA have been met. Residuals are examined or analyzed to confirm homoscedasticity and gross normality.

Residuals should have the appearance of (zero mean normal distribution) noise when plotted as a function of anything, including time and modeled data values. Trends hint at interactions among factors or among observations. One rule of thumb: "If the largest standard deviation is less than twice the smallest standard deviation, we can use methods based on the assumption of equal standard deviations and our results will still be approximately correct."
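The quoted rule of thumb is easy to automate. This is a minimal sketch using sample standard deviations; `equal_sd_rule_of_thumb` is a hypothetical helper and the groups are illustrative.

```python
from statistics import stdev

def equal_sd_rule_of_thumb(groups):
    """True if the largest group SD is less than twice the smallest (the quoted rule)."""
    sds = [stdev(g) for g in groups]
    return max(sds) < 2 * min(sds), sds

ok, sds = equal_sd_rule_of_thumb([
    [6.0, 8.0, 4.0, 5.0, 3.0, 4.0],
    [8.0, 12.0, 9.0, 11.0, 6.0, 8.0],
    [13.0, 9.0, 11.0, 8.0, 7.0, 12.0],
])
print(ok, [round(s, 3) for s in sds])
```

A check like this complements, rather than replaces, plotting the residuals.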

Follow-up tests

A statistically significant effect in ANOVA is often followed up with one or more different follow-up tests. This can be done in order to assess which groups are different from which other groups or to test various other focused hypotheses. Follow-up tests are often distinguished in terms of whether they are planned (a priori) or post hoc. Planned tests are determined before looking at the data and post hoc tests are performed after looking at the data.

Often one of the "treatments" is no treatment at all, so that group can act as a control. Dunnett's test (a modification of the t-test) tests whether each of the other treatment groups has the same mean as the control.

Post hoc tests such as Tukey's range test most commonly compare every group mean with every other group mean and typically incorporate some method of controlling for Type I errors.

Comparisons, which are most commonly planned, can be either simple or compound. Simple comparisons compare one group mean with one other group mean. Compound comparisons typically compare two sets of group means where one set has two or more groups (e.g., compare the average of the means of groups A, B and C with the mean of group D). Comparisons can also look at tests of trend, such as linear and quadratic relationships, when the independent variable involves ordered levels.
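Both kinds of comparison can be written as a linear contrast, a weighted sum of group means whose weights sum to zero. A sketch, assuming the pooled within-group mean square from the one-way ANOVA is used as the error variance: the compound comparison above uses weights (1/3, 1/3, 1/3, -1), and a linear trend over four equally spaced ordered levels uses the orthogonal-polynomial weights (-3, -1, 1, 3). The function name is hypothetical, and the code returns a t statistic with its degrees of freedom but does not compute a p-value or apply any multiple-comparison adjustment.

```python
import math
import statistics


def contrast(groups, coefficients):
    """Estimate, standard error, t statistic and df for sum(c_i * mean_i).

    The standard error uses the pooled within-group mean square (MSE), with N - k df.
    """
    if abs(sum(coefficients)) > 1e-12:
        raise ValueError("contrast coefficients must sum to zero")
    means = [statistics.fmean(g) for g in groups]
    n_total, k = sum(len(g) for g in groups), len(groups)
    mse = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g) / (n_total - k)
    estimate = sum(c * m for c, m in zip(coefficients, means))
    se = math.sqrt(mse * sum(c ** 2 / len(g) for c, g in zip(coefficients, groups)))
    return estimate, se, estimate / se, n_total - k
```

With groups A = `[1, 2, 3]`, B = `[2, 3, 4]`, C = `[3, 4, 5]` and D = `[7, 8, 9]`, the compound contrast estimates -5 (average of A, B, C minus D) with t = -7.5 on 8 degrees of freedom.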

Following ANOVA with pair-wise multiple-comparison tests has been criticized on several grounds. There are many such tests (10 in one table) and recommendations regarding their use are vague or conflicting.

Study designs

There are several types of ANOVA. Many statisticians base ANOVA on the design of the experiment, especially on the protocol that specifies the random assignment of treatments to subjects; the protocol's description of the assignment mechanism should include a specification of the structure of the treatments and of any blocking. It is also common to apply ANOVA to observational data using an appropriate statistical model.

Some popular designs use the following types of ANOVA:

- One-way ANOVA is used to test for differences among the means of two or more independent groups, e.g. different levels of urea application in a crop, different levels of antibiotic action on several different bacterial species, or different levels of effect of some medicine on groups of patients. However, if these groups are not independent and there is an order in the groups (such as mild, moderate and severe disease), or in the dose of a drug (such as 5 mg/mL, 10 mg/mL, 20 mg/mL) given to the same group of patients, then a linear trend estimation should be used. Typically, however, one-way ANOVA is used to test for differences among at least three groups, since the two-group case can be covered by a t-test.[65] When there are only two means to compare, the t-test and the ANOVA F-test are equivalent; the relation between ANOVA and t is given by F = t².

- Factorial ANOVA is used when the experimenter wants to study the interaction effects among the treatments.

- Repeated measures ANOVA is used when the same subjects are used for each treatment (e.g., in a longitudinal study).

- Multivariate analysis of variance (MANOVA) is used when there is more than one response variable.
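The relation F = t² for two groups can be checked numerically. This sketch assumes the pooled-variance (equal-variance) two-sample t-test, which is the version that matches one-way ANOVA; the function names are illustrative.

```python
import math
import statistics


def pooled_t(a, b):
    """Two-sample t statistic using a pooled variance estimate (equal variances assumed)."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(sp2 * (1 / na + 1 / nb))


def one_way_f(groups):
    """One-way ANOVA F statistic: MS_between / MS_within."""
    means = [statistics.fmean(g) for g in groups]
    all_obs = [x for g in groups for x in g]
    grand = statistics.fmean(all_obs)
    k, n_total = len(groups), len(all_obs)
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))
```

For groups `[1, 2, 3]` and `[4, 5, 6]`, both `one_way_f` and the square of `pooled_t` equal 13.5.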

Cautions

Balanced experiments (those with an equal sample size for each treatment) are relatively easy to interpret; unbalanced experiments offer more complexity. For single-factor (one-way) ANOVA, the adjustment for unbalanced data is easy, but the unbalanced analysis lacks both robustness and power.

For more complex designs the lack of balance leads to further complications. "The orthogonality property of main effects and interactions present in balanced data does not carry over to the unbalanced case. This means that the usual analysis of variance techniques do not apply. Consequently, the analysis of unbalanced factorials is much more difficult than that for balanced designs."

In the general case, "The analysis of variance can also be applied to unbalanced data, but then the sums of squares, mean squares, and F-ratios will depend on the order in which the sources of variation are considered."
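The order dependence can be seen in a small two-factor example. This sketch, assuming two factors A and B with two levels each coded as 0/1 indicators, fits least-squares models by solving the normal equations in plain Python and computes sequential sums of squares: SS(A) entered first, and SS(A | B) entered after B. The data values and function names are made up for illustration.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def rss(columns, y):
    """Residual sum of squares of the least-squares fit of y on the given design columns."""
    p = len(columns)
    xtx = [[sum(u * v for u, v in zip(columns[i], columns[j])) for j in range(p)] for i in range(p)]
    xty = [sum(u * v for u, v in zip(columns[i], y)) for i in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(beta[j] * columns[j][k] for j in range(p)) for k in range(len(y))]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))


def ss_for_a(a2, b2, y):
    """Sequential SS for factor A: entered first, and entered after factor B."""
    one = [1.0] * len(y)
    a_first = rss([one], y) - rss([one, a2], y)
    a_after_b = rss([one, b2], y) - rss([one, b2, a2], y)
    return a_first, a_after_b


# Unbalanced cells (3, 1, 1, 3 observations): the two orders disagree.
print(ss_for_a([0, 0, 0, 0, 1, 1, 1, 1], [0, 0, 0, 1, 0, 1, 1, 1], [1, 2, 3, 5, 4, 8, 9, 10]))
```

With these unbalanced cell counts, SS(A) is 50 when A is entered first but 13.5 when it is entered after B. With two observations in every cell the two values coincide, because the indicator columns are then orthogonal after centering.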

The simplest techniques for handling unbalanced data restore balance by either throwing out data or by synthesizing missing data. More complex techniques use regression.

ANOVA is (in part) a test of statistical significance. The American Psychological Association holds the view that simply reporting statistical significance is insufficient and that reporting confidence bounds is preferred.

While ANOVA is conservative (in maintaining a significance level) against multiple comparisons in one dimension, it is not conservative against comparisons in multiple dimensions.

Generalizations

ANOVA is considered to be a special case of linear regression, which in turn is a special case of the general linear model. All consider the observations to be the sum of a model (fit) and a residual (error) to be minimized.
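For one-way ANOVA the least-squares fit assigns each observation its group mean, so the "fit plus residual" view can be checked directly. This sketch, with an illustrative function name, splits each observation into fit and residual and confirms that the total sum of squares equals the model sum of squares plus the residual sum of squares.

```python
import statistics


def decompose(groups):
    """Split each observation into fit (its group mean) plus residual; return the sums of squares."""
    all_obs = [x for g in groups for x in g]
    grand = statistics.fmean(all_obs)
    fits, resids = [], []
    for g in groups:
        m = statistics.fmean(g)
        fits.extend([m] * len(g))
        resids.extend(x - m for x in g)
    ss_total = sum((x - grand) ** 2 for x in all_obs)
    ss_model = sum((f - grand) ** 2 for f in fits)
    ss_resid = sum(r ** 2 for r in resids)
    return fits, resids, ss_total, ss_model, ss_resid
```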

The Kruskal–Wallis test and the Friedman test are nonparametric tests, which do not rely on an assumption of normality.
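As an illustration of the rank-based approach, here is a sketch of the Kruskal-Wallis H statistic: all observations are ranked together (ties get the average of the ranks they span), and H measures how far the groups' rank sums depart from what equal distributions would give, with the usual correction for ties. The function names are illustrative, and the p-value (usually from a chi-squared approximation with k - 1 degrees of freedom) is not computed here.

```python
from collections import Counter


def average_ranks(values):
    """1-based ranks of `values`; tied values share the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic, corrected for ties."""
    values = [x for g in groups for x in g]
    n = len(values)
    ranks = average_ranks(values)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over runs of tied values.
    tie_term = sum(t ** 3 - t for t in Counter(values).values())
    if tie_term == n ** 3 - n:
        raise ValueError("H is undefined when every observation has the same value")
    return h / (1 - tie_term / (n ** 3 - n))
```

For groups `[1, 2, 3]` and `[4, 5, 6]` (no ties) this gives H = 27/7, about 3.86.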

Connection to linear regression

Below we make clear the connection between multi-way ANOVA and linear regression.

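Linearly re-order the data so that the $k$-th observation is associated with a response $y_k$ and factors $Z_{k,b}$, where $b \in \{1, 2, \ldots, B\}$ denotes the different factors and $B$ is the total number of factors. In one-way ANOVA $B = 1$ and in two-way ANOVA $B = 2$. Furthermore, we assume the $b$-th factor has $I_b$ levels, namely $\{1, 2, \ldots, I_b\}$. Now, we can one-hot encode the factors into the $\sum_{b=1}^{B} I_b$ dimensional vector $v_k$.

The one-hot encoding function $g_b : \{1, 2, \ldots, I_b\} \to \{0, 1\}^{I_b}$ is defined such that the $i$-th entry of $g_b(Z_{k,b})$ is

$$g_b(Z_{k,b})_i = \begin{cases} 1 & \text{if } i = Z_{k,b} \\ 0 & \text{otherwise.} \end{cases}$$

The vector $v_k$ is the concatenation of all of the above vectors for all $b$. Thus, $v_k = [g_1(Z_{k,1}), g_2(Z_{k,2}), \ldots, g_B(Z_{k,B})]$. In order to obtain a fully general $B$-way interaction ANOVA we must also concatenate every additional interaction term in the vector $v_k$ and then add an intercept term. Let that vector be $X_k$.

With this notation in place, we now have the exact connection with linear regression. We simply regress the response $y_k$ against the vector $X_k$.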

However, there is a concern about identifiability: within each factor the one-hot entries sum to 1, which duplicates the intercept column, so the parameters are not uniquely determined. In order to overcome such issues we assume that the sum of the parameters within each set of interactions is equal to zero. From here, one can use F-statistics or other methods to determine the relevance of the individual factors.
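A one-factor sketch of why the constraint is needed: with fitted values $\mu + \alpha_i$, adding a constant to every $\alpha_i$ and subtracting it from $\mu$ leaves every prediction unchanged, so the data cannot distinguish the two parameter sets. Re-centering the effects to sum to zero picks one representative. The names `mu`, `alphas`, `predictions` and `to_sum_to_zero` are illustrative.

```python
def predictions(mu, alphas):
    """Fitted value mu + alpha_i for each level i of a single factor."""
    return [mu + a for a in alphas]


def to_sum_to_zero(mu, alphas):
    """Shift the factor effects so they sum to zero, absorbing the shift into the intercept."""
    shift = sum(alphas) / len(alphas)
    return mu + shift, [a - shift for a in alphas]
```

The parameter sets $(\mu = 5, \alpha = [1, -1, 0])$ and $(\mu = 4, \alpha = [2, 0, 1])$ both predict `[6.0, 4.0, 5.0]`, and `to_sum_to_zero` maps both to the first one.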

Example

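We can consider the 2-way interaction example where we assume that the first factor has 2 levels and the second factor has 3 levels.

Define $a_i = 1$ if $Z_{k,1} = i$ and $b_i = 1$ if $Z_{k,2} = i$ (and 0 otherwise), i.e. $a$ is the one-hot encoding of the first factor and $b$ is the one-hot encoding of the second factor.

With that,

$$X_k = [a_1, a_2, b_1, b_2, b_3, a_1 \times b_1, a_1 \times b_2, a_1 \times b_3, a_2 \times b_1, a_2 \times b_2, a_2 \times b_3, 1]$$

where the last term is an intercept term. For a more concrete example suppose that $Z_{k,1} = 2$ and $Z_{k,2} = 1$.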

Then $g_1(Z_{k,1}) = [0, 1]$ and $g_2(Z_{k,2}) = [1, 0, 0]$, so $v_k = [g_1(Z_{k,1}), g_2(Z_{k,2})] = [0, 1, 1, 0, 0]$; appending the six interaction products and the intercept term gives

$$X_k = [0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1]$$
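The construction of $X_k$ can be sketched in a few lines. This assumes 1-based factor levels as in the text, places the main-effect blocks first, then the interaction blocks, then the intercept. The ordering of interaction blocks when $B \ge 3$ (all pairs, then all triples, and so on) is an assumption, since the text does not fix it. For $B = 2$ it reproduces the example above. The function names are illustrative.

```python
from itertools import combinations, product
from math import prod


def one_hot(level, n_levels):
    """g_b: map a 1-based level in {1, ..., I_b} to a 0/1 vector of length I_b."""
    return [1 if i == level else 0 for i in range(1, n_levels + 1)]


def design_vector(z, levels):
    """Build X_k from factor levels z = (Z_k1, ..., Z_kB) and level counts levels = (I_1, ..., I_B)."""
    codes = [one_hot(zb, ib) for zb, ib in zip(z, levels)]
    x = [entry for code in codes for entry in code]  # v_k: concatenated main-effect encodings
    for size in range(2, len(codes) + 1):
        for subset in combinations(codes, size):
            # Interaction block: product of one entry from each factor in the subset,
            # with the first factor's index varying slowest (a1*b1, a1*b2, ..., a2*b3).
            x.extend(prod(entries) for entries in product(*subset))
    x.append(1)  # intercept term
    return x
```

`design_vector([2, 1], [2, 3])` returns `[0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1]`, matching the worked example.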
