The neuroscience of music is the scientific study of brain-based mechanisms involved in the cognitive processes underlying music. The relevant behaviours include music listening, performing, composing, reading, writing, and ancillary activities. The field is also increasingly concerned with the brain basis for musical aesthetics and musical emotion. Scientists working in this field may have training in cognitive neuroscience, neurology, neuroanatomy, psychology, music theory, computer science, and other relevant fields.

The cognitive neuroscience of music represents a significant branch of music psychology, and is distinguished from related fields such as cognitive musicology in its reliance on direct observations of the brain and use of such techniques as functional magnetic resonance imaging (fMRI), transcranial magnetic stimulation (TMS), magnetoencephalography (MEG), electroencephalography (EEG), and positron emission tomography (PET).

Neurological bases

Auditory pathway

Children who study music have shown increased development of the auditory pathway after only two years of study. This development could accelerate language and reading development.

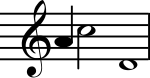

Pitch

Successive parts of the tonotopically organized basilar membrane in the cochlea resonate to corresponding frequency bandwidths of incoming sound. The hair cells in the cochlea release neurotransmitter as a result, causing action potentials down the auditory nerve. The auditory nerve then leads to several layers of synapses at numerous nuclei in the auditory brainstem.

These nuclei are also tonotopically organized, although how this tonotopy is achieved after the cochlea is not well understood. Tonotopy is generally maintained up to the primary auditory cortex in mammals; however, cells in primary and non-primary auditory cortex are often found to have spatio-temporal receptive fields, rather than responding strictly to, or phase-locking their action potentials to, narrow frequency regions.

A widely postulated mechanism for pitch processing in the early central auditory system is the phase-locking and mode-locking of action potentials to frequencies in a stimulus. Phase-locking to stimulus frequencies has been shown in the auditory nerve, the cochlear nucleus, the inferior colliculus, and the auditory thalamus. By phase- and mode-locking in this way, the auditory brainstem is known to preserve a good deal of the temporal and low-passed frequency information from the original sound, as is evident when the auditory brainstem response is measured using EEG. This temporal preservation offers one direct argument for the temporal theory of pitch perception and an indirect argument against the place theory of pitch perception.
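Phase-locking of this kind is commonly quantified with vector strength (also called the synchronization index): each spike is assigned its phase within the stimulus cycle, and the length of the average phase vector is taken. The sketch below is a minimal Python illustration, assuming spike times in seconds and a single sinusoidal stimulus frequency; the function name and both simulated spike trains are hypothetical, not recordings from the structures named above.

```python
import cmath
import math
import random


def vector_strength(spike_times_s, freq_hz):
    """Vector strength of spike times relative to a sinusoid at freq_hz.

    Each spike is mapped to its phase within the stimulus cycle and treated as a
    unit vector; the length of the mean vector is 1.0 for perfect phase-locking
    and close to 0.0 when spikes are spread evenly across the cycle.
    """
    if not spike_times_s:
        raise ValueError("need at least one spike")
    total = sum(cmath.exp(2j * math.pi * freq_hz * t) for t in spike_times_s)
    return abs(total) / len(spike_times_s)


rng = random.Random(0)
FREQ_HZ = 200.0          # hypothetical pure-tone frequency
PERIOD_S = 1.0 / FREQ_HZ

# Hypothetical fibre that fires once per cycle near the same phase, with small jitter.
locked = [k * PERIOD_S + 0.25 * PERIOD_S + rng.gauss(0.0, 0.02 * PERIOD_S) for k in range(200)]
# Hypothetical fibre that fires at random times over 1 s, ignoring the stimulus phase.
unlocked = [rng.uniform(0.0, 1.0) for _ in range(200)]

print(f"locked:   {vector_strength(locked, FREQ_HZ):.2f}")
print(f"unlocked: {vector_strength(unlocked, FREQ_HZ):.2f}")
```

The locked train scores close to 1 and the unlocked train close to 0; the measure ignores spike rate and captures only how consistently spikes fall at the same point in the stimulus cycle.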

Melody processing in the secondary auditory cortex

Studies suggest that individuals can automatically detect a difference or anomaly in a melody, such as an out-of-tune pitch, that does not fit with their previous musical experience. This automatic processing occurs in the secondary auditory cortex. Brattico, Tervaniemi, Naatanen, and Peretz (2006) performed one such study to determine whether the detection of tones that do not fit an individual's expectations can occur automatically. They recorded event-related potentials (ERPs) in nonmusicians who were presented with unfamiliar melodies containing either an out-of-tune pitch or an out-of-key pitch, while the participants were either distracted from the sounds or attending to the melody. Both conditions revealed an early frontal negativity independent of where attention was directed. This negativity originated in the auditory cortex, more precisely in the supratemporal lobe (which corresponds with the secondary auditory cortex), with greater activity in the right hemisphere. The negativity response was larger for out-of-tune pitches than for out-of-key pitches, and ratings of musical incongruity were higher for melodies with out-of-tune pitches than for those with out-of-key pitches. In the focused-attention condition, out-of-key and out-of-tune pitches also produced a late parietal positivity. The findings of Brattico et al. (2006) suggest that there is automatic and rapid processing of melodic properties in the secondary auditory cortex.
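ERP effects such as the early frontal negativity described above are typically measured by averaging many stimulus-locked EEG epochs per condition and comparing the averages, for example incongruous tones against congruous ones. The Python sketch below illustrates that general idea with simulated data. The 1 ms sampling interval, the 100 to 199 ms window, the 2 µV shift, the noise level and the helper names are all hypothetical and are not taken from Brattico et al. (2006).

```python
import random

N_SAMPLES = 300  # 0-299 ms after tone onset at an assumed 1 ms sampling interval


def average_erp(trials):
    """Average epochs sample by sample; activity not time-locked to the tone tends to cancel out."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]


def difference_wave(incongruous_trials, congruous_trials):
    """Incongruous-minus-congruous ERP; a negative stretch marks an extra negativity."""
    return [a - b for a, b in zip(average_erp(incongruous_trials), average_erp(congruous_trials))]


def simulate_epoch(rng, negativity_uv):
    """Hypothetical epoch: Gaussian noise plus a flat negative shift from 100 to 199 ms."""
    return [(-negativity_uv if 100 <= t < 200 else 0.0) + rng.gauss(0.0, 5.0)
            for t in range(N_SAMPLES)]


rng = random.Random(42)
congruous = [simulate_epoch(rng, 0.0) for _ in range(200)]
incongruous = [simulate_epoch(rng, 2.0) for _ in range(200)]

diff = difference_wave(incongruous, congruous)
window_mean = sum(diff[100:200]) / 100
baseline_mean = sum(diff[0:100]) / 100
print(f"mean difference 100-199 ms: {window_mean:.2f} uV; 0-99 ms: {baseline_mean:.2f} uV")
```

Averaging 200 epochs per condition shrinks the noise by roughly the square root of 200, so the 2 µV shift, invisible in single trials, appears in the 100 to 199 ms window of the difference wave while the earlier window stays near zero.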

The finding that pitch incongruities were detected automatically, even in unfamiliar melodies, suggests that incoming information is automatically compared with long-term knowledge of musical scale properties, such as culturally influenced rules of musical structure (common chord progressions, scale patterns, etc.), and with individual expectations of how the melody should proceed. The auditory area, which processes the sound of the music, is located in the temporal lobe. The temporal lobe deals with the recognition and perception of auditory stimuli, memory, and speech (Kinser, 2012).

Role of right auditory cortex in fine pitch resolution

The primary auditory cortex is one of the main areas associated with superior pitch resolution.

The right secondary auditory cortex has finer pitch resolution than the left. Hyde, Peretz and Zatorre (2008) used functional magnetic resonance imaging (fMRI) to test the involvement of right and left auditory cortical regions in the frequency processing of melodic sequences.

As well as finding superior pitch resolution in the right secondary auditory cortex, the study identified the planum temporale (PT) in the secondary auditory cortex and the primary auditory cortex in the medial section of Heschl's gyrus (HG) as specifically involved.

Many neuroimaging studies have found evidence of the importance of right secondary auditory regions in aspects of musical pitch processing, such as melody.

Many of these studies, such as one by Patterson, Uppenkamp, Johnsrude and Griffiths (2002), also find evidence of a hierarchy of pitch processing. In an fMRI study, Patterson et al. (2002) used spectrally matched sounds that produced no pitch, a fixed pitch or a melody, and found that all conditions activated HG and PT. Sounds with pitch activated more of these regions than sounds without. When a melody was produced, activation spread to the superior temporal gyrus (STG) and planum polare (PP). These results support the existence of a pitch processing hierarchy.

Rhythm

The belt and parabelt areas of the right hemisphere are involved in processing rhythm. When individuals are preparing to tap out a rhythm of regular interval ratios (1:2 or 1:3), the left frontal cortex, left parietal cortex, and right cerebellum are all activated. With more difficult rhythms, such as 1:2.5, more areas in the cerebral cortex and cerebellum are involved. EEG recordings have also shown a relationship between brain electrical activity and rhythm perception. Snyder and Large (2005) examined rhythm perception in human subjects and found that activity in the gamma band (20–60 Hz) corresponds to the beats in a simple rhythm.

Snyder and Large found two types of gamma activity: evoked and induced. Evoked gamma activity appeared after the onset of each tone in the rhythm; it was phase-locked (its peaks and troughs were directly related to the exact onset of the tone) and did not appear when a gap (missed beat) was present in the rhythm. Induced gamma activity, which was not phase-locked, also corresponded with each beat. However, induced gamma activity did not subside when a gap was present in the rhythm, suggesting that it may serve as a kind of internal metronome independent of auditory input. The motor and auditory areas are both located in the cerebrum; the motor area processes the rhythm of the music (Dean, 2013).
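The distinction between evoked and induced activity follows from how trials are combined: phase-locked activity survives averaging of the raw waveforms across trials, whereas activity with a random phase on each trial cancels out in that average and only shows up when power is computed per trial before averaging. The toy Python simulation below illustrates that logic. The 40 Hz frequency, 1 kHz sampling rate, 100 ms window and trial count are assumptions chosen for illustration, and this is not the analysis pipeline used by Snyder and Large.

```python
import math
import random

FS_HZ = 1000      # assumed sampling rate
GAMMA_HZ = 40     # assumed oscillation frequency inside the 20-60 Hz band
N_SAMPLES = 100   # 100 ms window after each tone onset (4 full 40 Hz cycles)
N_TRIALS = 200


def gamma_burst(phase_rad):
    """One trial: a unit-amplitude 40 Hz oscillation starting at the given phase."""
    return [math.sin(2 * math.pi * GAMMA_HZ * n / FS_HZ + phase_rad) for n in range(N_SAMPLES)]


def mean_power(signal):
    return sum(x * x for x in signal) / len(signal)


def average_trials(trials):
    return [sum(samples) / len(trials) for samples in zip(*trials)]


def evoked_and_total_power(trials):
    """Evoked: average the waveforms first, then take power.
    Total: take power in each trial first, then average (keeps non-phase-locked activity)."""
    evoked = mean_power(average_trials(trials))
    total = sum(mean_power(t) for t in trials) / len(trials)
    return evoked, total


rng = random.Random(1)
phase_locked = [gamma_burst(0.0) for _ in range(N_TRIALS)]                        # evoked-like
not_locked = [gamma_burst(rng.uniform(0, 2 * math.pi)) for _ in range(N_TRIALS)]  # induced-like

print("phase-locked: evoked=%.3f total=%.3f" % evoked_and_total_power(phase_locked))
print("not locked:   evoked=%.3f total=%.3f" % evoked_and_total_power(not_locked))
```

Both sets of simulated trials carry the same total power, but only the phase-locked set keeps it in the averaged waveform. This mirrors why induced gamma, which is not tied to tone onset, is invisible in an ordinary average yet still detectable in per-trial power.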

The primary motor area of the brain lies in the frontal lobe, directly in front of the parietal lobe; the parietal lobe deals with orientation, recognition, and perception.

Tonality

Tonality describes the relationships between the elements of melody and harmony – tones, intervals, chords, and scales. These relationships are often characterised as hierarchical, such that one of the elements dominates or attracts another. They occur both within and between every type of element, creating a rich and time-varying percept between tones and their melodic, harmonic, and chromatic contexts. In one conventional sense, tonality refers to just the major and minor scale types – examples of scales whose elements are capable of maintaining a consistent set of functional relationships. The most important functional relationship is that of the tonic note and the tonic chord with the rest of the scale. The tonic is the element which tends to assert its dominance and attraction over all others, and it functions as the ultimate point of attraction, rest and resolution for the scale.

The right auditory cortex is primarily involved in perceiving pitch and aspects of harmony, melody and rhythm. One study by Petr Janata found tonality-sensitive areas in the medial prefrontal cortex, the cerebellum, the superior temporal sulci of both hemispheres and the superior temporal gyri (with a skew towards the right hemisphere).

Music production and performance

Motor control functions

Musical performance usually involves at least three elementary motor control functions: timing, sequencing, and spatial organization of motor movements. Accuracy in timing of movements is related to musical rhythm. Rhythm, the pattern of temporal intervals within a musical measure or phrase, in turn creates the perception of stronger and weaker beats. Sequencing and spatial organization relate to the expression of individual notes on a musical instrument.

These functions and their neural mechanisms have been investigated separately in many studies, but little is known about how they interact in producing a complex musical performance. Studying musical performance requires examining them together.

Timing

Although neural mechanisms involved in timing movement have been studied rigorously over the past 20 years, much remains controversial. The ability to phrase movements in precise time has been attributed to a neural metronome or clock mechanism in which time is represented through oscillations or pulses.

An opposing hypothesis holds that precise timing is instead an emergent property of the kinematics of movement itself.

Kinematics refers to the parameters of movement through space without reference to forces (for example, direction, velocity and acceleration).

Functional neuroimaging studies, as well as studies of brain-damaged patients, have linked movement timing to several cortical and sub-cortical regions, including the cerebellum, basal ganglia and supplementary motor area (SMA).

Specifically, the basal ganglia and possibly the SMA have been implicated in interval timing at longer timescales (1 second and above), while the cerebellum may be more important for controlling motor timing at shorter timescales (milliseconds).

Furthermore, these results indicate that motor timing is not controlled by a single brain region, but by a network of regions that control specific parameters of movement and that depend on the relevant timescale of the rhythmic sequence.

Sequencing

Motor sequencing has been explored in terms of either the ordering of individual movements, such as finger sequences for key presses, or the coordination of subcomponents of complex multi-joint movements. Implicated in this process are various cortical and sub-cortical regions, including the basal ganglia, the SMA and the pre-SMA, the cerebellum, and the premotor and prefrontal cortices. All are involved in the production and learning of motor sequences, but there is no explicit evidence of their specific contributions or of how they interact with one another.

In animals, neurophysiological studies have demonstrated an interaction between the frontal cortex and the basal ganglia during the learning of movement sequences. Human neuroimaging studies have also emphasized the contribution of the basal ganglia to well-learned sequences.

The cerebellum is arguably important for sequence learning and for the integration of individual movements into unified sequences, while the pre-SMA and SMA have been shown to be involved in organizing or chunking of more complex movement sequences.

Chunking, defined as the re-organization or re-grouping of movement sequences into smaller sub-sequences during performance, is thought to facilitate the smooth performance of complex movements and to improve motor memory.

Lastly, the premotor cortex has been shown to be involved in tasks that require the production of relatively complex sequences, and it may contribute to motor prediction.

Spatial organization

Few studies of complex motor control have distinguished between sequential and spatial organization, yet expert musical performances demand not only precise sequencing but also spatial organization of movements.

Studies in animals and humans have established the involvement of parietal, sensory–motor and premotor cortices in the control of movements when the integration of spatial, sensory and motor information is required. Few studies so far have explicitly examined the role of spatial processing in the context of musical tasks.

Auditory-motor interactions

Feedforward and feedback interactions

An auditory–motor interaction may be loosely defined as any engagement of or communication between the two systems. Two classes of auditory–motor interaction are "feedforward" and "feedback".

In feedforward interactions, it is the auditory system that predominantly influences the motor output, often in a predictive way. An example is the phenomenon of tapping to the beat, where the listener anticipates the rhythmic accents in a piece of music. Another example is the effect of music on movement disorders: rhythmic auditory stimuli have been shown to improve walking ability in Parkinson's disease and stroke patients.

Feedback interactions are particularly relevant in playing an instrument such as a violin, or in singing, where pitch is variable and must be continuously controlled. If auditory feedback is blocked, musicians can still execute well-rehearsed pieces, but expressive aspects of performance are affected. When auditory feedback is experimentally manipulated by delays or distortions, motor performance is significantly altered: asynchronous feedback disrupts the timing of events, whereas alteration of pitch information disrupts the selection of appropriate actions, but not their timing. This suggests that disruptions occur because both actions and percepts depend on a single underlying mental representation.

Models of auditory–motor interactions

Several models of auditory–motor interactions have been advanced. The model of Hickok and Poeppel, which is specific to speech processing, proposes that a ventral auditory stream maps sounds onto meaning, whereas a dorsal stream maps sounds onto articulatory representations. They and others suggest that posterior auditory regions at the parieto-temporal boundary are crucial parts of the auditory–motor interface, mapping auditory representations onto motor representations of speech, and onto melodies.

Mirror/echo neurons and auditory–motor interactions

The mirror neuron system has an important role in neural models of sensory–motor integration. There is considerable evidence that neurons respond to both actions and the accumulated observation of actions. One proposed explanation for this understanding of actions is that visual representations of actions are mapped onto the observer's own motor system.

Some mirror neurons are activated both by the observation of goal-directed actions and by the associated sounds produced during the action. This suggests that the auditory modality can access the motor system. While these auditory–motor interactions have mainly been studied for speech processes, focusing on Broca's area and the ventral premotor cortex (vPMC), as of 2011 experiments had begun to shed light on how these interactions are needed for musical performance. Results point to a broader involvement of the dorsal premotor cortex (dPMC) and other motor areas.

Music and language

Certain aspects of language and melody have been shown to be processed in nearly identical functional brain areas. Brown, Martinez and Parsons (2006) examined the neurological structural similarities between music and language. Using positron emission tomography (PET), they found that both linguistic and melodic phrases produced activation in almost identical functional brain areas. These areas included the primary motor cortex, supplementary motor area, Broca's area, anterior insula, primary and secondary auditory cortices, temporal pole, basal ganglia, ventral thalamus and posterior cerebellum.

Differences were found in lateralization tendencies, as language tasks favoured the left hemisphere, but the majority of activations were bilateral, which produced significant overlap across modalities.

Syntactic information in music and language has been shown to be processed by similar mechanisms in the brain. Jentschke, Koelsch, Sallat and Friederici (2008) conducted a study investigating the processing of music in children with specific language impairment (SLI). Children with typical language development (TLD) showed ERP patterns different from those of children with SLI, whose patterns reflected difficulties in processing music-syntactic regularities. Strong correlations between the amplitude of the ERAN (early right anterior negativity, a specific ERP component) and linguistic and musical abilities provide additional evidence for the relationship between syntactic processing in music and language.

However, production of melody and production of speech may be subserved by different neural networks. Stewart, Walsh, Frith and Rothwell (2001) studied the differences between speech production and song production using transcranial magnetic stimulation (TMS). Stewart et al. found that TMS applied to the left frontal lobe disturbs speech but not melody, supporting the idea that they are subserved by different areas of the brain. The authors suggest that one reason for the difference is that speech generation can be well localized, whereas the underlying mechanisms of melodic production cannot. Alternatively, they suggested that speech production may be less robust than melodic production and thus more susceptible to interference.

Language processing is more a function of the left side of the brain than the right, particularly Broca's area and Wernicke's area, though the roles played by the two sides of the brain in processing different aspects of language are still unclear. Music is also processed by both the left and the right sides of the brain. Recent evidence further suggests shared processing between language and music at the conceptual level.

It has also been found that, among music conservatory students, the prevalence of absolute pitch is much higher among speakers of tone languages, even when controlling for ethnic background, suggesting that language influences how musical tones are perceived.

Musician vs. non-musician processing

Professional pianists show less cortical activation for complex finger movement tasks due to structural differences in the brain.

Differences

Brain structure differs distinctly between musicians and non-musicians. Gaser and Schlaug (2003) compared brain structures of professional musicians with non-musicians and discovered gray matter volume differences in motor, auditory and visual-spatial brain regions. Specifically, positive correlations were discovered between musician status (professional, amateur and non-musician) and gray matter volume in the primary motor and somatosensory areas, premotor areas, anterior superior parietal areas and in the inferior temporal gyrus bilaterally. This strong association between musician status and gray matter differences supports the notion that musicians' brains show use-dependent structural changes.

Given the distinct differences in several brain regions, these differences are unlikely to be innate and more likely reflect the long-term acquisition and repetitive rehearsal of musical skills.

Brains of musicians also show functional differences from those of non-musicians. Krings, Topper, Foltys, Erberich, Sparing, Willmes and Thron (2000) used fMRI to study brain area involvement in professional pianists and a control group while they performed complex finger movements.

Krings et al. found that the professional piano players showed lower levels of cortical activation in motor areas of the brain. They concluded that fewer neurons needed to be activated in the piano players because long-term motor practice results in different cortical activation patterns. Koeneke, Lutz, Wustenberg and Jancke (2004) reported similar findings in keyboard players. Skilled keyboard players and a control group performed complex tasks involving unimanual and bimanual finger movements. During task conditions, both non-musicians and keyboard players showed strong hemodynamic responses in the cerebellum, but non-musicians showed the stronger response. This finding indicates that different cortical activation patterns emerge from long-term motor practice. This evidence supports previous data showing that musicians require fewer neurons to perform the same movements.

Musicians have been shown to have a significantly more developed left planum temporale, and have also been shown to have greater word memory.

Chan's study controlled for age, grade point average and years of education and found that, on a 16-word memory test, the musicians recalled on average one to two more words than their non-musical counterparts.

Similarities

Studies have shown that the human brain has an implicit musical ability. Koelsch, Gunter, Friederici and Schroger (2000) investigated the influence of preceding musical context, the task relevance of unexpected chords and the degree of probability of violation on music processing in both musicians and non-musicians. Findings showed that the human brain unintentionally extrapolates expectations about impending auditory input. Even in non-musicians, the extrapolated expectations are consistent with music theory. The ability to process information musically supports the idea of an implicit musical ability in the human brain. In a follow-up study, Koelsch, Schroger, and Gunter (2002) investigated whether ERAN and N5 could be evoked preattentively in non-musicians.

Findings showed that both ERAN and N5 can be elicited even when the listener ignores the musical stimulus, indicating that there is a highly differentiated preattentive musicality in the human brain.

Gender differences

Minor neurological differences in hemispheric processing exist between the brains of males and females. Koelsch, Maess, Grossmann and Friederici (2003) investigated music processing through EEG and ERPs and discovered gender differences.

Findings showed that females process music information bilaterally and males process music with a right-hemispheric predominance. However, the early negativity of males was also present over the left hemisphere. This indicates that males do not exclusively use the right hemisphere for musical information processing. In a follow-up study, Koelsch, Grossmann, Gunter, Hahne, Schroger and Friederici (2003) found that boys show lateralization of the early anterior negativity in the left hemisphere, whereas girls show a bilateral effect.

This indicates a developmental effect, as the early negativity is lateralized in the right hemisphere in men and in the left hemisphere in boys.

Handedness differences

It has been found that subjects who are lefthanded, particularly those who are also ambidextrous, perform better than righthanders on short-term memory for pitch.

It was hypothesized that this handedness advantage is due to lefthanders having more duplication of storage in the two hemispheres than righthanders. Other work has shown that there are pronounced differences between righthanders and lefthanders (on a statistical basis) in how musical patterns are perceived when sounds come from different regions of space. This has been found, for example, in the Octave illusion and the Scale illusion.

Musical imagery

Musical imagery refers to the experience of replaying music by imagining it inside the head. Musicians show a superior ability for musical imagery due to intense musical training.

Herholz, Lappe, Knief and Pantev (2008) used magnetoencephalography (MEG) to investigate differences in the neural processing of a musical imagery task with familiar melodies in musicians and non-musicians. Specifically, the study examined whether the mismatch negativity (MMN) can be based solely on imagery of sounds. In the task, participants listened to the beginning of a melody, continued the melody in their heads, and finally heard a correct or incorrect tone as a further continuation of the melody. In the musicians, the imagery of these melodies was strong enough to produce an early preattentive brain response to unanticipated violations of the imagined melodies. These results indicate that trained musicians rely on similar neural correlates for imagery and perception. Additionally, the findings suggest that modification of the imagery mismatch negativity (iMMN) through intense musical training results in a superior ability for imagery and preattentive processing of music.

Perceptual musical processes and musical imagery may share a neural substrate in the brain. A PET study conducted by Zatorre, Halpern, Perry, Meyer and Evans (1996) investigated cerebral blood flow (CBF) changes related to auditory imagery and perceptual tasks. These tasks examined the involvement of particular anatomical regions as well as functional commonalities between perceptual processes and imagery. Similar patterns of CBF changes provided evidence supporting the notion that imagery processes share a substantial neural substrate with related perceptual processes. Bilateral neural activity in the secondary auditory cortex was associated with both perceiving and imagining songs. This implies that processes within the secondary auditory cortex underlie the phenomenological impression of imagined sounds.

The supplementary motor area (SMA) was active in both imagery and perceptual tasks, suggesting covert vocalization as an element of musical imagery. CBF increases in the inferior frontal polar cortex and right thalamus suggest that these regions may be related to retrieval and/or generation of auditory information from memory.

Absolute pitch

Musicians possessing perfect pitch can identify the pitch of musical tones without external reference.

Absolute pitch (AP) is defined as the ability to identify the pitch of a musical tone or to produce a musical tone at a given pitch without the use of an external reference pitch.

Neuroscientific research has not discovered a distinct activation pattern common to possessors of AP. Zatorre, Perry, Beckett, Westbury and Evans (1998) examined the neural foundations of AP using functional and structural brain imaging techniques.

Positron emission tomography (PET) was used to measure cerebral blood flow (CBF) in musicians possessing AP and musicians lacking AP. When presented with musical tones, both groups showed similar patterns of increased CBF in auditory cortical areas. AP possessors and non-AP subjects demonstrated similar patterns of left dorsolateral frontal activity when they performed relative pitch judgments. However, non-AP subjects showed activation in the right inferior frontal cortex, whereas AP possessors showed no such activity. This finding suggests that musicians with AP do not need access to working memory devices for such tasks. These findings imply that there is no specific regional activation pattern unique to AP. Rather, the availability of specific processing mechanisms and task demands determine the recruited neural areas.

Emotion

Emotions induced by music activate similar frontal brain regions compared to emotions elicited by other stimuli.

Schmidt and Trainor (2001) discovered that the valence (i.e. positive vs. negative) of musical segments was distinguished by patterns of frontal EEG activity.

Joyful and happy musical segments were associated with increases in left frontal EEG activity, whereas fearful and sad musical segments were associated with increases in right frontal EEG activity. Additionally, the intensity of emotions was differentiated by the pattern of overall frontal EEG activity. Overall frontal region activity increased as affective musical stimuli became more intense.
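Frontal EEG valence effects of this kind are often summarised with an alpha-asymmetry score. A common convention, assumed here, treats alpha-band power as inversely related to cortical activity and computes ln(right alpha power) minus ln(left alpha power) over homologous frontal sites such as F4 and F3, so positive scores indicate relatively greater left frontal activity. The Python sketch below applies that convention to hypothetical power values; it is not presented as Schmidt and Trainor's exact analysis.

```python
import math


def frontal_alpha_asymmetry(left_alpha_power, right_alpha_power):
    """Return ln(right) - ln(left) frontal alpha power (e.g. F4 vs. F3).

    Alpha power is assumed to be inversely related to cortical activity, so a
    positive score means relatively more LEFT frontal activity and a negative
    score means relatively more RIGHT frontal activity.
    """
    if left_alpha_power <= 0 or right_alpha_power <= 0:
        raise ValueError("alpha power must be positive")
    return math.log(right_alpha_power) - math.log(left_alpha_power)


# Hypothetical alpha power values (arbitrary units), not data from any study.
joyful = frontal_alpha_asymmetry(left_alpha_power=4.0, right_alpha_power=6.0)
fearful = frontal_alpha_asymmetry(left_alpha_power=6.0, right_alpha_power=4.0)
print(f"joyful excerpt: {joyful:+.3f}")    # joyful excerpt: +0.405
print(f"fearful excerpt: {fearful:+.3f}")  # fearful excerpt: -0.405
```

Because the score is a log ratio, swapping the two hemispheres' values simply flips its sign, which keeps the direction of the valence effect easy to read.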

Music can create an intensely pleasurable experience that can be described as "chills".

Blood and Zatorre (2001) used PET to measure changes in cerebral blood flow while participants listened to music that they knew gave them the "chills" or another intensely pleasant emotional response. They found that as these chills increased, changes in cerebral blood flow were seen in brain regions such as the amygdala, orbitofrontal cortex, ventral striatum, midbrain, and the ventral medial prefrontal cortex.

Many of these areas appear to be linked to reward, motivation, emotion, and arousal, and are also activated in other pleasurable situations. The resulting pleasure responses involve the release of dopamine, serotonin, and oxytocin. The nucleus accumbens (part of the striatum) is involved both in music-related emotions and in rhythmic timing.

When unpleasant melodies are played, the posterior cingulate cortex activates, which indicates a sense of conflict or emotional pain.

The right hemisphere has also been found to be correlated with emotion, and can also activate areas in the cingulate in times of emotional pain, specifically social rejection (Eisenberger). This evidence, along with observations, has led many music theorists, philosophers and neuroscientists to link emotion with tonality. The link seems intuitive because the tones in music resemble the tones of human speech, which convey emotional content. The vowels in the phonemes of a song are elongated for dramatic effect, and musical tones can be seen as exaggerations of normal verbal tonality.

Memory

Neuropsychology of musical memory

Musical memory involves both explicit and implicit memory systems. Explicit musical memory is further differentiated between episodic (where, when and what of the musical experience) and semantic (memory for music knowledge including facts and emotional concepts). Implicit memory centers on the 'how' of music and involves automatic processes such as procedural memory and motor skill learning; in other words, skills critical for playing an instrument. Samson and Baird (2009) found that the ability of musicians with Alzheimer's disease to play an instrument (implicit procedural memory) may be preserved.

Neural correlates of musical memory

A PET study looking into the neural correlates of musical semantic and episodic memory found distinct activation patterns.

Semantic musical memory involves the sense of familiarity of songs. The semantic memory for music condition resulted in bilateral activation in the medial and orbital frontal cortex, as well as activation in the left angular gyrus and the left anterior region of the middle temporal gyrus. These patterns support the functional asymmetry favouring the left hemisphere for semantic memory. Left anterior temporal and inferior frontal regions that were activated in the musical semantic memory task produced activation peaks specifically during the presentation of musical material, suggesting that these regions are somewhat functionally specialized for musical semantic representations.

Episodic memory of musical information involves the ability to recall the former context associated with a musical excerpt.

In the condition invoking episodic memory for music, activations were found bilaterally in the middle and superior frontal gyri and precuneus, with activation predominant in the right hemisphere. Other studies have found the precuneus to become activated in successful episodic recall. Because the precuneus was activated in the familiar memory condition of the episodic memory task, this activation may be explained by successful recall of the melody.

When it comes to memory for pitch, a dynamic and distributed brain network appears to subserve pitch memory processes. Gaab, Gaser, Zaehle, Jancke and Schlaug (2003) examined the functional anatomy of pitch memory using functional magnetic resonance imaging (fMRI). An analysis of performance scores in a pitch memory task revealed a significant correlation between good task performance and activation in the supramarginal gyrus (SMG) as well as the dorsolateral cerebellum. These findings suggest that the dorsolateral cerebellum may act as a pitch discrimination processor and the SMG may act as a short-term storage site for pitch information. Left-hemisphere regions were more prominent in the pitch memory task than right-hemisphere regions.

Therapeutic effects of music on memory

Musical training has been shown to aid memory.

Altenmüller et al. studied the difference between active and passive musical instruction and found that over a longer (but not a short) period of time, the actively taught students retained much more information than the passively taught students. The actively taught students were also found to have greater cerebral cortex activation. The passive instruction was nonetheless not without effect: the passively taught students, along with the active group, displayed greater left hemisphere activity, which is typical in trained musicians.

Research suggests that people listen to the same songs repeatedly because of musical nostalgia. One study, published in the journal Memory & Cognition, found that music enables the mind to evoke memories of the past.

Attention

Treder et al. identified neural correlates of attention when listening to simplified polyphonic music patterns. In a musical oddball experiment, they had participants shift selective attention to one of three different instruments in music audio clips, with each instrument occasionally playing one or several notes deviating from an otherwise repetitive pattern. Contrasting attended versus unattended instruments, ERP analysis showed subject- and instrument-specific responses, including the P300 and early auditory components. The attended instrument could be classified offline with high accuracy. This indicates that attention paid to a particular instrument in polyphonic music can be inferred from ongoing EEG, a finding that is potentially relevant for building more ergonomic brain-computer interfaces based on music listening.

Development

Musical four-year-olds have been found to have greater left hemisphere intrahemispheric coherence. A 1992 study by Cowell et al. found that musicians have more developed anterior portions of the corpus callosum. This was confirmed by a 1995 study by Schlaug et al., which found that classical musicians between the ages of 21 and 36 have significantly greater anterior corpora callosa than non-musical controls. Schlaug also found a strong correlation between musical exposure before the age of seven and a large increase in the size of the corpus callosum.

These fibers join the left and right hemispheres, and their greater size indicates increased relaying between the two sides of the brain. This suggests an integration of the spatial, emotional and tonal processing of the right hemisphere with the linguistic processing of the left hemisphere. This extensive relaying across many different areas of the brain might contribute to music's ability to aid memory function.

Impairment

Focal hand dystonia

Focal hand dystonia is a task-related movement disorder associated with occupational activities that require repetitive hand movements.

Focal hand dystonia is associated with abnormal processing in the premotor and primary sensorimotor cortices. An fMRI study examined five guitarists with focal hand dystonia.

The study reproduced task-specific hand dystonia by having guitarists use a real guitar neck inside the scanner and perform a guitar exercise to trigger abnormal hand movement. The dystonic guitarists showed significantly more activation of the contralateral primary sensorimotor cortex as well as bilateral underactivation of premotor areas. This activation pattern represents abnormal recruitment of the cortical areas involved in motor control. Even in professional musicians, widespread involvement of bilateral cortical regions is necessary to produce complex hand movements such as scales and arpeggios. The abnormal shift from premotor to primary sensorimotor activation directly correlates with guitar-induced hand dystonia.

Music agnosia

Music agnosia, an auditory agnosia, is a syndrome of selective impairment in music recognition.

Dalla Bella and Peretz (1999) examined three cases of music agnosia: C.N., G.L., and I.R. All three patients suffered bilateral damage to the auditory cortex, which resulted in musical difficulties while speech understanding remained intact. Their impairment is specific to the recognition of once-familiar melodies; their recognition of environmental sounds and of lyrics is spared. Peretz (1996) studied C.N.'s music agnosia further and reported an initial impairment of pitch processing with spared temporal processing. C.N. later recovered pitch processing abilities but remained impaired in tune recognition and familiarity judgments.

Musical agnosias may be categorized based on the process which is impaired in the individual.

Apperceptive music agnosia involves an impairment at the level of perceptual analysis, that is, an inability to encode musical information correctly. Associative music agnosia reflects an impaired representational system which disrupts music recognition. Many cases of music agnosia have resulted from surgery involving the middle cerebral artery. Patient studies have amassed a large amount of evidence demonstrating that the left side of the brain is more suitable for holding long-term memory representations of music and that the right side is important for controlling access to these representations. Associative music agnosias tend to be produced by damage to the left hemisphere, while apperceptive music agnosia reflects damage to the right hemisphere.

Congenital amusia

Congenital amusia, otherwise known as tone deafness, is a term for lifelong musical problems which are not attributable to intellectual disability, lack of exposure to music, deafness, or brain damage after birth.

Amusic brains have been found in MRI studies to have less white matter and thicker cortex than controls in the right inferior frontal cortex. These differences suggest abnormal neuronal development in the auditory cortex and inferior frontal gyrus, two areas which are important in musical-pitch processing.

Studies on those with amusia suggest that different processes are involved in speech tonality and musical tonality. Congenital amusics lack the ability to distinguish between pitches and so are, for example, unmoved by dissonance or by a wrong key played on a piano. They also cannot be taught to remember a melody or to recite a song; however, they are still capable of hearing the intonation of speech, for example, distinguishing between "You speak French" and "You speak French?" when spoken.

Amygdala damage

Damage to the amygdala may impair recognition of scary music.

Damage to the amygdala can selectively impair emotional aspects of music recognition. Gosselin, Peretz, Johnsen and Adolphs (2007) studied S.M., a patient with bilateral damage to the amygdala but with the rest of the temporal lobe undamaged, and found that S.M. was impaired in the recognition of scary and sad music.

S.M.'s perception of happy music was normal, as was her ability to use cues such as tempo to distinguish between happy and sad music. It appears that damage specific to the amygdala can selectively impair recognition of scary music.

Selective deficit in music reading

Specific musical impairments may result from brain damage that leaves other musical abilities intact. Cappelletti, Waley-Cohen, Butterworth and Kopelman (2000) reported a single case study of patient P.K.C., a professional musician who sustained damage to the left posterior temporal lobe as well as a small right occipitotemporal lesion.

After sustaining damage to these regions, P.K.C. was selectively impaired in reading, writing and understanding musical notation but maintained other musical skills. The ability to read aloud letters, words, numbers and symbols (including musical ones) was retained. However, P.K.C. was unable to read aloud musical notes on the staff, regardless of whether the task involved naming them with the conventional letter or by singing or playing them. Yet despite this specific deficit, P.K.C. retained the ability to remember and play familiar and new melodies.

Auditory arrhythmia

Arrhythmia in the auditory modality is defined as a disturbance of rhythmic sense, and includes deficits such as the inability to perform music rhythmically, the inability to keep time to music, and the inability to discriminate between or reproduce rhythmic patterns.

A study investigating the elements of rhythmic function examined patient H.J., who acquired arrhythmia after sustaining a right temporoparietal infarct.

Damage to this region impaired H.J.'s central timing system, which is essentially the basis of his global rhythmic impairment. H.J. was unable to generate steady pulses in a tapping task. These findings suggest that keeping a musical beat relies on the right temporal auditory cortex.