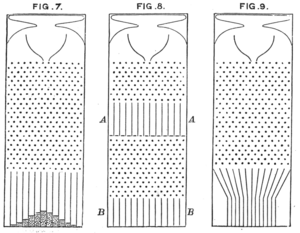

Galton's experimental setup (Fig.8)

In statistics, regression toward (or to) the mean is the phenomenon in which a random variable that is extreme on its first measurement tends to be closer to the mean on its second measurement and, conversely, one that is extreme on its second measurement tends to have been closer to the mean on its first. To avoid making incorrect inferences, regression toward the mean must be considered when designing scientific experiments and interpreting data. Historically, what is now called regression toward the mean has also been called reversion to the mean and reversion to mediocrity.

The conditions under which regression toward the mean occurs depend on the way the term is mathematically defined. The British polymath Sir Francis Galton first observed the phenomenon in the context of simple linear regression of data points. Galton developed the following model: pellets fall through a quincunx to form a normal distribution centered directly under their entrance point. These pellets might then be released down into a second gallery corresponding to a second measurement. Galton then asked the reverse question: "From where did these pellets come?"

The answer was not 'on average directly above'. Rather it was 'on average, more towards the middle', for the simple reason that there were more pellets above it towards the middle that could wander left than there were in the left extreme that could wander to the right, inwards.
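Galton's reverse question can be explored with a small simulation. The sketch below is only an illustration under stated assumptions: each gallery is modelled as 10 rows of pins, every pin sends a pellet one step left or right with equal probability, and the function name `mean_origin_by_final_slot` is hypothetical. For each final slot it reports the average position the pellets occupied after the first gallery.

```python
import random
from collections import defaultdict


def mean_origin_by_final_slot(n_pellets=50_000, rows=10, seed=0):
    """Drop pellets through two stacked galleries of `rows` pin rows each.

    Every pin row nudges a pellet one step left (-1) or right (+1).
    Returns {final position: mean position after the first gallery}.
    """
    rng = random.Random(seed)

    def gallery_offset():
        # Count the "right" bounces among `rows` fair left/right bounces.
        rights = bin(rng.getrandbits(rows)).count("1")
        return 2 * rights - rows

    totals = defaultdict(int)
    counts = defaultdict(int)
    for _ in range(n_pellets):
        middle = gallery_offset()          # position after the first gallery
        final = middle + gallery_offset()  # position after the second gallery
        totals[final] += middle
        counts[final] += 1
    return {slot: totals[slot] / counts[slot] for slot in counts}


origins = mean_origin_by_final_slot()
for slot in (-8, -4, 0, 4, 8):
    print(f"final slot {slot:+d}: mean origin {origins[slot]:+.2f}")
```

Under these assumptions the pellets found in an off-centre slot came, on average, from a position roughly halfway between that slot and the centre, which is the "more towards the middle" answer described above.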

As a less restrictive approach, regression towards the mean can be defined for any bivariate distribution with identical marginal distributions. Two such definitions exist.

One definition accords closely with the common usage of the term "regression towards the mean". Not all such bivariate distributions show regression towards the mean under this definition. However, all such bivariate distributions show regression towards the mean under the other definition.

Jeremy Siegel uses the term "return to the mean" to describe a financial time series in which "returns can be very unstable in the short run but very stable in the long run." More quantitatively, it is one in which the standard deviation of average annual returns declines faster than the inverse of the holding period, implying that the process is not a random walk, but that periods of lower returns are systematically followed by compensating periods of higher returns, as is the case in many seasonal businesses, for example.

Conceptual background

Consider a simple example: a class of students takes a 100-item true/false test on a subject. Suppose that all students choose randomly on all questions. Then, each student's score would be a realization of one of a set of independent and identically distributed random variables, with an expected mean of 50. Naturally, some students will score substantially above 50 and some substantially below 50 just by chance. If one takes only the top scoring 10% of the students and gives them a second test on which they again choose randomly on all items, the mean score would again be expected to be close to 50. Thus the mean of these students would "regress" all the way back to the mean of all students who took the original test. No matter what a student scores on the original test, the best prediction of their score on the second test is 50.
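The pure-guessing case is easy to check numerically. The following is a minimal sketch, assuming 10,000 students (a class size chosen here only to make the averages stable) and a hypothetical helper named `guessing_retest`; each answer is a fair coin flip.

```python
import random
from statistics import fmean


def guessing_retest(n_students=10_000, n_items=100, top_fraction=0.10, seed=1):
    """Return (first-test mean, retest mean) for the top scorers on test one."""
    rng = random.Random(seed)

    def random_score():
        # Each item is a fair coin flip; the score is the number of correct guesses.
        return bin(rng.getrandbits(n_items)).count("1")

    first = [random_score() for _ in range(n_students)]
    n_top = int(n_students * top_fraction)
    # Indices of the top-scoring students on the first test.
    top = sorted(range(n_students), key=first.__getitem__, reverse=True)[:n_top]
    retest = [random_score() for _ in top]
    return fmean(first[i] for i in top), fmean(retest)


first_mean, retest_mean = guessing_retest()
print(f"top 10% on test 1: {first_mean:.1f}, same students on test 2: {retest_mean:.1f}")
```

The selected group's first-test mean sits well above 50 purely by selection, while its retest mean falls back to about 50.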

If choosing answers to the test questions was not random – i.e. if there were no luck (good or bad) or random guessing involved in the answers supplied by the students – then all students would be expected to score the same on the second test as they scored on the original test, and there would be no regression toward the mean.

Most realistic situations fall between these two extremes: for example, one might consider exam scores as a combination of skill and luck. In this case, the subset of students scoring above average would be composed of those who were skilled and had not especially bad luck, together with those who were unskilled, but were extremely lucky. On a retest of this subset, the unskilled will be unlikely to repeat their lucky break, while the skilled will have a second chance to have bad luck. Hence, those who did well previously are unlikely to do quite as well in the second test even if the original cannot be replicated.

The following is an example of this second kind of regression toward the mean. A class of students takes two editions of the same test on two successive days. It has frequently been observed that the worst performers on the first day will tend to improve their scores on the second day, and the best performers on the first day will tend to do worse on the second day.

The phenomenon occurs because student scores are determined in part by underlying ability and in part by chance. For the first test, some will be lucky, and score more than their ability, and some will be unlucky and score less than their ability. Some of the lucky students on the first test will be lucky again on the second test, but more of them will have (for them) average or below average scores. Therefore, a student who was lucky on the first test is more likely to have a worse score on the second test than a better score. Similarly, students who score less than the mean on the first test will tend to see their scores increase on the second test.
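The ability-plus-chance account can be sketched as a simulation. Everything numeric here is an assumption made for illustration: ability is drawn from a normal distribution with mean 70 and standard deviation 10, each day's luck is independent normal noise with standard deviation 10, and `skill_and_luck` is a hypothetical function name.

```python
import random
from statistics import fmean


def skill_and_luck(n_students=20_000, skill_sd=10.0, luck_sd=10.0, seed=2):
    """Return day-1 and day-2 mean scores for the bottom and top 10% on day 1."""
    rng = random.Random(seed)
    skill = [rng.gauss(70.0, skill_sd) for _ in range(n_students)]
    # Each day's score is the same ability plus fresh, independent luck.
    day1 = [s + rng.gauss(0.0, luck_sd) for s in skill]
    day2 = [s + rng.gauss(0.0, luck_sd) for s in skill]

    order = sorted(range(n_students), key=day1.__getitem__)
    k = n_students // 10
    bottom, top = order[:k], order[-k:]
    return {
        "bottom": (fmean(day1[i] for i in bottom), fmean(day2[i] for i in bottom)),
        "top": (fmean(day1[i] for i in top), fmean(day2[i] for i in top)),
    }


for group, (d1, d2) in skill_and_luck().items():
    print(f"{group:>6} 10% on day 1: day 1 = {d1:.1f}, day 2 = {d2:.1f}")
```

With equal amounts of skill and luck, both groups move about halfway back toward the overall mean on day 2, yet the top group still outscores the bottom group because ability persists.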

Other examples

If your favorite sports team won the championship last year, what does that mean for their chances of winning next season? To the extent this result is due to skill (the team is in good condition, with a top coach, etc.), their win signals that it is more likely they will win again next year. But the greater the extent this is due to luck (other teams embroiled in a drug scandal, favorable draw, draft picks turned out to be productive, etc.), the less likely it is they will win again next year.

If one medical trial suggests that a particular drug or treatment is outperforming all other treatments for a condition, then in a second trial it is more likely that the outperforming drug or treatment will perform closer to the mean.

If a business organisation has a highly profitable quarter, despite the underlying reasons for its performance being unchanged, it is likely to do less well the next quarter.

If a country's GDP jumps in one quarter, it is likely not to do as well in the next.

Baseball players who hit well in their rookie season are likely to do worse in their second, the so-called "sophomore slump".

History

The concept of regression comes from genetics and was popularized by Sir Francis Galton during the late 19th century with the publication of Regression towards mediocrity in hereditary stature. Galton observed that extreme characteristics (e.g., height) in parents are not passed on completely to their offspring. Rather, the characteristics in the offspring regress towards a mediocre point (a point which has since been identified as the mean). By measuring the heights of hundreds of people, he was able to quantify regression to the mean, and estimate the size of the effect. Galton wrote that, "the average regression of the offspring is a constant fraction of their respective mid-parental deviations". This means that the difference between a child and its parents for some characteristic is proportional to its parents' deviation from typical people in the population. If its parents are each two inches taller than the averages for men and women, then, on average, the offspring will be shorter than its parents by some factor (which, today, we would call one minus the regression coefficient) times two inches. For height, Galton estimated this coefficient to be about 2/3: the height of an individual will measure around a midpoint that is two thirds of the parents' deviation from the population average.
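As a worked illustration of this arithmetic, take Galton's estimate of about 2/3 and a mid-parental deviation of two inches. The symbols b (regression coefficient) and d (deviation from the population average) are introduced here only for the example:

$$
b \approx \tfrac{2}{3}, \qquad d_{\text{parents}} = 2\ \text{in}, \qquad
E[d_{\text{child}}] = b\, d_{\text{parents}} \approx 1.33\ \text{in}, \qquad
d_{\text{parents}} - E[d_{\text{child}}] = (1 - b)\, d_{\text{parents}} \approx 0.67\ \text{in}.
$$

On these figures the offspring is expected to stand about 1.33 inches above the average, that is, about 0.67 inches shorter than its parents' deviation would suggest.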

Galton coined the term "regression" to describe an observable fact in the inheritance of multi-factorial quantitative genetic traits: namely that the offspring of parents who lie at the tails of the distribution will tend to lie closer to the centre, the mean, of the distribution. He quantified this trend, and in doing so invented linear regression analysis, thus laying the groundwork for much of modern statistical modelling. Since then, the term "regression" has taken on a variety of meanings, and it may be used by modern statisticians to describe phenomena of sampling bias which have little to do with Galton's original observations in the field of genetics.

Though his mathematical analysis was correct, Galton's biological explanation for the regression phenomenon he observed is now known to be incorrect. He stated: "A child inherits partly from his parents, partly from his ancestors. Speaking generally, the further his genealogy goes back, the more numerous and varied will his ancestry become, until they cease to differ from any equally numerous sample taken at haphazard from the race at large."

This is incorrect, since a child receives its genetic make-up exclusively from its parents. There is no generation-skipping in genetic material: any genetic material from earlier ancestors must have passed through the parents (though it may not have been expressed in them). The phenomenon is better understood if we assume that the inherited trait (e.g., height) is controlled by a large number of recessive genes. Exceptionally tall individuals must be homozygous for increased height mutations at a large proportion of these loci. But the loci which carry these mutations are not necessarily shared between two tall individuals, and if these individuals mate, their offspring will be on average homozygous for "tall" mutations on fewer loci than either of their parents. In addition, height is not entirely genetically determined, but also subject to environmental influences during development, which make offspring of exceptional parents even more likely to be closer to the average than their parents.

This population genetic phenomenon of regression to the mean is best thought of as a combination of a binomially distributed process of inheritance plus normally distributed environmental influences. In contrast, the term "regression to the mean" is now often used to describe the phenomenon by which an initial sampling bias may disappear as new, repeated, or larger samples display sample means that are closer to the true underlying population mean.

Importance

Regression toward the mean is a significant consideration in the design of experiments.

Take a hypothetical example of 1,000 individuals of a similar age who were examined and scored on the risk of experiencing a heart attack. Statistics could be used to measure the success of an intervention on the 50 who were rated at the greatest risk. The intervention could be a change in diet, exercise, or a drug treatment. Even if the interventions are worthless, the test group would be expected to show an improvement on their next physical exam, because of regression toward the mean. The best way to combat this effect is to divide the group randomly into a treatment group that receives the treatment, and a control group that does not. The treatment would then be judged effective only if the treatment group improves more than the control group.
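The value of the control group can be seen in a simulation of a worthless intervention. This is a sketch under assumptions that are not in the text: a person's measured risk score is a stable "true" risk plus independent measurement noise at each exam (both normal, with made-up parameters), and `worthless_intervention` is a hypothetical name.

```python
import random
from statistics import fmean


def worthless_intervention(n_people=1000, n_selected=50, seed=3):
    """Return the mean score change (exam 2 - exam 1) for treated and control."""
    rng = random.Random(seed)
    true_risk = [rng.gauss(50.0, 10.0) for _ in range(n_people)]
    exam1 = [r + rng.gauss(0.0, 10.0) for r in true_risk]

    # Select the people rated at greatest risk on the first exam.
    selected = sorted(range(n_people), key=exam1.__getitem__, reverse=True)[:n_selected]
    rng.shuffle(selected)  # random assignment to the two groups
    half = n_selected // 2
    treated, control = selected[:half], selected[half:]

    # The intervention does nothing: exam 2 is true risk plus fresh noise for everyone.
    exam2 = {i: true_risk[i] + rng.gauss(0.0, 10.0) for i in selected}

    def mean_change(group):
        return fmean(exam2[i] - exam1[i] for i in group)

    return mean_change(treated), mean_change(control)


treated_change, control_change = worthless_intervention()
print(f"treated: {treated_change:+.1f}, control: {control_change:+.1f}")
```

Both groups show lower risk scores on the second exam even though nothing was done; only the difference between the two changes says anything about the treatment. With 25 people per group that difference is noisy, so a larger run gives a cleaner picture.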

Alternatively, a group of disadvantaged children could be tested to identify the ones with most college potential. The top 1% could be identified and supplied with special enrichment courses, tutoring, counseling and computers. Even if the program is effective, their average scores may well be less when the test is repeated a year later. However, in these circumstances it may be considered unethical to have a control group of disadvantaged children whose special needs are ignored. A mathematical calculation for shrinkage can adjust for this effect, although it will not be as reliable as the control group method.

The effect can also be exploited for general inference and estimation. The hottest place in the country today is more likely to be cooler tomorrow than hotter, as compared to today. The best performing mutual fund over the last three years is more likely to see relative performance decline than improve over the next three years. The most successful Hollywood actor of this year is more likely to gross less than to gross more on his or her next movie. The baseball player with the greatest batting average by the All-Star break is more likely to have a lower average than a higher average over the second half of the season.

Misunderstandings

The concept of regression toward the mean can be misused very easily.

In the student test example above, it was assumed implicitly that what was being measured did not change between the two measurements. Suppose, however, that the course was pass/fail and students were required to score above 70 on both tests to pass. Then the students who scored under 70 the first time would have no incentive to do well, and might score worse on average the second time. The students just over 70, on the other hand, would have a strong incentive to study and concentrate while taking the test. In that case one might see movement away from 70, scores below it getting lower and scores above it getting higher. It is possible for changes between the measurement times to augment, offset or reverse the statistical tendency to regress toward the mean.

Statistical regression toward the mean is not a causal phenomenon. A student with the worst score on the test on the first day will not necessarily increase his score substantially on the second day due to the effect. On average, the worst scorers improve, but that is only true because the worst scorers are more likely to have been unlucky than lucky. To the extent that a score is determined randomly, or that a score has random variation or error, as opposed to being determined by the student's academic ability or being a "true value", the phenomenon will have an effect. A classic mistake in this regard was in education. The students that received praise for good work were noticed to do more poorly on the next measure, and the students who were punished for poor work were noticed to do better on the next measure. The educators decided to stop praising and keep punishing on this basis. Such a decision was a mistake, because regression toward the mean is not based on cause and effect, but rather on random error in a natural distribution around a mean.

Although extreme individual measurements regress toward the mean, the second sample of measurements will be no closer to the mean than the first. Consider the students again. Suppose the tendency of extreme individuals is to regress 10% of the way toward the mean of 80, so a student who scored 100 the first day is expected to score 98 the second day, and a student who scored 70 the first day is expected to score 71 the second day. Those expectations are closer to the mean than the first day scores. But the second day scores will vary around their expectations; some will be higher and some will be lower. In addition, individuals that measure very close to the mean should expect to move away from the mean. The effect is the exact reverse of regression toward the mean, and exactly offsets it. So for extreme individuals, we expect the second score to be closer to the mean than the first score, but for all individuals, we expect the distribution of distances from the mean to be the same on both sets of measurements.
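A simulation makes the offsetting effect concrete. The sketch below assumes a model that is not stated in the text: ability is normal with mean 80 and standard deviation 6, and each day adds independent normal noise with standard deviation 2, which gives a day-to-day correlation of 0.9 and hence the 10% regression used in the example. The name `spread_check` is hypothetical.

```python
import random
from statistics import fmean, pstdev


def spread_check(n=50_000, mean=80.0, seed=4):
    """Compare spread and distance from the mean on two days of testing."""
    rng = random.Random(seed)
    ability = [rng.gauss(mean, 6.0) for _ in range(n)]
    day1 = [a + rng.gauss(0.0, 2.0) for a in ability]
    day2 = [a + rng.gauss(0.0, 2.0) for a in ability]

    def mean_distance(scores, group):
        return fmean(abs(scores[i] - mean) for i in group)

    # Groups are defined by day-1 scores only.
    extreme = [i for i in range(n) if abs(day1[i] - mean) > 10]
    central = [i for i in range(n) if abs(day1[i] - mean) < 1]
    return {
        "sd": (pstdev(day1), pstdev(day2)),
        "extreme": (mean_distance(day1, extreme), mean_distance(day2, extreme)),
        "central": (mean_distance(day1, central), mean_distance(day2, central)),
    }


for name, (d1, d2) in spread_check().items():
    print(f"{name:>8}: day 1 = {d1:.2f}, day 2 = {d2:.2f}")
```

Students who were extreme on day 1 end up closer to 80 on average, students who were near 80 end up farther away, and the overall standard deviation is essentially the same on both days.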

Related to the point above, regression toward the mean works equally well in both directions. We expect the student with the highest test score on the second day to have done worse on the first day. And if we compare the best student on the first day to the best student on the second day, regardless of whether it is the same individual or not, there is a tendency to regress toward the mean going in either direction. We expect the best scores on both days to be equally far from the mean.
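The symmetry in time can be checked the same way. This sketch reuses an assumed model (normal ability with mean 80 and standard deviation 6, plus independent daily noise with standard deviation 2); the function name `both_directions` is hypothetical. It selects the top 5% on each day and looks at how the same students scored on the other day.

```python
import random
from statistics import fmean


def both_directions(n=50_000, seed=5):
    """Return (day-1 mean, day-2 mean) for the top 5% of each day."""
    rng = random.Random(seed)
    ability = [rng.gauss(80.0, 6.0) for _ in range(n)]
    day1 = [a + rng.gauss(0.0, 2.0) for a in ability]
    day2 = [a + rng.gauss(0.0, 2.0) for a in ability]

    k = n // 20
    top_on_day1 = sorted(range(n), key=day1.__getitem__)[-k:]
    top_on_day2 = sorted(range(n), key=day2.__getitem__)[-k:]
    return {
        "top_on_day1": (fmean(day1[i] for i in top_on_day1),
                        fmean(day2[i] for i in top_on_day1)),
        "top_on_day2": (fmean(day1[i] for i in top_on_day2),
                        fmean(day2[i] for i in top_on_day2)),
    }


for name, (d1, d2) in both_directions().items():
    print(f"{name}: day 1 = {d1:.2f}, day 2 = {d2:.2f}")
```

The top group chosen on day 1 scores lower on day 2, and the top group chosen on day 2 had scored lower on day 1, while the two groups' best-day averages are about the same distance from 80.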

Regression fallacies

Many phenomena tend to be attributed to the wrong causes when regression to the mean is not taken into account.

An extreme example is Horace Secrist's 1933 book The Triumph of Mediocrity in Business, in which the statistics professor collected mountains of data to prove that the profit rates of competitive businesses tend toward the average over time. In fact, there is no such effect; the variability of profit rates is almost constant over time. Secrist had only described the common regression toward the mean. One exasperated reviewer, Harold Hotelling, likened the book to "proving the multiplication table by arranging elephants in rows and columns, and then doing the same for numerous other kinds of animals".

The calculation and interpretation of "improvement scores" on standardized educational tests in Massachusetts probably provides another example of the regression fallacy. In 1999, schools were given improvement goals. For each school, the Department of Education tabulated the difference in the average score achieved by students in 1999 and in 2000. It was quickly noted that most of the worst-performing schools had met their goals, which the Department of Education took as confirmation of the soundness of their policies. However, it was also noted that many of the supposedly best schools in the Commonwealth, such as Brookline High School (with 18 National Merit Scholarship finalists) were declared to have failed. As in many cases involving statistics and public policy, the issue is debated, but "improvement scores" were not announced in subsequent years and the findings appear to be a case of regression to the mean.

The psychologist Daniel Kahneman, winner of the 2002 Nobel Memorial Prize in Economic Sciences, pointed out that regression to the mean might explain why rebukes can seem to improve performance, while praise seems to backfire.

“I had the most satisfying Eureka experience of my career while attempting to teach flight instructors that praise is more effective than punishment for promoting skill-learning. When I had finished my enthusiastic speech, one of the most seasoned instructors in the audience raised his hand and made his own short speech, which began by conceding that positive reinforcement might be good for the birds, but went on to deny that it was optimal for flight cadets. He said, "On many occasions I have praised flight cadets for clean execution of some aerobatic maneuver, and in general when they try it again, they do worse. On the other hand, I have often screamed at cadets for bad execution, and in general they do better the next time. So please don't tell us that reinforcement works and punishment does not, because the opposite is the case." This was a joyous moment, in which I understood an important truth about the world: because we tend to reward others when they do well and punish them when they do badly, and because there is regression to the mean, it is part of the human condition that we are statistically punished for rewarding others and rewarded for punishing them. I immediately arranged a demonstration in which each participant tossed two coins at a target behind his back, without any feedback. We measured the distances from the target and could see that those who had done best the first time had mostly deteriorated on their second try, and vice versa. But I knew that this demonstration would not undo the effects of lifelong exposure to a perverse contingency.”

To put Kahneman's story in simple terms, when one makes a severe mistake, their performance will later usually return to its average level anyway. This will look like an improvement and like "proof" of a belief that it is better to criticize than to praise (held especially by anyone who is willing to criticize at that "low" moment). In the opposite situation, when one happens to perform far above average, their performance will also tend to return to the average level later on; the change will be perceived as a deterioration and any initial praise following the first performance as a cause of that deterioration. Just because criticizing or praising precedes the regression toward the mean, the act of criticizing or of praising is falsely attributed causality. The regression fallacy is also explained in Rolf Dobelli's The Art of Thinking Clearly.


UK law enforcement policies have encouraged the visible siting of static or mobile speed cameras at accident blackspots. This policy was justified by a perception that there is a corresponding reduction in serious road traffic accidents after a camera is set up. However, statisticians have pointed out that, although there is a net benefit in lives saved, failure to take into account the effects of regression to the mean results in the beneficial effects being overstated.

Statistical analysts have long recognized the effect of regression to the mean in sports; they even have a special name for it: the "sophomore slump". For example, Carmelo Anthony of the NBA's Denver Nuggets had an outstanding rookie season in 2004. It was so outstanding, in fact, that he could not possibly be expected to repeat it: in 2005, Anthony's numbers had dropped from his rookie season. The reasons for the "sophomore slump" abound, as sports are all about adjustment and counter-adjustment, but luck-based excellence as a rookie is as good a reason as any. Regression to the mean in sports performance may also be the reason for the apparent "Sports Illustrated cover jinx" and the "Madden Curse". John Hollinger has an alternate name for the phenomenon of regression to the mean: the "fluke rule", while Bill James calls it the "Plexiglas Principle".

Because popular lore has focused on regression toward the mean as an account of declining performance of athletes from one season to the next, it has usually overlooked the fact that such regression can also account for improved performance. For example, if one looks at the batting average of Major League Baseball players in one season, those whose batting average was above the league mean tend to regress downward toward the mean the following year, while those whose batting average was below the mean tend to progress upward toward the mean the following year.

Other statistical phenomena

Regression toward the mean simply says that, following an extreme random event, the next random event is likely to be less extreme. In no sense does the future event "compensate for" or "even out" the previous event, though this is assumed in the gambler's fallacy (and the variant law of averages). Similarly, the law of large numbers states that in the long term, the average will tend towards the expected value, but makes no statement about individual trials. For example, following a run of 10 heads in flips of a fair coin (a rare, extreme event), regression to the mean states that the next run of heads will likely be shorter than 10, while the law of large numbers states that in the long term, this event will likely average out, and the average fraction of heads will tend to 1/2. By contrast, the gambler's fallacy incorrectly assumes that the coin is now "due" for a run of tails to balance out.
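The absence of any "compensation" can be checked by simulation. The following is a minimal sketch with a hypothetical function name, `next_flip_after_run`: it flips a simulated fair coin many times and records how often the flip that immediately follows a streak of at least `run_length` heads is itself heads.

```python
import random


def next_flip_after_run(n_flips=2_000_000, run_length=10, seed=6):
    """Return (P(heads | preceded by a long heads streak), overall heads fraction)."""
    rng = random.Random(seed)
    streak = 0           # current number of consecutive heads
    heads_total = 0
    followups = 0        # flips made right after a streak of >= run_length heads
    followup_heads = 0
    for _ in range(n_flips):
        heads = rng.random() < 0.5
        if streak >= run_length:
            followups += 1
            followup_heads += heads
        streak = streak + 1 if heads else 0
        heads_total += heads
    # Guard against the (unlikely) case that no long streak occurred at all.
    p_next = followup_heads / followups if followups else float("nan")
    return p_next, heads_total / n_flips


p_next, p_overall = next_flip_after_run()
print(f"heads right after a long run: {p_next:.3f}, heads overall: {p_overall:.3f}")
```

Both fractions come out close to 1/2: the coin is not "due" for tails after a long run, and the long-run average approaches 1/2 only because the rare streak is diluted by later flips.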

Definition for simple linear regression of data points

This is the definition of regression toward the mean that closely follows Sir Francis Galton's original usage.

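Suppose there are n data points $\{y_i, x_i\}$, where i = 1, 2, …, n. We want to find the equation of the regression line, i.e. the straight line which would provide a "best" fit for the data points. (Note that a straight line may not be the appropriate regression curve for the given data points.) Here "best" is understood in the least-squares sense: the line that minimizes the sum of squared residuals of the linear regression model. In other words, the numbers α and β solve the following minimization problem:

- Find $\min_{\alpha,\,\beta} Q(\alpha, \beta)$, where $Q(\alpha, \beta) = \sum_{i=1}^{n} (y_i - \alpha - \beta x_i)^2$

Using calculus it can be shown that the values of α and β that minimize the objective function Q are

$$
\hat{\beta} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}
= \frac{\overline{xy} - \bar{x}\,\bar{y}}{\overline{x^2} - \bar{x}^2}
= \frac{\operatorname{Cov}[x, y]}{\operatorname{Var}[x]}
= r_{xy}\,\frac{s_y}{s_x},
\qquad
\hat{\alpha} = \bar{y} - \hat{\beta}\,\bar{x},
$$

where $r_{xy}$ is the sample correlation coefficient between x and y, $s_x$ is the standard deviation of x, and $s_y$ is correspondingly the standard deviation of y. A horizontal bar over a variable means the sample average of that variable. For example:

$$
\overline{xy} = \frac{1}{n} \sum_{i=1}^{n} x_i y_i .
$$

Substituting the above expressions for $\hat{\alpha}$ and $\hat{\beta}$ into $\hat{y} = \hat{\alpha} + \hat{\beta} x$ yields fitted values

$$
\hat{y} = \bar{y} + r_{xy}\,\frac{s_y}{s_x}\,(x - \bar{x}),
$$

which yields

$$
\frac{\hat{y} - \bar{y}}{s_y} = r_{xy}\,\frac{x - \bar{x}}{s_x} .
$$

This shows the role $r_{xy}$ plays in the regression line of standardized data points.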

If $-1 < r_{xy} < 1$, then we say that the data points exhibit regression toward the mean. In other words, if linear regression is the appropriate model for a set of data points whose sample correlation coefficient is not perfect, then there is regression toward the mean. The predicted (or fitted) standardized value of y is closer to its mean than the standardized value of x is to its mean.
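The relationship between the fitted line and the correlation coefficient can be verified numerically. The sketch below uses only the standard library; the data are synthetic (the means, slope and noise level are arbitrary choices made here), and `least_squares` and `make_data` are hypothetical helper names. It fits the least-squares line and checks that a point two standard deviations above the mean of x has a fitted value 2r standard deviations above the mean of y.

```python
import random
from math import sqrt
from statistics import fmean


def least_squares(xs, ys):
    """Return (alpha_hat, beta_hat, r_xy, x_bar, y_bar, s_x, s_y)."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    s_x = sqrt(fmean((x - x_bar) ** 2 for x in xs))
    s_y = sqrt(fmean((y - y_bar) ** 2 for y in ys))
    cov = fmean((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    beta = cov / s_x ** 2           # slope: Cov[x, y] / Var[x]
    alpha = y_bar - beta * x_bar    # intercept
    return alpha, beta, cov / (s_x * s_y), x_bar, y_bar, s_x, s_y


def make_data(n=2_000, seed=7):
    """Synthetic, illustrative data: y depends linearly on x plus noise."""
    rng = random.Random(seed)
    xs = [rng.gauss(68.0, 2.5) for _ in range(n)]
    ys = [68.0 + 0.6 * (x - 68.0) + rng.gauss(0.0, 2.0) for x in xs]
    return xs, ys


xs, ys = make_data()
alpha, beta, r, x_bar, y_bar, s_x, s_y = least_squares(xs, ys)
x = x_bar + 2 * s_x                          # two standard deviations above the mean
z_hat = (alpha + beta * x - y_bar) / s_y     # standardized fitted value
print(f"r = {r:.3f}, standardized prediction = {z_hat:.3f}, 2r = {2 * r:.3f}")
```

Because the correlation in this synthetic sample is below 1 in absolute value, the standardized prediction is smaller than 2, which is regression toward the mean in the sense defined above.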

Definitions for bivariate distribution with identical marginal distributions

Restrictive definition

Let X1, X2 be random variables with identical marginal distributions with mean μ. In this formalization, the bivariate distribution of X1 and X2 is said to exhibit regression toward the mean if, for every number c > μ, we have

- μ ≤ E[X2 | X1 = c] < c,

with the reverse inequalities holding for c < μ.

The following is an informal description of the above definition. Consider a population of widgets. Each widget has two numbers, X1 and X2 (say, its left span (X1) and right span (X2)). Suppose that the probability distributions of X1 and X2 in the population are identical, and that the means of X1 and X2 are both μ. We now take a random widget from the population, and denote its X1 value by c. (Note that c may be greater than, equal to, or smaller than μ.) We have no access to the value of this widget's X2 yet. Let d denote the expected value of X2 of this particular widget (that is, the average value of X2 of all widgets in the population with X1 = c). If the following condition is true:

- Whatever the value c is, d lies between μ and c (i.e. d is closer to μ than c is),

then we say that X1 and X2 show regression toward the mean.

This definition accords closely with the current common usage, evolved from Galton's original usage, of the term "regression toward the mean." It is "restrictive" in the sense that not every bivariate distribution with identical marginal distributions exhibits regression toward the mean (under this definition).

Theorem

If a pair (X, Y) of random variables follows a bivariate normal distribution, then the conditional mean E(Y|X) is a linear function of X. The correlation coefficient r between X and Y, along with the marginal means and variances of X and Y, determines this linear relationship:

$$
\frac{E(Y \mid X) - E[Y]}{\sigma_y} = r\,\frac{X - E[X]}{\sigma_x},
$$

where E[X] and E[Y] are the expected values of X and Y, respectively, and σx and σy are the standard deviations of X and Y, respectively.

Hence the conditional expected value of Y, given that X is t standard deviations above its mean (and that includes the case where it is below its mean, when t < 0), is r·t standard deviations above the mean of Y. Since |r| ≤ 1, the conditional expected value of Y is no farther from the mean of Y than X is from the mean of X, as measured in the number of standard deviations.

Hence, if 0 ≤ r < 1, then (X, Y) shows regression toward the mean (by this definition).
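As a worked instance of the theorem, take an assumed correlation of r = 0.6 and an observation of X that lies t = 2 standard deviations above its mean (both numbers are chosen here purely for illustration):

$$
\frac{E(Y \mid X) - E[Y]}{\sigma_y} = r\,t = 0.6 \times 2 = 1.2 .
$$

The conditional expectation of Y is therefore 1.2 standard deviations above the mean of Y, closer to the mean than the 2 standard deviations observed for X.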

General definition

The following definition of reversion toward the mean has been proposed by Samuels as an alternative to the more restrictive definition of regression toward the mean above.

Let X1, X2 be random variables with identical marginal distributions with mean μ. In this formalization, the bivariate distribution of X1 and X2 is said to exhibit reversion toward the mean if, for every number c, we have

- μ ≤ E[X2 | X1 > c] < E[X1 | X1 > c], and

- μ ≥ E[X2 | X1 < c] > E[X1 | X1 < c]

This definition is "general" in the sense that every bivariate distribution with identical marginal distributions exhibits reversion toward the mean.
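The two inequalities can be checked empirically on a distribution that is far from normal. In the sketch below, which is an illustration rather than a proof, X1 and X2 share one exponential component and each adds its own independent exponential component, so their marginal distributions are identical with mean μ = 2; this construction and the name `reversion_check` are assumptions made here.

```python
import random
from statistics import fmean


def reversion_check(c, n=100_000, seed=8):
    """Estimate the conditional means in the reversion definition for cutoff c."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        shared = rng.expovariate(1.0)
        # Identical marginals: shared part plus an independent part for each.
        pairs.append((shared + rng.expovariate(1.0), shared + rng.expovariate(1.0)))

    upper = [(x1, x2) for x1, x2 in pairs if x1 > c]
    lower = [(x1, x2) for x1, x2 in pairs if x1 < c]
    return {
        "mu": fmean(x2 for _, x2 in pairs),
        # (E[X2 | X1 > c], E[X1 | X1 > c])
        "upper": (fmean(x2 for _, x2 in upper), fmean(x1 for x1, _ in upper)),
        # (E[X2 | X1 < c], E[X1 | X1 < c])
        "lower": (fmean(x2 for _, x2 in lower), fmean(x1 for x1, _ in lower)),
    }


for c in (1.0, 2.0, 4.0):
    res = reversion_check(c)
    print(f"c = {c}: mu = {res['mu']:.2f}, upper = {res['upper']}, lower = {res['lower']}")
```

For each cutoff tried, the conditional mean of X2 lies between μ and the conditional mean of X1, on both sides of the cutoff, as the definition requires.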

![{\begin{aligned}&{\hat {\beta }}={\frac {\sum _{i=1}^{n}(x_{i}-{\bar {x}})(y_{i}-{\bar {y}})}{\sum _{i=1}^{n}(x_{i}-{\bar {x}})^{2}}}={\frac {{\overline {xy}}-{\bar {x}}{\bar {y}}}{{\overline {x^{2}}}-{\bar {x}}^{2}}}={\frac {\operatorname {Cov} [x,y]}{\operatorname {Var} [x]}}=r_{xy}{\frac {s_{y}}{s_{x}}},\\&{\hat {\alpha }}={\bar {y}}-{\hat {\beta }}\,{\bar {x}},\end{aligned}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f4ceb26f542d9dec173923b43d3e9589fef9f36f)

![{\displaystyle {\frac {E(Y\mid X)-E[Y]}{\sigma _{y}}}=r{\frac {X-E[X]}{\sigma _{x}}},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1139b41de03b98d327888dc8a7f0a2c9ab867375)

![1-\left[\frac{15}{16}\right]^{16} \,=\, 64.39\%](https://wikimedia.org/api/rest_v1/media/math/render/svg/0d791f63cddc590830c6ef468bbf823c14c1953f)

![1-\left[\frac{15}{16}\right]^{15} \,=\, 62.02\%](https://wikimedia.org/api/rest_v1/media/math/render/svg/1b0b7ce6d128c5742499914574afc8e625b73af1)