The health effects of wine are mainly determined by its active ingredient – alcohol. Preliminary studies found that drinking small quantities of wine (up to one standard drink per day for women and one to two drinks per day for men), particularly of red wine, may be associated with a decreased risk of cardiovascular diseases, cognitive decline, stroke, diabetes mellitus, metabolic syndrome, and early death. Other studies found no such effects.

Drinking more than the standard drink amount increases the risk of cardiovascular diseases, high blood pressure, atrial fibrillation, stroke, and cancer. Studies of light drinking and cancer mortality have also produced mixed results.

Risk is greater in young people due to binge drinking, which may result in violence or accidents. About 88,000 deaths in the United States are estimated to be due to alcohol each year. Alcoholism reduces a person's life expectancy by around ten years, and excessive alcohol use is the third leading cause of early death in the United States. According to systematic reviews and medical associations, people who are non-drinkers should never start drinking wine or any other alcoholic drink.

The history of wine includes its use as an early form of medication: it was recommended variously as a safe alternative to drinking water, an antiseptic for treating wounds, a digestive aid, and a cure for a wide range of ailments including lethargy, diarrhea, and pain from childbirth. Ancient Egyptian papyri and Sumerian tablets dating back to 2200 BC detail the medicinal role of wine, making it the world's oldest documented human-made medicine. Wine continued to play a major role in medicine until the late 19th and early 20th centuries, when changing opinions and medical research on alcohol and alcoholism cast doubt on its role as part of a healthy lifestyle.

Moderate consumption

Nearly all research into the positive medical benefits of wine consumption makes a distinction between moderate consumption and heavy or binge drinking. Moderate levels of consumption vary by the individual according to age, sex, genetics, weight and body stature, as well as situational conditions, such as food consumption or use of drugs. In general, women absorb alcohol more quickly than men due to their lower body water content, so their moderate levels of consumption may be lower than those for a male of equal age. Some experts define "moderate consumption" as less than one 5-US-fluid-ounce (150 ml) glass of wine per day for women and two glasses per day for men.

The idea of drinking wine in moderation is recorded as early as the Greek poet Eubulus (360 BC), who believed that three bowls (kylix) were the ideal amount of wine to consume. Three bowls as the measure of moderation is a common theme throughout Greek writing; today's standard 750 ml wine bottle holds roughly the volume of three kylix cups (250 ml or 8 fl oz each). However, the kylix cups would have contained diluted wine, mixed with water at a ratio of 1:2 or 1:3. In his circa 375 BC play Semele or Dionysus, Eubulus has Dionysus say:

Three bowls do I mix for the temperate: one to health, which they empty first, the second to love and pleasure, the third to sleep. When this bowl is drunk up, wise guests go home. The fourth bowl is ours no longer, but belongs to violence; the fifth to uproar, the sixth to drunken revel, the seventh to black eyes, the eighth is the policeman's, the ninth belongs to biliousness, and the tenth to madness and hurling the furniture.

Emerging evidence suggests that "even drinking within the recommended limits may increase the overall risk of death from various causes". A 2018 systematic analysis found that "The level of alcohol consumption that minimised harm across health outcomes was zero (95% UI 0·0–0·8) standard drinks per week". On the other hand, a 2020 USDA systematic review found that "low average consumption was associated with lower risk of mortality compared with never drinking status". As of 2022, "moderate" consumption is usually defined as average consumption per day, although drinking patterns vary and may have their own implications for health risks and effects (such as habituation from daily consumption, or nonlinear dose-harm associations from intermittent excessive alcohol use). According to the CDC, it is important to focus on the amount people drink on the days that they drink.
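To illustrate why an average per day can hide very different drinking patterns, the following minimal Python sketch compares two hypothetical weeks with the same total of seven standard drinks. The patterns are illustrative assumptions, not data from any of the studies cited above; the sketch only shows that the average over all days is identical while the amount per drinking day, the measure the CDC emphasizes, differs sevenfold.

```python
# Hypothetical weekly drinking patterns (standard drinks per day, Monday to Sunday).
# These numbers are illustrative assumptions, not data from any study.
daily_pattern = [1, 1, 1, 1, 1, 1, 1]     # one drink every day
weekend_pattern = [0, 0, 0, 0, 0, 0, 7]   # all seven drinks on a single day


def average_per_day(pattern):
    """Average consumption over all days of the week."""
    return sum(pattern) / len(pattern)


def average_per_drinking_day(pattern):
    """Average consumption over only the days on which any alcohol was drunk."""
    drinking_days = [drinks for drinks in pattern if drinks > 0]
    return sum(drinking_days) / len(drinking_days) if drinking_days else 0.0


for name, pattern in [("daily", daily_pattern), ("weekend", weekend_pattern)]:
    print(name, average_per_day(pattern), average_per_drinking_day(pattern))
```

Both patterns average one drink per day, so a definition based only on daily averages would classify them the same way, even though the second involves seven drinks on one occasion.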

Effect on the body

Bones

Heavy alcohol consumption has been shown to have a damaging effect on the cellular processes that create bone tissue, and long-term alcohol consumption at high levels increases the frequency of fractures. A 2012 study found no relation between wine consumption and bone mineral density.

Cancer

The International Agency for Research on Cancer of the World Health Organization has classified alcohol as a Group 1 carcinogen.

Cardiovascular system

Professional cardiology associations recommend that people who are currently nondrinkers should abstain from drinking alcohol. Heavy drinkers have increased risk for heart disease, cardiac arrhythmias, hypertension, and elevated cholesterol levels.

The alcohol in wine has anticoagulant properties that may limit blood clotting.

Digestive system

The risk of infection from the bacterium Helicobacter pylori, which is associated with gastritis and peptic ulcers, appears to be lower with moderate alcohol consumption.

Headaches

There are several potential causes of so-called "red wine headaches", including histamine, tannins from grape skins, and other phenolic compounds in wine. Sulfites – which are used as a preservative in wine – are unlikely to be a headache factor. Wine, like other alcoholic beverages, is a diuretic; the resulting dehydration can lead to headaches (as is often experienced with hangovers), which is one reason to stay hydrated when drinking wine and to drink in moderation. A 2017 review found that 22% of people experiencing migraine or tension headaches identified alcohol as a precipitating factor, and that red wine was three times more likely than beer to trigger a headache.

Food intake

Alcohol can stimulate the appetite, so it is better to drink it with food. When alcohol is consumed with food, it can slow the stomach's emptying time and potentially decrease the amount of food eaten at the meal.

A 150-millilitre (5-US-fluid-ounce) serving of red or white wine provides about 500 to 540 kilojoules (120 to 130 kilocalories) of food energy, while dessert wines provide more. Most wines have an alcohol by volume (ABV) percentage of about 11%; the higher the ABV, the higher the energy content of a wine.
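The link between ABV and energy content can be checked with simple arithmetic. The minimal Python sketch below estimates the energy supplied by the ethanol alone in a 150 ml serving, assuming an ethanol density of 0.789 g/ml and the commonly used factor of about 7 kcal per gram of alcohol; the function name `alcohol_energy` is purely illustrative.

```python
# Assumed constants: ethanol density near room temperature and the commonly
# used energy factor for alcohol (about 7 kcal per gram).
ETHANOL_DENSITY_G_PER_ML = 0.789
ETHANOL_KCAL_PER_G = 7.0
KJ_PER_KCAL = 4.184


def alcohol_energy(volume_ml, abv_percent):
    """Return (grams of ethanol, kcal, kJ) supplied by the alcohol alone."""
    ethanol_ml = volume_ml * abv_percent / 100
    ethanol_g = ethanol_ml * ETHANOL_DENSITY_G_PER_ML
    kcal = ethanol_g * ETHANOL_KCAL_PER_G
    return ethanol_g, kcal, kcal * KJ_PER_KCAL


for abv in (11, 13, 15):
    grams, kcal, kj = alcohol_energy(150, abv)
    print(f"{abv}% ABV: {grams:.1f} g ethanol, {kcal:.0f} kcal ({kj:.0f} kJ)")
```

At 11% ABV, the ethanol in a 150 ml glass works out to about 13 g, or roughly 91 kcal; the rest of the 120 to 130 kcal quoted above comes from other components of the wine, such as residual sugar. Because the alcohol term scales linearly with ABV, stronger wines supply more energy per glass.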

Psychological and social

Danish epidemiological studies suggest that a number of psychological health benefits are associated with drinking wine. In a study testing this idea, Mortensen et al. (2001) measured socioeconomic status, education, IQ, personality, psychiatric symptoms, and health-related behaviors, which included alcohol consumption. The analysis then compared groups of beer drinkers, wine drinkers, and non-drinkers. The results showed that, for both men and women, drinking wine was related to higher parental socioeconomic status, higher parental education and higher socioeconomic status of the subjects themselves. On an IQ test, wine drinkers consistently scored higher than beer drinkers, with an average difference of 18 points. With regard to psychological functioning, personality, and other health-related behaviors, the study found that wine drinkers functioned at optimal levels while beer drinkers performed below optimal levels. As these social and psychological factors also correlate with health outcomes, they represent a plausible explanation for at least some of the apparent health benefits of wine.

However, the relationship between wine consumption and IQ, and the apparent psychological differences between beer drinkers and wine drinkers, require further research, and the findings of Mortensen et al. should not be treated as conclusive. The study does not appear to account for the genetic, prenatal and environmental influences that shape a person's general intelligence, and which measures are true and reliable indicators of intelligence remains a matter of debate in the scientific literature. The suggestion that regular wine consumption indicates higher intelligence, and beer consumption lower intelligence, should therefore be viewed critically until future research tests whether individuals who regularly drink wine actually have higher IQ scores than those who drink beer.

Heavy metals

In 2008, researchers from Kingston University in London reported that red wine contained high levels of toxic metals relative to the other beverages in their sample. Although the metal ions, which included chromium, copper, iron, manganese, nickel, vanadium and zinc, were also present in other plant-based beverages, the sampled wine tested significantly higher for all of them, especially vanadium. Risk was assessed using "target hazard quotients" (THQ), a method of quantifying the health concerns associated with lifetime exposure to chemical pollutants. The method was developed by the US Environmental Protection Agency and is used mainly to examine seafood; a THQ of less than 1 represents no concern, while, for example, mercury levels in fish with calculated THQs between 1 and 5 would represent cause for concern.

The researchers stressed that a single glass of wine would not lead to metal poisoning, pointing out that their THQ calculations were based on the average person drinking one-third of a bottle of wine (250 ml) every day between the ages of 18 and 80. However, the "combined THQ values" for metal ions in the red wine they analyzed were reported to be as high as 125. A subsequent study by the same university, using a meta-analysis of data based on wine samples from a selection of mostly European countries, found equally high levels of vanadium in many red wines, with combined THQ values in the range of 50 to 200 and some as high as 350.

The findings sparked immediate controversy over several issues: the study's reliance on secondary data; the assumption that all wines contributing to that data were representative of the countries stated; and the grouping of poorly understood high-concentration ions, such as vanadium, with relatively low-level, common ions such as copper and manganese. Some publications pointed out that, because no specific wines, grape varieties, producers or even wine regions were identified, the findings offered only misleading generalizations that should not be relied upon in choosing wines.

In a news bulletin following the widespread reporting of the findings, the UK's National Health Service (NHS) was also concerned that "the way the researchers added together hazards from different metals to produce a final score for individual wines may not be particularly meaningful". Commentators in the US questioned the relevance of seafood-based THQ assessments to agricultural produce, noting that the TTB (Alcohol and Tobacco Tax and Trade Bureau), which is responsible for testing imports for metal ion contamination, had not detected an increased risk. George Solas, quality assessor for the Canadian Liquor Control Board of Ontario (LCBO), claimed that the levels of heavy metal contamination reported were within the permitted levels for drinking water in tested reservoirs.

Although the NHS described calls for improved wine labeling as an "extreme response" to research that provided "few solid answers", it acknowledged the authors' call for further research into wine production, including the influence that grape variety, soil type, geographical region, insecticides, containment vessels and seasonal variations may have on metal ion uptake.
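The THQ calculation and the "combined" score criticized by the NHS can be sketched in a few lines. This minimal Python example assumes the commonly cited EPA-style form THQ = (EF × ED × IR × C) / (RfD × BW × AT), where EF is exposure frequency (days per year), ED exposure duration (years), IR daily intake (litres per day), C metal concentration (mg per litre), RfD the oral reference dose (mg per kg of body weight per day), BW body weight (kg) and AT the averaging time (days). The 250 ml daily intake and the 62-year span (ages 18 to 80) follow the assumptions described above; the 70 kg body weight and both metals with their concentrations and reference doses are hypothetical placeholders, not measured values from the Kingston University studies.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    concentration_mg_per_l: float        # C: metal concentration in the beverage
    reference_dose_mg_per_kg_day: float  # RfD: oral reference dose


def thq(exposure, intake_l_per_day=0.25, body_weight_kg=70.0,
        exposure_frequency_days_per_year=365, exposure_duration_years=62):
    """Target hazard quotient for a single contaminant (dimensionless)."""
    averaging_time_days = exposure_duration_years * 365
    # Numerator: total amount of metal ingested over the exposure period (mg).
    total_intake_mg = (exposure_frequency_days_per_year * exposure_duration_years
                       * intake_l_per_day * exposure.concentration_mg_per_l)
    # Denominator: amount at the reference dose over the same period (mg).
    reference_amount_mg = (exposure.reference_dose_mg_per_kg_day
                           * body_weight_kg * averaging_time_days)
    return total_intake_mg / reference_amount_mg


def combined_thq(exposures, **kwargs):
    """Simple sum of individual THQs, the aggregation the NHS questioned."""
    return sum(thq(e, **kwargs) for e in exposures)


# Hypothetical placeholder metals; these are NOT measured data.
sample = {
    "metal_a": Exposure(concentration_mg_per_l=0.5, reference_dose_mg_per_kg_day=0.005),
    "metal_b": Exposure(concentration_mg_per_l=1.0, reference_dose_mg_per_kg_day=0.04),
}
for name, e in sample.items():
    print(name, round(thq(e), 3))
print("combined", round(combined_thq(sample.values()), 3))
```

Because the combined score is a plain sum, a single metal with a very low reference dose can dominate the total, which illustrates why critics questioned whether adding hazards from different metals yields a meaningful single figure.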

Chemical composition

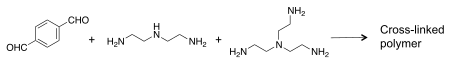

Natural phenols and polyphenols

Although red wine contains many chemicals under basic research for their potential health benefits, resveratrol has been particularly well studied and has been evaluated by regulatory authorities such as the European Food Safety Authority and the US Food and Drug Administration, which identified it and other such phenolic compounds as not sufficiently understood to confirm their role as physiological antioxidants.

Resveratrol

Red wine contains an average of 1.9 (±1.7) mg of trans-resveratrol per liter. For comparison, dietary supplements of resveratrol (trans-resveratrol content varies) may contain as much as 500 mg.

Resveratrol is a stilbenoid phenolic compound found in wine; it is produced in grape skins and in the leaves of grapevines.

The production and concentration of resveratrol is not equal among all the varieties of wine grapes. Differences in clones, rootstock and Vitis species, as well as climate conditions, can affect the production of resveratrol. Also, because resveratrol is part of the grapevine's defence mechanism against attack by fungi or grape disease, the degree of exposure to fungal infection and grape diseases also appears to play a role. The Muscadinia family of vines, which has adapted over time through exposure to North American grape diseases and pests such as phylloxera, has some of the highest concentrations of resveratrol among wine grapes. Among the European Vitis vinifera, grapes derived from the Burgundian Pinot family tend to have substantially higher amounts of resveratrol than grapes derived from the Cabernet family of Bordeaux. Wine regions with cooler, wetter climates that are more prone to grape disease and fungal attacks, such as Oregon and New York, tend to produce grapes with higher concentrations of resveratrol than warmer, drier climates such as California and Australia.

Although red and white grape varieties produce similar amounts of resveratrol, red wine contains more than white wine, because red wines are produced by maceration (soaking the grape skins in the mash). Other winemaking techniques, such as the use of certain strains of yeast during fermentation or lactic acid bacteria during malolactic fermentation, can influence the amount of resveratrol left in the resulting wines. Similarly, the use of certain fining agents during the clarification and stabilization of wine can strip the wine of some resveratrol molecules.

Anthocyanins

Red grapes are high in anthocyanins, the pigments responsible for the color of various fruits, including red grapes. The darker the red wine, the more anthocyanins it contains.

Typical concentrations of free anthocyanins in full-bodied young red wines are around 500 mg per liter. For comparison, 100 g of fresh bilberries contain 300–700 mg, and 100 g (fresh weight) of elderberries contain around 603–1265 mg.

Following dietary ingestion, anthocyanins undergo rapid and extensive metabolism that makes the biological effects presumed from in vitro studies unlikely to apply in vivo.

Although anthocyanins are under basic and early-stage clinical research for a variety of disease conditions, there is insufficient evidence that they have any beneficial effect in the human body. The US FDA has issued warning letters emphasizing, for example, that anthocyanins are not a defined nutrient, cannot be assigned a dietary content level and are not regulated as a drug to treat any human disease.

History of wine in medicine

Early medicine was intimately tied to religion and the supernatural, and early practitioners were often priests and magicians. Wine's close association with ritual made it a logical tool for these early medical practices. Sumerian tablets and Egyptian papyri dating to 2200 BC include recipes for wine-based medicines, making wine the oldest documented human-made medicine.

Early history

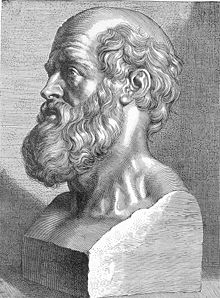

When the Greeks introduced a more systematized approach to medicine, wine retained its prominent role. The Greek physician Hippocrates considered wine a part of a healthy diet, and advocated its use as a disinfectant for wounds, as well as a medium in which to mix other drugs for consumption by the patient. He also prescribed wine as a cure for various ailments ranging from diarrhea and lethargy to pain during childbirth.

The medical practices of the Romans involved the use of wine in a similar manner. In his 1st-century work De Medicina, the Roman encyclopedist Aulus Cornelius Celsus detailed a long list of Greek and Roman wines used for medicinal purposes. While treating gladiators in Asia Minor, the Roman physician Galen would use wine as a disinfectant for all types of wounds, and even soaked exposed bowels before returning them to the body. During his four years with the gladiators, only five deaths occurred, compared to sixty deaths under the watch of the physician before him.

Religion still played a significant role in promoting wine's use for health. The Jewish Talmud noted wine to be "the foremost of all medicines: wherever wine is lacking, medicines become necessary." In his first epistle to Timothy, Paul the Apostle recommended that his young colleague drink a little wine every now and then for the benefit of his stomach and digestion. While the Islamic Koran contained restrictions on all alcohol, Islamic doctors such as the Persian Avicenna in the 11th century AD noted that wine was an efficient digestive aid but, because of these laws, were limited to using it as a disinfectant while dressing wounds. Catholic monasteries during the Middle Ages also regularly used wine for medical treatments. So closely tied were wine and medicine that the first printed book on wine was written in the 14th century by a physician, Arnaldus de Villa Nova, with lengthy essays on wine's suitability for treating a variety of medical ailments such as dementia and sinus problems.

Risks of consumption

The lack of safe drinking water may have been one reason for wine's popularity in medicine. Wine was still being used to sterilize water as late as the Hamburg cholera epidemic of 1892 in order to control the spread of the disease. However, the late 19th and early 20th centuries ushered in a period of changing views on the role of alcohol and, by extension, wine in health and society. The Temperance movement began to gain steam by touting the ills of alcoholism, which was eventually defined by the medical establishment as a disease. Studies of the long- and short-term effects of alcohol consumption caused many in the medical community to reconsider the role of wine in medicine and diet. Soon, public opinion turned against consumption of alcohol in any form, leading to Prohibition in the United States and other countries. In some areas, wine was able to maintain a limited role, such as an exemption from Prohibition in the United States for "therapeutic wines" that were sold legally in drug stores. These wines were marketed for their supposed medicinal benefits, but some wineries used this measure as a loophole to sell large quantities of wine for recreational consumption. In response, the United States government issued a mandate requiring producers to include an emetic additive that would induce vomiting if more than a certain dose was consumed.

Throughout the early to mid-20th century, health advocates pointed to the risks of alcohol consumption and the role it played in a variety of ailments such as blood disorders, high blood pressure, cancer, infertility, liver damage, muscle atrophy, psoriasis, skin infections, strokes, and long-term brain damage. Studies showed a connection between alcohol consumption by pregnant mothers and an increased risk of intellectual disability and physical abnormalities in what became known as fetal alcohol syndrome, prompting the use of warning messages on alcohol packaging in several countries.

French paradox

The French paradox hypothesis attributes the low prevalence of heart disease in France, despite a diet high in saturated fat, to the consumption of red wine. Although epidemiological studies indicate that red wine consumption may support the French paradox, as of 2017 there was insufficient clinical evidence to confirm it.