Population projections are attempts to show how human population statistics might change in the future. These projections are an important input to forecasts of the population's impact on the planet and of humanity's future well-being. Models of population growth take trends in human development and project them into the future. These models use trend-based assumptions about how populations will respond to economic, social and technological forces to understand how those forces will affect fertility and mortality, and thus population growth.

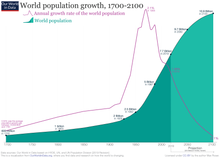

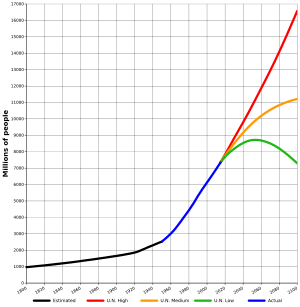

The 2019 projections from the United Nations Population Division (made before the COVID-19 pandemic) show that annual world population growth peaked at 2.1% in 1968, has since dropped to 1.1%, and could drop even further to 0.1% by 2100, which would be a growth rate not seen since pre-industrial revolution days. Based on this, the UN Population Division projects the world population, which is 7.8 billion as of 2020, to level out around 2100 at 10.9 billion (the median line), assuming a continuing decrease in the global average fertility rate from 2.5 births per woman during the 2015–2020 period to 1.9 in 2095–2100, according to the medium-variant projection. A 2014 projection has the population continuing to grow into the next century.

However, researchers outside the United Nations have put forward alternative models based on additional downward pressure on fertility (such as successful implementation of the education and family planning goals in the Sustainable Development Goals), which could result in peak population during the 2060–2070 period rather than later.

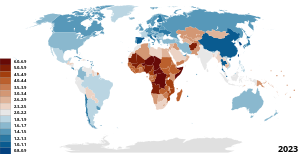

According to the UN, about two-thirds of the predicted growth in population between 2020 and 2050 will take place in Africa. It is projected that 50% of births in the 5-year period 2095-2100 will be in Africa. Other organizations project lower levels of population growth in Africa based particularly on improvement in women's education and successfully implementing family planning.

By 2100, the UN projects the population in Sub-Saharan Africa will reach 3.8 billion, IHME projects 3.1 billion, and IIASA is the lowest at 2.6 billion. In contrast to the UN projections, the models of fertility developed by IHME and IIASA incorporate women's educational attainment, and in the case of IHME, also assume successful implementation of family planning.

Because of population momentum, the global population will continue to grow for the remainder of this century, although at a steadily slower rate. The main driver of long-term future population growth, however, will be the evolution of the global average fertility rate.

Table of UN projections

The United Nations Population Division also publishes high and low estimates, estimates by gender, and population density figures.

| Year | Total population |
|---|---|
| 2021 | 7,874,965,732 |
| 2022 | 7,953,952,577 |
| 2023 | 8,031,800,338 |
| 2024 | 8,108,605,256 |
| 2025 | 8,184,437,453 |
| 2026 | 8,259,276,651 |
| 2027 | 8,333,078,318 |
| 2028 | 8,405,863,301 |
| 2029 | 8,477,660,723 |
| 2030 | 8,548,487,371 |
| 2031 | 8,618,349,454 |
| 2032 | 8,687,227,873 |
| 2033 | 8,755,083,512 |
| 2034 | 8,821,862,705 |
| 2035 | 8,887,524,229 |
| 2036 | 8,952,048,885 |
| 2037 | 9,015,437,616 |
| 2038 | 9,077,693,645 |
| 2039 | 9,138,828,562 |
| 2040 | 9,198,847,382 |
| 2041 | 9,257,745,483 |
| 2042 | 9,315,508,153 |
| 2043 | 9,372,118,247 |
| 2044 | 9,427,555,382 |
| 2045 | 9,481,803,272 |
| 2046 | 9,534,854,673 |
| 2047 | 9,586,707,749 |
| 2048 | 9,637,357,320 |
| 2049 | 9,686,800,146 |
| 2050 | 9,735,033,900 |
| 2051 | 9,782,061,758 |
| 2052 | 9,827,885,441 |
| 2053 | 9,872,501,562 |
| 2054 | 9,915,905,251 |
| 2055 | 9,958,098,746 |
| 2056 | 9,999,085,167 |
| 2057 | 10,038,881,262 |
| 2058 | 10,077,518,080 |
| 2059 | 10,115,036,360 |
| 2060 | 10,151,469,683 |
| 2061 | 10,186,837,209 |
| 2062 | 10,221,149,040 |
| 2063 | 10,254,419,004 |
| 2064 | 10,286,658,354 |
| 2065 | 10,317,879,315 |
| 2066 | 10,348,098,079 |
| 2067 | 10,377,330,830 |
| 2068 | 10,405,590,532 |
| 2069 | 10,432,889,136 |
| 2070 | 10,459,239,501 |
| 2071 | 10,484,654,858 |
| 2072 | 10,509,150,402 |
| 2073 | 10,532,742,861 |
| 2074 | 10,555,450,003 |
| 2075 | 10,577,288,195 |
| 2076 | 10,598,274,172 |
| 2077 | 10,618,420,909 |
| 2078 | 10,637,736,819 |
| 2079 | 10,656,228,233 |
| 2080 | 10,673,904,454 |
| 2081 | 10,690,773,335 |
| 2082 | 10,706,852,426 |
| 2083 | 10,722,171,375 |
| 2084 | 10,736,765,444 |
| 2085 | 10,750,662,353 |
| 2086 | 10,763,874,023 |
| 2087 | 10,776,402,019 |
| 2088 | 10,788,248,948 |
| 2089 | 10,799,413,366 |
| 2090 | 10,809,892,303 |
| 2091 | 10,819,682,643 |
| 2092 | 10,828,780,959 |
| 2093 | 10,837,182,077 |
| 2094 | 10,844,878,798 |
| 2095 | 10,851,860,145 |
| 2096 | 10,858,111,587 |
| 2097 | 10,863,614,776 |
| 2098 | 10,868,347,636 |
| 2099 | 10,872,284,134 |
| 2100 | 10,875,393,719 |

History of population projections

Walter Greiling projected in the 1950s that world population would reach a peak of about nine billion, in the 21st century, and then stop growing after a readjustment of the Third World and a sanitation of the tropics.

Estimates published in the 2000s tended to predict that the population of Earth would stop increasing around 2070. In a 2004 long-term prospective report, the United Nations Population Division projected the world population would peak at 9.22 billion in 2075. After reaching this maximum, it would decline slightly and then resume a slow increase, reaching a level of 8.97 billion by 2300, about the same as the projected 2050 figure.

This prediction was revised in the 2010s, to the effect that no maximum will likely be reached in the 21st century. The main reason for the revision was that the ongoing rapid population growth in Africa had been underestimated. A 2014 paper by demographers from several universities and the United Nations Population Division forecast that the world's population would reach about 10.9 billion in 2100 and continue growing thereafter. In 2017 the UN predicted a decline of global population growth rate from +1.0% in 2020 to +0.5% in 2050 and to +0.1% in 2100.

Jørgen Randers, one of the authors of the seminal 1972 long-term simulations in The Limits to Growth, offered an alternative scenario in a 2012 book, arguing that traditional projections insufficiently take into account the downward impact of global urbanization on fertility. Randers' "most likely scenario" predicts a peak in the world population in the early 2040s at about 8.1 billion people, followed by decline.

Drivers of population change

The population of a country or area grows or declines through the interaction of three demographic drivers: fertility, mortality, and migration.
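
The interaction of the three drivers can be written as a simple balancing equation: next year's population equals this year's population plus births, minus deaths, plus net migration. The following Python snippet is a minimal sketch of that bookkeeping, not the UN's projection method. The function names and the per-1,000 rates are hypothetical, chosen only for illustration, and the rates are assumed to stay constant over time.

```python
def project_one_year(population, births, deaths, net_migration):
    """Balancing equation: next = current + births - deaths + net migration."""
    return population + births - deaths + net_migration


def project(population, birth_rate, death_rate, net_migration_rate, years):
    """Project forward with constant rates, each given per 1,000 people per year.

    Holding rates constant is an illustrative assumption; real projections
    let fertility, mortality and migration change over time and by age.
    """
    path = [population]
    for _ in range(years):
        current = path[-1]
        births = current * birth_rate / 1000
        deaths = current * death_rate / 1000
        migrants = current * net_migration_rate / 1000
        path.append(project_one_year(current, births, deaths, migrants))
    return path


# Hypothetical country: 10 million people, 18 births and 8 deaths per 1,000,
# and a net outflow of 2 migrants per 1,000 each year.
path = project(10_000_000, birth_rate=18.0, death_rate=8.0,
               net_migration_rate=-2.0, years=3)
for year, people in enumerate(path):
    print(f"year {year}: {people:,.0f}")
```

In this sketch the population grows by 0.8% per year because natural increase (births minus deaths) outweighs the net outflow of migrants.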

Fertility

Fertility is expressed as the total fertility rate (TFR), a measure of the number of children on average that a woman will bear in her lifetime. With longevity trending towards uniform and stable values worldwide, the main driver of future population growth will be the evolution of the fertility rate.
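
As an illustration of how a TFR is typically calculated, the sketch below sums age-specific fertility rates over the reproductive age groups and multiplies by the width of each group. The rates used here are hypothetical values chosen for the example, not figures from the UN or any other source, and the function name is likewise an assumption.

```python
def total_fertility_rate(asfr_per_1000, age_group_width=5):
    """Return the TFR implied by a list of age-specific fertility rates.

    Each rate is the number of births per 1,000 women per year in one age
    group. A woman spends `age_group_width` years in each group, so the
    rates are summed and scaled by that width.
    """
    return age_group_width * sum(asfr_per_1000) / 1000


# Hypothetical rates for the seven five-year age groups 15-19 through 45-49.
example_asfr = [40, 120, 140, 100, 60, 30, 10]
tfr = total_fertility_rate(example_asfr)
print(f"TFR = {tfr:.1f} children per woman")  # TFR = 2.5 children per woman
```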

Where fertility is high, demographers generally assume that fertility will decline and eventually stabilize at about two children per woman.

During the period 2015–2020, the average world fertility rate was 2.5 children per woman, about half the level in 1950-1955 (5 children per woman). In the medium variant, global fertility is projected to decline further to 2.2 in 2045-2050 and to 1.9 in 2095–2100.

Mortality

If the mortality rate is relatively high and the resulting life expectancy is therefore relatively low, changes in mortality can have a material impact on population growth. Where the mortality rate is low and life expectancy has therefore risen, a change in mortality will have much less of an effect.

Because child mortality has declined substantially over the last several decades, global life expectancy at birth, which is estimated to have risen from 47 years in 1950–1955 to 67 years in 2000–2005, is expected to keep rising to reach 77 years in 2045–2050. In the more Developed regions, the projected increase is from 79 years today to 83 years by mid-century. Among the Least Developed countries, where life expectancy today is just under 65 years, it is expected to be 71 years in 2045–2050.

The population of 31 countries or areas, including Ukraine, Romania, Japan and most of the successor states of the Soviet Union, is expected to be lower in 2050 than in 2005.

Migration

Migration can have a significant effect on population change. Global South-South migration accounts for 38% of total migration, and Global South-North migration for 34%. The United Nations reports that during the period 2010–2020, fourteen countries will have seen a net inflow of more than one million migrants, while ten countries will have seen a net outflow of similar magnitude. The largest migratory outflows have been in response to demand for workers in other countries (Bangladesh, Nepal and the Philippines) or to insecurity in the home country (Myanmar, Syria and Venezuela). Belarus, Estonia, Germany, Hungary, Italy, Japan, the Russian Federation, Serbia and Ukraine have experienced a net inflow of migrants over the decade, helping to offset population losses caused by a negative natural increase (births minus deaths).

World population


2050

The median scenario of the UN 2019 World Population Prospects predicts the following populations per region in 2050 (compared to population in 2000), in billions:

| Region | 2000 | 2050 | Growth | %/yr |
|---|---|---|---|---|
| Asia | 3.74 | 5.29 | +41% | +0.7% |
| Africa | 0.81 | 2.49 | +207% | +2.3% |
| Europe | 0.73 | 0.71 | −3% | −0.1% |
| South/Central America + Caribbean | 0.52 | 0.76 | +46% | +0.8% |
| North America | 0.31 | 0.43 | +39% | +0.7% |
| Oceania | 0.03 | 0.06 | +100% | +1.4% |
| World | 6.14 | 9.74 | +60% | +0.9% |
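
The Growth and %/yr columns can be related to the 2000 and 2050 populations with a short calculation. The sketch below assumes that the annual figure is a compound (geometric) average rate over the 50 years. The source does not state how the column was derived, so this is an assumption, and the function names are illustrative. Because the inputs are rounded to two decimals, results can differ from the table by a percentage point.

```python
# Populations in billions for 2000 and 2050, copied from the table above.
populations_bn = {
    "Asia": (3.74, 5.29),
    "Africa": (0.81, 2.49),
    "Europe": (0.73, 0.71),
}


def total_growth(start, end):
    """Relative change over the whole period, e.g. 0.41 for +41%."""
    return end / start - 1


def annual_rate(start, end, years):
    """Compound average annual growth rate over `years` years."""
    return (end / start) ** (1 / years) - 1


for region, (p2000, p2050) in populations_bn.items():
    growth = total_growth(p2000, p2050)
    rate = annual_rate(p2000, p2050, years=50)
    print(f"{region}: {growth:+.0%} total, {rate:+.1%} per year")
```

For Africa, for example, the population roughly triples over the period, which corresponds to about 2.3% per year when compounded.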

After 2050

Projections of population reaching more than one generation into the future are highly speculative. For example, the United Nations Department of Economic and Social Affairs report of 2004 projected the world population to peak at 9.22 billion in 2075 and then stabilise at a value close to 9 billion. By contrast, a 2014 projection by the United Nations Population Division predicted a population close to 11 billion by 2100 without any declining trend in the foreseeable future.

United Nations projections

The UN Population Division report of 2019 projects world population to continue growing, although at a steadily decreasing rate, and to reach 10.9 billion in 2100 with a growth rate at that time of close to zero.

This projected growth of population, like all others, depends on assumptions about vital rates. For example, the UN Population Division assumes that the total fertility rate (TFR) will continue to decline, at varying paces depending on circumstances in individual regions, to a below-replacement level of 1.9 by 2100. Between 2020 and 2100, regions with TFR currently below this rate, such as Europe, will see TFR rise, while regions with TFR above this rate will see it continue to decline.

Other projections

- A 2020 study published by The Lancet from researchers funded by the Global Burden of Disease Study promotes a lower growth scenario, projecting that world population will peak in 2064 at 9.7 billion and then decline to 8.8 billion in 2100. This projection assumes further advancement of women's rights globally. In this case TFR is assumed to decline more rapidly than the UN's projection, to reach 1.7 in 2100.

- An analysis from the Wittgenstein Center IIASA predicts global population to peak in 2070 at 9.4 billion and then decline to 9.0 billion in 2100.

- The Institute for Health Metrics and Evaluation (IHME) and the International Institute for Applied Systems Analysis (IIASA) project lower fertility in Sub-Saharan Africa (SSA) in 2100 than the UN. By 2100, the UN projects the population in SSA will reach 3.8 billion, IHME projects 3.1 billion, and IIASA projects 2.6 billion. IHME and IIASA incorporate women's educational attainment in their models of fertility, and in the case of IHME, also consider met need for family planning.

Other assumptions can produce other results. Some of the authors of the 2004 UN report assumed that life expectancy would rise slowly and continuously. The projections in the report assume this with no upper limit, though at a slowing pace depending on circumstances in individual countries. By 2100, the report assumed life expectancy to be from 66 to 97 years, and by 2300 from 87 to 106 years, depending on the country. Based on that assumption, they expect that rising life expectancy will produce small but continuing population growth by the end of the projections, ranging from 0.03 to 0.07 percent annually. The hypothetical feasibility (and wide availability) of life extension by technological means would further contribute to long term (beyond 2100) population growth.

Evolutionary biology also suggests the demographic transition may reverse itself and global population may continue to grow in the long term. In addition, recent evidence suggests birth rates may be rising in the 21st century in the developed world.

Growth regions

The table below shows that from 2020 to 2050, the bulk of the world's population growth is predicted to take place in Africa: of the additional 1.9 billion people projected between 2020 and 2050, 1.2 billion will be added in Africa, 0.7 billion in Asia and zero in the rest of the world. Africa's share of global population is projected to grow from 17% in 2020 to 26% in 2050 and 39% by 2100, while the share of Asia will fall from 59% in 2020 to 55% in 2050 and 43% in 2100. The strong growth of the African population will happen regardless of the rate of decrease of fertility, because of the exceptional proportion of young people already living today. For example, the UN projects that the population of Nigeria will surpass that of the United States by about 2050.

| Region | 2020 (bn) | 2020 (% of total) | 2050 (bn) | 2050 (% of total) | Change 2020–50 (bn) | 2100 (bn) | 2100 (% of total) |
|---|---|---|---|---|---|---|---|
| Africa | 1.3 | 17 | 2.5 | 26 | 1.2 | 4.3 | 39 |
| Asia | 4.6 | 59 | 5.3 | 55 | 0.7 | 4.7 | 43 |
| Other | 1.9 | 24 | 1.9 | 20 | 0.0 | 1.9 | 17 |
| More Developed | 1.3 | 17 | 1.3 | 13 | 0.0 | 1.3 | 12 |
| Less Developed | 6.5 | 83 | 8.4 | 87 | 1.9 | 9.6 | 88 |
| World | 7.8 | 100 | 9.7 | 100 | 1.9 | 10.9 | 100 |

Thus the population of the More Developed regions is slated to remain mostly unchanged, at 1.3 billion for the remainder of the 21st century. All population growth comes from the Less Developed regions.
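
The shares quoted above follow directly from the regional totals. The sketch below recomputes each region's share of world population and its share of the 2020–2050 increase from the rounded figures in the table. The function names are illustrative, and because the inputs are rounded to one decimal the results are approximate.

```python
# Regional populations in billions, copied from the table above.
population_bn = {
    "Africa": {2020: 1.3, 2050: 2.5},
    "Asia": {2020: 4.6, 2050: 5.3},
    "Other": {2020: 1.9, 2050: 1.9},
}


def share_of_total(data, year):
    """Each region's fraction of the summed population in `year`."""
    total = sum(region[year] for region in data.values())
    return {name: region[year] / total for name, region in data.items()}


def share_of_growth(data, start, end):
    """Each region's fraction of the total change between two years."""
    changes = {name: region[end] - region[start] for name, region in data.items()}
    total_change = sum(changes.values())
    return {name: change / total_change for name, change in changes.items()}


for year in (2020, 2050):
    shares = share_of_total(population_bn, year)
    print(year, {name: f"{s:.0%}" for name, s in shares.items()})

growth = share_of_growth(population_bn, 2020, 2050)
print("growth", {name: f"{s:.0%}" for name, s in growth.items()})
```

With these inputs Africa accounts for roughly 63% of the projected increase, consistent with the statement that about two-thirds of the growth to 2050 will take place there.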

The table below breaks out the UN's projected annual population growth rates (in percent) by region:

| Region | 2020–25 | 2045–50 | 2095–2100 |
|---|---|---|---|
| Africa | 2.4 | 1.8 | 0.6 |
| Asia | 0.8 | 0.1 | −0.4 |
| Europe | 0.0 | −0.3 | −0.1 |
| Latin America & the Caribbean | 0.8 | 0.2 | −0.5 |
| Northern America | 0.6 | 0.3 | 0.2 |
| Oceania | 1.2 | 0.8 | 0.4 |
| World | 1.0 | 0.5 | 0.0 |

The UN projects that between 2020 and 2100 there will be declines in population growth in all six regions; that by 2100 three of them will be undergoing population decline, and the world will have reached zero population growth.

Most populous nations by 2050 and 2100

The UN Population Division has calculated the future population of the world's countries, based on current demographic trends. Current (2020) world population is 7.8 billion. The 2019 report projects world population in 2050 to be 9.7 billion people, and possibly as high as 11 billion by the next century, with the following estimates for the top 14 countries in 2020, 2050, and 2100:

| Country | Population 2020 (millions) | Population 2050 (millions) | Population 2100 (millions) | Rank 2020 | Rank 2050 | Rank 2100 |
|---|---|---|---|---|---|---|
| China | 1,439 | 1,402 | 1,065 | 1 | 2 | 2 |
| India | 1,380 | 1,639 | 1,447 | 2 | 1 | 1 |
| United States | 331 | 379 | 434 | 3 | 4 | 4 |
| Indonesia | 273 | 331 | 321 | 4 | 6 | 7 |
| Pakistan | 221 | 338 | 403 | 5 | 5 | 5 |
| Brazil | 212 | 229 | 181 | 6 | 7 | 12 |
| Nigeria | 206 | 401 | 733 | 7 | 3 | 3 |
| Bangladesh | 165 | 192 | 151 | 8 | 10 | 14 |
| Russia | 146 | 136 | 126 | 9 | 14 | 19 |
| Mexico | 129 | 155 | 141 | 10 | 12 | 17 |
| Japan | 126 | 106 | 75 | 11 | 17 | 36 |
| Ethiopia | 115 | 205 | 294 | 12 | 8 | 8 |
| Philippines | 110 | 144 | 146 | 13 | 13 | 15 |
| Egypt | 102 | 160 | 225 | 14 | 11 | 10 |
| Democratic Republic of the Congo | 90 | 194 | 362 | 16 | 9 | 6 |
| Tanzania | 60 | 135 | 286 | 24 | 15 | 9 |
| Niger | 24 | 66 | 165 | 56 | 30 | 13 |
| Angola | 33 | 77 | 188 | 44 | 24 | 11 |
| World | 7,795 | 9,735 | 10,875 | | | |

From 2017 to 2050, nine countries are expected to account for half of the world's projected population increase: India, Nigeria, the Democratic Republic of the Congo, Pakistan, Ethiopia, Tanzania, the United States, Uganda, and Indonesia, listed in order of the expected size of their contribution to that growth.