| Program overview | |
|---|---|
| Country | United States |
| Organization | NASA |
| Purpose | Crewed orbital flight |
| Status | Completed |
| Program history | |
| Cost | US$196 billion (2011) |
| Duration | 1972–1986, 1989–2011 |
| First flight | |
| First crewed flight | |
| Last flight | |
| Successes | 133 |
| Failures | 2 (STS-51-L and STS-107) |
| Partial failures | 1 (STS-83) |
| Launch site(s) | |
| Vehicle information | |
| Crewed vehicle(s) | Space Shuttle orbiter |
| Launch vehicle(s) | Space Shuttle |

The Space Shuttle program was the fourth human spaceflight program carried out by the U.S. National Aeronautics and Space Administration (NASA), which accomplished routine transportation for Earth-to-orbit crew and cargo from 1981 to 2011. Its official name, Space Transportation System (STS), was taken from a 1969 plan for a system of reusable spacecraft of which it was the only item funded for development. It flew 135 missions and carried 355 astronauts from 16 countries, many on multiple trips.

The Space Shuttle—composed of an orbiter launched with two reusable solid rocket boosters and a disposable external fuel tank—carried up to eight astronauts and up to 50,000 lb (23,000 kg) of payload into low Earth orbit (LEO). When its mission was complete, the orbiter would reenter the Earth's atmosphere and land like a glider at either the Kennedy Space Center or Edwards Air Force Base.

The Shuttle is the only winged crewed spacecraft to have achieved orbit and landing, and the first reusable crewed space vehicle that made multiple flights into orbit.[a] Its missions involved carrying large payloads to various orbits, including the International Space Station (ISS), providing crew rotation for the space station, and performing service missions on the Hubble Space Telescope. The orbiter also recovered satellites and other payloads (e.g., from the ISS) from orbit and returned them to Earth, though its use in this capacity was rare. Each vehicle was designed with a projected lifespan of 100 launches, or 10 years' operational life. The program was originally sold on the promise of more than 150 launches over a 15-year operational span, with a launch per month expected at the program's peak, but extensive delays in the development of the International Space Station meant that such peak demand for frequent flights never materialized.

Background

Various shuttle concepts had been explored since the late 1960s. The program formally commenced in 1972, becoming the sole focus of NASA's human spaceflight operations after the Apollo, Skylab, and Apollo–Soyuz programs in 1975. The Shuttle was originally conceived of and presented to the public in 1972 as a 'Space Truck' which would, among other things, be used to build a United States space station in low Earth orbit during the 1980s and then be replaced by a new vehicle by the early 1990s. The stalled plans for a U.S. space station evolved into the International Space Station and were formally initiated in 1983 by President Ronald Reagan, but the ISS suffered from long delays, design changes and cost over-runs and forced the service life of the Space Shuttle to be extended several times until 2011 when it was finally retired—serving twice as long as it was originally designed to do. In 2004, according to President George W. Bush's Vision for Space Exploration, use of the Space Shuttle was to be focused almost exclusively on completing assembly of the ISS, which was far behind schedule at that point.

The first experimental orbiter, Enterprise, was a high-altitude glider launched from the back of a specially modified Boeing 747 and used only for the initial Approach and Landing Tests (ALT). Enterprise's first test flight was on February 18, 1977, only five years after the Shuttle program was formally initiated. These tests led to the launch of the first spaceworthy shuttle, Columbia, on April 12, 1981, on STS-1. The Space Shuttle program finished with its last mission, STS-135 flown by Atlantis, in July 2011, retiring the final Shuttle in the fleet. The Space Shuttle program formally ended on August 31, 2011.

Conception and development

NASA began studies of Space Shuttle designs as early as October 1968, before the Apollo 11 Moon landing in 1969. The early studies were denoted "Phase A"; the more detailed and specific "Phase B" studies followed in June 1970. The primary intended use of the Space Shuttle was supporting the future space station, ferrying a minimum crew of four and about 20,000 pounds (9,100 kg) of cargo, with rapid turnaround between flights.

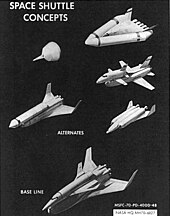

Two designs emerged as front-runners. One was designed by engineers at the Manned Spaceflight Center and championed especially by George Mueller. This was a generally complex two-stage system with delta-winged spacecraft. A simpler alternative was the DC-3, designed by Maxime Faget, who had designed the Mercury capsule among other vehicles. Numerous proposals from a variety of commercial companies were also submitted but generally fell by the wayside as each NASA lab pushed for its own version.

All of this was taking place in the midst of other NASA teams proposing a wide variety of post-Apollo missions, a number of which would cost as much as Apollo or more. As each of these projects fought for funding, the NASA budget was at the same time being severely constrained. Three were eventually presented to Vice President Agnew in 1969. The shuttle project rose to the top, largely due to tireless campaigning by its supporters. By 1970 the shuttle had been selected as the one major project for the short-term post-Apollo time frame.

When funding for the program came into question, there were concerns that the project might be canceled. This led to an effort to interest the US Air Force in using the shuttle for their missions as well. The Air Force was mildly interested but demanded a much larger vehicle, far larger than the original concepts, which NASA accepted since it was also beneficial to their own plans. To lower the development costs of the resulting designs, boosters were added, a throw-away fuel tank was adopted, and many other changes were made that greatly lowered the reusability and greatly added to the vehicle and operational costs. With the Air Force's assistance, the system emerged in its operational form.

Program history

All Space Shuttle missions were launched from the Kennedy Space Center (KSC) in Florida. Some civilian and military circumpolar space shuttle missions were planned for Vandenberg AFB in California. However, the use of Vandenberg AFB for space shuttle missions was canceled after the Challenger disaster in 1986. The weather criteria used for launch included, but were not limited to: precipitation, temperatures, cloud cover, lightning forecast, wind, and humidity. The Shuttle was not launched under conditions where it could have been struck by lightning.

The first fully functional orbiter was Columbia (designated OV-102), built in Palmdale, California. It was delivered to Kennedy Space Center (KSC) on March 25, 1979, and was first launched on April 12, 1981—the 20th anniversary of Yuri Gagarin's space flight—with a crew of two.

Challenger (OV-099) was delivered to KSC in July 1982, Discovery (OV-103) in November 1983, Atlantis (OV-104) in April 1985, and Endeavour (OV-105) in May 1991. Challenger was originally built and used as a Structural Test Article (STA-099), but was converted to a complete orbiter when this was found to be less expensive than converting Enterprise from its Approach and Landing Test configuration into a spaceworthy vehicle.

On April 24, 1990, Discovery carried the Hubble Space Telescope into space during STS-31.

In the course of 135 missions flown, two orbiters (Columbia and Challenger) suffered catastrophic accidents, with the loss of all crew members, totaling 14 astronauts.

Both accidents led to national-level inquiries and detailed analyses of their causes, and each was followed by a significant pause during which changes were made before the Shuttles returned to flight. After the Challenger disaster in January 1986, 32 months passed before STS-26 launched on September 29, 1988. After the Columbia disaster in February 2003, the program stood down for more than two years before returning to flight in July 2005 with the STS-114 mission.

The longest Shuttle mission was STS-80 lasting 17 days, 15 hours. The final flight of the Space Shuttle program was STS-135 on July 8, 2011.

Since the Shuttle's retirement in 2011, many of its original duties have been performed by an assortment of government and private vessels. The European Automated Transfer Vehicle (ATV) supplied the ISS between 2008 and 2015. Classified military missions are being flown by the US Air Force's uncrewed spaceplane, the X-37B. By 2012, cargo to the International Space Station was already being delivered commercially under NASA's Commercial Resupply Services by SpaceX's partially reusable Dragon spacecraft, followed by Orbital Sciences' Cygnus spacecraft in late 2013. Crew service to the ISS is currently provided by the Russian Soyuz and, since 2020, the SpaceX Dragon 2 crew capsule, launched on the company's reusable Falcon 9 rocket as part of NASA's Commercial Crew Development program. Boeing is also developing its Starliner capsule for ISS crew service, but the capsule has been delayed since its December 2019 uncrewed test flight was unsuccessful. For missions beyond low Earth orbit, NASA is building the Space Launch System and the Orion spacecraft, part of the Artemis program.


Accomplishments

Space Shuttle missions have included:

- Spacelab missions, including:
  - Science
  - Astronomy
  - Crystal growth
  - Space physics
- Construction of the International Space Station (ISS)
- Crew rotation and servicing of Mir and the International Space Station (ISS)
- Servicing missions, such as to repair the Hubble Space Telescope (HST) and orbiting satellites
- Human experiments in low Earth orbit (LEO)
- Carried to low Earth orbit (LEO):
  - The Hubble Space Telescope (HST)
  - Components of the International Space Station (ISS)
  - Supplies in Spacehab modules or Multi-Purpose Logistics Modules
  - The Long Duration Exposure Facility
  - The Upper Atmosphere Research Satellite
  - The Compton Gamma Ray Observatory
  - The Earth Radiation Budget Satellite
  - The Mir Shuttle Docking Node
- Carried satellites with a booster, such as the Payload Assist Module (PAM-D) or the Inertial Upper Stage (IUS), to the point where the booster sends the satellite to:
  - A higher Earth orbit; these have included:
    - Chandra X-ray Observatory
    - The first six TDRS satellites
    - Two DSCS-III (Defense Satellite Communications System) communications satellites in one mission
    - A Defense Support Program satellite
  - An interplanetary mission; these have included:

- U.S. Shuttle Columbia landing at the end of STS-73, 1995
- Space art for the Spacelab 2 mission, showing some of the various experiments in the payload bay. Spacelab was a major European contribution to the Space Shuttle program
- European astronauts prepare for their Spacelab mission, 1984.
- Spacelab hardware included a pressurized lab, but also other equipment allowing the Orbiter to serve as a crewed space observatory (Astro-2 mission, 1995, shown)
- Astronauts Thomas D. Akers and Kathryn C. Thornton install corrective optics on the Hubble Space Telescope during STS-61.

Budget

Early during development of the Space Shuttle, NASA had estimated that the program would cost $7.45 billion ($43 billion in 2011 dollars, adjusting for inflation) in development/non-recurring costs, and $9.3 million ($54 million in 2011 dollars) per flight. Early estimates for the cost to deliver payload to low Earth orbit were as low as $118 per pound ($260/kg) of payload ($635/lb or $1,400/kg in 2011 dollars), based on marginal or incremental launch costs, and assuming a 65,000-pound (30,000 kg) payload capacity and 50 launches per year. A more realistic projection of 12 flights per year for the 15-year service life combined with the initial development costs would have resulted in a total cost projection for the program of roughly $54 billion (in 2011 dollars).
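The per-pound and per-kilogram figures in these early estimates are related by a fixed conversion factor, so they can be cross-checked directly. The following is a minimal sketch using only the numbers quoted above; the function and constant names are illustrative and are not taken from any NASA source.

```python
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound in kilograms


def usd_per_lb_to_usd_per_kg(usd_per_lb: float) -> float:
    """Convert a launch price in dollars per pound to dollars per kilogram."""
    return usd_per_lb / LB_TO_KG


def lb_to_kg(pounds: float) -> float:
    """Convert a mass in pounds to kilograms."""
    return pounds * LB_TO_KG


# Figures quoted in the text (assumed here to be rounded values).
early_estimate = usd_per_lb_to_usd_per_kg(118)     # then-year dollars
adjusted_estimate = usd_per_lb_to_usd_per_kg(635)  # 2011 dollars
assumed_payload_kg = lb_to_kg(65_000)

print(f"${early_estimate:,.0f}/kg")
print(f"${adjusted_estimate:,.0f}/kg")
print(f"{assumed_payload_kg:,.0f} kg")
```

The results, about $260/kg, $1,400/kg, and 29,500 kg, agree with the rounded values given in the text.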

The total cost of the actual 30-year service life of the Shuttle program through 2011, adjusted for inflation, was $196 billion. The exact breakdown into non-recurring and recurring costs is not available, but, according to NASA, the average cost to launch a Space Shuttle as of 2011 was about $450 million per mission.

NASA's budget for 2005 allocated 30%, or $5 billion, to space shuttle operations; this was decreased in 2006 to a request of $4.3 billion. Non-launch costs account for a significant part of the program budget: for example, during fiscal years 2004 to 2006, NASA spent around $13 billion on the Space Shuttle program, even though the fleet was grounded in the aftermath of the Columbia disaster and there were a total of three launches during this period of time. In fiscal year 2009, NASA budget allocated $2.98 billion for 5 launches to the program, including $490 million for "program integration", $1.03 billion for "flight and ground operations", and $1.46 billion for "flight hardware" (which includes maintenance of orbiters, engines, and the external tank between flights.)
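The fiscal year 2009 breakdown can be checked by summing its three line items. The sketch below is illustrative only: the dictionary keys reuse the quoted budget labels, the amounts are the rounded figures from the paragraph above, and the per-launch average is a simple division rather than an official NASA metric.

```python
# FY2009 Space Shuttle budget line items, in millions of US dollars
# (rounded figures as quoted in the text).
FY2009_LINE_ITEMS_MUSD = {
    "program integration": 490,
    "flight and ground operations": 1030,
    "flight hardware": 1460,
}
FY2009_LAUNCHES = 5  # launches covered by the FY2009 allocation, per the text

total_musd = sum(FY2009_LINE_ITEMS_MUSD.values())
avg_per_launch_musd = total_musd / FY2009_LAUNCHES

print(f"Total: ${total_musd / 1000:.2f} billion")
for name, amount in FY2009_LINE_ITEMS_MUSD.items():
    # Share of the year's Shuttle budget taken by each line item.
    print(f"  {name}: {100 * amount / total_musd:.0f}% of total")
print(f"Average per launch: ${avg_per_launch_musd:.0f} million")
```

The three items sum to the quoted $2.98 billion, which works out to roughly $600 million per launch for that year.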

Per-launch costs can be measured by dividing the total cost over the life of the program (including buildings, facilities, training, salaries, etc.) by the number of launches. With 135 missions and a total cost of US$192 billion (in 2010 dollars), this gives approximately $1.4 billion per launch over the life of the Shuttle program. A 2017 study found that carrying one kilogram of cargo to the ISS on the Shuttle cost $272,000 in 2017 dollars, twice the cost of Cygnus and three times that of Dragon.
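The lifetime-average calculation described above is a single division. The sketch below reproduces it from the totals quoted in this section; the function and variable names are illustrative, and because the two totals are expressed in different dollar-years the results should be read as rough figures.

```python
def cost_per_launch(total_cost_usd: float, launches: int) -> float:
    """Spread the lifetime program cost evenly over every launch."""
    if launches <= 0:
        raise ValueError("launches must be positive")
    return total_cost_usd / launches


MISSIONS = 135
TOTAL_2010_USD = 192e9     # lifetime cost in 2010 dollars, as quoted
TOTAL_2011_USD = 196e9     # lifetime cost in 2011 dollars, as quoted
AVG_LAUNCH_2011_USD = 450e6  # NASA's average cost to launch, as quoted

per_launch_2010 = cost_per_launch(TOTAL_2010_USD, MISSIONS)
per_launch_2011 = cost_per_launch(TOTAL_2011_USD, MISSIONS)

print(f"2010 dollars: ${per_launch_2010 / 1e9:.2f} billion per launch")
print(f"2011 dollars: ${per_launch_2011 / 1e9:.2f} billion per launch")
# How much larger the all-in average is than the quoted cost to launch.
print(f"Ratio to quoted launch cost: {per_launch_2011 / AVG_LAUNCH_2011_USD:.1f}x")
```

Both totals give between $1.4 billion and $1.5 billion per launch, roughly three times the quoted average cost to launch a single mission, which illustrates how much of the program's cost was not tied to individual flights.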

During the Space Shuttle program, NASA used a management philosophy known as success-oriented management, which historian Alex Roland described in the aftermath of the Columbia disaster as "hoping for the best". Success-oriented management has since been studied by several analysts.

Accidents

In the course of 135 missions flown, two orbiters were destroyed, with loss of crew totalling 14 astronauts:

- Challenger – lost 73 seconds after liftoff, STS-51-L, January 28, 1986

- Columbia – lost approximately 16 minutes before its expected landing, STS-107, February 1, 2003

There were also one abort-to-orbit and some fatal accidents on the ground during launch preparations.

STS-51-L (Challenger, 1986)

Close-up video footage of Challenger during its final launch on January 28, 1986, clearly shows that the problems began due to an O-ring failure on the right solid rocket booster (SRB). The hot plume of gas leaking from the failed joint caused the collapse of the external tank, which then resulted in the orbiter's disintegration due to high aerodynamic stress. The accident resulted in the loss of all seven astronauts on board. Endeavour (OV-105) was built to replace Challenger (using structural spare parts originally intended for the other orbiters) and delivered in May 1991; it was first launched a year later.

After the loss of Challenger, NASA grounded the Space Shuttle program for over two years, making numerous safety changes recommended by the Rogers Commission Report, which included a redesign of the SRB joint that failed in the Challenger accident. Other safety changes included a new escape system for use when the orbiter was in controlled flight, improved landing gear tires and brakes, and the reintroduction of pressure suits for Shuttle astronauts (these had been discontinued after STS-4; astronauts wore only coveralls and oxygen helmets from that point on until the Challenger accident). The Shuttle program continued in September 1988 with the launch of Discovery on STS-26.

The accidents affected not only the technical design of the orbiter but also NASA as an organization. Some of the recommendations made by the post-Challenger Rogers Commission were:

Recommendation I – The faulty Solid Rocket Motor joint and seal must be changed. This could be a new design eliminating the joint or a redesign of the current joint and seal. ... the Administrator of NASA should request the National Research Council to form an independent Solid Rocket Motor design oversight committee to implement the Commission's design recommendations and oversee the design effort.

Recommendation II – The Shuttle Program Structure should be reviewed. ... NASA should encourage the transition of qualified astronauts into agency management positions.

Recommendation III – NASA and the primary shuttle contractors should review all Criticality 1, 1R, 2, and 2R items and hazard analyses.

Recommendation IV – NASA should establish an Office of Safety, Reliability and Quality Assurance to be headed by an Associate Administrator, reporting directly to the NASA Administrator.

Recommendation VI – NASA must take actions to improve landing safety. The tire, brake and nosewheel system must be improved.

Recommendation VII – Make all efforts to provide a crew escape system for use during controlled gliding flight.

Recommendation VIII – The nation's reliance on the shuttle as its principal space launch capability created a relentless pressure on NASA to increase the flight rate ... NASA must establish a flight rate that is consistent with its resources.

STS-107 (Columbia, 2003)

The Shuttle program operated accident-free for seventeen years and 88 missions after the Challenger disaster, until Columbia broke up on reentry, killing all seven crew members, on February 1, 2003. The ultimate cause of the accident was a piece of foam separating from the external tank moments after liftoff and striking the leading edge of the orbiter's left wing, puncturing one of the reinforced carbon-carbon (RCC) panels that covered the wing edge and protected it during reentry. As Columbia reentered the atmosphere at the end of an otherwise normal mission, hot gas penetrated the wing and destroyed it from the inside out, causing the orbiter to lose control and disintegrate.

After the Columbia disaster, the International Space Station operated on a skeleton crew of two for more than two years and was serviced primarily by Russian spacecraft. While the "Return to Flight" mission STS-114 in 2005 was successful, a similar piece of foam from a different portion of the tank was shed. Although the debris did not strike Discovery, the program was grounded once again for this reason.

The second "Return to Flight" mission, STS-121, launched on July 4, 2006, at 14:37 (EDT). Two previous launch attempts were scrubbed because of lingering thunderstorms and high winds around the launch pad, and the launch took place despite objections from its chief engineer and safety head. A five-inch (13 cm) crack in the foam insulation of the external tank gave cause for concern; however, the Mission Management Team gave the go for launch. This mission increased the ISS crew to three. Discovery touched down successfully on July 17, 2006, at 09:14 (EDT) on Runway 15 at Kennedy Space Center.

Following the success of STS-121, all subsequent missions were completed without major foam problems, and the construction of the ISS was completed (during the STS-118 mission in August 2007, the orbiter was again struck by a foam fragment on liftoff, but this damage was minimal compared to the damage sustained by Columbia).

The Columbia Accident Investigation Board, in its report, noted the reduced risk to the crew when a Shuttle flew to the International Space Station (ISS), as the station could be used as a safe haven for the crew awaiting rescue in the event that damage to the orbiter on ascent made it unsafe for reentry. The board recommended that for the remaining flights, the Shuttle always orbit with the station. Prior to STS-114, NASA Administrator Sean O'Keefe declared that all future flights of the Space Shuttle would go to the ISS, precluding the final Hubble Space Telescope servicing mission, which had been scheduled before the Columbia accident, even though millions of dollars' worth of upgrade equipment for Hubble was ready and waiting in NASA warehouses. Many dissenters, including astronauts, asked NASA management to reconsider allowing the mission, but initially the administrator stood firm. On October 31, 2006, NASA announced approval of the launch of Atlantis for the fifth and final shuttle servicing mission to the Hubble Space Telescope, scheduled for August 28, 2008. However, SM4/STS-125 eventually launched in May 2009.

One impact of Columbia was that future crewed launch vehicles, namely the Ares I, had a special emphasis on crew safety compared to other considerations.

Retirement

The Space Shuttle retirement was announced in January 2004. President George W. Bush announced his Vision for Space Exploration, which called for the retirement of the Space Shuttle once it completed construction of the ISS. To ensure the ISS was properly assembled, the contributing partners determined the need for 16 remaining assembly missions in March 2006. One additional Hubble Space Telescope servicing mission was approved in October 2006. Originally, STS-134 was to be the final Space Shuttle mission. However, the Columbia disaster resulted in additional orbiters being prepared for launch on need in the event of a rescue mission. As Atlantis was prepared for the final launch-on-need mission, the decision was made in September 2010 that it would fly as STS-135 with a four-person crew that could remain at the ISS in the event of an emergency. STS-135 launched on July 8, 2011, and landed at the KSC on July 21, 2011, at 5:57 a.m. EDT (09:57 UTC). From then until the launch of Crew Dragon Demo-2 on May 30, 2020, the US launched its astronauts aboard Russian Soyuz spacecraft.

Following each orbiter's final flight, it was processed to make it safe for display. The OMS and RCS systems used presented the primary dangers due to their toxic hypergolic propellant, and most of their components were permanently removed to prevent any dangerous outgassing. Atlantis is on display at the Kennedy Space Center Visitor Complex, Florida, Discovery is at the Udvar-Hazy Center, Virginia, Endeavour is on display at the California Science Center in Los Angeles, and Enterprise is displayed at the Intrepid Sea-Air-Space Museum in New York. Components from the orbiters were transferred to the US Air Force, ISS program, and Russian and Canadian governments. The engines were removed to be used on the Space Launch System, and spare RS-25 nozzles were attached for display purposes.

Preservation

Out of the five fully functional shuttle orbiters built, three remain. Enterprise, which was used for atmospheric test flights but not for orbital flight, had many parts taken out for use on the other orbiters. It was later visually restored and was on display at the National Air and Space Museum's Steven F. Udvar-Hazy Center until April 19, 2012. Enterprise was moved to New York City in April 2012 to be displayed at the Intrepid Sea, Air & Space Museum, whose Space Shuttle Pavilion opened on July 19, 2012. Discovery replaced Enterprise at the National Air and Space Museum's Steven F. Udvar-Hazy Center. Atlantis formed part of the Space Shuttle Exhibit at the Kennedy Space Center visitor complex and has been on display there since June 29, 2013, following its refurbishment.

On October 14, 2012, Endeavour completed an unprecedented 12 mi (19 km) drive on city streets from Los Angeles International Airport to the California Science Center, where it has been on display in a temporary hangar since late 2012. The transport from the airport took two days and required major street closures, the removal of over 400 city trees, and extensive work to raise power lines, level the street, and temporarily remove street signs, lamp posts, and other obstacles. Hundreds of volunteers, and fire and police personnel, helped with the transport. Large crowds of spectators waited on the streets to see the shuttle as it passed through the city. Endeavour, along with the last flight-qualified external tank (ET-94), is currently on display at the California Science Center's Samuel Oschin Pavilion (in a horizontal orientation) until the completion of the Samuel Oschin Air and Space Center (a planned addition to the California Science Center). Once moved, it will be permanently displayed in launch configuration, complete with genuine solid rocket boosters and external tank.

Crew modules

One area of Space Shuttle applications is an expanded crew. Crews of up to eight have been flown in the Orbiter, but it could have held a crew of at least ten. Various proposals for filling the payload bay with additional passengers were also made as early as 1979. One proposal by Rockwell provided seating for 74 passengers in the Orbiter payload bay, with support for three days in Earth orbit. With a smaller 64-seat orbiter, costs for the late 1980s would be around US$1.5 million per seat per launch. The Rockwell passenger module had two decks, four seats across on top and two on the bottom, including a 25-inch (63.5 cm) wide aisle and extra storage space.

Another design was Space Habitation Design Associates' 1983 proposal for 72 passengers in the Space Shuttle payload bay. Passengers were located in six sections, each with windows and its own loading ramp at launch, and with seats in different configurations for launch and landing. Another proposal was based on the Spacelab habitation modules, which provided 32 seats in the payload bay in addition to those in the cockpit area.

There were some efforts to analyze commercial operation of STS. Using the NASA figure for average cost to launch a Space Shuttle as of 2011 at about $450 million per mission, the cost per seat for the 74-seat module envisioned by Rockwell came to about $6 million, not including the regular crew. Some passenger modules used hardware similar to existing equipment, such as the tunnel, which was also needed for Spacehab and Spacelab.
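The per-seat figure follows from dividing one launch's cost across the passenger seats. The sketch below applies that division to the seat counts of the passenger-module proposals described in this section; the names are illustrative, the regular crew is ignored, and applying the 2011 launch cost to every proposal is an assumption made only for comparison.

```python
def cost_per_seat(launch_cost_usd: float, passenger_seats: int) -> float:
    """Spread one launch's cost evenly over the passenger seats only."""
    if passenger_seats <= 0:
        raise ValueError("passenger_seats must be positive")
    return launch_cost_usd / passenger_seats


LAUNCH_COST_2011_USD = 450e6  # NASA average cost per mission, as quoted

# Seat counts taken from the proposals described in the text.
PROPOSALS = {
    "Rockwell module": 74,
    "Space Habitation Design Associates": 72,
    "Rockwell, smaller orbiter": 64,
    "Spacelab-based module": 32,
}

per_seat = {
    name: cost_per_seat(LAUNCH_COST_2011_USD, seats)
    for name, seats in PROPOSALS.items()
}

for name, cost in per_seat.items():
    print(f"{name}: ${cost / 1e6:.2f} million per seat")
```

For the 74-seat Rockwell module this gives roughly $6 million per seat, and the figure rises as the number of seats falls.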

Successors

During the three decades of operation, various follow-ons and replacements for the STS Space Shuttle were partially developed but not finished.

Examples of possible future space vehicles to supplement or supplant STS:

- Advanced Crewed Earth-to-Orbit Vehicle
- Shuttle II, a Johnson Space Center concept for a follow-on, with two boosters and two tanks mounted on its wings
- National Aero-Space Plane (NASP)
  - Rockwell X-30 (not funded)
- VentureStar, an SSTO spaceplane concept using an aerospike engine
  - Lockheed Martin X-33 (cancelled 2001)
- Ares I (ended with Constellation cancellation)
- Orbital Space Plane Program

One effort in the direction of space transportation was the Reusable Launch Vehicle (RLV) program, initiated in 1994 by NASA. This led to work on the X-33 and X-34 vehicles. NASA spent about US$1 billion on developing the X-33, hoping for it to be in operation by 2005. Another program around the turn of the millennium was the Space Launch Initiative, a next-generation launch initiative.

The Space Launch Initiative program was started in 2001, and in late 2002 it evolved into two programs, the Orbital Space Plane Program and the Next Generation Launch Technology program. OSP was oriented towards providing access to the International Space Station.

Other vehicles that would have taken over some of the Shuttle's responsibilities were the HL-20 Personnel Launch System and the NASA X-38 of the Crew Return Vehicle program, which were primarily for getting people down from the ISS. The X-38 was cancelled in 2002, and the HL-20 was cancelled in 1993. Several other programs in this area existed, such as the Station Crew Return Alternative Module (SCRAM) and the Assured Crew Return Vehicle (ACRV).

According to the 2004 Vision for Space Exploration, the next human NASA program was to be the Constellation program with its Ares I and Ares V launch vehicles and the Orion spacecraft; however, the Constellation program was never fully funded, and in early 2010 the Obama administration asked Congress to instead endorse a plan with heavy reliance on the private sector for delivering cargo and crew to LEO.

The Commercial Orbital Transportation Services (COTS) program began in 2006 with the purpose of creating commercially operated uncrewed cargo vehicles to service the ISS. The first of these vehicles, SpaceX Dragon, became operational in 2012, and the second, Orbital Sciences' Cygnus, did so in 2014.

The Commercial Crew Development (CCDev) program was initiated in 2010 with the purpose of creating commercially operated crewed spacecraft capable of delivering at least four crew members to the ISS, staying docked for 180 days, and then returning them to Earth. These spacecraft, such as SpaceX's Dragon 2 and Boeing's CST-100 Starliner, were expected to become operational around 2020. On the Crew Dragon Demo-2 mission, SpaceX's Dragon 2 sent astronauts to the ISS, restoring America's human launch capability. The first operational SpaceX mission launched on November 15, 2020, at 7:27:17 p.m. ET, carrying four astronauts to the ISS.

Although the Constellation program was canceled, it has been replaced with a very similar Artemis program. The Orion spacecraft has been left virtually unchanged from its previous design. The planned Ares V rocket has been replaced with the smaller Space Launch System (SLS), which is planned to launch both Orion and other necessary hardware. Exploration Flight Test-1 (EFT-1), an uncrewed test flight of the Orion spacecraft, launched on December 5, 2014, on a Delta IV Heavy rocket.

Artemis 1 was the first flight of the SLS and was launched as a test of the completed Orion and SLS system. During the mission, an uncrewed Orion capsule spent 10 days in a 57,000-kilometer (31,000-nautical-mile) distant retrograde orbit around the Moon before returning to Earth. Artemis 2, the first crewed mission of the program, is planned to launch four astronauts in 2024 on a free-return flyby of the Moon at a distance of 8,520 kilometers (4,600 nautical miles). After Artemis 2, the Power and Propulsion Element of the Lunar Gateway and three components of an expendable lunar lander are planned to be delivered on multiple launches from commercial launch service providers. Artemis 3 is planned to launch in 2025 aboard an SLS Block 1 rocket and will use the minimalist Gateway and expendable lander to achieve the first crewed lunar landing of the program. The flight is planned to touch down in the lunar south pole region, with two astronauts staying there for about one week.

Gallery

- Linear aerospike engine for the cancelled X-33
- The Dragon spacecraft, one of the Space Shuttle's several successors, is seen here on its way to deliver cargo to the ISS
- NASA's Orion Spacecraft for the Artemis 1 mission seen in Plum Brook on December 1, 2019
- The Core Stage for the Space Launch System rocket for Artemis I
- The Space Launch System Core Stage rolling out of the Michoud Facility for shipping to Stennis
- The Boeing CST-100 Starliner spacecraft in the process of docking to the International Space Station
- The SpaceX Crew Dragon in the process of docking to the International Space Station

Assets and transition plan

The Space Shuttle program occupied over 654 facilities, used over 1.2 million line items of equipment, and employed over 5,000 people. The total value of equipment was over $12 billion. Shuttle-related facilities represented over a quarter of NASA's inventory. There were over 1,200 active suppliers to the program throughout the United States. NASA's transition plan had the program operating through 2010 with a transition and retirement phase lasting through 2015. During this time, the Ares I and Orion as well as the Altair Lunar Lander were to be under development, although these programs have since been canceled.

Since the 2010s, NASA's two major human spaceflight programs have been the Commercial Crew Program and the Artemis program. Some former Shuttle facilities have been put to new use: Kennedy Space Center Launch Complex 39A, for example, is used to launch Falcon Heavy and Falcon 9.

Criticism

The partial reusability of the Space Shuttle was one of the primary design requirements during its initial development. The technical decisions that dictated the orbiter's return and re-use reduced the per-launch payload capability. The original intention was to compensate for this lower payload with lower per-launch costs and a high launch frequency. However, the actual costs of a Space Shuttle launch were higher than initially predicted, and the Space Shuttle never flew the 24 missions per year that NASA had initially predicted.

The Space Shuttle was originally intended as a launch vehicle to deploy satellites, and this was its primary use on the missions prior to the Challenger disaster. NASA's pricing, which was below cost, was lower than that of expendable launch vehicles; the intention was that the high volume of Space Shuttle missions would compensate for early financial losses. The improvement of expendable launch vehicles and the transition away from commercial payloads on the Space Shuttle resulted in expendable launch vehicles becoming the primary deployment option for satellites. A key customer for the Space Shuttle was the National Reconnaissance Office (NRO), which is responsible for spy satellites. The existence of the NRO's connection was classified through 1993, and secret considerations of NRO payload requirements led to a lack of transparency in the program. The proposed Shuttle-Centaur program, cancelled in the wake of the Challenger disaster, would have pushed the spacecraft beyond its operational capacity.

The fatal Challenger and Columbia disasters demonstrated the safety risks of the Space Shuttle that could result in the loss of the crew. The spaceplane design of the orbiter limited the abort options: every abort scenario required either the controlled flight of the orbiter to a runway or the individual egress of each crew member, in contrast to the abort escape options on the Apollo and Soyuz space capsules. Early safety analyses advertised by NASA engineers and management predicted the chance of a catastrophic failure resulting in the death of the crew as ranging from 1 in 100 launches to as rare as 1 in 100,000. Following the loss of two Space Shuttle missions, the risks for the initial missions were reevaluated, and the chance of a catastrophic loss of the vehicle and crew was found to be as high as 1 in 9. NASA management was criticized afterwards for accepting increased risk to the crew in exchange for higher mission rates. Both the Challenger and Columbia reports explained that NASA culture had failed to keep the crew safe by not objectively evaluating the potential risks of the missions.

Support vehicles

Many other vehicles were used in support of the Space Shuttle program, mainly terrestrial transportation vehicles.

- The crawler-transporter carried the mobile launcher platform and the Space Shuttle from the Vehicle Assembly Building (VAB) to Launch Complex 39, originally built for Project Apollo.

- The Shuttle Carrier Aircraft (SCA) were two modified Boeing 747s. Either could fly an orbiter from alternative landing sites back to the Kennedy Space Center. These aircraft were retired to the Joe Davies Heritage Airpark at the Armstrong Flight Research Center and Space Center Houston.

- A 36-wheeled transport trailer, the Orbiter Transfer System, was originally built for the U.S. Air Force's launch facility at Vandenberg Air Force Base in California (since converted for Delta IV rockets). There it was intended to transport the orbiter from the landing facility to the launch pad, which allowed both "stacking" and launch without a separate VAB-style building and crawler-transporter roadway. Prior to the closing of the Vandenberg facility, orbiters were transported from the Orbiter Processing Facility (OPF) to the VAB on their undercarriages, which were raised only when the orbiter was being lifted for attachment to the SRB/ET stack. The trailer allowed the orbiter to be transported from the OPF to either the SCA "Mate-Demate" stand or the VAB without placing any additional stress on the undercarriage.

- The Crew Transport Vehicle (CTV), a modified airport jet bridge, was used to help astronauts egress from the orbiter after landing. Upon entering the CTV, astronauts could take off their launch and reentry suits, then proceed to chairs and beds for medical checks before being transported back to the crew quarters in the Operations and Checkout Building. Originally built for Project Apollo.

- The Astrovan was used to transport astronauts from the crew quarters in the Operations and Checkout Building to the launch pad on launch day. It was also used to transport astronauts back again from the Crew Transport Vehicle at the Shuttle Landing Facility.

- The three locomotives serving the NASA Railroad, used to transport segments of the Space Shuttle Solid Rocket Boosters, were determined to be no longer needed for day-to-day operation at the Kennedy Space Center. Locomotive No. 2 was sent to the Gold Coast Railroad Museum in 2014. In April 2015, locomotive No. 1 was sent to Natchitoches Parish Port and No. 3 was sent to the Madison Railroad.
