| Water vapor (H2O) | |
|---|---|
| Liquid state | Water |
| Solid state | Ice |
| Properties[1] | |
| Molecular formula | H2O |
| Molar mass | 18.01528(33) g/mol |
| Melting point | 0.00 °C (273.15 K)[2] |
| Boiling point | 99.98 °C (373.13 K)[2] |
| Specific gas constant | 461.5 J/(kg·K) |
| Heat of vaporization | 2.27 MJ/kg |
| Heat capacity at 300 K | 1.864 kJ/(kg·K)[3] |

Invisible water vapor condenses to form visible clouds of liquid rain droplets.

Water vapor, water vapour or aqueous vapor is the gaseous phase of water. It is one state of water within the hydrosphere. Water vapor can be produced from the evaporation or boiling of liquid water or from the sublimation of ice. Unlike other forms of water, water vapor is invisible.[4] Under typical atmospheric conditions, water vapor is continuously generated by evaporation and removed by condensation. It is less dense than air and triggers convection currents that can lead to clouds.

Being a component of Earth's hydrosphere and hydrologic cycle, it is particularly abundant in Earth's atmosphere where it is also a potent greenhouse gas along with other gases such as carbon dioxide and methane. Use of water vapor, as steam, has been important to humans for cooking and as a major component in energy production and transport systems since the industrial revolution.

Water vapor is a relatively common atmospheric constituent, present even in the solar atmosphere as well as in the atmosphere of every planet in the Solar System and of many astronomical objects, including natural satellites, comets and even large asteroids. Likewise, the detection of extrasolar water vapor would indicate a similar distribution in other planetary systems. Water vapor is significant in that it can be indirect evidence supporting the presence of extraterrestrial liquid water in the case of some planetary-mass objects.

Properties

Evaporation

Whenever a water molecule leaves a surface and diffuses into a surrounding gas, it is said to have evaporated. Each individual water molecule which transitions between a more associated (liquid) and a less associated (vapor/gas) state does so through the absorption or release of kinetic energy. The aggregate measurement of this kinetic energy transfer is defined as thermal energy and occurs only when there is a difference in the temperature of the water molecules. Liquid water that becomes water vapor takes a parcel of heat with it, in a process called evaporative cooling.[5] The amount of water vapor in the air determines how frequently molecules will return to the surface. When net evaporation occurs, the body of water undergoes a net cooling directly related to the loss of water.

In the US, the National Weather Service measures the actual rate of evaporation from a standardized "pan" open water surface outdoors, at various locations nationwide. Others do likewise around the world. The US data is collected and compiled into an annual evaporation map.[6] The measurements range from under 30 to over 120 inches (about 0.76 to 3.05 m) per year. Formulas can be used for calculating the rate of evaporation from a water surface such as a swimming pool.[7][8] In some countries, the evaporation rate far exceeds the precipitation rate.
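The net cooling that accompanies evaporation follows from a simple energy balance: each kilogram that evaporates carries away its latent heat of vaporization. The sketch below assumes an insulated body of water (no heat exchange with the surroundings), a constant specific heat of about 4186 J/(kg·K), and the 2.27 MJ/kg from the property table, which applies at the boiling point; near room temperature the latent heat is somewhat larger (roughly 2.4 MJ/kg), so the result slightly underestimates the cooling there. The function name is illustrative.

```python
# Latent heat of vaporization from the property table, in J/kg.
# ASSUMPTION: treated as constant; the tabulated value applies at the boiling
# point, and near room temperature the true value is somewhat larger.
LATENT_HEAT_VAPORIZATION = 2.27e6

# ASSUMPTION: specific heat capacity of liquid water, J/(kg·K), treated as constant.
SPECIFIC_HEAT_LIQUID = 4186.0


def evaporative_temperature_drop(total_mass_kg: float, evaporated_kg: float) -> float:
    """Temperature drop (K) of the liquid left behind after `evaporated_kg` evaporates.

    Idealized energy balance: all latent heat is drawn from the remaining
    water and no heat is exchanged with the surroundings.
    """
    if total_mass_kg <= 0 or not 0 <= evaporated_kg < total_mass_kg:
        raise ValueError("need total_mass_kg > 0 and 0 <= evaporated_kg < total_mass_kg")
    heat_removed_j = evaporated_kg * LATENT_HEAT_VAPORIZATION
    remaining_kg = total_mass_kg - evaporated_kg
    return heat_removed_j / (remaining_kg * SPECIFIC_HEAT_LIQUID)


# Evaporating 1 kg out of 100 kg of water cools the remaining 99 kg by about 5.5 K.
print(round(evaporative_temperature_drop(100.0, 1.0), 2))
```

Losing just 1% of the mass to evaporation is enough to cool the rest by several kelvin, which is why evaporation dominates the heat budget of exposed water surfaces.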

Evaporative cooling is restricted by atmospheric conditions. Humidity is the amount of water vapor in the air. The vapor content of air is measured with devices known as hygrometers. The measurements are usually expressed as specific humidity or percent relative humidity. The temperatures of the atmosphere and the water surface determine the equilibrium vapor pressure; 100% relative humidity occurs when the partial pressure of water vapor is equal to the equilibrium vapor pressure. This condition is often referred to as complete saturation. Humidity ranges from 0 grams per cubic metre in dry air to 30 grams per cubic metre (0.03 ounce per cubic foot) when the vapor is saturated at 30 °C.[9]
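Relative humidity is the ratio of the actual vapor pressure to the saturation (equilibrium) vapor pressure, and absolute humidity follows from the ideal gas law using the specific gas constant of water vapor, 461.5 J/(kg·K), from the property table. A minimal sketch, assuming a Magnus-type approximation with Alduchov–Eskridge coefficients for the saturation vapor pressure (a swapped-in approximation chosen for brevity, not the Goff-Gratch reference formula); the function names are illustrative:

```python
import math

R_V = 461.5  # specific gas constant of water vapor, J/(kg·K), from the property table


def saturation_vapor_pressure_pa(temp_c: float) -> float:
    """Saturation vapor pressure over liquid water, in Pa.

    ASSUMPTION: Magnus-type approximation with Alduchov-Eskridge coefficients.
    """
    return 610.94 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def relative_humidity(vapor_pressure_pa: float, temp_c: float) -> float:
    """Relative humidity in percent: actual / saturation vapor pressure."""
    return 100.0 * vapor_pressure_pa / saturation_vapor_pressure_pa(temp_c)


def absolute_humidity_g_m3(vapor_pressure_pa: float, temp_c: float) -> float:
    """Grams of water vapor per cubic metre, from the ideal gas law rho = e / (R_v T)."""
    return 1000.0 * vapor_pressure_pa / (R_V * (temp_c + 273.15))


# Saturated air at 30 °C: relative humidity 100 %, absolute humidity about 30 g/m³.
e_sat = saturation_vapor_pressure_pa(30.0)
print(round(relative_humidity(e_sat, 30.0)), round(absolute_humidity_g_m3(e_sat, 30.0), 1))
```

For saturated air at 30 °C this gives about 30 g/m³, in line with the upper figure quoted above.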

Recovery of meteorites in Antarctica (ANSMET)

Electron micrograph of freeze-etched capillary tissue

Sublimation

Sublimation is when water molecules directly leave the surface of ice without first becoming liquid water. Sublimation accounts for the slow mid-winter disappearance of ice and snow at temperatures too low to cause melting. Antarctica shows this effect to a unique degree because it is by far the continent with the lowest rate of precipitation on Earth. As a result, there are large areas where millennial layers of snow have sublimed, leaving behind whatever non-volatile materials they had contained. This is extremely valuable to certain scientific disciplines, a dramatic example being the collection of meteorites that are left exposed in unparalleled numbers and excellent states of preservation.

Sublimation is important in the preparation of certain classes of biological specimens for scanning electron microscopy. Typically the specimens are prepared by cryofixation and freeze-fracture, after which the broken surface is freeze-etched, being eroded by exposure to vacuum until it shows the required level of detail. This technique can display protein molecules, organelle structures and lipid bilayers with very low degrees of distortion.

Condensation

Clouds, formed by condensed water vapor

Water vapor will only condense onto another surface when that surface is cooler than the dew point temperature, or when the water vapor equilibrium in air has been exceeded. When water vapor condenses onto a surface, a net warming occurs on that surface, because the water molecule brings heat energy with it. In turn, the temperature of the atmosphere drops slightly.[11] In the atmosphere, condensation produces clouds, fog and precipitation (usually only when facilitated by cloud condensation nuclei). The dew point of an air parcel is the temperature to which it must cool before water vapor in the air begins to condense.

Also, a net condensation of water vapor occurs on surfaces when the temperature of the surface is at or below the dew point temperature of the atmosphere. Deposition is a phase transition separate from condensation which leads to the direct formation of ice from water vapor. Frost and snow are examples of deposition.
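Inverting a saturation-pressure approximation gives the dew point from air temperature and relative humidity, which in turn indicates whether a given surface will collect condensation. A sketch assuming Magnus-type coefficients (17.625 and 243.04 °C), reasonable roughly between −40 and 50 °C over liquid water; it does not distinguish deposition (frost) from condensation below 0 °C, and the function names are hypothetical:

```python
import math

# ASSUMPTION: Magnus-type coefficients (Alduchov-Eskridge), over liquid water.
A = 17.625
B = 243.04  # °C


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Dew point (°C) of air at temp_c with relative humidity rh_percent (Magnus inversion)."""
    if not 0 < rh_percent <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + A * temp_c / (B + temp_c)
    return B * gamma / (A - gamma)


def net_condensation_expected(surface_temp_c: float, air_temp_c: float, rh_percent: float) -> bool:
    """True when the surface is at or below the air's dew point.

    Below 0 °C the vapor may deposit as frost instead; this sketch ignores that distinction.
    """
    return surface_temp_c <= dew_point_c(air_temp_c, rh_percent)


# Air at 25 °C and 60 % relative humidity has a dew point near 16.7 °C,
# so a 10 °C surface (a cold drink can, say) collects condensation.
print(round(dew_point_c(25.0, 60.0), 1))
print(net_condensation_expected(10.0, 25.0, 60.0))
```

At 100% relative humidity the formula returns the air temperature itself, which matches the definition of saturation.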

Chemical reactions

A number of chemical reactions have water as a product. If the reactions take place at temperatures higher than the dew point of the surrounding air, the water will be formed as vapor and increase the local humidity; if below the dew point, local condensation will occur. Typical reactions that result in water formation are the burning of hydrogen or hydrocarbons in air or other oxygen-containing gas mixtures, or reactions with other oxidizers.

In a similar fashion, other chemical or physical reactions can take place in the presence of water vapor, resulting in new chemicals forming, such as rust on iron or steel; in polymerization (certain polyurethane foams and cyanoacrylate glues cure with exposure to atmospheric humidity); or in changes of form, such as where anhydrous chemicals absorb enough vapor to form a crystalline structure or alter an existing one, sometimes resulting in characteristic color changes that can be used for measurement.
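For complete combustion of a hydrocarbon C_xH_y, the stoichiometry C_xH_y + (x + y/4) O2 → x CO2 + (y/2) H2O fixes how much water vapor is released per kilogram of fuel. A minimal sketch, assuming complete combustion and rounded standard atomic weights; hydrogen gas is the special case x = 0, y = 2. The function name is illustrative.

```python
# Standard atomic weights in g/mol, rounded.
M_C, M_H, M_O = 12.011, 1.008, 15.999
M_H2O = 2 * M_H + M_O


def water_from_combustion(carbon_atoms: int, hydrogen_atoms: int, fuel_mass_kg: float) -> float:
    """Mass of water (kg) from complete combustion of fuel_mass_kg of C_xH_y.

    Complete combustion: C_xH_y + (x + y/4) O2 -> x CO2 + (y/2) H2O,
    so each mole of fuel yields y/2 moles of water. Hydrogen gas is x=0, y=2.
    """
    if carbon_atoms < 0 or hydrogen_atoms <= 0:
        raise ValueError("need x >= 0 and y > 0")
    fuel_molar_mass = carbon_atoms * M_C + hydrogen_atoms * M_H  # g/mol
    fuel_moles = fuel_mass_kg * 1000.0 / fuel_molar_mass
    water_moles = fuel_moles * hydrogen_atoms / 2
    return water_moles * M_H2O / 1000.0  # g -> kg


print(round(water_from_combustion(1, 4, 1.0), 2))  # methane, CH4
print(round(water_from_combustion(0, 2, 1.0), 2))  # hydrogen, H2
```

Burning 1 kg of methane yields about 2.25 kg of water, and 1 kg of hydrogen about 8.9 kg, all of it released as vapor when the surroundings are above the dew point.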

Measurement

Measuring the quantity of water vapor in a medium can be done directly or remotely with varying degrees of accuracy. Remote methods such as electromagnetic absorption are possible from satellites above planetary atmospheres. Direct methods may use electronic transducers, moistened thermometers or hygroscopic materials measuring changes in physical properties or dimensions.

| Method | Medium | Temperature range (°C) | Measurement uncertainty | Typical measurement frequency | System cost | Notes |
|---|---|---|---|---|---|---|
| Sling psychrometer | air | −10 to 50 | low to moderate | hourly | low | |
| Satellite-based spectroscopy | air | −80 to 60 | low | | very high | |
| Capacitive sensor | air/gases | −40 to 50 | moderate | 2 to 0.05 Hz | medium | prone to becoming saturated/contaminated over time |
| Warmed capacitive sensor | air/gases | −15 to 50 | moderate to low | 2 to 0.05 Hz (temperature-dependent) | medium to high | prone to becoming saturated/contaminated over time |
| Resistive sensor | air/gases | −10 to 50 | moderate | 60 seconds | medium | prone to contamination |
| Lithium chloride dewcell | air | −30 to 50 | moderate | continuous | medium | see dewcell |
| Cobalt(II) chloride | air/gases | 0 to 50 | high | 5 minutes | very low | often used in humidity indicator cards |
| Absorption spectroscopy | air/gases | | moderate | | high | |
| Aluminum oxide | air/gases | | moderate | | medium | see Moisture analysis |
| Silicon oxide | air/gases | | moderate | | medium | see Moisture analysis |
| Piezoelectric sorption | air/gases | | moderate | | medium | see Moisture analysis |
| Electrolytic | air/gases | | moderate | | medium | see Moisture analysis |
| Hair tension | air | 0 to 40 | high | continuous | low to medium | affected by temperature; adversely affected by prolonged high concentrations |
| Nephelometer | air/other gases | | low | | very high | |
| Goldbeater's skin (cow peritoneum) | air | −20 to 30 | moderate (with corrections) | slow, slower at lower temperatures | low | ref: WMO Guide to Meteorological Instruments and Methods of Observation No. 8, 2006 (pages 1.12–1) |
| Lyman-alpha | | | | high frequency | high | http://amsglossary.allenpress.com/glossary/search?id=lyman-alpha-hygrometer1 requires frequent calibration |
| Gravimetric hygrometer | | | very low | | very high | often called primary source; national independent standards developed in US, UK, EU and Japan |

Impact on air density

Water vapor is lighter or less dense than dry air.[12][13] At equivalent temperatures it is buoyant with respect to dry air: the density of dry air at standard temperature and pressure is 1.29 g/L, whereas water vapor at standard temperature and pressure has the much lower density of 0.804 g/L.

Calculations

Water vapor and dry air density calculations at 0 °C:

- The molar mass of water is 18.02 g/mol, as calculated from the sum of the atomic masses of its constituent atoms.
- The average molar mass of dry air (approx. 78% nitrogen, N2; 21% oxygen, O2; 1% other gases) is 28.96 g/mol.
- Using Avogadro's law and the ideal gas law, one mole of water vapor and one mole of air both occupy a molar volume of 22.414 L at STP. The density (mass/volume) of water vapor is therefore 18.02/22.414 ≈ 0.804 g/L, which is significantly less than that of dry air at 28.96/22.414 ≈ 1.29 g/L at STP. This means water vapor is lighter than air.
- STP conditions imply a temperature of 0 °C, at which the ability of water to become vapor is very restricted. Its concentration in air is very low at 0 °C. The red line on the chart to the right is the maximum concentration of water vapor expected for a given temperature. The water vapor concentration increases significantly as the temperature rises, approaching 100% (steam, pure water vapor) at 100 °C. However, the difference in densities between air and water vapor would still exist.
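The same arithmetic can be written directly with the ideal gas law, ρ = pM/(RT). A sketch assuming ideal-gas behavior, STP taken as 0 °C and 101.325 kPa, and a mean molar mass of 28.96 g/mol for dry air (the N2/O2/argon volume-weighted value). Pure water vapor at STP is hypothetical, since it would condense at that pressure and temperature, but the comparison shows why moist air is lighter than dry air at the same temperature and pressure.

```python
R = 8.314462618        # molar gas constant, J/(mol·K)
P_STP = 101_325.0      # Pa (1 atm)
T_STP = 273.15         # K (0 °C)

M_WATER = 18.015e-3    # kg/mol
M_DRY_AIR = 28.96e-3   # kg/mol, mean molar mass of dry air (assumed value)


def ideal_gas_density(molar_mass_kg: float, pressure_pa: float = P_STP,
                      temp_k: float = T_STP) -> float:
    """Ideal-gas density rho = pM/(RT) in kg/m³, numerically equal to g/L."""
    return pressure_pa * molar_mass_kg / (R * temp_k)


rho_vapor = ideal_gas_density(M_WATER)
rho_air = ideal_gas_density(M_DRY_AIR)
# At equal temperature and pressure the ratio is just M_WATER / M_DRY_AIR.
print(f"water vapor: {rho_vapor:.3f} g/L, dry air: {rho_air:.3f} g/L, "
      f"ratio: {rho_vapor / rho_air:.3f}")
```

Because temperature and pressure cancel in the ratio, water vapor is about 0.62 times as dense as dry air under any shared conditions where both behave as ideal gases.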

At equal temperatures

At the same temperature, a column of dry air will be denser or heavier than a column of air containing any water vapor, because the molar masses of diatomic nitrogen and diatomic oxygen are both greater than the molar mass of water. Thus, any volume of dry air will sink if placed in a larger volume of moist air, and a volume of moist air will rise, or be buoyant, if placed in a larger region of dry air. As the temperature rises, the proportion of water vapor the air can hold increases, and so does its buoyancy. The increase in buoyancy can have a significant atmospheric impact, giving rise to powerful, moisture-rich, upward air currents when the air temperature and sea temperature reach 25 °C or above. This phenomenon provides a significant driving force for cyclonic and anticyclonic weather systems (typhoons and hurricanes).

Respiration and breathing

Water vapor is a by-product of respiration in plants and animals. Its contribution to the pressure increases as its concentration increases. Because the total air pressure stays the same, a larger water vapor partial pressure lowers the partial pressure contribution of the other atmospheric gases (Dalton's law). The presence of water vapor in the air thus dilutes or displaces the other air components as its concentration increases.

This can have an effect on respiration. In very warm air (35 °C) the proportion of water vapor is large enough to give rise to the stuffiness that can be experienced in humid jungle conditions or in poorly ventilated buildings.
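Dalton's law makes the dilution effect concrete: with the total pressure fixed, every pascal of water vapor partial pressure is a pascal taken from the other gases. A sketch assuming an O2 mole fraction of 0.2095 in dry air, sea-level pressure, and a vapor pressure of about 5.6 kPa for roughly saturated air at 35 °C; the function name is hypothetical.

```python
O2_DRY_FRACTION = 0.2095  # mole fraction of O2 in dry air (assumed value)


def oxygen_partial_pressure(total_pressure_pa: float, vapor_pressure_pa: float) -> float:
    """O2 partial pressure (Pa) when water vapor occupies part of a fixed total pressure.

    Dalton's law: partial pressures add up to the total, so the dry-air gases
    share only the pressure that the water vapor leaves over.
    """
    if not 0 <= vapor_pressure_pa <= total_pressure_pa:
        raise ValueError("vapor pressure must lie between 0 and the total pressure")
    return O2_DRY_FRACTION * (total_pressure_pa - vapor_pressure_pa)


p_total = 101_325.0  # sea-level pressure, Pa
dry = oxygen_partial_pressure(p_total, 0.0)
humid = oxygen_partial_pressure(p_total, 5_600.0)  # roughly saturated air at 35 °C
print(f"O2: {dry:.0f} Pa dry, {humid:.0f} Pa humid ({100 * (1 - humid / dry):.1f}% lower)")
```

In this example the oxygen partial pressure falls by about 5.5%, the same fraction of the total pressure that the water vapor occupies.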

Lifting gas

Water vapor has a lower density than air and is therefore buoyant in air, but at ambient temperatures its vapor pressure is lower than the surrounding air pressure. When water vapor is used as a lifting gas by a thermal airship, the water vapor is heated to form steam so that its vapor pressure exceeds the surrounding air pressure, maintaining the shape of a theoretical "steam balloon", which yields approximately 60% the lift of helium and twice that of hot air.[14]

General discussion

The amount of water vapor in an atmosphere is constrained by the restrictions of partial pressures and temperature. Dew point temperature and relative humidity act as guidelines for the behavior of water vapor in the water cycle. Energy input, such as sunlight, can trigger more evaporation on an ocean surface or more sublimation on a chunk of ice on top of a mountain. The balance between condensation and evaporation gives the quantity called vapor partial pressure.

The maximum partial pressure (saturation pressure) of water vapor in air varies with the temperature of the air and water vapor mixture. A variety of empirical formulas exist for this quantity; the most used reference formula is the Goff-Gratch equation for the SVP over liquid water below zero degrees Celsius:

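$$
\log_{10}(p) = -7.90298\left(\frac{373.16}{T}-1\right) + 5.02808\,\log_{10}\left(\frac{373.16}{T}\right) - 1.3816\times10^{-7}\left(10^{11.344\left(1-\frac{T}{373.16}\right)}-1\right) + 8.1328\times10^{-3}\left(10^{-3.49149\left(\frac{373.16}{T}-1\right)}-1\right) + \log_{10}(1013.246)
$$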

- Where T, temperature of the moist air, is given in units of kelvin, and p is given in units of millibars (hectopascals).
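Transcribing the Goff-Gratch liquid-water formula into code is a convenient way to check values. A sketch using the standard published coefficients, with T in kelvin and the result in hectopascals (millibars); the function name is illustrative. At T = 373.16 K every correction term vanishes and the result reduces to 1013.246 hPa, which serves as a sanity check.

```python
import math

T_STEAM = 373.16     # steam-point temperature used by Goff-Gratch, K
P_STEAM = 1013.246   # saturation pressure at the steam point, hPa


def goff_gratch_svp_hpa(temp_k: float) -> float:
    """Saturation vapor pressure over liquid water (hPa) from the Goff-Gratch equation."""
    ratio = T_STEAM / temp_k
    log10_p = (
        -7.90298 * (ratio - 1)
        + 5.02808 * math.log10(ratio)
        - 1.3816e-7 * (10 ** (11.344 * (1 - temp_k / T_STEAM)) - 1)
        + 8.1328e-3 * (10 ** (-3.49149 * (ratio - 1)) - 1)
        + math.log10(P_STEAM)
    )
    return 10 ** log10_p


# Supercooled water at -20 °C, the freezing point, and 30 °C.
for t in (253.15, 273.15, 303.15):
    print(t, round(goff_gratch_svp_hpa(t), 2))
```

At 273.15 K the function returns about 6.1 hPa, and at 303.15 K roughly 42 hPa, rising steeply with temperature as described above.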

When water reaches its boiling temperature, net evaporation always occurs under standard atmospheric conditions, regardless of the relative humidity. This rapid process can release massive amounts of water vapor into a cooler atmosphere.

Exhaled air is almost fully at equilibrium with water vapor at body temperature. In cold air the exhaled vapor quickly condenses, thus showing up as a fog or mist of water droplets and as condensation or frost on surfaces. Forcibly condensing these water droplets from exhaled breath is the basis of exhaled breath condensate, an evolving medical diagnostic test.

Controlling water vapor in air is a key concern in the heating, ventilating, and air-conditioning (HVAC) industry. Thermal comfort depends on the moist air conditions. Refrigeration, which serves non-human purposes such as preserving food, is also affected by water vapor. For example, many food stores, like supermarkets, use open chiller cabinets, or food cases, which can significantly lower the water vapor pressure (lowering humidity). This practice delivers several benefits as well as problems.

In Earth's atmosphere

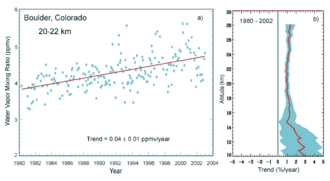

Evidence for increasing amounts of stratospheric water vapor over time in Boulder, Colorado.

Gaseous water represents a small but environmentally significant constituent of the atmosphere. The percentage of water vapor in surface air varies from 0.01% at −42 °C (−44 °F)[16] to 4.24% when the dew point is 30 °C (86 °F).[17] Approximately 99.13% of it is contained in the troposphere. The condensation of water vapor to the liquid or ice phase is responsible for clouds, rain, snow, and other precipitation, all of which count among the most significant elements of what we experience as weather. Less obviously, the latent heat of vaporization, which is released to the atmosphere whenever condensation occurs, is one of the most important terms in the atmospheric energy budget on both local and global scales. For example, latent heat release in atmospheric convection is directly responsible for powering destructive storms such as tropical cyclones and severe thunderstorms. Water vapor is the most potent greenhouse gas owing to the presence of the hydroxyl bond, which strongly absorbs in the infrared region of the light spectrum.

Water in Earth's atmosphere is not merely below its boiling point (100 °C); at altitude it also falls below its freezing point (0 °C). Because water molecules are highly polar and attract one another strongly, these phase-change temperatures lie within the range of atmospheric temperatures. Combined with its abundance, this gives water vapor a relevant dew point and frost point, unlike, e.g., carbon dioxide and methane. Water vapor thus has a scale height a fraction of that of the bulk atmosphere,[18][19][20] as the water condenses and exits, primarily in the troposphere, the lowest layer of the atmosphere.[21] Carbon dioxide (CO2) and methane, being non-polar, do not condense out and rise above the water vapor. The absorption and emission of both compounds contribute to Earth's emission to space, and thus the planetary greenhouse effect.[19][22][23] This greenhouse forcing is directly observable, via distinct spectral features versus water vapor, and observed to be rising with rising CO2 levels.[24] Conversely, adding water vapor at high altitudes has a disproportionate impact, which is why methane (rising, then oxidizing to CO2 and two water molecules) and jet traffic[25][26][27] have disproportionately high warming effects.

It is less clear how cloudiness would respond to a warming climate; depending on the nature of the response, clouds could either further amplify or partly mitigate warming from long-lived greenhouse gases.

In the absence of other greenhouse gases, Earth's water vapor would condense to the surface;[28][29][30] this has likely happened, possibly more than once. Scientists thus distinguish between non-condensable (driving) and condensable (driven) greenhouse gases, i.e., the water vapor feedback.[31][32][33]

Fog and clouds form through condensation around cloud condensation nuclei. In the absence of nuclei, condensation will only occur at much lower temperatures. Under persistent condensation or deposition, cloud droplets or snowflakes form, which precipitate when they reach a critical mass.

The water content of the atmosphere as a whole is constantly depleted by precipitation. At the same time it is constantly replenished by evaporation, most prominently from seas, lakes, rivers, and moist earth. Other sources of atmospheric water include combustion, respiration, volcanic eruptions, the transpiration of plants, and various other biological and geological processes. The mean global content of water vapor in the atmosphere is roughly sufficient to cover the surface of the planet with a layer of liquid water about 25 mm deep. The mean annual precipitation for the planet is about 1 meter, which implies a rapid turnover of water in the air – on average, the residence time of a water molecule in the troposphere is about 9 to 10 days.
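The residence-time figure follows from dividing the amount of water stored in the atmosphere by the rate at which precipitation removes it. A minimal sketch, assuming a steady state in which evaporation balances precipitation; the function name is illustrative.

```python
def residence_time_days(column_water_mm: float, annual_precip_mm: float) -> float:
    """Mean residence time of atmospheric water: stored amount / removal rate, in days.

    ASSUMPTION: steady state, so the removal rate equals the annual precipitation.
    """
    if annual_precip_mm <= 0:
        raise ValueError("annual precipitation must be positive")
    return column_water_mm / annual_precip_mm * 365.25


# About 25 mm of water stored, about 1000 mm of precipitation per year.
print(round(residence_time_days(25.0, 1000.0), 1))
```

With these round numbers the result is roughly 9.1 days, consistent with the 9 to 10 days quoted above.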

Episodes of surface geothermal activity, such as volcanic eruptions and geysers, release variable amounts of water vapor into the atmosphere. Such eruptions may be large in human terms, and major explosive eruptions may inject exceptionally large masses of water exceptionally high into the atmosphere, but as a percentage of total atmospheric water, the role of such processes is minor. The relative concentrations of the various gases emitted by volcanoes vary considerably according to the site and according to the particular event at any one site. However, water vapor is consistently the most common volcanic gas; as a rule, it comprises more than 60% of total emissions during a subaerial eruption.[34]

Atmospheric water vapor content is expressed using various measures. These include vapor pressure, specific humidity, mixing ratio, dew point temperature, and relative humidity.

Radar and satellite imaging

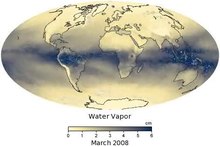

These maps show the average amount of water vapor in a column of atmosphere in a given month.

Generally, radar signals lose strength progressively the farther they travel through the troposphere. Different frequencies attenuate at different rates, such that some components of air are opaque to some frequencies and transparent to others. Radio waves used for broadcasting and other communication experience the same effect.

Water vapor reflects radar to a lesser extent than do water's other two phases. In the form of drops and ice crystals, water acts as a prism, which it does not do as an individual molecule; however, the existence of water vapor in the atmosphere causes the atmosphere to act as a giant prism.[36]

A comparison of GOES-12 satellite images shows the distribution of atmospheric water vapor relative to the oceans, clouds and continents of the Earth. Vapor surrounds the planet but is unevenly distributed. The image loop on the right shows the monthly average of water vapor content, with units given in centimeters: this is the precipitable water, or equivalent amount of water that could be produced if all the water vapor in the column were to condense. The lowest amounts of water vapor (0 centimeters) appear in yellow, and the highest amounts (6 centimeters) appear in dark blue. Areas of missing data appear in shades of gray. The maps are based on data collected by the Moderate Resolution Imaging Spectroradiometer (MODIS) sensor on NASA's Aqua satellite. The most noticeable pattern in the time series is the influence of seasonal temperature changes and incoming sunlight on water vapor. In the tropics, a band of extremely humid air wobbles north and south of the equator as the seasons change. This band of humidity is part of the Intertropical Convergence Zone, where the easterly trade winds from each hemisphere converge and produce near-daily thunderstorms and clouds. Farther from the equator, water vapor concentrations are high in the hemisphere experiencing summer and low in the one experiencing winter. Another pattern that shows up in the time series is that water vapor amounts over land areas decrease more in winter months than adjacent ocean areas do. This is largely because air temperatures over land drop more in the winter than temperatures over the ocean. Water vapor condenses more rapidly in colder air.[37]
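Precipitable water is the vertical integral of specific humidity q over pressure, divided by the density of liquid water and gravitational acceleration: PW = (1/(ρ_w g)) ∫ q dp. A sketch using the trapezoidal rule over a handful of pressure levels; the humidity profile is hypothetical, chosen only to land within the 0 to 6 cm range shown on the maps, and the function name is illustrative.

```python
G = 9.80665          # standard gravity, m/s²
RHO_WATER = 1000.0   # density of liquid water, kg/m³


def precipitable_water_cm(pressures_pa, specific_humidity):
    """Precipitable water (cm): (1 / (rho_w * g)) * integral of q dp, trapezoidal rule.

    pressures_pa and specific_humidity (kg/kg) are paired values at each level.
    """
    if len(pressures_pa) != len(specific_humidity) or len(pressures_pa) < 2:
        raise ValueError("need at least two paired levels")
    integral = 0.0
    for i in range(len(pressures_pa) - 1):
        dp = abs(pressures_pa[i] - pressures_pa[i + 1])
        integral += 0.5 * (specific_humidity[i] + specific_humidity[i + 1]) * dp
    return integral / (RHO_WATER * G) * 100.0  # metres of water -> centimetres


# HYPOTHETICAL humid profile: q at 1000, 850, 700, 500 and 300 hPa.
levels = [100_000.0, 85_000.0, 70_000.0, 50_000.0, 30_000.0]
q = [0.018, 0.013, 0.008, 0.003, 0.0005]
print(round(precipitable_water_cm(levels, q), 1))
```

The hypothetical profile gives about 5.5 cm, near the humid end of the map's color scale; most of the contribution comes from the lowest, warmest layers.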

As water vapor absorbs light in the visible spectral range, its absorption can be used in spectroscopic applications (such as DOAS) to determine the amount of water vapor in the atmosphere. This is done operationally, e.g. from the GOME spectrometers on ERS and MetOp.[38] The weaker water vapor absorption lines in the blue spectral range and further into the UV, up to its dissociation limit around 243 nm, are mostly based on quantum mechanical calculations[39] and are only partly confirmed by experiments.[40]
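The principle behind absorption-based retrievals is the Beer–Lambert law: light crossing a column of absorbers is attenuated as I = I0·exp(−σN), where σ is the absorption cross section and N the column density of molecules along the light path. Operational DOAS is more involved (it separates narrow differential absorption structures from broadband extinction and fits laboratory reference cross sections); the sketch below shows only the single-wavelength inversion, and the cross-section value is a placeholder, not a measured water vapor value.

```python
import math


def slant_column_density(i0: float, i: float, cross_section_cm2: float) -> float:
    """Absorber molecules per cm² along the light path, from Beer-Lambert:
    I = I0 * exp(-sigma * N)  =>  N = ln(I0 / I) / sigma.
    """
    if i0 <= 0 or i <= 0 or cross_section_cm2 <= 0:
        raise ValueError("intensities and cross section must be positive")
    return math.log(i0 / i) / cross_section_cm2


# HYPOTHETICAL numbers: 2 % absorption with a placeholder cross section.
sigma = 1.0e-24  # cm² per molecule, illustrative only
print(f"{slant_column_density(1.0, 0.98, sigma):.3e} molecules/cm²")
```

Weak lines (small σ) require long light paths or very precise intensity ratios, which is one reason the weaker blue and UV absorption lines are harder to confirm experimentally.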

Lightning generation

Water vapor plays a key role in lightning production in the atmosphere. In cloud physics, clouds are usually regarded as the real generators of the static charge found in Earth's atmosphere, but the ability of clouds to hold massive amounts of electrical energy is directly related to the amount of water vapor present in the local system.

The amount of water vapor directly controls the permittivity of the air. During times of low humidity, static discharge is quick and easy. During times of higher humidity, fewer static discharges occur. Permittivity and capacitance work hand in hand to produce the megawatt outputs of lightning.[41]

After a cloud, for instance, has started its way to becoming a lightning generator, atmospheric water vapor acts as a substance (or insulator) that decreases the ability of the cloud to discharge its electrical energy. If the cloud continues to generate and store more static electricity over time, the barrier created by the atmospheric water vapor will ultimately break down from the stored electrical potential energy.[42] This energy is released to a nearby, oppositely charged region in the form of lightning. The strength of each discharge is directly related to the atmospheric permittivity, capacitance, and the source's charge-generating ability.[43]

Extraterrestrial

Water vapor is common in the Solar System and, by extension, other planetary systems. Its signature has been detected in the atmosphere of the Sun, in sunspots. The presence of water vapor has been detected in the atmospheres of all seven extraterrestrial planets in the Solar System, the Earth's Moon,[44] and the moons of other planets,[which?] although typically in only trace amounts.

Artist's illustration of the signatures of water in exoplanet atmospheres detectable by instruments such as the Hubble Space Telescope.[46]

Geological formations such as cryogeysers are thought to exist on the surface of several icy moons, ejecting water vapor due to tidal heating, and may indicate the presence of substantial quantities of subsurface water. Plumes of water vapor have been detected on Jupiter's moon Europa and are similar to plumes of water vapor detected on Saturn's moon Enceladus.[45] Traces of water vapor have also been detected in the stratosphere of Titan.[47] Water vapor has been found to be a major constituent of the atmosphere of the dwarf planet Ceres, the largest object in the asteroid belt.[48] The detection was made using the far-infrared capabilities of the Herschel Space Observatory.[49] The finding was unexpected because comets, not asteroids, are typically considered to "sprout jets and plumes." According to one of the scientists, "The lines are becoming more and more blurred between comets and asteroids."[49] Scientists studying Mars hypothesize that if water moves about the planet, it does so as vapor.[50]

The brilliance of comet tails comes largely from water vapor. On approach to the Sun, the ice many comets carry sublimates to vapor, which reflects light from the Sun. Knowing a comet's distance from the sun, astronomers may deduce a comet's water content from its brilliance.[51]

Water vapor has also been confirmed outside the Solar System. Spectroscopic analysis of HD 209458 b, an extrasolar planet in the constellation Pegasus, provided the first evidence of atmospheric water vapor beyond the Solar System. A star called CW Leonis was found to have a ring of vast quantities of water vapor circling the aging, massive star. A NASA satellite designed to study chemicals in interstellar gas clouds made the discovery with an onboard spectrometer. Most likely, "the water vapor was vaporized from the surfaces of orbiting comets."[52] HAT-P-11b, a relatively small exoplanet, has also been found to possess water vapor.[53]