The CIE 1931 x,y chromaticity space, also showing the chromaticities of black-body light sources of various temperatures (Planckian locus), and lines of constant correlated color temperature.

The color temperature of a light source is the temperature of an ideal black-body radiator that radiates light of a color comparable to that of the light source. Color temperature is a characteristic of visible light that has important applications in lighting, photography, videography, publishing, manufacturing, astrophysics, horticulture, and other fields. In practice, color temperature is meaningful only for light sources that do in fact correspond somewhat closely to the radiation of some black body, i.e., light in a range going from red to orange to yellow to white to bluish white; it does not make sense to speak of the color temperature of, e.g., a green or a purple light. Color temperature is conventionally expressed in kelvins, using the symbol K, a unit of measure for absolute temperature.

Color temperatures over 5000 K are called "cool colors" (bluish), while lower color temperatures (2700–3000 K) are called "warm colors" (yellowish). "Warm" in this context is an analogy to the radiated heat flux of traditional incandescent lighting rather than to temperature. The spectral peak of warm-colored light is closer to infrared, and most natural warm-colored light sources emit significant infrared radiation. The fact that "warm" lighting in this sense actually has a "cooler" color temperature often leads to confusion.

Categorizing different lighting

| Temperature | Source |
|---|---|
| 1700 K | Match flame, low pressure sodium lamps (LPS/SOX) |
| 1850 K | Candle flame, sunset/sunrise |
| 2400 K | Standard incandescent lamps |
| 2550 K | Soft white incandescent lamps |
| 2700 K | "Soft white" compact fluorescent and LED lamps |
| 3000 K | Warm white compact fluorescent and LED lamps |
| 3200 K | Studio lamps, photofloods, etc. |
| 3350 K | Studio "CP" light |
| 5000 K | Horizon daylight |
| 5000 K | Tubular fluorescent lamps or cool white / daylight compact fluorescent lamps (CFL) |
| 5500 – 6000 K | Vertical daylight, electronic flash |
| 6200 K | Xenon short-arc lamp |
| 6500 K | Daylight, overcast |
| 6500 – 9500 K | LCD or CRT screen |
| 15,000 – 27,000 K | Clear blue poleward sky |

These temperatures are merely characteristic; there may be considerable variation.

The black-body radiance (Bλ) vs. wavelength (λ) curves for the visible spectrum. The vertical axes of the Planck's law plots that make up this animation were rescaled proportionally so that the area between each curve and the horizontal axis stays equal over the wavelengths 380–780 nm. K indicates the color temperature in kelvins, and M indicates the color temperature in micro reciprocal degrees.

The color temperature of the electromagnetic radiation emitted from an ideal black body is defined as its surface temperature in kelvins, or alternatively in micro reciprocal degrees (mired). This permits the definition of a standard by which light sources are compared.
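The relationship between the two units can be sketched in a few lines. This is a minimal illustration, assuming the usual definition of the mired as one million divided by the temperature in kelvins; the function names are hypothetical.

```python
def kelvin_to_mired(kelvin: float) -> float:
    """Convert a color temperature in kelvins to micro reciprocal degrees."""
    if kelvin <= 0:
        raise ValueError("temperature must be positive")
    return 1_000_000.0 / kelvin


def mired_to_kelvin(mired: float) -> float:
    """Convert micro reciprocal degrees back to kelvins."""
    if mired <= 0:
        raise ValueError("mired value must be positive")
    return 1_000_000.0 / mired


if __name__ == "__main__":
    # The same 100 K step is a much larger mired step at low temperatures.
    for t in (2700.0, 6500.0):
        step = kelvin_to_mired(t) - kelvin_to_mired(t + 100.0)
        print(f"{t:.0f} K -> {kelvin_to_mired(t):.1f} mired; +100 K is {step:.1f} mired")
```

Because the scale is reciprocal, equal steps in kelvins are not equal steps in mireds, which is why a 100 K change matters far more at 2700 K than at 6500 K.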

To the extent that a hot surface emits thermal radiation but is not an ideal black-body radiator, the color temperature of the light is not the actual temperature of the surface. An incandescent lamp's light is thermal radiation, and the bulb approximates an ideal black-body radiator, so its color temperature is essentially the temperature of the filament. Thus a relatively low temperature emits a dull red and a high temperature emits the almost white of the traditional incandescent light bulb. Metal workers are able to judge the temperature of hot metals by their color, from dark red to orange-white and then white.
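The shift from dull red toward white can be illustrated with Planck's law. The sketch below compares black-body spectral radiance at one red and one blue wavelength; the choice of 650 nm and 450 nm as stand-ins for "red" and "blue" is an assumption made for illustration, and the function names are hypothetical.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Black-body spectral radiance B_lambda in W * sr^-1 * m^-3."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return (2.0 * H * C ** 2) / (wavelength_m ** 5 * math.expm1(x))


def red_to_blue_ratio(temperature_k: float,
                      red_nm: float = 650.0,
                      blue_nm: float = 450.0) -> float:
    """Ratio of radiance at a red wavelength to that at a blue wavelength."""
    red = planck_radiance(red_nm * 1e-9, temperature_k)
    blue = planck_radiance(blue_nm * 1e-9, temperature_k)
    return red / blue


if __name__ == "__main__":
    for t in (1500.0, 3000.0, 6500.0):
        print(f"{t:.0f} K: red/blue radiance ratio = {red_to_blue_ratio(t):.2f}")
```

The ratio falls steadily as the temperature rises: red dominates strongly at low temperatures, and the two wavelengths become comparable only at several thousand kelvins.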

Many other light sources, such as fluorescent lamps and light-emitting diodes (LEDs), emit light primarily by processes other than thermal radiation. This means that the emitted radiation does not follow the form of a black-body spectrum. These sources are assigned what is known as a correlated color temperature (CCT). CCT is the color temperature of a black-body radiator which to human color perception most closely matches the light from the lamp. Because such an approximation is not required for incandescent light, the CCT for an incandescent light is simply its unadjusted temperature, derived from comparison to a black-body radiator.

The Sun

The Sun closely approximates a black-body radiator. The effective temperature, defined by the total radiative power per unit area, is about 5780 K. The color temperature of sunlight above the atmosphere is about 5900 K.

The Sun may appear red, orange, yellow, or white from Earth, depending on its position in the sky. The changing color of the Sun over the course of the day is mainly a result of the scattering of sunlight and is not due to changes in black-body radiation. Rayleigh scattering of sunlight by Earth's atmosphere, which tends to scatter blue light more than red light, causes the blue color of the sky.

Some daylight in the early morning and late afternoon (the golden hours) has a lower ("warmer") color temperature due to increased scattering of shorter-wavelength sunlight by atmospheric particles – an optical phenomenon called the Tyndall effect.

Daylight has a spectrum similar to that of a black body with a correlated color temperature of 6500 K (D65 viewing standard) or 5500 K (daylight-balanced photographic film standard).

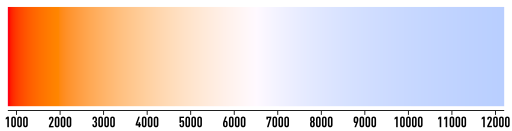

Hues of the Planckian locus on a linear scale

For colors based on black-body theory, blue occurs at higher temperatures, whereas red occurs at lower temperatures. This is the opposite of the cultural associations attributed to colors, in which "red" is "hot", and "blue" is "cold".

Applications

Lighting

Color temperature comparison of common electric lamps

For lighting building interiors, it is often important to take into account the color temperature of illumination. A warmer (i.e., a lower color temperature) light is often used in public areas to promote relaxation, while a cooler (higher color temperature) light is used to enhance concentration, for example in schools and offices.

CCT dimming for LED technology is regarded as a difficult task, since binning, age and temperature drift effects of LEDs change the actual color value output. Feedback loop systems, for example with color sensors, are therefore used to actively monitor and control the color output of multiple color-mixing LEDs.

Aquaculture

In fishkeeping, color temperature has different functions and foci in the various branches.

- In freshwater aquaria, color temperature is generally of concern only for producing a more attractive display. Lights tend to be designed to produce an attractive spectrum, sometimes with secondary attention paid to keeping the plants in the aquaria alive.

- In a saltwater/reef aquarium, color temperature is an essential part of tank health. Within about 400 to 3000 nanometers, light of shorter wavelength can penetrate deeper into water than longer wavelengths, providing essential energy sources to the algae hosted in (and sustaining) coral. This is equivalent to an increase of color temperature with water depth in this spectral range. Because coral typically live in shallow water and receive intense, direct tropical sunlight, the focus was once on simulating this situation with 6500 K lights. In the meantime higher temperature light sources have become more popular, first with 10000 K and more recently 16000 K and 20000 K. Actinic lighting at the violet end of the visible range (420–460 nm) is used to allow night viewing without increasing algae bloom or enhancing photosynthesis, and to make the somewhat fluorescent colors of many corals and fish "pop", creating brighter display tanks.

Digital photography

In digital photography, the term color temperature sometimes refers to remapping of color values to simulate variations in ambient color temperature. Most digital cameras and raw image software provide presets simulating specific ambient values (e.g., sunny, cloudy, tungsten, etc.) while others allow explicit entry of white balance values in kelvins. These settings vary color values along the blue–yellow axis, and some software includes additional controls (sometimes labeled "tint") that add the magenta–green axis. The settings are to some extent arbitrary and a matter of artistic interpretation.

Photographic film

Photographic emulsion film does not respond to lighting color identically to the human retina or visual perception. An object that appears to the observer to be white may turn out to be very blue or orange in a photograph. The color balance may need to be corrected during printing to achieve a neutral color print. The extent of this correction is limited since color film normally has three layers sensitive to different colors, and when used under the "wrong" light source, the layers may not all respond proportionally, giving odd color casts in the shadows, although the mid-tones may have been correctly white-balanced under the enlarger. Light sources with discontinuous spectra, such as fluorescent tubes, cannot be fully corrected in printing either, since one of the layers may barely have recorded an image at all.

Photographic film is made for specific light sources (most commonly daylight film and tungsten film), and, used properly, will create a neutral color print. Matching the sensitivity of the film to the color temperature of the light source is one way to balance color. If tungsten film is used indoors with incandescent lamps, the yellowish-orange light of the tungsten incandescent lamps will appear as white (3200 K) in the photograph. Color negative film is almost always daylight-balanced, since it is assumed that color can be adjusted in printing (with limitations, see above). Color transparency film, being the final artifact in the process, has to be matched to the light source or filters must be used to correct color.

Filters on a camera lens, or color gels over the light source(s), may be used to correct color balance. When shooting with a bluish light (high color temperature) source such as on an overcast day, in the shade, in window light, or if using tungsten film with white or blue light, a yellowish-orange filter will correct this. For shooting with daylight film (calibrated to 5600 K) under warmer (low color temperature) light sources such as sunsets, candlelight or tungsten lighting, a bluish (e.g. #80A) filter may be used. More-subtle filters are needed to correct for the difference between, say, 3200 K and 3400 K tungsten lamps or to correct for the slightly blue cast of some flash tubes, which may be 6000 K.
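The strength of a correction filter is conveniently expressed as a shift on the mired scale. The sketch below assumes the common convention that the shift is the target mired value minus the source mired value, so that bluish filters come out negative and amber filters positive; the function names are hypothetical.

```python
def mired(kelvin: float) -> float:
    """Micro reciprocal degrees for a color temperature in kelvins."""
    return 1_000_000.0 / kelvin


def mired_shift(source_k: float, target_k: float) -> float:
    """Shift needed to make light of source_k match a film balanced for target_k.

    Negative results call for a bluish filter, positive results for an amber one.
    """
    return mired(target_k) - mired(source_k)


if __name__ == "__main__":
    # Tungsten light on daylight film: a strong bluish correction.
    print(f"3200 K -> 5600 K: {mired_shift(3200.0, 5600.0):+.1f} mired")
    # Two kinds of tungsten lamp: a subtle correction.
    print(f"3200 K -> 3400 K: {mired_shift(3200.0, 3400.0):+.1f} mired")
    # Bluish flash tube on daylight film: a mild warming correction.
    print(f"6000 K -> 5600 K: {mired_shift(6000.0, 5600.0):+.1f} mired")
```

The first case needs a shift of roughly −134 mired, while the 3200 K to 3400 K case needs only about −18 mired, which matches the distinction drawn above between strong conversion filters and more subtle ones.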

If there is more than one light source with varied color temperatures, one way to balance the color is to use daylight film and place color-correcting gel filters over each light source.

Photographers sometimes use color temperature meters. These are usually designed to read only two regions along the visible spectrum (red and blue); more expensive ones read three regions (red, green, and blue). However, they are ineffective with sources such as fluorescent or discharge lamps, whose light varies in color and may be harder to correct for. Because this light is often greenish, a magenta filter may correct it. More sophisticated colorimetry tools can be used if such meters are lacking.

Desktop publishing

In the desktop publishing industry, it is important to know a monitor's color temperature. Color matching software, such as Apple's ColorSync for Mac OS, measures a monitor's color temperature and then adjusts its settings accordingly. This enables on-screen color to more closely match printed color. Common monitor color temperatures, along with matching standard illuminants in parentheses, are as follows:

- 5000 K (D50)

- 5500 K (D55)

- 6500 K (D65)

- 7500 K (D75)

- 9300 K

D50 is scientific shorthand for a standard illuminant: the daylight spectrum at a correlated color temperature of 5000 K. Similar definitions exist for D55, D65 and D75. Designations such as D50 are used to help classify color temperatures of light tables and viewing booths. When viewing a color slide at a light table, it is important that the light be balanced properly so that the colors are not shifted towards the red or blue.

Digital cameras, web graphics, DVDs, etc., are normally designed for a 6500 K color temperature. The sRGB standard commonly used for images on the Internet stipulates (among other things) a 6500 K display white point.

TV, video, and digital still cameras

The NTSC and PAL TV norms call for a compliant TV screen to display an electrically black and white signal (minimal color saturation) at a color temperature of 6500 K. On many consumer-grade televisions, there is a very noticeable deviation from this requirement. However, higher-end consumer-grade televisions can have their color temperatures adjusted to 6500 K by using a preprogrammed setting or a custom calibration.

Current versions of ATSC explicitly call for the color temperature data to be included in the data stream, but old versions of ATSC allowed this data to be omitted. In this case, current versions of ATSC cite default colorimetry standards depending on the format. Both of the cited standards specify a 6500 K color temperature.

Most video and digital still cameras can adjust for color temperature by zooming into a white or neutral colored object and setting the manual "white balance" (telling the camera that "this object is white"); the camera then shows true white as white and adjusts all the other colors accordingly. White-balancing is necessary especially when indoors under fluorescent lighting and when moving the camera from one lighting situation to another. Most cameras also have an automatic white balance function that attempts to determine the color of the light and correct accordingly. While these settings were once unreliable, they are much improved in today's digital cameras and produce an accurate white balance in a wide variety of lighting situations.
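The "this object is white" step can be sketched as a per-channel gain computation. This is only an illustration, assuming linear RGB values in the range 0 to 1 and a simple scaling model that leaves the green channel untouched; real cameras use more elaborate processing, and the function names are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def white_balance_gains(neutral_rgb: RGB) -> RGB:
    """Gains that map the measured reference patch to equal R, G and B.

    The green channel is used as the anchor, so its gain is 1.0.
    """
    r, g, b = neutral_rgb
    if min(r, g, b) <= 0.0:
        raise ValueError("reference patch must be non-zero in every channel")
    return (g / r, 1.0, g / b)


def apply_gains(pixel: RGB, gains: RGB, max_value: float = 1.0) -> RGB:
    """Scale each channel by its gain, clipping at max_value."""
    r, g, b = (min(max_value, c * k) for c, k in zip(pixel, gains))
    return (r, g, b)


if __name__ == "__main__":
    # A white card photographed under warm light reads as orange-ish.
    card = (0.8, 0.6, 0.4)
    gains = white_balance_gains(card)
    print("gains:", gains)
    print("balanced card:", apply_gains(card, gains))
```

Balancing off a light-blue reference such as `(0.5, 0.6, 0.7)` yields a red gain above 1 and a blue gain below 1, so every other color in the frame is pushed warmer.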

Artistic application via control of color temperature

The house above appears a light cream during midday, but seems to be bluish white here in the dim light before full sunrise. Note the color temperature of the sunrise in the background.

Video camera operators can white-balance objects that are not white, downplaying the color of the object used for white-balancing. For instance, they can bring more warmth into a picture by white-balancing off something that is light blue, such as faded blue denim; in this way white-balancing can replace a filter or lighting gel when those are not available.

Cinematographers do not “white balance” in the same way as video camera operators; they use techniques such as filters, choice of film stock, pre-flashing, and, after shooting, color grading, both by exposure at the labs and also digitally. Cinematographers also work closely with set designers and lighting crews to achieve the desired color effects.

For artists, most pigments and papers have a cool or warm cast, as the human eye can detect even a minute amount of saturation. Gray mixed with yellow, orange, or red is a “warm gray”. Gray mixed with green, blue, or purple is a “cool gray”. Note that this sense of temperature is the reverse of that of real temperature; bluer is described as “cooler” even though it corresponds to a higher-temperature black body.

| "Warm" gray | "Cool" gray |
|---|---|
| Mixed with 6% yellow. | Mixed with 6% blue. |

Lighting designers sometimes select filters by color temperature, commonly to match light that is theoretically white. Since fixtures using discharge-type lamps produce a light of a considerably higher color temperature than do tungsten lamps, using the two in conjunction could potentially produce a stark contrast, so sometimes fixtures with HID lamps, commonly producing light of 6000–7000 K, are fitted with 3200 K filters to emulate tungsten light. Fixtures with color mixing features or with multiple colors (if including 3200 K) are also capable of producing tungsten-like light. Color temperature may also be a factor when selecting lamps, since each is likely to have a different color temperature.

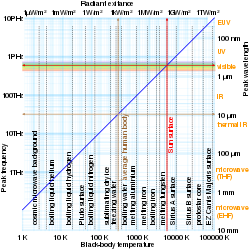

Log-log graphs of peak emission wavelength and radiant exitance vs black-body temperature – red arrows show that 5780 K black bodies have 501 nm peak wavelength and 63.3 MW/m² radiant exitance
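The two values quoted in the caption can be checked with Wien's displacement law and the Stefan–Boltzmann law. The sketch below uses the CODATA values of the two constants; the function names are hypothetical.

```python
WIEN_B = 2.897771955e-3   # Wien displacement constant, m*K
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W * m^-2 * K^-4


def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength of peak black-body emission, in nanometers."""
    return WIEN_B / temperature_k * 1e9


def radiant_exitance(temperature_k: float) -> float:
    """Total power radiated per unit area of a black body, in W/m^2."""
    return SIGMA * temperature_k ** 4


if __name__ == "__main__":
    t = 5780.0
    print(f"peak wavelength: {peak_wavelength_nm(t):.0f} nm")
    print(f"radiant exitance: {radiant_exitance(t) / 1e6:.1f} MW/m^2")
```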

The correlated color temperature (CCT, Tcp) is the temperature of the Planckian radiator whose perceived color most closely resembles that of a given stimulus at the same brightness and under specified viewing conditions

— CIE/IEC 17.4:1987, International Lighting Vocabulary (ISBN 3900734070)

Motivation

Black-body radiators are the reference by which the whiteness of light sources is judged. A black body can be described by its color temperature, whose hues are depicted above. By analogy, nearly Planckian light sources such as certain fluorescent or high-intensity discharge lamps can be judged by their correlated color temperature (CCT), the color temperature of the Planckian radiator that best approximates them. For light source spectra that are not Planckian, color temperature is not a well defined attribute; the concept of correlated color temperature was developed to map such sources as well as possible onto the one-dimensional scale of color temperature, where "as well as possible" is defined in the context of an objective color space.

Background

Judd's (r,g) diagram. The concentric curves indicate the loci of constant purity.

Judd's Maxwell triangle. Planckian locus in gray. Translating from trilinear co-ordinates into Cartesian co-ordinates leads to the next diagram.

Judd's uniform chromaticity space (UCS), with the Planckian locus and the isotherms from 1000 K to 10000 K, perpendicular to the locus. Judd calculated the isotherms in this space before translating them back into the (x,y) chromaticity space, as depicted in the diagram at the top of the article.

Close up of the Planckian locus in the CIE 1960 UCS, with the isotherms in mireds. Note the even spacing of the isotherms when using the reciprocal temperature scale and compare with the similar figure below. The even spacing of the isotherms on the locus implies that the mired scale is a better measure of perceptual color difference than the temperature scale.

The notion of using Planckian radiators as a yardstick against which to judge other light sources is not new. In 1923, writing about "grading of illuminants with reference to quality of color ... the temperature of the source as an index of the quality of color", Priest essentially described CCT as we understand it today, going so far as to use the term "apparent color temperature", and astutely recognized three cases:

- "Those for which the spectral distribution of energy is identical with that given by the Planckian formula."

- "Those for which the spectral distribution of energy is not identical with that given by the Planckian formula, but still is of such a form that the quality of the color evoked is the same as would be evoked by the energy from a Planckian radiator at the given color temperature."

- "Those for which the spectral distribution of energy is such that the color can be matched only approximately by a stimulus of the Planckian form of spectral distribution."

Several important developments occurred in 1931. In chronological order:

- Raymond Davis published a paper on "correlated color temperature" (his term). Referring to the Planckian locus on the r-g diagram, he defined the CCT as the average of the "primary component temperatures" (RGB CCTs), using trilinear coordinates.

- The CIE announced the XYZ color space.

- Deane B. Judd published a paper on the nature of "least perceptible differences" with respect to chromatic stimuli. By empirical means he determined that the difference in sensation, which he termed ΔE for a "discriminatory step between colors ... Empfindung" (German for sensation) was proportional to the distance of the colors on the chromaticity diagram. Referring to the (r,g) chromaticity diagram depicted aside, he hypothesized that

$$K \Delta E = |c_1 - c_2| = \max(|r_1 - r_2|, |g_1 - g_2|).$$

These developments paved the way for the development of new chromaticity spaces that are more suited to estimating correlated color temperatures and chromaticity differences. Bridging the concepts of color difference and color temperature, Priest made the observation that the eye is sensitive to constant differences in "reciprocal" temperature:

A difference of one micro-reciprocal-degree (μrd) is fairly representative of the doubtfully perceptible difference under the most favorable conditions of observation.

Priest proposed to use "the scale of temperature as a scale for arranging the chromaticities of the several illuminants in a serial order". Over the next few years, Judd published three more significant papers:

The first verified the findings of Priest, Davis, and Judd, with a paper on sensitivity to change in color temperature.

The second proposed a new chromaticity space, guided by a principle that has become the holy grail of color spaces: perceptual uniformity (chromaticity distance should be commensurate with perceptual difference). By means of a projective transformation, Judd found a more "uniform chromaticity space" (UCS) in which to find the CCT. Judd determined the "nearest color temperature" by simply finding the point on the Planckian locus nearest to the chromaticity of the stimulus on Maxwell's color triangle, depicted aside. The transformation matrix he used to convert X,Y,Z tristimulus values to R,G,B coordinates was:

$$\begin{bmatrix} R \\ G \\ B \end{bmatrix} = \begin{bmatrix} 3.1956 & 2.4478 & -0.1434 \\ -2.5455 & 7.0492 & 0.9963 \\ 0.0000 & 0.0000 & 1.0000 \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}$$

From this, one can find these chromaticities:

$$u = \frac{0.4661x + 0.1593y}{y - 0.15735x + 0.2424}, \qquad v = \frac{0.6581y}{y - 0.15735x + 0.2424}$$

The third depicted the locus of the isothermal chromaticities on the CIE 1931 x,y chromaticity diagram. Since the isothermal points formed normals on his UCS diagram, transformation back into the xy plane revealed them still to be lines, but no longer perpendicular to the locus.

MacAdam's "uniform chromaticity scale" diagram; a simplification of Judd's UCS.

Calculation

Judd's idea of determining the nearest point to the Planckian locus on a uniform chromaticity space is still current. In 1937, MacAdam suggested a "modified uniform chromaticity scale diagram", based on certain simplifying geometrical considerations:

$$u = \frac{4x}{-2x + 12y + 3}, \qquad v = \frac{6y}{-2x + 12y + 3}$$

This (u,v) chromaticity space became the CIE 1960 color space, which is still used to calculate the CCT (even though MacAdam did not devise it with this purpose in mind). Using other chromaticity spaces, such as u'v', leads to non-standard results that may nevertheless be perceptually meaningful.
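The conversion from CIE 1931 (x, y) to the CIE 1960 (u, v) coordinates is a simple projective transformation. The sketch below uses the standard CIE 1960 UCS formulas and, for comparison, the u'v' variant mentioned above, which differs only by a factor of 1.5 in the second coordinate; the function names are hypothetical.

```python
from typing import Tuple


def xy_to_uv_1960(x: float, y: float) -> Tuple[float, float]:
    """CIE 1931 (x, y) chromaticity to CIE 1960 UCS (u, v)."""
    denom = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denom, 6.0 * y / denom


def uv_1960_to_uv_prime(u: float, v: float) -> Tuple[float, float]:
    """CIE 1960 (u, v) to CIE 1976 (u', v'): u' = u, v' = 1.5 * v."""
    return u, 1.5 * v


if __name__ == "__main__":
    # Chromaticity of illuminant D65 in the CIE 1931 diagram.
    u, v = xy_to_uv_1960(0.31271, 0.32902)
    print(f"D65: u = {u:.4f}, v = {v:.4f}")
    print("u'v':", uv_1960_to_uv_prime(u, v))
```

Because the v axis is stretched in u'v', the nearest point on the Planckian locus can differ between the two spaces, which is one way to see why CCT values computed in u'v' are non-standard.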

Close up of the CIE 1960 UCS. The isotherms are perpendicular to the Planckian locus, and are drawn to indicate the maximum distance from the locus at which the CIE considers the correlated color temperature to be meaningful: $\Delta_{uv} = \pm 0.05$.

The distance from the locus (i.e., degree of departure from a black body) is traditionally indicated in units of $\Delta_{uv}$; positive for points above the locus. This concept of distance has evolved to become Delta E, which continues to be used today.

Robertson's method

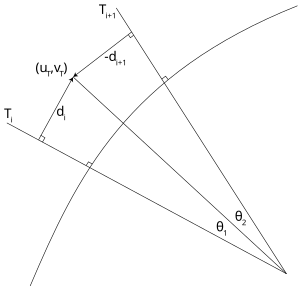

Before the advent of powerful personal computers, it was common to estimate the correlated color temperature by way of interpolation from look-up tables and charts. The most famous such method is Robertson's, who took advantage of the relatively even spacing of the mired scale (see above) to calculate the CCT $T_c$ using linear interpolation of the isotherm's mired values:

Computation of the CCT $T_c$ corresponding to the chromaticity coordinate $(u_T, v_T)$ in the CIE 1960 UCS.

$$\frac{1}{T_c} = \frac{1}{T_i} + \frac{\theta_1}{\theta_1 + \theta_2} \left( \frac{1}{T_{i+1}} - \frac{1}{T_i} \right)$$

where $T_i$ and $T_{i+1}$ are the color temperatures of the look-up isotherms and i is chosen such that $T_i < T_c < T_{i+1}$. (Furthermore, the test chromaticity lies between the only two adjacent lines for which $d_i / d_{i+1} < 0$.)

If the isotherms are tight enough, one can assume $\theta_1 / \theta_2 \approx \sin\theta_1 / \sin\theta_2$, leading to

$$\frac{1}{T_c} = \frac{1}{T_i} + \frac{d_i}{d_i - d_{i+1}} \left( \frac{1}{T_{i+1}} - \frac{1}{T_i} \right)$$

The distance of the test point to the i-th isotherm is given by

$$d_i = \frac{(v_T - v_i) - m_i (u_T - u_i)}{\sqrt{1 + m_i^2}}$$

where $(u_i, v_i)$ is the chromaticity coordinate of the i-th isotherm on the Planckian locus and $m_i$ is the isotherm's slope. Since it is perpendicular to the locus, it follows that $m_i = -1/l_i$, where $l_i$ is the slope of the locus at $(u_i, v_i)$.
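The interpolation step of Robertson's method can be sketched as follows, assuming the usual form in which the signed distance of the test point to each isotherm is computed and the reciprocal temperature is interpolated linearly between the two isotherms that bracket the point. The `Isotherm` type and function names are hypothetical, and the two isotherms in the usage example are made-up toy values chosen to make the arithmetic easy to follow, not entries from Robertson's table.

```python
import math
from typing import NamedTuple, Sequence


class Isotherm(NamedTuple):
    temperature_k: float  # color temperature of the isotherm
    u: float              # u coordinate where it meets the Planckian locus
    v: float              # v coordinate where it meets the Planckian locus
    slope: float          # slope of the isotherm in the (u, v) plane


def signed_distance(u_t: float, v_t: float, iso: Isotherm) -> float:
    """Signed distance from the test point (u_t, v_t) to an isotherm."""
    return ((v_t - iso.v) - iso.slope * (u_t - iso.u)) / math.sqrt(1.0 + iso.slope ** 2)


def robertson_cct(u_t: float, v_t: float, isotherms: Sequence[Isotherm]) -> float:
    """Interpolate reciprocal temperature between the two bracketing isotherms.

    The isotherms are expected in order of increasing temperature.
    """
    for lower, upper in zip(isotherms, isotherms[1:]):
        d_lo = signed_distance(u_t, v_t, lower)
        d_hi = signed_distance(u_t, v_t, upper)
        if d_lo == 0.0:
            return lower.temperature_k
        if d_hi == 0.0:
            return upper.temperature_k
        if d_lo * d_hi < 0.0:  # the test point lies between these two lines
            fraction = d_lo / (d_lo - d_hi)
            reciprocal = (1.0 / lower.temperature_k
                          + fraction * (1.0 / upper.temperature_k
                                        - 1.0 / lower.temperature_k))
            return 1.0 / reciprocal
    raise ValueError("test point is not bracketed by the supplied isotherms")


if __name__ == "__main__":
    # Toy data only: two horizontal "isotherms" at v = 0 and v = 1.
    toy = [Isotherm(4000.0, 0.0, 0.0, 0.0), Isotherm(5000.0, 0.0, 1.0, 0.0)]
    print(f"{robertson_cct(0.3, 0.25, toy):.1f} K")
```

With the toy data, the test point sits a quarter of the way from the 4000 K line to the 5000 K line, so the result is a quarter of the way between them on the reciprocal scale (about 4210.5 K) rather than 4250 K.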

Precautions

Although the CCT can be calculated for any chromaticity coordinate, the result is meaningful only if the light source is nearly white. The CIE recommends that "The concept of correlated color temperature should not be used if the chromaticity of the test source differs more than [$\Delta_{uv} = 5 \times 10^{-2}$] from the Planckian radiator." Beyond a certain value of $\Delta_{uv}$, a chromaticity co-ordinate may be equidistant to two points on the locus, causing ambiguity in the CCT.

Approximation

If a narrow range of color temperatures is considered (those encapsulating daylight being the most practical case), one can approximate the Planckian locus in order to calculate the CCT in terms of chromaticity coordinates. Following Kelly's observation that the isotherms intersect in the purple region near (x = 0.325, y = 0.154), McCamy proposed this cubic approximation:

$$CCT(x, y) = -449 n^3 + 3525 n^2 - 6823.3 n + 5520.33$$

where $n = (x - x_e)/(y - y_e)$ is the inverse slope line, and $(x_e = 0.3320, y_e = 0.1858)$ is the "epicenter", quite close to the intersection point mentioned by Kelly. The maximum absolute error for color temperatures ranging from 2856 K (illuminant A) to 6504 K (D65) is under 2 K.
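McCamy's approximation is short enough to sketch directly. The coefficients below are the commonly quoted ones for his cubic, with the epicenter as given above; the function name is hypothetical, and the illuminant chromaticities in the usage example are the standard CIE 1931 values for illuminant A and D65.

```python
def mccamy_cct(x: float, y: float) -> float:
    """Approximate CCT in kelvins from CIE 1931 (x, y) using McCamy's cubic."""
    x_e, y_e = 0.3320, 0.1858          # the "epicenter"
    n = (x - x_e) / (y - y_e)          # inverse slope of the line to the epicenter
    return -449.0 * n ** 3 + 3525.0 * n ** 2 - 6823.3 * n + 5520.33


if __name__ == "__main__":
    print(f"illuminant A: {mccamy_cct(0.44757, 0.40745):.0f} K")
    print(f"D65:          {mccamy_cct(0.31271, 0.32902):.0f} K")
```

The formula only makes sense for chromaticities near the Planckian locus within the stated temperature range; it returns a number for any input, so callers need to check plausibility themselves.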

A more recent proposal, using exponential terms, considerably extends the applicable range by adding a second epicenter for high color temperatures:

$$CCT(x, y) = A_0 + A_1 \exp(-n/t_1) + A_2 \exp(-n/t_2) + A_3 \exp(-n/t_3)$$

where n is as before and the other constants are defined below:

| | 3–50 kK | 50–800 kK |
|---|---|---|
| $x_e$ | 0.3366 | 0.3356 |
| $y_e$ | 0.1735 | 0.1691 |
| $A_0$ | −949.86315 | 36284.48953 |
| $A_1$ | 6253.80338 | 0.00228 |
| $t_1$ | 0.92159 | 0.07861 |
| $A_2$ | 28.70599 | 5.4535×10⁻³⁶ |
| $t_2$ | 0.20039 | 0.01543 |
| $A_3$ | 0.00004 | |
| $t_3$ | 0.07125 | |

The author suggests that one use the low-temperature equation to determine whether the higher-temperature parameters are needed.

Color rendering index

The CIE color rendering index (CRI) is a method to determine how well a light source's illumination of eight sample patches compares to the illumination provided by a reference source. Cited together, the CRI and CCT give a numerical estimate of what reference (ideal) light source best approximates a particular artificial light, and what the difference is.

Spectral power distribution

Characteristic spectral power distributions (SPDs) for an incandescent lamp (left) and a fluorescent lamp (right). The horizontal axes are wavelengths in nanometers, and the vertical axes show relative intensity in arbitrary units.

Light sources and illuminants may be characterized by their spectral power distribution (SPD). The relative SPD curves provided by many manufacturers may have been produced using increments of 10 nm or more on their spectroradiometer. The result is what would seem to be a smoother ("fuller spectrum") power distribution than the lamp actually has. Because fluorescent lights have a spiky distribution, much finer increments are advisable when measuring them, and this requires more expensive equipment.

Color temperature in astronomy

In astronomy, the color temperature is defined by the local slope of the SPD at a given wavelength, or, in practice, a wavelength range. Given, for example, the color magnitudes B and V, which are calibrated to be equal for an A0V star (e.g. Vega), the stellar color temperature is given by the temperature for which the color index B − V of a black-body radiator fits the stellar one. Besides B − V, other color indices can be used as well. The color temperature (as well as the correlated color temperature defined above) may differ largely from the effective temperature given by the radiative flux of the stellar surface. For example, the color temperature of an A0V star is about 15000 K compared to an effective temperature of about 9500 K.

- Characteristic spectral power distribution of an A0V star (Teff = 9500 K, cf. Vega) compared to black-body spectra. The 15000 K black-body spectrum (dashed line) matches the visible part of the stellar SPD much better than the black body of 9500 K. All spectra are normalized to intersect at 555 nanometers.