From Wikipedia, the free encyclopedia

Heuristics is the process by which humans use mental shortcuts to arrive at decisions. Heuristics are simple strategies that humans, animals, organizations, and even machines use to quickly form judgments, make decisions, and find solutions to complex problems. Often this involves focusing on the most relevant aspects of a problem or situation to formulate a solution. While heuristic processes are used to find the answers and solutions that are most likely to work or be correct, they are not always right or the most accurate.

Judgments and decisions based on heuristics are simply good enough to satisfy a pressing need in situations of uncertainty, where information is incomplete. In that sense they can differ from answers given by logic and probability.

The economist and cognitive psychologist Herbert A. Simon introduced the concept of heuristics in the 1950s, suggesting there were limitations to rational decision making. In the 1970s, psychologists Amos Tversky and Daniel Kahneman added to the field with their research on cognitive bias. It was their work that introduced specific heuristic models, a field which has only expanded since. While some argue that pure laziness is behind the heuristics process, others argue that heuristics can be more accurate than decisions based on every known factor and consequence, a phenomenon known as the less-is-more effect.

History

Herbert A. Simon formulated one of the first models of heuristics, known as satisficing. His more general research program posed the question of how humans make decisions when the conditions for rational choice theory are not met, that is, how people decide under uncertainty. Simon is also known as the father of bounded rationality, which he understood as the study of the match (or mismatch) between heuristics and decision environments. This program was later extended into the study of ecological rationality.

In the early 1970s, psychologists Amos Tversky and Daniel Kahneman took a different approach, linking heuristics to cognitive biases. Their typical experimental setup consisted of a rule of logic or probability, embedded in a verbal description of a judgement problem, and demonstrated that people's intuitive judgement deviated from the rule. The "Linda problem" below gives an example. The deviation is then explained by a heuristic. This research, called the heuristics-and-biases program, challenged the idea that human beings are rational actors and first gained worldwide attention in 1974 with the Science paper "Judgment Under Uncertainty: Heuristics and Biases". Although the originally proposed heuristics have been refined over time, this research program has changed the field by permanently setting the research questions.

The original ideas by Herbert Simon were taken up in the 1990s by Gerd Gigerenzer and others. According to their perspective, the study of heuristics requires formal models that allow predictions of behavior to be made ex ante. Their program has three aspects:

- What are the heuristics humans use? (the descriptive study of the "adaptive toolbox")

- Under what conditions should humans rely on a given heuristic? (the prescriptive study of ecological rationality)

- How to design heuristic decision aids that are easy to understand and execute? (the engineering study of intuitive design)

Among others, this program has shown that heuristics can lead to fast, frugal, and accurate decisions in many real-world situations that are characterized by uncertainty.

These two different research programs have led to two kinds of models of heuristics, formal models and informal ones. Formal models describe the decision process in terms of an algorithm, which allows for mathematical proofs and computer simulations. In contrast, informal models are verbal descriptions.

Formal models of heuristics

Simon's satisficing strategy

Herbert Simon's satisficing heuristic can be used to choose one alternative from a set of alternatives in situations of uncertainty. Here, uncertainty means that the total set of alternatives and their consequences is not known or knowable. For instance, professional real-estate entrepreneurs rely on satisficing to decide in which location to invest to develop new commercial areas: "If I believe I can get at least x return within y years, then I take the option." In general, satisficing is defined as:

- Step 1: Set an aspiration level α

- Step 2: Choose the first alternative that satisfies α

If no alternative is found, then the aspiration level can be adapted.

- Step 3: If after time β no alternative has satisfied α, then decrease α by some amount δ and return to step 1.
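The three steps above can be sketched in Python as follows. This is a minimal illustration, not a standard implementation: it assumes alternatives are inspected one at a time in the order they turn up, and it models the time limit β with a hypothetical `patience` parameter (the number of alternatives inspected before α is lowered by δ). After α is lowered, the search simply continues with the next alternatives.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")


def satisfice(
    alternatives: Iterable[T],
    value: Callable[[T], float],
    aspiration: float,
    patience: int,
    step: float,
) -> Tuple[Optional[T], float]:
    """Return the first alternative that meets the (possibly lowered) aspiration level.

    `patience` is a hypothetical stand-in for the time limit beta, counted in
    inspected alternatives; `step` plays the role of delta.
    Returns (chosen alternative or None, final aspiration level).
    """
    alpha = aspiration  # Step 1: set an aspiration level alpha
    for inspected, option in enumerate(alternatives, start=1):
        # Step 2: choose the first alternative that satisfies alpha
        if value(option) >= alpha:
            return option, alpha
        # Step 3: if "time" beta has passed without success, lower alpha by delta
        if inspected % patience == 0:
            alpha -= step
    return None, alpha


# Hypothetical returns (in percent) of candidate sites, in the order they are found.
sites = [4, 6, 5, 7, 9]
print(satisfice(sites, value=lambda r: r, aspiration=8, patience=2, step=2))  # prints (7, 6)
```

With `step=0` the aspiration level never drops, and the same call returns the first site that reaches 8% (here 9) instead.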

Satisficing has been reported across many domains, for instance as a heuristic car dealers use to price used BMWs.

Elimination by aspects

Unlike satisficing, Amos Tversky's elimination-by-aspects heuristic can be used when all alternatives are simultaneously available. The decision-maker gradually reduces the number of alternatives by eliminating those that do not meet the aspiration level of a specific attribute (or aspect).

When making a series of choices, people tend to experience uncertainty and to choose inconsistently. Elimination by aspects can be used in such situations. In general, the process of elimination by aspects is as follows:

- Step 1: Select one attribute relevant to the decision

- Step 2: Eliminate all alternatives that do not have this attribute

- Step 3: Select another attribute and use it to eliminate further alternatives

- Step 4: Repeat step 3 until only one option is left; that option is chosen

Elimination by aspects does not assume that consumers choose among alternatives so as to maximize utility; on the contrary, it holds that choice is the result of a probabilistic process that gradually eliminates alternatives. A simple example is given by Amos Tversky: when someone wants to purchase a new car, the first aspect they take into account might be automatic transmission, which eliminates all alternatives that lack it. Once those alternatives are eliminated, another aspect is considered, such as a $3,000 price limit. The process of elimination continues until only one alternative remains.

Elimination by aspects is widely used in the early stage of business angels' decision-making process because it provides a fast decision-making tool: alternatives are eliminated as soon as investors find a critical defect in a potential opportunity. Other research has also shown that elimination by aspects is widely used in choosing electricity contracts.

The logic behind these two examples is that elimination by aspects helps people make decisions when facing a series of complicated choices. One may need to choose among many alternatives with only limited intuitive computational capacity and time. As a noncompensatory model, elimination by aspects can help to make such complex decisions, since it is easy to apply and involves nonnumerical computations.
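The steps can be sketched in Python with Tversky's car example. This is a deterministic simplification: in Tversky's model aspects are selected probabilistically (in proportion to their importance), whereas here the caller fixes their order. The car data are hypothetical, and skipping an aspect that would eliminate every remaining alternative is an added assumption so the procedure always returns something.

```python
from typing import Any, Callable, List, Mapping, Sequence

Alternative = Mapping[str, Any]
Aspect = Callable[[Alternative], bool]


def eliminate_by_aspects(
    alternatives: Sequence[Alternative], aspects: Sequence[Aspect]
) -> List[Alternative]:
    """Apply aspects one at a time, keeping only alternatives that pass each."""
    remaining = list(alternatives)
    for aspect in aspects:  # Steps 1 and 3: take the next attribute
        if len(remaining) <= 1:
            break  # Step 4: a single option is left, so the decision is made
        survivors = [alt for alt in remaining if aspect(alt)]  # Step 2: eliminate
        if survivors:  # assumption: ignore an aspect that would eliminate everything
            remaining = survivors
    return remaining


# Hypothetical used cars.
cars = [
    {"name": "A", "automatic": True, "price": 2800},
    {"name": "B", "automatic": False, "price": 2500},
    {"name": "C", "automatic": True, "price": 3500},
]
aspects = [
    lambda car: car["automatic"],      # first aspect: automatic transmission
    lambda car: car["price"] <= 3000,  # second aspect: $3,000 price limit
]
print([car["name"] for car in eliminate_by_aspects(cars, aspects)])  # prints ['A']
```

No scores are added up anywhere: each aspect acts as a pass/fail filter, which is what makes the procedure noncompensatory.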

Recognition heuristic

The recognition heuristic exploits the basic psychological capacity for recognition in order to make inferences about unknown quantities in the world. For two alternatives, the heuristic is:

If one of two alternatives is recognized and the other not, then infer that the recognized alternative has the higher value with respect to the criterion.

For example, in the 2003 Wimbledon tennis tournament, Andy Roddick played Tommy Robredo. If one has heard of Roddick but not of Robredo, the recognition heuristic leads to the prediction that Roddick will win. The recognition heuristic exploits partial ignorance: if one has heard of both players or of neither, a different strategy is needed. Studies of Wimbledon 2003 and 2005 have shown that the recognition heuristic applied by semi-ignorant amateur players predicted the outcomes of all gentlemen's singles matches as well as or better than both the seedings of the Wimbledon experts (who had heard of all players) and the ATP rankings. The recognition heuristic is ecologically rational (that is, it predicts well) when the recognition validity is substantially above chance. In this case, recognition of players' names is highly correlated with their chances of winning.
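A minimal Python sketch of the rule, together with one straightforward way to estimate recognition validity from past outcomes: the share of correct inferences among the pairs in which exactly one alternative is recognized. The function names and the (winner, loser) data format are illustrative assumptions.

```python
from typing import Iterable, Optional, Set, Tuple


def recognition_heuristic(a: str, b: str, recognized: Set[str]) -> Optional[str]:
    """Infer which of two alternatives scores higher; None if the heuristic does not apply."""
    a_known, b_known = a in recognized, b in recognized
    if a_known and not b_known:
        return a
    if b_known and not a_known:
        return b
    return None  # both or neither recognized: another strategy is needed


def recognition_validity(
    matches: Iterable[Tuple[str, str]], recognized: Set[str]
) -> Optional[float]:
    """Share of correct inferences among (winner, loser) pairs where exactly one is recognized."""
    applicable = correct = 0
    for winner, loser in matches:
        if (winner in recognized) != (loser in recognized):
            applicable += 1
            if winner in recognized:
                correct += 1
    return correct / applicable if applicable else None


print(recognition_heuristic("Roddick", "Robredo", recognized={"Roddick"}))  # prints Roddick
```

A validity near 0.5 means recognition is no better than chance for the criterion; the heuristic pays off only when it is well above that.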

Take-the-best

The take-the-best heuristic exploits the basic psychological capacity for retrieving cues from memory in the order of their validity. Based on the cue values, it infers which of two alternatives has a higher value on a criterion.

Unlike the recognition heuristic, it requires that all alternatives are recognized, and it thus can be applied when the recognition heuristic cannot. For binary cues (where 1 indicates the higher criterion value), the heuristic is defined as:

Search rule: Search cues in the order of their validity $v$.

Stopping rule: Stop search on finding the first cue that discriminates between the two alternatives (i.e., one alternative has cue value 1 and the other 0).

Decision rule: Infer that the alternative with the positive cue value (1) has the higher criterion value.
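The three rules translate almost line for line into code. The Python sketch below assumes the cues are already sorted by validity; the city question, its cue names and their order are hypothetical. Because search stops at the first discriminating cue, `has_university` is never consulted in the example.

```python
from typing import Mapping, Optional, Sequence


def take_the_best(
    a: Mapping[str, int], b: Mapping[str, int], cues_by_validity: Sequence[str]
) -> Optional[str]:
    """Return "a" or "b" for the alternative inferred to have the higher criterion value.

    Cue values are binary (1 = indicates a higher criterion value). Returns None
    when no cue discriminates, in which case one would have to guess.
    """
    for cue in cues_by_validity:  # search rule: most valid cue first
        if a[cue] != b[cue]:  # stopping rule: first discriminating cue
            return "a" if a[cue] == 1 else "b"  # decision rule
    return None


# Hypothetical question: which of two cities has more inhabitants?
city_x = {"is_capital": 0, "has_top_team": 1, "has_university": 1}
city_y = {"is_capital": 0, "has_top_team": 0, "has_university": 1}
order = ["is_capital", "has_top_team", "has_university"]  # assumed validity order
print(take_the_best(city_x, city_y, order))  # prints a
```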

The validity $v_i$ of a cue $i$ is defined as the proportion of correct decisions $c_i$ among the $t_i$ cases in which the values of the two alternatives differ on cue $i$:

$$v_i = \frac{c_i}{t_i}$$

The validity of each cue can be estimated from a sample of observations.
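To make the definition concrete, the sketch below estimates $v_i$ from a small sample by checking every pair of objects. The data are hypothetical, and counting pairs with tied criterion values in $t_i$ but not in $c_i$ is an assumption the definition leaves open.

```python
from itertools import combinations
from typing import Mapping, Optional, Sequence


def cue_validity(
    objects: Sequence[Mapping[str, float]], cue: str, criterion: str
) -> Optional[float]:
    """Estimate v_i = c_i / t_i for a binary cue from a sample of objects.

    t_i counts the pairs whose cue values differ; c_i counts those pairs in
    which the object with cue value 1 also has the higher criterion value.
    """
    correct = differing = 0
    for x, y in combinations(objects, 2):
        if x[cue] == y[cue]:
            continue  # the cue does not discriminate for this pair
        differing += 1
        positive, negative = (x, y) if x[cue] > y[cue] else (y, x)
        if positive[criterion] > negative[criterion]:
            correct += 1
    return correct / differing if differing else None


# Hypothetical sample: population (in thousands) and whether the city has a top team.
cities = [
    {"population": 900, "team": 1},
    {"population": 500, "team": 1},
    {"population": 300, "team": 0},
    {"population": 700, "team": 0},
]
print(cue_validity(cities, cue="team", criterion="population"))  # prints 0.75
```

Here four pairs differ on the cue and three of them are decided correctly, so $v = 3/4$.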

Take-the-best has remarkable properties. Compared with complex machine learning models, it has been shown to often predict better than regression models, classification-and-regression trees, neural networks, and support vector machines. [Brighton & Gigerenzer, 2015]

Similarly, psychological studies have shown that in situations where take-the-best is ecologically rational, a large proportion of people tend to rely on it. This includes decision making by airport customs officers, professional burglars, police officers, and student populations. The conditions under which take-the-best is ecologically rational are mostly known.

Take-the-best shows that the earlier view, that ignoring part of the information is generally irrational, is incorrect. Less can be more.

Fast-and-frugal trees

A fast-and-frugal tree is a heuristic that can be used to make classifications, such as whether a patient with severe chest pain is likely to have a heart attack or not, or whether a car approaching a checkpoint is likely to be a terrorist or a civilian.

It is called “fast and frugal” because, just like take-the-best, it allows for quick decisions with only a few cues or attributes. It is called a “tree” because it can be represented like a decision tree in which one asks a sequence of questions. Unlike a full decision tree, however, it is an incomplete tree, which saves time and reduces the danger of overfitting.

Figure 1: Screening for HIV in the general public follows the logic of a fast-and-frugal tree. If the first enzyme immunoassay (ELISA) is negative, the diagnosis is “no HIV.” If not, a second ELISA is performed; if it is negative, the diagnosis is “no HIV.” Otherwise, a Western blot test is performed, which determines the final classification.

Figure 1 shows a fast-and-frugal tree used for screening for HIV (human immunodeficiency virus). Just like take-the-best, the tree has a search rule, stopping rule, and decision rule:

Search rule: Search through cues in a specified order.

Stopping rule: Stop search if an exit is reached.

Decision rule: Classify the person according to the exit (here: No HIV or HIV).

In the HIV tree, an ELISA (enzyme-linked immunosorbent assay) test is conducted first. If the outcome is negative, then the testing procedure stops and the client is informed of the good news, that is, “no HIV.” If, however, the result is positive, a second ELISA test is performed, preferably from a different manufacturer. If the second ELISA is negative, then the procedure stops and the client is informed of having “no HIV.” However, if the result is positive, a final test, the Western blot, is conducted.
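The HIV tree can be written as a short sequence of early exits. In this Python sketch, `run_test` is a hypothetical callback that performs a test only when called and returns `True` for a positive result, so the sketch also shows that later tests are skipped once an exit is reached. Treating a negative Western blot as “no HIV” is a simplification of Figure 1, which says only that the Western blot determines the final classification.

```python
from typing import Callable, Dict, List, Tuple


def hiv_tree(run_test: Callable[[str], bool]) -> str:
    """Classify a client with the fast-and-frugal HIV screening tree."""
    if not run_test("ELISA 1"):
        return "no HIV"  # exit 1: first ELISA negative
    if not run_test("ELISA 2"):
        return "no HIV"  # exit 2: second ELISA negative
    # The final cue has two exits.
    return "HIV" if run_test("Western blot") else "no HIV"  # exits 3 and 4


def simulated_lab(results: Dict[str, bool]) -> Tuple[Callable[[str], bool], List[str]]:
    """Hypothetical lab that records which tests were actually performed."""
    performed: List[str] = []

    def run_test(name: str) -> bool:
        performed.append(name)
        return results[name]

    return run_test, performed


run_test, performed = simulated_lab({"ELISA 1": True, "ELISA 2": False, "Western blot": True})
print(hiv_tree(run_test), performed)  # prints no HIV ['ELISA 1', 'ELISA 2']
```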

In general, for $n$ binary cues, a fast-and-frugal tree has exactly $n + 1$ exits: one for each cue and two for the final cue. A full decision tree, in contrast, requires $2^n$ exits. The order of cues (tests) in a fast-and-frugal tree is determined by the sensitivity and specificity of the cues, or by other considerations such as the costs of the tests. In the case of the HIV tree, the ELISA is ranked first because it produces fewer misses than the Western blot test and is also less expensive. The Western blot test, in contrast, produces fewer false alarms. In a full tree, by comparison, the order of the cues does not matter for the accuracy of the classifications.
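The exit count can be checked by brute force. The Python sketch below uses a hypothetical list-of-nodes representation of a fast-and-frugal tree, in which each node exits on one cue value and the last node exits on both. Enumerating all $2^n$ cue profiles then reaches exactly $n + 1$ distinct exits, whereas a full tree keeps a separate exit for each of the $2^n$ profiles. The HIV tree is encoded with 1 meaning a positive test.

```python
from itertools import product
from typing import Sequence, Tuple

# Each node: (cue value that triggers the exit, classification at that exit).
Node = Tuple[int, str]


def fft_classify(profile: Sequence[int], nodes: Sequence[Node], last_other: str) -> Tuple[str, int]:
    """Return (classification, exit index) for a profile of binary cue values."""
    for i, (exit_on, label) in enumerate(nodes):
        if profile[i] == exit_on:
            return label, i
    # Only reached when the last cue takes its other value: the tree's final exit.
    return last_other, len(nodes)


# HIV tree: exit "no HIV" on a negative ELISA (twice); the Western blot decides.
hiv_nodes = [(0, "no HIV"), (0, "no HIV"), (1, "HIV")]
n = len(hiv_nodes)
exits = {fft_classify(p, hiv_nodes, "no HIV")[1] for p in product((0, 1), repeat=n)}
print(len(exits), "exits for", n, "cues; a full tree needs", 2 ** n)
# prints 4 exits for 3 cues; a full tree needs 8
```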

Fast-and-frugal trees can serve as descriptive or prescriptive models of decision making under uncertainty. For instance, an analysis of court decisions reported that the best model of how London magistrates make bail decisions is a fast-and-frugal tree.

The HIV tree is both a prescriptive model (physicians are taught the procedure) and a descriptive model (most physicians actually follow the procedure).

Informal models of heuristics

In their initial research, Tversky and Kahneman proposed three heuristics: availability, representativeness, and anchoring and adjustment. Subsequent work has identified many more. Heuristics that underlie judgment are called "judgment heuristics". Another type, called "evaluation heuristics", is used to judge the desirability of possible choices.

Availability

In psychology, availability is the ease with which a particular idea can be brought to mind. When people estimate how likely or how frequent an event is on the basis of its availability, they are using the availability heuristic.

When an infrequent event can be brought easily and vividly to mind, this heuristic overestimates its likelihood. For example, people overestimate their likelihood of dying in a dramatic event such as a tornado or terrorism. Dramatic, violent deaths are usually more highly publicised and therefore have a higher availability.

On the other hand, common but mundane events are hard to bring to mind, so their likelihoods tend to be underestimated. These include deaths from suicides, strokes, and diabetes.

This heuristic is one of the reasons why people are more easily swayed by a single, vivid story than by a large body of statistical evidence. It may also play a role in the appeal of lotteries: to someone buying a ticket, the well-publicised, jubilant winners are more available than the millions of people who have won nothing.

When people judge whether more English words begin with T or with K, the availability heuristic gives a quick way to answer the question. Words that begin with T come more readily to mind, and so subjects give a correct answer without counting out large numbers of words. However, this heuristic can also produce errors. When people are asked whether there are more English words with K in the first position or with K in the third position, they use the same process. It is easy to think of words that begin with K, such as kangaroo, kitchen, or kept. It is harder to think of words with K as the third letter, such as lake or acknowledge, although objectively these are three times more common. This leads people to the incorrect conclusion that K is more common at the start of words.

In another experiment, subjects heard the names of many celebrities, roughly equal numbers of whom were male and female. The subjects were then asked whether the list of names included more men or more women. When the men in the list were more famous, a great majority of subjects incorrectly thought there were more of them, and vice versa for women. Tversky and Kahneman's interpretation of these results is that judgments of proportion are based on availability, which is higher for the names of better-known people.

In one experiment conducted before the 1976 U.S. Presidential election, some participants were asked to imagine Gerald Ford winning, while others did the same for a Jimmy Carter victory. Each group subsequently viewed their allocated candidate as significantly more likely to win. The researchers found a similar effect when students imagined a good or a bad season for a college football team. The effect of imagination on subjective likelihood has been replicated by several other researchers.

A concept's availability can be affected by how recently and how frequently it has been brought to mind. In one study, subjects were given partial sentences to complete. The words were selected to activate the concept either of hostility or of kindness: a process known as priming. They then had to interpret the behavior of a man described in a short, ambiguous story. Their interpretation was biased towards the emotion they had been primed with: the more priming, the greater the effect. A greater interval between the initial task and the judgment decreased the effect.

Tversky and Kahneman offered the availability heuristic as an explanation for illusory correlations, in which people wrongly judge two events to be associated with each other. They explained that people judge correlation on the basis of the ease of imagining or recalling the two events together.

Representativeness

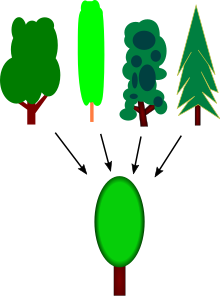

Snap judgement of whether novel object fits an existing category

The representativeness heuristic is seen when people use categories, for example when deciding whether or not a person is a criminal. An individual thing has a high representativeness for a category if it is very similar to a prototype of that category. When people categorise things on the basis of representativeness, they are using the representativeness heuristic. "Representative" is here meant in two different senses: the prototype used for comparison is representative of its category, and representativeness is also a relation between that prototype and the thing being categorised. While it is effective for some problems, this heuristic involves attending to the particular characteristics of the individual, ignoring how common those categories are in the population (called the base rates). Thus, people can overestimate the likelihood that something has a very rare property, or underestimate the likelihood of a very common property. This is called the base rate fallacy. Representativeness explains this and several other ways in which human judgments break the laws of probability.

The representativeness heuristic is also an explanation of how people judge cause and effect: when they make these judgements on the basis of similarity, they are also said to be using the representativeness heuristic. This can lead to a bias, incorrectly finding causal relationships between things that resemble one another and missing them when the cause and effect are very different. Examples of this include both the belief that "emotionally relevant events ought to have emotionally relevant causes", and magical associative thinking.

Representativeness of base rates

A 1973 experiment used a psychological profile of Tom W., a fictional graduate student. One group of subjects had to rate Tom's similarity to a typical student in each of nine academic areas (including Law, Engineering and Library Science). Another group had to rate how likely it is that Tom specialised in each area. If these ratings of likelihood are governed by probability, then they should resemble the base rates, i.e. the proportion of students in each of the nine areas (which had been separately estimated by a third group). If people based their judgments on probability, they would say that Tom is more likely to study Humanities than Library Science, because there are many more Humanities students, and the additional information in the profile is vague and unreliable. Instead, the ratings of likelihood matched the ratings of similarity almost perfectly, both in this study and a similar one where subjects judged the likelihood of a fictional woman taking different careers. This suggests that rather than estimating probability using base rates, subjects had substituted the more accessible attribute of similarity.
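Why base rates matter can be shown with Bayes' rule. In the Python sketch below all numbers are hypothetical, chosen only for illustration: the profile is assumed to be five times as typical of Library Science students as of Humanities students, but Humanities students are assumed to be far more numerous. The posterior is computed over these two fields only.

```python
from typing import Dict, Mapping


def posterior(priors: Mapping[str, float], likelihoods: Mapping[str, float]) -> Dict[str, float]:
    """Bayes' rule: P(field | profile) is proportional to P(field) * P(profile | field)."""
    joint = {field: priors[field] * likelihoods[field] for field in priors}
    total = sum(joint.values())
    return {field: value / total for field, value in joint.items()}


base_rates = {"Humanities": 0.20, "Library Science": 0.03}  # hypothetical base rates
fit = {"Humanities": 0.1, "Library Science": 0.5}  # hypothetical P(profile | field)
result = posterior(base_rates, fit)
print({field: round(p, 2) for field, p in result.items()})
# prints {'Humanities': 0.57, 'Library Science': 0.43}
```

Ranking by similarity alone would put Library Science first; weighting by the base rates reverses the ranking.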

Conjunction fallacy

When people rely on representativeness, they can fall into an error which breaks a fundamental law of probability. Tversky and Kahneman gave subjects a short character sketch of a woman called Linda, describing her as, "31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in anti-nuclear demonstrations". People reading this description then ranked the likelihood of different statements about Linda. Amongst others, these included "Linda is a bank teller" and "Linda is a bank teller and is active in the feminist movement". People showed a strong tendency to rate the latter, more specific statement as more likely, even though a conjunction of the form "Linda is both X and Y" can never be more probable than the more general statement "Linda is X".

The explanation in terms of heuristics is that the judgment was distorted because, for the readers, the character sketch was representative of the sort of person who might be an active feminist but not of someone who works in a bank. A similar exercise concerned Bill, described as "intelligent but unimaginative". A great majority of people reading this character sketch rated "Bill is an accountant who plays jazz for a hobby" as more likely than "Bill plays jazz for a hobby".

Without success, Tversky and Kahneman used what they described as "a series of increasingly desperate manipulations" to get their subjects to recognise the logical error. In one variation, subjects had to choose between a logical explanation of why "Linda is a bank teller" is more likely, and a deliberately illogical argument which said that "Linda is a feminist bank teller" is more likely "because she resembles an active feminist more than she resembles a bank teller". Sixty-five percent of subjects found the illogical argument more convincing.

Other researchers also carried out variations of this study, exploring the possibility that people had misunderstood the question. These variations did not eliminate the error. It has been shown that individuals with high scores on the Cognitive Reflection Test (CRT) are significantly less likely to be subject to the conjunction fallacy.

The error disappears when the question is posed in terms of frequencies. Everyone in these versions of the study recognised that out of 100 people fitting an outline description, the conjunction statement ("She is X and Y") cannot apply to more people than the general statement ("She is X").
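The frequency version makes the logic visible: everyone counted as a feminist bank teller is also counted as a bank teller, so the conjunction can never be the larger group. The Python sketch below checks this on randomly generated groups of 100 hypothetical people; the trait probabilities and their independence are arbitrary assumptions.

```python
import random
from typing import Dict, List, Tuple


def make_people(n: int, seed: int) -> List[Dict[str, bool]]:
    """Generate n hypothetical people with two independent random traits."""
    rng = random.Random(seed)
    return [
        {"bank_teller": rng.random() < 0.1, "feminist": rng.random() < 0.6}
        for _ in range(n)
    ]


def count(people: List[Dict[str, bool]]) -> Tuple[int, int]:
    """Return (number of bank tellers, number of feminist bank tellers)."""
    tellers = sum(1 for p in people if p["bank_teller"])
    feminist_tellers = sum(1 for p in people if p["bank_teller"] and p["feminist"])
    return tellers, feminist_tellers


for seed in range(5):
    tellers, feminist_tellers = count(make_people(100, seed))
    print(f"seed {seed}: {tellers} bank tellers, {feminist_tellers} feminist bank tellers")
```

Changing the probabilities, or making the traits correlated, changes the counts but never their order.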

Ignorance of sample size

Tversky and Kahneman asked subjects to consider a problem about random variation. Assuming for simplicity that exactly half of the babies born in a hospital are male, the proportion will not be exactly half in every time period. On some days, more girls will be born and on others, more boys. The question was: does the likelihood of deviating from exactly half depend on whether there are many or few births per day? It is a well-established consequence of sampling theory that proportions will vary much more from day to day when the typical number of births per day is small. However, people's answers to the problem do not reflect this fact. They typically reply that the number of births in the hospital makes no difference to the likelihood of more than 60% male babies in one day. The explanation in terms of the heuristic is that people consider only how representative the figure of 60% is of the previously given average of 50%.
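The sampling-theory point can be checked exactly with the binomial distribution. The sketch below computes, for a day with a given number of births and a 50% chance of each baby being male, the probability that more than 60% of the babies are boys. The day sizes of 15 and 45 births are assumptions chosen for illustration.

```python
from fractions import Fraction
from math import comb


def p_more_than_60_percent_boys(births: int) -> Fraction:
    """Exact P(boys / births > 0.6) when each birth is male with probability 1/2."""
    # boys / births > 3/5 is equivalent to 5 * boys > 3 * births (integer arithmetic).
    favourable = sum(comb(births, boys) for boys in range(births + 1) if 5 * boys > 3 * births)
    return Fraction(favourable, 2 ** births)


for births in (15, 45):
    print(births, "births per day:", float(p_more_than_60_percent_boys(births)))
```

With 15 births per day, about 15% of days exceed 60% boys; with 45 births the probability is clearly lower, and it keeps shrinking as the daily number of births grows.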

Dilution effect

Richard E. Nisbett and colleagues suggest that representativeness explains the dilution effect, in which irrelevant information weakens the effect of a stereotype.

Subjects in one study were asked whether "Paul" or "Susan" was more likely to be assertive, given no other information than their first names. They rated Paul as more assertive, apparently basing their judgment on a gender stereotype. Another group, told that Paul's and Susan's mothers each commute to work in a bank, did not show this stereotype effect; they rated Paul and Susan as equally assertive. The explanation is that the additional information about Paul and Susan made them less representative of men or women in general, and so the subjects' expectations about men and women had a weaker effect.

In other words, irrelevant, non-diagnostic information about a case can weaken the influence of relevant, diagnostic information on people's judgments.

Misperception of randomness

Representativeness explains systematic errors that people make when judging the probability of random events. For example, in a sequence of coin tosses, each of which comes up heads (H) or tails (T), people reliably tend to judge a clearly patterned sequence such as HHHTTT as less likely than a less patterned sequence such as HTHTTH. These sequences have exactly the same probability, but people tend to see the more clearly patterned sequences as less representative of randomness, and so less likely to result from a random process. Tversky and Kahneman argued that this effect underlies the gambler's fallacy, the tendency to expect outcomes to even out over the short run, like expecting a roulette wheel to come up black because the last several spins came up red.
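The arithmetic behind this is short enough to check directly. Assuming a fair coin and independent tosses, every specific sequence of six tosses has probability $(1/2)^6 = 1/64$, however patterned it looks, and a run of tails does not change the chance of the next toss. The Python sketch below checks both points by enumeration.

```python
from fractions import Fraction
from itertools import product


def sequence_probability(seq: str) -> Fraction:
    """Probability of one specific sequence of fair, independent coin tosses."""
    return Fraction(1, 2) ** len(seq)


# All 2**6 = 64 equally likely sequences of six tosses.
sequences = ["".join(tosses) for tosses in product("HT", repeat=6)]
print(sequence_probability("HHHTTT"), sequence_probability("HTHTTH"))  # prints 1/64 1/64

# Gambler's fallacy check: after five tails, heads is still exactly as likely as tails.
after_five_tails = [s for s in sequences if s.startswith("TTTTT")]
share_heads = Fraction(sum(s.endswith("H") for s in after_five_tails), len(after_five_tails))
print(share_heads)  # prints 1/2
```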

They emphasised that even experts in statistics were susceptible to this illusion: in a 1971 survey of professional psychologists, they found that respondents expected samples to be overly representative of the population they were drawn from. As a result, the psychologists systematically overestimated the statistical power of their tests, and underestimated the sample size needed for a meaningful test of their hypotheses.

Anchoring and adjustment

Anchoring and adjustment is a heuristic used in many situations where people estimate a number. According to Tversky and Kahneman's original description, it involves starting from a readily available number (the "anchor") and shifting either up or down to reach an answer that seems plausible.

In Tversky and Kahneman's experiments, people did not shift far enough away from the anchor. Hence the anchor contaminates the estimate, even if it is clearly irrelevant. In one experiment, subjects watched a number being selected from a spinning "wheel of fortune". They had to say whether a given quantity was larger or smaller than that number. For instance, they might be asked, "Is the percentage of African countries which are members of the United Nations larger or smaller than 65%?" They then tried to guess the true percentage. Their answers correlated well with the arbitrary number they had been given.

Insufficient adjustment from an anchor is not the only explanation for this effect. An alternative theory is that people form their estimates on evidence which is selectively brought to mind by the anchor.

The amount of money people will pay in an auction for a bottle of wine can be influenced by considering an arbitrary two-digit number.

The anchoring effect has been demonstrated by a wide variety of experiments both in laboratories and in the real world. It remains when the subjects are offered money as an incentive to be accurate, or when they are explicitly told not to base their judgment on the anchor. The effect is stronger when people have to make their judgments quickly. Subjects in these experiments lack introspective awareness of the heuristic, denying that the anchor affected their estimates.

Even when the anchor value is obviously random or extreme, it can still contaminate estimates. One experiment asked subjects to estimate the year of Albert Einstein's first visit to the United States. Anchors of 1215 and 1992 contaminated the answers just as much as more sensible anchor years. Other experiments asked subjects if the average temperature in San Francisco is more or less than 558 degrees, or whether there had been more or fewer than 100,025 top ten albums by The Beatles. These deliberately absurd anchors still affected estimates of the true numbers.

Anchoring results in a particularly strong bias when estimates are stated in the form of a confidence interval. An example is where people predict the value of a stock market index on a particular day by defining an upper and lower bound so that they are 98% confident the true value will fall in that range. A reliable finding is that people anchor their upper and lower bounds too close to their best estimate. This leads to an overconfidence effect.

One much-replicated finding is that when people are 98% certain that a number is in a particular range, they are wrong about thirty to forty percent of the time.

Anchoring also causes particular difficulty when many numbers are combined into a composite judgment. Tversky and Kahneman demonstrated this by asking a group of people to rapidly estimate the product 8 x 7 x 6 x 5 x 4 x 3 x 2 x 1. Another group had to estimate the same product in reverse order: 1 x 2 x 3 x 4 x 5 x 6 x 7 x 8. Both groups underestimated the answer by a wide margin, but the latter group's average estimate was significantly smaller. The explanation in terms of anchoring is that people multiply the first few terms of each product and anchor on that figure.
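The arithmetic shows why order matters under the anchoring explanation. Both products equal 8! = 40,320, but the running product after the first few terms, a plausible anchor, is far larger for the descending order. The Python sketch below assumes, for illustration only, that people multiply the first four terms before adjusting.

```python
from itertools import accumulate
from math import prod
from operator import mul

descending = [8, 7, 6, 5, 4, 3, 2, 1]
ascending = descending[::-1]

# Both orders have the same true product.
print(prod(descending), prod(ascending))  # prints 40320 40320

# Running products after each of the first four terms: a plausible "anchor".
print(list(accumulate(descending[:4], mul)))  # prints [8, 56, 336, 1680]
print(list(accumulate(ascending[:4], mul)))   # prints [1, 2, 6, 24]
```

Both hypothetical anchors sit far below 40,320, consistent with both groups underestimating, and the ascending order's anchor is the lower of the two.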

A less abstract task is to estimate the probability that an aircraft will crash, given that there are numerous possible faults each with a likelihood of one in a million. A common finding from studies of these tasks is that people anchor on the small component probabilities and so underestimate the total. A corresponding effect happens when people estimate the probability of multiple events happening in sequence, such as an accumulator bet in horse racing. For this kind of judgment, anchoring on the individual probabilities results in an overestimation of the combined probability.
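Both errors are easy to see once the component probabilities are combined. The Python sketch below assumes the events are independent (a simplification) and uses hypothetical numbers. For disjunctive events such as possible faults, the probability that at least one occurs is $1 - \prod_i (1 - p_i)$, which can be far larger than any single $p_i$; for conjunctive events such as the legs of an accumulator, the probability that all occur is $\prod_i p_i$, which can be far smaller.

```python
from math import prod
from typing import Sequence


def p_any(probabilities: Sequence[float]) -> float:
    """Probability that at least one of several independent events occurs."""
    return 1 - prod(1 - p for p in probabilities)


def p_all(probabilities: Sequence[float]) -> float:
    """Probability that all of several independent events occur."""
    return prod(probabilities)


# Hypothetical aircraft: 1,000 independent faults, each with probability one in a million.
print(p_any([1e-6] * 1000))  # roughly 0.001, about 1,000 times any single fault
# Hypothetical five-leg accumulator bet, each leg with a 60% chance of winning.
print(p_all([0.6] * 5))  # roughly 0.078, far below the 0.6 of each leg
```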

Examples

People's valuation of goods, and the quantities they buy, respond to anchoring effects. In one experiment, people wrote down the last two digits of their social security numbers. They were then asked to consider whether they would pay this number of dollars for items whose value they did not know, such as wine, chocolate, and computer equipment. They then entered an auction to bid for these items. Those with the highest two-digit numbers submitted bids that were many times higher than those with the lowest numbers.

When a stack of soup cans in a supermarket was labelled, "Limit 12 per customer", the label influenced customers to buy more cans. In another experiment, real estate agents appraised the value of houses on the basis of a tour and extensive documentation. Different agents were shown different listing prices, and these affected their valuations. For one house, the appraised value ranged from US$114,204 to $128,754.

Anchoring and adjustment has also been shown to affect grades given to students. In one experiment, 48 teachers were given bundles of student essays, each of which had to be graded and returned. They were also given a fictional list of the students' previous grades. The mean of these grades affected the grades that teachers awarded for the essay.

One study showed that anchoring affected the sentences in a fictional rape trial. The subjects were trial judges with, on average, more than fifteen years of experience. They read documents including witness testimony, expert statements, the relevant penal code, and the final pleas from the prosecution and defence. The two conditions of this experiment differed in just one respect: the prosecutor demanded a 34-month sentence in one condition and 12 months in the other; there was an eight-month difference between the average sentences handed out in these two conditions.

In a similar mock trial, the subjects took the role of jurors in a civil case. They were asked to award damages either "in the range from $15 million to $50 million" or "in the range from $50 million to $150 million". Although the facts of the case were the same each time, jurors given the higher range decided on an award that was about three times higher. This happened even though the subjects were explicitly warned not to treat the requests as evidence.

Assessments can also be influenced by the stimuli provided. In one review, researchers found that when a stimulus is perceived as important, or as carrying "weight" in a situation, people are more likely to judge it to be physically heavier.

Affect heuristic

"Affect", in this context, is a feeling such as fear, pleasure or surprise. It is shorter in duration than a mood, occurring rapidly and involuntarily in response to a stimulus. While reading the words "lung cancer" might generate an affect of dread, the words "mother's love" can create an affect of affection and comfort. When people use affect ("gut responses") to judge benefits or risks, they are using the affect heuristic. The affect heuristic has been used to explain why messages framed to activate emotions are more persuasive than those framed in a purely factual way.

Others

Theories

There are competing theories of human judgment, which differ on whether the use of heuristics is irrational. A cognitive laziness approach argues that heuristics are inevitable shortcuts given the limitations of the human brain. According to the natural assessments approach, some complex calculations are already done rapidly and automatically by the brain, and other judgments make use of these processes rather than calculating from scratch. This has led to a theory called "attribute substitution", which says that people often handle a complicated question by answering a different, related question, without being aware that this is what they are doing.

A third approach argues that heuristics perform just as well as more complicated decision-making procedures, but more quickly and with less information. This perspective emphasises the "fast and frugal" nature of heuristics.

Cognitive laziness

An effort-reduction framework proposed by Anuj K. Shah and Daniel M. Oppenheimer states that people use a variety of techniques to reduce the effort of making decisions.

Attribute substitution

[Image: A visual example of attribute substitution. This illusion works because the 2D size of parts of the scene is judged on the basis of 3D (perspective) size, which is rapidly calculated by the visual system.]

In 2002 Daniel Kahneman and Shane Frederick proposed a process called attribute substitution, which happens without conscious awareness. According to this theory, when somebody makes a judgment (of a target attribute) that is computationally complex, a more easily calculated heuristic attribute is substituted. In effect, a difficult problem is dealt with by answering a simpler one, without the person being aware this is happening. This explains why individuals can be unaware of their own biases, and why biases persist even when the subject is made aware of them. It also explains why human judgments often fail to show regression toward the mean.

This substitution is thought of as taking place in the automatic intuitive judgment system, rather than the more self-aware reflective system. Hence, when someone tries to answer a difficult question, they may actually answer a related but different question, without realizing that a substitution has taken place.

In 1975, psychologist Stanley Smith Stevens proposed that the strength of a stimulus (e.g. the brightness of a light, the severity of a crime) is encoded by brain cells in a way that is independent of modality.

Kahneman and Frederick built on this idea, arguing that the target attribute and heuristic attribute could be very different in nature.

[P]eople are not accustomed to thinking hard, and are often content to trust a plausible judgment that comes to mind.

Daniel Kahneman, American Economic Review 93 (5) December 2003, p. 1450

Kahneman and Frederick propose three conditions for attribute substitution:

- The target attribute is relatively inaccessible. Substitution is not expected to take place in answering factual questions that can be retrieved directly from memory ("What is your birthday?") or about current experience ("Do you feel thirsty now?").
- An associated attribute is highly accessible. This might be because it is evaluated automatically in normal perception or because it has been primed. For example, someone who has been thinking about their love life and is then asked how happy they are might substitute how happy they are with their love life rather than other areas.
- The substitution is not detected and corrected by the reflective system. For example, when asked "A bat and a ball together cost $1.10. The bat costs $1 more than the ball. How much does the ball cost?", many subjects incorrectly answer $0.10. An explanation in terms of attribute substitution is that, rather than working out the answer, subjects split the total of $1.10 into a large amount and a small amount, which is easy to do. Whether they feel that is the right answer will depend on whether they check the calculation with their reflective system.
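Working the bat-and-ball problem through explicitly shows the check that the reflective system would have to carry out. Writing b for the price of the ball in dollars (a symbol introduced here for illustration), the bat costs b + 1.00, so:

```latex
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
```

The ball therefore costs $0.05 and the bat $1.05. The intuitive answer of $0.10 fails the check: the bat would then cost $1.10, and the pair $1.20.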

Kahneman gives an example in which one group of Americans was offered insurance against their own death in a terrorist attack while on a trip to Europe, and another group was offered insurance that would cover death of any kind on the trip. Even though "death of any kind" includes "death in a terrorist attack", the former group was willing to pay more than the latter. Kahneman suggests that the attribute of fear is being substituted for a calculation of the total risks of travel. Fear of terrorism for these subjects was stronger than a general fear of dying on a foreign trip.
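The inconsistency in the insurance example rests on a basic rule of probability: an event can never be more likely than a broader event that includes it. Writing T for death in a terrorist attack on the trip and D for death of any kind on the trip (labels introduced here for illustration), and assuming both policies pay the same benefit B, which the example does not state:

```latex
T \subseteq D \;\Longrightarrow\; P(T) \le P(D) \;\Longrightarrow\; B\,P(T) \le B\,P(D)
```

On this reasoning the broader policy has an expected payout at least as large, and so should be worth at least as much to the buyer.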

Fast and frugal

Gerd Gigerenzer and colleagues have argued that heuristics can be used to make judgments that are accurate rather than biased. According to them, heuristics are "fast and frugal" alternatives to more complicated procedures, giving answers that are just as good.

Consequences

Efficient decision heuristics

Warren Thorngate, a social psychologist, implemented ten simple decision rules or heuristics in a computer program. In a series of randomly generated decision situations, he determined how often each heuristic selected the alternative with the highest expected value, the second highest, and so on down to the lowest. He found that most of the simulated heuristics selected the alternatives with the highest expected value and almost never selected those with the lowest expected value.
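Thorngate's procedure can be sketched as a small Monte Carlo simulation. The Python below is a minimal sketch under stated assumptions: the payoff range, the numbers of alternatives and states, and the four rules (equiprobable, maximin, maximax, and most-likely outcome) are illustrative choices, not a reconstruction of Thorngate's program or of his ten rules. For each random situation it ranks the alternatives by expected value and records which rank each rule's choice falls on.

```python
import random


def random_situation(rng, n_alts, n_states):
    """A hypothetical decision: each alternative pays off differently in each state."""
    weights = [rng.random() for _ in range(n_states)]
    total = sum(weights)
    probs = [w / total for w in weights]  # state probabilities, summing to 1
    payoffs = [[rng.uniform(-10, 10) for _ in range(n_states)] for _ in range(n_alts)]
    return probs, payoffs


def expected_value(probs, row):
    return sum(p * x for p, x in zip(probs, row))


def most_likely_rule(probs, pay):
    # Look only at the single most probable state and pick the best payoff there.
    likely = max(range(len(probs)), key=lambda s: probs[s])
    return max(range(len(pay)), key=lambda i: pay[i][likely])


# Illustrative rules (an assumed subset, not Thorngate's original ten).
# Each takes (probs, payoffs) and returns the index of the chosen alternative.
HEURISTICS = {
    "equiprobable": lambda probs, pay: max(range(len(pay)), key=lambda i: sum(pay[i])),
    "maximin": lambda probs, pay: max(range(len(pay)), key=lambda i: min(pay[i])),
    "maximax": lambda probs, pay: max(range(len(pay)), key=lambda i: max(pay[i])),
    "most_likely": most_likely_rule,
}


def simulate(trials=10_000, n_alts=4, n_states=4, seed=0):
    rng = random.Random(seed)
    # counts[name][r]: how often the rule chose the alternative ranked r by expected value
    counts = {name: [0] * n_alts for name in HEURISTICS}
    for _ in range(trials):
        probs, pay = random_situation(rng, n_alts, n_states)
        by_ev = sorted(range(n_alts), key=lambda i: expected_value(probs, pay[i]), reverse=True)
        rank = {alt: r for r, alt in enumerate(by_ev)}  # rank 0 = highest expected value
        for name, rule in HEURISTICS.items():
            counts[name][rank[rule(probs, pay)]] += 1
    return {name: [c / trials for c in row] for name, row in counts.items()}


if __name__ == "__main__":
    for name, shares in simulate().items():
        print(f"{name:>12}: best EV {shares[0]:.2f}, worst EV {shares[-1]:.2f}")
```

Running it prints, for each rule, the share of trials in which it chose the best and the worst alternative by expected value. The exact figures depend on the assumed payoff distribution and seed.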

"Beautiful-is-familiar" effect

Psychologist Benoît Monin reports a series of experiments in which subjects, looking at photographs of faces, have to judge whether they have seen those faces before. It is repeatedly found that attractive faces are more likely to be mistakenly labeled as familiar.

Monin interprets this result in terms of attribute substitution. The heuristic attribute in this case is a "warm glow": a positive feeling towards someone that might be due either to their being familiar or to their being attractive. This interpretation has been criticised, because not all the variance in familiarity is accounted for by the attractiveness of the photograph.

Judgments of morality and fairness

Legal scholar Cass Sunstein has argued that attribute substitution is pervasive when people reason about moral, political or legal matters. Given a difficult, novel problem in these areas, people search for a more familiar, related problem (a "prototypical case") and apply its solution as the solution to the harder problem. According to Sunstein, the opinions of trusted political or religious authorities can serve as heuristic attributes when people are asked their own opinions on a matter. Another source of heuristic attributes is emotion: people's moral opinions on sensitive subjects like sexuality and human cloning may be driven by reactions such as disgust, rather than by reasoned principles.

Sunstein has been challenged as not providing enough evidence that attribute substitution, rather than other processes, is at work in these cases.

Persuasion

The role of heuristic processing in persuasion can be explained through the heuristic-systematic model. According to this model, there are two ways of processing information from persuasive messages: heuristically and systematically. Heuristic processing relies on quick, short-cut judgments. Systematic processing, on the other hand, involves more analytical and inquisitive thinking, in which individuals look further than their own prior knowledge for answers. The model can be illustrated with an advertisement for a specific medication. A viewer without prior knowledge might see the presenter in professional pharmaceutical attire and assume that they know what they are talking about; the presenter therefore automatically gains credibility, and the viewer is more likely to trust the content of the message they deliver. By contrast, a viewer who works in that field or already knows about the medication will not be persuaded by the ad in the same way, because they process it systematically. This was also formally demonstrated in an experiment conducted by Chaiken and Maheswaran (1994).

The fluency heuristic also ties in with persuasion. It has been described as making "the most of an automatic by-product of retrieval from memory". For example, when a friend asks about good books to read, many books could come to mind, but you name the first one you recall; because it came to mind first, you value it above any other book you could suggest. The effort heuristic is closely related to fluency. The main distinction is that objects that take longer to produce are judged to be more valuable: one may conclude that a glass vase is more valuable than a drawing merely because the vase may take longer to make. Both heuristics show how easily we can be influenced by our mental shortcuts, or by whatever comes to mind most quickly.