Chemical equilibrium

From Wikipedia, the free encyclopedia

Historical introduction

Burette, a common laboratory apparatus for carrying out titration, an important experimental technique in equilibrium and analytical chemistry.

The concept of chemical equilibrium was developed after Berthollet (1803) found that some chemical reactions are reversible. For any reaction mixture to exist at equilibrium, the rates of the forward and backward (reverse) reactions must be equal. In the following chemical equation, with arrows pointing both ways to indicate equilibrium, A and B are reactant chemical species, S and T are product species, and α, β, σ, and τ are the stoichiometric coefficients of the respective reactants and products:

$$\alpha\,\mathrm{A} + \beta\,\mathrm{B} \rightleftharpoons \sigma\,\mathrm{S} + \tau\,\mathrm{T}$$

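Guldberg and Waage (1865), building on Berthollet’s ideas, proposed the law of mass action:

$$\text{forward reaction rate} = k_+ \{A\}^\alpha \{B\}^\beta$$

$$\text{backward reaction rate} = k_- \{S\}^\sigma \{T\}^\tau$$

where {A}, {B}, {S} and {T} are active masses and $k_+$ and $k_-$ are rate constants. Since the forward and backward rates are equal at equilibrium,

$$k_+ \{A\}^\alpha \{B\}^\beta = k_- \{S\}^\sigma \{T\}^\tau$$

and the ratio of the rate constants is also a constant, now known as an equilibrium constant:

$$K_c = \frac{k_+}{k_-} = \frac{\{S\}^\sigma \{T\}^\tau}{\{A\}^\alpha \{B\}^\beta}$$

By convention, the products form the numerator. However, this derivation is valid only for elementary reactions that proceed in a single step; in general, rate equations do not follow the stoichiometry of the overall reaction, as Guldberg and Waage had proposed.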

Despite the failure of this derivation, the equilibrium constant for a reaction is indeed a constant, independent of the activities of the various species involved, though it does depend on temperature, as described by the van 't Hoff equation. Adding a catalyst affects the forward and reverse reactions in the same way and has no effect on the equilibrium constant: it speeds up both reactions, thereby increasing the rate at which equilibrium is reached.[2][4]
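The claim that a catalyst speeds up the approach to equilibrium without moving it can be checked numerically. The sketch below assumes a hypothetical elementary reaction A ⇌ B that is first order in each direction, so that K = k₊/k₋ holds for it, and integrates the rate equations with a simple explicit Euler step. The rate constants, and the tenfold scaling of both that stands in for a catalyst, are illustrative assumptions.

```python
def simulate(k_fwd: float, k_rev: float, t_end: float,
             a0: float = 1.0, b0: float = 0.0, dt: float = 1e-3) -> tuple[float, float]:
    """Integrate d[A]/dt = -k_fwd[A] + k_rev[B] for a hypothetical A <=> B (explicit Euler)."""
    a, b = a0, b0
    for _ in range(round(t_end / dt)):
        net = k_fwd * a - k_rev * b  # forward rate minus backward rate
        a -= net * dt
        b += net * dt
    return a, b


K = 2.0 / 1.0  # K = k_fwd / k_rev for this elementary reaction

# Uncatalysed: k_fwd = 2, k_rev = 1; "catalysed": both constants scaled by the same factor
a_slow, b_slow = simulate(2.0, 1.0, t_end=0.5)
a_fast, b_fast = simulate(20.0, 10.0, t_end=0.5)

print(f"uncatalysed [B]/[A] at t = 0.5: {b_slow / a_slow:.3f}")
print(f"catalysed   [B]/[A] at t = 0.5: {b_fast / a_fast:.3f}  (K = {K})")
```

Scaling both rate constants by the same factor leaves K = k₊/k₋ unchanged; only the time needed to get close to equilibrium shrinks.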

Although the macroscopic equilibrium concentrations are constant in time, reactions do occur at the molecular level. For example, in the case of acetic acid dissolved in water and forming acetate and hydronium ions,

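- CH3CO2H + H2O ⇌ CH3CO2− + H3O+

a proton may hop from one molecule of acetic acid onto a water molecule and then onto an acetate anion to form another molecule of acetic acid, leaving the number of acetic acid molecules unchanged. This is an example of dynamic equilibrium.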

Le Chatelier's principle (1884) describes qualitatively how an equilibrium system behaves when its reaction conditions change. If a dynamic equilibrium is disturbed by changing the conditions, the position of equilibrium moves to partially reverse the change. For example, adding more S from outside creates an excess of products, and the system counteracts this by increasing the rate of the reverse reaction, pushing the position of equilibrium backward (the equilibrium constant itself stays the same).
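The shift can be illustrated with a hypothetical isomerisation A ⇌ S with an assumed K = [S]/[A] = 4, in a closed system of constant volume so that [A] + [S] is conserved, and with ideal behaviour. A minimal sketch: start at equilibrium, add S from outside, and re-equilibrate.

```python
def equilibrate(total: float, K: float) -> tuple[float, float]:
    """Equilibrium [A], [S] for a hypothetical A <=> S with K = [S]/[A] and [A] + [S] = total."""
    s = K * total / (1.0 + K)
    return total - s, s


K = 4.0
a_eq, s_eq = equilibrate(1.0, K)          # initial equilibrium

added_S = 0.5                             # disturb the system by adding S from outside
a_new, s_new = equilibrate(a_eq + s_eq + added_S, K)

print(f"[S] right after addition: {s_eq + added_S:.2f}, after re-equilibration: {s_new:.2f}")
print(f"[A] rises from {a_eq:.2f} to {a_new:.2f}; [S]/[A] = {s_new / a_new:.2f}")
```

Part of the added S is converted back into A, and the ratio [S]/[A] returns to the same K.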

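If mineral acid is added to the acetic acid mixture, increasing the concentration of hydronium ion, the amount of dissociation must decrease as the reaction is driven to the left in accordance with this principle. This can also be deduced from the equilibrium constant expression for the reaction:

$$K = \frac{\{\mathrm{CH_3CO_2^-}\}\{\mathrm{H_3O^+}\}}{\{\mathrm{CH_3CO_2H}\}}$$

where {X} denotes the activity of species X. If {H3O+} increases, {CH3CO2H} must increase and {CH3CO2−} must decrease. H2O is left out because it is the solvent: its concentration remains high and nearly constant.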
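To make the effect quantitative, the sketch below solves K = [CH3CO2−][H3O+]/[CH3CO2H] for the amount dissociated, x, in 0.10 M acetic acid, with and without 0.010 M of a fully dissociated mineral acid. It assumes K ≈ 1.75 × 10⁻⁵ (a commonly quoted value for 25 °C), uses concentrations in place of activities, and neglects the self-ionization of water.

```python
import math

def dissociated(c_acid: float, h_added: float, ka: float) -> float:
    """Solve (h_added + x) * x / (c_acid - x) = ka for x, the concentration of acid dissociated."""
    b = h_added + ka
    # Positive root of x**2 + b*x - ka*c_acid = 0, written to avoid cancellation
    return 2.0 * ka * c_acid / (b + math.sqrt(b * b + 4.0 * ka * c_acid))


KA = 1.75e-5   # assumed value for acetic acid at 25 °C
C = 0.10       # mol/L acetic acid

for h0 in (0.0, 0.010):
    x = dissociated(C, h0, KA)
    print(f"added H3O+ = {h0:.3f} M -> fraction dissociated = {100 * x / C:.2f} %")
```

Adding the mineral acid lowers the fraction dissociated by roughly an order of magnitude, as the principle predicts.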

A quantitative version is given by the reaction quotient.
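As a minimal sketch of how the reaction quotient is used, the function below forms Q = Π a_j^ν_j from activities and signed stoichiometric coefficients (negative for reactants, positive for products) and compares it with K. The reaction 2A + B ⇌ S, its activities and the value K = 10 are hypothetical.

```python
import math

def reaction_quotient(activities: dict[str, float], nu: dict[str, int]) -> float:
    """Q = product of a_j ** nu_j, with nu_j < 0 for reactants and nu_j > 0 for products."""
    return math.prod(activities[sp] ** n for sp, n in nu.items())


def direction(q: float, k: float, rel_tol: float = 1e-9) -> str:
    """Q < K: net forward reaction; Q > K: net reverse reaction; Q = K: equilibrium."""
    if math.isclose(q, k, rel_tol=rel_tol):
        return "at equilibrium"
    return "proceeds forward" if q < k else "proceeds in reverse"


# Hypothetical reaction 2A + B <=> S with an assumed K = 10
nu = {"A": -2, "B": -1, "S": 1}
K = 10.0
for acts in ({"A": 0.5, "B": 0.5, "S": 0.1},
             {"A": 0.5, "B": 0.5, "S": 1.25},
             {"A": 0.5, "B": 0.5, "S": 4.0}):
    q = reaction_quotient(acts, nu)
    print(f"Q = {q:.3g}: {direction(q, K)}")
```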

J. W. Gibbs suggested in 1873 that equilibrium is attained when the Gibbs free energy of the system is at its minimum value (assuming the reaction is carried out at constant temperature and pressure).

This means that the derivative of the Gibbs energy with respect to the reaction coordinate (a measure of the extent of reaction that has occurred, ranging from zero for all reactants to a maximum for all products) vanishes, signalling a stationary point. This derivative is called the reaction Gibbs energy (or energy change) and corresponds to the difference between the chemical potentials of products and reactants, each weighted by its stoichiometric coefficient, at the composition of the reaction mixture.[1] This criterion is both necessary and sufficient. If a mixture is not at equilibrium, the liberation of the excess Gibbs energy (or Helmholtz energy, for reactions at constant volume) is the “driving force” for the composition of the mixture to change until equilibrium is reached. The equilibrium constant can be related to the standard Gibbs free energy change for the reaction by the equation

$$\Delta_r G^\circ = -RT \ln K_{eq}$$

where R is the universal gas constant and T is the temperature.
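The relation between the standard Gibbs energy change and the equilibrium constant, ΔrG° = −RT ln K_eq, can be inverted to K_eq = exp(−ΔrG°/RT), so a tabulated standard Gibbs energy change converts directly into an equilibrium constant. A minimal sketch; the value ΔrG° = −10 kJ mol⁻¹ at 298.15 K is purely illustrative.

```python
import math

R = 8.314462618  # molar gas constant, J mol^-1 K^-1

def k_from_delta_g(delta_g_std: float, temperature: float) -> float:
    """Equilibrium constant from the standard reaction Gibbs energy (J/mol): K = exp(-dG/RT)."""
    return math.exp(-delta_g_std / (R * temperature))


def delta_g_from_k(k: float, temperature: float) -> float:
    """Inverse relation: dG = -RT ln K (J/mol)."""
    return -R * temperature * math.log(k)


K = k_from_delta_g(-10_000.0, 298.15)
print(f"K = {K:.1f}")                                     # a negative dG gives K > 1
print(f"round trip: {delta_g_from_k(K, 298.15):.1f} J/mol")
```

Because the relation is exponential, an error of a few kJ mol⁻¹ in ΔrG° changes K by a large factor.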

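When the reactants are dissolved in a medium of high ionic strength, the quotient of activity coefficients may be taken to be constant. In that case the concentration quotient, Kc,

$$K_c = \frac{[S]^\sigma [T]^\tau}{[A]^\alpha [B]^\beta}$$

where [A] is the concentration of A, etc., is independent of the analytical concentrations of the reactants. For this reason, equilibrium constants for solutions are usually determined in media of high ionic strength.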

Thermodynamics

At constant temperature and pressure, one must consider the Gibbs free energy, G; at constant temperature and volume, the Helmholtz free energy, A; and at constant internal energy and volume, the entropy, S. The constant-volume case is important in geochemistry and atmospheric chemistry, where pressure variations are significant. Note that if reactants and products were in their standard states (completely pure), there would be no reversibility and no equilibrium. The mixing of products and reactants contributes a large entropy (known as the entropy of mixing) to states containing an equal mixture of products and reactants. The combination of the standard Gibbs energy change and the Gibbs energy of mixing determines the equilibrium state.[5][6]
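To see why mixing matters, consider a hypothetical ideal-solution isomerisation A ⇌ B starting from pure A, with x the mole fraction of B. Relative to pure A, G/RT = xΔg + x ln x + (1 − x) ln(1 − x), where Δg = ΔrG°/RT. The standard term alone is linear in x and would put the minimum at an endpoint; the mixing term pulls it into the interior, where setting the derivative to zero gives x/(1 − x) = exp(−Δg) = K. The sketch below locates the minimum by golden-section search; Δg = 1 is an arbitrary choice.

```python
import math

def g_over_rt(x: float, dg: float) -> float:
    """G/RT of an ideal A/B mixture with mole fraction x of B (relative to pure A)."""
    mixing = x * math.log(x) + (1.0 - x) * math.log(1.0 - x)  # always <= 0
    return x * dg + mixing


def golden_min(f, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Golden-section search for the minimum of a unimodal function on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = hi - inv_phi * (hi - lo), lo + inv_phi * (hi - lo)
    while hi - lo > tol:
        if f(c) < f(d):          # minimum lies in [lo, d]
            hi, d = d, c
            c = hi - inv_phi * (hi - lo)
        else:                    # minimum lies in [c, hi]
            lo, c = c, d
            d = lo + inv_phi * (hi - lo)
    return (lo + hi) / 2.0


dg = 1.0  # assumed Delta_r G° / RT for A -> B (B slightly less stable than A)
x_eq = golden_min(lambda x: g_over_rt(x, dg), 1e-12, 1.0 - 1e-12)
print(f"minimum of G at x_B = {x_eq:.6f}")
print(f"x/(1-x) = {x_eq / (1 - x_eq):.6f}, exp(-dg) = {math.exp(-dg):.6f}")
```

Even though B is less stable than A (Δg > 0), the minimum lies at a mixture containing about 27 % B rather than at pure A.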

In this article only the constant pressure case is considered. The relation between the Gibbs free energy and the equilibrium constant can be found by considering chemical potentials.[1]

At constant temperature and pressure, the Gibbs free energy, G, of the reaction mixture depends only on the extent of reaction, ξ (Greek letter xi), and can only decrease according to the second law of thermodynamics. This means that the derivative of G with respect to ξ must be negative if the reaction proceeds, and equal to zero at equilibrium.

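$$\left(\frac{\partial G}{\partial \xi}\right)_{T,p} = 0 \quad \text{: equilibrium}$$

In general,

$$G = \sum_j n_j \mu_j, \qquad \mu_j = \mu_j^\circ + RT \ln a_j$$

($\mu_j^\circ$ is the standard chemical potential and $a_j$ the activity of species $j$), and

$$dG = \sum_j \mu_j \, dn_j + V\,dp - S\,dT$$

(here $S$ is the entropy of the system). In the case of a closed system,

$$dn_j = \nu_j \, d\xi$$

($\nu_j$ corresponds to the stoichiometric coefficient of species $j$, positive for products and negative for reactants, and $d\xi$ is the differential of the extent of reaction). At constant temperature and pressure this gives

$$\left(\frac{\partial G}{\partial \xi}\right)_{T,p} = \sum_j \nu_j \mu_j = \Delta_r G_{T,p}$$

which corresponds to the Gibbs free energy change for the reaction. For the reaction $\alpha\,\mathrm{A} + \beta\,\mathrm{B} \rightleftharpoons \sigma\,\mathrm{S} + \tau\,\mathrm{T}$, substituting the chemical potentials gives

$$\Delta_r G_{T,p} = (\sigma\mu_S^\circ + \tau\mu_T^\circ) - (\alpha\mu_A^\circ + \beta\mu_B^\circ) + (\sigma RT\ln\{S\} + \tau RT\ln\{T\}) - (\alpha RT\ln\{A\} + \beta RT\ln\{B\})$$

where {A} denotes the activity of A, and so on. The standard terms combine into

$$\sum_j \nu_j \mu_j^\circ = \Delta_r G^\circ$$

which is the standard Gibbs energy change for the reaction. It is a constant at a given temperature and can be calculated using thermodynamic tables. The logarithmic terms combine into

$$\Delta_r G_{T,p} = \Delta_r G^\circ + RT \ln \frac{\{S\}^\sigma \{T\}^\tau}{\{A\}^\alpha \{B\}^\beta} = \Delta_r G^\circ + RT \ln Q_r$$

($Q_r$ is the reaction quotient when the system is not at equilibrium). At equilibrium,

$$\left(\frac{\partial G}{\partial \xi}\right)_{T,p} = \Delta_r G_{T,p} = 0, \qquad 0 = \Delta_r G^\circ + RT \ln K_{eq}, \qquad \Delta_r G^\circ = -RT \ln K_{eq}$$

so that $Q_r = K_{eq}$: the reaction quotient becomes equal to the equilibrium constant.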

Addition of reactants or products

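For a reactional system at equilibrium, $Q_r = K_{eq}$ and $\xi = \xi_{eq}$. If the activities of constituents are modified, the value of the reaction quotient changes and becomes different from the equilibrium constant, $Q_r \neq K_{eq}$. Since

$$\left(\frac{\partial G}{\partial \xi}\right)_{T,p} = \Delta_r G^\circ + RT \ln Q_r$$

and

$$\Delta_r G^\circ = -RT \ln K_{eq}$$

then

$$\left(\frac{\partial G}{\partial \xi}\right)_{T,p} = RT \ln \frac{Q_r}{K_{eq}}$$

- If the activity of a reagent increases, $Q_r$ decreases. If $Q_r < K_{eq}$, then $(\partial G/\partial \xi)_{T,p} < 0$ and the reaction shifts to the right (in the forward direction, so more products form).

- If the activity of a product increases, $Q_r$ increases. If $Q_r > K_{eq}$, then $(\partial G/\partial \xi)_{T,p} > 0$ and the reaction shifts to the left (in the reverse direction, so fewer products form).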

Note that activities and equilibrium constants are dimensionless numbers.

Treatment of activity

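The expression for the equilibrium constant can be rewritten as the product of a concentration quotient, Kc, and an activity coefficient quotient, Γ:

$$K = \frac{[S]^\sigma [T]^\tau}{[A]^\alpha [B]^\beta} \times \frac{\gamma_S^\sigma \gamma_T^\tau}{\gamma_A^\alpha \gamma_B^\beta} = K_c \Gamma$$

where [A] is the concentration of reagent A and γ_A its activity coefficient, and so on. Activity coefficients cannot always be obtained, so it is common practice to assume that Γ is constant and to use the concentration quotient in place of the thermodynamic equilibrium constant. This practice will be followed here.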

For reactions in the gas phase, partial pressure is used in place of concentration and fugacity coefficient in place of activity coefficient. In practice, for example when making ammonia in industry, fugacity coefficients must be taken into account. Fugacity, f, is the product of partial pressure and fugacity coefficient. The chemical potential of a species in the gas phase is given by

$$\mu = \mu^\circ + RT \ln\frac{f}{p^\circ} = \mu^\circ + RT \ln\frac{p}{p^\circ} + RT \ln \varphi$$

where p° = 1 bar is the standard pressure and φ is the fugacity coefficient.

Concentration quotients

In aqueous solution, equilibrium constants are usually determined in the presence of an "inert" electrolyte such as sodium nitrate, NaNO3, or potassium perchlorate, KClO4. The ionic strength of a solution is given by

$$I = \frac{1}{2}\sum_{i=1}^{N} c_i z_i^2$$

where c_i and z_i are the concentration and ionic charge of ion type i, and the sum is taken over all N types of charged species in solution. To use a published value of an equilibrium constant at an ionic strength different from the one used in its determination, the value should be adjusted.
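A minimal sketch of the ionic-strength formula I = ½ Σ c_i z_i², followed by one possible adjustment of a concentration quotient to a different ionic strength. The adjustment uses the Davies equation, log₁₀ γ = −A z²(√I/(1 + √I) − 0.3 I) with A ≈ 0.509 for water at 25 °C; this is a swapped-in approximation (the text does not prescribe a method) that is only reasonable at moderate ionic strength. The reaction is a hypothetical 1:1 dissociation HL ⇌ H⁺ + L⁻ with neutral HL (γ = 1), and the value K = 1.0 × 10⁻⁵ is assumed.

```python
import math

def ionic_strength(ions: list[tuple[float, int]]) -> float:
    """I = 1/2 * sum(c_i * z_i**2) over (concentration in mol/L, charge) pairs."""
    return 0.5 * sum(c * z * z for c, z in ions)


def davies_gamma(z: int, i: float, a: float = 0.509) -> float:
    """Activity coefficient from the Davies equation (approximate, water at 25 °C)."""
    sqrt_i = math.sqrt(i)
    return 10.0 ** (-a * z * z * (sqrt_i / (1.0 + sqrt_i) - 0.3 * i))


def kc_at(k_thermo: float, i: float) -> float:
    """Kc = K / Gamma for hypothetical HL <=> H+ + L-, with Gamma = gamma_H * gamma_L (gamma_HL = 1)."""
    gamma_quotient = davies_gamma(1, i) * davies_gamma(-1, i)
    return k_thermo / gamma_quotient


I_nano3 = ionic_strength([(0.1, +1), (0.1, -1)])   # 0.1 M NaNO3
I_mgcl2 = ionic_strength([(0.1, +2), (0.2, -1)])   # 0.1 M MgCl2
print(f"I(NaNO3) = {I_nano3:.2f} M, I(MgCl2) = {I_mgcl2:.2f} M")
print(f"Kc at I = 0.1 M: {kc_at(1.0e-5, I_nano3):.3g} (K at I = 0: 1e-05)")
```

Because K = Kc Γ is fixed, a constant measured at ionic strength I₁ can be moved to I₂ through Kc(I₂) = Kc(I₁) Γ(I₁)/Γ(I₂), with whatever activity-coefficient model is judged appropriate.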

Metastable mixtures

A mixture may appear to have no tendency to change even though it is not at equilibrium. For example, a mixture of SO2 and O2 is metastable because there is a kinetic barrier to formation of the product, SO3:

- 2SO2 + O2 ⇌ 2SO3

Likewise, the formation of bicarbonate from carbon dioxide and water is very slow under normal conditions:

- CO2 + 2H2O ⇌ HCO3− + H3O+

Pure substances

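When pure substances (liquids or solids) are involved in equilibria, their activities do not appear in the equilibrium constant[10] because their numerical values are considered to be one. Applying the general formula for an equilibrium constant to the specific case of a dilute solution of acetic acid in water, one obtains

- CH3CO2H + H2O ⇌ CH3CO2− + H3O+

$$K_c = \frac{[\mathrm{CH_3CO_2^-}][\mathrm{H_3O^+}]}{[\mathrm{CH_3CO_2H}][\mathrm{H_2O}]}$$

For all but very concentrated solutions, the concentration of water is essentially constant, so the equilibrium constant expression is usually written as

$$K = \frac{[\mathrm{CH_3CO_2^-}][\mathrm{H_3O^+}]}{[\mathrm{CH_3CO_2H}]}$$

where now a constant factor is incorporated into the equilibrium constant.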

A particular case is the self-ionization of water itself:

- 2H2O ⇌ H3O+ + OH−

Because water is the solvent and has an activity of one, the self-ionization constant of water is defined as

$$K_w = [\mathrm{H^+}][\mathrm{OH^-}]$$

It is perfectly legitimate to write [H+] for the hydronium ion concentration, since the state of solvation of the proton is constant (in dilute solutions) and so does not affect the equilibrium concentrations. Kw varies with ionic strength and temperature.

The concentrations of H+ and OH- are not independent quantities. Most commonly [OH-] is replaced by Kw[H+]−1 in equilibrium constant expressions which would otherwise include hydroxide ion.

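Solids also do not appear in the equilibrium constant expression if they are considered to be pure, so that their activities are taken to be one. An example is the Boudouard reaction:[10]

- 2CO ⇌ CO2 + C

for which the equilibrium constant expression (without solid carbon) is written as

$$K_c = \frac{[\mathrm{CO_2}]}{[\mathrm{CO}]^2}$$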

Multiple equilibria

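Consider the case of a dibasic acid H2A. When dissolved in water, the mixture will contain H2A, HA− and A2−. This equilibrium can be split into two steps, in each of which one proton is liberated:

$$\mathrm{H_2A \rightleftharpoons HA^- + H^+}: \qquad K_1 = \frac{[\mathrm{HA^-}][\mathrm{H^+}]}{[\mathrm{H_2A}]}$$

$$\mathrm{HA^- \rightleftharpoons A^{2-} + H^+}: \qquad K_2 = \frac{[\mathrm{A^{2-}}][\mathrm{H^+}]}{[\mathrm{HA^-}]}$$

K1 and K2 are stepwise dissociation constants; the overall constant for H2A ⇌ A2− + 2H+ is their product, K1K2. The corresponding association (protonation) constants are

$$\beta_1 = \frac{[\mathrm{HA^-}]}{[\mathrm{A^{2-}}][\mathrm{H^+}]} = \frac{1}{K_2}, \qquad \beta_2 = \frac{[\mathrm{H_2A}]}{[\mathrm{A^{2-}}][\mathrm{H^+}]^2} = \frac{1}{K_1 K_2}$$

so that log β1 = pK2 and log β2 = pK1 + pK2.

Effect of temperature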

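The effect of changing temperature on an equilibrium constant is given by the van 't Hoff equation

$$\frac{d \ln K}{dT} = \frac{\Delta H^\circ}{RT^2}$$

Thus, for exothermic reactions (ΔH° negative), K decreases with increasing temperature, whereas for endothermic reactions (ΔH° positive), K increases with increasing temperature.

Effect of electric and magnetic fields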

The effect of electric fields on equilibrium has been studied by Manfred Eigen[citation needed], among others.

Types of equilibrium

- In the gas phase: rocket engines[12]

- Industrial synthesis, such as that of ammonia in the Haber–Bosch process, takes place through a succession of equilibrium steps including adsorption processes.

- Atmospheric chemistry

- Seawater and other natural waters: chemical oceanography

- Distribution between two phases

  - log D (distribution coefficient): important for pharmaceuticals, where lipophilicity is a significant property of a drug

  - Liquid–liquid extraction, ion exchange, chromatography

- Solubility product

- Uptake and release of oxygen by haemoglobin in blood

- Acid/base equilibria: Acid dissociation constant, hydrolysis, buffer solutions, indicators, acid–base homeostasis

- Metal-ligand complexation: sequestering agents, chelation therapy, MRI contrast reagents, Schlenk equilibrium

- Adduct formation: Host–guest chemistry, supramolecular chemistry, molecular recognition, dinitrogen tetroxide

- In certain oscillating reactions, the approach to equilibrium is not asymptotic but takes the form of a damped oscillation.[10]

- The related Nernst equation in electrochemistry gives the difference in electrode potential as a function of redox concentrations.

- When molecules on each side of the equilibrium are able to further react irreversibly in secondary reactions, the final product ratio is determined according to the Curtin–Hammett principle.

Composition of a mixture

When the only equilibrium is the formation of a 1:1 adduct, there are many ways to calculate the composition of a mixture; see, for example, ICE table for a traditional method of calculating the pH of a solution of a weak acid. There are three approaches to the general calculation of the composition of a mixture at equilibrium.

- The most basic approach is to manipulate the various equilibrium constants until the desired concentrations are expressed in terms of measured equilibrium constants (equivalent to measuring chemical potentials) and initial conditions.

- Minimize the Gibbs energy of the system.[13]

- Satisfy the equations of mass balance. These equations simply state that the total concentration of each reactant must remain constant, by the law of conservation of mass.

Mass-balance equations

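In general, the calculations are rather complicated. For instance, in the case of a dibasic acid, H2A, dissolved in water, the two reactants can be specified as the conjugate base, A2−, and the proton, H+. The following equations of mass balance could apply equally well to a base such as 1,2-diaminoethane, in which case the base itself is designated as the reactant A:

$$T_A = [A] + [HA] + [H_2A]$$

$$T_H = [H] + [HA] + 2[H_2A] - [OH]$$

with $T_A$ the total concentration of species A and $T_H$ the total concentration of protons; charges are omitted for generality. When the equilibrium constants are known and the total concentrations are specified, there are two equations in two unknown "free concentrations", [A] and [H]. This follows from the fact that [HA] = β1[A][H], [H2A] = β2[A][H]² and [OH] = Kw[H]⁻¹, so that

$$T_A = [A] + \beta_1[A][H] + \beta_2[A][H]^2$$

$$T_H = [H] + \beta_1[A][H] + 2\beta_2[A][H]^2 - K_w[H]^{-1}$$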
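The two mass-balance equations, T_A = [A] + β1[A][H] + β2[A][H]² and T_H = [H] + β1[A][H] + 2β2[A][H]² − Kw[H]⁻¹, can be solved numerically. The sketch below is one simple approach, not a method taken from the text: for a trial [H], the A balance gives [A] directly, and because the H balance then increases monotonically with [H], bisection on log[H] finds the root. It assumes a hypothetical acid with log β1 = 7 and log β2 = 11 (pK2 = 7, pK1 = 4), Kw = 1.0 × 10⁻¹⁴, concentrations in place of activities, and a 0.01 M solution of H2A, so that T_H = 2T_A.

```python
import math

def free_concentrations(t_a: float, t_h: float, beta1: float, beta2: float,
                        kw: float = 1.0e-14) -> tuple[float, float]:
    """Solve the T_A and T_H mass balances for free [A] and [H] (bisection on log10 [H])."""

    def free_a(h: float) -> float:
        # Mass balance in A: T_A = [A](1 + beta1[H] + beta2[H]^2)
        return t_a / (1.0 + beta1 * h + beta2 * h * h)

    def h_residual(h: float) -> float:
        # Mass balance in H: [H] + [HA] + 2[H2A] - [OH] - T_H, increasing in [H]
        a = free_a(h)
        return h + beta1 * a * h + 2.0 * beta2 * a * h * h - kw / h - t_h

    lo, hi = -16.0, 1.0                      # bracket for log10 [H]
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if h_residual(10.0 ** mid) < 0.0:
            lo = mid
        else:
            hi = mid
    h = 10.0 ** (0.5 * (lo + hi))
    return free_a(h), h


# Hypothetical acid H2A: log beta1 = 7, log beta2 = 11; 0.01 M H2A, so T_H = 2 * T_A
A, H = free_concentrations(0.01, 0.02, 1.0e7, 1.0e11)
HA, H2A = 1.0e7 * A * H, 1.0e11 * A * H * H
print(f"p[H] = {-math.log10(H):.2f}")
print(f"[A] = {A:.3g}, [HA] = {HA:.3g}, [H2A] = {H2A:.3g}")
```

Bisection is slow but robust here because the residual is monotonic; general-purpose programs handling many reactants would use a multidimensional solver instead.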

Polybasic acids

The composition of solutions containing reactants A and H is easy to calculate as a function of p[H]. When [H] is known, the free concentration [A] is calculated from the mass-balance equation in A.
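Once [H] is fixed, [A] follows from the A balance, and the percentage of each species can be tabulated over a range of p[H]; distribution diagrams are built this way. A minimal sketch using the same kind of hypothetical dibasic system (log β1 = 7 and log β2 = 11 are assumed values; Kw plays no role because [H] is given):

```python
def species_percentages(p_h: float, beta1: float, beta2: float) -> tuple[float, float, float]:
    """Percentages of A, HA and H2A (of the total A) at a given p[H]."""
    h = 10.0 ** (-p_h)
    terms = (1.0, beta1 * h, beta2 * h * h)     # [A], [HA], [H2A], each divided by free [A]
    total = sum(terms)
    return tuple(100.0 * t / total for t in terms)


print(" p[H]     %A    %HA   %H2A")
for p_h in range(2, 11):
    a, ha, h2a = species_percentages(p_h, 1.0e7, 1.0e11)
    print(f"{p_h:5d} {a:6.1f} {ha:6.1f} {h2a:6.1f}")
```

With these constants, HA and H2A are present in equal amounts at p[H] = log β2 − log β1 = 4, and A and HA at p[H] = log β1 = 7.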

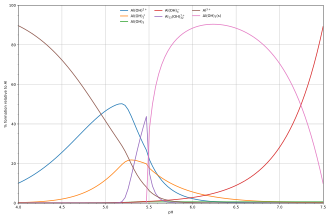

The diagram shows an example, the hydrolysis of the aluminium Lewis acid Al3+(aq).[14] It gives the species concentrations for a 5×10−6 M solution of an aluminium salt as a function of pH, with each concentration shown as a percentage of the total aluminium.

Solution and precipitation

The diagram above illustrates the point that a precipitate that is not one of the main species in the solution equilibrium may be formed. At pH just below 5.5, the main species present in a 5 μM solution of Al3+ are the aluminium hydroxides Al(OH)²⁺, Al(OH)₂⁺ and Al₁₃(OH)₃₂⁷⁺, but on raising the pH, Al(OH)3 precipitates from the solution. This occurs because Al(OH)3 has a very large lattice energy. As the pH rises, more and more Al(OH)3 comes out of solution. This is an example of Le Chatelier's principle in action: increasing the concentration of the hydroxide ion causes more aluminium hydroxide to precipitate, which removes hydroxide from the solution. When the hydroxide concentration becomes sufficiently high, the soluble aluminate, Al(OH)₄⁻, is formed.

Another common instance where precipitation occurs is when a metal cation interacts with an anionic ligand to form an electrically neutral complex. If the complex is hydrophobic, it will precipitate out of water. This occurs with the nickel ion Ni2+ and dimethylglyoxime (dmgH2): in this case the lattice energy of the solid is not particularly large, but it greatly exceeds the energy of solvation of the molecule Ni(dmgH)2.

Minimization of free energy

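At equilibrium, at a specified temperature and pressure and with no external forces, the Gibbs free energy G is at a minimum:

$$G = \sum_{j=1}^{m} \mu_j N_j$$

where μ_j is the chemical potential of molecular species j and N_j is the amount of species j. It may be expressed in terms of thermodynamic activity as

$$\mu_j = \mu_j^\circ + RT \ln A_j$$

where μ_j° is the chemical potential in the standard state and A_j is the activity. For a closed system, no particles may enter or leave, although they may combine in various ways. The total number of atoms of each element will remain constant. This means that the minimization above must be subjected to the constraints:

$$\sum_{j=1}^{m} a_{ij} N_j = b_i^\circ$$

where a_ij is the number of atoms of element i in molecule j and b_i° is the total number of atoms of element i, which is constant since the system is closed. If there are k types of atoms in the system, there are k such equations.

This is a standard problem in optimisation, known as constrained minimisation. The most common method of solving it is the method of Lagrange multipliers, also known as undetermined multipliers (though other methods may be used).

Define:

$$\mathcal{G} = G + \sum_{i=1}^{k} \lambda_i \left( \sum_{j=1}^{m} a_{ij} N_j - b_i^\circ \right)$$

where the λ_i are the Lagrange multipliers, one for each element. The equilibrium condition is then

$$\frac{\partial \mathcal{G}}{\partial N_j} = 0$$

and

$$\frac{\partial \mathcal{G}}{\partial \lambda_i} = 0$$

This is a set of (m+k) equations in (m+k) unknowns (the N_j and the λ_i), which can be solved for the equilibrium amounts N_j as long as the activities are known as functions of the concentrations at the given temperature and pressure. The second set of equations simply restates the original constraints.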

This method of calculating equilibrium chemical concentrations is useful for systems with a large number of different molecules. The use of k atomic element conservation equations for the mass constraint is straightforward, and replaces the use of the stoichiometric coefficient equations.[12]
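With the Lagrangian 𝒢 = G + Σ_i λ_i(Σ_j a_ij N_j − b_i°), the condition ∂𝒢/∂N_j = 0 reads μ_j + Σ_i λ_i a_ij = 0. For an ideal gas mixture at the standard pressure, μ_j = μ_j° + RT ln y_j, so each mole fraction is y_j = exp(−μ_j°/RT − Σ_i λ_i a_ij/RT). The sketch below applies this to the smallest possible case (m = 2, k = 1): a hypothetical single element forming species A and A2, with assumed dimensionless standard potentials. The single multiplier is found by requiring the mole fractions to sum to one, and the element balance then fixes the total amount. This is an illustration under those assumptions, not a general solver.

```python
import math

# Hypothetical one-element system: species A (1 atom) and A2 (2 atoms), ideal gases at p = p°
G_STD = {"A": 0.0, "A2": -2.0}   # assumed mu_j° / RT values
ATOMS = {"A": 1, "A2": 2}        # a_ij: atoms of the element in each species
B_TOTAL = 1.0                    # b°: total amount of the element (mol of atoms)


def mole_fractions(lam: float) -> dict[str, float]:
    """y_j = exp(-mu_j°/RT - lam * a_j), from dG/dN_j = 0 with lam = lambda / RT."""
    return {sp: math.exp(-G_STD[sp] - lam * ATOMS[sp]) for sp in G_STD}


def solve_multiplier(tol: float = 1e-14) -> float:
    """Find lam such that the mole fractions sum to 1 (the sum decreases as lam increases)."""
    lo, hi = -50.0, 50.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if sum(mole_fractions(mid).values()) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


lam = solve_multiplier()
y = mole_fractions(lam)
# Element balance: N_tot * sum_j a_j * y_j = b°
n_tot = B_TOTAL / sum(ATOMS[sp] * y[sp] for sp in y)
amounts = {sp: n_tot * y[sp] for sp in y}

k_dissociation = math.exp(-(2 * G_STD["A"] - G_STD["A2"]))   # K for A2 <=> 2A at p = p°
print(amounts)
print(f"y_A^2 / y_A2 = {y['A']**2 / y['A2']:.6f}, K = {k_dissociation:.6f}")
```

The multiplier conditions automatically reproduce the equilibrium constant of A2 ⇌ 2A, which is why only element balances, and no stoichiometric reaction equations, are needed.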