What Catastrophe?

MIT’s Richard Lindzen, the unalarmed climate scientist

Jan 13, 2014, Vol. 19, No. 17 • By ETHAN EPSTEIN

When you first meet Richard Lindzen, the Alfred P. Sloan professor of meteorology at MIT, senior fellow at the Cato Institute, leading climate “skeptic,” and all-around scourge of James Hansen, Bill McKibben, Al Gore, the Intergovernmental Panel on Climate Change (IPCC), and sundry other climate “alarmists,” as Lindzen calls them, you may find yourself a bit surprised. If you know Lindzen only from the way his opponents characterize him—variously, a liar, a lunatic, a charlatan, a denier, a shyster, a crazy person, corrupt—you might expect a spittle-flecked, wild-eyed loon. But in person, Lindzen cuts a rather different figure. With his gray beard, thick glasses, gentle laugh, and disarmingly soft voice, he comes across as nothing short of grandfatherly.

Thomas Fluharty

Granted, Lindzen is no shrinking violet. A pioneering climate scientist with decades at Harvard and MIT, Lindzen sees his discipline as being deeply compromised by political pressure, data fudging, out-and-out guesswork, and wholly unwarranted alarmism. In a shot across the bow of what many insist is indisputable scientific truth, Lindzen characterizes global warming as “small and . . . nothing to be alarmed about.” In the climate debate—on which hinge far-reaching questions of public policy—them’s fightin’ words.

In his mid-seventies, married with two sons, and now emeritus at MIT, Lindzen spends between four and six months a year at his second home in Paris. But that doesn’t mean he’s no longer in the thick of the climate controversy; he writes, gives myriad talks, participates in debates, and occasionally testifies before Congress. In an eventful life, Lindzen has made the strange journey from being a pioneer in his field and eventual IPCC coauthor to an outlier in the discipline—if not an outcast.

Richard Lindzen was born in 1940 in Webster, Massachusetts, to Jewish immigrants from Germany. His bootmaker father moved the family to the Bronx shortly after Richard was born. Lindzen attended the Bronx High School of Science before winning a scholarship to the only place he applied that was out of town, the Rensselaer Polytechnic Institute, in Troy, New York. After a couple of years at Rensselaer, he transferred to Harvard, where he completed his bachelor’s degree and, in 1964, a doctorate.

Lindzen wasn’t a climatologist from the start—“climate science” as such didn’t exist when he was beginning his career in academia. Rather, Lindzen studied math. “I liked applied math,” he says, “[and] I was a bit turned off by modern physics, but I really enjoyed classical physics, fluid mechanics, things like that.” A few years after arriving at Harvard, he began his transition to meteorology. “Harvard actually got a grant from the Ford Foundation to offer generous fellowships to people in the atmospheric sciences,” he explains. “Harvard had no department in atmospheric sciences, so these fellowships allowed you to take a degree in applied math or applied physics, and that worked out very well because in applied math the atmosphere and oceans were considered a good area for problems. . . . I discovered I really liked atmospheric sciences—meteorology. So I stuck with it and picked out a thesis.”

And with that, Lindzen began his meteoric rise through the nascent field. In the 1970s, while a professor at Harvard, Lindzen disproved the then-accepted theory of how heat moves around the Earth’s atmosphere, winning numerous awards in the process. Before his 40th birthday, he was a member of the National Academy of Sciences. In the mid-1980s, he made the short move from Harvard to MIT, and he’s remained there ever since. Over the decades, he’s authored or coauthored some 200 peer-reviewed papers on climate.

Where Lindzen hasn’t remained is in the mainstream of his discipline. By the 1980s, global warming was becoming a major political issue. Already, Lindzen was having doubts about the more catastrophic predictions being made. The public rollout of the “alarmist” case, he notes, “was immediately accompanied by an issue of Newsweek declaring all scientists agreed. And that was the beginning of a ‘consensus’ argument. Already by ’88 the New York Times had literally a global warming beat.” Lindzen wasn’t buying it. Nonetheless, he remained in the good graces of mainstream climate science, and in the early 1990s, he was invited to join the IPCC, a U.N.-backed multinational consortium of scientists charged with synthesizing and analyzing the current state of the world’s climate science. Lindzen accepted, and he ended up as a contributor to the 1995 report and the lead author of Chapter 7 (“Physical Climate Processes and Feedbacks”) of the 2001 report. Since then, however, he’s grown increasingly distant from prevalent (he would say “hysterical”) climate science, and he is voluminously on record disputing the predictions of catastrophe.

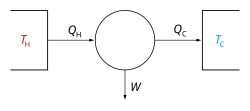

The Earth’s climate is immensely complex, but the basic principle behind the “greenhouse effect” is easy to understand. The burning of oil, gas, and especially coal pumps carbon dioxide and other gases into the atmosphere, where they allow the sun’s heat to penetrate to the Earth’s surface but impede its escape, thus causing the lower atmosphere and the Earth’s surface to warm. Essentially everybody, Lindzen included, agrees. The question at issue is how sensitive the planet is to increasing concentrations of greenhouse gases (this is called climate sensitivity), and how much the planet will heat up as a result of our pumping into the sky ever more CO2, which remains in the atmosphere for upwards of 1,000 years. (Carbon dioxide, it may be needless to point out, is not a poison. On the contrary, it is necessary for plant life.)

Lindzen doesn’t deny that the climate has changed or that the planet has warmed. “We all agree that temperature has increased since 1800,” he tells me. There’s a caveat, though: It’s increased by “a very small amount. We’re talking about tenths of a degree [Celsius]. We all agree that CO2 is a greenhouse gas. All other things kept equal, [there has been] some warming. As a result, there’s hardly anyone serious who says that man has no role. And in many ways, those have never been the questions. The questions have always been, as they ought to be in science, how much?”

Lindzen says not much at all—and he contends that the “alarmists” vastly overstate the Earth’s climate sensitivity. Judging by where we are now, he appears to have a point; so far, 150 years of burning fossil fuels in large quantities has had a relatively minimal effect on the climate. By some measurements, there is now more CO2 in the atmosphere than there has been at any time in the past 15 million years. Yet since the advent of the Industrial Revolution, the average global temperature has risen by, at most, 1 degree Celsius, or 1.8 degrees Fahrenheit. And while it’s true that sea levels have risen over the same period, it’s believed they’ve been doing so for roughly 20,000 years. What’s more, despite common misconceptions stoked by the media in the wake of Katrina, Sandy, and the recent typhoon in the Philippines, even the IPCC concedes that it has “low confidence” that there has been any measurable uptick in storm intensity thanks to human activity. Moreover, over the past 15 years, as man has emitted record levels of carbon dioxide year after year, the warming trend of previous decades has stopped. Lindzen says this is all consistent with what he holds responsible for climate change: a small bit of man-made impact and a whole lot of natural variability.

The real fight, though, is over what’s coming in the future if humans continue to burn fossil fuels unabated. According to the IPCC, the answer is nothing good. Its most recent Summary for Policymakers, which was released early this fall—and which some scientists reject as too sanguine—predicts that if emissions continue to rise, by the year 2100, global temperatures could increase as much as 5.5 degrees Celsius from current averages, while sea levels could rise by nearly a meter. If we hit those projections, it’s generally thought that the Earth would be rife with crop failures, drought, extreme weather, and epochal flooding. Adios, Miami.

It is to avoid those disasters that the “alarmists” call on governments to adopt policies reducing the amounts of greenhouse gases released into the atmosphere. As a result of such policies—and a fortuitous increase in natural gas production—U.S. greenhouse emissions are at a 20-year low and falling. But global emissions are rising, thanks to massive increases in energy use in the developing world, particularly in China and India. If the “alarmists” are right, then, a way must be found to compel the major developing countries to reduce carbon emissions.

But Lindzen rejects the dire projections. For one thing, he says that the Summary for Policymakers is an inherently problematic document. The IPCC report itself, weighing in at thousands of pages, is “not terrible. It’s not unbiased, but the bias [is] more or less to limit your criticism of models,” he says. The Summary for Policymakers, on the other hand—the only part of the report that the media and the politicians pay any attention to—“rips out doubts to a large extent. . . . [Furthermore], government representatives have the final say on the summary.” Thus, while the full IPCC report demonstrates a significant amount of doubt among scientists, the essentially political Summary for Policymakers filters it out.

Lindzen also disputes the accuracy of the computer models that climate scientists rely on to project future temperatures. He contends that they oversimplify the vast complexity of the Earth’s climate and, moreover, that it’s impossible to untangle man’s effect on the climate from natural variability. The models also rely on what Lindzen calls “fudge factors.” Take aerosols. These are tiny specks of matter, both liquid and solid (think dust), that are present throughout the atmosphere. Their effect on the climate—even whether they have an overall cooling or warming effect—is still a matter of debate. Lindzen charges that when actual temperatures fail to conform to the models’ predictions, climate scientists purposely overstate the cooling effect of aerosols to give the models the appearance of having been accurate. But no amount of fudging can obscure the most glaring failure of the models: their inability to predict the 15-year-long (and counting) pause in warming—a pause that would seem to place the burden of proof squarely on the defenders of the models.

Lindzen also questions the “alarmist” line on water vapor. Water vapor (and its close cousin, clouds) is one of the most prevalent greenhouse gases in the atmosphere. According to most climate scientists, the hotter the planet gets, the more water vapor there will be, magnifying the effects of other greenhouse gases, like CO2, in a sort of hellish positive feedback loop. Lindzen disputes this, contending that water vapor could very well end up having a cooling effect on the planet. As the science writer Justin Gillis explained in a 2012 New York Times piece, Lindzen “says the earth is not especially sensitive to greenhouse gases because clouds will react to counter them, and he believes he has identified a specific mechanism. On a warming planet, he says, less coverage by high clouds in the tropics will allow more heat to escape to space, countering the temperature increase.”

If Lindzen is right about this and global warming is nothing to worry about, why do so many climate scientists, many with résumés just as impressive as his, preach imminent doom? He says it mostly comes down to the money—to the incentive structure of academic research funded by government grants. Almost all funding for climate research comes from the government, which, he says, makes scientists essentially vassals of the state. And generating fear, Lindzen contends, is now the best way to ensure that policymakers keep the spigot open.

Lindzen contrasts this with the immediate aftermath of World War II, when American science was at something of a peak. “Science had established its relevance with the A-bomb, with radar, for that matter the proximity fuse,” he notes. Americans and their political leadership were profoundly grateful to the science community; scientists, unlike today, didn’t have to abase themselves by approaching the government hat in hand. Science funding was all but assured.

But with the cuts to basic science funding that occurred around the time of the Vietnam war, taxpayer support for research was no longer a political no-brainer. “It was recognized that gratitude only went so far,” Lindzen says, “and fear was going to be a much greater motivator. And so that’s when people began thinking about . . . how to perpetuate fear that would motivate the support of science.”

A need to generate fear, in Lindzen’s telling, is what’s driving the apocalyptic rhetoric heard from many climate scientists and their media allies. “The idea was, to engage the public you needed an event . . . not just a Sputnik—a drought, a storm, a sand demon. You know, something you could latch onto. [Climate scientists] carefully arranged a congressional hearing. And they arranged for [James] Hansen [author of Storms of My Grandchildren, and one of the leading global warming “alarmists”] to come and say something vague that would somehow relate a heat wave or a drought to global warming.” (This theme, by the way, is developed to characteristic extremes in the late Michael Crichton’s entertaining 2004 novel State of Fear, in which environmental activists engineer a series of fake “natural” disasters to sow fear over global warming.)

Lindzen also says that the “consensus”—the oft-heard contention that “virtually all” climate scientists believe in catastrophic, anthropogenic global warming—is overblown, primarily for structural reasons. “When you have an issue that is somewhat bogus, the opposition is always scattered and without resources,” he explains. “But the environmental movement is highly organized. There are hundreds of NGOs. To coordinate these hundreds, they quickly organized the Climate Action Network, the central body on climate. There would be, I think, actual meetings to tell them what the party line is for the year, and so on.” Skeptics, on the other hand, are more scattered across disciplines and continents. As such, they have a much harder time getting their message across.

Because CO2 is invisible and the climate is so complex (your local weatherman doesn’t know for sure whether it will rain tomorrow, let alone conditions in 2100), expertise is particularly important. Lindzen sees a danger here. “I think the example, the paradigm of this, was medical practice.” He says that in the past, “one went to a physician because something hurt or bothered you, and you tended to judge him or her according to whether you felt better. That may not always have been accurate, but at least it had some operational content. . . . [Now, you] go to an annual checkup, get a blood test. And the physician tells you if you’re better or not and it’s out of your hands.” Because climate change is invisible, only the experts can tell us whether the planet is sick or not. And because of the way funds are granted, they have an incentive to say that the Earth belongs in intensive care.

Richard Lindzen presents a problem for those who say that the science behind climate change is “settled.” So many “alarmists” prefer to ignore him and instead highlight straw men: less credible skeptics, such as climatologist Roy Spencer of the University of Alabama (signatory to a declaration that “Earth and its ecosystems—created by God’s intelligent design and infinite power and sustained by His faithful providence—are robust, resilient, self-regulating, and self-correcting”), the Heartland Institute (which likened climate “alarmists” to the Unabomber), and Senator Jim Inhofe of Oklahoma (a major energy-producing state). The idea is to make it seem as though the choice is between accepting the view of, say, journalist James Delingpole (B.A., English literature), who says global warming is a hoax, and that of, say, James Hansen (Ph.D., physics, former head of the NASA Goddard Institute for Space Studies), who says that we are moving toward “an ice-free Antarctica and a desolate planet without human inhabitants.”

But Lindzen, plainly, is different. He can’t be dismissed. Nor, of course, is he the only skeptic with serious scientific credentials. Judith Curry, the chair of the School of Earth and Atmospheric Sciences at Georgia Tech, William Happer, professor of physics at Princeton, John Christy, a climate scientist honored by NASA, now at the University of Alabama, and the famed physicist Freeman Dyson are among dozens of scientists who have gone on record questioning various aspects of the IPCC’s line on climate change. Lindzen, for his part, has said that scientists have called him privately to thank him for the work he’s doing.

But Lindzen, perhaps because of his safely tenured status at MIT, or just because of the contours of his personality, is a particularly outspoken and public critic of the consensus. It’s clear that he relishes taking on the “alarmists.” It’s little wonder, then, that he’s come under exceptionally vituperative attack from many of those who are concerned about the impact of climate change. It also stands to reason that they might take umbrage at his essentially accusing them of mass corruption with his charge that they are “stoking fear.”

Take Joe Romm, himself an MIT Ph.D., who runs the climate desk at the left-wing Center for American Progress. On the center’s blog, Romm regularly lights into Lindzen. “Lindzen could not be more discredited,” he says in one post. In another post, he calls Lindzen an “uber-hypocritical anti-scientific scientist.” (Romm, it should be noted, is a bit more measured, if no less condescending, when the klieg lights are off. “I tend to think Lindzen is just one of those scientists whom time and science has passed by, like the ones who held out against plate tectonics for so long,” he tells me.) Seldom, however, does Romm stoop to explain what grounds justify dismissing Lindzen’s views with such disdain.

Andrew Dessler, a climatologist at Texas A&M University, is another harsh critic of Lindzen. As he told me in an emailed statement, “Over the past 25 years, Dr. Lindzen has published several theories about climate, all of which suggest that the climate will not warm much in response to increases in atmospheric CO2. These theories have been tested by the scientific community and found to be completely without merit. Lindzen knows this, of course, and no longer makes any effort to engage with the scientific community about his theories (e.g., he does not present his work at scientific conferences). It seems his main audience today is Fox News and the editorial board of the Wall Street Journal.”

The Internet, meanwhile, is filled with hostile missives directed at Lindzen. They’re of varying quality. Some, written by climate scientists, are point-by-point rebuttals of Lindzen’s scholarly work; others, angry ad hominem screeds full of heat, signifying nothing. (When Lindzen transitioned to emeritus status last year, one blog headlined the news “Denier Down: Lindzen Retires.”)

For decades, Lindzen has also been dogged by unsubstantiated accusations of corruption—specifically, that he’s being paid off by the energy industry. He denies this with a laugh.

“I wish it were so!” What appears to be the primary source for this calumny—a Harper’s magazine article from 1995—provides no documentation for its assertions. But that hasn’t stopped the charge from being widely disseminated on the Internet.

One frustrating feature of the climate debate is that people’s outlook on global warming usually correlates with their political views. So if a person wants low taxes and restrictions on abortion, he probably isn’t worried about climate change. And if a person supports gay marriage and raising the minimum wage, he most likely thinks the threat from global warming warrants costly public-policy remedies. And of course, even though Lindzen is an accomplished climate scientist, he has his own political outlook—a conservative one.

He wasn’t reared that way. “Growing up in the Bronx, politics, I would say, was an automatic issue. I grew up with a picture of Franklin Roosevelt over my bed.” But his views started to shift in the late ’60s and ’70s. “I think [my politics] began changing in the Vietnam war. I was deeply disturbed by the way vets were being treated,” he says. He also says that his experience in the climate debate—and the rise in political correctness in the universities throughout the ’70s and ’80s—further pushed him to the right. So, yes, Lindzen, a climate skeptic, is also a political conservative whom one would expect to oppose many environmental regulations for ideological, as opposed to scientific, reasons.

By the same token, it is well known that the vast majority of “alarmist” climate scientists, dependent as they are on federal largesse, are liberal Democrats.

But whatever buried ideological component there may be to any given scientist’s work, it doesn’t tell us who has the science right. In a 2012 public letter, Lindzen noted, “Critics accuse me of doing a disservice to the scientific method. I would suggest that in questioning the views of the critics and subjecting them to specific tests, I am holding to the scientific method.” Whoever is right about computer models, climate sensitivity, aerosols, and water vapor, Lindzen is certainly right about that. Skepticism is essential to science.

In a 2007 debate with Lindzen in New York City, climate scientist Richard C. J. Somerville, who is firmly in the “alarmist” camp, likened climate skeptics to “some eminent earth scientists [who] couldn’t be persuaded that plate tectonics were real . . . when the revolution of continental drift was sweeping through geology and geophysics.”

“Most people who think they’re a Galileo are just wrong,” he said, much to the delight of a friendly audience of Manhattanites.

But Somerville botched the analogy. The story of plate tectonics is the story of how one man, Alfred Wegener, came up with the theory of continental drift, only to be widely opposed and mocked. Wegener challenged the earth science “consensus” of his day. And in the end, his view prevailed.

Ethan Epstein is an assistant editor at The Weekly Standard