From Wikipedia, the free encyclopedia


| Developer | Community |
|---|---|
| Written in | Various (notably C and assembly) |
| OS family | Unix-like |
| Working state | Current |
| Source model | Mainly open source, closed source also available |
| Initial release | 1991 |
| Latest release | 3.19 (8 February 2015)[2] |
| Marketing target | Personal computers, mobile devices, embedded devices, servers, mainframes, supercomputers |
| Available in | Multilingual |
| Platforms | Alpha, ARC, ARM, AVR32, Blackfin, C6x, ETRAX CRIS, FR-V, H8/300, Hexagon, Itanium, M32R, m68k, META, Microblaze, MIPS, MN103, Nios II, OpenRISC, PA-RISC, PowerPC, s390, S+core, SuperH, SPARC, TILE64, Unicore32, x86, Xtensa |
| Kernel type | Monolithic (Linux kernel) |
| Userland | Various |
| Default user interface | Many |
| License | GNU GPL[3] and other free and open source licenses; "Linux" trademark is owned by Linus Torvalds[4] and administered by the Linux Mark Institute |

Linux (

Linux was originally developed as a free operating system for Intel x86–based personal computers, but has since been ported to more computer hardware platforms than any other operating system.[citation needed] It is the leading operating system on servers and other big iron systems such as mainframe computers and supercomputers,[14][15][16] but is used on only around 1% of desktop computers.[17] Linux also runs on embedded systems, which are devices whose operating system is typically built into the firmware and is highly tailored to the system; this includes mobile phones,[18] tablet computers, network routers, facility automation controls, televisions[19][20] and video game consoles. Android, the most widely used operating system for tablets and smartphones, is built on top of the Linux kernel.[21]

The development of Linux is one of the most prominent examples of free and open-source software collaboration. The underlying source code may be used, modified, and distributed—commercially or non-commercially—by anyone under licenses such as the GNU General Public License. Typically, Linux is packaged in a form known as a Linux distribution, for both desktop and server use. Some popular mainstream Linux distributions include Debian, Ubuntu, Linux Mint, Fedora, openSUSE, Arch Linux, and the commercial Red Hat Enterprise Linux and SUSE Linux Enterprise Server. Linux distributions include the Linux kernel, supporting utilities and libraries and usually a large amount of application software to fulfill the distribution's intended use.

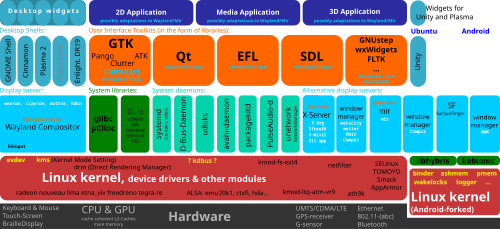

A distribution oriented toward desktop use will typically include X11, Wayland or Mir as the windowing system, and an accompanying desktop environment such as GNOME or the KDE Software Compilation. Some such distributions may include a less resource-intensive desktop such as LXDE or Xfce, for use on older or less powerful computers. A distribution intended to run as a server may omit all graphical environments from the standard install, and instead include other software to set up and operate a solution stack such as LAMP. Because Linux is freely redistributable, anyone may create a distribution for any intended use.

History

Antecedents

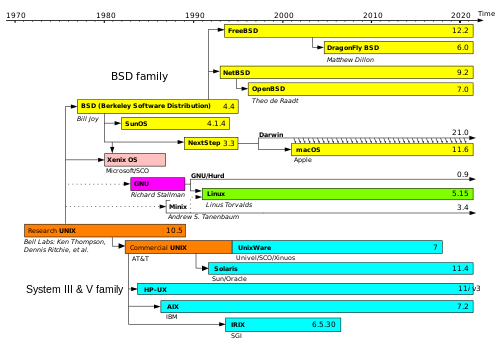

The Unix operating system was conceived and implemented in 1969 at AT&T's Bell Laboratories in the United States by Ken Thompson, Dennis Ritchie, Douglas McIlroy, and Joe Ossanna.[22] First released in 1971, Unix was initially written entirely in assembly language, as was common practice at the time. Later, in 1973, in a key pioneering approach, it was rewritten in the C programming language by Dennis Ritchie (with exceptions in the kernel and I/O). The availability of an operating system written in a high-level language made it easier to port to different computer platforms.

With AT&T being required to license the operating system's source code to anyone who asked (due to an earlier antitrust case forbidding them from entering the computer business),[23] Unix grew quickly and became widely adopted by academic institutions and businesses. In 1984, AT&T divested itself of Bell Labs. Free of the legal obligation requiring free licensing, Bell Labs began selling Unix as a proprietary product.

The GNU Project, started in 1983 by Richard Stallman, had the goal of creating a "complete Unix-compatible software system" composed entirely of free software. Work began in 1984.[24] Later, in 1985, Stallman started the Free Software Foundation and wrote the GNU General Public License (GNU GPL) in 1989. By the early 1990s, many of the programs required in an operating system (such as libraries, compilers, text editors, a Unix shell, and a windowing system) were completed, although low-level elements such as device drivers, daemons, and the kernel were stalled and incomplete.[25]

Linus Torvalds has said that if the GNU kernel had been available at the time (1991), he would not have decided to write his own.[26]

Although not released until 1992 due to legal complications, development of 386BSD, from which NetBSD, OpenBSD and FreeBSD descended, predated that of Linux. Linus Torvalds has said that if 386BSD had been available at the time, he probably would not have created Linux.[27]

MINIX, initially released in 1987, is an inexpensive minimal Unix-like operating system, designed for education in computer science, written by Andrew S. Tanenbaum. Starting with version 3 in 2005, MINIX became free and was redesigned for use in embedded systems.

Creation

In 1991, while attending the University of Helsinki, Torvalds became curious about operating systems[28] and frustrated by the licensing of MINIX, which limited it to educational use only. He began to work on his own operating system kernel, which eventually became the Linux kernel.

Torvalds began the development of the Linux kernel on MINIX, and applications written for MINIX were also used on Linux. Later, Linux matured and further Linux kernel development took place on Linux systems.[29] GNU applications also replaced all MINIX components, because it was advantageous to use the freely available code from the GNU Project with the fledgling operating system; code licensed under the GNU GPL can be reused in other projects as long as they also are released under the same or a compatible license. Torvalds initiated a switch from his original license, which prohibited commercial redistribution, to the GNU GPL.[30] Developers worked to integrate GNU components with the Linux kernel, making a fully functional and free operating system.[25]

Naming

Linus Torvalds had wanted to call his invention Freax, a portmanteau of "free", "freak", and "x" (as an allusion to Unix). During the start of his work on the system, he stored the files under the name "Freax" for about half a year. Torvalds had already considered the name "Linux," but initially dismissed it as too egotistical.[31]

In order to facilitate development, the files were uploaded to the FTP server (ftp.funet.fi) of FUNET in September 1991. Ari Lemmke, Torvalds's coworker at the Helsinki University of Technology (HUT) who was one of the volunteer administrators for the FTP server at the time, did not think that "Freax" was a good name, so he named the project "Linux" on the server without consulting Torvalds.[31] Later, however, Torvalds consented to "Linux".

To demonstrate how the word "Linux" should be pronounced (

Commercial and popular uptake

Today, Linux systems are used in every domain, from embedded systems to supercomputers,[16][33] and have secured a place in server installations often using the popular LAMP application stack.[34] Use of Linux distributions in home and enterprise desktops has been growing.[35][36][37][38][39][40][41] Linux distributions have also become popular in the netbook market, with many devices shipping with customized Linux distributions installed, and Google releasing their own Google Chrome OS designed for netbooks.

Linux's greatest success in the consumer market is perhaps the mobile device market, with Android being one of the most prominent OSes among smartphones, tablets and recently wearable technology. Linux gaming is also on the rise with Valve showing its support for Linux and rolling out its own gaming oriented Linux distribution. Linux distributions have also gained popularity with various local and national governments, such as the federal government of Brazil.

Current development

Torvalds continues to direct the development of the kernel.[42] Stallman heads the Free Software Foundation,[43] which in turn supports the GNU components.[44] Finally, individuals and corporations develop third-party non-GNU components. These third-party components comprise a vast body of work and may include both kernel modules and user applications and libraries. Linux vendors and communities combine and distribute the kernel, GNU components, and non-GNU components, with additional package management software in the form of Linux distributions.

Design

A Linux-based system is a modular Unix-like operating system. It derives much of its basic design from principles established in Unix during the 1970s and 1980s. Such a system uses a monolithic kernel, the Linux kernel, which handles process control, networking, and peripheral and file system access. Device drivers are either integrated directly with the kernel or added as modules loaded while the system is running.[45]

Separate projects that interface with the kernel provide much of the system's higher-level functionality. The GNU userland is an important part of most Linux-based systems, providing the most common implementation of the C library, a popular CLI shell, and many of the common Unix tools which carry out many basic operating system tasks. The graphical user interface (or GUI) used by most Linux systems is built on top of an implementation of the X Window System.[46] More recently, the Linux community has sought to move to Wayland as the new display server protocol in place of X11; Ubuntu, however, develops Mir instead of Wayland.[47]
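Whether a driver is built into the kernel or loaded as a module can be observed from user space. The following C sketch assumes a kernel that exposes the standard `/proc/modules` file, which lists one loaded module per line (name, size, reference count, dependent modules, state and load address), and simply counts those lines; the function name `count_loaded_modules` is illustrative.

```c
#include <stdio.h>

/* Count the kernel modules currently loaded by reading /proc/modules,
 * where each loaded module occupies exactly one line.
 * Returns the number of modules, or -1 if the file cannot be opened
 * (for example on a kernel built without module support, or inside
 * some restricted containers). */
int count_loaded_modules(void)
{
    FILE *f = fopen("/proc/modules", "r");
    if (f == NULL)
        return -1;

    int count = 0;
    int c;
    /* One newline per module line. */
    while ((c = fgetc(f)) != EOF) {
        if (c == '\n')
            count++;
    }
    fclose(f);
    return count;
}
```

Returning `-1` rather than `0` when the file is missing keeps "no modules loaded" distinguishable from "module information unavailable".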

| Mode | Layer | Components |
|---|---|---|
| User mode | User applications | For example, bash, LibreOffice, Apache OpenOffice, Blender, 0 A.D., Mozilla Firefox, etc. |
| User mode | Low-level system components | System daemons: systemd, runit, logind, networkd, soundd...; windowing system: X11, Wayland, Mir, SurfaceFlinger (Android); other libraries: GTK+, Qt, EFL, SDL, SFML, FLTK, GNUstep, etc.; graphics: Mesa 3D, AMD Catalyst, ... |
| User mode | C standard library | open(), exec(), sbrk(), socket(), fopen(), calloc(), ... (up to 2000 subroutines); glibc aims to be POSIX/SUS-compatible, uClibc targets embedded systems, bionic was written for Android, etc. |
| Kernel mode | Linux kernel: System Call Interface (SCI, aims to be POSIX/SUS-compatible) | stat, splice, dup, read, open, ioctl, write, mmap, close, exit, etc. (about 380 system calls) |
| Kernel mode | Linux kernel: subsystems | Process scheduling subsystem, IPC subsystem, memory management subsystem, virtual files subsystem, network subsystem |
| Kernel mode | Linux kernel: other components | ALSA, DRI, evdev, LVM, device mapper, Linux Network Scheduler, Netfilter; Linux Security Modules: SELinux, TOMOYO, AppArmor, Smack |
| Hardware | | CPU, main memory, data storage devices, etc. |
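The table's division between the C standard library and the kernel's system call interface can be made concrete. In this minimal C sketch (the function `write_both_ways` is hypothetical), the same `write` system call is made once through the glibc wrapper `write()` and once directly through `syscall(SYS_write, ...)`. Both reach the same kernel entry point; the library wrapper mainly saves the caller from naming the system call number.

```c
#define _DEFAULT_SOURCE          /* exposes syscall() in glibc's <unistd.h> */
#include <string.h>
#include <sys/syscall.h>         /* SYS_write */
#include <sys/types.h>
#include <unistd.h>              /* write(), syscall() */

/* Write `msg` to `fd` twice: first through the C library's write()
 * wrapper, then by invoking the same system call directly through
 * syscall(SYS_write, ...). Returns the total number of bytes written,
 * or -1 if either call fails. */
long write_both_ways(int fd, const char *msg)
{
    size_t len = strlen(msg);

    ssize_t via_libc = write(fd, msg, len);              /* glibc wrapper */
    if (via_libc < 0)
        return -1;

    long via_syscall = syscall(SYS_write, fd, msg, len); /* raw system call */
    if (via_syscall < 0)
        return -1;

    return (long)via_libc + via_syscall;
}
```

Passing the write end of a pipe, or a descriptor opened on `/dev/null`, as `fd` is enough to try it.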

Installed components of a Linux system include the following:[46][48]

- A bootloader, for example GNU GRUB, LILO, SYSLINUX, Coreboot or Gummiboot. This program is executed by the computer when it is turned on, after firmware initialization has been performed, and loads the Linux kernel into the computer's main memory.

- An init program, such as the traditional sysvinit and the newer systemd, OpenRC and Upstart. This is the first process launched by the Linux kernel, and is at the root of the process tree: in other words, all processes are launched through init. It starts processes such as system services and login prompts (whether graphical or in terminal mode).

- Software libraries, which contain code that can be used by running processes. On Linux systems using ELF-format executable files, the dynamic linker that manages use of dynamic libraries is known as ld-linux.so. If the system is set up for users to compile software themselves, header files will also be included to describe the interface of installed libraries. Besides the GNU C Library (glibc), the most commonly used software library on Linux systems, there are numerous other libraries.

- The C standard library is the library needed to run standard C programs on a computer system, with the GNU C Library being the most commonly used. Several alternatives are available, such as EGLIBC (which was used by Debian for some time) and uClibc (which was designed for uClinux).

- Widget toolkits are the libraries used to build graphical user interfaces (GUIs) for software applications. Numerous widget toolkits are available, including GTK+ and Clutter developed by the GNOME project, Qt developed by the Qt Project and led by Digia, and Enlightenment Foundation Libraries (EFL) developed primarily by the Enlightenment team.

- User interface programs such as command shells or windowing environments.
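The statement that every process descends from init can be checked by walking parent links. This C sketch assumes the standard `/proc/<pid>/stat` layout, in which the parent PID is the fourth field, following the process ID, the parenthesized command name and the one-letter process state; the helper names `parent_of` and `find_tree_root` are illustrative. On an ordinary system the walk ends at PID 1, while inside a PID namespace, such as a container, it ends at that namespace's own first process.

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Return the parent PID of `pid` by parsing /proc/<pid>/stat, or -1. */
static pid_t parent_of(pid_t pid)
{
    char path[64];
    char buf[512];
    snprintf(path, sizeof path, "/proc/%d/stat", (int)pid);

    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, sizeof buf - 1, f);
    fclose(f);
    buf[n] = '\0';

    /* The command name is wrapped in parentheses and may itself contain
       spaces or ')', so parsing starts after the LAST ')'. */
    char *rp = strrchr(buf, ')');
    if (rp == NULL)
        return -1;

    char state;
    int ppid;
    if (sscanf(rp + 1, " %c %d", &state, &ppid) != 2)
        return -1;
    return (pid_t)ppid;
}

/* Follow parent links from the calling process to the top of the
 * process tree. Returns the PID whose parent is reported as 0
 * (normally 1, i.e. init), or -1 on error. */
pid_t find_tree_root(void)
{
    pid_t pid = getpid();
    for (int depth = 0; depth < 4096; depth++) {
        pid_t ppid = parent_of(pid);
        if (ppid < 0)
            return -1;
        if (ppid == 0)
            return pid;
        pid = ppid;
    }
    return -1; /* give up on an implausibly deep chain */
}
```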

User interface

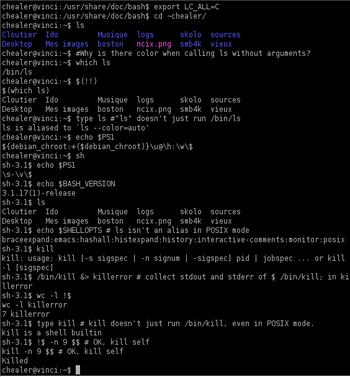

The user interface, also known as the shell, is either a command-line interface (CLI), a graphical user interface (GUI), or a set of controls attached to the associated hardware, which is common for embedded systems. For desktop systems, the default mode is usually a graphical user interface, although the CLI is available through terminal emulator windows or on a separate virtual console.

CLI shells are the text-based user interfaces, which use text for both input and output. The dominant shell used in Linux is the GNU Bourne-Again Shell (bash), originally developed for the GNU project. Most low-level Linux components, including various parts of the userland, use the CLI exclusively. The CLI is particularly suited for automation of repetitive or delayed tasks, and provides very simple inter-process communication.
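The simple inter-process communication a shell offers through `|` rests on the kernel's pipe primitive. This C sketch (the function name is hypothetical) reproduces the core of a pipeline such as `producer | wc -l`: it creates a pipe, forks, lets the child write into one end and has the parent count newlines read from the other. A real shell would additionally use `dup2()` to attach the pipe ends to standard input and output and then `exec` both programs; that step is omitted here to keep the example self-contained.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* The child acts as the producer and writes `text` into the pipe;
 * the parent acts as `wc -l` and counts newlines.
 * Returns the line count, or -1 on any error. */
long pipe_and_count_lines(const char *text)
{
    int fds[2];                 /* fds[0]: read end, fds[1]: write end */
    if (pipe(fds) == -1)
        return -1;

    pid_t child = fork();
    if (child == -1) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }

    if (child == 0) {
        /* Child (producer). A shell would instead dup2(fds[1], STDOUT_FILENO)
           and exec() the producer program. */
        close(fds[0]);
        size_t len = strlen(text), off = 0;
        while (off < len) {
            ssize_t w = write(fds[1], text + off, len - off);
            if (w <= 0)
                _exit(1);
            off += (size_t)w;
        }
        _exit(0);               /* exiting closes fds[1], signalling EOF */
    }

    /* Parent (consumer). Close our copy of the write end so that read()
       returns 0 (EOF) once the child has finished writing. */
    close(fds[1]);
    long lines = 0;
    int read_failed = 0;
    char buf[256];
    for (;;) {
        ssize_t n = read(fds[0], buf, sizeof buf);
        if (n == 0)
            break;              /* EOF */
        if (n < 0) {
            read_failed = 1;
            break;
        }
        for (ssize_t i = 0; i < n; i++)
            if (buf[i] == '\n')
                lines++;
    }
    close(fds[0]);

    int status;
    if (waitpid(child, &status, 0) == -1 || !WIFEXITED(status)
        || WEXITSTATUS(status) != 0 || read_failed)
        return -1;
    return lines;
}
```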

On desktop systems, the most popular user interfaces are the GUI shells, packaged together with extensive desktop environments, such as the K Desktop Environment (KDE), GNOME, Cinnamon, Unity, LXDE, Pantheon and Xfce, though a variety of additional user interfaces exist. Most popular user interfaces are based on the X Window System, often simply called "X". It provides network transparency and permits a graphical application running on one system to be displayed on another where a user may interact with the application; however, certain extensions of the X Window System are not capable of working over the network.[50] Several popular X display servers exist, with the reference implementation, X.Org Server, being the most popular.

Different window manager variants exist for X11, including tiling, dynamic, stacking and compositing ones. Simpler X window managers, such as FVWM, Enlightenment, and Window Maker, provide minimal functionality compared with desktop environments. A window manager provides a means to control the placement and appearance of individual application windows, and interacts with the X Window System. Desktop environments include window managers as part of their standard installations (Mutter for GNOME, KWin for KDE, Xfwm for Xfce), although users may choose to use a different window manager if preferred.

Wayland is a display server protocol intended as a replacement for the aging X11 protocol; as of 2014, Wayland had not yet received wider adoption. Unlike X11, Wayland does not need an external window manager and compositing manager; instead, a Wayland compositor takes the role of the display server, window manager and compositing manager. Weston is the reference implementation of Wayland, while GNOME's Mutter and KDE's KWin are being ported to Wayland as standalone display servers instead of merely compositing window managers. Enlightenment has been successfully ported to Wayland as of version 19.

Video input infrastructure

Linux currently has two modern kernel-userspace APIs for handling video input devices: the V4L2 API for video streams and radio, and the DVB API for digital TV reception.[51] Due to the complexity and diversity of devices, and the large number of formats and standards handled by those APIs, this infrastructure needs to evolve to better fit other devices. Also, a good userspace device library is key to enabling userspace applications to work with all formats supported by those devices.[52][53]

Development

Linux-based distributions are intended by developers for interoperability with other operating systems and established computing standards. Linux systems adhere to POSIX,[55] SUS,[56] LSB, ISO, and ANSI standards where possible, although to date only one Linux distribution has been POSIX.1 certified, Linux-FT.[57][58]
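Programs can ask which POSIX revision the system claims to implement. Such a claim is not the same as formal certification, which, as noted above, only Linux-FT obtained. This C sketch compares the compile-time `_POSIX_VERSION` macro, which comes from the C library headers, with the run-time `sysconf(_SC_VERSION)` value; the function name `posix_version` is illustrative. A value of `200809L` denotes POSIX.1-2008.

```c
#include <unistd.h>

/* Store the POSIX.1 revision the C library headers advertise at compile
 * time in *compile_time (if non-NULL), and return the revision reported
 * at run time by sysconf(), or -1 if the query is not supported. */
long posix_version(long *compile_time)
{
    if (compile_time != NULL)
        *compile_time = _POSIX_VERSION;
    return sysconf(_SC_VERSION);
}
```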

Free software projects, although developed through collaboration, are often produced independently of each other. The fact that the software licenses explicitly permit redistribution, however, provides a basis for larger scale projects that collect the software produced by stand-alone projects and make it available all at once in the form of a Linux distribution.

Many Linux distributions, or "distros", manage a remote collection of system software and application software packages available for download and installation through a network connection. This allows users to adapt the operating system to their specific needs. Distributions are maintained by individuals, loose-knit teams, volunteer organizations, and commercial entities. A distribution is responsible for the default configuration of the installed Linux kernel, general system security, and more generally integration of the different software packages into a coherent whole. Distributions typically use a package manager such as dpkg, Synaptic, YaST, yum, or Portage to install, remove and update all of a system's software from one central location.

Community

A distribution is largely driven by its developer and user communities. Some vendors develop and fund their distributions on a volunteer basis, Debian being a well-known example. Others maintain a community version of their commercial distributions, as Red Hat does with Fedora and SUSE does with openSUSE.

In many cities and regions, local associations known as Linux User Groups (LUGs) seek to promote their preferred distribution and by extension free software. They hold meetings and provide free demonstrations, training, technical support, and operating system installation to new users. Many Internet communities also provide support to Linux users and developers. Most distributions and free software / open-source projects have IRC chatrooms or newsgroups. Online forums are another means for support, with notable examples being LinuxQuestions.org and the various distribution specific support and community forums, such as ones for Ubuntu, Fedora, and Gentoo. Linux distributions host mailing lists; commonly there will be a specific topic such as usage or development for a given list.

There are several technology websites with a Linux focus. Print magazines on Linux often include cover disks including software or even complete Linux distributions.[59][60]

Although Linux distributions are generally available without charge, several large corporations sell, support, and contribute to the development of the components of the system and of free software. An analysis of the Linux kernel showed 75 percent of the code from December 2008 to January 2010 was developed by programmers working for corporations, leaving about 18 percent to volunteers and 7 percent unclassified.[61] Major corporations that provide contributions include Dell, IBM, HP, Oracle, Sun Microsystems (now part of Oracle), SUSE, and Nokia. A number of corporations, notably Red Hat, Canonical, and SUSE, have built a significant business around Linux distributions.

The free software licenses, on which the various software packages of a distribution built on the Linux kernel are based, explicitly accommodate and encourage commercialization; the relationship between a Linux distribution as a whole and individual vendors may be seen as symbiotic. One common business model of commercial suppliers is charging for support, especially for business users. A number of companies also offer a specialized business version of their distribution, which adds proprietary support packages and tools to administer higher numbers of installations or to simplify administrative tasks.

Another business model is to give away the software in order to sell hardware. This used to be the norm in the computer industry, with operating systems such as CP/M, Apple DOS and versions of Mac OS prior to 7.6 freely copyable (but not modifiable). As computer hardware standardized throughout the 1980s, it became more difficult for hardware manufacturers to profit from this tactic, as the OS would run on any manufacturer's computer that shared the same architecture.

Programming on Linux

Most Linux distributions support dozens of programming languages. The original development tools used for building both Linux applications and operating system programs are found within the GNU toolchain, which includes the GNU Compiler Collection (GCC) and the GNU build system. Amongst others, GCC provides compilers for Ada, C, C++, Go and Fortran. Many programming languages have a cross-platform reference implementation that supports Linux, for example PHP, Perl, Ruby, Python, Java, Go, Rust and Haskell. First released in 2003, the LLVM project provides an alternative cross-platform open-source compiler for many languages. Proprietary compilers for Linux include the Intel C++ Compiler, Sun Studio, and IBM XL C/C++ Compiler. BASIC is supported in Visual Basic-like forms such as Gambas, FreeBASIC, and XBasic, and, for terminal programming in the style of QuickBASIC or Turbo BASIC, in the form of QB64.

As is common on Unix-like systems, Linux includes traditional special-purpose programming languages targeted at scripting, text processing and system configuration and management in general. Linux distributions support shell scripts, awk, sed and make. Many programs also have an embedded programming language to support configuring or programming themselves. For example, regular expressions are supported in programs like grep and locate, while advanced text editors, like GNU Emacs, have a complete Lisp interpreter built in.
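Regular-expression matching of the kind grep performs is also available to C programs through the POSIX `<regex.h>` interface (`regcomp`, `regexec`, `regfree`) provided by Linux C libraries. This minimal sketch (the function name is hypothetical) counts the input lines that match an extended regular expression, roughly what `grep -E -c` reports for the same lines; it is not how grep itself is implemented.

```c
#include <regex.h>
#include <stddef.h>

/* Count how many of the `n` strings in `lines` match the extended
 * regular expression `pattern`. Returns -1 if the pattern does not
 * compile. */
int count_matching(const char *pattern, const char *const lines[], size_t n)
{
    regex_t re;
    /* REG_EXTENDED: ERE syntax, as with grep -E.
       REG_NOSUB: only a yes/no answer is needed, not match positions. */
    if (regcomp(&re, pattern, REG_EXTENDED | REG_NOSUB) != 0)
        return -1;

    int count = 0;
    for (size_t i = 0; i < n; i++) {
        if (regexec(&re, lines[i], 0, NULL, 0) == 0)
            count++;
    }
    regfree(&re);
    return count;
}
```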

Most distributions also include support for PHP, Perl, Ruby, Python and other dynamic languages. While not as common, Linux also supports C# (via Mono), Vala, and Scheme. A number of Java Virtual Machines and development kits run on Linux, including the original Sun Microsystems JVM (HotSpot), and IBM's J2SE RE, as well as many open-source projects like Kaffe and JikesRVM.

GNOME and KDE are popular desktop environments and provide a framework for developing applications. These projects are based on the GTK+ and Qt widget toolkits, respectively, which can also be used independently of the larger framework. Both support a wide variety of languages. There are a number of integrated development environments available including Anjuta, Code::Blocks, CodeLite, Eclipse, Geany, ActiveState Komodo, KDevelop, Lazarus, MonoDevelop, NetBeans, and Qt Creator, while the long-established editors Vim, nano and Emacs remain popular.[62]

Uses

As well as those designed for general purpose use on desktops and servers, distributions may be specialized for different purposes including: computer architecture support, embedded systems, stability, security, localization to a specific region or language, targeting of specific user groups, support for real-time applications, or commitment to a given desktop environment. Furthermore, some distributions deliberately include only free software. Currently, over three hundred distributions are actively developed, with about a dozen distributions being most popular for general-purpose use.[63]

Linux is a widely ported operating system kernel. The Linux kernel runs on a highly diverse range of computer architectures: in the hand-held ARM-based iPAQ and the mainframe IBM System z9, System z10; in devices ranging from mobile phones to supercomputers.[64] Specialized distributions exist for less mainstream architectures. The ELKS kernel fork can run on Intel 8086 or Intel 80286 16-bit microprocessors, while the µClinux kernel fork may run on systems without a memory management unit. The kernel also runs on architectures that were only ever intended to use a manufacturer-created operating system, such as Macintosh computers (with both PowerPC and Intel processors), PDAs, video game consoles, portable music players, and mobile phones.
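Because the same kernel runs on so many architectures, portable programs sometimes need to ask which one they are running on. A minimal C sketch using the `uname()` system call, whose `machine` field reports names such as `x86_64`, `aarch64` or `s390x`; the function name `kernel_machine` is illustrative.

```c
#include <string.h>
#include <sys/utsname.h>

/* Copy the hardware architecture name reported by the running kernel
 * (the `machine` field of struct utsname) into `out`, which has room
 * for `out_len` bytes. Returns 0 on success or -1 on failure. */
int kernel_machine(char *out, size_t out_len)
{
    if (out_len == 0)
        return -1;

    struct utsname u;
    if (uname(&u) == -1)
        return -1;

    /* Copy and always NUL-terminate, truncating if necessary. */
    strncpy(out, u.machine, out_len - 1);
    out[out_len - 1] = '\0';
    return 0;
}
```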

There are several industry associations and hardware conferences devoted to maintaining and improving support for diverse hardware under Linux, such as FreedomHEC.

Desktop

Visible software components of the Linux desktop stack include the display server, widget engines, and some of the more widespread widget toolkits. There are also components not directly visible to end users, including D-Bus and PulseAudio.

No single official Linux desktop exists: rather, desktop environments and Linux distributions select components from a pool of free and open-source software with which they construct a GUI implementing a more or less strict design guide. GNOME, for example, has its human interface guidelines as a design guide, which gives the human–machine interface an important role, not just when doing the graphical design, but also when considering people with disabilities, and even when focusing on security.[66]

The collaborative nature of free software development allows distributed teams to perform language localization of some Linux distributions for use in locales where localizing proprietary systems would not be cost-effective. For example, the Sinhalese language version of the Knoppix distribution became available significantly before Microsoft translated Windows XP into Sinhalese.[citation needed] In this case the Lanka Linux User Group played a major part in developing the localized system by combining the knowledge of university professors, linguists, and local developers.

Performance and applications

The performance of Linux on the desktop has been a controversial topic;[citation needed] for example, in 2007 Con Kolivas accused the Linux community of favoring performance on servers. He quit Linux kernel development out of frustration with this lack of focus on the desktop, and then gave a "tell all" interview on the topic.[67] Since then a significant amount of development has focused on improving the desktop experience. Projects such as Upstart and systemd aim for a faster boot time; the Wayland and Mir projects aim at replacing X11 while enhancing desktop performance, security and appearance.[68]

Many popular applications are available for a wide variety of operating systems. For example, Mozilla Firefox, OpenOffice.org/LibreOffice and Blender have downloadable versions for all major operating systems. Furthermore, some applications initially developed for Linux, such as Pidgin and GIMP, were ported to other operating systems (including Windows and Mac OS X) due to their popularity. In addition, a growing number of proprietary desktop applications are also supported on Linux,[69] such as Autodesk Maya, Softimage XSI and Apple Shake in the high-end field of animation and visual effects; see the List of proprietary software for Linux for more details. There are also several companies that have ported their own or other companies' games to Linux, with Linux also being a supported platform on both the popular Steam and Desura digital-distribution services.[70]

Many other types of applications available for Microsoft Windows and Mac OS X also run on Linux. Commonly, either a free software application will exist which does the functions of an application found on another operating system, or that application will have a version that works on Linux, such as with Skype and some video games like Dota 2 and Team Fortress 2. Furthermore, the Wine project provides a Windows compatibility layer to run unmodified Windows applications on Linux. It is sponsored by commercial interests including CodeWeavers, which produces a commercial version of the software. Since 2009, Google has also provided funding to the Wine project.[71][72] CrossOver, a proprietary solution based on the open-source Wine project, supports running Windows versions of Microsoft Office, Intuit applications such as Quicken and QuickBooks, Adobe Photoshop versions through CS2, and many popular games such as World of Warcraft. In other cases, where there is no Linux port of some software in areas such as desktop publishing[73] and professional audio,[74][75][76] there is equivalent software available on Linux.

Components and installation

Besides externally visible components, such as X window managers, a non-obvious but quite central role is played by the programs hosted by freedesktop.org, such as D-Bus or PulseAudio; both major desktop environments (GNOME and KDE) include them, each offering graphical front-ends written using the corresponding toolkit (GTK+ or Qt). A display server is another component, which for the longest time has been communicating in the X11 display server protocol with its clients; prominent software talking X11 includes the X.Org Server and Xlib. Frustration over the cumbersome X11 core protocol, and especially over its numerous extensions, has led to the creation of a new display server protocol, Wayland.

Installing, updating and removing software in Linux is typically done through the use of package managers such as the Synaptic Package Manager, PackageKit, and Yum Extender. While most major Linux distributions have extensive repositories, often containing tens of thousands of packages, not all the software that can run on Linux is available from the official repositories. Alternatively, users can install packages from unofficial repositories, download pre-compiled packages directly from websites, or compile the source code by themselves. All these methods come with different degrees of difficulty; compiling the source code is in general considered a challenging process for new Linux users, but it is hardly needed in modern distributions and is not a method specific to Linux.

Samples of graphical desktop environments:

- GNOME Shell (GNOME 3)
- KDE Plasma (KDE 4)
- MATE (GNOME 2)
- Trinity (KDE 3)

Netbooks

Linux distributions have also become popular in the netbook market, with many devices such as the ASUS Eee PC and Acer Aspire One shipping with customized Linux distributions installed.[77] In 2009, Google announced its Google Chrome OS, a minimal Linux-based operating system whose applications consist only of the Google Chrome browser, a file manager and a media player.[78] The netbooks that shipped with the operating system, termed Chromebooks, started appearing in the market in June 2011.[79]

Servers, mainframes and supercomputers

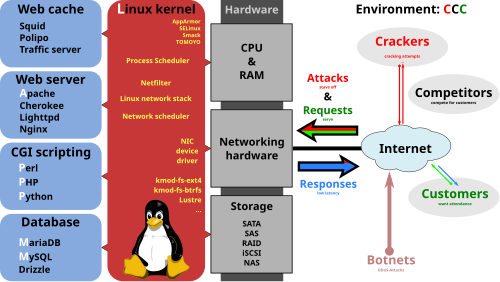

Broad overview of the LAMP software bundle, displayed here together with Squid. A high-performance and high-availability web server solution providing security in a hostile environment.

Linux distributions have long been used as server operating systems, and have risen to prominence in that area; Netcraft reported in September 2006 that eight of the ten most reliable internet hosting companies ran Linux distributions on their web servers.[80] Since June 2008, Linux distributions represented five of the top ten, FreeBSD three of ten, and Microsoft two of ten;[81] since February 2010, Linux distributions represented six of the top ten, FreeBSD two of ten, and Microsoft one of ten.[82]

Linux distributions are the cornerstone of the LAMP server-software combination (Linux, Apache, MariaDB/MySQL, Perl/PHP/Python) which has achieved popularity among developers, and which is one of the more common platforms for website hosting.[83]

Linux distributions have become increasingly popular on mainframes in the last decade partly due to pricing and the open-source model.[16][citation needed] In December 2009, computer giant IBM reported that it would predominantly market and sell mainframe-based Enterprise Linux Server.[84]

Linux distributions are also commonly used as operating systems for supercomputers; in the decade since the Earth Simulator supercomputer, all the fastest supercomputers have used Linux. As of November 2014, 97% of the world's 500 fastest supercomputers run some variant of Linux,[85] including the top 80.[86]

Smart devices

Several operating systems for smart devices, such as smartphones, tablet computers, smart TVs, and in-vehicle infotainment (IVI) systems, are Linux-based. The three major platforms are Mer, Tizen, and Android.

Android has become the dominant mobile operating system for smartphones: during the second quarter of 2013, 79.3% of smartphones sold worldwide used Android.[87] Android is also a popular OS for tablets, and Android smart TVs and in-vehicle infotainment systems have also appeared on the market.

Cell phones and PDAs running Linux on open-source platforms became more common from 2007; examples include the Nokia N810, Openmoko's Neo1973, and the Motorola ROKR E8. Continuing the trend, Palm (later acquired by HP) produced a new Linux-derived operating system, webOS, which is built into its new line of Palm Pre smartphones.

Nokia's Maemo, one of the earliest mobile OSes, was based on Debian.[88] It was later merged with Intel's Moblin, another Linux-based OS, to form MeeGo.[89] The project was later terminated in favor of Tizen, an operating system targeted at mobile devices as well as in-vehicle infotainment (IVI). Tizen is a project within The Linux Foundation. Several Samsung products are already running Tizen, Samsung Gear 2 being the most significant example.[90] Samsung Z smartphones will use Tizen instead of Android.[91]

As a result of MeeGo's termination, the Mer project forked the MeeGo codebase to create a basis for mobile-oriented OSes.[92] In July 2012, Jolla announced Sailfish OS, their own mobile OS built upon Mer technology.

Mozilla's Firefox OS consists of the Linux kernel, a hardware abstraction layer, a web standards based runtime environment and user interface, and an integrated web browser.[93]

Canonical has released Ubuntu Touch, its own mobile OS that aims to bring convergence to the user experience on the OS and its desktop counterpart, Ubuntu. The OS also provides a full Ubuntu desktop when connected to an external monitor.[94]

Embedded devices

Due to its low cost and ease of customization, Linux is often used in embedded systems. In the non-mobile telecommunications equipment sector, the majority of customer-premises equipment (CPE) hardware runs some Linux-based operating system. OpenWrt is a community-driven example upon which many OEM firmware images are based.

For example, the popular TiVo digital video recorder also uses a customized Linux,[98] as do several network firewalls and routers from such makers as Cisco/Linksys. The Korg OASYS, the Korg KRONOS, the Yamaha Motif XS/Motif XF music workstations,[99] Yamaha S90XS/S70XS, Yamaha MOX6/MOX8 synthesizers, Yamaha Motif-Rack XS tone generator module, and Roland RD-700GX digital piano also run Linux. Linux is also used in stage lighting control systems, such as the WholeHogIII console.[100]

Gaming

Several games run on traditional desktop Linux, many of them originally written for other desktop operating systems. However, because most game developers pay little attention to a market as small as desktop Linux, only a few prominent games have been available for it. On the other hand, as a popular mobile platform, Android has attracted considerable developer interest, and many games are available for Android.

On 14 February 2013, Valve released a Linux version of Steam, a popular game distribution platform on PC.[101] Many Steam games were ported to Linux.[102] On 13 December 2013, Valve released SteamOS, a gaming-oriented OS based on Debian, for beta testing, and has plans to ship Steam Machines as a gaming and entertainment platform.[103] Valve has also developed VOGL, an OpenGL debugger intended to aid video game development,[104] and has ported its Source game engine to desktop Linux.[105] As a result of Valve's effort, several prominent games such as Dota 2, Team Fortress 2, Portal, Portal 2 and Left 4 Dead 2 are now natively available on desktop Linux.

On 31 July 2013, Nvidia released Shield as an attempt to use Android as a specialized gaming platform.[106]

Specialized uses

Due to the flexibility, customizability and free and open-source nature of Linux, it can be highly tuned for a specific purpose. There are two main methods for creating a specialized Linux distribution: building from scratch, or building on a general-purpose distribution as a base. The distributions often used as a base include Debian, Fedora, Ubuntu (which is itself based on Debian), Arch Linux, Gentoo, and Slackware. In contrast, Linux distributions built from scratch do not have general-purpose bases; instead, they focus on the JeOS philosophy by including only necessary components and avoiding resource overhead caused by components considered redundant in the distribution's use cases.

Home theater PC

A home theater PC (HTPC) is a PC that is mainly used as an entertainment system, especially a home theater system. It is normally connected to a television, and often to an additional audio system.

OpenELEC, a Linux distribution that incorporates the media center software Kodi, is an OS tuned specifically for an HTPC. Having been built from the ground up adhering to the JeOS principle, the OS is very lightweight and well suited to the confined usage range of an HTPC.

There are also special editions of Linux distributions that include the MythTV media center software, such as Mythbuntu, a special edition of Ubuntu.

Digital security

Kali Linux is a Debian-based Linux distribution designed for digital forensics and penetration testing. It comes preinstalled with several software applications for penetration testing and identifying security exploits.[107]

System rescue

Linux Live CD sessions have long been used as a tool for recovering data from a broken computer system and for repairing the system. Building upon that idea, several Linux distributions tailored for this purpose have emerged, most of which use GParted as a partition editor, with additional data recovery and system repair software:

- GParted Live – a Debian-based distribution developed by the GParted project.

- Parted Magic – a commercial Linux distribution.

- SystemRescueCD – a Gentoo-based distribution with support for editing Windows registry.

In space

SpaceX uses multiple redundant flight computers in a fault-tolerant design in the Falcon 9 rocket. Each Merlin engine is controlled by three voting computers, with two physical processors per computer that constantly check each other's operation. Linux is not inherently fault-tolerant (no operating system is, as fault tolerance is a function of the whole system including the hardware), but the flight computer software makes it so for its purpose.[108] For flexibility, commercial off-the-shelf parts and a system-wide "radiation-tolerant" design are used instead of radiation-hardened parts.[108] As of September 2014, SpaceX has made 13 launches of the Falcon 9 since 2010, and all 13 have successfully delivered their primary payloads to Earth orbit, including some missions bound for the International Space Station.

In addition, Windows had been used as an operating system on non-mission-critical systems, such as laptops used on board the space station, but it has been replaced with Linux; the first Linux-powered humanoid robot is also undergoing in-flight testing.[109]
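The Falcon 9 arrangement described above, three voting computers each made of two processors that check each other, follows a general redundancy pattern. Below is a hypothetical Python sketch of that pattern only; nothing in it is taken from SpaceX's flight software. It assumes that a computer casts a vote only when its two processors agree, and that a command is accepted only if a strict majority of all computers (not just those still voting) agree on it.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple

# Hypothetical model of a redundant controller, for illustration only.

def self_checked(cpu_a: str, cpu_b: str) -> Optional[str]:
    """One computer with two processors checking each other: if their
    outputs disagree, the computer withholds its vote (returns None)."""
    return cpu_a if cpu_a == cpu_b else None

def majority_vote(votes: Sequence[str], total: int) -> Optional[str]:
    """Return the value cast by more than half of `total` units, else None."""
    if not votes:
        return None
    value, count = Counter(votes).most_common(1)[0]
    return value if 2 * count > total else None

def vote_over_computers(computers: Sequence[Tuple[str, str]]) -> Optional[str]:
    # Computers that fail their internal cross-check drop out, but the
    # majority is still measured against the full number of computers.
    votes = [v for v in (self_checked(a, b) for a, b in computers) if v is not None]
    return majority_vote(votes, total=len(computers))
```

With one computer failing its self-check, or one computer producing a consistent but different answer, the other two still carry the vote. If two computers drop out, this sketch returns None rather than trusting a single unit; that is one conservative policy among several possible ones.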

The Jet Propulsion Laboratory has used Linux for a number of years "to help with projects relating to the construction of unmanned space flight and deep space exploration"; NASA uses Linux in robotics in the Mars rover, and Ubuntu Linux to "save data from satellites".[110]

Teaching

Linux distributions have been created to provide hands-on experience with coding and source code to students, on devices such as the Raspberry Pi. In addition to producing a practical device, the intention is to show students "how things work under the hood".

Many quantitative studies of free/open-source software focus on topics including market share and reliability, with numerous studies specifically examining Linux.[111] The Linux market was growing rapidly, and the revenue of servers, desktops, and packaged software running Linux was expected to exceed $35.7 billion by 2008.[112] Analysts and proponents attribute the relative success of Linux to its security, reliability, low cost, and freedom from vendor lock-in.[113][114]

- Desktops and laptops
    - According to web server statistics, as of December 2014, the estimated market share of Linux on desktop computers is 1.25%. In comparison, Microsoft Windows has a market share of around 91%, while Mac OS covers around 7%.[17]
- Web servers
    - IDC's Q1 2007 report indicated that Linux held 12.7% of the overall server market at that time.[115] This estimate was based on the number of Linux servers sold by various companies, and did not include server hardware purchased separately which had Linux installed on it later. In September 2008, Microsoft CEO Steve Ballmer stated that 60% of Web servers ran Linux versus 40% that ran Windows Server.[116]
- Mobile devices
    - Android, which is based on the Linux kernel, has become the dominant operating system for smartphones. During the second quarter of 2013, 79.3% of smartphones sold worldwide used Android.[87] Android is also a popular operating system for tablets, being responsible for more than 60% of tablet sales as of 2013.[117] According to web server statistics, as of December 2014, Android has a market share of about 46%, with iOS holding 45%, and the remaining 9% attributed to various niche platforms.[118]
- Film production
    - For years Linux has been the platform of choice in the film industry. The first major film produced on Linux servers was 1997's Titanic.[119][120] Since then major studios including DreamWorks Animation, Pixar, Weta Digital, and Industrial Light & Magic have migrated to Linux.[121][122][123] According to the Linux Movies Group, more than 95% of the servers and desktops at large animation and visual effects companies use Linux.[124]
- Use in government
    - Linux distributions have also gained popularity with various local and national governments. The federal government of Brazil is well known for its support for Linux.[125][126] News of the Russian military creating its own Linux distribution has also surfaced, and has come to fruition as the G.H.ost Project.[127] The Indian state of Kerala has gone to the extent of mandating that all state high schools run Linux on their computers.[128][129] China uses Linux exclusively as the operating system for its Loongson processor family to achieve technology independence.[130] In Spain, some regions have developed their own Linux distributions, which are widely used in education and official institutions, like gnuLinEx in Extremadura and Guadalinex in Andalusia. France and Germany have also taken steps toward the adoption of Linux.[131]

Copyright, trademark, and naming

The Linux kernel is licensed under the GNU General Public License (GPL), version 2. The GPL requires that anyone who distributes software based on source code under this license must make the originating source code (and any modifications) available to the recipient under the same terms.[132]

Other key components of a typical Linux distribution are also mainly licensed under the GPL, but they may use other licenses; many libraries use the GNU Lesser General Public License (LGPL), a more permissive variant of the GPL, and the X.org implementation of the X Window System uses the MIT License.

Torvalds states that the Linux kernel will not move from version 2 of the GPL to version 3.[133][134] He specifically dislikes some provisions in the new license which prohibit the use of the software in digital rights management.[135] It would also be impractical to obtain permission from all the copyright holders, who number in the thousands.[136]

A 2001 study of Red Hat Linux 7.1 found that this distribution contained 30 million source lines of code.[137] Using the Constructive Cost Model, the study estimated that this distribution required about eight thousand man-years of development time. According to the study, if all this software had been developed by conventional proprietary means, it would have cost about $1.48 billion (2015 US dollars) to develop in the United States.[137] Most of the source code (71%) was written in the C programming language, but many other languages were used, including C++, Lisp, assembly language, Perl, Python, Fortran, and various shell scripting languages. Slightly over half of all lines of code were licensed under the GPL. The Linux kernel itself was 2.4 million lines of code, or 8% of the total.[137]
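Figures such as "30 million source lines of code" depend on what counts as a line. One common convention counts physical source lines: lines containing at least one character that is neither whitespace nor part of a comment. The sketch below assumes that convention and C-style comments only, and the cited study's exact counting rules may differ. It is deliberately simplified: it does not parse string literals, so a `/*` inside a string would be mistaken for the start of a comment.

```python
def count_physical_sloc(source: str) -> int:
    """Count lines with at least one character that is neither whitespace
    nor inside a C-style comment (/* ... */ or // ...)."""
    sloc = 0
    in_block = False  # are we inside a /* ... */ comment that spans lines?
    for line in source.splitlines():
        has_code = False
        i = 0
        while i < len(line):
            if in_block:
                end = line.find("*/", i)
                if end == -1:
                    break  # rest of the line is still inside the block comment
                in_block = False
                i = end + 2
            elif line.startswith("//", i):
                break  # rest of the line is a line comment
            elif line.startswith("/*", i):
                in_block = True
                i += 2
            else:
                if not line[i].isspace():
                    has_code = True
                i += 1
        if has_code:
            sloc += 1
    return sloc
```

Applied to a short C file with a two-line header comment, an include, a blank line and a three-line `main` function, this counts four lines: the comment-only and blank lines are skipped, while lines that mix code and comments are counted.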

In a later study, the same analysis was performed for Debian version 4.0 (etch, which was released in 2007).[138] This distribution contained close to 283 million source lines of code, and the study estimated that it would have required about seventy-three thousand man-years and cost US$8.16 billion (in 2015 dollars) to develop by conventional means.
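The Constructive Cost Model (COCOMO) used in these studies has several variants, and the exact settings the studies used are not stated here. As an assumption for illustration, the sketch below uses the basic COCOMO "organic mode" formula, effort = 2.4 × KSLOC^1.05 person-months (KSLOC being thousands of source lines), and converts a sum of per-package estimates to person-years. The function names are hypothetical.

```python
# Basic COCOMO, "organic" mode (Boehm, 1981). These coefficients are an
# assumption for illustration; the cited studies' exact settings may differ.
A, B = 2.4, 1.05  # effort (person-months) = A * KSLOC ** B

def effort_person_months(sloc: int) -> float:
    """Estimated effort to develop one program of `sloc` source lines."""
    return A * (sloc / 1000) ** B

def effort_person_years(package_sizes) -> float:
    """Sum per-package estimates, treating each package as a separate program."""
    return sum(effort_person_months(s) for s in package_sizes) / 12
```

Because the exponent B is greater than 1, the model predicts diseconomies of scale: estimating packages separately and summing gives less effort than treating the same lines as one program, so how a distribution is divided into programs affects the total.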

In the United States, the name Linux is a trademark registered to Linus Torvalds.[4] Initially, nobody registered it, but on 15 August 1994, William R. Della Croce, Jr. filed for the trademark Linux, and then demanded royalties from Linux distributors. In 1996, Torvalds and some affected organizations sued him to have the trademark assigned to Torvalds, and, in 1997, the case was settled.[139] The licensing of the trademark has since been handled by the Linux Mark Institute (LMI). Torvalds has stated that he trademarked the name only to prevent someone else from using it. LMI originally charged a nominal sublicensing fee for use of the Linux name as part of trademarks,[140] but later changed this in favor of offering a free, perpetual worldwide sublicense.[141]

The Free Software Foundation prefers GNU/Linux as the name when referring to the operating system as a whole, because it considers Linux to be a variant of the GNU operating system, initiated in 1983 by Richard Stallman, president of the Free Software Foundation.[13][12]

A minority of public figures and software projects other than Stallman and the Free Software Foundation, notably Debian (which had been sponsored by the Free Software Foundation up to 1996[142]), also use GNU/Linux when referring to the operating system as a whole.[98][143][144] Most media and common usage,[original research?] however, refer to this family of operating systems simply as Linux, as do many large Linux distributions (for example, SUSE Linux and Red Hat). As of May 2011, about 8% to 13% of a modern Linux distribution is made of GNU components (the range depending on whether GNOME is considered part of GNU), as determined by counting lines of source code making up Ubuntu's "Natty" release; meanwhile, about 9% is taken by the Linux kernel.[145]
