From Wikipedia, the free encyclopedia

Biochemistry, sometimes called biological chemistry, is the study of chemical processes within and relating to living organisms.[1] By controlling information flow through biochemical signaling and the flow of chemical energy through metabolism, biochemical processes give rise to the complexity of life. Over the last 40 years, biochemistry has become so successful at explaining living processes that now almost all areas of the life sciences from botany to medicine are engaged in biochemical research.[2] Today, the main focus of pure biochemistry is in understanding how biological molecules give rise to the processes that occur within living cells, which in turn relates greatly to the study and understanding of whole organisms.

Biochemistry is closely related to molecular biology, the study of the molecular mechanisms by which genetic information encoded in DNA is able to result in the processes of life. Depending on the exact definition of the terms used, molecular biology can be thought of as a branch of biochemistry, or biochemistry as a tool with which to investigate and study molecular biology.

Much of biochemistry deals with the structures, functions and interactions of biological macromolecules, such as proteins, nucleic acids, carbohydrates and lipids, which provide the structure of cells and perform many of the functions associated with life. The chemistry of the cell also depends on the reactions of smaller molecules and ions. These can be inorganic, for example water and metal ions, or organic, for example the amino acids which are used to synthesize proteins. The mechanisms by which cells harness energy from their environment via chemical reactions are known as metabolism. The findings of biochemistry are applied primarily in medicine, nutrition, and agriculture. In medicine, biochemists investigate the causes and cures of disease. In nutrition, they study how to maintain health and study the effects of nutritional deficiencies. In agriculture, biochemists investigate soil and fertilizers, and try to discover ways to improve crop cultivation, crop storage and pest control.

History

Gerty Cori and Carl Cori jointly won the Nobel Prize in 1947 for their discovery of the Cori cycle at RPMI.

It once was generally believed that life and its materials had some essential property or substance (often referred to as the "vital principle") distinct from any found in non-living matter, and it was thought that only living beings could produce the molecules of life.[3] Then, in 1828, Friedrich Wöhler published a paper on the synthesis of urea, proving that organic compounds can be created artificially.[4]

The beginning of biochemistry may have been the discovery of the first enzyme, diastase (today called amylase), in 1833 by Anselme Payen.[5] Eduard Buchner contributed the first demonstration of a complex biochemical process outside a cell in 1896: alcoholic fermentation in cell extracts of yeast.[6] Although the term "biochemistry" seems to have been first used in 1882, it is generally accepted that the formal coinage of biochemistry occurred in 1903 by Carl Neuberg, a German chemist.[7] Since then, biochemistry has advanced, especially since the mid-20th century, with the development of new techniques such as chromatography, X-ray diffraction, dual polarisation interferometry, NMR spectroscopy, radioisotopic labeling, electron microscopy, and molecular dynamics simulations. These techniques allowed for the discovery and detailed analysis of many molecules and metabolic pathways of the cell, such as glycolysis and the Krebs cycle (citric acid cycle).

Another significant historic event in biochemistry is the discovery of the gene and its role in the transfer of information in the cell. This part of biochemistry is often called molecular biology.[8] In the 1950s, James D. Watson, Francis Crick, Rosalind Franklin, and Maurice Wilkins were instrumental in solving DNA structure and suggesting its relationship with genetic transfer of information.[9] In 1958, George Beadle and Edward Tatum received the Nobel Prize for work in fungi showing that one gene produces one enzyme.[10] In 1988, Colin Pitchfork was the first person convicted of murder with DNA evidence, which led to growth of forensic science.[11] More recently, Andrew Z. Fire and Craig C. Mello received the 2006 Nobel Prize for discovering the role of RNA interference (RNAi), in the silencing of gene expression.[12]

Starting materials: the chemical elements of life

Around two dozen of the 92 naturally occurring chemical elements are essential to various kinds of biological life. Most rare elements on Earth are not needed by life (exceptions being selenium and iodine), while a few common ones (aluminum and titanium) are not used. Most organisms share element needs, but there are a few differences between plants and animals. For example, ocean algae use bromine, but land plants and animals seem to need none. All animals require sodium, but some plants do not. Plants need boron and silicon, but animals may not (or may need ultra-small amounts).

Just six elements (carbon, hydrogen, nitrogen, oxygen, calcium, and phosphorus) make up almost 99% of the mass of a human body (see composition of the human body for a complete list). In addition to the six major elements that compose most of the human body, humans require smaller amounts of possibly 18 more.[13]

Biomolecules

The four main classes of molecules in biochemistry (often called biomolecules) are carbohydrates, lipids, proteins, and nucleic acids. Many biological molecules are polymers: in this terminology, monomers are relatively small micromolecules that are linked together to create large macromolecules known as polymers. When monomers are linked together to synthesize a biological polymer, they undergo a process called dehydration synthesis. Different macromolecules can assemble in larger complexes, often needed for biological activity.

Carbohydrates

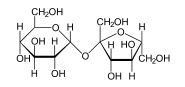

Carbohydrates are made from monomers called monosaccharides. Some of these monosaccharides include glucose (C6H12O6), fructose (C6H12O6), and deoxyribose (C5H10O4). When two monosaccharides undergo dehydration synthesis, water is produced, as two hydrogen atoms and one oxygen atom are lost from the hydroxyl groups of the two monosaccharides.

Lipids

Lipids are usually made from one molecule of glycerol combined with other molecules. In triglycerides, the main group of bulk lipids, there is one molecule of glycerol and three fatty acids. Fatty acids are considered the monomer in that case, and may be saturated (no double bonds in the carbon chain) or unsaturated (one or more double bonds in the carbon chain).

Lipids, especially phospholipids, are also used in various pharmaceutical products, either as co-solubilisers (e.g., in parenteral infusions) or else as drug carrier components (e.g., in a liposome or transfersome).

Proteins

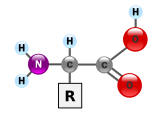

Proteins are very large molecules – macro-biopolymers – made from monomers called amino acids. There are 20 standard amino acids, each containing a carboxyl group, an amino group, and a side-chain (known as an "R" group). The "R" group is what makes each amino acid different, and the properties of the side-chains greatly influence the overall three-dimensional conformation of a protein. When amino acids combine, they form a special bond called a peptide bond through dehydration synthesis, and become a polypeptide, or protein.

To decide whether two proteins are related, that is, whether they are homologous, scientists use sequence-comparison methods. Methods such as sequence alignment and structural alignment are powerful tools that help scientists identify homologies between related molecules.[14]

The relevance of finding homologies among proteins goes beyond forming an evolutionary pattern of protein families. By finding how similar two protein sequences are, we acquire knowledge about their structure and therefore their function.
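As a rough illustration of what a sequence alignment computes, the sketch below implements a basic global alignment (the Needleman-Wunsch dynamic-programming scheme) and reports percent identity. The scoring values (+1 match, -1 mismatch, -1 gap) and the function names are assumptions chosen for readability; practical tools use substitution matrices and more elaborate gap penalties.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-1):
    """Global alignment of sequences a and b.

    Returns (score, aligned_a, aligned_b). Scoring values are
    illustrative assumptions, not biologically calibrated.
    """
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    # Aligning a prefix against nothing costs one gap per residue.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    # Fill the table: each cell is the best of diagonal, up, or left.
    for i in range(1, rows):
        for j in range(1, cols):
            pair = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(
                score[i - 1][j - 1] + pair,
                score[i - 1][j] + gap,
                score[i][j - 1] + gap,
            )
    # Trace back from the bottom-right corner to recover one optimal alignment.
    i, j = len(a), len(b)
    out_a, out_b = [], []
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            pair = match if a[i - 1] == b[j - 1] else mismatch
            if score[i][j] == score[i - 1][j - 1] + pair:
                out_a.append(a[i - 1])
                out_b.append(b[j - 1])
                i -= 1
                j -= 1
                continue
        if i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append("-")
            i -= 1
        else:
            out_a.append("-")
            out_b.append(b[j - 1])
            j -= 1
    return score[-1][-1], "".join(reversed(out_a)), "".join(reversed(out_b))


def percent_identity(aligned_a, aligned_b):
    """Share of alignment columns holding the same residue in both rows."""
    same = sum(x == y and x != "-" for x, y in zip(aligned_a, aligned_b))
    return 100.0 * same / len(aligned_a)


if __name__ == "__main__":
    # Two short made-up sequences, purely for demonstration.
    s, top, bottom = needleman_wunsch("ACGT", "AGT")
    print(s, top, bottom, percent_identity(top, bottom))
```

High identity between two sequences is only suggestive of homology; the threshold at which similarity is taken as evidence of common ancestry depends on sequence length and the statistical model used.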

Nucleic acids

Carbohydrates

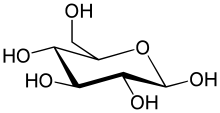

The function of carbohydrates includes energy storage and providing structure. Sugars are carbohydrates, but not all carbohydrates are sugars. There are more carbohydrates on Earth than any other known type of biomolecule; they are used to store energy and genetic information, as well as play important roles in cell-to-cell interactions and communications.

Monosaccharides

The simplest type of carbohydrate is a monosaccharide, which among other properties contains carbon, hydrogen, and oxygen, mostly in a ratio of 1:2:1 (generalized formula CnH2nOn, where n is at least 3). Glucose, one of the most important carbohydrates, is an example of a monosaccharide. So is fructose, the sugar commonly associated with the sweet taste of fruits.[15][a] Some carbohydrates (especially after condensation to oligo- and polysaccharides) contain less carbon relative to H and O, which still are present in 2:1 (H:O) ratio. Monosaccharides can be grouped into aldoses (having an aldehyde group at the end of the chain, e.g. glucose) and ketoses (having a keto group in their chain; e.g. fructose). Both aldoses and ketoses exist in an equilibrium between open-chain forms and (starting with chain lengths of C4) cyclic forms. The cyclic forms are generated by bond formation between one of the hydroxyl groups of the sugar chain and the carbon of the aldehyde or keto group to form a hemiacetal bond. This leads to saturated five-membered (in furanoses) or six-membered (in pyranoses) heterocyclic rings containing one O as heteroatom.
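The generalized formula can be checked with a few lines of code. This is only a sketch; the function name is hypothetical, and it covers only sugars that follow the 1:2:1 ratio exactly.

```python
def monosaccharide_formula(n):
    """Molecular formula CnH2nOn for a monosaccharide with n carbons."""
    if n < 3:
        raise ValueError("the general formula applies for n of at least 3")
    return f"C{n}H{2 * n}O{n}"


if __name__ == "__main__":
    print(monosaccharide_formula(6))  # hexoses such as glucose and fructose
    print(monosaccharide_formula(5))  # pentoses such as ribose
```

Deoxyribose (C5H10O4) is a reminder of the word "mostly": it has one oxygen fewer than the formula predicts for n = 5.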

Disaccharides

Two monosaccharides can be joined using dehydration synthesis, in which a hydrogen atom is removed from the end of one molecule and a hydroxyl group (—OH) is removed from the other; the remaining residues are then attached at the sites from which the atoms were removed. The H—OH or H2O is then released as a molecule of water, hence the term dehydration. The new molecule, consisting of two monosaccharides, is called a disaccharide and is held together by a glycosidic or ether bond. The reverse reaction can also occur, using a molecule of water to split up a disaccharide and break the glycosidic bond; this is termed hydrolysis. The most well-known disaccharide is sucrose, ordinary sugar (in scientific contexts, called table sugar or cane sugar to differentiate it from other sugars). Sucrose consists of a glucose molecule and a fructose molecule joined together. Another important disaccharide is lactose, consisting of a glucose molecule and a galactose molecule. As most humans age, the production of lactase, the enzyme that hydrolyzes lactose back into glucose and galactose, typically decreases. This results in lactase deficiency, also called lactose intolerance.
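The atom bookkeeping of dehydration synthesis and hydrolysis can be sketched with element counts. The helper names below are hypothetical; the code tracks only molecular formulas, not bond positions or stereochemistry.

```python
from collections import Counter

# Element counts for the molecules involved.
GLUCOSE = Counter(C=6, H=12, O=6)
FRUCTOSE = Counter(C=6, H=12, O=6)  # same formula as glucose, different structure
WATER = Counter(H=2, O=1)


def condense(a, b):
    """Join two molecules by dehydration synthesis: sum the atoms, remove one H2O."""
    product = a + b
    product.subtract(WATER)
    return product


def hydrolyze(molecule):
    """Reverse step: add one H2O back before the bond is split."""
    return molecule + WATER


if __name__ == "__main__":
    sucrose = condense(GLUCOSE, FRUCTOSE)
    print(dict(sucrose))             # element counts of the disaccharide
    print(dict(hydrolyze(sucrose)))  # atoms available to the two monosaccharides
```

The same bookkeeping applies to lactose, since galactose shares the formula C6H12O6.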

Sugar polymers are characterized by having reducing or non-reducing ends. A reducing end of a carbohydrate is a carbon atom that can be in equilibrium with the open-chain aldehyde or keto form. If the joining of monomers takes place at such a carbon atom, the free hydroxy group of the pyranose or furanose form is exchanged with an OH-side-chain of another sugar, yielding a full acetal. This prevents opening of the chain to the aldehyde or keto form and renders the modified residue non-reducing. Lactose contains a reducing end at its glucose moiety, whereas the galactose moiety forms a full acetal with the C4-OH group of glucose. Sucrose (saccharose) does not have a reducing end because of full acetal formation between the aldehyde carbon of glucose (C1) and the keto carbon of fructose (C2).

Oligosaccharides and polysaccharides

When a few (around three to six) monosaccharides are joined, it is called an oligosaccharide (oligo- meaning "few"). These molecules tend to be used as markers and signals, as well as having some other uses. Many monosaccharides joined together make a polysaccharide. They can be joined together in one long linear chain, or they may be branched. Two of the most common polysaccharides are cellulose and glycogen, both consisting of repeating glucose monomers.

- Cellulose is made by plants and is an important structural component of their cell walls. Humans can neither manufacture nor digest it.

- Glycogen, on the other hand, is an animal carbohydrate; humans and other animals use it as a form of energy storage.

Use of carbohydrates as an energy source

Glucose is the major energy source in most life forms. For instance, polysaccharides are broken down into their monomers (glycogen phosphorylase removes glucose residues from glycogen). Disaccharides like lactose or sucrose are cleaved into their two component monosaccharides.

Glycolysis (anaerobic)

Glucose is mainly metabolized by a very important ten-step pathway called glycolysis, the net result of which is to break down one molecule of glucose into two molecules of pyruvate; this also produces a net two molecules of ATP, the energy currency of cells, along with two reducing equivalents in the form of NADH (produced from NAD+). This does not require oxygen; if no oxygen is available (or the cell cannot use oxygen), the NAD+ is regenerated by converting the pyruvate to lactate (lactic acid) (e.g., in humans) or to ethanol plus carbon dioxide (e.g., in yeast). Other monosaccharides like galactose and fructose can be converted into intermediates of the glycolytic pathway.[16]

Aerobic

In aerobic cells with sufficient oxygen, as in most human cells, the pyruvate is further metabolized. It is irreversibly converted to acetyl-CoA, giving off one carbon atom as the waste product carbon dioxide and generating another reducing equivalent as NADH. The two molecules of acetyl-CoA (from one molecule of glucose) then enter the citric acid cycle, producing two more molecules of ATP, six more NADH molecules and two reduced (ubi)quinones (via FADH2 as enzyme-bound cofactor), and releasing the remaining carbon atoms as carbon dioxide. The produced NADH and quinol molecules then feed into the enzyme complexes of the respiratory chain, an electron transport system transferring the electrons ultimately to oxygen and conserving the released energy in the form of a proton gradient over a membrane (inner mitochondrial membrane in eukaryotes). Thus, oxygen is reduced to water and the original electron acceptors NAD+ and quinone are regenerated. This is why humans breathe in oxygen and breathe out carbon dioxide. The energy released from transferring the electrons from high-energy states in NADH and quinol is conserved first as a proton gradient and then converted to ATP via ATP synthase. This generates an additional 28 molecules of ATP (24 from the 8 NADH + 4 from the 2 quinols), for a total of 32 molecules of ATP conserved per degraded glucose (including two from glycolysis and two from the citrate cycle). Using oxygen to completely oxidize glucose provides an organism with far more energy than any oxygen-independent metabolic feature, and this is thought to be the reason why complex life appeared only after Earth's atmosphere accumulated large amounts of oxygen.

Gluconeogenesis
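The ATP total quoted for aerobic glucose oxidation (28 + 2 + 2 = 32) can be reproduced with a short tally. The per-carrier yields of 3 ATP per NADH and 2 ATP per quinol are assumptions inferred from the article's own figures (24 from 8 NADH, 4 from 2 quinols); measured yields are lower and non-integer, so this is bookkeeping for the numbers as stated, not a physiological model.

```python
# Assumed yields, inferred from the article's arithmetic.
ATP_PER_NADH = 3
ATP_PER_QUINOL = 2

# Substrate-level ATP per glucose, as stated in the text.
substrate_level_atp = {"glycolysis": 2, "citric_acid_cycle": 2}

# NADH counted in the article's tally (per glucose, i.e. per two pyruvate).
nadh = {"pyruvate_to_acetyl_coa": 2, "citric_acid_cycle": 6}
quinol = 2

oxidative_atp = sum(nadh.values()) * ATP_PER_NADH + quinol * ATP_PER_QUINOL
total_atp = oxidative_atp + sum(substrate_level_atp.values())

if __name__ == "__main__":
    print("ATP from the respiratory chain:", oxidative_atp)
    print("Total ATP per glucose:", total_atp)
```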

In vertebrates, vigorously contracting skeletal muscles (during weightlifting or sprinting, for example) do not receive enough oxygen to meet the energy demand, and so they shift to anaerobic metabolism, converting glucose to lactate. The liver regenerates the glucose, using a process called gluconeogenesis. This process is not quite the opposite of glycolysis, and actually requires three times the amount of energy gained from glycolysis (six molecules of ATP are used, compared to the two gained in glycolysis). Analogous to the above reactions, the glucose produced can then undergo glycolysis in tissues that need energy, be stored as glycogen (or starch in plants), or be converted to other monosaccharides or joined into di- or oligosaccharides. The combination of glycolysis during exercise, lactate's crossing via the bloodstream to the liver, and subsequent gluconeogenesis and release of glucose into the bloodstream is called the Cori cycle.[17]

Proteins

A schematic of hemoglobin. The red and blue ribbons represent the protein globin; the green structures are the heme groups.

Like carbohydrates, some proteins perform largely structural roles. For instance, movements of the proteins actin and myosin ultimately are responsible for the contraction of skeletal muscle. One property many proteins have is that they specifically bind to a certain molecule or class of molecules; they may be extremely selective in what they bind. Antibodies are an example of proteins that attach to one specific type of molecule. In fact, the enzyme-linked immunosorbent assay (ELISA), which uses antibodies, is one of the most sensitive tests modern medicine uses to detect various biomolecules. Probably the most important proteins, however, are the enzymes. These molecules recognize specific reactant molecules called substrates; they then catalyze the reaction between them. By lowering the activation energy, the enzyme speeds up that reaction by a factor of 10^11 or more: a reaction that would normally take over 3,000 years to complete spontaneously might take less than a second with an enzyme. The enzyme itself is not used up in the process, and is free to catalyze the same reaction with a new set of substrates. Using various modifiers, the activity of the enzyme can be regulated, enabling control of the biochemistry of the cell as a whole.
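The "3,000 years versus less than a second" comparison follows directly from a speed-up of 10^11, as this small calculation shows. The year length of 365.25 days is an assumption made for the conversion.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumed average year length

uncatalyzed_seconds = 3000 * SECONDS_PER_YEAR   # about 9.5e10 s
rate_enhancement = 1e11                         # factor quoted in the text
catalyzed_seconds = uncatalyzed_seconds / rate_enhancement

if __name__ == "__main__":
    print(f"uncatalyzed: {uncatalyzed_seconds:.3e} s")
    print(f"catalyzed:   {catalyzed_seconds:.3f} s")
```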

In essence, proteins are chains of amino acids. An amino acid consists of a carbon atom bound to four groups. One is an amino group, —NH2, and one is a carboxylic acid group, —COOH (although these exist as —NH3+ and —COO− under physiologic conditions). The third is a simple hydrogen atom. The fourth is commonly denoted "—R" and is different for each amino acid. There are 20 standard amino acids. Some of these have functions by themselves or in a modified form; for instance, glutamate functions as an important neurotransmitter. Also if a glycine amino acid undergoes methylation to a pseudo alanine amino acid, it is an indication of cancer metastasis.[medical citation needed]

Amino acids can be joined via a peptide bond. In this dehydration synthesis, a water molecule is removed and the peptide bond connects the nitrogen of one amino acid's amino group to the carbon of the other's carboxylic acid group. The resulting molecule is called a dipeptide, and short stretches of amino acids (usually, fewer than thirty) are called peptides or polypeptides. Longer stretches merit the title proteins. As an example, the important blood serum protein albumin contains 585 amino acid residues.[18]

The structure of proteins is traditionally described in a hierarchy of four levels. The primary structure of a protein simply consists of its linear sequence of amino acids; for instance, "alanine-glycine-tryptophan-serine-glutamate-asparagine-glycine-lysine-…". Secondary structure is concerned with local morphology (morphology being the study of structure). Some combinations of amino acids will tend to curl up in a coil called an α-helix or into a sheet called a β-sheet; some α-helixes can be seen in the hemoglobin schematic above. Tertiary structure is the entire three-dimensional shape of the protein. This shape is determined by the sequence of amino acids. In fact, a single change can change the entire structure. The beta chain of hemoglobin contains 146 amino acid residues; substitution of the glutamate residue at position 6 with a valine residue changes the behavior of hemoglobin so much that it results in sickle-cell disease. Finally, quaternary structure is concerned with the structure of a protein with multiple peptide subunits, like hemoglobin with its four subunits. Not all proteins have more than one subunit.[19]
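A primary structure is just an ordered string of residues, so the sickle-cell substitution can be sketched as a single-character edit. The eight-residue fragment below is the commonly quoted start of the affected hemoglobin chain in one-letter code; it is included as an assumption for illustration and should be checked against a sequence database before any real use. The function name is hypothetical.

```python
# Assumed N-terminal fragment (one-letter codes): Val-His-Leu-Thr-Pro-Glu-Glu-Lys
HB_N_TERMINUS = "VHLTPEEK"


def substitute(sequence, position, new_residue):
    """Return the sequence with the residue at a 1-based position replaced."""
    if not 1 <= position <= len(sequence):
        raise ValueError("position outside the sequence")
    index = position - 1
    return sequence[:index] + new_residue + sequence[index + 1:]


if __name__ == "__main__":
    print(HB_N_TERMINUS[5])                    # residue at position 6
    print(substitute(HB_N_TERMINUS, 6, "V"))   # glutamate (E) replaced by valine (V)
```

Only one character of the primary structure changes; the effects on higher levels of structure come from how the new side chain interacts with its surroundings.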

Ingested proteins are usually broken up into single amino acids or dipeptides in the small intestine, and then absorbed. They can then be joined to make new proteins. Intermediate products of glycolysis, the citric acid cycle, and the pentose phosphate pathway can be used to make all twenty amino acids, and most bacteria and plants possess all the necessary enzymes to synthesize them. Humans and other mammals, however, can synthesize only about half of them. They cannot synthesize isoleucine, leucine, lysine, methionine, phenylalanine, threonine, tryptophan, and valine. These are the essential amino acids, since it is essential to ingest them. Mammals do possess the enzymes to synthesize alanine, asparagine, aspartate, cysteine, glutamate, glutamine, glycine, proline, serine, and tyrosine, the nonessential amino acids. While they can synthesize arginine and histidine, they cannot produce them in sufficient amounts for young, growing animals, and so these two are often considered essential amino acids as well.
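The classification above can be written down as three sets, which makes it easy to confirm that the groups do not overlap and together account for all twenty standard amino acids. The set names are hypothetical labels for the groups described in the text.

```python
ESSENTIAL = {
    "isoleucine", "leucine", "lysine", "methionine",
    "phenylalanine", "threonine", "tryptophan", "valine",
}
NONESSENTIAL = {
    "alanine", "asparagine", "aspartate", "cysteine", "glutamate",
    "glutamine", "glycine", "proline", "serine", "tyrosine",
}
# Synthesized by mammals, but not in sufficient amounts for young, growing animals.
CONDITIONALLY_ESSENTIAL = {"arginine", "histidine"}

ALL_STANDARD = ESSENTIAL | NONESSENTIAL | CONDITIONALLY_ESSENTIAL

if __name__ == "__main__":
    print(len(ESSENTIAL), len(NONESSENTIAL), len(CONDITIONALLY_ESSENTIAL))
    print(len(ALL_STANDARD))
```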

If the amino group is removed from an amino acid, it leaves behind a carbon skeleton called an α-keto acid. Enzymes called transaminases can easily transfer the amino group from one amino acid (making it an α-keto acid) to another α-keto acid (making it an amino acid). This is important in the biosynthesis of amino acids, as for many of the pathways, intermediates from other biochemical pathways are converted to the α-keto acid skeleton, and then an amino group is added, often via transamination. The amino acids may then be linked together to make a protein.[20]

A similar process is used to break down proteins. A protein is first hydrolyzed into its component amino acids. Free ammonia (NH3), existing as the ammonium ion (NH4+) in blood, is toxic to life forms. A suitable method for excreting it must therefore exist. Different tactics have evolved in different animals, depending on the animals' needs. Unicellular organisms simply release the ammonia into the environment. Likewise, bony fish can release the ammonia into the water where it is quickly diluted. In general, mammals convert the ammonia into urea, via the urea cycle.[21]

Lipids

The term lipid comprises a diverse range of molecules and to some extent is a catchall for relatively water-insoluble or nonpolar compounds of biological origin, including waxes, fatty acids, fatty-acid derived phospholipids, sphingolipids, glycolipids, and terpenoids (e.g., retinoids and steroids). Some lipids are linear aliphatic molecules, while others have ring structures. Some are aromatic, while others are not. Some are flexible, while others are rigid.[22]

Most lipids have some polar character in addition to being largely nonpolar. In general, the bulk of their structure is nonpolar or hydrophobic ("water-fearing"), meaning that it does not interact well with polar solvents like water. Another part of their structure is polar or hydrophilic ("water-loving") and will tend to associate with polar solvents like water. This makes them amphiphilic molecules (having both hydrophobic and hydrophilic portions). In the case of cholesterol, the polar group is a mere -OH (hydroxyl or alcohol). In the case of phospholipids, the polar groups are considerably larger and more polar, as described below.

Lipids are an integral part of our daily diet. Most oils and milk products that we use for cooking and eating like butter, cheese, ghee etc., are composed of fats. Vegetable oils are rich in various polyunsaturated fatty acids (PUFA). Lipid-containing foods undergo digestion within the body and are broken into fatty acids and glycerol, which are the final degradation products of fats and lipids.

Nucleic acids

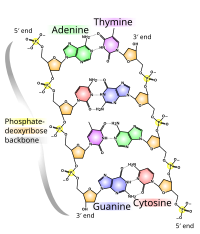

A nucleic acid is a complex, high-molecular-weight biochemical macromolecule composed of nucleotide chains that convey genetic information.[23] The most common nucleic acids are deoxyribonucleic acid (DNA) and ribonucleic acid (RNA). Nucleic acids are found in all living cells and viruses. Aside from the genetic material of the cell, nucleic acids often play a role as second messengers, as well as forming the base molecule for adenosine triphosphate (ATP), the primary energy-carrier molecule found in all living organisms.

Nucleic acid, so called because of its prevalence in cellular nuclei, is the generic name of the family of biopolymers. The monomers are called nucleotides, and each consists of three components: a nitrogenous heterocyclic base (either a purine or a pyrimidine), a pentose sugar, and a phosphate group. Different nucleic acid types differ in the specific sugar found in their chain (e.g., DNA or deoxyribonucleic acid contains 2-deoxyriboses). Also, the nitrogenous bases possible in the two nucleic acids are different: adenine, cytosine, and guanine occur in both RNA and DNA, while thymine occurs only in DNA and uracil occurs in RNA.[24]
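The difference in base alphabets can be illustrated by rewriting a DNA sequence in RNA letters. This is a minimal sketch with hypothetical names; it only swaps thymine for uracil and validates the input, and does not model how cells actually copy DNA into RNA.

```python
DNA_BASES = set("ACGT")  # adenine, cytosine, guanine, thymine
RNA_BASES = set("ACGU")  # adenine, cytosine, guanine, uracil


def dna_to_rna(sequence):
    """Rewrite a DNA sequence in the RNA alphabet (T becomes U)."""
    sequence = sequence.upper()
    unexpected = set(sequence) - DNA_BASES
    if unexpected:
        raise ValueError(f"not DNA bases: {sorted(unexpected)}")
    return sequence.replace("T", "U")


if __name__ == "__main__":
    print(dna_to_rna("ATGCTT"))
    print(sorted(DNA_BASES & RNA_BASES))  # bases shared by both nucleic acids
```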

Relationship to other "molecular-scale" biological sciences

Researchers in biochemistry use specific techniques native to biochemistry, but increasingly combine these with techniques and ideas developed in the fields of genetics, molecular biology and biophysics. There has never been a hard line among these disciplines in terms of content and technique. Today, the terms molecular biology and biochemistry are nearly interchangeable. The following figure is a schematic that depicts one possible view of the relationship between the fields:

- Biochemistry is the study of the chemical substances and vital processes occurring in living organisms. Biochemists focus heavily on the role, function, and structure of biomolecules. The study of the chemistry behind biological processes and the synthesis of biologically active molecules are examples of biochemistry.

- Genetics is the study of the effect of genetic differences on organisms. Often this can be inferred by the absence of a normal component (e.g., one gene). It frequently involves the study of "mutants": organisms with a changed gene that makes them differ from the so-called "wild type" or normal phenotype. Genetic interactions (epistasis) can often confound simple interpretations of such "knock-out" or "knock-in" studies.

- Molecular biology is the study of molecular underpinnings of the process of replication, transcription and translation of the genetic material. The central dogma of molecular biology where genetic material is transcribed into RNA and then translated into protein, despite being an oversimplified picture of molecular biology, still provides a good starting point for understanding the field. This picture, however, is undergoing revision in light of emerging novel roles for RNA.[25]

- Chemical biology seeks to develop new tools based on small molecules that allow minimal perturbation of biological systems while providing detailed information about their function. Further, chemical biology employs biological systems to create non-natural hybrids between biomolecules and synthetic devices (for example emptied viral capsids that can deliver gene therapy or drug molecules).

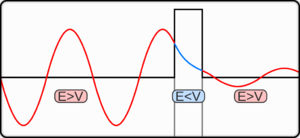
