From Wikipedia, the free encyclopedia

Greenhouse effect schematic showing energy flows between space, the atmosphere, and Earth's surface. Energy influx and emittance are expressed in watts per square meter (W/m2).

A greenhouse gas (sometimes abbreviated GHG) is a gas in an atmosphere that absorbs and emits radiation within the thermal infrared range. This process is the fundamental cause of the greenhouse effect.[1] The primary greenhouse gases in the Earth's atmosphere are water vapor, carbon dioxide, methane, nitrous oxide, and ozone. Greenhouse gases greatly affect the temperature of the Earth; without them, Earth's surface would average about 33 °C (59 °F) colder than the present average of 14 °C (57 °F).[2][3][4]
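The 33 °C figure can be reproduced approximately with a zero-dimensional energy balance: a planet with no greenhouse gases would radiate as a blackbody at the temperature where emitted infrared equals absorbed sunlight. The sketch below is illustrative only. It assumes a solar constant of 1361 W/m² and a planetary albedo of 0.3 (neither value is given in this article) and holds the albedo fixed. Under those assumptions it gives an effective temperature of roughly 255 K, about 33 K below the present 287 K (14 °C).

```python
import math

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def effective_temperature(solar_constant: float, albedo: float) -> float:
    """Blackbody temperature (K) at which outgoing IR balances absorbed sunlight.

    Absorbed sunlight is averaged over the whole sphere: S * (1 - albedo) / 4.
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


# Assumed inputs (not from the article): S = 1361 W/m^2, albedo = 0.3
t_eff = effective_temperature(1361.0, 0.3)
t_surface = 14.0 + 273.15  # present average surface temperature, 14 °C, in K
warming = t_surface - t_eff

print(f"effective temperature: {t_eff:.1f} K")
print(f"greenhouse warming:    {warming:.1f} K")
```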

Since the beginning of the Industrial Revolution (taken as the year 1750), the burning of fossil fuels and extensive clearing of native forests have contributed to a 40% increase in the atmospheric concentration of carbon dioxide, from 280 ppm in 1750 to 392.6 ppm in 2012.[5][6] It has since reached 400 ppm in the northern hemisphere. This increase has occurred despite the uptake of a large portion of the emissions by various natural "sinks" involved in the carbon cycle.[7][8] Anthropogenic carbon dioxide (CO2) emissions (i.e., emissions produced by human activities) come from combustion of carbon-based fuels, principally wood, coal, oil, and natural gas.[9] Under ongoing greenhouse gas emissions, available Earth System Models project that the Earth's surface temperature could exceed historical analogs as early as 2047, affecting most ecosystems on Earth and the livelihoods of over 3 billion people worldwide.[10] Greenhouse gases also trigger[clarification needed] ocean biogeochemical changes with broad ramifications in marine systems.[11]

In the Solar System, the atmospheres of Venus, Mars, and Titan also contain gases that cause a greenhouse effect, though Titan's atmosphere has an anti-greenhouse effect that reduces the warming.

Gases in Earth's atmosphere

Greenhouse gases

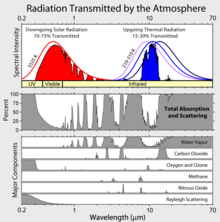

Atmospheric absorption and scattering at different wavelengths of electromagnetic waves. The largest absorption band of carbon dioxide is in the infrared.

Greenhouse gases are those that can absorb and emit infrared radiation,[1] but not radiation in or near the visible spectrum. In order, the most abundant greenhouse gases in Earth's atmosphere are:

- Water vapor (H2O)
- Carbon dioxide (CO2)
- Methane (CH4)
- Nitrous oxide (N2O)
- Ozone (O3)
- Chlorofluorocarbons (CFCs)

Non-greenhouse gases

Although contributing to many other physical and chemical reactions, the major atmospheric constituents, nitrogen (N2), oxygen (O2), and argon (Ar), are not greenhouse gases. This is because molecules containing two atoms of the same element, such as N2 and O2, have no net change in their dipole moment when they vibrate, and monatomic gases such as argon have no vibrational modes at all; hence they are almost totally unaffected by infrared radiation. Although molecules containing two atoms of different elements, such as carbon monoxide (CO) or hydrogen chloride (HCl), do absorb IR, these molecules are short-lived in the atmosphere owing to their reactivity and solubility. Because they do not contribute significantly to the greenhouse effect, they are usually omitted when discussing greenhouse gases.

Indirect radiative effects

The false colors in this image represent levels of carbon monoxide in the lower atmosphere, ranging from about 390 parts per billion (dark brown pixels), to 220 parts per billion (red pixels), to 50 parts per billion (blue pixels).[14]

Some gases have indirect radiative effects (whether or not they are greenhouse gases themselves). This happens in two main ways. One way is that when they break down in the atmosphere they produce another greenhouse gas. For example, methane and carbon monoxide (CO) are oxidized to give carbon dioxide (methane oxidation also produces water vapor, which is considered below). Oxidation of CO to CO2 directly produces an unambiguous increase in radiative forcing, although the reason is subtle. The peak of the thermal IR emission from the Earth's surface is very close to a strong vibrational absorption band of CO2 (667 cm−1). By contrast, the single CO vibrational band only absorbs IR at much higher frequencies (2145 cm−1),[clarification needed] where the ~300 K thermal emission of the surface is at least a factor of ten lower. Oxidation of methane to CO2, on the other hand, which requires reactions with the OH radical, produces an instantaneous reduction in radiative forcing, since CO2 is a weaker greenhouse gas than methane; however, CO2 has a longer lifetime. As described below, this is not the whole story, since the oxidations of CO and CH4 are intertwined: both consume OH radicals. In any case, the calculation of the total radiative effect needs to include both the direct and indirect forcing.
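The factor-of-ten claim can be checked against Planck's law for a blackbody at the ~300 K surface temperature quoted above. The sketch treats the surface as an ideal blackbody (an assumption) and compares the spectral radiance per unit wavenumber at the two band centres. Only the ratio matters, and it comes out at several tens, comfortably above ten.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_wavenumber(nu_cm: float, temp_k: float) -> float:
    """Blackbody spectral radiance per unit wavenumber at nu_cm (given in cm^-1).

    Units are W m^-2 sr^-1 per m^-1; only ratios are used below.
    """
    nu = nu_cm * 100.0  # convert cm^-1 to m^-1
    return 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (K_B * temp_k))


T_SURFACE = 300.0  # K, the "~300 K" surface temperature from the text
co2_band = planck_wavenumber(667.0, T_SURFACE)   # CO2 bending band
co_band = planck_wavenumber(2145.0, T_SURFACE)   # CO fundamental band
ratio = co2_band / co_band

print(f"emission at 667 cm^-1 is about {ratio:.0f} times that at 2145 cm^-1")
```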

A second type of indirect effect happens when chemical reactions in the atmosphere involving these gases change the concentrations of greenhouse gases. For example, the destruction of non-methane volatile organic compounds (NMVOCs) in the atmosphere can produce ozone. The size of the indirect effect can depend strongly on where and when the gas is emitted.[15]

Methane has a number of indirect effects in addition to forming CO2. First, the main chemical that destroys methane in the atmosphere is the hydroxyl radical (OH). Methane reacts with OH, so more methane means that the concentration of OH goes down. Effectively, methane increases its own atmospheric lifetime and therefore its overall radiative effect. Second, the oxidation of methane can produce ozone. Third, as well as making CO2, the oxidation of methane produces water; this is a major source of water vapor in the stratosphere, which is otherwise very dry. CO and NMVOCs also produce CO2 when they are oxidized. They remove OH from the atmosphere, and this leads to higher concentrations of methane. The surprising effect of this is that the global warming potential of CO is three times that of CO2.[16] The same process that converts NMVOCs to carbon dioxide can also lead to the formation of tropospheric ozone. Halocarbons have an indirect effect because they destroy stratospheric ozone. Finally, hydrogen can lead to ozone production and CH4 increases, as well as producing water vapor in the stratosphere.[15]
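The way methane lengthens its own lifetime can be illustrated with a deliberately crude one-box model. Both the power-law link between concentration and lifetime and the sensitivity value 0.3 below are hypothetical placeholders, not numbers from this article. The point is only qualitative: any positive sensitivity makes the steady-state concentration respond more than proportionally to a change in emissions.

```python
def steady_state_ratio(emission_ratio: float, sensitivity: float,
                       tol: float = 1e-12, max_iter: int = 1000) -> float:
    """Steady-state concentration C/C0 when emissions are scaled by emission_ratio.

    Toy one-box model: at steady state C = E * tau, and the lifetime is assumed
    (hypothetically) to scale as tau = tau0 * (C/C0)**sensitivity, standing in
    for OH depletion by methane. Solved by fixed-point iteration, which
    converges for 0 <= sensitivity < 1.
    """
    c = emission_ratio  # initial guess: the no-feedback answer
    for _ in range(max_iter):
        c_new = emission_ratio * c ** sensitivity
        if abs(c_new - c) < tol:
            return c_new
        c = c_new
    raise RuntimeError("fixed-point iteration did not converge")


S_HYPOTHETICAL = 0.3  # hypothetical OH-feedback sensitivity; 0 means a fixed lifetime
for s in (0.0, S_HYPOTHETICAL):
    print(f"sensitivity {s}: 10% more emissions -> C/C0 = {steady_state_ratio(1.10, s):.4f}")
```

With a fixed lifetime the concentration rises by the same 10% as emissions; with the feedback switched on, the analytic solution of the toy model is (1.1)^(1/(1 − s)), a larger increase.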

Contribution of clouds to Earth's greenhouse effect

The major non-gas contributor to the Earth's greenhouse effect, clouds, also absorb and emit infrared radiation and thus have an effect on radiative properties of the greenhouse gases. Clouds are water droplets or ice crystals suspended in the atmosphere.[17][18]

Impacts on the overall greenhouse effect

Schmidt et al. (2010)[19] analysed how individual components of the atmosphere contribute to the total greenhouse effect. They estimated that water vapor accounts for about 50% of the Earth's greenhouse effect, with clouds contributing 25%, carbon dioxide 20%, and the minor greenhouse gases and aerosols accounting for the remaining 5%. In the study, the reference model atmosphere is for 1980 conditions. Image credit: NASA.[20]

The contribution of each gas to the greenhouse effect is affected by the characteristics of that gas, its abundance, and any indirect effects it may cause. For example, the direct radiative effect of a mass of methane is about 72 times stronger than the same mass of carbon dioxide over a 20-year time frame,[21] but methane is present in much smaller concentrations, partly because of its shorter atmospheric lifetime, so its total direct radiative effect is smaller. On the other hand, in addition to its direct radiative impact, methane has a large indirect radiative effect because it contributes to ozone formation. Shindell et al. (2005)[22] argue that the contribution to climate change from methane is at least double previous estimates as a result of this effect.[23]

When ranked by their direct contribution to the greenhouse effect, the most important are:[17]

| Compound | Formula | Contribution (%) |
|---|---|---|
| Water vapor and clouds | H2O | 36–72% |
| Carbon dioxide | CO2 | 9–26% |
| Methane | CH4 | 4–9% |
| Ozone | O3 | 3–7% |
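Each contribution is given as a range because absorbers overlap in the spectrum. A toy model makes the arithmetic concrete. The ten spectral bands and the band assignments below are invented for illustration and do not represent real spectra. Counting each gas "alone" gives shares that sum to more than 100%. Counting only what is lost when a gas is removed from the full mix gives shares that sum to less than 100%.

```python
# Toy spectrum: ten hypothetical bands; each gas absorbs completely in its bands.
# Band assignments are invented for illustration only.
BANDS = {
    "water vapor": {0, 1, 2, 3, 4, 5},
    "carbon dioxide": {4, 5, 6},
    "ozone": {7},
    "methane": {5, 8},
}


def absorbed(gases) -> int:
    """Number of bands absorbed by at least one of the given gases."""
    covered = set()
    for gas in gases:
        covered |= BANDS[gas]
    return len(covered)


total = absorbed(BANDS)  # all gases together
for gas in BANDS:
    alone = absorbed([gas]) / total
    others = [g for g in BANDS if g != gas]
    removed = (total - absorbed(others)) / total
    print(f"{gas:15s} alone: {alone:6.1%}   lost if removed: {removed:6.1%}")
```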

In addition to the main greenhouse gases listed above, other greenhouse gases include sulfur hexafluoride, hydrofluorocarbons and perfluorocarbons (see IPCC list of greenhouse gases). Some greenhouse gases are not often listed. For example, nitrogen trifluoride has a high global warming potential (GWP) but is only present in very small quantities.[24]

Proportion of direct effects at a given moment

It is not possible to state that a certain gas causes an exact percentage of the greenhouse effect. This is because some of the gases absorb and emit radiation at the same frequencies as others, so that the total greenhouse effect is not simply the sum of the influence of each gas. The higher ends of the ranges quoted are for each gas alone; the lower ends account for overlaps with the other gases.[17][18] In addition, some gases such as methane are known to have large indirect effects that are still being quantified.[25]

Atmospheric lifetime

Aside from water vapor, which has a residence time of about nine days,[26] major greenhouse gases are well mixed and take many years to leave the atmosphere.[27] Although it is not easy to know with precision how long it takes greenhouse gases to leave the atmosphere, there are estimates for the principal greenhouse gases. Jacob (1999)[28] defines the lifetime $\tau$ of an atmospheric species X in a one-box model as the average time that a molecule of X remains in the box. Mathematically, $\tau$ can be defined as the ratio of the mass $m$ (in kg) of X in the box to its removal rate, which is the sum of the flow of X out of the box ($F_{\text{out}}$), chemical loss of X ($L$), and deposition of X ($D$) (all in kg/s):[28]

$$\tau = \frac{m}{F_{\text{out}} + L + D}$$

If one stopped pouring any of this gas into the box, and removal is first order, then after a time $\tau$ its concentration would have fallen to about $1/e$ (roughly 37%) of its initial value.

The atmospheric lifetime of a species therefore measures the time required to restore equilibrium following a sudden increase or decrease in its concentration in the atmosphere. Individual atoms or molecules may be lost or deposited to sinks such as the soil, the oceans and other waters, or vegetation and other biological systems, reducing the excess to background concentrations. The average time taken to achieve this is the mean lifetime.
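A minimal sketch of the one-box definition follows. It assumes that every removal process is first order (proportional to the mass in the box), so the mass decays exponentially once input stops. The box mass and fluxes are hypothetical numbers chosen for round figures.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lifetime(mass_kg: float, f_out: float, chem_loss: float, deposition: float) -> float:
    """One-box lifetime tau = m / (F_out + L + D); fluxes in kg/s, result in s."""
    return mass_kg / (f_out + chem_loss + deposition)


def remaining_fraction(t: float, tau: float) -> float:
    """Fraction of the initial mass left after time t with no further input,
    assuming all removal is first order (exponential decay)."""
    return math.exp(-t / tau)


# Hypothetical box: 1e12 kg of X removed at a combined 100 kg/s
tau_s = lifetime(1e12, 20.0, 50.0, 30.0)
tau_years = tau_s / SECONDS_PER_YEAR

print(f"tau = {tau_years:.0f} years")
print(f"fraction left after one lifetime: {remaining_fraction(tau_s, tau_s):.2f}")
print(f"half-life = {tau_years * math.log(2):.0f} years")
```

Under this assumption the amount is halved after τ ln 2 ≈ 0.69τ, and after one full lifetime about 37% remains.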

Carbon dioxide has a variable atmospheric lifetime and cannot be specified precisely.[29] The atmospheric lifetime of CO2 is estimated to be of the order of 30–95 years.[30] This figure accounts for CO2 molecules being removed from the atmosphere by mixing into the ocean, photosynthesis, and other processes. However, it excludes the balancing fluxes of CO2 into the atmosphere from the geological reservoirs, which have slower characteristic rates.[31] While more than half of the CO2 emitted is removed from the atmosphere within a century, some fraction (about 20%) of emitted CO2 remains in the atmosphere for many thousands of years.[32][33][34] Similar issues apply to other greenhouse gases, many of which have longer mean lifetimes than CO2. For example, N2O has a mean atmospheric lifetime of 114 years.[21]
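Because CO2 has no single lifetime, the fraction of a pulse still airborne is often approximated by a constant plus a sum of exponentials. The sketch below uses the Bern carbon-cycle fit as tabulated in the IPCC Fourth Assessment Report (Working Group I). Treat the coefficients as illustrative and check them against the report before relying on them. In this fit the constant term a0 ≈ 0.22 plays the role of the long-lived fraction mentioned above, and roughly a third of a pulse is still airborne after 100 years.

```python
import math

# Bern carbon-cycle impulse-response fit (IPCC AR4 WG1); illustrative coefficients.
A0 = 0.217  # fraction that stays for millennia in this fit
TERMS = [(0.259, 172.9), (0.338, 18.51), (0.186, 1.186)]  # (a_i, tau_i in years)


def airborne_fraction(t_years: float) -> float:
    """Fraction of an emitted CO2 pulse remaining in the atmosphere after t years."""
    return A0 + sum(a * math.exp(-t_years / tau) for a, tau in TERMS)


for t in (0, 20, 100, 1000):
    print(f"{t:5d} yr: {airborne_fraction(t):.2f}")
```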

Radiative forcing

The Earth absorbs some of the radiant energy received from the sun, reflects some of it as light and reflects or radiates the rest back to space as heat.[35] The Earth's surface temperature depends on this balance between incoming and outgoing energy.[35] If this energy balance is shifted, the Earth's surface could become warmer or cooler, leading to a variety of changes in global climate.[35]

A number of natural and man-made mechanisms can affect the global energy balance and force changes in the Earth's climate.[35] Greenhouse gases are one such mechanism.[35] Greenhouse gases in the atmosphere absorb and re-emit some of the outgoing energy radiated from the Earth's surface, causing that heat to be retained in the lower atmosphere.[35] As explained above, some greenhouse gases remain in the atmosphere for decades or even centuries, and therefore can affect the Earth's energy balance over a long time period.[35] Factors that influence Earth's energy balance can be quantified in terms of "radiative climate forcing."[35] Positive radiative forcing indicates warming (for example, by increasing incoming energy or decreasing the amount of energy that escapes to space), while negative forcing is associated with cooling.[35]
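For CO2, radiative forcing is commonly estimated with the simplified logarithmic expression ΔF = 5.35 ln(C/C0) W/m², an empirical fit by Myhre et al. (1998) used in IPCC assessments. This expression does not appear elsewhere in this article. It is a fit rather than a full radiative-transfer calculation. Applied to the pre-1750 and recent CO2 values in the concentration table later in this article (280 ppm and 395.4 ppm), it gives about 1.85 W/m², close to the 1.88 W/m² listed there.

```python
import math


def co2_forcing(c_ppm: float, c0_ppm: float = 280.0) -> float:
    """Simplified CO2 radiative forcing in W/m^2: 5.35 * ln(C / C0).

    The 5.35 W/m^2 coefficient is the Myhre et al. (1998) empirical fit.
    """
    return 5.35 * math.log(c_ppm / c0_ppm)


print(f"280 -> 395.4 ppm:   {co2_forcing(395.4):.2f} W/m^2")
print(f"doubling (560 ppm): {co2_forcing(560.0):.2f} W/m^2")
```

Because the relationship is logarithmic, each additional ppm adds slightly less forcing than the one before.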

Global warming potential

The global warming potential (GWP) depends on both the efficiency of the molecule as a greenhouse gas and its atmospheric lifetime. GWP is measured relative to the same mass of CO2 and evaluated for a specific timescale. Thus, if a gas has a high (positive) radiative forcing but also a short lifetime, it will have a large GWP on a 20-year scale but a small one on a 100-year scale. Conversely, if a molecule has a longer atmospheric lifetime than CO2, its GWP will increase with the timescale considered. Carbon dioxide is defined to have a GWP of 1 over all time periods.

Methane has an atmospheric lifetime of 12 ± 3 years. The 2007 IPCC report lists the GWP as 72 over a time scale of 20 years, 25 over 100 years and 7.6 over 500 years.[21] A 2014 analysis, however, states that although methane’s initial impact is about 100 times greater than that of CO2, because of the shorter atmospheric lifetime, after six or seven decades the impact of the two gases is about equal, and from then on methane’s relative role continues to decline.[36] The decrease in GWP at longer times is because methane is degraded to water and CO2 through chemical reactions in the atmosphere.
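The horizon dependence follows from defining GWP as a ratio of time-integrated forcings. The sketch makes three simplifying assumptions. First, the gas decays with a single exponential lifetime. Second, the CO2 reference follows the Bern impulse-response fit from the IPCC Fourth Assessment Report, with illustrative coefficients. Third, the per-kilogram radiative efficiency relative to CO2 is a hypothetical 1.0, so only the trend across horizons is meaningful, not the absolute values. Indirect effects, such as methane's contribution to ozone, are ignored.

```python
import math

# CO2 reference: Bern impulse-response fit (IPCC AR4); illustrative coefficients.
A0 = 0.217
TERMS = [(0.259, 172.9), (0.338, 18.51), (0.186, 1.186)]  # (a_i, tau_i in years)


def integrated_co2(horizon: float) -> float:
    """Time integral of the CO2 airborne fraction from 0 to horizon (years)."""
    return A0 * horizon + sum(a * tau * (1 - math.exp(-horizon / tau)) for a, tau in TERMS)


def integrated_gas(horizon: float, lifetime: float) -> float:
    """Time integral of exp(-t / lifetime) from 0 to horizon (years)."""
    return lifetime * (1 - math.exp(-horizon / lifetime))


def relative_gwp(horizon: float, lifetime: float, efficiency_ratio: float = 1.0) -> float:
    """GWP under the assumptions above; efficiency_ratio is a hypothetical input."""
    return efficiency_ratio * integrated_gas(horizon, lifetime) / integrated_co2(horizon)


for name, life in (("short-lived (12 yr)", 12.0), ("long-lived (50 000 yr)", 50_000.0)):
    values = [relative_gwp(h, life) for h in (20, 100, 500)]
    print(name, [round(v, 3) for v in values])
```

The short-lived case falls with horizon and the long-lived case rises, which matches the pattern that methane and tetrafluoromethane show in the table that follows.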

Examples of the atmospheric lifetime and GWP relative to CO2 for several greenhouse gases are given in the following table:[21]

| Gas name | Chemical formula | Lifetime (years) | GWP, 20-yr horizon | GWP, 100-yr horizon | GWP, 500-yr horizon |
|---|---|---|---|---|---|
| Carbon dioxide | CO2 | See above | 1 | 1 | 1 |
| Methane | CH4 | 12 | 72 | 25 | 7.6 |
| Nitrous oxide | N2O | 114 | 289 | 298 | 153 |
| CFC-12 | CCl2F2 | 100 | 11 000 | 10 900 | 5 200 |
| HCFC-22 | CHClF2 | 12 | 5 160 | 1 810 | 549 |
| Tetrafluoromethane | CF4 | 50 000 | 5 210 | 7 390 | 11 200 |
| Hexafluoroethane | C2F6 | 10 000 | 8 630 | 12 200 | 18 200 |
| Sulfur hexafluoride | SF6 | 3 200 | 16 300 | 22 800 | 32 600 |
| Nitrogen trifluoride | NF3 | 740 | 12 300 | 17 200 | 20 700 |

The use of CFC-12 (except some essential uses) has been phased out due to its ozone depleting properties.[37] The phasing-out of less active HCFC-compounds will be completed in 2030.[38]

Natural and anthropogenic sources

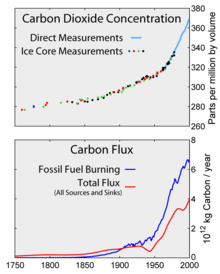

Top: Increasing atmospheric carbon dioxide levels as measured in the atmosphere and reflected in ice cores. Bottom: The amount of net carbon increase in the atmosphere, compared to carbon emissions from burning fossil fuel.

This diagram shows a simplified representation of the contemporary global carbon cycle. Changes are measured in gigatons of carbon per year (GtC/y). Canadell et al. (2007) estimated the growth rate of global average atmospheric CO2 for 2000–2006 as 1.93 parts-per-million per year (4.1 petagrams of carbon per year).[39]
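The two units in Canadell et al.'s estimate are linked by the mass of the atmosphere. A rough conversion factor can be derived as follows. The sketch assumes a total atmospheric mass of about 5.15 × 10^18 kg and a mean molar mass of dry air of 28.97 g/mol, neither of which is given in this article. It yields about 2.1 PgC per ppm, which reproduces the pairing of 1.93 ppm per year with roughly 4.1 PgC per year.

```python
ATMOSPHERE_MASS_KG = 5.15e18   # assumed total mass of the atmosphere
MOLAR_MASS_AIR = 28.97e-3      # kg/mol, assumed mean for dry air
MOLAR_MASS_CARBON = 12.011e-3  # kg/mol


def pgc_per_ppm() -> float:
    """Petagrams of carbon corresponding to 1 ppm (mole fraction) of CO2."""
    moles_air = ATMOSPHERE_MASS_KG / MOLAR_MASS_AIR
    carbon_kg = moles_air * 1e-6 * MOLAR_MASS_CARBON  # one CO2 molecule per million
    return carbon_kg / 1e12  # 1 Pg = 1e12 kg


factor = pgc_per_ppm()
print(f"1 ppm CO2 ~ {factor:.2f} PgC")
print(f"1.93 ppm/yr ~ {1.93 * factor:.1f} PgC/yr")
```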

Aside from purely human-produced synthetic halocarbons, most greenhouse gases have both natural and human-caused sources. During the pre-industrial Holocene, concentrations of existing gases were roughly constant. In the industrial era, human activities have added greenhouse gases to the atmosphere, mainly through the burning of fossil fuels and clearing of forests.[40][41]

The 2007 Fourth Assessment Report compiled by the IPCC (AR4) noted that "changes in atmospheric concentrations of greenhouse gases and aerosols, land cover and solar radiation alter the energy balance of the climate system", and concluded that "increases in anthropogenic greenhouse gas concentrations is very likely to have caused most of the increases in global average temperatures since the mid-20th century".[42] In AR4, "most of" is defined as more than 50%.

Abbreviations used in the two tables below: ppm = parts-per-million; ppb = parts-per-billion; ppt = parts-per-trillion; W/m2 = watts per square metre


| Gas | Pre-1750 tropospheric concentration[43] | Recent tropospheric concentration[44] | Absolute increase since 1750 | Percentage increase since 1750 | Increased radiative forcing (W/m2)[45] |
|---|---|---|---|---|---|
| Carbon dioxide (CO2) | 280 ppm[46] | 395.4 ppm[47] | 115.4 ppm | 41.2% | 1.88 |
| Methane (CH4) | 700 ppb[48] | 1893 ppb /[49] 1762 ppb[49] | 1193 ppb / 1062 ppb | 170.4% / 151.7% | 0.49 |
| Nitrous oxide (N2O) | 270 ppb[45][50] | 326 ppb /[49] 324 ppb[49] | 56 ppb / 54 ppb | 20.7% / 20.0% | 0.17 |
| Tropospheric ozone (O3) | 237 ppb[43] | 337 ppb[43] | 100 ppb | 42% | 0.4[51] |
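The two "increase" columns follow directly from the first two concentration columns. The absolute increase is the recent value minus the pre-1750 value, and the percentage divides that difference by the pre-1750 baseline, not by the recent value. The short check below recomputes them from the tabulated numbers. For CH4 and N2O it uses the first of the two recent values listed.

```python
def increase(pre: float, recent: float) -> tuple:
    """Absolute and percentage increase relative to the pre-1750 baseline."""
    absolute = recent - pre
    return absolute, 100.0 * absolute / pre


# (gas and unit, pre-1750 value, recent value) from the table above
ROWS = [
    ("CO2 (ppm)", 280.0, 395.4),
    ("CH4 (ppb)", 700.0, 1893.0),
    ("N2O (ppb)", 270.0, 326.0),
    ("O3 (ppb)", 237.0, 337.0),
]

for gas, pre, recent in ROWS:
    absolute, percent = increase(pre, recent)
    print(f"{gas:10s} +{absolute:7.1f}  ({percent:.1f}%)")
```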

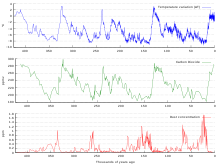

Both CO2 and CH4 vary between glacial and interglacial phases, and concentrations of these gases correlate strongly with temperature. Direct data does not exist for periods earlier than those represented in the ice core record, a record that indicates CO2 mole fractions stayed within a range of 180 ppm to 280 ppm throughout the last 800,000 years, until the increase of the last 250 years. However, various proxies and modeling suggest larger variations in past epochs; 500 million years ago CO2 levels were likely 10 times higher than now.[53] Indeed, higher CO2 concentrations are thought to have prevailed throughout most of the Phanerozoic eon, with concentrations four to six times current concentrations during the Mesozoic era, and ten to fifteen times current concentrations during the early Palaeozoic era until the middle of the Devonian period, about 400 Ma.[54][55][56] The spread of land plants is thought to have reduced CO2 concentrations during the late Devonian, and plant activities as both sources and sinks of CO2 have since been important in providing stabilising feedbacks.[57] Earlier still, a 200-million-year period of intermittent, widespread glaciation extending close to the equator (Snowball Earth) appears to have been ended suddenly, about 550 Ma, by a colossal volcanic outgassing that raised the CO2 concentration of the atmosphere abruptly to 12%, about 350 times modern levels, causing extreme greenhouse conditions and carbonate deposition as limestone at the rate of about 1 mm per day.[58] This episode marked the close of the Precambrian eon, and was succeeded by the generally warmer conditions of the Phanerozoic, during which multicellular animal and plant life evolved. No volcanic carbon dioxide emission of comparable scale has occurred since. In the modern era, emissions to the atmosphere from volcanoes are only about 1% of emissions from human sources.[58][59][60]

Ice cores

Measurements from Antarctic ice cores show that before industrial emissions started, atmospheric CO2 mole fractions were about 280 parts per million (ppm), and stayed between 260 and 280 ppm during the preceding ten thousand years.[61] Carbon dioxide mole fractions in the atmosphere have gone up by approximately 38 percent since pre-industrial times, rising from 280 ppm to 387 ppm in 2009. One study using evidence from stomata of fossilized leaves suggests greater variability, with carbon dioxide mole fractions above 300 ppm during the period seven to ten thousand years ago,[62] though others have argued that these findings more likely reflect calibration or contamination problems rather than actual CO2 variability.[63][64] Because of the way air is trapped in ice (pores in the ice close off slowly to form bubbles deep within the firn) and the time period represented in each ice sample analyzed, these figures represent averages of atmospheric concentrations of up to a few centuries rather than annual or decadal levels.

Changes since the Industrial Revolution

Since the beginning of the Industrial Revolution, the concentrations of most of the greenhouse gases have increased. For example, the mole fraction of carbon dioxide has increased by about 36%, from 280 ppm to 380 ppm, or 100 ppm above pre-industrial levels. The first 50 ppm increase took place in about 200 years, from the start of the Industrial Revolution to around 1973;[citation needed] however, the next 50 ppm increase took place in about 33 years, from 1973 to 2006.[65]

Recent data also shows that the concentration is increasing at a higher rate. In the 1960s, the average annual increase was only 37% of what it was in 2000 through 2007.[66]

Today, the stock of carbon in the atmosphere increases by several billion tonnes of carbon per year; Canadell et al. (2007) estimated about 4.1 petagrams of carbon per year for 2000–2006.[39] This increase results from human activities: burning fossil fuels, and deforestation and forest degradation in tropical and boreal regions.[67]

The other greenhouse gases produced from human activity show similar increases in both amount and rate of increase. Many observations are available online in a variety of Atmospheric Chemistry Observational Databases.

Anthropogenic greenhouse gases

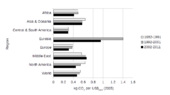

This bar graph shows global greenhouse gas emissions by sector from 1990 to 2005, measured in carbon dioxide equivalents.[69]

Since about 1750 human activity has increased the concentration of carbon dioxide and other greenhouse gases. Measured atmospheric concentrations of carbon dioxide are currently 100 ppm higher than pre-industrial levels.[70] Natural sources of carbon dioxide are more than 20 times greater than sources due to human activity,[71] but over periods longer than a few years natural sources are closely balanced by natural sinks, mainly photosynthesis of carbon compounds by plants and marine plankton. As a result of this balance, the atmospheric mole fraction of carbon dioxide remained between 260 and 280 parts per million for the 10,000 years between the end of the last glacial maximum and the start of the industrial era.[72]

It is likely that anthropogenic (i.e., human-induced) warming, such as that due to elevated greenhouse gas levels, has had a discernible influence on many physical and biological systems.[73] Future warming is projected to have a range of impacts, including sea level rise,[74] increased frequencies and severities of some extreme weather events,[74] loss of biodiversity,[75] and regional changes in agricultural productivity.[75]

The main sources of greenhouse gases due to human activity are:

- burning of fossil fuels and deforestation leading to higher carbon dioxide concentrations in the air. Land use change (mainly deforestation in the tropics) accounts for up to one third of total anthropogenic CO2 emissions.[72]

- livestock enteric fermentation and manure management,[76] paddy rice farming, land use and wetland changes, pipeline losses, and covered vented landfill emissions leading to higher methane atmospheric concentrations. Many of the newer style fully vented septic systems that enhance and target the fermentation process also are sources of atmospheric methane.

- use of chlorofluorocarbons (CFCs) in refrigeration systems, and use of CFCs and halons in fire suppression systems and manufacturing processes.

- agricultural activities, including the use of fertilizers, that lead to higher nitrous oxide (N2O) concentrations.

| Seven main fossil fuel combustion sources | Contribution (%) |
|---|---|
| Liquid fuels (e.g., gasoline, fuel oil) | 36% |
| Solid fuels (e.g., coal) | 35% |
| Gaseous fuels (e.g., natural gas) | 20% |
| Cement production | 3% |
| Flaring gas industrially and at wells | < 1% |
| Non-fuel hydrocarbons | < 1% |
| "International bunker fuels" of transport not included in national inventories[78] | 4% |

Carbon dioxide, methane, nitrous oxide (N2O) and three groups of fluorinated gases (sulfur hexafluoride (SF6), hydrofluorocarbons (HFCs), and perfluorocarbons (PFCs)) are the major anthropogenic greenhouse gases,[79]:147[80] and are regulated under the Kyoto Protocol international treaty, which came into force in 2005.[81] Emissions limitations specified in the Kyoto Protocol expire in 2012.[81] The Cancún agreement, agreed in 2010, includes voluntary pledges made by 76 countries to control emissions.[82] At the time of the agreement, these 76 countries were collectively responsible for 85% of annual global emissions.[82]

Although CFCs are greenhouse gases, they are regulated by the Montreal Protocol, which was motivated by CFCs' contribution to ozone depletion rather than by their contribution to global warming. Note that ozone depletion has only a minor role in greenhouse warming, though the two processes are often confused in the media.

Sectors

- Tourism

Role of water vapor

Water vapor accounts for the largest percentage of the greenhouse effect, between 36% and 66% for clear sky conditions and between 66% and 85% when including clouds.[18] Water vapor concentrations fluctuate regionally, but human activity does not significantly affect water vapor concentrations except at local scales, such as near irrigated fields. The atmospheric concentration of vapor is highly variable and depends largely on temperature, from less than 0.01% in extremely cold regions up to 3% by mass in saturated air at about 32 °C.[84]

The average residence time of a water molecule in the atmosphere is only about nine days, compared to years or centuries for other greenhouse gases such as CH4 and CO2.[85] Thus, water vapor responds to and amplifies effects of the other greenhouse gases. The Clausius–Clapeyron relation establishes that more water vapor will be present per unit volume at elevated temperatures. This and other basic principles indicate that warming associated with increased concentrations of the other greenhouse gases also will increase the concentration of water vapor (assuming that the relative humidity remains approximately constant; modeling and observational studies find that this is indeed so). Because water vapor is a greenhouse gas, this results in further warming and so is a "positive feedback" that amplifies the original warming. Eventually other earth processes offset these positive feedbacks, stabilizing the global temperature at a new equilibrium and preventing the loss of Earth's water through a Venus-like runaway greenhouse effect.[86]
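The Clausius–Clapeyron scaling can be made concrete with a Magnus-type approximation for saturation vapor pressure over liquid water, e_s(T) ≈ 6.1094 exp(17.625 T / (T + 243.04)) hPa with T in °C. These are the Alduchov–Eskridge coefficients, which are not part of this article. The approximation gives roughly 6–7% more water vapor at saturation per degree of warming near typical surface temperatures. Assuming sea-level pressure of 1013.25 hPa, it also gives a saturated specific humidity of about 3% by mass near 32 °C, consistent with the figure quoted above.

```python
import math


def saturation_vapor_pressure(temp_c: float) -> float:
    """Saturation vapor pressure over liquid water in hPa (Magnus-type fit)."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def specific_humidity(vapor_pressure_hpa: float, pressure_hpa: float = 1013.25) -> float:
    """Mass fraction of water vapor in moist air (kg water per kg of moist air)."""
    eps = 0.622  # ratio of molar masses, water / dry air
    return eps * vapor_pressure_hpa / (pressure_hpa - (1 - eps) * vapor_pressure_hpa)


growth = saturation_vapor_pressure(16.0) / saturation_vapor_pressure(15.0) - 1
q_32 = specific_humidity(saturation_vapor_pressure(32.0))

print(f"saturation vapor pressure increase per degree near 15 °C: {growth:.1%}")
print(f"saturated specific humidity at 32 °C: {q_32:.1%}")
```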

Direct greenhouse gas emissions

Between 1970 and 2004, GHG emissions (measured in CO2-equivalent)[87] increased at an average rate of 1.6% per year, with CO2 emissions from the use of fossil fuels growing at a rate of 1.9% per year.[88][89] Total anthropogenic emissions at the end of 2009 were estimated at 49.5 gigatonnes CO2-equivalent.[90]:15 These emissions include CO2 from fossil fuel use and from land use, as well as emissions of methane, nitrous oxide and other GHGs covered by the Kyoto Protocol.

At present, the primary source of CO2 emissions is the burning of coal, natural gas, and petroleum for electricity and heat.[91]
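Average annual rates compound over a period. The short sketch below converts the 1970–2004 rates quoted above into total growth factors over those 34 years. It assumes a constant rate every year, which is a simplification because actual year-to-year growth varied. On that assumption, 1.6% per year corresponds to roughly a 70% increase over the period.

```python
def growth_factor(annual_rate: float, years: int) -> float:
    """Total multiplication factor after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


YEARS = 2004 - 1970
for label, rate in (("all GHGs (CO2-eq)", 0.016), ("fossil-fuel CO2", 0.019)):
    factor = growth_factor(rate, YEARS)
    print(f"{label}: x{factor:.2f} ({factor - 1:.0%} increase)")
```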

Regional and national attribution of emissions

This figure shows the relative fraction of anthropogenic greenhouse gases coming from each of eight categories of sources, as estimated by the Emission Database for Global Atmospheric Research version 3.2, fast track 2000 project [1]. These values are intended to provide a snapshot of global annual greenhouse gas emissions in the year 2000. The top panel shows the sum over all anthropogenic greenhouse gases, weighted by their global warming potential over the next 100 years. This consists of 72% carbon dioxide, 18% methane, 8% nitrous oxide and 1% other gases. Lower panels show the comparable information for each of these three primary greenhouse gases, with the same coloring of sectors as used in the top chart. Segments with less than 1% fraction are not labeled.

There are several different ways of measuring GHG emissions, for example, see World Bank (2010)[92]:362 for tables of national emissions data. Some variables that have been reported[93] include:

- Definition of measurement boundaries: Emissions can be attributed geographically, to the area where they were emitted (the territory principle), or, by the activity principle, to the territory that produced the emissions. These two principles result in different totals when measuring, for example, electricity importation from one country to another, or emissions at an international airport.

- Time horizon of different GHGs: Contribution of a given GHG is reported as a CO2 equivalent. The calculation to determine this takes into account how long that gas remains in the atmosphere. This is not always known accurately and calculations must be regularly updated to reflect new information.

- What sectors are included in the calculation (e.g., energy industries, industrial processes, agriculture etc.): There is often a conflict between transparency and availability of data.

- The measurement protocol itself: This may be via direct measurement or estimation. The four main methods are the emission factor-based method, mass balance method, predictive emissions monitoring systems, and continuous emissions monitoring systems. These methods differ in accuracy, cost, and usability.
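Reporting a gas "as a CO2 equivalent" means multiplying the emitted mass of each gas by its GWP for the chosen time horizon and summing the results. The minimal sketch below uses GWPs from the lifetime and GWP table earlier in this article. The emission inventory is hypothetical.

```python
# GWPs from the article's table of lifetimes and GWPs, keyed by time horizon (years).
GWP = {
    20: {"CO2": 1, "CH4": 72, "N2O": 289, "SF6": 16_300},
    100: {"CO2": 1, "CH4": 25, "N2O": 298, "SF6": 22_800},
}


def co2_equivalent(emissions_t: dict, horizon: int = 100) -> float:
    """Sum of mass x GWP, in tonnes CO2-equivalent, for the given time horizon."""
    factors = GWP[horizon]
    return sum(mass * factors[gas] for gas, mass in emissions_t.items())


# Hypothetical inventory: tonnes of each gas emitted in one year
inventory = {"CO2": 1_000.0, "CH4": 10.0, "N2O": 1.0, "SF6": 0.01}
print(f"100-yr horizon: {co2_equivalent(inventory, 100):.0f} t CO2-eq")
print(f" 20-yr horizon: {co2_equivalent(inventory, 20):.0f} t CO2-eq")
```

The same inventory totals more on the 20-year horizon, mostly because of methane. This illustrates why the choice of time horizon affects reported totals.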

Emissions may be measured over long time periods. This measurement type is called historical or cumulative emissions. Cumulative emissions give some indication of who is responsible for the build-up in the atmospheric concentration of GHGs (IEA, 2007, p. 199).[95]

The national accounts balance has been argued to be positively related to carbon emissions. The national accounts balance shows the difference between exports and imports. For many richer nations, such as the United States, the accounts balance is negative because more goods are imported than are exported. This is mostly because it is cheaper to produce goods outside of developed countries, leading the economies of developed countries to become increasingly dependent on services rather than goods. The reasoning is that a positive accounts balance would mean that more production was occurring in a country, so more factories working would increase carbon emission levels (Holtz-Eakin, 1995, pp. 85–101).[96]

Emissions may also be measured across shorter time periods. Emissions changes may, for example, be measured against a base year of 1990. 1990 was used in the United Nations Framework Convention on Climate Change (UNFCCC) as the base year for emissions, and is also used in the Kyoto Protocol (some gases are also measured from the year 1995).[79]:146,149 A country's emissions may also be reported as a proportion of global emissions for a particular year.

Another measurement is of per capita emissions. This divides a country's total annual emissions by its mid-year population.[92]:370 Per capita emissions may be based on historical or annual emissions (Banuri et al., 1996, pp. 106–107).[94]
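The per capita calculation is a single division. The sketch below is illustrative only: the function name and the figures for the hypothetical country are assumptions, and the same function serves for cumulative per capita emissions if a summed series is passed in place of one year's total.

```python
def per_capita_emissions(total_t, mid_year_population):
    """Tonnes per person: a country's total divided by its mid-year population.

    total_t may be one year's emissions (annual per capita) or a summed
    series (cumulative per capita).
    """
    if mid_year_population <= 0:
        raise ValueError("population must be positive")
    return total_t / mid_year_population


# Hypothetical country: 400 million t CO2 in one year, 50 million people.
print(per_capita_emissions(400e6, 50e6))  # 8.0
```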

Land-use change

Land-use change, e.g., the clearing of forests for agricultural use, can affect the concentration of GHGs in the atmosphere by altering how much carbon flows out of the atmosphere into carbon sinks.[97] Accounting for land-use change can be understood as an attempt to measure "net" emissions, i.e., gross emissions from all GHG sources minus the removal of emissions from the atmosphere by carbon sinks (Banuri et al., 1996, pp. 92–93).[94]
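The "net" emissions idea reduces to gross emissions from all sources minus removals by sinks. In this minimal sketch the category names and numbers are hypothetical; the sink figure is exactly the input whose regional and temporal allocation is disputed, so different allocation rules would change the result.

```python
def net_emissions(sources, sinks):
    """Gross emissions from all sources minus removals by sinks.

    Both arguments map a category name to tonnes CO2-eq; removals are
    given as positive numbers.
    """
    return sum(sources.values()) - sum(sinks.values())


# Hypothetical inventory (t CO2-eq).
sources = {"energy": 500.0, "agriculture": 80.0, "deforestation": 40.0}
sinks = {"forest regrowth": 70.0}
print(net_emissions(sources, sinks))  # 550.0
```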

There are substantial uncertainties in the measurement of net carbon emissions.[98] Additionally, there is controversy over how carbon sinks should be allocated between different regions and over time (Banuri et al., 1996, p. 93).[94] For instance, concentrating on more recent changes in carbon sinks is likely to favour those regions that have deforested earlier, e.g., Europe.

Greenhouse gas intensity

Greenhouse gas intensity is a ratio between greenhouse gas emissions and another metric, e.g., gross domestic product (GDP) or energy use. The terms "carbon intensity" and "emissions intensity" are also sometimes used.[99] GHG intensities may be calculated using market exchange rates (MER) or purchasing power parity (PPP) (Banuri et al., 1996, p. 96).[94] Calculations based on MER show large differences in intensities between developed and developing countries, whereas calculations based on PPP show smaller differences.
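The effect of the exchange-rate basis can be seen with two hypothetical countries whose emissions are fixed but whose GDP differs depending on whether it is valued at MER or PPP. The figures are invented for illustration; they are chosen only so that the developing country's GDP is larger under PPP, which narrows its intensity gap with the developed country.

```python
def ghg_intensity(emissions, gdp):
    """Emissions per unit of GDP (units follow the inputs)."""
    return emissions / gdp


def intensity_ratio(countries, gdp_key, a, b):
    """Intensity of country a divided by intensity of country b."""
    ia = ghg_intensity(countries[a]["emissions"], countries[a][gdp_key])
    ib = ghg_intensity(countries[b]["emissions"], countries[b][gdp_key])
    return ia / ib


# Hypothetical countries: emissions in Mt CO2-eq, GDP in billion USD valued
# at market exchange rates (MER) or at purchasing power parity (PPP).
countries = {
    "developed": {"emissions": 500.0, "gdp_mer": 1000.0, "gdp_ppp": 1000.0},
    "developing": {"emissions": 300.0, "gdp_mer": 200.0, "gdp_ppp": 600.0},
}

for key in ("gdp_mer", "gdp_ppp"):
    print(key, intensity_ratio(countries, key, "developing", "developed"))
# gdp_mer 3.0
# gdp_ppp 1.0
```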

Cumulative and historical emissions

Map of cumulative per capita anthropogenic atmospheric CO2 emissions by country. Cumulative emissions include land-use change, and are measured between the years 1950 and 2000.

Cumulative anthropogenic (i.e., human-emitted) emissions of CO2 from fossil fuel use are a major cause of global warming,[100] and give some indication of which countries have contributed most to human-induced climate change.[101]:15

| Region | Industrial CO2 (%) | Total CO2 (%) |
|---|---|---|
| OECD North America | 33.2 | 29.7 |
| OECD Europe | 26.1 | 16.6 |
| Former USSR | 14.1 | 12.5 |
| China | 5.5 | 6.0 |
| Eastern Europe | 5.5 | 4.8 |

The table above is based on Banuri et al. (1996, p. 94).[94] Overall, developed countries accounted for 83.8% of industrial CO2 emissions over this time period, and 67.8% of total CO2 emissions. Developing countries accounted for 16.2% of industrial CO2 emissions over this time period, and 32.2% of total CO2 emissions. The estimate of total CO2 emissions includes biotic carbon emissions, mainly from deforestation. Banuri et al. (1996, p. 94)[94] calculated per capita cumulative emissions based on then-current population. The ratio in per capita emissions between industrialized countries and developing countries was estimated at more than 10 to 1.

Including biotic emissions brings about the same controversy mentioned earlier regarding carbon sinks and land-use change (Banuri et al., 1996, pp. 93–94).[94] The actual calculation of net emissions is very complex, and is affected by how carbon sinks are allocated between regions and the dynamics of the climate system.

Non-OECD countries accounted for 42% of cumulative energy-related CO2 emissions between 1890 and 2007.[102]:179–180 Over this time period, the US accounted for 28% of emissions; the EU, 23%; Russia, 11%; China, 9%; other OECD countries, 5%; Japan, 4%; India, 3%; and the rest of the world, 18%.[102]:179–180

Changes since a particular base year

Between 1970 and 2004, global growth in annual CO2 emissions was driven by North America, Asia, and the Middle East.[103] Since 2000, the growth of CO2 emissions has accelerated sharply, from 1.1% per year during the 1990s to more than 3% per year (more than 2 ppm per year). This acceleration is attributable to the lapse of formerly declining trends in the carbon intensity of both developing and developed nations. China was responsible for most of the global growth in emissions during this period. After the steep local decline in emissions associated with the collapse of the Soviet Union, emissions in this region have grown only slowly, owing to more efficient energy use, made necessary by the increasing proportion of that energy that is exported.[77] In comparison, methane has not increased appreciably, and N2O has increased by 0.25% per year.
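To put the growth rates above in perspective, a constant annual growth rate r implies a doubling time of ln 2 / ln(1 + r). The sketch below assumes the rates stay constant, which real emissions do not, and uses the 1.1% and 3% figures quoted above.

```python
import math


def doubling_time(annual_growth_rate):
    """Years for a quantity growing at a constant annual rate to double.

    annual_growth_rate is a fraction, e.g. 0.03 for 3% per year.
    """
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log1p(annual_growth_rate)


for label, rate in (("1990s, 1.1%/yr", 0.011), ("post-2000, 3%/yr", 0.03)):
    print(f"{label}: doubles in about {doubling_time(rate):.0f} years")
# 1990s, 1.1%/yr: doubles in about 63 years
# post-2000, 3%/yr: doubles in about 23 years
```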

Using different base years for measuring emissions has an effect on estimates of national contributions to global warming.[101]:17–18[104] The size of this effect can be calculated by dividing a country's highest contribution to global warming, across the base years considered, by that country's lowest contribution across the same base years. Choosing between base years of 1750, 1900, 1950, and 1990 has a significant effect for most countries.[101]:17–18 Within the G8 group of countries, it is most significant for the UK, France and Germany. These countries have a long history of CO2 emissions (see the section on Cumulative and historical emissions).
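The base-year sensitivity measure described above is a ratio of the largest to the smallest contribution across the candidate base years. A sketch with a hypothetical country whose share of global warming shrinks as later base years are chosen (the percentages are invented for illustration):

```python
def base_year_sensitivity(contribution_by_base_year):
    """Ratio of a country's largest to smallest contribution to warming
    across the candidate base years."""
    values = list(contribution_by_base_year.values())
    return max(values) / min(values)


# Hypothetical country: share of global warming (%) counted from each base year.
contributions = {1750: 6.0, 1900: 5.0, 1950: 4.0, 1990: 3.0}
print(base_year_sensitivity(contributions))  # 2.0
```

A ratio close to 1 would mean the choice of base year hardly matters for that country; larger ratios indicate the kind of sensitivity the text attributes to countries with long emission histories.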

Annual emissions

Annual per capita emissions in the industrialized countries are typically as much as ten times the average in developing countries.[79]:144 Due to China's fast economic development, its annual per capita emissions are quickly approaching the levels of those in the Annex I group of the Kyoto Protocol (i.e., the developed countries excluding the USA).[105] Other countries with fast-growing emissions are South Korea, Iran, and Australia. On the other hand, annual per capita emissions of the EU-15 and the USA are gradually decreasing over time.[105] Emissions in Russia and Ukraine have decreased fastest since 1990 due to economic restructuring in these countries.[106]

Energy statistics for fast-growing economies are less accurate than those for the industrialized countries. For China's annual emissions in 2008, the Netherlands Environmental Assessment Agency estimated an uncertainty range of about 10%.[105]

The GHG footprint, or greenhouse gas footprint, refers to the amount of GHGs emitted during the creation of products or services. It is more comprehensive than the commonly used carbon footprint, which measures only carbon dioxide, one of many greenhouse gases.

Top emitter countries

Annual

In 2009, the annual top ten emitting countries accounted for about two-thirds of the world's annual energy-related CO2 emissions.[107]

| Country | % of global total annual emissions | Tonnes of GHG per capita |
|---|---|---|
| People's Rep. of China | 23.6 | 5.13 |
| United States | 17.9 | 16.9 |
| India | 5.5 | 1.37 |
| Russian Federation | 5.3 | 10.8 |
| Japan | 3.8 | 8.6 |
| Germany | 2.6 | 9.2 |
| Islamic Rep. of Iran | 1.8 | 7.3 |
| Canada | 1.8 | 15.4 |
| Korea | 1.8 | 10.6 |
| United Kingdom | 1.6 | 7.5 |

Cumulative

| Country | % of world total | Metric tonnes CO2 per person |
|---|---|---|
| United States | 28.5 | 1,132.7 |
| China | 9.36 | 85.4 |
| Russian Federation | 7.95 | 677.2 |
| Germany | 6.78 | 998.9 |
| United Kingdom | 5.73 | 1,127.8 |
| Japan | 3.88 | 367 |
| France | 2.73 | 514.9 |
| India | 2.52 | 26.7 |
| Canada | 2.17 | 789.2 |
| Ukraine | 2.13 | 556.4 |

Embedded emissions

One way of attributing greenhouse gas (GHG) emissions is to measure the embedded emissions (also referred to as "embodied emissions") of goods that are being consumed. Emissions are usually measured according to production, rather than consumption.[110] For example, in the main international treaty on climate change (the UNFCCC), countries report on emissions produced within their borders, e.g., the emissions produced from burning fossil fuels.[102]:179[111]:1 Under a production-based accounting of emissions, embedded emissions on imported goods are attributed to the exporting, rather than the importing, country. Under a consumption-based accounting of emissions, embedded emissions on imported goods are attributed to the importing, rather than the exporting, country.

Davis and Caldeira (2010)[111]:4 found that a substantial proportion of CO2 emissions are traded internationally. The net effect of trade was to export emissions from China and other emerging markets to consumers in the US, Japan, and Western Europe. Based on annual emissions data from the year 2004, and on a per-capita consumption basis, the top-5 emitting countries were found to be (in tCO2 per person, per year): Luxembourg (34.7), the US (22.0), Singapore (20.2), Australia (16.7), and Canada (16.6).[111]:5

Carbon Trust research revealed that approximately 25% of all CO2 emissions from human activities 'flow' (i.e., are imported or exported) from one country to another. Major developed economies were found to be typically net importers of embodied carbon emissions, with UK consumption emissions 34% higher than production emissions, and Germany (29%), Japan (19%) and the USA (13%) also significant net importers of embodied emissions.[112]
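The difference between the two accounting principles can be sketched as an adjustment to territorial (production-based) emissions: subtract emissions embodied in exports and add those embodied in imports. The country and numbers below are hypothetical; a positive percentage indicates a net importer of embodied emissions, the situation the text describes for several major developed economies.

```python
def consumption_based(production, embodied_exports, embodied_imports):
    """Consumption-based emissions: territorial production emissions,
    minus emissions embodied in exports, plus those embodied in imports."""
    return production - embodied_exports + embodied_imports


def percent_above_production(production, consumption):
    """How much higher (positive) or lower (negative) consumption-based
    emissions are than production-based emissions, in percent."""
    return 100.0 * (consumption - production) / production


# Hypothetical country (Mt CO2): 400 produced domestically,
# 50 embodied in exports, 130 embodied in imports.
consumption = consumption_based(400.0, 50.0, 130.0)
print(consumption)                                   # 480.0
print(percent_above_production(400.0, consumption))  # 20.0
```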

Effect of policy

Governments have taken action to reduce GHG emissions (climate change mitigation). Assessments of policy effectiveness have included work by the Intergovernmental Panel on Climate Change,[113] International Energy Agency,[114][115] and United Nations Environment Programme.[116] Policies implemented by governments have included[117][118][119] national and regional targets to reduce emissions, promoting energy efficiency, and support for renewable energy.

Countries and regions listed in Annex I of the United Nations Framework Convention on Climate Change (UNFCCC) (i.e., the OECD and former planned economies of the Soviet Union) are required to submit periodic assessments to the UNFCCC of actions they are taking to address climate change.[119]:3 Analysis by the UNFCCC (2011)[119]:8 suggested that policies and measures undertaken by Annex I Parties may have produced emission savings of 1.5 thousand Tg CO2-eq in the year 2010, with most savings made in the energy sector. The projected emissions saving of 1.5 thousand Tg CO2-eq is measured against a hypothetical "baseline" of Annex I emissions, i.e., projected Annex I emissions in the absence of policies and measures. The total projected Annex I saving of 1.5 thousand Tg CO2-eq does not include emissions savings in seven of the Annex I Parties.[119]:8

Projections

A wide range of projections of future GHG emissions have been produced.[120] Rogner et al. (2007)[121] assessed the scientific literature on GHG projections. Rogner et al. (2007)[88] concluded that unless energy policies changed substantially, the world would continue to depend on fossil fuels until 2025–2030, with more than 80% of the world's energy projected to come from fossil fuels. This conclusion was based on "much evidence" and "high agreement" in the literature.[88] Projected annual energy-related CO2 emissions in 2030 were 40–110% higher than in 2000, with two-thirds of the increase originating in developing countries.[88] Projected annual per capita emissions in developing country regions remained substantially lower (2.8–5.1 tonnes CO2) than those in developed country regions (9.6–15.1 tonnes CO2).[122] Projections consistently showed an increase in annual world GHG emissions (the "Kyoto" gases,[123] measured in CO2-equivalent) of 25–90% by 2030, compared to 2000.[88]

Relative CO2 emission from various fuels

One liter of gasoline, when used as a fuel, produces 2.32 kg (about 1,300 liters or 1.3 cubic meters) of carbon dioxide, a greenhouse gas. One US gallon produces 19.4 lb (1,291.5 gallons or 172.65 cubic feet).[124][125][126]

| Fuel name | CO2 emitted (lb/10⁶ Btu) | CO2 emitted (g/MJ) |
|---|---|---|
| Natural gas | 117 | 50.30 |
| Liquefied petroleum gas | 139 | 59.76 |
| Propane | 139 | 59.76 |
| Aviation gasoline | 153 | 65.78 |
| Automobile gasoline | 156 | 67.07 |
| Kerosene | 159 | 68.36 |
| Fuel oil | 161 | 69.22 |
| Tires/tire derived fuel | 189 | 81.26 |
| Wood and wood waste | 195 | 83.83 |
| Coal (bituminous) | 205 | 88.13 |
| Coal (sub-bituminous) | 213 | 91.57 |
| Coal (lignite) | 215 | 92.43 |
| Petroleum coke | 225 | 96.73 |
| Tar-sand Bitumen | [citation needed] | [citation needed] |
| Coal (anthracite) | 227 | 97.59 |
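The two columns of the fuel table are related by a fixed unit conversion, and the gasoline volume quoted above follows from treating CO2 as an ideal gas. The Python sketch below assumes the International Table Btu and, for the volume, 25 °C and 1 atm; the source does not state the temperature and pressure it used, so that choice is an assumption.

```python
# Unit constants: the pound is exact by definition; the Btu used here is the
# International Table Btu (1,055.05585262 J), an assumption about the table.
GRAMS_PER_POUND = 453.59237
MJ_PER_MILLION_BTU = 1055.05585262

# Ideal-gas constants for the volume estimate.
R = 8.314462618      # molar gas constant, J/(mol*K)
M_CO2 = 44.0095      # molar mass of CO2, g/mol


def lb_per_mmbtu_to_g_per_mj(lb_per_mmbtu):
    """Convert an emission factor from lb CO2 per 10^6 Btu to g CO2 per MJ."""
    return lb_per_mmbtu * GRAMS_PER_POUND / MJ_PER_MILLION_BTU


def co2_volume_litres(mass_kg, temp_k=298.15, pressure_pa=101325.0):
    """Volume of a CO2 mass treated as an ideal gas (default 25 degC, 1 atm)."""
    moles = mass_kg * 1000.0 / M_CO2
    volume_m3 = moles * R * temp_k / pressure_pa
    return volume_m3 * 1000.0


for fuel, lb_value in (("Natural gas", 117), ("Coal (anthracite)", 227)):
    print(f"{fuel}: {lb_per_mmbtu_to_g_per_mj(lb_value):.2f} g/MJ")
# Natural gas: 50.30 g/MJ
# Coal (anthracite): 97.59 g/MJ

print(f"{co2_volume_litres(2.32):.0f} L")  # 1290 L, i.e. about 1.3 cubic meters
```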

Life-cycle greenhouse-gas emissions of energy sources

A 2011 IPCC review of the literature on the life-cycle CO2 emissions of numerous energy sources found the following median (50th percentile) values across all the total life-cycle emissions studies it considered.[128]

| Technology | Description | 50th percentile (g CO2/kWhe) |
|---|---|---|
| Hydroelectric | reservoir | 4 |
| Ocean Energy | wave and tidal | 8 |
| Wind | onshore | 12 |
| Nuclear | various generation II reactor types | 16 |
| Biomass | various | 18 |
| Solar thermal | parabolic trough | 22 |
| Geothermal | hot dry rock | 45 |
| Solar PV | Polycrystalline silicon | 46 |
| Natural gas | various combined cycle turbines without scrubbing | 469 |
| Coal | various generator types without scrubbing | 1001 |
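The 50th percentile reported for each technology is the median of the individual study results. A minimal sketch using Python's standard `statistics` module and five hypothetical study estimates; note that one unusually high estimate does not move the median.

```python
import statistics


def median_estimate(estimates):
    """50th percentile (median) of a set of study results for one technology."""
    if not estimates:
        raise ValueError("need at least one estimate")
    return statistics.median(estimates)


# Hypothetical life-cycle estimates (g CO2/kWh) from five studies of one technology.
study_estimates = [10.0, 12.0, 15.0, 11.0, 30.0]
print(median_estimate(study_estimates))  # 12.0
```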

Removal from the atmosphere ("sinks")

Natural processes

Greenhouse gases can be removed from the atmosphere by various processes, as a consequence of:

- a physical change (condensation and precipitation remove water vapor from the atmosphere).

- a chemical reaction within the atmosphere. For example, methane is oxidized by reaction with the naturally occurring hydroxyl radical (OH·) and degraded to CO2 and water vapor (CO2 from the oxidation of methane is not included in the methane global warming potential). Other chemical reactions include solution and solid phase chemistry occurring in atmospheric aerosols.

- a physical exchange between the atmosphere and the other compartments of the planet. An example is the mixing of atmospheric gases into the oceans.

- a chemical change at the interface between the atmosphere and the other compartments of the planet. This is the case for CO2, which is reduced by photosynthesis of plants, and which, after dissolving in the oceans, reacts to form carbonic acid and bicarbonate and carbonate ions (see ocean acidification).

- a photochemical change. Halocarbons are dissociated by UV light releasing Cl· and F· as free radicals in the stratosphere with harmful effects on ozone (halocarbons are generally too stable to disappear by chemical reaction in the atmosphere).
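Removal by reaction with OH is often approximated as a first-order process, in which the fraction of an emitted pulse still in the atmosphere decays exponentially with an e-folding lifetime. The sketch below assumes a single lifetime of 12 years for methane; this value is an assumption (published estimates are roughly a decade and depend on how sinks are treated), and real removal also involves the other processes listed above.

```python
import math

# Assumed e-folding lifetime of atmospheric methane, in years (illustrative).
METHANE_LIFETIME_YEARS = 12.0


def fraction_remaining(years, lifetime=METHANE_LIFETIME_YEARS):
    """Fraction of an initial methane pulse still airborne after `years`,
    treating removal as a single first-order (exponential) process."""
    if lifetime <= 0:
        raise ValueError("lifetime must be positive")
    return math.exp(-years / lifetime)


for t in (0, 12, 24, 50):
    print(t, f"{fraction_remaining(t):.2f}")
```

After one lifetime, about 37% (1/e) of the pulse remains under this simplified model.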

Negative emissions

A number of technologies remove greenhouse gases from the atmosphere. The most widely analysed are those that remove carbon dioxide from the atmosphere and store it either in geologic formations, as in bio-energy with carbon capture and storage[129][130][131] and carbon dioxide air capture,[131] or in the soil, as in the case of biochar.[131] The IPCC has pointed out that many long-term climate scenario models require large-scale manmade negative emissions to avoid serious climate change.[132]

History of scientific research

In the late 19th century scientists experimentally discovered that N2 and O2 do not absorb infrared radiation (called, at that time, "dark radiation"). On the contrary, water (both as true vapor and condensed in the form of microscopic droplets suspended in clouds) and CO2 and other poly-atomic gaseous molecules do absorb infrared radiation. In the early 20th century researchers realized that greenhouse gases in the atmosphere made the Earth's overall temperature higher than it would be without them. During the late 20th century, a scientific consensus evolved that increasing concentrations of greenhouse gases in the atmosphere cause a substantial rise in global temperatures and changes to other parts of the climate system,[133] with consequences for the environment and for human health.
