From Wikipedia, the free encyclopedia

Exponential growth occurs when the growth rate of the value of a mathematical function is proportional to the function's current value. Exponential decay occurs in the same way when the growth rate is negative. In the case of a discrete domain of definition with equal intervals, it is also called geometric growth or geometric decay (the function values form a geometric progression).

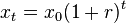

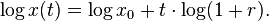

The formula for exponential growth of a variable x at the (positive or negative) growth rate r, as time t goes on in discrete intervals (that is, at integer times 0, 1, 2, 3, ...), is

$$x_t = x_0 (1 + r)^t$$

where $x_0$ is the value of x at time 0.
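As a minimal sketch, assuming the standard discrete form $x_t = x_0 (1 + r)^t$ with $x_0$ the value at time 0, the geometric progression can be generated one step at a time. The function name `discrete_growth` is chosen here purely for illustration.

```python
def discrete_growth(x0: float, r: float, steps: int) -> list[float]:
    """Return [x_0, x_1, ..., x_steps] for x_t = x0 * (1 + r) ** t."""
    values = [x0]
    for _ in range(steps):
        # Each step multiplies the current value by the constant factor (1 + r).
        values.append(values[-1] * (1 + r))
    return values


# Growth at r = 0.5 per step and decay at r = -0.5 per step.
growth = discrete_growth(100.0, 0.5, 3)   # [100.0, 150.0, 225.0, 337.5]
decay = discrete_growth(100.0, -0.5, 3)   # [100.0, 50.0, 25.0, 12.5]
```

A negative r between -1 and 0 gives geometric decay, since the factor (1 + r) then lies between 0 and 1.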

Examples

- Biology

- The number of microorganisms in a culture will increase exponentially until an essential nutrient is exhausted. Typically the first organism splits into two daughter organisms, which then each split to form four, which split to form eight, and so on.

- A virus (for example SARS, or smallpox) typically will spread exponentially at first, if no artificial immunization is available. Each infected person can infect multiple new people.

- Human population, if the number of births and deaths per person per year were to remain at current levels (but also see logistic growth). For example, according to the United States Census Bureau, over the 100 years from 1910 to 2010 the population of the United States of America increased exponentially at an average rate of one and a half percent a year (1.5%). This means that the doubling time of the American population (which depends on the yearly growth rate) is approximately 50 years.[1]

- Many responses of living beings to stimuli, including human perception, are logarithmic responses, which are the inverse of exponential responses; the loudness and frequency of sound are perceived logarithmically, even with very faint stimulus, within the limits of perception. This is the reason that exponentially increasing the brightness of visual stimuli is perceived by humans as a linear increase, rather than an exponential increase. This has survival value. Generally it is important for the organisms to respond to stimuli in a wide range of levels, from very low levels, to very high levels, while the accuracy of the estimation of differences at high levels of stimulus is much less important for survival.

- Genetic complexity of life on Earth has doubled every 376 million years. Extrapolating this exponential growth backwards indicates life began 9.7 billion years ago, potentially predating the Earth by 5.2 billion years.[2][3]

- Physics

- Avalanche breakdown within a dielectric material. A free electron becomes sufficiently accelerated by an externally applied electrical field that it frees up additional electrons as it collides with atoms or molecules of the dielectric media. These secondary electrons also are accelerated, creating larger numbers of free electrons. The resulting exponential growth of electrons and ions may rapidly lead to complete dielectric breakdown of the material.

- Nuclear chain reaction (the concept behind nuclear reactors and nuclear weapons). Each uranium nucleus that undergoes fission produces multiple neutrons, each of which can be absorbed by adjacent uranium atoms, causing them to fission in turn. If the probability of neutron absorption exceeds the probability of neutron escape (a function of the shape and mass of the uranium), k > 0 and so the production rate of neutrons and induced uranium fissions increases exponentially, in an uncontrolled reaction. "Due to the exponential rate of increase, at any point in the chain reaction 99% of the energy will have been released in the last 4.6 generations. It is a reasonable approximation to think of the first 53 generations as a latency period leading up to the actual explosion, which only takes 3–4 generations."[4]

- Positive feedback within the linear range of electrical or electroacoustic amplification can result in the exponential growth of the amplified signal, although resonance effects may favor some component frequencies of the signal over others.

- Heat transfer experiments yield results whose best-fit lines are exponential decay curves.

- Economics

- Economic growth is expressed in percentage terms, implying exponential growth. For example, U.S. GDP per capita has grown at an exponential rate of approximately two percent per year since World War II.[citation needed]

- Finance

- Compound interest at a constant interest rate provides exponential growth of the capital. See also rule of 72.

- Pyramid schemes or Ponzi schemes also show this type of growth resulting in high profits for a few initial investors and losses among great numbers of investors.

- Computer technology

- Processing power of computers. See also Moore's law and technological singularity. (Under exponential growth, there are no singularities. The singularity here is a metaphor.)

- In computational complexity theory, computer algorithms of exponential complexity require an exponentially increasing amount of resources (e.g. time, computer memory) for only a constant increase in problem size. So for an algorithm of time complexity $2^x$, if a problem of size x = 10 requires 10 seconds to complete, and a problem of size x = 11 requires 20 seconds, then a problem of size x = 12 will require 40 seconds. This kind of algorithm typically becomes unusable at very small problem sizes, often between 30 and 100 items (most computer algorithms need to be able to solve much larger problems, up to tens of thousands or even millions of items in reasonable times, something that would be physically impossible with an exponential algorithm). Also, the effects of Moore's Law do not help the situation much, because doubling processor speed merely allows the problem size to increase by a constant. For example, if a slow processor can solve problems of size x in time t, then a processor twice as fast could only solve problems of size x + constant in the same time t. So exponentially complex algorithms are most often impractical, and the search for more efficient algorithms is one of the central goals of computer science today.

- Internet traffic growth.[citation needed]
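The arithmetic in the computational complexity item above can be sketched as follows, assuming an idealized running time exactly proportional to $2^x$ and calibrated so that size 10 takes 10 seconds (the figures used in that item). The constants and helper names are hypothetical.

```python
import math

BASE_SIZE = 10        # calibration problem size (assumed, from the example)
BASE_SECONDS = 10.0   # running time at the calibration size


def runtime_seconds(size: int) -> float:
    """Idealized running time of a 2**x algorithm."""
    return BASE_SECONDS * 2.0 ** (size - BASE_SIZE)


def max_size_within(budget_seconds: float, speedup: float = 1.0) -> int:
    """Largest size that finishes within the budget on a machine
    `speedup` times faster than the calibration machine."""
    # Solve BASE_SECONDS * 2**(x - BASE_SIZE) / speedup <= budget for x.
    return BASE_SIZE + math.floor(math.log2(budget_seconds * speedup / BASE_SECONDS))


print(runtime_seconds(11), runtime_seconds(12))                    # 20.0 40.0
print(max_size_within(40.0), max_size_within(40.0, speedup=2.0))   # 12 13
print(runtime_seconds(60) / (3600 * 24 * 365))                     # years for size 60
```

Under these assumptions, doubling the processor speed raises the largest feasible size by exactly one item, while size 60 would already take hundreds of millions of years.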

Basic formula

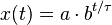

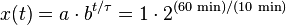

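A quantity x depends exponentially on time t if

$$x(t) = a \cdot b^{t/\tau}$$

where the constant a is the initial value of x, $x(0) = a$, the constant b is a positive growth factor, and τ is the time constant: the time required for x to increase by one factor of b.

Example: If a species of bacteria doubles every ten minutes, starting out with only one bacterium, how many bacteria would be present after one hour? The question implies a = 1, b = 2 and τ = 10 min, so

$$x(60\ \text{min}) = 1 \cdot 2^{(60\ \text{min})/(10\ \text{min})} = 2^6 = 64.$$

After one hour, or six ten-minute intervals, there would be sixty-four bacteria.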
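The bacteria example can be checked numerically. This is a minimal sketch assuming the form $x(t) = a \cdot b^{t/\tau}$ implied by the parameters a, b and τ in the example; the function name `exponential` is illustrative only.

```python
def exponential(t: float, a: float, b: float, tau: float) -> float:
    """Evaluate x(t) = a * b ** (t / tau); t and tau must share a time unit."""
    return a * b ** (t / tau)


# One bacterium (a = 1) doubling (b = 2) every ten minutes (tau = 10 min).
for minutes in (0, 10, 30, 60):
    print(minutes, exponential(minutes, a=1, b=2, tau=10))

bacteria_after_one_hour = exponential(60, a=1, b=2, tau=10)  # 2 ** 6 = 64.0
```

Only the ratio t/τ enters the formula, so any time unit works as long as t and τ use the same one.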

Many pairs (b, τ) of a dimensionless non-negative number b and an amount of time τ (a physical quantity which can be expressed as the product of a number of units and a unit of time) represent the same growth rate, with τ proportional to log b. For any fixed b not equal to 1 (e.g. e or 2), the growth rate is given by the non-zero time τ. For any non-zero time τ the growth rate is given by the dimensionless positive number b.

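Thus the law of exponential growth can be written in different but mathematically equivalent forms, by using a different base. The most common forms are the following:

$$x(t) = x_0 \cdot e^{kt} = x_0 \cdot e^{t/\tau} = x_0 \cdot 2^{t/T} = x_0 \cdot \left(1 + \frac{r}{100}\right)^{t/p},$$

where $x_0$ expresses the initial quantity $x(0)$.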

Parameters (negative in the case of exponential decay):

- The growth constant k is the frequency (number of times per unit time) of growing by a factor e; in finance it is also called the logarithmic return, continuously compounded return, or force of interest.

- The e-folding time τ is the time it takes to grow by a factor e.

- The doubling time T is the time it takes to double.

- The percent increase r (a dimensionless number) in a period p.

If p is the unit of time the quotient t/p is simply the number of units of time. Using the notation t for the (dimensionless) number of units of time rather than the time itself, t/p can be replaced by t, but for uniformity this has been avoided here. In this case the division by p in the last formula is not a numerical division either, but converts a dimensionless number to the correct quantity including unit.
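The equivalence of the parameters can be illustrated with a short conversion routine. This sketch assumes the four common forms $x_0 e^{kt}$, $x_0 e^{t/\tau}$, $x_0 2^{t/T}$ and $x_0 (1 + r/100)^{t/p}$, from which $\tau = 1/k$, $T = \ln 2 / k$ and $r = 100\,(e^{kp} - 1)$ follow; the function names are hypothetical.

```python
import math


def equivalent_parameters(k: float, p: float = 1.0) -> dict[str, float]:
    """Convert a growth constant k (per unit time) into the other parameters."""
    return {
        "tau": 1.0 / k,                         # e-folding time
        "T": math.log(2) / k,                   # doubling time
        "r": 100.0 * (math.exp(k * p) - 1.0),   # percent increase per period p
    }


def all_forms(x0: float, k: float, t: float, p: float = 1.0) -> list[float]:
    """Evaluate x(t) in each of the four forms; the results should agree."""
    q = equivalent_parameters(k, p)
    return [
        x0 * math.exp(k * t),
        x0 * math.exp(t / q["tau"]),
        x0 * 2 ** (t / q["T"]),
        x0 * (1 + q["r"] / 100) ** (t / p),
    ]


values = all_forms(x0=3.0, k=0.05, t=30.0)  # four nearly identical numbers
```

With a negative k all three derived parameters come out negative, matching the convention for exponential decay.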

A popular approximate method for calculating the doubling time from the growth rate is the rule of 70, i.e.

$$T \simeq 70 / r.$$
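To see how close the rule of 70 comes, the approximation $T \simeq 70 / r$ can be compared with the exact doubling time $T = \ln 2 / \ln(1 + r/100)$ for growth of r percent per period. This is a small sketch with illustrative function names.

```python
import math


def doubling_time_exact(r_percent: float) -> float:
    """Exact doubling time, in periods, for growth of r_percent per period."""
    return math.log(2) / math.log(1 + r_percent / 100)


def doubling_time_rule_of_70(r_percent: float) -> float:
    """Rule-of-70 approximation of the doubling time."""
    return 70 / r_percent


for r in (1, 1.5, 5, 10):
    exact = doubling_time_exact(r)
    approx = doubling_time_rule_of_70(r)
    print(f"r = {r}%: exact {exact:.2f}, rule of 70 {approx:.2f}")
```

For r = 1.5 the exact value is about 46.6 periods against 46.7 from the rule. The approximation drifts further from the exact value as r grows, because it relies on $\ln(1 + r/100) \approx r/100$.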

Reformulation as log-linear growth

If a variable x exhibits exponential growth according to $x(t) = x_0 (1 + r)^t$, then the log (to any base) of x grows linearly over time, as can be seen by taking logarithms of both sides of the exponential growth equation:

$$\log x(t) = \log x_0 + t \cdot \log(1 + r).$$
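Because the logarithm is linear in t, a straight-line fit to the logged data recovers the growth parameters. The following is a minimal sketch assuming the model $x(t) = x_0 (1 + r)^t$, so that $\ln x = \ln x_0 + t \ln(1 + r)$; it uses ordinary least squares written out by hand, and `fit_log_linear` is a name invented for this example.

```python
import math


def fit_log_linear(times: list[float], values: list[float]) -> tuple[float, float]:
    """Fit ln(x) = intercept + slope * t and return estimates of (x0, r)."""
    logs = [math.log(v) for v in values]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    # Ordinary least-squares slope and intercept of the logged data.
    slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs)) / sum(
        (t - t_mean) ** 2 for t in times
    )
    intercept = y_mean - slope * t_mean
    # Undo the logarithm: x0 = e**intercept and 1 + r = e**slope.
    return math.exp(intercept), math.exp(slope) - 1


times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
values = [50 * 1.2 ** t for t in times]          # synthetic, noise-free data
x0_hat, r_hat = fit_log_linear(times, values)    # close to 50 and 0.2
```

The data here are synthetic and noise-free, so the fit reproduces the inputs almost exactly; with real measurements the estimates would only be approximate.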

Differential equation

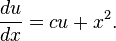

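The exponential function $x(t) = x_0 e^{kt}$ satisfies the linear differential equation:

$$\frac{dx}{dt} = kx,$$

saying that the change per instant of time of x at time t is proportional to the value of x(t), and x(t) has the initial value $x(0) = x_0$.

For a nonlinear variation of this growth model see logistic function.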
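The link between the differential equation $dx/dt = kx$ and its solution $x_0 e^{kt}$ can be illustrated numerically. The sketch below uses the forward Euler method, chosen here only for simplicity, and compares it with the closed-form solution; the function name and parameter values are assumptions made for the example.

```python
import math


def euler_solve(x0: float, k: float, t_end: float, steps: int) -> float:
    """Integrate dx/dt = k * x from 0 to t_end with forward Euler steps."""
    dt = t_end / steps
    x = x0
    for _ in range(steps):
        # Forward Euler update: x grows by (rate of change) * (time step).
        x += k * x * dt
    return x


exact = 2.0 * math.exp(0.3 * 5.0)              # closed form x0 * e**(k t)
coarse = euler_solve(2.0, 0.3, 5.0, 10)        # noticeably below the exact value
fine = euler_solve(2.0, 0.3, 5.0, 100_000)     # agrees to several digits
```

Each Euler step multiplies x by the constant factor (1 + k·dt), so the numerical scheme is itself a geometric progression that approaches the exponential as the step size shrinks.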

Difference equation

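The difference equation

$$x_t = a \cdot x_{t-1}$$

has solution

$$x_t = x_0 \cdot a^t,$$

showing that x experiences exponential growth. Here a is a constant growth factor per step.

Other growth rates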

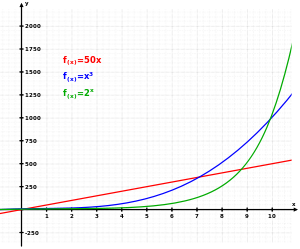

In the long run, exponential growth of any kind will overtake linear growth of any kind (the basis of the Malthusian catastrophe) as well as any polynomial growth, i.e., for all α:

$$\lim_{t \to \infty} \frac{t^{\alpha}}{a e^{t}} = 0.$$

Growth rates may also be faster than exponential.
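The claim that exponential growth eventually overtakes any polynomial can be checked numerically by evaluating the ratio $t^{\alpha} / e^{t}$ for increasing t. This is a minimal sketch; the ratio is computed in log space to avoid overflow, and α = 10 is an arbitrary choice for illustration.

```python
import math


def poly_over_exp(t: float, alpha: float) -> float:
    """Return t**alpha / e**t for t > 0, computed as exp(alpha * ln t - t)."""
    return math.exp(alpha * math.log(t) - t)


for t in (10, 100, 1000):
    print(t, poly_over_exp(t, alpha=10))
```

For α = 10 the polynomial is still far ahead at t = 10, but the ratio has collapsed to a tiny number by t = 100 and underflows to zero in floating point by t = 1000.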

In the above differential equation, if k < 0, then the quantity experiences exponential decay.

Limitations of models

Exponential growth models of physical phenomena only apply within limited regions, as unbounded growth is not physically realistic. Although growth may initially be exponential, the modelled phenomena will eventually enter a region in which previously ignored negative feedback factors become significant (leading to a logistic growth model) or other underlying assumptions of the exponential growth model, such as continuity or instantaneous feedback, break down.

Exponential stories

Rice on a chessboard

According to an old legend, vizier Sissa Ben Dahir presented an Indian king, Sharim, with a beautiful, hand-made chessboard. The king asked what he would like in return for his gift and the courtier surprised the king by asking for one grain of rice on the first square, two grains on the second, four grains on the third etc. The king readily agreed and asked for the rice to be brought. All went well at first, but the requirement for $2^{n-1}$ grains on the nth square demanded over a million grains on the 21st square, more than a million million (a trillion) on the 41st and there simply was not enough rice in the whole world for the final squares. (From Swirski, 2006)[5]

For a variation of this, see second half of the chessboard, which refers to the point where an exponentially growing factor begins to have a significant economic impact on an organization's overall business strategy.
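The figures in the legend can be verified with exact integer arithmetic, taking $2^{n-1}$ grains on the nth square. The function names below are illustrative.

```python
def grains_on_square(n: int) -> int:
    """Grains on the nth square (n = 1 for the first square)."""
    return 2 ** (n - 1)


def total_grains(squares: int = 64) -> int:
    """Sum of the geometric series 1 + 2 + 4 + ... over the given squares."""
    return 2 ** squares - 1


print(grains_on_square(21))  # 1048576, just over a million
print(grains_on_square(41))  # 1099511627776, just over a million million
print(total_grains())        # 18446744073709551615 for the full board
```

The total for the whole board is one grain short of twice the amount on the last square alone, a general property of doubling sequences.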

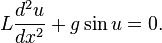

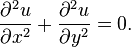
