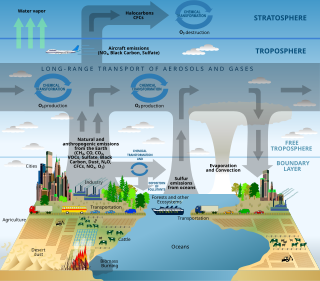

Example of scientific modelling. A schematic of chemical and transport processes related to atmospheric composition.

Scientific modelling is a scientific activity, the aim of which is to make a particular part or feature of the world easier to understand, define, quantify, visualize, or simulate by referencing it to existing and usually commonly accepted knowledge. It requires selecting and identifying relevant aspects of a situation in the real world and then using different types of models for different aims, such as conceptual models to better understand, operational models to operationalize, mathematical models to quantify, and graphical models to visualize the subject. Modelling is an essential and inseparable part of many scientific disciplines, each of which has its own ideas about specific types of modelling.[1][2]

Scientific modelling is also receiving increasing attention[3] in fields such as science education, philosophy of science, systems theory, and knowledge visualization. There is a growing collection of methods, techniques, and meta-theory about all kinds of specialized scientific modelling.

Overview

A scientific model seeks to represent empirical objects, phenomena, and physical processes in a logical and objective way. All models are simulacra, that is, simplified reflections of reality that, despite being approximations, can be extremely useful.[4] Building and disputing models is fundamental to the scientific enterprise. Complete and true representation may be impossible, but scientific debate often concerns which is the better model for a given task, e.g., which is the more accurate climate model for seasonal forecasting.[5]

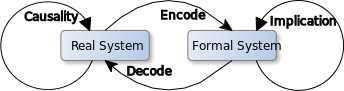

Attempts to formalize the principles of the empirical sciences use an interpretation to model reality, in the same way logicians axiomatize the principles of logic. The aim of these attempts is to construct a formal system that will not produce theoretical consequences that are contrary to what is found in reality. Predictions or other statements drawn from such a formal system mirror or map the real world only insofar as these scientific models are true.[6][7]

For the scientist, a model is also a way of amplifying human thought processes.[8] For instance, models rendered in software allow scientists to leverage computational power to simulate, visualize, manipulate, and gain intuition about the entity, phenomenon, or process being represented. Such computer models are in silico. Other types of scientific models are in vivo (living models, such as laboratory rats) and in vitro (in glassware, such as tissue culture).[9]

Basics of scientific modelling

Modelling as a substitute for direct measurement and experimentation

Models are typically used when it is either impossible or impractical to create experimental conditions in which scientists can directly measure outcomes. Direct measurement of outcomes under controlled conditions (see Scientific method) will always be more reliable than modelled estimates of outcomes.

Within modelling and simulation, a model is a task-driven, purposeful simplification and abstraction of a perception of reality, shaped by physical, legal, and cognitive constraints.[10] It is task-driven because a model is captured with a certain question or task in mind. Simplification leaves out all known and observed entities, and their relations, that are not important for the task. Abstraction aggregates information that is important but not needed in the same detail as the object of interest. Both activities, simplification and abstraction, are done purposefully. However, they are based on a perception of reality, and this perception is already a model in itself, as it comes with a physical constraint. There are also legal constraints on what we are able to observe with our current tools and methods, and cognitive constraints that limit what we are able to explain with our current theories. This model comprises the concepts, their behavior, and their relations in formal form and is often referred to as a conceptual model. In order to execute the model, it needs to be implemented as a computer simulation. This requires more choices, such as numerical approximations or the use of heuristics.[11] Despite all these epistemological and computational constraints, simulation has been recognized as the third pillar of scientific methods: theory building, simulation, and experimentation.[12]
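Implementing a conceptual model as an executable simulation can be illustrated with a minimal sketch. It assumes a hypothetical conceptual model of exponential decay, dy/dt = -k·y, whose exact solution y0·exp(-k·t) is known. The sketch implements that model with the forward Euler method. The function names and parameter values are illustrative only. Running the same conceptual model with different time steps shows how a numerical-approximation choice changes the simulated outcome.

```python
import math


def euler_decay(y0: float, k: float, t_end: float, dt: float) -> float:
    """Forward-Euler implementation of the conceptual model dy/dt = -k * y."""
    steps = round(t_end / dt)
    y = y0
    for _ in range(steps):
        y += dt * (-k * y)  # one explicit Euler step
    return y


def exact_decay(y0: float, k: float, t: float) -> float:
    """Closed-form solution of the same conceptual model, for comparison."""
    return y0 * math.exp(-k * t)


if __name__ == "__main__":
    exact = exact_decay(1.0, 1.0, 2.0)
    for dt in (0.5, 0.1, 0.01):  # three candidate implementation choices
        approx = euler_decay(1.0, 1.0, 2.0, dt)
        print(f"dt={dt:<5} simulated={approx:.5f} exact={exact:.5f} "
              f"error={abs(approx - exact):.5f}")
```

For this problem the error shrinks roughly in proportion to `dt`, as expected for a first-order method such as forward Euler. A smaller step costs more computation. The conceptual model alone does not settle this kind of implementation trade-off.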

Simulation

A simulation is the implementation of a model. A steady state simulation provides information about the system at a specific instant in time (usually at equilibrium, if such a state exists). A dynamic simulation provides information over time. A simulation brings a model to life and shows how a particular object or phenomenon will behave. Such a simulation can be useful for testing, analysis, or training in those cases where real-world systems or concepts can be represented by models.[13]

Structure

Structure is a fundamental and sometimes intangible notion covering the recognition, observation, nature, and stability of patterns and relationships of entities. From a child's verbal description of a snowflake, to the detailed scientific analysis of the properties of magnetic fields, the concept of structure is an essential foundation of nearly every mode of inquiry and discovery in science, philosophy, and art.[14]

Systems

A system is a set of interacting or interdependent entities, real or abstract, forming an integrated whole. In general, a system is a construct or collection of different elements that together can produce results not obtainable by the elements alone.[15] The concept of an 'integrated whole' can also be stated in terms of a system embodying a set of relationships which are differentiated from relationships of the set to other elements, and from relationships between an element of the set and elements not a part of the relational regime. There are two types of system models: (1) discrete, in which the variables change instantaneously at separate points in time, and (2) continuous, in which the state variables change continuously with respect to time.[16]

Generating a model

Modelling is the process of generating a model as a conceptual representation of some phenomenon. Typically a model will deal with only some aspects of the phenomenon in question, and two models of the same phenomenon may be essentially different, that is to say, the differences between them comprise more than just a simple renaming of components. Such differences may be due to differing requirements of the model's end users, to conceptual or aesthetic differences among the modellers, or to contingent decisions made during the modelling process. Considerations that may influence the structure of a model include the modeller's preference for a reduced ontology, preferences regarding statistical versus deterministic models, discrete versus continuous time, etc. In any case, users of a model need to understand the assumptions made that are pertinent to its validity for a given use.
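Two models of the same phenomenon can differ in more than naming. A hypothetical example shows this: radioactive decay modelled once deterministically in continuous time, and once statistically in discrete time steps. The decay rate, step size, seed, and function names are illustrative assumptions, not taken from the source.

```python
import math
import random


def deterministic_decay(n0: float, rate: float, t: float) -> float:
    """Deterministic, continuous-time model: N(t) = N0 * exp(-rate * t)."""
    return n0 * math.exp(-rate * t)


def stochastic_decay(n0: int, rate: float, dt: float, steps: int, seed: int = 0) -> list[int]:
    """Statistical, discrete-time model of the same process.

    In each step of length dt, every surviving atom decays independently with
    probability 1 - exp(-rate * dt). Returns the survivor count after each step.
    """
    rng = random.Random(seed)  # seeded so that a run can be reproduced
    p_decay = 1.0 - math.exp(-rate * dt)
    n = n0
    counts = [n]
    for _ in range(steps):
        decays = sum(1 for _ in range(n) if rng.random() < p_decay)
        n -= decays
        counts.append(n)
    return counts


if __name__ == "__main__":
    rate, dt = 0.1, 1.0  # illustrative values
    for step, n in enumerate(stochastic_decay(1000, rate, dt, steps=10, seed=42)):
        expected = deterministic_decay(1000, rate, step * dt)
        print(f"t={step * dt:4.1f}  stochastic={n:4d}  deterministic={expected:7.1f}")
```

At each step boundary, the expected survivor count of the statistical model equals the deterministic value. However, only the statistical model produces run-to-run variation. That difference matters to a user who needs the spread of outcomes rather than only their mean.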

Building a model requires abstraction. Assumptions are used in modelling in order to specify the domain of application of the model. For example, the special theory of relativity assumes an inertial frame of reference. This assumption was contextualized and further explained by the general theory of relativity. A model makes accurate predictions when its assumptions are valid, and may well fail to make accurate predictions when its assumptions do not hold. Such assumptions are often the point at which older theories are superseded by new ones (the general theory of relativity works in non-inertial reference frames as well).

The term "assumption" is actually broader than its standard use, etymologically speaking. The Oxford English Dictionary (OED) and online Wiktionary indicate its Latin source as assumere ("accept, to take to oneself, adopt, usurp"), which is a compound of ad- ("to, towards, at") and sumere ("to take"). The root survives, with shifted meanings, in the Italian sumere and Spanish sumir. In the OED, "assume" has the senses of (i) "investing oneself with (an attribute)," (ii) "to undertake" (especially in Law), (iii) "to take to oneself in appearance only, to pretend to possess," and (iv) "to suppose a thing to be." Thus, "assumption" carries associations beyond the contemporary standard sense of "that which is assumed or taken for granted; a supposition, postulate," and deserves a broader analysis in the philosophy of science.[citation needed]

Evaluating a model

A model is evaluated first and foremost by its consistency with empirical data; any model inconsistent with reproducible observations must be modified or rejected. One way to modify the model is by restricting the domain over which it is credited with having high validity. A case in point is Newtonian physics, which is highly useful except for the very small, the very fast, and the very massive phenomena of the universe. However, a fit to empirical data alone is not sufficient for a model to be accepted as valid. Other factors important in evaluating a model include:[citation needed]

- Ability to explain past observations

- Ability to predict future observations

- Cost of use, especially in combination with other models

- Refutability, enabling estimation of the degree of confidence in the model

- Simplicity, or even aesthetic appeal
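The Newtonian case above can be made quantitative. A model's domain of validity can be restricted according to its error against a more complete model. The sketch compares Newtonian kinetic energy, (1/2)mv², with the relativistic expression (gamma - 1)mc² for a particle moving at speed v = βc. The 1% tolerance used to decide where the Newtonian model is "adequate" is an arbitrary, illustrative assumption, as are the function names.

```python
import math


def newtonian_ke(beta: float) -> float:
    """Newtonian kinetic energy in units of m*c**2, for speed v = beta * c."""
    return 0.5 * beta ** 2


def relativistic_ke(beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) in units of m*c**2.

    gamma - 1 = (1 - beta**2) ** -0.5 - 1 is evaluated as
    expm1(-0.5 * log1p(-beta**2)) to avoid cancellation at low speeds.
    """
    return math.expm1(-0.5 * math.log1p(-beta ** 2))


def relative_error(beta: float) -> float:
    """Fractional error of the Newtonian model relative to the relativistic one."""
    exact = relativistic_ke(beta)
    return (exact - newtonian_ke(beta)) / exact


def newtonian_is_adequate(beta: float, tolerance: float = 0.01) -> bool:
    """Restricted domain of validity: accept the Newtonian model only where its
    error stays below the (illustrative) tolerance."""
    return relative_error(beta) < tolerance


if __name__ == "__main__":
    for beta in (0.01, 0.1, 0.5, 0.9):
        print(f"v = {beta:4.2f} c  error = {relative_error(beta):.2%}  "
              f"Newtonian adequate: {newtonian_is_adequate(beta)}")
```

With the 1% tolerance, the Newtonian model is accepted at 0.01c and 0.1c but rejected at 0.5c and 0.9c. At low speeds its error is approximately (3/4)β², which is why it remains highly useful at everyday velocities.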

Visualization

Visualization is any technique for creating images, diagrams, or animations to communicate a message. Visualization through visual imagery has been an effective way to communicate both abstract and concrete ideas since the dawn of man. Examples from history include cave paintings, Egyptian hieroglyphs, Greek geometry, and Leonardo da Vinci's revolutionary methods of technical drawing for engineering and scientific purposes.

Space mapping

Space mapping refers to a methodology that employs a "quasi-global" modelling formulation to link companion "coarse" (ideal or low-fidelity) with "fine" (practical or high-fidelity) models of different complexities. In engineering optimization, space mapping aligns (maps) a very fast coarse model with its related expensive-to-compute fine model so as to avoid direct expensive optimization of the fine model. The alignment process iteratively refines a "mapped" coarse model (surrogate model).
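The iterative alignment can be sketched in one dimension. This sketch loosely follows the "aggressive" space-mapping variant from the engineering-optimization literature, in four steps:

- Optimize the coarse model once.
- Evaluate the fine model at the current design.
- Use parameter extraction to find the coarse-model input that reproduces the fine response.
- Correct the fine-model design with a quasi-Newton step (a Broyden update, which in one dimension reduces to a secant step).

Both models and all numbers are hypothetical. The fine model is deliberately built as the coarse model with a scaled and shifted input. A real fine model would be an expensive simulation whose mapping is unknown.

```python
def coarse(z: float) -> float:
    """Cheap, low-fidelity model response (hypothetical; increasing in z)."""
    return z ** 3 + z


def fine(x: float) -> float:
    """'Expensive', high-fidelity model (hypothetical): the coarse model
    evaluated at a misaligned input, so fine(x) == coarse(1.05 * x + 0.2)."""
    return coarse(1.05 * x + 0.2)


def solve_increasing(func, target: float, lo: float = -10.0, hi: float = 10.0,
                     tol: float = 1e-12) -> float:
    """Bisection for func(z) == target, assuming func is increasing on [lo, hi]."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if func(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def aggressive_space_mapping(target: float, max_iter: int = 20,
                             tol: float = 1e-9) -> tuple[float, int]:
    """Return (fine-model design, number of fine-model evaluations)."""
    # 1. Optimize the cheap coarse model: here, solve coarse(z) == target.
    x_c_star = solve_increasing(coarse, target)
    x_f = x_c_star          # initial fine-model design
    jac = 1.0               # initial estimate of the mapping's derivative
    f_prev, step = None, 0.0
    fine_evals = 0
    for _ in range(max_iter):
        r_f = fine(x_f)     # the only expensive call per iteration
        fine_evals += 1
        # 2. Parameter extraction: coarse input that reproduces the fine response.
        x_c = solve_increasing(coarse, r_f)
        f = x_c - x_c_star  # residual misalignment between the two models
        if abs(f) < tol:
            break
        if f_prev is not None:
            jac = (f - f_prev) / step  # 1-D Broyden update, i.e. a secant step
        # 3. Quasi-Newton correction of the fine-model design.
        step = -f / jac
        x_f += step
        f_prev = f
    return x_f, fine_evals


if __name__ == "__main__":
    design, n_fine = aggressive_space_mapping(target=10.0)
    print(f"fine-model design x = {design:.6f} after {n_fine} fine-model evaluations")
    print(f"fine response = {fine(design):.6f} (target 10.0)")
```

In this toy case the loop reaches the fine-model solution x = 1.8/1.05 ≈ 1.714286 after only a few fine-model evaluations. Meanwhile, the cheap coarse model is evaluated many times inside the bisection searches. That imbalance is the point of the method when fine-model evaluations are costly.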

Applications

Modelling and simulation

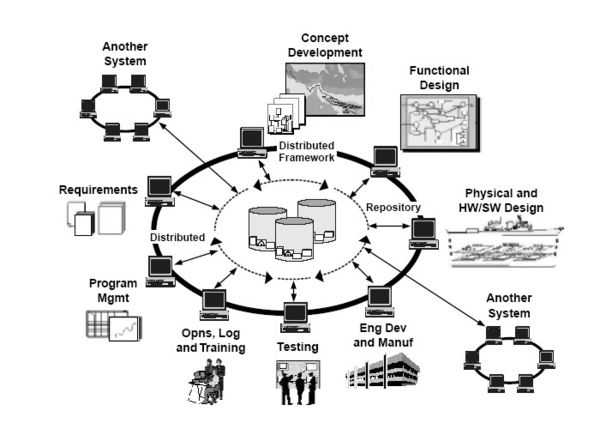

One application of scientific modelling is the field of modelling and simulation, generally referred to as "M&S". M&S has a spectrum of applications which range from concept development and analysis, through experimentation, measurement and verification, to disposal analysis. Projects and programs may use hundreds of different simulations, simulators and model analysis tools.

Example of the integrated use of Modelling and Simulation in Defence life cycle management. The modelling and simulation in this image is represented in the center of the image with the three containers.[13]

The figure shows how Modelling and Simulation is used as a central part of an integrated program in a Defence capability development process.[13]

Model-based learning in education

Model-based learning in education, particularly in relation to learning science, involves students creating models for scientific concepts in order to:[17]

- Gain insight into the scientific idea(s)

- Acquire deeper understanding of the subject through visualization of the model

- Improve student engagement in the course

Models used for model-based learning can take several forms, including:

- Physical macrocosms

- Representational systems

- Syntactic models

- Emergent models

"Model-based learning entails determining target models and a learning pathway that provide realistic chances of understanding."[19] Model making can also incorporate blended learning strategies by using web-based tools and simulators, thereby allowing students to:

- Familiarize themselves with online or digital resources

- Create different models with various virtual materials at little or no cost

- Practice model making activity any time and any place

- Refine existing models

The teacher's role in the overall teaching and learning process is primarily that of a facilitator and arranger of the learning experience. The teacher assigns the students a model-making activity for a particular concept and provides relevant information or support for the activity. For virtual model-making activities, the teacher can also explain how to use the digital tool and provide troubleshooting support if problems arise while using it. The teacher can also arrange group discussions among the students and provide a platform for them to share their observations and the knowledge gained from the model-making activity.

Evaluation of model-based learning could include the use of rubrics that assess the student's ingenuity and creativity in constructing the model, as well as the student's overall classroom participation in relation to the knowledge constructed through the activity.

It is important, however, to give due consideration to the following for successful model-based learning to occur:

- Use of the right tool at the right time for a particular concept

- Provision within the educational setup for model-making activity, e.g., a computer room with internet access or with software installed to access a simulator or digital tool