From the author: This paper appears in

Toward a Science of Consciousness II: The Second Tucson Discussions and Debates

(S. Hameroff, A. Kaszniak, and A. Scott, eds), published with MIT Press

in 1998. It is a transcript of my talk at the second Tucson conference

in April 1996, lightly edited to include the contents of overheads and

to exclude some diversions with a consciousness meter. A more in-depth

argument for some of the claims in this paper can be found in Chapter 6

of my book The Conscious Mind (Chalmers, 1996).

I’m going to talk about one aspect of the role that neuroscience

plays in the search for a theory of consciousness. Whether or not

neuroscience can solve all the problems of consciousness single-handedly,

there is no question that it has a major role to play. We’ve

seen at this conference that there’s a vast amount of progress in

neurobiological research, and that much of it is clearly bearing on the

problems of consciousness. But the conceptual foundations of this sort

of research are only beginning to be laid. So I will look at some of the

things that are going on from a philosopher’s perspective and will see

if there’s anything helpful to say about these foundations.

We’ve all been hearing a lot about the "neural correlate of

consciousness". This phrase is intended to refer to the neural system or

systems primarily associated with conscious experience. I gather that

the catchword of the day is "NCC". We all have an NCC inside our head,

we just have to find out what it is. In recent years there have been

quite a few proposals about the identity of the NCC. One of the most

famous proposals is Crick and Koch’s suggestion concerning 40-hertz

oscillations. That proposal has since faded away a little but there are

all sorts of other suggestions out there. It’s almost got to a point

where it’s reminiscent of particle physics, where they have something

like 236 particles and people talk about the "particle zoo". In the

study of consciousness, one might talk about the "neural correlate zoo".

There have also been a number of related proposals about what we might

call the "cognitive correlate of consciousness" (CCC?).

A small list of suggestions that have been put forward might include:

- 40-hertz oscillations in the cerebral cortex (Crick and Koch 1990)

- Intralaminar nucleus in the thalamus (Bogen 1995)

- Re-entrant loops in thalamocortical systems (Edelman 1989)

- 40-hertz rhythmic activity in thalamocortical systems (Llinas et al 1994)

- Nucleus reticularis (Taylor and Alavi 1995)

- Extended reticular-thalamic activation system (Newman and Baars 1993)

- Anterior cingulate system (Cotterill 1994)

- Neural assemblies bound by NMDA (Flohr 1995)

- Temporally-extended neural activity (Libet 1994)

- Backprojections to lower cortical areas (Cauller and Kulics 1991)

- Neurons in extrastriate visual cortex projecting to prefrontal areas (Crick and Koch 1995)

- Neural activity in area V5/MT (Tootell et al 1995)

- Certain neurons in the superior temporal sulcus (Logothetis and Schall 1989)

- Neuronal gestalts in an epicenter (Greenfield 1995)

- Outputs of a comparator system in the hippocampus (Gray 1995)

- Quantum coherence in microtubules (Hameroff 1994)

- Global workspace (Baars 1988)

- Activated semantic memories (Hardcastle 1995)

- High-quality representations (Farah 1994)

- Selector inputs to action systems (Shallice 1988)

There are a few intriguing commonalities among the proposals on this

list. A number of them give a central role to interactions between the

thalamus and the cortex, for example. All the same, the sheer number and

diversity of the proposals can be a little overwhelming. I propose to

step back a little and try to make sense of all this activity by asking

some foundational questions.

A central question is this: how is it, in fact, that one can search

for the neural correlate of consciousness? As we all know, there are

problems in measuring consciousness. It’s not a directly and

straightforwardly observable phenomenon. It would be a lot easier if we

had a way of getting at consciousness directly; if we had, for example, a

consciousness meter.

If we had a consciousness meter, searching for the NCC would be

straightforward. We’d wave the consciousness meter and measure a

subject’s consciousness directly. At the same time, we’d monitor the

underlying brain processes. After a number of trials, we’d say OK,

such-and-such brain processes are correlated with experiences of various

kinds, so that’s the neural correlate of consciousness.

Alas, we don’t have a consciousness meter, and there seem to be

principled reasons why we can’t have one. Consciousness just isn’t the

sort of thing that can be measured directly. So: What do we do without a

consciousness meter? How can the search go forward? How does all this

experimental research proceed?

I think the answer is this: we get there through principles of

interpretation.

These are principles by which we interpret physical systems to judge

whether or not they have consciousness. We might call these

pre-experimental bridging principles.

These are the criteria that we bring to bear in looking at systems to

say (a) whether or not they are conscious now, and (b) what information

they are conscious of, and what information they are not. We can’t reach

in directly and grab those experiences and "transpersonalize" them into

our own, so we rely on external criteria instead.

That’s a perfectly reasonable thing to do. But in doing this we have

to realize that something interesting is going on. These principles of

interpretation are not themselves experimentally determined or

experimentally tested. In a sense they are pre-experimental assumptions.

Experimental research gives us a lot of information about processing;

then we bring in the bridging principles to interpret the experimental

results, whatever those results may be. They are the principles by which

we make inferences from facts about processing to facts about

consciousness, so they are conceptually prior to the experiments

themselves. We can’t actually refine them experimentally (except perhaps

through first-person experimentation!), because we don’t have any

independent access to the independent variable. Instead, these

principles will be based on some combination of (a) conceptual judgments

about what counts as a conscious process and (b) information gleaned

from our first-person perspective on our own consciousness.

I think we are all stuck in this boat. The point applies whether one

is a reductionist or an anti-reductionist about consciousness. A

hard-line reductionist might put some of these points slightly

differently, but either way, the experimental work is going to require

pre-experimental reasoning to determine the criteria for ascription of

consciousness. Of course such principles are usually left implicit in

empirical research. We don’t usually see papers saying "Here is the

bridging principle, here are the data, and here is what follows." But

it’s useful to make them explicit. The very presence of these principles

has some strong and interesting consequences in the search for the NCC.

In a sense, in relying on these principles we are taking a leap into

the epistemological unknown. Because we don’t measure consciousness

directly, we have to make something of a leap of faith. It may not be a

big leap, but nevertheless it suggests that everyone doing this sort of

work is engaged in philosophical reasoning. Of course one can always

choose to stay on solid ground, talking about the empirical results in a

neutral way; but the price of doing so is that one gains no particular

insight into consciousness. Conversely, as soon as we draw any

conclusions about consciousness, we have gone beyond the information

given, so we need to pay careful attention to the reasoning involved.

So what are these principles of interpretation? The first and by far

the most prevalent such principle is a very straightforward one: it’s a

principle of verbal report. When someone says "Yes, I see that table

now", we infer that they are conscious of the table. When someone says

"Yes, I see red now", we infer that they are having an experience of

red. Of course one might always say "How do you know?" (a philosopher

might suggest that we may be faced with a fully functioning zombie), but

in fact most of us don’t believe that the people around us are zombies,

and in practice we are quite prepared to rely on this principle. As

pre-experimental assumptions go, this is a relatively "safe" one — it

doesn’t require a huge leap of faith — and it is very widely used.

So the principle here is that when information is verbally reported,

it is conscious. One can extend this slightly, as no one believes that an actual verbal report is required for consciousness; we are

conscious of much more than we report on any given occasion. So an

extended principle might say that when information is directly

available for verbal report, it is conscious.

Experimental researchers don’t rely only on these principles of

verbal report and reportability. These principles can be somewhat

limiting when we want to do broader experiments. In particular, we don’t

want to just restrict our studies of consciousness to subjects that

have language. In fact just this morning we saw a beautiful example of

research on consciousness in language-free creatures. I’m referring to

the work of Nikos Logothetis and his colleagues (e.g. Logothetis &

Schall 1989; Leopold & Logothetis 1996). This work uses experiments

on binocular rivalry in monkeys to draw conclusions about the neural

processes associated with consciousness. How do Logothetis et al. manage to draw conclusions about a monkey’s consciousness without

getting any verbal reports? What they do is rely on a monkey’s pressing

bars: if a monkey can be made to press a bar in an appropriate way in

response to a stimulus, we’ll say that that stimulus was consciously

perceived.

The criterion at play seems to require that the information be

available for an arbitrary response. If it turned out that the monkey

could press a bar in response to a red light but couldn’t do anything

else, we would be tempted to say that it wasn’t a case of consciousness

at all, but some sort of subconscious connection. If on the other hand

we find information that is available for response in all sorts of

different ways, then we’ll say that it is conscious. Actually Logothetis

and his colleagues also use some subtler reasoning about similarities

with binocular rivalry in humans to buttress the claim that the monkey

is having the relevant conscious experience, but it is clearly the

response that carries the most weight.

The underlying general principle is something like this: When information is directly available for global control in a cognitive system, then it is conscious. If information is

available for response in many different motor modalities, we will say

that it is conscious, at least in a range of relatively familiar systems

such as humans and primates and so on. This principle squares well with

the previous principle in cases where the capacity for verbal report is

present: availability for verbal report and availability for global

control seem to go together in such cases (report is one of the key

aspects of control, after all, and it is rare to find information that

is reportable but not available more widely). But this principle is also

applicable more widely.
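To make the criterion of "availability for an arbitrary response" concrete, here is a toy sketch of how it might be operationalized in the monkey case. The `ProbeResult` record, the channel names, and the `min_channels` threshold of 3 are hypothetical illustrations, not anything taken from the Logothetis experiments; the text gives no numerical threshold. The only point carried over from the text is that availability through many distinct response channels, rather than a single fixed stimulus-response link, is what does the work.

```python
from dataclasses import dataclass, field


@dataclass
class ProbeResult:
    """Hypothetical record of one stimulus probe in an experiment."""
    stimulus: str
    # Distinct response channels (bar press, saccade, reach, speech, ...)
    # through which the subject was shown to act on the stimulus.
    channels: set = field(default_factory=set)


def counts_as_globally_available(result: ProbeResult, min_channels: int = 3) -> bool:
    """Toy bridging criterion: treat information as globally available
    (and hence, by the bridging principle, conscious) only if it can drive
    responses through several distinct channels. The threshold is an
    arbitrary assumption, not an empirical value."""
    return len(result.channels) >= min_channels


# A single fixed link (red light -> bar press) fails the criterion;
# availability across several response channels passes it.
narrow = ProbeResult("red light", {"bar_press"})
broad = ProbeResult("red light", {"bar_press", "saccade", "reach"})
print(counts_as_globally_available(narrow))  # False
print(counts_as_globally_available(broad))   # True
```

Counting channels is only a crude proxy; the directness and voluntariness refinements discussed below would need further fields.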

A correlation between consciousness and global availability (for

short) seems to fit the first-person evidence — the evidence gleaned

from our own conscious experience — quite well. When information is

present in my consciousness, it is generally reportable, and it can

generally be brought to bear in the control of behavior in all sorts of

different ways. I can talk about it, I can point in the general

direction of a stimulus, I can press bars, and so on. Conversely, when

we find information that is directly available in this way for report

and other aspects of control, it is generally conscious information. I

think one can bear this out by consideration of cases.

There are some interesting puzzle cases to consider, such as the case of blindsight, where one has some kind of availability for control but arguably no conscious experience.

Those cases might best be handled by invoking the directness criterion:

insofar as the information here is available for report and other

control processes at all, it is available only indirectly, by comparison

to the direct and automatic availability in standard cases. One might

also stipulate that it is availability for voluntary control that is relevant, to deal with certain cases of involuntary unconscious

response, although that is a complex issue. I discuss a number of puzzle

cases in more detail elsewhere (Chalmers 1996, forthcoming), where I

also give a much more detailed defense of the idea that something like

global availability is the key pre-empirical criterion for the

ascription of consciousness.

But this remains at best a first-order approximation of the

functional criteria that come into play. I’m less concerned today to get

all the fine details right than to work with the idea that some such

functional criterion is required and indeed is implicit in all the

empirical research on the neural correlate of consciousness. If you

disagree with the criterion I’ve suggested here, presumably because you

can think of counterexamples, you may want to use those

counterexamples to refine it or to come up with a better criterion of

your own. But the point I want to focus on here is that in the very act

of experimentally distinguishing conscious from unconscious processes,

some such criterion is always at play.

So the question I want to ask is: if something like this is right, then what follows? That is, if some such bridging principles are

implicit in the methodology of the search for the NCC, then what are the

consequences? I will use global availability as my central functional

criterion in the discussion that follows, but many of the points should

generalize.

The first thing one can do is produce what philosophers might call a rational reconstruction of the search for the neural correlate of consciousness. With a

rational reconstruction we can say, maybe things don’t work exactly like

this in practice, but the rational underpinnings of the process have

something like this form. That is, if one were to try to justify the conclusions one has reached as well as one can, one’s justification

would follow the shape of the rational reconstruction. In this case, a

rational reconstruction might look something like this:

(1) Consciousness <-> global availability (bridging principle)

(2) Global availability <-> neural process N (empirical work)

so

(3) Consciousness <-> neural process N (conclusion).

According to this reconstruction, one implicitly embraces some sort

of pre-experimental bridging principle that one finds plausible on

independent grounds, such as conceptual or phenomenological grounds.

Then one does the empirical research. Instead of measuring consciousness

directly, we detect the functional property. One sees that when this

functional property (e.g. global availability) is present, it is

correlated with a certain neural process (e.g. 40-hertz oscillations).

Combining the pre-empirical premise and the empirical result, we arrive

at the conclusion that this neural process is a candidate for the NCC.
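The shape of this reconstruction can be sketched in a few lines of Python. This is an illustrative toy, not a description of any actual analysis pipeline. `reconstruction_is_valid` checks by brute force that premises (1) and (2) jointly entail (3), and `perfect_correlate` stands in for the empirical step, testing whether a candidate neural marker tracks global availability on every trial. Consciousness itself never appears as a measured field; only availability does, which is exactly the role the bridging principle plays. The trial fields, marker names, and values are all made up.

```python
from itertools import product


def iff(a: bool, b: bool) -> bool:
    """Material biconditional: true when a and b have the same truth value."""
    return a == b


def reconstruction_is_valid() -> bool:
    """Brute-force check that (C <-> A) and (A <-> N) entail (C <-> N),
    where C = conscious, A = globally available, N = neural process present."""
    for c, a, n in product([False, True], repeat=3):
        if iff(c, a) and iff(a, n) and not iff(c, n):
            return False  # a counter-model would make the inference invalid
    return True


def perfect_correlate(trials, marker):
    """Premise (2): does the marker co-occur with global availability on
    every trial? Consciousness is never measured directly, only availability."""
    return all(t["available"] == t[marker] for t in trials)


# Hypothetical trial records: availability is assessed via the bridging
# criterion (report, bar press, ...); the neural markers are measured.
trials = [
    {"available": True, "osc_40hz": True, "v1_active": True},
    {"available": False, "osc_40hz": False, "v1_active": True},
    {"available": True, "osc_40hz": True, "v1_active": True},
]

print(reconstruction_is_valid())               # True
print(perfect_correlate(trials, "osc_40hz"))   # True: candidate via (1) + (2)
print(perfect_correlate(trials, "v1_active"))  # False: active without availability
```

A marker that passes this check is still only a candidate: the correlation holds across the tested conditions and nothing more.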

Of course it doesn’t work nearly so simply in practice. The two

stages are very intertwined; our pre-experimental principles may

themselves be refined as experimental research goes along. Nevertheless I

think one can make a separation, at least at the rational level, into

pre-empirical and experimental components, for the sake of analysis. So

with this sort of rational reconstruction in hand, what sort of

conclusions follow? There are about six consequences that I want to draw

out here.

(1) The first conclusion is a characterization of the neural

correlates of consciousness. If the NCC is arrived at through this sort

of methodology, then whatever it turns out to be, it will be a mechanism of global availability. The presence of the NCC wherever global availability is present suggests that it is a mechanism that

subserves the process of global availability in the brain. The only alternative that we have to worry about is that it might be a symptom rather than a mechanism of global availability; but that possibility ought to be addressable in

principle by dissociation studies, by lesioning, and so on. If a

process is a mere symptom of availability, we ought to be able to

empirically dissociate it from the process of global availability while

leaving the latter intact. The resulting data would suggest to us that

consciousness can be present even when the neural process in question is

not, thus indicating that it wasn’t a perfect correlate of

consciousness after all.

(A related line of reasoning supports the idea that a true NCC must be a mechanism of direct availability for global control. Mechanisms of indirect availability

will in principle be dissociable from the empirical evidence for

consciousness, for example by directly stimulating the mechanisms of

direct availability. The indirect mechanisms will be "screened off" by

the direct mechanisms in much the same way as the retina is screened off

as an NCC by the visual cortex.)
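The dissociation logic can be sketched in the same toy style. Here a candidate marker counts as dissociated if any condition (a lesion, or direct stimulation of a later stage) shows global availability without the marker; such a marker is at best a symptom or an indirect mechanism. The sketch checks only that one direction, which is the one the argument needs. The condition names and values are invented for illustration and merely echo the retina example in the text.

```python
def dissociated(trials, marker):
    """True if some trial shows global availability without the marker,
    i.e. the marker is not necessary for availability."""
    return any(t["available"] and not t[marker] for t in trials)


def classify_candidate(trials, marker):
    if dissociated(trials, marker):
        return "symptom or indirect mechanism"
    # Absence of a dissociation so far; further studies could still find one.
    return "candidate mechanism of direct availability"


# Hypothetical conditions echoing the retina example: directly stimulating
# visual cortex yields availability while the retina is silent.
trials = [
    {"condition": "normal viewing", "available": True, "retina": True, "visual_cortex": True},
    {"condition": "cortical stimulation", "available": True, "retina": False, "visual_cortex": True},
    {"condition": "no stimulus", "available": False, "retina": False, "visual_cortex": False},
]

print(classify_candidate(trials, "retina"))
print(classify_candidate(trials, "visual_cortex"))
```

A marker that survives every test so far remains only a candidate, since a future experiment could still dissociate it.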

In fact, if one looks at the various proposals that are out there,

this template seems to fit them pretty well. For example, the 40-hertz

oscillations discussed by Crick and Koch were put forward precisely

because of the role they might play in binding and integrating

information into working memory, and working memory is of course a

central mechanism whereby information is made available for global

control in a cognitive system. Similarly, it is plausible that Libet’s

extended neural activity is relevant precisely because the temporal

extendedness of activity is what gives certain information the capacity

to dominate later processes that lead to control. Baars’ global

workspace is a particularly explicit proposal of a mechanism in this

direction; it is put forward explicitly as a mechanism whereby

information can be globally disseminated. All of these mechanisms and

many of the others seem to be candidates for mechanisms of global

availability in the brain.

(2) This reconstruction suggests that a full story about the neural

processes associated with consciousness will do two things. Firstly,

it will explain global availability in the brain. Once we know

all about the relevant neural processes, we will know precisely how

information is made directly available for global control in the brain,

and this will be an explanation in the full sense. Global availability

is a functional property, and as always the problem of explaining the

performance of a function is a problem to which mechanistic explanation

is well-suited. So we can be confident that in a century or two, global

availability will be straightforwardly explained. Secondly, this

explanation of availability will do something else: it will isolate the processes that underlie consciousness itself. If the bridging

principle is granted, then mechanisms of availability will automatically

be correlates of phenomenology in the full sense.

Now, I don’t think this gives us a full explanation of consciousness. One can always raise the question of why it is that these

processes of availability should give rise to consciousness in the

first place. As yet we have no explanation of why this is, and it may

well be that the full details concerning the processes of availability

still won’t answer this question. Certainly, nothing in the standard

methodology I have outlined answers the question; that methodology

assumes a relation between availability and consciousness, and therefore does nothing to explain it. The relationship between the two is instead taken as something of a

primitive. So the hard problem still remains. But who knows: somewhere

along the line we may be led to the relevant insights that show why the

link is there, and the hard problem may then be solved. In any case,

whether or not we have solved the hard problem, we may nevertheless have isolated the basis of consciousness in the brain. We just have to keep in mind the distinction between correlation and explanation.

(3) Given this paradigm, it is likely that there are going to be many

different neural correlates of consciousness. I take it that this is

not going to surprise many people; but the rational reconstruction gives

us a way of seeing just why such a multiplicity of correlates should

exist. There will be many neural correlates of consciousness because

there may well be many different mechanisms of global availability.

There will be mechanisms of availability in different modalities: the

mechanisms of visual availability may be quite different from the

mechanisms of auditory availability, for example. (Of course they may be the same, in that we could find a later area that integrates and

disseminates all this information, but that’s an open question.) There

will also be mechanisms at different stages of the processing path

whereby information is made globally available: early mechanisms and

later ones. So these may all be candidates for the NCC. And there will

be mechanisms at many different levels of description: for example,

40-hertz oscillations may well be redescribed as high-quality

representations, or as part of a global workspace, at a different level

of description. So it may turn out that a number of the animals in the

zoo, so to speak, can co-exist, because they are compatible in one of

these ways.

I won’t speculate much further on just what the neural correlates of consciousness are.

No doubt some of the ideas in the initial list will prove to be

entirely off-track, while some of the others will prove closer to the

mark. As we philosophers like to say, humbly, that’s an empirical

question. But I hope the conceptual issues are becoming clearer.

(4) This way of thinking about things allows one to make sense of an idea that is sometimes floated: that of a consciousness module.

Sometimes this notion is disparaged; sometimes it is embraced. But this

picture of the methodology in the search for an NCC suggests that it is

at least possible that there could turn out to be such a module. What

would it take? It would require that there turns out to be some sort of

functionally localizable, internally integrated area, through which all

global availability runs. It needn’t be anatomically localizable, but to

qualify as a module it would need to be localizable in some broader

sense. For example, the parts of the module would have to have

high-bandwidth communication among themselves, compared to the

relatively low-bandwidth communication that they have with other areas.

Such a thing could turn out to exist. It doesn’t strike me as especially likely that things will turn out this way; it seems just as likely that there

will be multiple independent mechanisms of global availability in the

brain, scattered around without any special degree of mutual

integration. If that’s so, we will likely say that there doesn’t turn

out to be a consciousness module after all. But that’s another one of

those empirical questions.
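The bandwidth condition for a module can be given a rough quantitative form. The sketch below is one hypothetical way to operationalize it: a candidate set of areas qualifies if its mean internal link strength is at least `ratio` times its mean link strength to areas outside the set. The area labels, link strengths, and default ratio of 2 are all made up; nothing in the text fixes them. The check is functional rather than anatomical, in line with the point that a module need not be anatomically localizable.

```python
from itertools import combinations
from statistics import mean


def link(bandwidth, a, b):
    """Symmetric lookup of the communication bandwidth between two areas."""
    return bandwidth.get((a, b), bandwidth.get((b, a), 0.0))


def is_module(bandwidth, areas, candidate, ratio=2.0):
    """Toy modularity test: mean internal bandwidth must be at least
    `ratio` times the mean bandwidth to areas outside the candidate set."""
    members = sorted(candidate)
    outsiders = [x for x in areas if x not in candidate]
    internal = [link(bandwidth, a, b) for a, b in combinations(members, 2)]
    external = [link(bandwidth, a, b) for a in members for b in outsiders]
    if not internal or not external:
        return False  # need at least two members and at least one outsider
    return mean(internal) >= ratio * mean(external)


# Hypothetical functional areas and link strengths (arbitrary units).
areas = ["X", "Y", "Z", "W"]
bandwidth = {
    ("X", "Y"): 10, ("X", "Z"): 9, ("Y", "Z"): 8,
    ("X", "W"): 1, ("Y", "W"): 2, ("Z", "W"): 1,
}

print(is_module(bandwidth, areas, {"X", "Y", "Z"}))  # True
print(is_module(bandwidth, areas, {"X", "W"}))       # False
```

If global availability instead ran through scattered areas with no such bandwidth contrast, no candidate set would pass, which corresponds to the "no consciousness module" outcome.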

If something like this does turn out to exist in the brain, it would

resemble Baars’ conception of a global workspace: a functional area

responsible for the integration of information in the brain and for its

dissemination to multiple nonconscious specialized processes. In fact I

should acknowledge that many of the ideas I’m putting forward here are

compatible with things that Baars has been saying for years about the

role of global availability in the study of consciousness. Indeed, this

way of looking at things suggests that some of his ideas are almost

forced on one by the methodology. The special epistemological role of

global availability helps explain why the idea of a global workspace

provides a useful way of thinking about almost any empirical proposal

about consciousness. If NCCs are identified as such precisely because

of their role in global control, then at least on a first approximation,

we should expect the global workspace idea to be a natural fit.

(5) We can also apply this picture to a question that has been

discussed frequently at this conference: are the neural correlates of visual consciousness to be found in V1, in the extrastriate visual cortex, or

elsewhere? If our picture of the methodology is correct, then the answer

will presumably depend on which visual area is most directly implicated

in global availability.

Crick and Koch have suggested that the visual NCC is not to be found

within V1, as V1 does not contain neurons that project to the prefrontal

cortex. This reasoning has been criticized by Ned Block for conflating

access consciousness and phenomenal consciousness (see Block, this

volume); but interestingly, the picture I have developed suggests that

it may be good reasoning. The prefrontal cortex is known to be

associated with control processes; so if a given area in the

visual cortex projects to prefrontal areas, then it may well be a

mechanism of direct availability. And if it does not project in this

way, it is less likely to be such a mechanism; at best it might be indirectly associated with global availability. Of course there is still plenty of

room to raise questions about the empirical details. But the broader

point is that for the sort of reasons discussed in (2) above, it is

likely that the neural processes involved in explaining access consciousness will simultaneously be involved in a story about the basis of phenomenal consciousness. If something like this is implicit in

their reasoning, Crick and Koch might escape the charge of conflation.

Of course the reasoning does depend on these somewhat shaky bridging

principles, but then all work on the neural correlates of consciousness

must appeal to such principles somewhere, so this can’t be held against

Crick and Koch in particular.
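The projection-based reasoning can be sketched as a reachability check on a connectivity graph: an area with a direct projection to prefrontal cortex is a candidate mechanism of direct availability, while one that reaches it only through intermediate areas is at best indirectly associated with availability. The wiring below is a deliberately simplified toy, not anatomical data. It encodes only the claim, as reported in the text, that V1 lacks a direct prefrontal projection; the other edges are illustrative assumptions.

```python
from collections import deque


def projection_route(graph, area, target="PFC"):
    """Return 'direct' if `area` projects straight to `target`,
    'indirect' if it reaches `target` only via intermediate areas,
    and 'none' if there is no path at all."""
    neighbours = graph.get(area, [])
    if target in neighbours:
        return "direct"
    seen = {area}
    queue = deque(neighbours)
    while queue:  # breadth-first search over downstream areas
        node = queue.popleft()
        if node == target:
            return "indirect"
        if node in seen:
            continue
        seen.add(node)
        queue.extend(graph.get(node, []))
    return "none"


# Toy wiring (hypothetical, heavily simplified): V1 reaches PFC only
# through extrastriate areas, which are given direct PFC projections here.
graph = {
    "V1": ["V4", "MT"],
    "V4": ["PFC"],
    "MT": ["PFC"],
}

for area in ["V1", "V4", "MT"]:
    print(area, projection_route(graph, area))
```

As the text notes, this settles nothing about the empirical details; it only shows how "projects to prefrontal areas" translates into a candidate for direct rather than indirect availability.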

(6) Sometimes the neural correlate of consciousness is conceived of

as the Holy Grail for a theory of consciousness. It will make everything

fall into place. For example, once we discover the NCC, then we’ll have

a definitive test for consciousness, enabling us to discover

consciousness wherever it arises. That is, we might use the neural

correlate itself as a sort of consciousness meter. If a system has

40-hertz oscillations (say), then it is conscious; if it has none, then

it is not conscious. Or if a thalamocortical system turns out to be the

NCC, then a system lacking one is unlikely to be conscious. This

sort of reasoning is not usually put quite so baldly as this, but I

think one finds some version of it quite frequently.

This reasoning can be tempting, but one should not succumb to the

temptation. Given the very methodology that comes into play here, there

is no way to definitively establish a given NCC as an independent test for

consciousness. The primary criterion for consciousness will always

remain the functional property we started with: global availability, or

verbal report, or whatever. That’s how we discovered the correlations in

the first place. 40-hertz oscillations (or whatever) are relevant only because of the role they play in satisfying this criterion. True, in

cases where we know that this association between the NCC and the

functional property is present, the NCC might itself function as a sort

of "signature" of consciousness; but once we dissociate the NCC from the

functional property, all bets are off. To take an extreme example, if

we have 40-hertz oscillations in a test tube, that almost certainly

won’t yield consciousness. But the point applies equally in less extreme

cases. Because it was the bridging principles that gave us all the

traction in the search for an NCC in the first place, it’s not clear

that anything follows in cases where the functional criterion is thrown

away. So there’s no free lunch here: one can’t get something for

nothing.
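The limits on using the NCC as a consciousness meter can be put as a toy decision rule. In this hypothetical sketch, a neural marker is trusted as a signature only for systems in which its association with the functional criterion has actually been established. Outside that domain the rule returns `None` (no verdict) rather than guessing, which is one way of encoding "all bets are off". The function name, parameters, and example cases are illustrative assumptions.

```python
from typing import Optional


def signature_verdict(marker_present: bool, association_validated: bool) -> Optional[bool]:
    """Use a neural marker as a stand-in for consciousness only where its
    link to the functional criterion (e.g. global availability) has been
    established. Elsewhere, return None: the marker alone settles nothing."""
    if not association_validated:
        return None
    return marker_present


# Hypothetical cases.
print(signature_verdict(True, True))    # True: within the validated domain
print(signature_verdict(False, True))   # False
print(signature_verdict(True, False))   # None: e.g. 40-hertz oscillations in a test tube
```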

Once one recognizes the central role that pre-experimental

assumptions play in the search for the NCC, one realizes that there are

some limitations on just what we can expect this search to tell us.

Still, whether or not the NCC is the Holy Grail, I hope that I have said

enough to make it clear that the quest for it is likely to enhance our

understanding considerably. And I hope to have convinced you that there

are important ways in which philosophy and neuroscience can come

together to help clarify some of the deep problems involved in the study

of consciousness.

References

Baars, B.J. 1988.

A Cognitive Theory of Consciousness. Cambridge University Press.

Bogen, J.E. 1995. On the neurophysiology of consciousness, parts I and II.

Consciousness and Cognition, 4:52-62 & 4:137-58.

Cauller, L.J. & Kulics, A.T. 1991. The neural basis of the

behaviorally relevant N1 component of the somatosensory evoked potential

in awake monkeys: Evidence that backward cortical projections signal

conscious touch sensation.

Experimental Brain Research 84:607-619.

Chalmers, D.J. 1996. The Conscious Mind:

In Search of a Fundamental Theory. Oxford University Press.

Chalmers, D.J. (forthcoming). Availability: the cognitive basis of experience?

Behavioral and Brain Sciences. Also in N. Block, O. Flanagan, & G. Güzeldere (eds)

The Nature of Consciousness (MIT Press, 1997).

Cotterill, R. 1994. On the unity of conscious experience.

Journal of Consciousness Studies 2:290-311.

Crick, F. and Koch, C. 1990. Towards a neurobiological theory of consciousness.

Seminars in the Neurosciences 2: 263-275.

Crick, F. & Koch, C. 1995. Are we aware of neural activity in primary visual cortex?

Nature 375: 121-23.

Edelman, G.M. 1989. The Remembered Present:

A Biological Theory of Consciousness. New York: Basic Books.

Farah, M.J. 1994. Visual perception and visual awareness after brain

damage: A tutorial overview. In (C. Umilta and M. Moscovitch, eds.)

Consciousness and Unconscious Information Processing: Attention and Performance 15. MIT Press.

Flohr, H. 1995. Sensations and brain processes.

Behavioural Brain Research 71:157-61.

Gray, J.A. 1995. The contents of consciousness: A neuropsychological conjecture.

Behavioral and Brain Sciences 18:659-722.

Greenfield, S. 1995.

Journey to the Centers of the Mind. W.H. Freeman.

Hameroff, S.R. 1994. Quantum coherence in microtubules: A neural basis for emergent consciousness?

Journal of Consciousness Studies 1:91-118.

Hardcastle, V.G. 1996.

Locating Consciousness. Philadelphia: John Benjamins.

Jackendoff, R. 1987.

Consciousness and the Computational Mind. MIT Press.

Leopold, D.A. & Logothetis, N.K. 1996. Activity-changes in early

visual cortex reflect monkeys’ percepts during binocular rivalry.

Nature 379: 549-553.

Libet, B. 1993. The neural time factor in conscious and unconscious events. In

Experimental and Theoretical Studies of Consciousness (Ciba Foundation Symposium 174). New York: Wiley.

Llinas, R.R., Ribary, U., Joliot, M. & Wang, X.-J. 1994. Content

and context in temporal thalamocortical binding. In (G. Buzsaki, R.R.

Llinas, & W. Singer, eds.)

Temporal Coding in the Brain. Berlin: Springer Verlag.

Logothetis, N. & Schall, J. 1989. Neuronal correlates of subjective visual perception.

Science 245:761-63.

Shallice, T. 1988. Information-processing models of consciousness:

possibilities and problems. In (A. Marcel and E. Bisiach, eds.)

Consciousness in Contemporary Science. Oxford University Press.

Taylor, J.G. & Alavi, F.N. 1993. Mathematical analysis of a competitive network for attention. In (J.G. Taylor, ed.)

Mathematical Approaches to Neural Networks. Elsevier.

Tootell, R.B., Reppas, J.B., Dale, A.M., Look, R.B., Sereno, M.I.,

Malach, R., Brady, J. & Rosen, B.R. 1995. Visual motion aftereffect

in human cortical area MT revealed by functional magnetic resonance

imaging.

Nature 375:139-41.

Copyright © 1998 MIT Press, from

Toward a Science of Consciousness II: The Second Tucson Discussions and Debates. Used with permission.

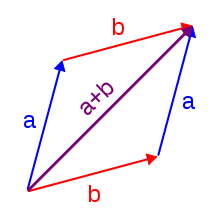

is commutative iff

is commutative iff  for each

for each  and

and  .

This image illustrates this property with the concept of an operation

as a "calculation machine". It doesn't matter for the output

.

This image illustrates this property with the concept of an operation

as a "calculation machine". It doesn't matter for the output  or

or  respectively which order the arguments

respectively which order the arguments  and

and  have – the final outcome is the same.

have – the final outcome is the same. is commutative iff

is commutative iff  for each

for each  and

and  .

This image illustrates this property with the concept of an operation

as a "calculation machine". It doesn't matter for the output

.

This image illustrates this property with the concept of an operation

as a "calculation machine". It doesn't matter for the output  or

or  respectively which order the arguments

respectively which order the arguments  and

and  have – the final outcome is the same.

have – the final outcome is the same.on a set S is called commutative if:

if:

is called commutative if:

.

. .

. .

.

.

.

" is a metalogical symbol representing "can be replaced in a proof with."

" is a metalogical symbol representing "can be replaced in a proof with."

but

but  ).

More such examples may be found in commutative non-associative magmas.

).

More such examples may be found in commutative non-associative magmas.

.

.

. These two operators do not commute as may be seen by considering the effect of their compositions

. These two operators do not commute as may be seen by considering the effect of their compositions  and

and  (also called products of operators) on a one-dimensional wave function

(also called products of operators) on a one-dimensional wave function  :

:

and

and  , respectively (where

, respectively (where  is the reduced Planck constant). This is the same example except for the constant

is the reduced Planck constant). This is the same example except for the constant  ,

so again the operators do not commute and the physical meaning is that

the position and linear momentum in a given direction are complementary.

,

so again the operators do not commute and the physical meaning is that

the position and linear momentum in a given direction are complementary.