Optogenetics (from Greek optikós, meaning 'seen, visible') is a biological technique that uses light to control cells in living tissue, typically neurons, that have been genetically modified to express light-sensitive ion channels. It is a neuromodulation method that combines techniques from optics and genetics to control and monitor the activities of individual neurons in living tissue, even within freely moving animals, and to precisely measure the effects of these manipulations in real time. The key reagents used in optogenetics are light-sensitive proteins. Neuronal control is achieved using optogenetic actuators such as channelrhodopsin, halorhodopsin, and archaerhodopsin, while optical recording of neuronal activity can be made with optogenetic sensors for calcium (GCaMP), vesicular release (synapto-pHluorin), neurotransmitters (GluSnFRs), or membrane voltage (ArcLight, ASAP1).

Control (or recording) of activity is restricted to genetically defined neurons and performed by light in a spatiotemporally specific manner.

In 2010, optogenetics was chosen as the "Method of the Year" across all fields of science and engineering by the interdisciplinary research journal Nature Methods. At the same time, optogenetics was highlighted in the article on "Breakthroughs of the Decade" in the academic research journal Science.[5] These journals also referenced a recent public-access, general-interest "Method of the Year" video and textual Scientific American summaries of optogenetics.

History

The "far-fetched" possibility of using light to selectively control precise patterns of neural activity (action potentials) within subtypes of cells in the brain was raised by Francis Crick in his Kuffler Lectures at the University of California, San Diego, in 1999.[6] An earlier use of light to activate neurons was carried out by Richard Fork,[7] who demonstrated laser activation of neurons within intact tissue, although not in a genetically targeted manner. The earliest genetically targeted method that used light to control rhodopsin-sensitized neurons was reported in January 2002 by Boris Zemelman (now at UT Austin) and Gero Miesenböck, who employed Drosophila rhodopsin in cultured mammalian neurons.[8] In 2003, Zemelman and Miesenböck developed a second method for light-dependent activation of neurons, in which single ionotropic channels (TRPV1, TRPM8 and P2X2) were gated by photocaged ligands in response to light.[9] Beginning in 2004, the Kramer and Isacoff groups, in collaboration with the Trauner group, developed organic photoswitches or "reversibly caged" compounds that could interact with genetically introduced ion channels.[10][11] TRPV1 methodology, albeit without the illumination trigger, was subsequently used by several laboratories to alter feeding, locomotion and behavioral resilience in laboratory animals.[12][13][14] However, these light-based approaches for altering neuronal activity were not applied outside the original laboratories, likely because the easier-to-employ channelrhodopsin was cloned soon thereafter.[15]

Peter Hegemann, studying the light response of green algae at the University of Regensburg, had discovered photocurrents that were too fast to be explained by the classic G-protein-coupled animal rhodopsins.[16] Teaming up with the electrophysiologist Georg Nagel at the Max Planck Institute in Frankfurt, they demonstrated that a single gene from the alga Chlamydomonas produced large photocurrents when expressed in the oocyte of a frog.[17] To identify expressing cells, they replaced the cytoplasmic tail of the algal protein with the fluorescent protein YFP, generating the first generally applicable optogenetic tool.[15] Zhuo-Hua Pan of Wayne State University, who was researching ways to restore sight to the blind, thought about using channelrhodopsin when it came out in late 2003. By February 2004, he was trying channelrhodopsin out in ganglion cells (the neurons in the eye that connect directly to the brain) that he had cultured in a dish. The transfected neurons became electrically active in response to light.[18]

In April 2005, Susana Lima and Miesenböck reported the first use of genetically targeted P2X2 photostimulation to control the behaviour of an animal.[19] They showed that photostimulation of genetically circumscribed groups of neurons, such as those of the dopaminergic system, elicited characteristic behavioural changes in fruit flies. In August 2005, Karl Deisseroth's laboratory in the Bioengineering Department at Stanford, including graduate students Ed Boyden and Feng Zhang (both now at MIT), published the first demonstration of a single-component optogenetic system in cultured mammalian neurons,[20][21] using the channelrhodopsin-2(H134R)-eYFP construct from Nagel and Hegemann.[15] The groups of Gottschalk and Nagel were the first to use channelrhodopsin-2 to control neuronal activity in an intact animal, showing that motor patterns in the roundworm Caenorhabditis elegans could be evoked by light stimulation of genetically selected neural circuits (published in December 2005).[22] In mice, controlled expression of optogenetic tools is often achieved with the cell-type-specific Cre/loxP methods developed for neuroscience by Joe Z. Tsien in the 1990s[23] to activate or inhibit specific brain regions and cell types in vivo.[24]

The primary tools for optogenetic recordings have been genetically encoded calcium indicators (GECIs). The first GECI to be used to image activity in an animal was cameleon, designed by Atsushi Miyawaki, Roger Tsien and coworkers.[25] Cameleon was first used successfully in an animal by Rex Kerr, William Schafer and coworkers to record from neurons and muscle cells of the nematode C. elegans.[26] Cameleon was subsequently used to record neural activity in flies[27] and zebrafish.[28] In mammals, the first GECI to be used in vivo was GCaMP,[29] first developed by Nakai and coworkers.[30] GCaMP has undergone numerous improvements, and GCaMP6[31] in particular has become widely used throughout neuroscience.

In 2010, Karl Deisseroth at Stanford University was awarded the inaugural HFSP Nakasone Award "for his pioneering work on the development of optogenetic methods for studying the function of neuronal networks underlying behavior". In 2012, Gero Miesenböck was awarded the InBev-Baillet Latour International Health Prize for "pioneering optogenetic approaches to manipulate neuronal activity and to control animal behaviour." In 2013, Ernst Bamberg, Ed Boyden, Karl Deisseroth, Peter Hegemann, Gero Miesenböck and Georg Nagel were awarded The Brain Prize for "their invention and refinement of optogenetics."[32][33] Karl Deisseroth was awarded the 2017 Else Kröner Fresenius Research Prize (€4 million) for his "contributions to the understanding of the biological basis of psychiatric disorders".

Description

Fig 1. Channelrhodopsin-2 (ChR2) induces temporally precise blue-light-driven activity in rat prelimbic prefrontal cortical neurons. a) In vitro schematic (left) showing blue light delivery and whole-cell patch-clamp recording of light-evoked activity from a fluorescent CaMKIIα::ChR2-EYFP-expressing pyramidal neuron (right) in an acute brain slice. b) In vivo schematic (left) showing blue light (473 nm) delivery and single-unit recording. (bottom left) Coronal brain slice showing expression of CaMKIIα::ChR2-EYFP in the prelimbic region. Light blue arrow shows tip of the optical fiber; black arrow shows tip of the recording electrode (left). White bar, 100 µm. (bottom right) In vivo light recording of a prefrontal cortical neuron in a transduced CaMKIIα::ChR2-EYFP rat showing light-evoked spiking to 20 Hz delivery of blue light pulses (right). Inset, representative light-evoked single-unit response.[34]

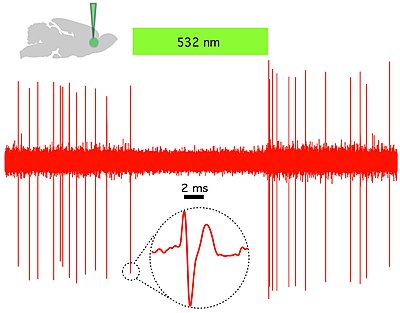

Fig 2. Halorhodopsin (NpHR) rapidly and reversibly silences spontaneous activity in vivo in rat prelimbic prefrontal cortex. (Top left) Schematic showing in vivo green (532 nm) light delivery and single-unit recording of a spontaneously active CaMKIIα::eNpHR3.0-EYFP-expressing pyramidal neuron. (Right) Example trace showing that continuous 532 nm illumination inhibits single-unit activity in vivo. Inset, representative single-unit event; green bar, 10 seconds.[34]

A nematode expressing the light-sensitive proton pump Mac. Mac, originally isolated from the fungus Leptosphaeria maculans and here expressed in the muscle cells of C. elegans, is activated by green light and causes hyperpolarizing inhibition. Of note is the extension in body length that the worm undergoes each time it is exposed to green light, which is presumably caused by Mac's muscle-relaxant effects.[35]

Light-activated proteins: channels, pumps and enzymes

The hallmark of optogenetics is the introduction of fast light-activated channels, pumps, and enzymes that allow temporally precise manipulation of electrical and biochemical events while maintaining cell-type resolution through specific targeting mechanisms. Among the microbial opsins that can be used to investigate the function of neural systems are the channelrhodopsins (ChR2, ChR1, VChR1, and SFOs), used to excite neurons, and anion-conducting channelrhodopsins, used for light-induced inhibition. Light-driven ion pumps are also used to inhibit neuronal activity, e.g. halorhodopsin (NpHR),[39] enhanced halorhodopsins (eNpHR2.0 and eNpHR3.0, see Figure 2),[40] archaerhodopsin (Arch), fungal opsins (Mac) and enhanced bacteriorhodopsin (eBR).[41]

Optogenetic control of well-defined biochemical events within behaving mammals is now also possible. Building on prior work fusing vertebrate opsins to specific G-protein-coupled receptors,[42] a family of chimeric single-component optogenetic tools was created that allowed researchers to manipulate, within behaving mammals, the concentration of defined intracellular messengers such as cAMP and IP3 in targeted cells.[43] Other biochemical approaches to optogenetics (crucially, with tools that displayed low activity in the dark) followed soon thereafter, when optical control over small GTPases and adenylyl cyclases was achieved in cultured cells using novel strategies from several different laboratories.[44][45][46][47][48] This emerging repertoire of optogenetic probes now allows cell-type-specific and temporally precise control of multiple axes of cellular function within intact animals.[49]

Hardware for light application

Another necessary factor is hardware (e.g. integrated fiber-optic and solid-state light sources) that allows specific cell types, even deep within the brain, to be controlled in freely behaving animals. Most commonly, this is now achieved using the fiber-optic-coupled diode technology introduced in 2007.[50][51][52] To avoid the use of implanted electrodes, however, researchers have engineered a "window" made of zirconia, modified to be transparent and implanted in mouse skulls, that allows light to penetrate more deeply to stimulate or inhibit individual neurons.[53] To stimulate superficial brain areas such as the cerebral cortex, optical fibers or LEDs can be mounted directly on the skull of the animal. More deeply implanted optical fibers have been used to deliver light to deeper brain areas. Complementary to fiber-tethered approaches, completely wireless techniques have been developed that use wirelessly delivered power to drive head-borne LEDs, allowing unhindered study of complex behaviors in freely behaving organisms.[54]

Expression of optogenetic actuators

Optogenetics also necessarily includes the development of genetic targeting strategies, such as cell-specific promoters or other customized conditionally active viruses, to deliver the light-sensitive probes to specific populations of neurons in the brains of living animals (e.g. worms, fruit flies, mice, rats, and monkeys). In invertebrates such as worms and fruit flies, all-trans-retinal (ATR) is supplemented in the food. A key advantage of microbial opsins is that they are fully functional in vertebrates without the addition of exogenous co-factors.[52]

Technique

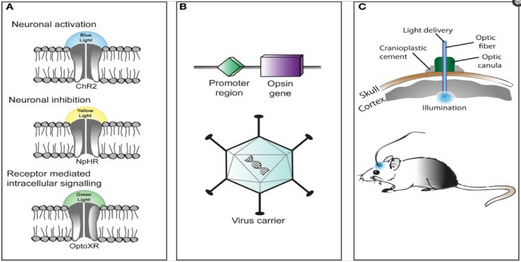

Three primary components in the application of optogenetics are as follows: (A) identification or synthesis of a light-sensitive protein (opsin) such as channelrhodopsin-2 (ChR2), halorhodopsin (NpHR), etc.; (B) the design of a system to introduce the genetic material containing the opsin into cells for protein expression, such as application of Cre recombinase or an adeno-associated virus; (C) application of light-emitting instruments.[55]

The technique of using optogenetics is flexible and adaptable to the experimenter's needs. First, experimenters genetically engineer a microbial opsin based on the gating properties (rate of excitability, refractory period, etc.) required for the experiment.

Introducing the microbial opsin, an optogenetic actuator, into a specific region of the organism in question is challenging. A rudimentary approach is to introduce an engineered viral vector that contains the optogenetic actuator gene attached to a recognizable promoter such as CaMKIIα. This allows some level of specificity: the vector may infect many cells, but only cells in which that promoter is active should express the optogenetic actuator gene.

Another approach is to create transgenic mice in which the optogenetic actuator gene is introduced into mouse zygotes under a given promoter, most commonly Thy1. Introducing the optogenetic actuator at this early stage allows larger genetic constructs to be incorporated and, as a result, increases the specificity of the cells that express it.

A third, relatively novel approach is to create transgenic mice that express Cre recombinase, an enzyme that catalyzes recombination between two loxP sites. If an engineered viral vector containing the optogenetic actuator gene flanked by two loxP sites is then introduced, only the cells containing Cre recombinase will express the microbial opsin. This last technique allows multiple modified optogenetic actuators to be used without the need to create a whole new line of transgenic animals every time a new microbial opsin is needed.
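The gating logic of this Cre-dependent approach can be illustrated with a toy model. The sketch below is a simplification under stated assumptions: the `Cell` class, the helper functions and the cell-type labels are hypothetical, the virus is assumed to reach every cell in the injected region, and Cre is assumed to be present in exactly one cell type. It models only the rule that expression requires both the vector and Cre, not the molecular details of recombination.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    cell_type: str   # hypothetical label, e.g. "pyramidal" or "interneuron"
    has_cre: bool    # Cre recombinase present (supplied by the transgenic line)
    infected: bool   # received the viral vector carrying the loxP-flanked opsin


def expresses_opsin(cell: Cell) -> bool:
    # Expression needs both the vector and Cre acting on its loxP sites.
    return cell.infected and cell.has_cre


def build_population(cre_cell_type: str, counts: dict[str, int]) -> list[Cell]:
    # Assumption: Cre is present only in `cre_cell_type`, and the virus
    # reaches every cell in the injected region regardless of type.
    return [
        Cell(cell_type=name, has_cre=(name == cre_cell_type), infected=True)
        for name, n in counts.items()
        for _ in range(n)
    ]


if __name__ == "__main__":
    cells = build_population("interneuron", {"pyramidal": 80, "interneuron": 20})
    labelled = [c for c in cells if expresses_opsin(c)]
    print(f"{len(labelled)} of {len(cells)} infected cells express the opsin")
```

With 80 hypothetical pyramidal cells and 20 interneurons, all 100 cells receive the vector but only the 20 Cre-positive interneurons are labelled. Swapping in a different opsin changes only the vector, not the animal line, which is the practical advantage described above.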

After the introduction and expression of the microbial opsin, and depending on the type of analysis being performed, light can be applied either at the axon terminals or at the main region where the transduced cells are situated. Light stimulation can be performed with a wide array of instruments, from light-emitting diodes (LEDs) to diode-pumped solid-state (DPSS) lasers. These light sources are typically controlled by a computer and coupled to the target tissue through a fiber-optic cable. Recent advances include wireless head-mounted devices that deliver LED light to targeted areas, giving the animal more freedom of movement during in vivo experiments.[56][57]
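A computer-controlled light source can be driven by a schedule of on and off times. The following is a minimal sketch assuming a simple, regular pulse train; the function names `pulse_train` and `duty_cycle` and the example parameters (20 Hz, 5 ms pulses, 1 s) are hypothetical illustrations, not a standard protocol or the interface of any particular stimulator.

```python
def pulse_train(freq_hz: float, width_ms: float, duration_s: float) -> list[tuple[float, float]]:
    """Return (on_time, off_time) pairs in seconds for a regular light pulse train."""
    period_s = 1.0 / freq_hz
    width_s = width_ms / 1000.0
    # Each pulse must end before the next one starts.
    if not 0.0 < width_s < period_s:
        raise ValueError("pulse width must be positive and shorter than the pulse period")
    n_pulses = int(round(duration_s * freq_hz))
    return [(i * period_s, i * period_s + width_s) for i in range(n_pulses)]


def duty_cycle(freq_hz: float, width_ms: float) -> float:
    """Fraction of time the light is on, which scales the average light power delivered."""
    return freq_hz * width_ms / 1000.0


if __name__ == "__main__":
    schedule = pulse_train(freq_hz=20, width_ms=5, duration_s=1.0)
    print(len(schedule), schedule[:2], duty_cycle(20, 5))
```

Returning explicit on/off times, rather than toggling the light inside a loop, keeps the schedule easy to inspect and to hand to whatever hardware timer actually switches the LED or laser.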

Issues

Although already a powerful scientific tool, optogenetics, according to Doug Tischer and Orion D. Weiner of the University of California, San Francisco, should be regarded as a "first-generation GFP" because of its immense potential for both utilization and optimization.[58] That said, the current approach to optogenetics is still limited in its versatility. Even within neuroscience, where it is most potent, the technique is less robust at the subcellular level.[59]

Selective expression

One of the main problems of optogenetics is that not all the cells in question may express the microbial opsin gene at the same level. Thus, even illumination with a defined light intensity will have variable effects on individual cells. Optogenetic stimulation of neurons in the brain is even less controlled, because the light intensity drops exponentially with distance from the light source (e.g. an implanted optical fiber). Moreover, mathematical modelling shows that selective expression of opsin in specific cell types can dramatically alter the dynamical behavior of the neural circuitry. In particular, optogenetic stimulation that preferentially targets inhibitory cells can transform the excitability of the neural tissue from Type 1, in which neurons operate as integrators, to Type 2, in which neurons operate as resonators.[60] Type 1 excitable media sustain propagating waves of activity, whereas Type 2 excitable media do not. The transformation from one type to the other explains how constant optical stimulation of primate motor cortex elicits gamma-band (40–80 Hz) oscillations in the manner of a Type 2 excitable medium, while those same oscillations propagate far into the surrounding tissue in the manner of a Type 1 excitable medium.[61]
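The two sources of variability described above, uneven opsin expression and the fall-off of light with distance, can be combined in a toy threshold model. This is a minimal sketch under loudly stated assumptions: fall-off is treated as a single exponential, I(z) = I0·exp(−z/d), with a hypothetical decay length d (geometric spreading from the fiber tip is ignored); a cell's drive is assumed to be its relative expression level times the local intensity; and the intensity, decay length and threshold values are made up. Solving I0·exp(−z/d) = threshold gives the depth beyond which a fully expressing cell is no longer driven, z = d·ln(I0/threshold). All function names are hypothetical.

```python
import math


def intensity_at(depth_mm: float, surface_intensity: float, decay_length_mm: float) -> float:
    # Simplified fall-off: I(z) = I0 * exp(-z / d), ignoring geometric spreading.
    return surface_intensity * math.exp(-depth_mm / decay_length_mm)


def max_effective_depth(surface_intensity: float, threshold: float, decay_length_mm: float) -> float:
    # Solve I0 * exp(-z / d) = threshold for z; zero if the source is too weak.
    if threshold >= surface_intensity:
        return 0.0
    return decay_length_mm * math.log(surface_intensity / threshold)


def is_activated(expression: float, depth_mm: float, surface_intensity: float,
                 decay_length_mm: float, threshold: float) -> bool:
    # Toy rule: drive = relative opsin expression x local light intensity.
    return expression * intensity_at(depth_mm, surface_intensity, decay_length_mm) >= threshold


if __name__ == "__main__":
    I0, D, TH = 10.0, 0.2, 1.0   # hypothetical: mW/mm^2 at the fiber tip, mm, mW/mm^2
    print(f"light falls below threshold beyond {max_effective_depth(I0, TH, D):.2f} mm")
    # Two cells at the same depth differ only in how much opsin they express.
    for expression in (1.0, 0.3):
        print(expression, is_activated(expression, 0.3, I0, D, TH))
```

In this toy setting, two cells at the same depth receive identical light, yet only the one with higher expression crosses threshold, which is the cell-to-cell variability the paragraph describes.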

It also remains difficult to target opsins to defined subcellular compartments, e.g. the plasma membrane, synaptic vesicles, or mitochondria.[59][62] Restricting the opsin to specific regions of the plasma membrane, such as dendrites, somata or axon terminals, would provide a more robust understanding of neuronal circuitry.[59]

Kinetics and synchronization

One issue with channelrhodopsin-2 is that its gating properties do not mimic those of the cation channels of cortical neurons in vivo. One solution to this kinetic limitation is to use variants of channelrhodopsin-2 with more favorable kinetics.[55][56]

Another limitation of the technique is that light stimulation produces synchronous activation of the infected cells, which erases the individual activation properties of cells within the affected population. It is therefore difficult to understand how the cells in the affected population communicate with one another, or how their phasic activation properties relate to the circuitry being observed.

Optogenetic activation has been combined with functional magnetic resonance imaging (optogenetic fMRI, or ofMRI) to elucidate the connectome, a thorough map of the brain's neural connections. The results, however, are limited by the general properties of fMRI.[59][63] The readouts from this neuroimaging procedure lack the spatial and temporal resolution needed to study densely packed, rapidly firing neuronal circuits.[63]

Excitation spectrum

The opsin proteins currently in use have absorption peaks across the visible spectrum but retain considerable sensitivity to blue light.[59] This spectral overlap makes it very difficult to combine opsin activation with genetically encoded indicators (GEVIs, GECIs, GluSnFR, synapto-pHluorin), most of which need blue light excitation. Opsins with infrared activation would, at a standard irradiance value, increase light penetration and improve resolution by reducing light scattering.

Applications

The field of optogenetics has furthered the fundamental scientific understanding of how specific cell types contribute to the function of biological tissues such as neural circuits in vivo (see references from the scientific literature below). Moreover, on the clinical side, optogenetics-driven research has led to insights into Parkinson's disease[64][65] and other neurological and psychiatric disorders. Indeed, optogenetics papers published in 2009 also provided insight into neural codes relevant to autism, schizophrenia, drug abuse, anxiety, and depression.[41][66][67][68]

Identification of particular neurons and networks

Amygdala

Optogenetic approaches have been used to map neural circuits in the amygdala that contribute to fear conditioning.[69][70][71][72] One example is the connection from the basolateral amygdala to the dorsomedial prefrontal cortex, where 4 Hz neuronal oscillations have been observed in correlation with fear-induced freezing behavior in mice. Channelrhodopsin-2 was expressed in transgenic parvalbumin-Cre mice, selectively targeting the interneurons, located in both the basolateral amygdala and the dorsomedial prefrontal cortex, that are responsible for the 4 Hz oscillations. Optical stimulation of these interneurons generated freezing behavior, providing evidence that the 4 Hz oscillations may be responsible for the basic fear response produced by the neuronal populations along the dorsomedial prefrontal cortex and basolateral amygdala.[73]

Olfactory bulb

Optogenetic activation of olfactory sensory neurons was critical for demonstrating timing in odor processing[74] and for revealing the mechanisms of neuromodulator-mediated, olfactory-guided behaviors (e.g. aggression, mating).[75] In addition, optogenetics has provided evidence that the "afterimage" of odors is concentrated centrally in the olfactory bulb rather than in the periphery, where the olfactory receptor neurons are located. Transgenic Thy1-ChR2 mice, which express channelrhodopsin-2, were stimulated with a 473 nm laser positioned transcranially over the dorsal olfactory bulb. Longer photostimulation of mitral cells in the olfactory bulb led to longer-lasting neuronal activity in the region after the photostimulation had ceased, suggesting that the olfactory sensory system is able to undergo long-term changes and recognize differences between old and new odors.[76]

Nucleus accumbens

Optogenetics, freely moving mammalian behavior, in vivo electrophysiology, and slice physiology have been integrated to probe the cholinergic interneurons of the nucleus accumbens by direct excitation or inhibition. Despite representing less than 1% of the total population of accumbal neurons, these cholinergic cells are able to control the activity of the dopaminergic terminals that innervate medium spiny neurons (MSNs) in the nucleus accumbens.[77] These accumbal MSNs are known to be involved in the neural pathway through which cocaine exerts its effects, because decreasing cocaine-induced changes in the activity of these neurons has been shown to inhibit cocaine conditioning. The few cholinergic neurons present in the nucleus accumbens may prove viable targets for pharmacotherapy in the treatment of cocaine dependence.[41]

Rat cages equipped with optogenetic LED commutators, which permit in vivo study of animal behavior during optogenetic stimulation.

Prefrontal cortex

In vivo and in vitro recordings (by the Cooper laboratory) of individual CaMKII AAV-ChR2-expressing pyramidal neurons within the prefrontal cortex demonstrated high-fidelity action potential output with short pulses of blue light at 20 Hz (Figure 1).[34] The same group recorded complete green-light-induced silencing of spontaneous activity in the same prefrontal cortical neuronal population expressing an AAV-NpHR vector (Figure 2).[34]

Heart

Optogenetics has been applied to atrial cardiomyocytes to terminate, with light, the spiral-wave arrhythmias that occur in atrial fibrillation.[78] This method is still in the development stage. A recent study explored optogenetics as a method to correct arrhythmias and resynchronize cardiac pacing. The study introduced channelrhodopsin-2 into cardiomyocytes in ventricular areas of the hearts of transgenic mice and performed photostimulation experiments on both open-cavity and closed-cavity mice. Photostimulation led to increased activation of cells and thus increased ventricular contractions, resulting in increased heart rates. In addition, this approach has been applied in cardiac resynchronization therapy (CRT) as a new biological pacemaker, as a substitute for electrode-based CRT.[79] Lately, optogenetics has been used in the heart to defibrillate ventricular arrhythmias with local epicardial illumination,[80] generalized whole-heart illumination,[81] or customized stimulation patterns based on arrhythmogenic mechanisms in order to lower defibrillation energy.[82]

Spiral ganglion

Optogenetic stimulation of the spiral ganglion in deaf mice restored auditory activity.[83][84] Applying optogenetics to the cochlear region allows stimulation or inhibition of the spiral ganglion cells (SGNs). In addition, because of the characteristics of the resting potentials of SGNs, different variants of channelrhodopsin-2, such as Chronos and CatCh, have been employed. Chronos and CatCh are particularly useful because they spend less time in their deactivated states, allowing more activity with fewer bursts of blue light. As a result, the LED producing the light would require less energy, making cochlear prosthetics based on photostimulation more feasible.[85]

Brainstem

Optogenetic stimulation of a modified red-light-excitable channelrhodopsin (ReaChR) expressed in the facial motor nucleus enabled minimally invasive activation of motoneurons, effectively driving whisker movements in mice.[86] One novel study used optogenetics in the dorsal raphe nucleus to both activate and inhibit dopaminergic release onto the ventral tegmental area. For activation, channelrhodopsin-2 was expressed in TH-Cre transgenic mice; for inhibition, the hyperpolarizing opsin NpHR was expressed in TH-Cre mice instead. Results showed that optically activating dopaminergic neurons increased social interaction, whereas inhibiting them decreased the drive to socialize, but only after a period of isolation.[87]

Precise temporal control of interventions

The currently available optogenetic actuators allow accurate temporal control of the required intervention (i.e. inhibition or excitation of the target neurons), with precision routinely reaching the millisecond level. Experiments can therefore be devised in which the light used for the intervention is triggered by a particular element of behavior (to inhibit the behavior), a particular unconditioned stimulus (to associate something with that stimulus) or a particular oscillatory event in the brain (to inhibit the event). This kind of approach has already been used in several brain regions.

Hippocampus
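In hippocampal experiments such as the one described next, the trigger is an oscillatory event detected in the on-line local field potential. The sketch below shows one simple way such a causal trigger could work; its assumptions are illustrative and not drawn from any published system. The incoming signal is assumed to be already band-pass filtered to the ripple band, an event is declared when the moving root-mean-square (RMS) amplitude first rises above a fixed threshold, and a refractory period suppresses repeated triggers. The class name `EventTrigger`, the 2 kHz sampling rate, the 200 Hz synthetic bursts and all thresholds are hypothetical.

```python
import math
from collections import deque


class EventTrigger:
    """Hypothetical closed-loop trigger: fires when the moving RMS of an
    already band-pass-filtered signal first rises above `threshold`."""

    def __init__(self, threshold: float, window: int, refractory: int):
        self.threshold = threshold
        self.refractory = refractory                       # minimum samples between triggers
        self._recent = deque([0.0] * window, maxlen=window)
        self._sample_index = -1
        self._last_trigger = None
        self._was_above = False

    def update(self, sample: float) -> bool:
        """Consume one sample; return True if the light should be switched on now."""
        self._sample_index += 1
        self._recent.append(sample)                         # deque drops the oldest sample
        rms = math.sqrt(sum(x * x for x in self._recent) / len(self._recent))
        above = rms >= self.threshold
        rising_edge = above and not self._was_above         # only the onset of an event counts
        self._was_above = above
        if not rising_edge:
            return False
        if self._last_trigger is not None and self._sample_index - self._last_trigger < self.refractory:
            return False                                    # still inside the refractory period
        self._last_trigger = self._sample_index
        return True


if __name__ == "__main__":
    fs = 2000                                               # sampling rate in Hz (hypothetical)
    lfp = [0.0] * 1000
    for start in (500, 800):                                # two synthetic 200 Hz bursts
        for n in range(start, start + 100):
            lfp[n] = math.sin(2 * math.pi * 200 * n / fs)
    trigger = EventTrigger(threshold=0.3, window=20, refractory=100)
    print([i for i, x in enumerate(lfp) if trigger.update(x)])
```

Because each decision uses only samples that have already arrived, the detector can run sample by sample as data stream in. The rising-edge check and the refractory period keep one sustained event from switching the light on repeatedly.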

Sharp-wave ripple complexes (SWRs) are distinct high-frequency oscillatory events in the hippocampus thought to play a role in memory formation and consolidation. These events can be readily detected by following the oscillatory cycles of the on-line recorded local field potential. In this way, the onset of the event can be used as a trigger signal for a light flash that is guided back into the hippocampus to inhibit neurons specifically during the SWRs and also to optogenetically inhibit the oscillation itself.[88] These kinds of "closed-loop" experiments are useful for studying SWR complexes and their role in memory.

Cellular biology/cell signaling pathways

Optogenetic control of cellular forces and induction of mechanotransduction. Pictured cells receive an hour of imaging concurrent with blue light that pulses every 60 seconds; each pulse is indicated when the blue point flashes onto the image. The cell then relaxes for an hour without light activation, and the cycle repeats. The square inset magnifies the cell's nucleus.

The optogenetic toolkit has proven pivotal for neuroscience because it allows precise manipulation of neuronal excitability. Moreover, the technique has been extended beyond neurons to an increasing number of proteins and cellular functions.[58] At the cellular scale, contractile forces relevant to cell migration, cell division and wound healing have been optogenetically manipulated.[89] However, the field has not yet developed to the point where processes crucial to cellular and developmental biology and cell signaling, including protein localization, post-translational modification and GTP loading, can be consistently controlled via optogenetics.[58]