| Electroencephalography | |

|---|---|

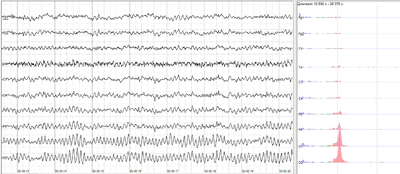

Epileptic spike and wave discharges monitored with EEG

|

EEG is most often used to diagnose epilepsy, which causes abnormalities in EEG readings.[2] It is also used to diagnose sleep disorders, coma, encephalopathies, and brain death, and to monitor depth of anesthesia. EEG used to be a first-line method of diagnosis for tumors, stroke and other focal brain disorders,[3][4] but this use has decreased with the advent of high-resolution anatomical imaging techniques such as magnetic resonance imaging (MRI) and computed tomography (CT). Despite limited spatial resolution, EEG continues to be a valuable tool for research and diagnosis. It is one of the few mobile techniques available and offers millisecond-range temporal resolution, which is not possible with CT, PET or MRI.

Derivatives of the EEG technique include evoked potentials (EPs), which involve averaging the EEG activity time-locked to the presentation of a stimulus of some sort (visual, somatosensory, or auditory). Event-related potentials (ERPs) refer to averaged EEG responses that are time-locked to more complex processing of stimuli; this technique is used in cognitive science, cognitive psychology, and psychophysiological research.
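The time-locked averaging described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the synthetic signal, the 250 Hz sampling rate, the noise level, the shape of the simulated response, and the function name `average_epochs`. It is not taken from any particular EEG system or toolbox.

```python
import math
import random

def average_epochs(signal, event_samples, pre, post):
    """Average fixed-length windows of `signal` time-locked to each event.

    Each window spans `pre` samples before and `post` samples after an event.
    Events whose window would run off either end of the signal are skipped.
    """
    epochs = [
        signal[s - pre:s + post]
        for s in event_samples
        if s - pre >= 0 and s + post <= len(signal)
    ]
    if not epochs:
        raise ValueError("no complete epochs available")
    return [sum(column) / len(epochs) for column in zip(*epochs)]

# Synthetic demonstration (all numbers are illustrative assumptions).
random.seed(0)
fs = 250                                              # samples per second
signal = [random.gauss(0.0, 10.0) for _ in range(fs * 120)]  # background noise, in µV
events = list(range(fs, len(signal) - fs, fs // 2))   # one stimulus every 0.5 s

# Add a small half-sine "evoked response" lasting 100 ms after each event.
response_len = fs // 10
for s in events:
    for k in range(response_len):
        signal[s + k] += 10.0 * math.sin(math.pi * k / response_len)

pre, post = 25, 100                                   # 100 ms before, 400 ms after
erp = average_epochs(signal, events, pre, post)
peak_index = max(range(len(erp)), key=lambda i: erp[i])
print("peak latency (ms after stimulus):", (peak_index - pre) * 1000 / fs)
```

Because the background activity is not time-locked to the stimulus, it tends to cancel in the average, while the response that follows every stimulus remains.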

History

The first human EEG recording obtained by Hans Berger in 1924. The upper tracing is EEG, and the lower is a 10 Hz timing signal.

The history of EEG is detailed by Barbara E. Swartz in Electroencephalography and Clinical Neurophysiology.[5] In 1875, Richard Caton (1842–1926), a physician practicing in Liverpool, presented his findings about electrical phenomena of the exposed cerebral hemispheres of rabbits and monkeys in the British Medical Journal. In 1890, Polish physiologist Adolf Beck published an investigation of spontaneous electrical activity of the brain of rabbits and dogs that included rhythmic oscillations altered by light. In his experiments on the electrical brain activity of animals, Beck placed electrodes directly on the surface of the brain to test for sensory stimulation. His observation of fluctuating brain activity led him to conclude that brain waves exist.[6]

In 1912, Ukrainian physiologist Vladimir Vladimirovich Pravdich-Neminsky published the first animal EEG and the evoked potential of a mammal (a dog).[7] In 1914, Napoleon Cybulski and Jelenska-Macieszyna photographed EEG recordings of experimentally induced seizures.

German physiologist and psychiatrist Hans Berger (1873–1941) recorded the first human EEG in 1924.[8] Expanding on work previously conducted on animals by Richard Caton and others, Berger also invented the electroencephalogram (giving the device its name), an invention described "as one of the most surprising, remarkable, and momentous developments in the history of clinical neurology".[9] His discoveries were first confirmed by British scientists Edgar Douglas Adrian and B. H. C. Matthews in 1934 and developed by them.

In 1934, Fisher and Lowenback first demonstrated epileptiform spikes. In 1935, Gibbs, Davis and Lennox described interictal spike waves and the three cycles/s pattern of clinical absence seizures, which began the field of clinical electroencephalography. Subsequently, in 1936 Gibbs and Jasper reported the interictal spike as the focal signature of epilepsy. The same year, the first EEG laboratory opened at Massachusetts General Hospital.

Franklin Offner (1911–1999), professor of biophysics at Northwestern University, developed a prototype of the EEG that incorporated a piezoelectric inkwriter called a Crystograph (the whole device was typically known as the Offner Dynograph).

In 1947, the American EEG Society was founded and the first International EEG congress was held. In 1953, Aserinsky and Kleitman described REM sleep.

In the 1950s, William Grey Walter developed an adjunct to EEG called EEG topography, which allowed for the mapping of electrical activity across the surface of the brain. This enjoyed a brief period of popularity in the 1980s and seemed especially promising for psychiatry. It was never accepted by neurologists and remains primarily a research tool.

In 1988, a report was given on EEG control of a physical object, a robot.[10][11]

Medical use

An EEG recording setup

A routine clinical EEG recording typically lasts 20–30 minutes (plus preparation time) and usually involves recording from scalp electrodes. Routine EEG is typically used in clinical circumstances to distinguish epileptic seizures from other types of spells, such as psychogenic non-epileptic seizures, syncope (fainting), sub-cortical movement disorders and migraine variants, to differentiate "organic" encephalopathy or delirium from primary psychiatric syndromes such as catatonia, to serve as an adjunct test of brain death, to prognosticate, in certain instances, in patients with coma, and to determine whether to wean anti-epileptic medications.

At times, a routine EEG is not sufficient, particularly when it is necessary to record a patient while they are having a seizure. In this case, the patient may be admitted to the hospital for days or even weeks, while EEG is constantly being recorded (along with time-synchronized video and audio recording). A recording of an actual seizure (i.e., an ictal recording, rather than an inter-ictal recording of a possibly epileptic patient at some period between seizures) can give significantly better information about whether or not a spell is an epileptic seizure and about the focus in the brain from which the seizure activity emanates.

Epilepsy monitoring is typically done to distinguish epileptic seizures from other types of spells, such as psychogenic non-epileptic seizures, syncope (fainting), sub-cortical movement disorders and migraine variants, to characterize seizures for the purposes of treatment, and to localize the region of brain from which a seizure originates for work-up of possible seizure surgery.

Additionally, EEG may be used to monitor the depth of anesthesia, as an indirect indicator of cerebral perfusion in carotid endarterectomy, or to monitor amobarbital effect during the Wada test.

EEG can also be used in intensive care units for brain function monitoring: to detect non-convulsive seizures or non-convulsive status epilepticus, to monitor the effect of sedatives or anesthesia in patients in medically induced coma (for treatment of refractory seizures or increased intracranial pressure), and to monitor for secondary brain damage in conditions such as subarachnoid hemorrhage (currently a research method).

If a patient with epilepsy is being considered for resective surgery, it is often necessary to localize the focus (source) of the epileptic brain activity with a resolution greater than what is provided by scalp EEG. This is because the cerebrospinal fluid, skull and scalp smear the electrical potentials recorded by scalp EEG. In these cases, neurosurgeons typically implant strips and grids of electrodes (or penetrating depth electrodes) under the dura mater, through either a craniotomy or a burr hole. The recording of these signals is referred to as electrocorticography (ECoG), subdural EEG (sdEEG) or intracranial EEG (icEEG), all terms for the same thing. The signal recorded from ECoG is on a different scale of activity than the brain activity recorded from scalp EEG. Low-voltage, high-frequency components that cannot be seen easily (or at all) in scalp EEG can be seen clearly in ECoG. Further, smaller electrodes (which cover a smaller parcel of brain surface) allow even lower-voltage, faster components of brain activity to be seen. Some clinical sites record from penetrating microelectrodes.[1]

EEG is not indicated for diagnosing headache.[12] Recurring headache is a common pain problem, and this procedure is sometimes used in a search for a diagnosis, but it has no advantage over routine clinical evaluation.[12]

Research use

EEG and the related study of ERPs are used extensively in neuroscience, cognitive science, cognitive psychology, neurolinguistics and psychophysiological research. Many EEG techniques used in research are not standardised sufficiently for clinical use. However, research on mental disabilities, such as auditory processing disorder (APD), ADD, or ADHD, is becoming more widely known, and EEGs are used in both research and treatment.

Advantages

Several other methods to study brain function exist, including functional magnetic resonance imaging (fMRI), positron emission tomography, magnetoencephalography (MEG), nuclear magnetic resonance spectroscopy, electrocorticography, single-photon emission computed tomography, near-infrared spectroscopy (NIRS), and event-related optical signal (EROS). Despite the relatively poor spatial sensitivity of EEG, it possesses multiple advantages over some of these techniques:

- Hardware costs are significantly lower than those of most other techniques.[13]

- EEG prevents limited availability of technologists to provide immediate care in high traffic hospitals.[14]

- EEG sensors can be used in more places than fMRI, SPECT, PET, MRS, or MEG, as these techniques require bulky and immobile equipment. For example, MEG requires equipment consisting of liquid helium-cooled detectors that can be used only in magnetically shielded rooms, altogether costing upwards of several million dollars;[15] and fMRI requires the use of a 1-ton magnet in, again, a shielded room.

- EEG has very high temporal resolution, on the order of milliseconds rather than seconds. EEG is commonly recorded at sampling rates between 250 and 2000 Hz in clinical and research settings, but modern EEG data collection systems are capable of recording at sampling rates above 20,000 Hz if desired. MEG and EROS are the only other noninvasive cognitive neuroscience techniques that acquire data at this level of temporal resolution.[15]

- EEG is relatively tolerant of subject movement, unlike most other neuroimaging techniques. Methods also exist for minimizing, and even eliminating, movement artifacts in EEG data.[16]

- EEG is silent, which allows for better study of the responses to auditory stimuli.

- EEG does not aggravate claustrophobia, unlike fMRI, PET, MRS, SPECT, and sometimes MEG[17]

- EEG does not involve exposure to high-intensity (>1 tesla) magnetic fields, as in some of the other techniques, especially MRI and MRS. These can cause a variety of undesirable issues with the data, and also prohibit use of these techniques with participants that have metal implants in their body, such as metal-containing pacemakers[18]

- EEG does not involve exposure to radioligands, unlike positron emission tomography.[19]

- ERP studies can be conducted with relatively simple paradigms, compared with, for example, block-design fMRI studies.

- EEG is noninvasive, unlike electrocorticography, which requires electrodes to be placed on the surface of the brain.

- EEG can detect covert processing (i.e., processing that does not require a response)[20]

- EEG can be used in subjects who are incapable of making a motor response[21]

- Some ERP components can be detected even when the subject is not attending to the stimuli

- Unlike other means of studying reaction time, ERPs can elucidate stages of processing (rather than just the final end result)[22]

- EEG is a powerful tool for tracking brain changes during different phases of life. EEG sleep analysis can indicate significant aspects of the timing of brain development, including evaluating adolescent brain maturation.[23]

- In EEG, the measured signal is better understood than in some other research techniques, e.g. the BOLD response in fMRI.

Disadvantages

- Low spatial resolution on the scalp. fMRI, for example, can directly display areas of the brain that are active, while EEG requires intense interpretation just to hypothesize what areas are activated by a particular response.[24]

- EEG poorly measures neural activity that occurs below the upper layers of the brain (the cortex).

- Unlike PET and MRS, cannot identify specific locations in the brain at which various neurotransmitters, drugs, etc. can be found.[19]

- It often takes a long time to connect a subject to EEG, as it requires precise placement of dozens of electrodes around the head and the use of various gels, saline solutions, and/or pastes to keep them in place (although a cap can be used). While the length of time differs depending on the specific EEG device used, as a general rule it takes considerably less time to prepare a subject for MEG, fMRI, MRS, and SPECT.

- Signal-to-noise ratio is poor, so sophisticated data analysis and relatively large numbers of subjects are needed to extract useful information from EEG[25]

With other neuroimaging techniques

Simultaneous EEG recordings and fMRI scans have been obtained successfully,[26][27] though successful simultaneous recording requires that several technical difficulties be overcome, such as the presence of ballistocardiographic artifact, MRI pulse artifact and the induction of electrical currents in EEG wires that move within the strong magnetic fields of the MRI. While challenging, these have been successfully overcome in a number of studies.[28]

MRI scanners produce detailed images by generating strong magnetic fields that may induce potentially harmful displacement force and torque. These fields can also produce potentially harmful radio-frequency heating and create image artifacts that render images useless. Because of these potential risks, only certain medical devices can be used in an MR environment.

Similarly, simultaneous recordings with MEG and EEG have also been conducted, which has several advantages over using either technique alone:

- EEG requires accurate information about certain aspects of the skull that can only be estimated, such as skull radius, and conductivities of various skull locations. MEG does not have this issue, and a simultaneous analysis allows this to be corrected for.

- MEG and EEG both detect activity below the surface of the cortex very poorly, and like EEG, the level of error increases with the depth below the surface of the cortex one attempts to examine. However, the errors are very different between the techniques, and combining them thus allows for correction of some of this noise.

- MEG has access to virtually no sources of brain activity below a few centimetres under the cortex. EEG, on the other hand, can receive signals from greater depth, albeit with a high degree of noise. Combining the two makes it easier to determine what in the EEG signal comes from the surface (since MEG is very accurate in examining signals from the surface of the brain), and what comes from deeper in the brain, thus allowing for analysis of deeper brain signals than either EEG or MEG on its own.[29]

EEG has also been combined with positron emission tomography. This provides the advantage of allowing researchers to see what EEG signals are associated with different drug actions in the brain.[31]

Mechanisms

The brain's electrical charge is maintained by billions of neurons.[32] Neurons are electrically charged (or "polarized") by membrane transport proteins that pump ions across their membranes. Neurons are constantly exchanging ions with the extracellular milieu, for example to maintain resting potential and to propagate action potentials. Ions of similar charge repel each other, and when many ions are pushed out of many neurons at the same time, they can push their neighbours, who push their neighbours, and so on, in a wave. This process is known as volume conduction. When the wave of ions reaches the electrodes on the scalp, it can push or pull electrons on the metal in the electrodes. Since metal conducts the push and pull of electrons easily, the difference in push or pull voltages between any two electrodes can be measured by a voltmeter. Recording these voltages over time gives us the EEG.[33]

The electric potential generated by an individual neuron is far too small to be picked up by EEG or MEG.[34] EEG activity therefore always reflects the summation of the synchronous activity of thousands or millions of neurons that have similar spatial orientation. If the cells do not have similar spatial orientation, their ions do not line up and create waves to be detected. Pyramidal neurons of the cortex are thought to produce the most EEG signal because they are well-aligned and fire together. Because voltage field gradients fall off with the square of distance, activity from deep sources is more difficult to detect than currents near the skull.[35]

Scalp EEG activity shows oscillations at a variety of frequencies. Several of these oscillations have characteristic frequency ranges, spatial distributions and are associated with different states of brain functioning (e.g., waking and the various sleep stages). These oscillations represent synchronized activity over a network of neurons. The neuronal networks underlying some of these oscillations are understood (e.g., the thalamocortical resonance underlying sleep spindles), while many others are not (e.g., the system that generates the posterior basic rhythm). Research that measures both EEG and neuron spiking finds the relationship between the two is complex, with a combination of EEG power in the gamma band and phase in the delta band relating most strongly to neuron spike activity.[36]

Method

Computer electroencephalograph Neurovisor-BMM 40

In conventional scalp EEG, the recording is obtained by placing electrodes on the scalp with a conductive gel or paste, usually after preparing the scalp area by light abrasion to reduce impedance due to dead skin cells. Many systems typically use electrodes, each of which is attached to an individual wire. Some systems use caps or nets into which electrodes are embedded; this is particularly common when high-density arrays of electrodes are needed.

Electrode locations and names are specified by the International 10–20 system[37] for most clinical and research applications (except when high-density arrays are used). This system ensures that the naming of electrodes is consistent across laboratories. In most clinical applications, 19 recording electrodes (plus ground and system reference) are used.[38] A smaller number of electrodes are typically used when recording EEG from neonates. Additional electrodes can be added to the standard set-up when a clinical or research application demands increased spatial resolution for a particular area of the brain. High-density arrays (typically via cap or net) can contain up to 256 electrodes more-or-less evenly spaced around the scalp.

Each electrode is connected to one input of a differential amplifier (one amplifier per pair of electrodes); a common system reference electrode is connected to the other input of each differential amplifier. These amplifiers amplify the voltage between the active electrode and the reference (typically 1,000–100,000 times, or 60–100 dB of voltage gain). In analog EEG, the signal is then filtered (next paragraph), and the EEG signal is output as the deflection of pens as paper passes underneath. Most EEG systems these days, however, are digital, and the amplified signal is digitized via an analog-to-digital converter, after being passed through an anti-aliasing filter. Analog-to-digital sampling typically occurs at 256–512 Hz in clinical scalp EEG; sampling rates of up to 20 kHz are used in some research applications.
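The correspondence between the amplification factors and the decibel figures quoted above follows from the standard definition of voltage gain in decibels, 20·log10(ratio). A minimal sketch follows; the 50 µV input and the 10,000× gain are illustrative assumptions, not values from a specific amplifier.

```python
import math

def voltage_gain_db(ratio):
    """Convert a voltage amplification ratio to decibels."""
    return 20.0 * math.log10(ratio)

def amplified_volts(input_microvolts, ratio):
    """Output voltage (in volts) for an input given in microvolts."""
    return input_microvolts * 1e-6 * ratio

for ratio in (1_000, 100_000):
    print(f"{ratio:>7}x  ->  {voltage_gain_db(ratio):.0f} dB")

# Hypothetical example: a 50 µV scalp signal amplified 10,000 times.
print(f"{amplified_volts(50, 10_000):.2f} V")
```

The two ends of the quoted range, 1,000× and 100,000×, correspond to 60 dB and 100 dB respectively.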

During the recording, a series of activation procedures may be used. These procedures may induce normal or abnormal EEG activity that might not otherwise be seen. These procedures include hyperventilation, photic stimulation (with a strobe light), eye closure, mental activity, sleep and sleep deprivation. During (inpatient) epilepsy monitoring, a patient's typical seizure medications may be withdrawn.

The digital EEG signal is stored electronically and can be filtered for display. Typical settings for the high-pass filter and the low-pass filter are 0.5–1 Hz and 35–70 Hz, respectively. The high-pass filter typically filters out slow artifact, such as electrogalvanic signals and movement artifact, whereas the low-pass filter filters out high-frequency artifacts, such as electromyographic signals. An additional notch filter is typically used to remove artifact caused by electrical power lines (60 Hz in the United States and 50 Hz in many other countries).[1]
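As an illustration of the notch filtering mentioned above, the following sketch removes a 60 Hz component with a second-order IIR ("biquad") notch. The filter design is an assumption: the text does not say which design EEG systems use, and the coefficient formulas here are the widely used Audio EQ Cookbook ones. The 500 Hz sampling rate, the quality factor of 30 and the test signals are likewise illustrative.

```python
import math

def notch_coefficients(f0, fs, q=30.0):
    """Biquad notch centred on f0 (Hz) for sampling rate fs (Hz)."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    # Coefficients normalised so that the leading denominator term is 1.
    b = [1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0]
    a = [1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0]
    return b, a

def apply_biquad(x, b, a):
    """Filter the sequence x with a direct-form I biquad."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))

fs = 500.0
t = [n / fs for n in range(2000)]                       # 4 seconds of samples
alpha_wave = [math.sin(2 * math.pi * 10 * tn) for tn in t]   # 10 Hz test tone
mains = [math.sin(2 * math.pi * 60 * tn) for tn in t]        # 60 Hz interference

b, a = notch_coefficients(60.0, fs)
tail = slice(1000, None)                                # skip the start-up transient
print("60 Hz RMS after notch:", rms(apply_biquad(mains, b, a)[tail]))
print("10 Hz RMS after notch:", rms(apply_biquad(alpha_wave, b, a)[tail]))
```

Once the start-up transient has decayed, the 60 Hz tone is almost entirely suppressed while the 10 Hz tone passes nearly unchanged, which is the behaviour wanted from a power-line notch.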

EEG signals can be captured with open-source hardware such as OpenBCI, and the signal can be processed with freely available EEG software such as EEGLAB or the Neurophysiological Biomarker Toolbox.

As part of an evaluation for epilepsy surgery, it may be necessary to insert electrodes near the surface of the brain, under the surface of the dura mater. This is accomplished via burr hole or craniotomy. This is referred to variously as "electrocorticography (ECoG)", "intracranial EEG (I-EEG)" or "subdural EEG (SD-EEG)". Depth electrodes may also be placed into brain structures, such as the amygdala or hippocampus, which are common epileptic foci and may not be "seen" clearly by scalp EEG. The electrocorticographic signal is processed in the same manner as digital scalp EEG (above), with a couple of caveats. ECoG is typically recorded at higher sampling rates than scalp EEG because of the requirements of the Nyquist theorem: the subdural signal contains a larger proportion of higher-frequency components. Also, many of the artifacts that affect scalp EEG do not impact ECoG, and therefore display filtering is often not needed.
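The sampling-rate requirement can be made concrete with a small sketch. The sampling theorem requires the sampling rate to exceed twice the highest frequency present; a component above half the sampling rate is not lost but "folds" to a lower apparent frequency. The 256 Hz rate is the clinical figure quoted earlier; the 200 Hz and 300 Hz components are hypothetical.

```python
def nyquist_frequency(fs):
    """Highest frequency (Hz) representable at sampling rate fs (Hz)."""
    return fs / 2.0

def aliased_frequency(f, fs):
    """Apparent frequency (Hz) of a component at f Hz when sampled at fs Hz."""
    f_mod = f % fs
    return min(f_mod, fs - f_mod)

fs = 256
print("Nyquist frequency:", nyquist_frequency(fs), "Hz")
for f in (100, 200, 300):
    print(f"{f} Hz component appears at {aliased_frequency(f, fs)} Hz")
```

At 256 Hz, a 100 Hz component is represented correctly, but hypothetical 200 Hz and 300 Hz components would appear at 56 Hz and 44 Hz. This is why recordings rich in high-frequency content call for a higher sampling rate, together with the anti-aliasing filter applied before digitization.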

A typical adult human EEG signal is about 10 µV to 100 µV in amplitude when measured from the scalp[39] and is about 10–20 mV when measured from subdural electrodes.

Since an EEG voltage signal represents a difference between the voltages at two electrodes, the display of the EEG for the reading encephalographer may be set up in one of several ways. The representation of the EEG channels is referred to as a montage.

- Sequential montage: Each channel (i.e., waveform) represents the difference between two adjacent electrodes. The entire montage consists of a series of these channels. For example, the channel "Fp1-F3" represents the difference in voltage between the Fp1 electrode and the F3 electrode. The next channel in the montage, "F3-C3", represents the voltage difference between F3 and C3, and so on through the entire array of electrodes.

- Referential montage: Each channel represents the difference between a certain electrode and a designated reference electrode. There is no standard position for this reference; it is, however, at a different position than the "recording" electrodes. Midline positions are often used because they do not amplify the signal in one hemisphere vs. the other. Another popular reference is "linked ears", which is a physical or mathematical average of electrodes attached to both earlobes or mastoids.

- Average reference montage: The outputs of all of the amplifiers are summed and averaged, and this averaged signal is used as the common reference for each channel.

- Laplacian montage: Each channel represents the difference between an electrode and a weighted average of the surrounding electrodes.[40]
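The four montages above are all re-referencing operations on the same recorded voltages, which the following sketch illustrates for a single time point. The voltage values, the choice of Cz as reference, the neighbours chosen for the Laplacian and the function names are all hypothetical; real systems apply the same arithmetic to every sample of every channel.

```python
def sequential_montage(samples, chain):
    """Differences between adjacent electrodes along a chain."""
    return {f"{a}-{b}": samples[a] - samples[b] for a, b in zip(chain, chain[1:])}

def referential_montage(samples, reference):
    """Each electrode minus one designated reference electrode."""
    return {
        f"{name}-{reference}": value - samples[reference]
        for name, value in samples.items()
        if name != reference
    }

def average_reference_montage(samples):
    """Each electrode minus the mean of all electrodes."""
    mean = sum(samples.values()) / len(samples)
    return {f"{name}-avg": value - mean for name, value in samples.items()}

def laplacian_channel(samples, center, neighbor_weights):
    """One electrode minus a weighted average of its neighbours."""
    total = sum(neighbor_weights.values())
    weighted = sum(samples[n] * w for n, w in neighbor_weights.items()) / total
    return samples[center] - weighted

# Hypothetical voltages (µV) at one instant, relative to the system reference.
samples = {"Fp1": 12.0, "F3": 9.0, "C3": 5.0, "P3": 2.0, "O1": 4.0, "Cz": 1.0}

print(sequential_montage(samples, ["Fp1", "F3", "C3", "P3", "O1"]))
print(referential_montage(samples, "Cz"))
print(average_reference_montage(samples))
print(laplacian_channel(samples, "C3", {"F3": 1.0, "P3": 1.0, "Cz": 1.0}))
```

Because every montage is derived from the same underlying voltages, a digital recording can be redisplayed in any of them after the fact.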

The EEG is read by a clinical neurophysiologist or neurologist (depending on local custom and law regarding medical specialities), optimally one who has specific training in the interpretation of EEGs for clinical purposes. This is done by visual inspection of the waveforms, called graphoelements. The use of computer signal processing of the EEG (so-called quantitative electroencephalography) is somewhat controversial when used for clinical purposes, although there are many research uses.

Limitations

EEG has several limitations. Most important is its poor spatial resolution.[41] EEG is most sensitive to a particular set of post-synaptic potentials: those generated in superficial layers of the cortex, on the crests of gyri directly abutting the skull and radial to the skull. Dendrites that are deeper in the cortex, inside sulci, in midline or deep structures (such as the cingulate gyrus or hippocampus), or that produce currents tangential to the skull, contribute far less to the EEG signal.

EEG recordings do not directly capture axonal action potentials. An action potential can be accurately represented as a current quadrupole, meaning that the resulting field decreases more rapidly than the ones produced by the current dipole of post-synaptic potentials.[42] In addition, since EEGs represent averages of thousands of neurons, a large population of cells in synchronous activity is necessary to cause a significant deflection on the recordings. Action potentials are very fast and, as a consequence, the chances of field summation are slim. However, neural backpropagation, as a typically longer dendritic current dipole, can be picked up by EEG electrodes and is a reliable indication of the occurrence of neural output.

Not only do EEGs capture dendritic currents almost exclusively as opposed to axonal currents, they also show a preference for activity in populations of parallel dendrites transmitting current in the same direction at the same time. Pyramidal neurons of cortical layers II/III and V extend apical dendrites to layer I. Currents moving up or down these processes underlie most of the signals produced by electroencephalography.[43]

Therefore, EEG provides information with a large bias to select neuron types, and generally should not be used to make claims about global brain activity. The meninges, cerebrospinal fluid and skull "smear" the EEG signal, obscuring its intracranial source.

It is mathematically impossible to reconstruct a unique intracranial current source for a given EEG signal,[1] as some currents produce potentials that cancel each other out. This is referred to as the inverse problem. However, much work has been done to produce remarkably good estimates of, at least, a localized electric dipole that represents the recorded currents.

EEG vs fMRI, fNIRS and PET

EEG has several strong points as a tool for exploring brain activity. EEGs can detect changes over milliseconds, which is excellent considering an action potential takes approximately 0.5–130 milliseconds to propagate across a single neuron, depending on the type of neuron.[44] Other methods of looking at brain activity, such as PET and fMRI, have time resolution between seconds and minutes. EEG measures the brain's electrical activity directly, while other methods record changes in blood flow (e.g., SPECT, fMRI) or metabolic activity (e.g., PET, NIRS), which are indirect markers of brain electrical activity. EEG can be used simultaneously with fMRI so that high-temporal-resolution data can be recorded at the same time as high-spatial-resolution data. However, since the data derived from each occurs over a different time course, the data sets do not necessarily represent exactly the same brain activity. There are technical difficulties associated with combining these two modalities, including the need to remove the MRI gradient artifact present during MRI acquisition and the ballistocardiographic artifact (resulting from the pulsatile motion of blood and tissue) from the EEG. Furthermore, currents can be induced in moving EEG electrode wires due to the magnetic field of the MRI.

EEG can be used simultaneously with NIRS without major technical difficulties. There is no influence of these modalities on each other and a combined measurement can give useful information about electrical activity as well as local hemodynamics.

EEG vs MEG

EEG reflects correlated synaptic activity caused by post-synaptic potentials of cortical neurons. The ionic currents involved in the generation of fast action potentials may not contribute greatly to the averaged field potentials representing the EEG.[34][45] More specifically, the scalp electrical potentials that produce EEG are generally thought to be caused by the extracellular ionic currents caused by dendritic electrical activity, whereas the fields producing magnetoencephalographic signals[15] are associated with intracellular ionic currents.[46]

EEG can be recorded at the same time as MEG so that data from these complementary high-time-resolution techniques can be combined.

Studies on numerical modeling of EEG and MEG have also been done.[47]

Normal activity

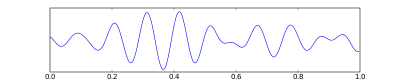

- A sample of human EEG in the resting state. Left: EEG traces (horizontal – time in seconds; vertical – amplitudes, scale 100 μV). Right: power spectra of shown signals (vertical lines – 10 and 20 Hz, scale is linear). 80–90% of people have prominent sinusoidal-like waves with frequencies in the 8–12 Hz range – alpha rhythm. Others (like this) lack this type of activity.

- A sample of human EEG with prominent resting state activity – alpha rhythm. Left: EEG traces (horizontal – time in seconds; vertical – amplitudes, scale 100 μV). Right: power spectra of shown signals (vertical lines – 10 and 20 Hz, scale is linear). Alpha rhythm consists of sinusoidal-like waves with frequencies in the 8–12 Hz range (11 Hz in this case), more prominent in posterior sites. The alpha range is shown in red on the power spectrum graph.

- Samples of the main types of artifacts in human EEG. 1: Electrooculographic artifact caused by the excitation of the eyeball's muscles (related to blinking, for example). Big-amplitude, slow, positive wave prominent in frontal electrodes. 2: Electrode artifact caused by bad contact (and thus higher impedance) between the P3 electrode and skin. 3: Swallowing artifact. 4: Common reference electrode artifact caused by bad contact between the reference electrode and skin. Huge wave similar in all channels.

Most of the cerebral signal observed in the scalp EEG falls in the range of 1–20 Hz (activity below or above this range is likely to be artifactual, under standard clinical recording techniques). Waveforms are subdivided into bandwidths known as alpha, beta, theta, and delta to signify the majority of the EEG used in clinical practice.[48]

| Band | Frequency (Hz) | Location | Normally | Pathologically |
|---|---|---|---|---|
| Delta | < 4 | Frontally in adults, posteriorly in children; high-amplitude waves | | |
| Theta | 4–7 | Found in locations not related to task at hand | | |
| Alpha | 8–15 | Posterior regions of head, both sides, higher in amplitude on dominant side. Central sites (C3–C4) at rest | | |
| Beta | 16–31 | Both sides, symmetrical distribution, most evident frontally; low-amplitude waves | | |
| Gamma | > 32 | Somatosensory cortex | | |
| Mu | 8–12 | Sensorimotor cortex | | |

The practice of using only whole numbers in the definitions comes from practical considerations in the days when only whole cycles could be counted on paper records. This leads to gaps in the definitions, as seen elsewhere on this page. The theoretical definitions have always been more carefully defined to include all frequencies. Unfortunately there is no agreement in standard reference works on what these ranges should be – values for the upper end of alpha and lower end of beta include 12, 13, 14 and 15. If the threshold is taken as 14 Hz, then the slowest beta wave has about the same duration as the longest spike (70 ms), which makes this the most useful value.

| Band | Frequency (Hz) |
|---|---|
| Delta | < 4 |
| Theta | ≥ 4 and < 8 |
| Alpha | ≥ 8 and < 14 |
| Beta | ≥ 14 |
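The gap-free definitions in this table map directly onto a simple classification rule, sketched below. The function names are illustrative, and the 14 Hz alpha/beta boundary is the one adopted in the table; as noted above, reference works differ on this value. The helper `period_ms` shows the arithmetic behind the roughly 70 ms duration of one cycle at 14 Hz.

```python
def classify_band(frequency_hz):
    """Return the band name for a frequency, using the gap-free table above."""
    if frequency_hz < 0:
        raise ValueError("frequency must be non-negative")
    if frequency_hz < 4:
        return "delta"
    if frequency_hz < 8:
        return "theta"
    if frequency_hz < 14:
        return "alpha"
    return "beta"

def period_ms(frequency_hz):
    """Duration of one cycle in milliseconds."""
    return 1000.0 / frequency_hz

for f in (2, 4, 7.5, 10, 14, 25):
    print(f"{f} Hz -> {classify_band(f)}")

print(f"one cycle at 14 Hz lasts {period_ms(14):.1f} ms")
```

Using half-open intervals (≥ lower bound, < upper bound) assigns every frequency, including non-integer values such as 7.5 Hz, to exactly one band.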

Others sometimes divide the bands into sub-bands for the purposes of data analysis.

Human EEG with prominent alpha-rhythm

Wave patterns

- Delta is the frequency range up to 4 Hz. Delta waves tend to be the highest in amplitude and the slowest. Delta is seen normally in adults in slow-wave sleep and in babies. It may occur focally with subcortical lesions and in general distribution with diffuse lesions, metabolic encephalopathy, hydrocephalus or deep midline lesions. It is usually most prominent frontally in adults (e.g. FIRDA – frontal intermittent rhythmic delta) and posteriorly in children (e.g. OIRDA – occipital intermittent rhythmic delta).

- Theta is the frequency range from 4 Hz to 7 Hz. Theta is seen normally in young children. It may be seen in drowsiness or arousal in older children and adults; it can also be seen in meditation.[56] Excess theta for age represents abnormal activity. It can be seen as a focal disturbance in focal subcortical lesions; it can be seen in generalized distribution in diffuse disorder or metabolic encephalopathy or deep midline disorders or some instances of hydrocephalus. On the contrary this range has been associated with reports of relaxed, meditative, and creative states.

- Alpha is the frequency range from 7 Hz to 13 Hz.[57] Hans Berger named the first rhythmic EEG activity he saw as the "alpha wave". This was the "posterior basic rhythm" (also called the "posterior dominant rhythm" or the "posterior alpha rhythm"), seen in the posterior regions of the head on both sides, higher in amplitude on the dominant side. It emerges with closing of the eyes and with relaxation, and attenuates with eye opening or mental exertion. The posterior basic rhythm is actually slower than 8 Hz in young children (therefore technically in the theta range).

- In addition to the posterior basic rhythm, there are other normal alpha rhythms such as the mu rhythm (alpha activity in the contralateral sensory and motor cortical areas) that emerges when the hands and arms are idle; and the "third rhythm" (alpha activity in the temporal or frontal lobes).[58][59] Alpha can be abnormal; for example, an EEG that has diffuse alpha occurring in coma and is not responsive to external stimuli is referred to as "alpha coma".

- Beta is the frequency range from 14 Hz to about 30 Hz. It is seen usually on both sides in symmetrical distribution and is most evident frontally. Beta activity is closely linked to motor behavior and is generally attenuated during active movements.[60] Low-amplitude beta with multiple and varying frequencies is often associated with active, busy or anxious thinking and active concentration. Rhythmic beta with a dominant set of frequencies is associated with various pathologies, such as Dup15q syndrome, and drug effects, especially benzodiazepines. It may be absent or reduced in areas of cortical damage. It is the dominant rhythm in patients who are alert or anxious or who have their eyes open.

- Gamma is the frequency range approximately 30–100 Hz. Gamma rhythms are thought to represent binding of different populations of neurons together into a network for the purpose of carrying out a certain cognitive or motor function.[1]

- Mu range is 8–13 Hz and partly overlaps with other frequencies. It reflects the synchronous firing of motor neurons in the rest state. Mu suppression is thought to reflect motor mirror neuron systems: when an action is observed, the pattern extinguishes, possibly because the normal neuronal system and the mirror neuron system "go out of sync" and interfere with each other.[54]

Some features of the EEG are transient rather than rhythmic. Spikes and sharp waves may represent seizure activity or interictal activity in individuals with epilepsy or a predisposition toward epilepsy. Other transient features are normal: vertex waves and sleep spindles are seen in normal sleep.

Note that there are types of activity that are statistically uncommon, but not associated with dysfunction or disease. These are often referred to as "normal variants". The mu rhythm is an example of a normal variant.

The normal electroencephalogram (EEG) varies by age. The neonatal EEG is quite different from the adult EEG. The EEG in childhood generally has slower frequency oscillations than the adult EEG.

The normal EEG also varies depending on state. The EEG is used along with other measurements (EOG, EMG) to define sleep stages in polysomnography. Stage I sleep (equivalent to drowsiness in some systems) appears on the EEG as drop-out of the posterior basic rhythm. There can be an increase in theta frequencies. Santamaria and Chiappa cataloged a variety of the patterns associated with drowsiness. Stage II sleep is characterized by sleep spindles – transient runs of rhythmic activity in the 12–14 Hz range (sometimes referred to as the "sigma" band) that have a frontal-central maximum. Most of the activity in Stage II is in the 3–6 Hz range. Stage III and IV sleep are defined by the presence of delta frequencies and are often referred to collectively as "slow-wave sleep". Stages I–IV comprise non-REM (or "NREM") sleep. The EEG in REM (rapid eye movement) sleep appears somewhat similar to the awake EEG.

EEG under general anesthesia depends on the type of anesthetic employed. With halogenated anesthetics, such as halothane, or intravenous agents, such as propofol, a rapid (alpha or low beta), nonreactive EEG pattern is seen over most of the scalp, especially anteriorly; in some older terminology this was known as a WAR (widespread anterior rapid) pattern, contrasted with a WAIS (widespread slow) pattern associated with high doses of opiates. Anesthetic effects on EEG signals are beginning to be understood at the level of drug actions on different kinds of synapses and the circuits that allow synchronized neuronal activity (see: http://www.stanford.edu/group/maciverlab/).

Artifacts

Biological artifacts

Main types of artifacts in human EEG

Electrical signals that are detected along the scalp by an EEG but originate from non-cerebral sources are called artifacts. EEG data is almost always contaminated by such artifacts. The amplitude of artifacts can be quite large relative to the amplitude of the cortical signals of interest. This is one of the reasons why it takes considerable experience to correctly interpret EEGs clinically. Some of the most common types of biological artifacts include:

- Eye-induced artifacts (includes eye blinks, eye movements and extra-ocular muscle activity)

- ECG (cardiac) artifacts

- EMG (muscle activation)-induced artifacts

- Glossokinetic artifacts

Eyelid fluttering artifacts of a characteristic type were previously called Kappa rhythm (or Kappa waves). They are usually seen in the prefrontal leads, that is, just over the eyes. Sometimes they are seen with mental activity. They are usually in the Theta (4–7 Hz) or Alpha (7–14 Hz) range. They were named because they were believed to originate from the brain. Later study revealed they were generated by rapid fluttering of the eyelids, sometimes so minute that it was difficult to see. They are in fact noise in the EEG reading, and should not technically be called a rhythm or wave. Therefore, current usage in electroencephalography refers to the phenomenon as an eyelid fluttering artifact, rather than a Kappa rhythm (or wave).[66]

Some of these artifacts can be useful in various applications. The EOG signals, for instance, can be used to detect[64] and track eye movements, which are very important in polysomnography and also in conventional EEG for assessing possible changes in alertness, drowsiness or sleep.

ECG artifacts are quite common and can be mistaken for spike activity. Because of this, modern EEG acquisition commonly includes a one-channel ECG from the extremities. This also allows the EEG to identify cardiac arrhythmias that are an important differential diagnosis to syncope or other episodic/attack disorders.

Glossokinetic artifacts are caused by the potential difference between the base and the tip of the tongue. Minor tongue movements can contaminate the EEG, especially in parkinsonian and tremor disorders.

Environmental artifacts

In addition to artifacts generated by the body, many artifacts originate from outside the body. Movement by the patient, or even just settling of the electrodes, may cause electrode pops, spikes originating from a momentary change in the impedance of a given electrode. Poor grounding of the EEG electrodes can cause significant 50 or 60 Hz artifact, depending on the local power system's frequency. A third source of possible interference can be the presence of an IV drip; such devices can cause rhythmic, fast, low-voltage bursts, which may be confused for spikes.

Artifact correction

Recently, independent component analysis (ICA) techniques have been used to correct or remove EEG contaminants.[64][67][68][69][70][71] These techniques attempt to "unmix" the EEG signals into some number of underlying components. There are many source separation algorithms, often assuming various behaviors or natures of EEG. Regardless, the principle behind any particular method usually allows "remixing" only those components that would result in "clean" EEG by nullifying (zeroing) the weight of unwanted components. Fully automated artifact rejection methods, which use ICA, have also been developed.[72]

In the last few years, by comparing data from paralysed and unparalysed subjects, EEG contamination by muscle has been shown to be far more prevalent than had previously been realized, particularly in the gamma range above 20 Hz.[73] However, Surface Laplacian has been shown to be effective in eliminating muscle artifact, particularly for central electrodes, which are further from the strongest contaminants.[74] The combination of Surface Laplacian with automated techniques for removing muscle components using ICA proved particularly effective in a follow-up study.[75]
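The "unmix, zero, remix" principle used by ICA-based correction can be illustrated with a toy two-channel example. This is only a sketch of the remixing step: it assumes the mixing matrix is already known, whereas a real ICA algorithm would have to estimate it from the recording. All names and numbers (`remove_component`, the `brain` and `blink` signals, the mixing weights) are hypothetical.

```python
# Toy model: a two-channel recording X is treated as X = A * S, where
# the rows of S are underlying components and A is the mixing matrix.
# Assumption: A is known here; real ICA estimates it from the data.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def inverse_2x2(m):
    """Invert a 2x2 matrix given as a list of two rows."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("mixing matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def remove_component(recording, mixing, unwanted):
    """Unmix the recording, zero one component, and remix the rest.

    recording: 2 channels x n samples
    mixing:    2 channels x 2 components
    unwanted:  index of the component to discard
    """
    unmixing = inverse_2x2(mixing)
    components = matmul(unmixing, recording)
    components[unwanted] = [0.0] * len(components[unwanted])
    return matmul(mixing, components)


if __name__ == "__main__":
    brain = [1.0, -1.0, 2.0, 0.5]      # hypothetical cortical component
    blink = [0.0, 5.0, 0.0, 0.0]       # hypothetical eye-blink component
    mixing = [[1.0, 0.8], [0.5, 0.2]]  # hypothetical mixing weights
    recording = matmul(mixing, [brain, blink])
    print(remove_component(recording, mixing, unwanted=1))
```

With the blink component zeroed, each cleaned channel contains only its weighted share of the cortical component.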

Abnormal activity

Abnormal activity can broadly be separated into epileptiform and non-epileptiform activity. It can also be separated into focal or diffuse.

Focal epileptiform discharges represent fast, synchronous potentials in a large number of neurons in a somewhat discrete area of the brain. These can occur as interictal activity, between seizures, and represent an area of cortical irritability that may be predisposed to producing epileptic seizures. Interictal discharges are not wholly reliable for determining whether a patient has epilepsy or where their seizures might originate.

Generalized epileptiform discharges often have an anterior maximum, but these are seen synchronously throughout the entire brain. They are strongly suggestive of a generalized epilepsy.

Focal non-epileptiform abnormal activity may occur over areas of the brain where there is focal damage of the cortex or white matter. It often consists of an increase in slow frequency rhythms and/or a loss of normal higher frequency rhythms. It may also appear as focal or unilateral decrease in amplitude of the EEG signal.

Diffuse non-epileptiform abnormal activity may manifest as diffuse abnormally slow rhythms or bilateral slowing of normal rhythms, such as the posterior basic rhythm (PBR).

Intracortical electrodes and subdural electrodes can be used in tandem to distinguish artifact from epileptiform and other severe neurological events.

More advanced measures of abnormal EEG signals have also recently received attention as possible biomarkers for different disorders such as Alzheimer's disease.[76]

Remote communication

The United States Army Research Office budgeted $4 million in 2009 to researchers at the University of California, Irvine to develop EEG processing techniques to identify correlates of imagined speech and intended direction to enable soldiers on the battlefield to communicate via computer-mediated reconstruction of team members' EEG signals, in the form of understandable signals such as words.[77]

Economics

Inexpensive EEG devices exist for the low-cost research and consumer markets. Recently, a few companies have miniaturized medical grade EEG technology to create versions accessible to the general public. Some of these companies have built commercial EEG devices retailing for less than US$100.

- In 2004 OpenEEG released its ModularEEG as open source hardware. Compatible open source software includes a game for balancing a ball.

- In 2007 NeuroSky released the first affordable consumer based EEG along with the game NeuroBoy. This was also the first large scale EEG device to use dry sensor technology.[78]

- In 2008 OCZ Technology developed a device for use in video games relying primarily on electromyography.

- In 2008 the Final Fantasy developer Square Enix announced that it was partnering with NeuroSky to create a game, Judecca.[79][80]

- In 2009 Mattel partnered with NeuroSky to release the Mindflex, a game that used an EEG to steer a ball through an obstacle course. It is by far the best-selling consumer EEG to date.[79][81]

- In 2009 Uncle Milton Industries partnered with NeuroSky to release the Star Wars Force Trainer, a game designed to create the illusion of possessing the Force.[79][82]

- In 2009 Emotiv released the EPOC, a 14-channel EEG device. The EPOC is the first commercial BCI not to use dry sensor technology, requiring users to apply a saline solution to electrode pads (which need remoistening after an hour or two of use).[83]

- In 2010, NeuroSky added a blink and electromyography function to the MindSet.[84]

- In 2011, NeuroSky released the MindWave, an EEG device designed for educational purposes and games.[85][86] The MindWave won the Guinness Book of World Records award for "Heaviest machine moved using a brain control interface".[87]

- In 2012, a Japanese gadget project, neurowear, released Necomimi: a headset with motorized cat ears. The headset is a NeuroSky MindWave unit with two motors on the headband where a cat's ears might be. Slipcovers shaped like cat ears sit over the motors so that the ears move to reflect the emotional states the device registers. For example, the ears fall to the sides when the wearer is relaxed and perk up when the wearer is excited again.

- In 2014, OpenBCI released an eponymous open source brain-computer interface after a successful Kickstarter campaign in 2013. The basic OpenBCI has 8 channels, expandable to 16, and supports EEG, EKG, and EMG. The OpenBCI is based on the Texas Instruments ADS1299 IC and the Arduino or PIC microcontroller, and costs $399 for the basic version. It uses standard metal cup electrodes and conductive paste.

- In 2015, Mind Solutions Inc released the smallest consumer BCI to date, the NeuroSync. This device functions as a dry sensor at a size no larger than a Bluetooth ear piece.[88]

- In 2015, the Chinese company Macrotellect released BrainLink Pro and BrainLink Lite, consumer grade EEG wearable products supported by 20 brain fitness enhancement apps on the Apple and Android app stores.[89]

Future research

The EEG has been used for many purposes besides the conventional uses of clinical diagnosis and conventional cognitive neuroscience. An early use was during World War II by the U.S. Army Air Corps to screen out pilots in danger of having seizures;[90] long-term EEG recordings in epilepsy patients are still used today for seizure prediction. Neurofeedback remains an important extension, and in its most advanced form is also attempted as the basis of brain computer interfaces. The EEG is also used quite extensively in the field of neuromarketing.

The EEG is altered by drugs that affect brain functions, the chemicals that are the basis for psychopharmacology. Berger's early experiments recorded the effects of drugs on EEG. The science of pharmaco-electroencephalography has developed methods to identify substances that systematically alter brain functions for therapeutic and recreational use.

Honda is attempting to develop a system to enable an operator to control its Asimo robot using EEG, a technology it eventually hopes to incorporate into its automobiles.[91]

EEGs have been used as evidence in criminal trials in the Indian state of Maharashtra.[92][93]

Much research is currently being carried out to make EEG devices smaller, more portable and easier to use. So-called "wearable EEG" is based upon creating low-power wireless collection electronics and "dry" electrodes which do not require a conductive gel to be used.[94] Wearable EEG aims to provide small EEG devices which are present only on the head and which can record EEG for days, weeks, or months at a time, such as ear-EEG. Such prolonged and easy-to-use monitoring could make a step change in the diagnosis of chronic conditions such as epilepsy, and greatly improve the end-user acceptance of BCI systems.[95] Research is also being carried out on identifying specific solutions to increase the battery lifetime of wearable EEG devices through the use of data reduction. For example, in the context of epilepsy diagnosis, data reduction has been used to extend the battery lifetime of wearable EEG devices by intelligently selecting, and only transmitting, diagnostically relevant EEG data.[96]
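The idea of data reduction by selective transmission can be sketched as follows. The selection rule used here, a simple peak-to-peak amplitude threshold per epoch, is a hypothetical stand-in chosen for brevity; it is not the method of the cited work, and the function names and sample values are likewise illustrative.

```python
# Hypothetical selection rule: an epoch is considered worth transmitting
# only if its peak-to-peak amplitude exceeds a threshold. Practical
# systems would use a far more elaborate detector.

def split_epochs(samples, epoch_len):
    """Split a list of samples into consecutive full-length epochs."""
    return [samples[i:i + epoch_len]
            for i in range(0, len(samples) - epoch_len + 1, epoch_len)]


def select_epochs(epochs, threshold_uv):
    """Return (index, epoch) pairs whose peak-to-peak amplitude exceeds threshold_uv."""
    return [(i, e) for i, e in enumerate(epochs)
            if max(e) - min(e) > threshold_uv]


def reduction_ratio(epochs, selected):
    """Fraction of epochs that would not be transmitted."""
    return 1.0 - len(selected) / len(epochs)


if __name__ == "__main__":
    # Made-up samples in microvolts: quiet, large transient, quiet.
    samples = [1, 2, 1, 2, 0, 90, -80, 3, 2, 1, 2, 1]
    epochs = split_epochs(samples, epoch_len=4)
    selected = select_epochs(epochs, threshold_uv=100)
    print(selected, reduction_ratio(epochs, selected))
```

In this toy recording only the middle epoch is kept, so two thirds of the data would never be sent over the radio link.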

EEG signals from musical performers were used to create instant compositions and one CD by the Brainwave Music Project, run at the Computer Music Center at Columbia University by Brad Garton and Dave Soldier.