Waymo Chrysler Pacifica Hybrid undergoing testing in the San Francisco Bay Area.

Autonomous racing car on display at the 2017 New York City ePrix.

Autonomous cars combine a variety of techniques to perceive their surroundings, including radar, laser light, GPS, odometry, and computer vision. Advanced control systems interpret sensory information to identify appropriate navigation paths, as well as obstacles and relevant signage.

The potential benefits of autonomous cars include reduced mobility and infrastructure costs, increased safety, increased mobility, increased customer satisfaction, and reduced crime. These benefits also include a potentially significant reduction in traffic collisions, the resulting injuries, and related costs, including less need for insurance. Autonomous cars are predicted to increase traffic flow; provide enhanced mobility for children, the elderly, the disabled, and the poor; relieve travelers from driving and navigation chores; lower fuel consumption; significantly reduce the need for parking space; reduce crime; and facilitate business models for transportation as a service, especially via the sharing economy. Taken together, these effects suggest that the technology could be highly disruptive.

In spite of the various potential benefits of increased vehicle automation, there are unresolved problems, such as safety, technology issues, disputes concerning liability,[14][15] resistance by individuals to forfeiting control of their cars,[16] customer concern about the safety of driverless cars,[17] implementation of a legal framework and establishment of government regulations; risk of loss of privacy and security concerns, such as hackers or terrorism; concern about the resulting loss of driving-related jobs in the road transport industry; and risk of increased suburbanization as travel becomes less costly and time-consuming. Many of these issues arise because autonomous vehicles would, for the first time, allow computer-controlled ground vehicles to roam freely, raising many related safety and security concerns, and even moral ones.

History

General Motors' Firebird II of the 1950s was described as having an "electronic brain" that allowed it to move into a lane with a metal conductor and follow it along.

1960 Citroën DS19, modified by TRL for automatic guidance experiments, on display at the Science Museum, London.[18]

Experiments have been conducted on automating driving since at least the 1920s;[19] promising trials took place in the 1950s. The first truly autonomous car was developed in 1977 by the Tsukuba Mechanical Engineering Laboratory in Japan. The vehicle tracked white street markers, which were interpreted by two cameras fitted onto the vehicle, using analog computer technology for signal processing. The Tsukuba autonomous car was able to achieve speeds of up to 30 kilometres per hour (19 mph), with the support of an elevated rail.[20][21] Autonomous prototype cars appeared in the 1980s, with Carnegie Mellon University's Navlab[22] and ALV[23][24] projects funded by DARPA starting in 1984, and Mercedes-Benz and Bundeswehr University Munich's EUREKA Prometheus Project[25] in 1987. By 1985 the ALV had demonstrated self-driving speeds on two-lane roads of 31 kilometres per hour (19 mph), with obstacle avoidance added in 1986 and off-road driving in day and nighttime conditions by 1987.[26] From the 1960s up through the second DARPA Grand Challenge in 2005, autonomous vehicle research in the U.S. was primarily funded by DARPA, the U.S. Army, and the U.S. Navy, yielding incremental advances in speeds, driving competence in more complex conditions, controls, and sensor systems.[27] Since then, numerous companies and research organizations have developed prototypes.

The U.S. Congress allocated $650 million in 1991 for research on the National Automated Highway System, which demonstrated autonomous driving through a combination of automation embedded in the highway with autonomous technology in vehicles and cooperative networking between the vehicles and with the highway infrastructure. The program concluded with a successful demonstration in 1997 but without clear direction or funding to implement the system on a larger scale.[37] Partly funded by the National Automated Highway System and DARPA, the Carnegie Mellon University Navlab drove 4,584 kilometres (2,848 mi) across America in 1995, 4,501 kilometres (2,797 mi) or 98% of it autonomously.[38] Navlab's record stood unmatched for two decades until 2015, when Delphi surpassed it by piloting an Audi, augmented with Delphi technology, over 5,472 kilometres (3,400 mi) through 15 states while remaining in self-driving mode 99% of the time.[39] In 2015, the US states of Nevada, Florida, California, Virginia, and Michigan, together with Washington, D.C., allowed the testing of autonomous cars on public roads.[40]

In 2017, Audi stated that its latest A8 would be autonomous at speeds of up to 60 kilometres per hour (37 mph) using its "Audi AI". The driver would not have to do safety checks such as frequently gripping the steering wheel. The Audi A8 was claimed to be the first production car to reach level 3 autonomous driving, and Audi would be the first manufacturer to use laser scanners in addition to cameras and ultrasonic sensors for its system.[41]

In November 2017, Waymo announced that it had begun testing driverless cars without a safety driver in the driver position;[42] however, an employee was still present in the car. In July 2018, Waymo announced that its test vehicles had traveled autonomously for over 8,000,000 miles (13,000,000 km), increasing by 1,000,000 miles (1,600,000 kilometres) per month.[43]

Definitions

Because these technologies involve new and diverse concepts, they have required a precise vocabulary.

Autonomous vs. automated

Autonomous means self-governing.[44] Many historical projects related to vehicle autonomy have been automated (made automatic), relying heavily on artificial aids in their environment, such as magnetic strips. Autonomous control implies satisfactory performance under significant uncertainties in the environment and the ability to compensate for system failures without external intervention.[44]

One approach is to implement communication networks both in the immediate vicinity (for collision avoidance) and further away (for congestion management). Such outside influences in the decision process reduce an individual vehicle's autonomy, while still not requiring human intervention.

Wood et al. (2012) wrote, "This Article generally uses the term 'autonomous,' instead of the term 'automated.' " The term "autonomous" was chosen "because it is the term that is currently in more widespread use (and thus is more familiar to the general public). However, the latter term is arguably more accurate. 'Automated' connotes control or operation by a machine, while 'autonomous' connotes acting alone or independently. Most of the vehicle concepts (that we are currently aware of) have a person in the driver’s seat, utilize a communication connection to the Cloud or other vehicles, and do not independently select either destinations or routes for reaching them. Thus, the term 'automated' would more accurately describe these vehicle concepts".[45] As of 2017, most commercial projects focused on autonomous vehicles that did not communicate with other vehicles or with an enveloping management regime.

Classification

Tesla Autopilot system is considered to be an SAE level 2 system.[46]

A classification system based on six different levels (ranging from fully manual to fully automated systems) was published in 2014 by SAE International, an automotive standardization body, as J3016, Taxonomy and Definitions for Terms Related to On-Road Motor Vehicle Automated Driving Systems. This classification system is based on the amount of driver intervention and attentiveness required, rather than the vehicle capabilities, although these are very loosely related. In the United States in 2013, the National Highway Traffic Safety Administration (NHTSA) released a formal classification system,[49] but abandoned this system in favor of the SAE standard in 2016. Also in 2016, SAE updated its classification, called J3016_201609.[50]

Levels of driving automation

In SAE's autonomy level definitions, "driving mode" means "a type of driving scenario with characteristic dynamic driving task requirements (e.g., expressway merging, high speed cruising, low speed traffic jam, closed-campus operations, etc.)"[51]

- Level 0: Automated system issues warnings and may momentarily intervene but has no sustained vehicle control.

- Level 1 ("hands on"): The driver and the automated system share control of the vehicle. Examples are Adaptive Cruise Control (ACC), where the driver controls steering and the automated system controls speed; and Parking Assistance, where steering is automated while speed is under manual control. The driver must be ready to retake full control at any time. Lane Keeping Assistance (LKA) Type II is a further example of level 1 self driving.

- Level 2 ("hands off"): The automated system takes full control of the vehicle (accelerating, braking, and steering). The driver must monitor the driving and be prepared to intervene immediately at any time if the automated system fails to respond properly. The shorthand "hands off" is not meant to be taken literally. In fact, contact between hand and wheel is often mandatory during SAE 2 driving, to confirm that the driver is ready to intervene.

- Level 3 ("eyes off"): The driver can safely turn their attention away from the driving tasks, e.g. the driver can text or watch a movie. The vehicle will handle situations that call for an immediate response, like emergency braking. The driver must still be prepared to intervene within some limited time, specified by the manufacturer, when called upon by the vehicle to do so. As an example, the 2018 Audi A8 Luxury Sedan was the first commercial car to claim to be capable of level 3 self driving. This particular car has a so-called Traffic Jam Pilot. When activated by the human driver, the car takes full control of all aspects of driving in slow-moving traffic at up to 60 kilometres per hour (37 mph). The function works only on highways with a physical barrier separating one stream of traffic from oncoming traffic.

- Level 4 ("mind off"): Like level 3, but no driver attention is ever required for safety, i.e. the driver may safely go to sleep or leave the driver's seat. Self-driving is supported only in limited spatial areas (geofenced) or under special circumstances, like traffic jams. Outside of these areas or circumstances, the vehicle must be able to safely abort the trip, i.e. park the car, if the driver does not retake control.

- Level 5 ("steering wheel optional"): No human intervention is required at all. An example would be a robotic taxi.
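The level definitions above turn on two questions: must the human continuously watch the road, and must the human act as the fallback when the system reaches its limits? A small lookup makes those boundaries explicit. This is an illustrative sketch only: the enum member names are shortened from SAE's level titles, and the helpers `driver_must_monitor` and `driver_is_fallback` are hypothetical names, not part of any standard or library.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels as summarized in this article (SAE J3016)."""
    NO_AUTOMATION = 0      # warnings and momentary intervention only
    DRIVER_ASSISTANCE = 1  # "hands on": driver and system share control
    PARTIAL = 2            # "hands off": system steers, accelerates, brakes; driver monitors
    CONDITIONAL = 3        # "eyes off": driver must respond when the vehicle asks
    HIGH = 4               # "mind off": no attention needed within a limited domain
    FULL = 5               # "steering wheel optional": no human intervention at all


def driver_must_monitor(level: SAELevel) -> bool:
    """True if the human must watch the road continuously (levels 0 to 2)."""
    return level <= SAELevel.PARTIAL


def driver_is_fallback(level: SAELevel) -> bool:
    """True if the human must be ready to take over when asked (levels 0 to 3)."""
    return level <= SAELevel.CONDITIONAL


if __name__ == "__main__":
    for level in SAELevel:
        print(f"Level {int(level)} {level.name:<17} "
              f"monitor={driver_must_monitor(level)} fallback={driver_is_fallback(level)}")
```

Using `IntEnum` lets levels be compared numerically, which matches how the levels are ordered by decreasing human involvement.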

Technical challenges

The challenge for driverless car designers is to produce control systems capable of analyzing sensory data in order to provide accurate detection of other vehicles and the road ahead.[52] Modern self-driving cars generally use Bayesian simultaneous localization and mapping (SLAM) algorithms,[53] which fuse data from multiple sensors and an off-line map into current location estimates and map updates. Google is developing a variant, SLAM with detection and tracking of other moving objects (DATMO), which also handles obstacles such as cars and pedestrians. Simpler systems may use roadside real-time locating system (RTLS) technologies to aid localization. Typical sensors include lidar, stereo vision, GPS and IMU.[54][55] Udacity is developing an open-source software stack.[56] Control systems on autonomous cars may use sensor fusion, an approach that integrates information from a variety of sensors on the car to produce a more consistent, accurate, and useful view of the environment.[57]

Driverless vehicles require some form of machine vision for the purpose of visual object recognition. Autonomous cars are being developed with deep neural networks,[54] a type of deep learning architecture with many computational stages, or layers, in which simulated neurons are activated by input from the environment.[58] The neural network depends on an extensive amount of data extracted from real-life driving scenarios,[54] enabling it to "learn" how to execute the best course of action.[58]
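As a much-simplified illustration of the Bayesian sensor fusion described above, the sketch below combines independent position estimates from different sensors, each modelled as a one-dimensional Gaussian (mean and variance). Under that assumption the posterior is the inverse-variance-weighted mean, which is also the measurement update of a one-dimensional Kalman filter. This is not SLAM itself; the function name `fuse_gaussian`, the sensor pairing and the numbers are hypothetical.

```python
from typing import Iterable, Tuple


def fuse_gaussian(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent 1-D Gaussian estimates given as (mean, variance) pairs.

    Each estimate is weighted by its precision (1 / variance), so more certain
    sensors pull the result harder. Returns the fused (mean, variance).
    """
    precision_sum = 0.0
    weighted_sum = 0.0
    for mean, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        precision = 1.0 / variance
        precision_sum += precision
        weighted_sum += precision * mean
    if precision_sum == 0.0:
        raise ValueError("at least one estimate is required")
    return weighted_sum / precision_sum, 1.0 / precision_sum


# Hypothetical readings of the car's position along the road, in metres:
gps = (100.0, 4.0)    # GPS: noisier (variance 4 m^2)
lidar = (102.0, 1.0)  # lidar matched against a map: more precise (variance 1 m^2)
mean, variance = fuse_gaussian([gps, lidar])
print(f"fused position {mean:.1f} m, variance {variance:.1f} m^2")
# prints "fused position 101.6 m, variance 0.8 m^2"
```

The fused variance (0.8 m²) is smaller than that of either input, which is the sense in which fusion yields a more consistent and accurate view than any single sensor.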

In May 2018, researchers from MIT announced that they had built an autonomous car that can navigate unmapped roads.[59] Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) developed a system, called MapLite, which allows self-driving cars to drive on roads they have never been on before, without using 3D maps. The system combines the GPS position of the vehicle, a "sparse topological map" such as OpenStreetMap (i.e. one containing only 2D features of the roads), and a series of sensors that observe the road conditions.[60]

Testing

Testing vehicles with varying degrees of autonomy can be done physically, in closed environments,[61] on public roads (where permitted, typically with a license or permit[62] or adhering to a specific set of operating principles),[63] or virtually, i.e. in computer simulations.[64] When driven on public roads, autonomous vehicles require a person to monitor their proper operation and "take over" when needed.

As of 2018, Apple was testing self-driving cars; it increased its number of test vehicles from 3 to 27 in January 2018,[65] and to 45 in March 2018.[66]

One way to assess the progress of autonomous vehicles is to compute the average number of miles driven between "disengagements", when the autonomous system is turned off, typically by a human driver. In 2017, Waymo reported 63 disengagements over 352,545 miles of testing, an average of 5,596 miles per disengagement, the highest among companies reporting such figures. Waymo also traveled more miles in total than any other company. Its 2017 rate of 0.18 disengagements per 1,000 miles was an improvement from 0.2 disengagements per 1,000 miles in 2016 and 0.8 in 2015. In March 2017, Uber reported an average of 0.67 miles per disengagement. In the final three months of 2017, Cruise Automation (now owned by GM) averaged 5,224 miles per disengagement over 62,689 miles.[67] In July 2018, the first electric driverless racing car, "Robocar", completed a 1.8-kilometre track using its navigation system and artificial intelligence.[68]
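The two disengagement metrics quoted above express the same data in reciprocal form, up to a factor of 1,000. A minimal sketch recomputing Waymo's 2017 figures from the reported totals; the function names are illustrative only.

```python
def miles_per_disengagement(miles: float, disengagements: int) -> float:
    """Average distance driven between disengagements."""
    return miles / disengagements


def disengagements_per_1000_miles(miles: float, disengagements: int) -> float:
    """Disengagement rate normalised to 1,000 miles of testing."""
    return 1000 * disengagements / miles


# Waymo, 2017: 63 disengagements over 352,545 miles (figures from the text)
print(round(miles_per_disengagement(352_545, 63)))           # 5596
print(round(disengagements_per_1000_miles(352_545, 63), 2))  # 0.18
```

A higher miles-per-disengagement figure means a lower rate, so the two columns sort companies in opposite directions.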

| Maker | Miles between disengagements (2016) | Miles driven (2016) |
|---|---|---|
|  | 5,127.9 | 635,868 |
| BMW | 638 | 638 |
| Nissan | 263.3 | 6,056 |
| Ford | 196.6 | 590 |
| General Motors | 54.7 | 8,156 |
| Delphi Automotive Systems | 14.9 | 2,658 |
| Tesla | 2.9 | 550 |
| Mercedes Benz | 2 | 673 |
| Bosch | 0.68 | 983 |

Fields of application

Autonomous trucks

Several companies are reported to be testing autonomous technology in semi trucks. Otto, a self-driving trucking company that was acquired by Uber in August 2016, demonstrated its trucks on the highway before being acquired.[69] In May 2017, San Francisco-based startup Embark[70] announced a partnership with truck manufacturer Peterbilt to test and deploy autonomous technology in Peterbilt's vehicles.[71] Google's Waymo has also said that it is testing autonomous technology in trucks,[72] although no timeline has been given for the project.

In March 2018, Starsky Robotics, the San Francisco-based autonomous truck company, completed a 7-mile fully driverless trip in Florida without a single human in the truck. Starsky Robotics became the first self-driving truck company to drive in fully autonomous mode on a public road without a person in the cab.[73]

In Europe, truck platooning is being considered, for example in the Safe Road Trains for the Environment (SARTRE) approach.

Vehicular automation also covers other kinds of vehicles, such as buses, trains, and trucks.

Lockheed Martin, with funding from the U.S. Army, developed an autonomous truck convoying system that uses a lead truck operated by a human driver with a number of trucks following autonomously.[74] Developed as part of the Army's Autonomous Mobility Applique System (AMAS), the system consists of an autonomous driving package that has been installed on more than nine types of vehicles and had completed more than 55,000 hours of driving at speeds up to 40 mph as of 2014.[75] As of 2017 the Army was planning to field 100-200 trucks as part of a rapid-fielding program.

Transport systems

In Europe, cities in Belgium, France, Italy and the UK are planning to operate transport systems for autonomous cars,[76][77][78] and Germany, the Netherlands, and Spain have allowed public testing in traffic. In 2015, the UK launched public trials of the LUTZ Pathfinder autonomous pod in Milton Keynes.[79] Beginning in summer 2015, the French government allowed PSA Peugeot-Citroen to conduct trials in real conditions in the Paris area. The experiments were planned to be extended to other cities such as Bordeaux and Strasbourg by 2016.[80] The alliance between the French companies THALES and Valeo (provider of the first self-parking car system, which equips premium Audi and Mercedes models) is testing its own system.[81] New Zealand is planning to use autonomous vehicles for public transport in Tauranga and Christchurch.

In China, Baidu and King Long produce an autonomous minibus, a vehicle with 14 seats but no driver's seat. With 100 vehicles produced, 2018 was expected to be the first year of commercial autonomous service in China. These minibuses are intended to operate at level 4, that is, driverless on closed roads.[citation needed]

Potential advantages

Safety

Driving safety experts predict that once driverless technology has been fully developed, traffic collisions (and the resulting deaths, injuries and costs) caused by human error, such as delayed reaction time, tailgating, rubbernecking, and other forms of distracted or aggressive driving, should be substantially reduced.[6][11][12][86] Consulting firm McKinsey & Company estimated that widespread use of autonomous vehicles could "eliminate 90% of all auto accidents in the United States, prevent up to US$190 billion in damages and health-costs annually and save thousands of lives."[87]

According to the motorist website TheDrive.com, operated by Time magazine, none of the driving safety experts it was able to contact could rank driving under an autopilot system at the time (2017) as having achieved a greater level of safety than traditional fully hands-on driving, so the degree to which the benefits asserted by proponents will manifest in practice cannot yet be assessed.[88] Confounding factors that could reduce the net effect on safety may include unexpected interactions between humans and partly or fully autonomous vehicles, or between different types of vehicle system; complications at the boundaries of functionality at each automation level (such as handover when the vehicle reaches the limit of its capacity); the effect of the bugs and flaws that inevitably occur in complex interdependent software systems; sensor or data shortcomings; and successful compromise by malicious interveners.

Welfare

Autonomous cars could reduce labor costs;[89][90] relieve travelers from driving and navigation chores, thereby replacing behind-the-wheel commuting hours with more time for leisure or work;[6][86] and lift the constraints on occupants who would otherwise be unfit to drive because they are distracted, texting, intoxicated, prone to seizures, or otherwise impaired.[91][92][8] For the young, the elderly, people with disabilities, and low-income citizens, autonomous cars could provide enhanced mobility.[93][94][95] The removal of the steering wheel, along with the remaining driver interface and the requirement for any occupant to assume a forward-facing position, would give the interior of the cabin greater ergonomic flexibility. Large vehicles, such as motorhomes, would attain appreciably enhanced ease of use.[96]

Traffic
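The lane-capacity figures in the following paragraph can be roughly reproduced with a simple spacing model: a lane's throughput is the traffic speed divided by the road length each vehicle occupies (its following gap plus its own length). The sketch assumes a vehicle length of 4 m, which the text does not state; with that assumption, 120 km/h and a 6 m gap give the 12,000 vehicles per hour per lane cited, and the percentage increases follow from the 2,200 vehicles per hour baseline. The function names are hypothetical.

```python
def lane_flow_per_hour(speed_kmh: float, gap_m: float, vehicle_length_m: float = 4.0) -> float:
    """Vehicles passing a point per hour in one lane, assuming uniform speed and spacing.

    vehicle_length_m = 4.0 is an assumed typical car length, not a figure from the text.
    """
    spacing_m = gap_m + vehicle_length_m      # road length each vehicle occupies
    return speed_kmh * 1000.0 / spacing_m     # metres travelled per hour / metres per vehicle


def percent_increase(new: float, baseline: float) -> float:
    """Relative increase over a baseline, in percent."""
    return 100.0 * (new - baseline) / baseline


BASELINE = 2200  # passenger vehicles per hour per lane (Highway Capacity Manual, per the text)

connected = lane_flow_per_hour(120, 6)
print(round(connected))                              # 12000
print(round(percent_increase(connected, BASELINE)))  # 445
print(round(percent_increase(8200, BASELINE)))       # 273
```

The model ignores reaction times, lane changes and speed variation, so it only shows why shrinking the following gap dominates the capacity gain.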

Additional advantages could include higher speed limits;[97] smoother rides;[98] increased roadway capacity; and minimized traffic congestion, due to a decreased need for safety gaps and higher speeds.[99][100] Currently, maximum controlled-access highway throughput or capacity according to the U.S. Highway Capacity Manual is about 2,200 passenger vehicles per hour per lane, with only about 5% of the available road space taken up by cars. One study estimated that autonomous cars could increase capacity by 273% (~8,200 cars per hour per lane). The study also estimated that with 100% connected vehicles using vehicle-to-vehicle communication, capacity could reach 12,000 passenger vehicles per hour per lane (up 445% from 2,200 pc/h per lane) traveling safely at 120 km/h (75 mph) with a following gap of about 6 m (20 ft). Currently, at highway speeds drivers keep between 40 and 50 m (130 and 160 ft) away from the car in front. These increases in highway capacity could have a significant impact on traffic congestion, particularly in urban areas, and could even effectively end highway congestion in some places.[101] The ability of authorities to manage traffic flow would increase, given the extra data and the predictability of driving behavior,[7] combined with less need for traffic police and even road signage.

Lower costs

Safer driving is expected to reduce the costs of vehicle insurance.[89][102] Reduced traffic congestion and the improvements in traffic flow due to widespread use of autonomous cars should also translate into better fuel efficiency.[95][103][104] Additionally, self-driving cars will be able to accelerate and brake more efficiently, improving fuel economy by reducing the energy wasted through inefficient changes of speed (energy typically lost to friction, in the form of heat and sound).

Related effects

By reducing the (labor and other) cost of mobility as a service, autonomous cars could reduce the number of individually owned cars, replacing them with taxi, car-pooling and other car-sharing services.[105][106] This could dramatically reduce the need for parking space, freeing scarce land for other uses. It could also dramatically reduce the size of the automotive production industry, with corresponding environmental and economic effects. Assuming the increased efficiency is not fully offset by increases in demand, more efficient traffic flow could free roadway space for other uses such as better support for pedestrians and cyclists.

The vehicles' increased awareness could aid the police by reporting on illegal passenger behavior, while possibly enabling other crimes, such as deliberately crashing into another vehicle or a pedestrian.[10] However, this may also lead to much expanded mass surveillance if third parties are granted wide access to the large data sets generated.

The future of passenger rail transport in the era of autonomous cars is not clear.[107]

Potential limits or obstacles

The hoped-for benefits of increased vehicle automation described above may be limited by foreseeable challenges, such as disputes over liability (will each company operating a vehicle accept that it is the vehicle's "driver" and thus responsible for what the car does, or will some try to shift this liability onto others who are not in control?);[14][15] the time needed to turn over the existing stock of vehicles from non-autonomous to autonomous,[108] and thus a long period of humans and autonomous vehicles sharing the roads; resistance by individuals to having to forfeit control of their cars;[16] concerns about the safety of driverless cars in practice;[17] and the implementation of a legal framework and consistent global government regulations for self-driving cars.[109] Other obstacles could include de-skilling and lower levels of driver experience for dealing with potentially dangerous situations and anomalies;[110] ethical problems where an autonomous vehicle's software is forced during an unavoidable crash to choose between multiple harmful courses of action ('the trolley problem');[111][112][113] concerns about making large numbers of people currently employed as drivers unemployed (at the same time as many other blue-collar occupations may be undermined by automation); the potential for more intrusive mass surveillance of location, association and travel as a result of police and intelligence agency access to large data sets generated by sensors and pattern-recognition AI (making anonymous travel difficult); and possibly insufficient understanding of verbal sounds, gestures and non-verbal cues by police, other drivers or pedestrians.[114]

Possible technological obstacles for autonomous cars are:

- Artificial Intelligence is still not able to function properly in chaotic inner city environments.[115]

- A car's computer could potentially be compromised, as could a communication system between cars.[116][117][118][119][120]

- Susceptibility of the car's sensing and navigation systems to different types of weather (such as snow) or deliberate interference, including jamming and spoofing.[114]

- Avoidance of large animals requires recognition and tracking, and Volvo found that software suited to caribou, deer, and elk was ineffective with kangaroos.[121]

- Autonomous cars may require very high-quality specialised maps[122] to operate properly. Where these maps are out of date, the cars would need to be able to fall back to reasonable behaviors.[114][123]

- Competition for the radio spectrum desired for the car's communication.[124]

- Field programmability for the systems will require careful evaluation of product development and the component supply chain.[120]

- Current road infrastructure may need changes for autonomous cars to function optimally.[125]

- Disagreement over how much government intervention is necessary may delay the acceptance of autonomous cars on the road.[126] Because the public may prefer no change in existing laws, federal regulation, or another solution, any regulatory framework is likely to meet differences of opinion.[126]

- Employment: companies working on the technology face a growing recruitment problem because the available talent pool has not grown with demand.[127] As a result, education and training by third-party organisations such as Sebastian Thrun's Udacity and self-taught, community-driven projects such as DIY Robocars[128] and Formula Pi have quickly grown in popularity, while university-level extra-curricular programmes such as Formula Student Driverless[129] have bolstered graduate experience. Industry is steadily increasing freely available information sources, such as code,[130] datasets[131] and glossaries,[132] to widen the recruitment pool.

Potential disadvantages

A direct impact of widespread adoption of autonomous vehicles is the loss of driving-related jobs in the road transport industry.[89][90][133] There could be resistance from professional drivers and unions who are threatened by job losses.[134] In addition, there could be job losses in public transit services and crash repair shops. The automobile insurance industry might suffer as the technology makes certain aspects of these occupations obsolete.[95] A frequently cited paper by Michael Osborne and Carl Benedikt Frey found that autonomous cars would make many jobs redundant.[135]

Privacy could be an issue when the vehicle's location and position are integrated into an interface to which other people have access.[136] In addition, there is the risk of automotive hacking through the sharing of information via V2V (vehicle-to-vehicle) and V2I (vehicle-to-infrastructure) protocols.[137][138] There is also the risk of terrorist attacks: self-driving cars could potentially be loaded with explosives and used as bombs.[139]

The lack of stressful driving, more productive time during the trip, and the potential savings in travel time and cost could become an incentive to live far away from cities, where land is cheaper, and work in the city's core, thus increasing travel distances and inducing more urban sprawl, more fuel consumption and an increase in the carbon footprint of urban travel.[140][141] There is also the risk that traffic congestion might increase, rather than decrease.[95] Appropriate public policies and regulations, such as zoning, pricing, and urban design are required to avoid the negative impacts of increased suburbanization and longer distance travel.[95][141]

Some believe that once automation in vehicles reaches higher levels and becomes reliable, drivers will pay less attention to the road.[142] Research shows that drivers in autonomous cars react later when they have to intervene in a critical situation, compared to if they were driving manually.[143] Depending on the capabilities of autonomous vehicles and the frequency with which human intervention is needed, this may counteract any increase in safety, as compared to all-human driving, that may be delivered by other factors.

Ethical and moral reasoning come into consideration when programming the software that decides what action the car takes in an unavoidable crash; whether the autonomous car will crash into a bus, potentially killing people inside; or swerve elsewhere, potentially killing its own passengers or nearby pedestrians.[144] A question that programmers of AI systems find difficult to answer (as do ordinary people, and ethicists) is "what decision should the car make that causes the ‘smallest’ damage to people's lives?"

The ethics of autonomous vehicles are still being articulated, and may lead to controversy.[145] They may also require closer consideration of the variability, context-dependency, complexity and non-deterministic nature of human ethics. Different human drivers make various ethical decisions when driving, such as avoiding harm to themselves, or putting themselves at risk to protect others. These decisions range from rare extremes such as self-sacrifice or criminal negligence, to routine decisions good enough to keep the traffic flowing but bad enough to cause accidents, road rage and stress.

Human thought and reaction time may sometimes be too slow to detect the risk of an upcoming fatal crash, think through the ethical implications of the available options, or take an action to implement an ethical choice. Whether a particular automated vehicle's capacity to correctly detect an upcoming risk, analyse the options or choose a 'good' option from among bad choices would be as good or better than a particular human's may be difficult to predict or assess. This difficulty may be in part because the level of automated vehicle system understanding of the ethical issues at play in a given road scenario, sensed for an instant from out of a continuous stream of synthetic physical predictions of the near future, and dependent on layers of pattern recognition and situational intelligence, may be opaque to human inspection because of its origins in probabilistic machine learning rather than a simple, plain English 'human values' logic of parsable rules. The depth of understanding, predictive power and ethical sophistication needed will be hard to implement, and even harder to test or assess.

The scale of this challenge may have other effects. There may be few entities able to marshal the resources and AI capacity necessary to meet it, as well as the capital necessary to take an autonomous vehicle system to market and sustain it operationally for the life of a vehicle, and the legal and 'government affairs' capacity to deal with the potential for liability for a significant proportion of traffic accidents. This may have the effect of narrowing the number of different system operators, and eroding the presently quite diverse global vehicle market down to a small number of system suppliers.

Incidents

Mercedes autonomous cruise control system

In 1999, Mercedes introduced Distronic, the first radar-assisted adaptive cruise control (ACC) system, on the Mercedes-Benz S-Class (W220)[146][147] and the CL-Class.[148] Distronic automatically adjusted the vehicle's speed to that of the car in front in order to maintain a safe distance from other cars on the road.
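The distance-keeping behavior described above is often modelled as a constant time-gap policy, in which the desired gap to the car in front grows with the vehicle's own speed. The following Python sketch assumes that policy; the function `acc_command`, the `AccParams` class and every gain and limit in it are hypothetical illustrations, not the Distronic algorithm, whose internals are not described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AccParams:
    # All values are illustrative assumptions, not Distronic settings.
    set_speed: float = 33.0       # driver-selected cruise speed, m/s
    time_gap: float = 1.8         # desired headway, seconds
    standstill_gap: float = 5.0   # minimum gap when stopped, metres
    k_gap: float = 0.2            # gain on gap error, 1/s^2
    k_speed: float = 0.5          # gain on speed difference, 1/s
    max_accel: float = 2.0        # comfort limit, m/s^2
    max_decel: float = -3.5       # braking limit, m/s^2


def acc_command(ego_speed: float,
                lead_distance: Optional[float],
                lead_speed: Optional[float],
                p: AccParams = AccParams()) -> float:
    """Return an acceleration command (m/s^2) for a hypothetical ACC.

    lead_distance and lead_speed are None when the radar reports no
    vehicle ahead.
    """
    # Cruise mode: close the difference to the driver's set speed.
    cruise = p.k_speed * (p.set_speed - ego_speed)
    if lead_distance is None or lead_speed is None:
        accel = cruise
    else:
        # Constant time-gap policy: the desired gap grows with speed.
        desired_gap = p.standstill_gap + p.time_gap * ego_speed
        gap_error = lead_distance - desired_gap
        rel_speed = lead_speed - ego_speed  # negative when closing in
        follow = p.k_gap * gap_error + p.k_speed * rel_speed
        # Follow mode may only slow the car relative to cruise mode.
        accel = min(cruise, follow)
    # Clamp to comfort and braking limits.
    return max(p.max_decel, min(p.max_accel, accel))


print(acc_command(33.0, None, None))  # 0.0: at set speed, road clear
print(acc_command(20.0, 10.0, 0.0))   # -3.5: stopped car close ahead
```

Taking the minimum of the cruise and following commands is one simple way to let the following logic take over only when it asks for less acceleration; a production system would add sensor fusion, filtering and stop-and-go handling.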

Mercedes-Benz S 450 4MATIC Coupe. The forward-facing Distronic sensors are usually placed behind the Mercedes-Benz logo and front grille.

In 2005, Mercedes refined the system (from then on called "Distronic Plus"), with the Mercedes-Benz S-Class (W221) being the first car to receive the upgraded system. Distronic Plus could bring the car to a complete halt if necessary on the E-Class and most Mercedes sedans. In an episode of Top Gear, Jeremy Clarkson demonstrated the effectiveness of the system in the S-Class by coming to a complete halt from motorway speed on approach to a roundabout and getting out, without touching the pedals.[149]

By 2017, Mercedes had vastly expanded the autonomous driving features on its production cars. In addition to standard Distronic Plus features such as active brake assist, Mercedes included a steering pilot, a parking pilot, a cross-traffic assist system, night-vision cameras with automated danger warnings and braking assist (for example, when animals or pedestrians are on the road), and various other autonomous-driving features. In 2016, Mercedes also introduced Active Brake Assist 4, the first emergency braking assistant with pedestrian recognition on the market.[155]

Because Mercedes has introduced its extensively tested autonomous driving features gradually, few crashes caused by them are known. One known case dates back to 2005, when the German news magazine "Stern" was testing Mercedes' original Distronic system and the system did not always manage to brake in time.[156] Ulrich Mellinghoff, then Head of Safety, NVH, and Testing at the Mercedes-Benz Technology Centre, stated that some of the tests failed because the vehicle was tested in a metal hall, which caused problems with the system's radar. Later iterations of Distronic have an upgraded radar and numerous other sensors that are no longer susceptible to metallic environments.[156][157] In 2008, Mercedes conducted a study comparing the crash rates of its vehicles equipped with Distronic Plus with those of vehicles without it, and concluded that vehicles with Distronic Plus had a crash rate around 20% lower.[158] In 2013, Mercedes invited German Formula One driver Michael Schumacher to try to crash a Mercedes C-Class equipped with all the safety features Mercedes then offered on its production vehicles, including Active Blind Spot Assist, Active Lane Keeping Assist, Brake Assist Plus, Collision Prevention Assist, Distronic Plus with Steering Assist, Pre-Safe Brake, and Stop&Go Pilot. Because of these safety features, Schumacher was unable to crash the vehicle in realistic scenarios.[159]

Tesla Autopilot

In mid-October 2015, Tesla Motors rolled out version 7 of its software in the U.S., which included Tesla Autopilot capability.[160] On 9 January 2016, Tesla rolled out version 7.1 as an over-the-air update, adding a new "summon" feature that allows cars to self-park at parking locations without the driver in the car.[161] Tesla's autonomous driving features can be classified as somewhere between level 2 and level 3 under the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) five levels of vehicle automation. At this level the car can act autonomously but requires the full attention of the driver, who must be prepared to take control at a moment's notice.[162][163][164] At the time, Autopilot was meant to be used only on limited-access highways, and it sometimes failed to detect lane markings and disengaged itself. In urban driving the system did not read traffic signals or obey stop signs, and it did not detect pedestrians or cyclists.

Tesla Model S Autopilot system in use in July 2016; it was only suitable for limited-access highways, not for urban driving. Among other limitations, it could not detect pedestrians or cyclists.[165]

On 20 January 2016, the first known fatal crash of a Tesla with Autopilot occurred in China's Hubei province. According to China's 163.com news channel, this marked "China's first accidental death due to Tesla's automatic driving (system)." Tesla initially pointed out that the vehicle was so badly damaged in the impact that its recorder could not conclusively prove that the car had been on Autopilot at the time. However, 163.com noted that other factors, such as the car's complete failure to take any evasive action before the high-speed crash and the driver's otherwise good driving record, seemed to indicate a strong likelihood that the car had been on Autopilot. A similar fatal crash occurred four months later in Florida.[166][167] In 2018, in a subsequent civil suit between the driver's father and Tesla, Tesla did not deny that the car had been on Autopilot at the time of the accident, and sent the father evidence documenting that fact.[168]

The second known fatal accident involving a vehicle being driven by itself took place in Williston, Florida on 7 May 2016 while a Tesla Model S electric car was engaged in Autopilot mode. The occupant was killed in a crash with an 18-wheel tractor-trailer. On 28 June 2016 the National Highway Traffic Safety Administration (NHTSA) opened a formal investigation into the accident, working with the Florida Highway Patrol. According to the NHTSA, preliminary reports indicate the crash occurred when the tractor-trailer made a left turn in front of the Tesla at an intersection on a non-controlled-access highway, and the car failed to apply the brakes. The car continued to travel after passing under the truck’s trailer.[169][170] The NHTSA's preliminary evaluation was opened to examine the design and performance of any automated driving systems in use at the time of the crash, and covered an estimated population of 25,000 Model S cars.[171] On 8 July 2016, the NHTSA requested that Tesla Motors provide the agency with detailed information about the design, operation and testing of its Autopilot technology. The agency also requested details of all design changes and updates to Autopilot since its introduction, and Tesla's planned update schedule for the next four months.[172]

According to Tesla, "neither autopilot nor the driver noticed the white side of the tractor-trailer against a brightly lit sky, so the brake was not applied." The car attempted to drive at full speed under the trailer, "with the bottom of the trailer impacting the windshield of the Model S." Tesla also claimed that this was its first known Autopilot death in over 130 million miles (208 million km) driven by its customers with Autopilot engaged. With this statement, however, Tesla was apparently refusing to acknowledge claims that the January 2016 fatality in Hubei, China, had also resulted from an Autopilot error. According to Tesla, there is a fatality every 94 million miles (150 million km) among all types of vehicles in the U.S.[169][170][173] However, this figure also includes fatalities from crashes such as those between motorcyclists and pedestrians.[174][175]
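Putting the two figures Tesla quoted on a common basis of fatalities per 100 million miles shows the size of the claimed difference; the arithmetic uses only the numbers in the paragraph above.

```latex
\frac{1}{130\times10^{6}\ \text{mi}}\times10^{8}\ \text{mi}\approx 0.77
\quad\text{(Autopilot engaged, one fatality)}
\qquad
\frac{1}{94\times10^{6}\ \text{mi}}\times10^{8}\ \text{mi}\approx 1.06
\quad\text{(all U.S. vehicles)}
```

The Autopilot rate rests on a single fatal crash, so one further fatality over the same mileage would double it, and the national figure covers a broader mix of vehicles and crashes than the Autopilot figure.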

In July 2016, the U.S. National Transportation Safety Board (NTSB) opened a formal investigation into the fatal accident that occurred while Autopilot was engaged. The NTSB is an investigative body that has the power to make only policy recommendations. An agency spokesman said "It's worth taking a look and seeing what we can learn from that event, so that as that automation is more widely introduced we can do it in the safest way possible."[176] In January 2017, the NHTSA closed its own investigation, finding no defect in Autopilot; the investigation found that the crash rate of Tesla cars had dropped by 40 percent after Autopilot was installed.[177]

According to Tesla, starting 19 October 2016, all Tesla cars are built with hardware that allows full self-driving capability at the highest level of automation (SAE Level 5).[178] The hardware includes eight surround cameras and twelve ultrasonic sensors, in addition to the forward-facing radar with enhanced processing capabilities.[179] The system will operate in "shadow mode" (processing without taking action) and send data back to Tesla to improve its abilities until the software is ready for deployment via over-the-air upgrades.[180] After the required testing, Tesla hopes to enable full self-driving by the end of 2019 under certain conditions.
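Shadow mode, in the sense described above, amounts to running the software's planner alongside the human driver without letting it act, and recording where the two disagree. The Python sketch below is a minimal illustration under stated assumptions: the `ShadowLogger` and `Action` classes and the steering tolerance are hypothetical, and the sketch does not describe Tesla's actual data pipeline, which the source does not detail.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class Action:
    steering_deg: float  # steering-wheel angle, degrees
    brake: bool          # whether the brake is applied


@dataclass
class ShadowLogger:
    """Hypothetical shadow-mode recorder.

    The software's proposed action is computed but never sent to the
    actuators; the human's action is what actually drives the car.
    Only disagreements are kept, e.g. for later upload and analysis.
    """
    steer_tolerance_deg: float = 5.0
    disagreements: List[Tuple[float, Action, Action]] = field(default_factory=list)

    def observe(self, timestamp: float, proposed: Action, human: Action) -> Action:
        steer_diff = abs(proposed.steering_deg - human.steering_deg)
        if steer_diff > self.steer_tolerance_deg or proposed.brake != human.brake:
            self.disagreements.append((timestamp, proposed, human))
        return human  # the executed action is always the human's


logger = ShadowLogger()
logger.observe(0.0, Action(2.0, False), Action(1.0, False))  # agree
logger.observe(0.1, Action(0.0, True), Action(0.0, False))   # brake mismatch
print(len(logger.disagreements))  # 1
```

Keeping only disagreements is a design assumption that limits how much data must be sent back; the cases where software and driver diverge are the ones most likely to be informative.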

Google self-driving car

Google's in-house autonomous car.

In August 2012, Google announced that its vehicles had completed over 300,000 autonomous-driving miles (500,000 km) accident-free, typically with about a dozen cars on the road at any given time, and that it was starting to test with single drivers instead of pairs.[181] In late May 2014, Google revealed a new prototype that had no steering wheel, gas pedal, or brake pedal, and was fully autonomous.[182] As of March 2016, Alphabet had test-driven its fleet in autonomous mode for a total of 1,500,000 mi (2,400,000 km).[183] In December 2016, Google announced that its technology would be spun off into a new subsidiary called Waymo.[184][185]

According to Google's accident reports, its test cars have been involved in 14 collisions, in 13 of which other drivers were at fault; in 2016, however, the car's software caused a crash.[186]

In June 2015, Google co-founder Sergey Brin confirmed that 12 vehicles had suffered collisions as of that date. Eight were rear-end collisions at a stop sign or traffic light; in two, the vehicle was side-swiped by another driver; in one, another driver rolled through a stop sign; and in one, a Google employee was controlling the car manually.[187] In July 2015, three Google employees suffered minor injuries when their vehicle was rear-ended by a car whose driver failed to brake at a traffic light. This was the first collision that resulted in injuries.[188] On 14 February 2016, a Google self-driving car attempted to avoid sandbags blocking its path and, during the maneuver, struck a bus. Google stated, "In this case, we clearly bear some responsibility, because if our car hadn't moved there wouldn't have been a collision."[189][190] Google characterized the crash as a misunderstanding and a learning experience. No injuries were reported.[186]

Uber

In March 2017, an Uber test vehicle was involved in a crash in Tempe, Arizona, when another car failed to yield, and the Uber vehicle was flipped over. There were no injuries.[191] By 22 December 2017, Uber had completed 2,000,000 miles in autonomous mode.[192]

On 18 March 2018, Elaine Herzberg became the first pedestrian to be killed by a self-driving car in the United States after being hit by an Uber vehicle, also in Tempe. Herzberg was crossing outside a crosswalk, approximately 400 feet from an intersection.[193] Factors cited as contributing to the accident include nighttime, low visibility, the pedestrian crossing from a shadowed portion of the road, crossing a road with a high speed limit, and not checking for cars before crossing the street at night. This was the first time a person outside a self-driving car is known to have been killed by such a car. The first death of an essentially uninvolved third party is likely to raise new questions and concerns about the safety of autonomous cars in general.[194] Some experts say a human driver could have avoided the fatal crash.[195] Arizona Governor Doug Ducey later suspended the company's ability to test and operate its autonomous cars on public roads, citing an "unquestionable failure" of the expectation that Uber make public safety its top priority.[196] As a result of the accident, Uber withdrew from all self-driving-car testing in California.[197] On 24 May 2018, the National Transportation Safety Board issued a preliminary report.[198]

On 9 November 2017, a Navya autonomous self-driving bus carrying passengers was involved in a crash with a truck. The truck, which reversed into the stationary autonomous bus, was found to be at fault. The bus did not take evasive action or drive defensively, for example by flashing its headlights or sounding its horn; as one passenger commented, "The shuttle didn't have the ability to move back. The shuttle just stayed still."[199]

Policy implications

Urban planning

According to a Wonkblog reporter, if fully autonomous cars become commercially available, they have the potential to be a disruptive innovation with major implications for society. The likelihood of widespread adoption is still unclear, but if they are used on a wide scale, policy makers face a number of unresolved questions about their effects.[125] One fundamental question concerns their effect on travel behavior. Some people believe that they will increase car ownership and car use because cars will become easier to use and ultimately more useful.[125] This may in turn encourage urban sprawl and ultimately increase total private vehicle use. Others argue that it will become easier to share cars, which will discourage outright ownership, decrease total usage, and make cars a more efficient form of transportation than they are today.[200]

Policy-makers will have to take a new look at how infrastructure is to be built and how funds will be allocated to build it for autonomous vehicles. The need for traffic signals could potentially be reduced with the adoption of smart highways.[201] Smart highways, together with technological advances implemented through policy change, may reduce dependence on oil imports because individual cars would spend less time on the road, which could affect energy policy.[202] On the other hand, autonomous vehicles could increase the overall number of cars on the road, which could lead to greater dependence on oil imports if smart systems are not enough to offset the impact of more vehicles.[203] However, given the uncertain future of autonomous vehicles, policy makers may want to plan by implementing infrastructure improvements that benefit both human drivers and autonomous vehicles.[204] Caution is also needed regarding public transportation, whose use may be greatly reduced if infrastructure policy is reformed to cater to autonomous vehicles, resulting in job losses and increased unemployment.[205]

Other disruptive effects will come from the use of autonomous vehicles to carry goods. Self-driving vans have the potential to make home deliveries significantly cheaper, transforming retail commerce and possibly making hypermarkets and supermarkets redundant. At the time of writing, the U.S. government classifies automation into six levels, from level zero, in which the human driver does everything, to level five, in which the automated system performs all driving tasks. Under current law, manufacturers also bear all the responsibility to self-certify vehicles for use on public roads. This means that, as long as a vehicle complies with the regulatory framework, there are currently no specific federal legal barriers to a highly automated vehicle being offered for sale. Iyad Rahwan, an associate professor at the MIT Media Lab, said, "Most people want to live in a world where cars will minimize casualties, but everyone wants their own car to protect them at all costs." Furthermore, industry standards and best practices are still needed for these systems before they can be considered reasonably safe under real-world conditions.[206]

Legislation

The 1968 Vienna Convention on Road Traffic, subscribed to by over 70 countries worldwide, establishes principles to govern traffic laws. One of the fundamental principles of the Convention has been the concept that a driver is always fully in control and responsible for the behavior of a vehicle in traffic.[207] The progress of technology that assists and takes over the functions of the driver is undermining this principle, implying that much of the groundwork must be rewritten.

Legal status in the United States

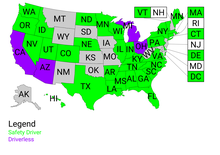

U.S. states that allow testing of driverless cars on public roads

In the United States, which is not a signatory to the Vienna Convention, state vehicle codes generally do not envisage, but do not necessarily prohibit, highly automated vehicles.[208][209] To clarify the legal status of such vehicles and otherwise regulate them, several states have enacted or are considering specific laws.[210] As of 2016, seven states (Nevada, California, Florida, Michigan, Hawaii, Washington, and Tennessee), along with the District of Columbia, had enacted laws for autonomous vehicles. Incidents such as the first fatal accident involving Tesla's Autopilot system have led to discussion about revising laws and standards for autonomous cars.

In September 2016, the US National Economic Council and Department of Transportation released federal standards that describe how automated vehicles should react if their technology fails, how to protect passenger privacy, and how riders should be protected in the event of an accident. The new federal guidelines are meant to avoid a patchwork of state laws, while avoiding being so overbearing as to stifle innovation.[211]

In June 2011, the Nevada Legislature passed a law to authorize the use of autonomous cars. Nevada thus became the first jurisdiction in the world where autonomous vehicles might be legally operated on public roads. According to the law, the Nevada Department of Motor Vehicles (NDMV) is responsible for setting safety and performance standards and for designating areas where autonomous cars may be tested.[212][213][214] This legislation was supported by Google in an effort to legally conduct further testing of its Google driverless car.[215] The Nevada law defines an autonomous vehicle to be "a motor vehicle that uses artificial intelligence, sensors and global positioning system coordinates to drive itself without the active intervention of a human operator." The law also acknowledges that the operator will not need to pay attention while the car is operating itself. Google had further lobbied for an exemption from a ban on distracted driving to permit occupants to send text messages while sitting behind the wheel, but this did not become law.[215][216][217] Furthermore, Nevada's regulations require a person behind the wheel and one in the passenger’s seat during tests.[218]

In April 2012, Florida became the second state to allow the testing of autonomous cars on public roads,[219] and California became the third when Governor Jerry Brown signed a bill into law at Google headquarters in Mountain View.[220] In December 2013, Michigan became the fourth state to allow testing of driverless cars on public roads.[221] In July 2014, the city of Coeur d'Alene, Idaho, adopted a robotics ordinance that includes provisions to allow for self-driving cars.

A Toyota Prius modified by Google to operate as a driverless car.

On 19 February 2016, Assembly Bill No. 2866 was introduced in California that would allow completely autonomous vehicles to operate on the road, including those without a driver, steering wheel, accelerator pedal, or brake pedal. The bill states that the Department of Motor Vehicles would need to comply with these regulations by 1 July 2018 for the rules to take effect. At the time of writing, the bill had not yet passed its house of origin.[223]

In September 2016, the U.S. Department of Transportation released its Federal Automated Vehicles Policy,[224] and California published discussions on the subject in October 2016.[225]

In December 2016, the California Department of Motor Vehicles ordered Uber to remove its self-driving vehicles from the road in response to two red-light violations. Uber immediately blamed the violations on "human error" and suspended the drivers.[226]

Legislation in Europe

In 2013, the government of the United Kingdom permitted the testing of autonomous cars on public roads.[227] Before this, all testing of robotic vehicles in the UK had been conducted on private property.[227] In 2014, the Government of France announced that testing of autonomous cars on public roads would be allowed in 2015. A total of 2,000 km of road would be opened across the country, especially in Bordeaux, Isère, Île-de-France and Strasbourg. At the 2015 ITS World Congress, a conference dedicated to intelligent transport systems, the very first demonstration of autonomous vehicles on open roads in France was carried out in Bordeaux in early October 2015.[228]

In 2015, a preemptive lawsuit against various automobile companies such as GM, Ford, and Toyota accused them of "Hawking vehicles that are vulnerable to hackers who could hypothetically wrest control of essential functions such as brakes and steering."[229]

In spring of 2015, the Federal Department of Environment, Transport, Energy and Communications in Switzerland (UVEK) allowed Swisscom to test a driverless Volkswagen Passat on the streets of Zurich.[230]

As of April 2017, public road tests of development vehicles can be conducted in Hungary. In addition, a closed test track suitable for testing highly automated functions, the Zala Zone test track,[231] is under construction near the city of Zalaegerszeg.[232]

Legislation in Asia

In 2016, the Singapore Land Transport Authority, in partnership with UK automotive supplier Delphi Automotive Plc, was set to launch preparations for a test run of a fleet of automated taxis, with an on-demand autonomous cab service intended to start in 2017.[233]

Liability

Autonomous car liability is a developing area of law and policy that will determine who is liable when an autonomous car causes physical damage to persons or breaks road rules.[234] As autonomous cars shift the control of driving from humans to autonomous car technology, existing liability laws may need to evolve in order to fairly identify the parties responsible for damage and injury, and to address potential conflicts of interest between human occupants, system operators, insurers, and the public purse.[95] Increasing use of autonomous car technologies (e.g. advanced driver-assistance systems) may prompt incremental shifts in this responsibility for driving. Proponents claim that these technologies could affect the frequency of road accidents, although this claim is difficult to assess without data from substantial actual use.[235] If there were a dramatic improvement in safety, operators might seek to project their liability for the remaining accidents onto others as part of their reward for the improvement. However, there is no obvious reason why they should escape liability if any such effects were found to be modest or nonexistent, since part of the purpose of liability is to give the party controlling something an incentive to do whatever is necessary to prevent it from causing harm. Potential users may be reluctant to trust an operator that seeks to pass its normal liability on to others. In any case, a well-advised person who is not controlling a car at all (Level 5) would understandably be reluctant to accept liability for something out of their control.
Where control can be shared to some degree (Level 3 or 4), a well-advised person would be concerned that the vehicle might try to pass control back in the last seconds before an accident, passing responsibility and liability back too, in circumstances where the potential driver has no better prospect of avoiding the crash than the vehicle: they have not necessarily been paying close attention, and a situation too hard for a very smart car may also be too hard for a human. Operators, especially those accustomed to trying to ignore existing legal obligations (under a motto like 'seek forgiveness, not permission'), such as Google or Uber, could normally be expected to try to avoid responsibility to the greatest degree possible, so there is a risk of attempts to let operators evade liability for accidents that occur while they are in control.

As higher levels of autonomy are commercially introduced (level 3 and 4), the insurance industry may see a greater proportion of commercial and product liability lines while personal automobile insurance shrinks.[236]

Vehicular communication systems

Individual vehicles may benefit from information obtained from other vehicles in the vicinity, especially information relating to traffic congestion and safety hazards. Vehicular communication systems use vehicles and roadside units as the communicating nodes in a peer-to-peer network, providing each other with information. As a cooperative approach, vehicular communication systems can allow all cooperating vehicles to be more effective. According to a 2010 study by the National Highway Traffic Safety Administration, vehicular communication systems could help avoid up to 79 percent of all traffic accidents.[237] In 2012, computer scientists at the University of Texas at Austin began developing smart intersections designed for autonomous cars. The intersections would have no traffic lights and no stop signs, and would instead use computer programs that communicate directly with each car on the road.[238]

In 2017, researchers proposed Crossroads, an efficient intersection management technique that is robust to network delays in vehicle-to-infrastructure (V2I) communication and to the worst-case execution time of the intersection manager.[239]
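One way to picture an intersection without signals is a manager that grants each approaching vehicle a time window in which it may occupy the crossing. The Python sketch below assumes a simplified first-come, first-served reservation scheme with a fixed crossing time and a safety margin; `IntersectionManager`, `Reservation` and all parameter values are hypothetical, and it is neither the UT Austin design nor Crossroads, which the text does not describe in detail. For simplicity it treats the whole intersection as one shared resource, whereas a practical manager would let vehicles on non-conflicting paths cross at the same time.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reservation:
    vehicle_id: str
    enter: float  # time the vehicle enters the intersection, s
    leave: float  # time it has fully cleared the intersection, s


class IntersectionManager:
    """Hypothetical first-come, first-served reservation manager.

    Simplification: the whole intersection is one shared resource, so
    only one vehicle may be inside it at a time, with a safety margin
    between consecutive vehicles.
    """

    def __init__(self, crossing_time: float = 2.0, margin: float = 0.5):
        self.crossing_time = crossing_time
        self.margin = margin
        self.reservations: List[Reservation] = []

    def _conflicts(self, enter: float, r: Reservation) -> bool:
        # Overlap test for [enter, enter + crossing_time] against r,
        # padded by the margin on both sides.
        return (enter < r.leave + self.margin
                and enter + self.crossing_time + self.margin > r.enter)

    def request(self, vehicle_id: str, earliest_arrival: float) -> float:
        """Grant the earliest conflict-free entry time at or after arrival."""
        enter = earliest_arrival
        for r in sorted(self.reservations, key=lambda r: r.enter):
            if self._conflicts(enter, r):
                enter = r.leave + self.margin  # wait until r has cleared
        self.reservations.append(
            Reservation(vehicle_id, enter, enter + self.crossing_time))
        return enter


manager = IntersectionManager()
print(manager.request("car-A", 10.0))  # 10.0
print(manager.request("car-B", 10.5))  # 12.5 (waits for car-A)
print(manager.request("car-C", 20.0))  # 20.0
```

Because granted windows never overlap, a vehicle that later asks for a time in a free gap (for example 15.0 here) is granted it directly; this is the kind of scheduling that replaces a traffic light's fixed phases.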

In January 2016, a Helsinki think tank predicted that, among connected cars, an unconnected car would be the weakest link and would increasingly be banned from busy high-speed roads.[240]

Public opinion surveys

In a 2011 online survey of 2,006 US and UK consumers by Accenture, 49% said they would be comfortable using a "driverless car".[241]

A 2012 survey of 17,400 vehicle owners by J.D. Power and Associates found 37% initially said they would be interested in purchasing a fully autonomous car. However, that figure dropped to 20% if told the technology would cost $3,000 more.[242]

In a 2012 survey of about 1,000 German drivers by automotive researcher Puls, 22% of the respondents had a positive attitude towards these cars, 10% were undecided, 44% were skeptical and 24% were hostile.[243]

A 2013 survey of 1,500 consumers across 10 countries by Cisco Systems found 57% "stated they would be likely to ride in a car controlled entirely by technology that does not require a human driver", with Brazil, India and China the most willing to trust autonomous technology.[244]

In a 2014 US telephone survey by Insurance.com, over three-quarters of licensed drivers said they would at least consider buying a self-driving car, rising to 86% if car insurance were cheaper. 31.7% said they would not continue to drive once an autonomous car was available instead.[245]

In a February 2015 survey of top auto journalists, 46% predicted that either Tesla or Daimler would be the first to market with a fully autonomous vehicle, while 38% predicted that Daimler's would be the most functional, safe, and in-demand autonomous vehicle.[246]

In 2015, a questionnaire survey by Delft University of Technology explored the opinions of 5,000 people from 109 countries on automated driving. Results showed that respondents, on average, found manual driving the most enjoyable mode of driving. 22% of the respondents did not want to spend any money on a fully automated driving system. Respondents were most concerned about software hacking or misuse, and were also concerned about legal issues and safety. Finally, respondents from more developed countries (in terms of lower accident statistics, higher education, and higher income) were less comfortable with their vehicle transmitting data.[247] The survey also reported on consumers' interest in purchasing an automated car: 37% of surveyed current owners were either "definitely" or "probably" interested in purchasing one.[247]

In 2016, a survey in Germany examined the attitudes of 1,603 people, representative of the German population in terms of age, gender, and education, towards partially, highly, and fully automated cars. Results showed that men and women differ in their willingness to use them. Men felt less anxiety and more joy towards automated cars, whereas women showed the exact opposite. The gender difference in anxiety was especially pronounced between young men and women but decreased with participants' age.[248]

In 2016, a PwC survey of 1,584 people in the United States found that "66 percent of respondents said they think autonomous cars are probably smarter than the average human driver". Respondents were still worried about safety, and above all about the car being hacked. Nevertheless, only 13% of the interviewees saw no advantages in this new kind of car.[249]

A Pew Research Center survey of 4,135 U.S. adults conducted 1–15 May 2017 finds that many Americans anticipate significant impacts from various automation technologies in the course of their lifetimes—from the widespread adoption of autonomous vehicles to the replacement of entire job categories with robot workers.[250]

Moral issues

With the emergence of autonomous automobiles, various ethical issues arise. While the introduction of autonomous vehicles to the mass market is said to be inevitable due to an (untestable) potential for reducing crashes by "up to" 90%[251] and their potentially greater accessibility to disabled, elderly, and young passengers, a range of ethical issues have not been fully addressed. These include, but are not limited to: the moral, financial, and criminal responsibility for crashes and breaches of law; the decisions a car is to make right before a (fatal) crash; privacy issues, including the potential for mass surveillance; the potential for massive job losses and unemployment among drivers; de-skilling and loss of independence by vehicle users; exposure to hacking and malware; the further concentration of market and data power in the hands of a few global conglomerates capable of consolidating AI capacity and of lobbying governments to facilitate shifting liability onto others; and the potential destruction of existing occupations and industries.

There are different opinions on who should be held liable in case of a crash, especially when people are hurt. Many experts see the car manufacturers themselves as responsible for crashes that occur due to a technical malfunction or faulty construction.[252] Besides the fact that the manufacturer would be the source of the problem when a car crashes due to a technical issue, there is another important reason why manufacturers could be held responsible: it would encourage them to innovate and invest heavily in fixing those issues, not only to protect their brand image but also because of the financial and criminal consequences. However, there are also voices [who?] arguing that those using or owning the vehicle should be held responsible, since they know the risks involved in using such a vehicle. Experts [who?] suggest introducing a tax or insurance that would protect owners and users of autonomous vehicles from claims made by accident victims.[252] Other parties that could be held responsible in case of a technical failure include the software engineers who programmed the code for the vehicles' autonomous operation and the suppliers of components for the autonomous vehicle.[253]

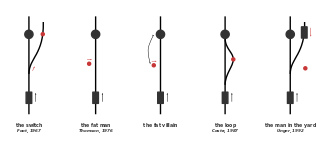

Setting aside the questions of legal liability and moral responsibility, the question arises of how autonomous vehicles should be programmed to behave in an emergency in which either passengers or other traffic participants are endangered. A moral dilemma that a software engineer or car manufacturer might face in programming the operating software is described in an ethical thought experiment, the trolley problem: the conductor of a trolley can either stay on the planned track and run over five people, or turn the trolley onto another track where it would kill only one person, assuming there is no other traffic on it.[254] Two main questions need to be addressed. First, what moral basis would an autonomous vehicle use to make decisions? Second, how could that basis be translated into software code? Researchers have suggested two ethical theories in particular as applicable to the behavior of autonomous vehicles in emergencies: deontology and utilitarianism.[255] Asimov's Three Laws of Robotics are a typical example of deontological ethics. Deontology holds that an autonomous car must follow strict, explicitly written rules in every situation. Utilitarianism holds that every decision should aim to maximize utility. This requires a definition of utility, such as the number of people who survive a crash. Critics suggest that autonomous vehicles should adopt a mix of several theories in order to respond in a morally right way in the event of a crash.[255]
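As a toy illustration of how the two theories lead to different decisions, not a description of how any manufacturer actually programs its vehicles, the trolley problem can be encoded in a few lines of Python. The `Maneuver` type, its fields, and the single rule "never actively redirect harm onto someone" are hypothetical assumptions chosen for the example, and the sketch assumes the outcome of each maneuver is known exactly.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Maneuver:
    """A candidate action in a hypothetical emergency (illustrative only)."""
    name: str
    expected_deaths: int   # assumed to be known exactly, which real systems cannot do
    redirects_harm: bool   # hypothetical rule input: does the car actively steer harm onto someone?


def deontological_choice(options: Sequence[Maneuver]) -> Optional[Maneuver]:
    # Rule-based: any maneuver that actively redirects harm is forbidden outright.
    # Outcomes are not consulted; the first permitted option (the default course) is kept.
    permitted = [m for m in options if not m.redirects_harm]
    return permitted[0] if permitted else None  # None: the rules give no answer


def utilitarian_choice(options: Sequence[Maneuver]) -> Maneuver:
    # Outcome-based: utility is defined here as lives saved, so minimize expected deaths.
    return min(options, key=lambda m: m.expected_deaths)


# The trolley problem: stay on course (five deaths) or swerve (one death).
trolley = [
    Maneuver("stay on planned track", expected_deaths=5, redirects_harm=False),
    Maneuver("swerve onto other track", expected_deaths=1, redirects_harm=True),
]
print(deontological_choice(trolley).name)  # stay on planned track
print(utilitarian_choice(trolley).name)    # swerve onto other track
```

Filtering options by rules first and then minimizing deaths among the permitted ones would be one simple form of the mixed approach that critics propose.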

(Many "trolley" discussions skip over practical problems: how a probabilistic machine-learning vehicle AI could be sophisticated enough to recognize, from instant to instant and while dynamically projecting into the near future, that a deep problem of moral philosophy is presenting itself; what sort of moral problem it would actually be, if any; what weights in human-value terms should be given to all the other people involved, who will probably be identified unreliably; and how reliably it can assess the probable outcomes. These practical difficulties, and those of testing and assessing solutions to them, may present as much of a challenge as the theoretical abstractions.)

Privacy-related issues arise mainly from the interconnectivity of autonomous cars, which makes each car just another mobile device that can gather information about an individual. This information includes the routes taken, voice and video recordings, preferences in the media consumed in the car, behavioral patterns, and many other streams of information.[256][257] The data and communications infrastructure needed to support these vehicles may also be capable of surveillance, especially if coupled to other data sets and advanced analytics.

The introduction of autonomous vehicles to the mass market might cost up to 5 million jobs in the US alone, almost 3% of the workforce.[258] Those jobs include drivers of taxis, buses, vans, trucks, and e-hailing vehicles. Many industries, such as the auto insurance industry, are indirectly affected. The auto insurance industry alone generates annual revenue of about $220 billion and supports 277,000 jobs.[259] To put this into perspective, that is about the number of mechanical engineering jobs.[260] The potential loss of a majority of those jobs would have a tremendous impact on the individuals involved.[261] Both India and China have placed bans on automated cars, with the former citing the protection of jobs.[citation needed]

Anticipated launch of self-driving cars

In December 2015, Tesla CEO Elon Musk predicted that a completely autonomous car would be introduced by the end of 2018;[262] in December 2017, he announced that it would take another two years to launch a fully self-driving Tesla onto the market.[263] BMW's all-electric autonomous car, called iNext, is expected to be ready by 2021; Toyota's first self-driving car is due to hit the market in 2020, as is the driverless car being developed by Nissan.[264]

In fiction

In film

The autonomous and occasionally sentient self-driving car story has earned its place in both literary science fiction and pop sci-fi.[265]

- A VW Beetle named Dudu features in the German Superbug film series (1971 to 1978), movies similar to Disney's Herbie films, except that Dudu has an electronic brain. (Herbie, also a Beetle, was depicted as an anthropomorphic car with its own spirit.)

- In the film Batman (1989), starring Michael Keaton, the Batmobile is shown to be able to drive to Batman's current location with some navigation commands from Batman and possibly some autonomy.

- The film Total Recall (1990), starring Arnold Schwarzenegger, features taxis called Johnny Cabs, controlled by artificial intelligence in the car or by its android occupant.

- The film Demolition Man (1993), starring Sylvester Stallone and set in 2032, features vehicles that can be self-driven or commanded to "Auto Mode" where a voice-controlled computer operates the vehicle.

- The film Timecop (1994), starring Jean-Claude Van Damme and set in 1994 and 2004, features autonomous cars.

- Another Arnold Schwarzenegger movie, The 6th Day (2000), features an autonomous car commanded by Michael Rapaport's character.

- The film Minority Report (2002), set in Washington, D.C. in 2054, features an extended chase sequence involving autonomous cars. The vehicle of protagonist John Anderton is transporting him when its systems are overridden by police in an attempt to bring him into custody.

- The film I, Robot (2004), set in Chicago in 2035, features autonomous vehicles driving on highways, which allows them to travel more safely at higher speeds than under manual control. The vehicles can still be operated manually.

- In the film The Incredibles (2004), Mr. Incredible lets his car drive itself while it changes him into his supersuit on his way to save a cat from a tree.

- In the film Eagle Eye (2008), Shia LaBeouf and Michelle Monaghan are driven around in a Porsche Cayenne controlled by ARIIA, a giant supercomputer.

- Logan (2017), set in 2029, features fully autonomous trucks.

- Blade Runner 2049 (2017) opens with LAPD Replicant cop K waking up in his 3-wheeled autonomous flying vehicle (featuring a separable surveillance roof drone) on approach to a protein farm in northern California.

- Geostorm (2017), set in 2022, features a self-driving taxi stolen by protagonists Max Lawson and Sarah Wilson to protect the President from mercenaries and a superstorm.

In literature

Intelligent or self-driving cars are a common theme in science fiction literature. Examples include:

- In Isaac Asimov's science-fiction short story "Sally" (first published May–June 1953), autonomous cars have "positronic brains", communicate via honking horns and slamming doors, and save their human caretaker.

- Peter F. Hamilton's Commonwealth Saga series features intelligent or self-driving vehicles.

- In Robert A. Heinlein's novel The Number of the Beast (1980), Zeb Carter's driving and flying car "Gay Deceiver" is at first semi-autonomous and later, after modifications by Zeb's wife Deety, becomes sentient and capable of fully autonomous operation.

- In Edizioni Piemme's series Geronimo Stilton, a robotic vehicle called "Solar" appears in the 54th book.

- Alastair Reynolds' series, Revelation Space, features intelligent or self-driving vehicles.

- In Daniel Suarez's novels Daemon (2006) and Freedom™ (2010), driverless cars and motorcycles are used for attacks in software-based, open-source warfare. The vehicles are modified for this purpose using 3D printers and distributed manufacturing[266] and can also operate as swarms.

In television

- "CSI: Cyber" Season 2, episode 6, Gone in 60 Seconds, features three seemingly normal customized vehicles, a 2009 Nissan Fairlady Z Roadster, a BMW M3 E90 and a Cadillac CTS-V, and one stock luxury BMW 7 Series, being remote-controlled by a computer hacker.

- "Handicar", season 18, episode 4 (2014) of the TV series South Park, features a Japanese autonomous car that takes part in a Wacky Races-style car race.

- KITT and KARR, the Pontiac Trans Ams in the 1982 TV series Knight Rider, were sentient and autonomous.

- "Driven", season 4, episode 11 of the TV series NCIS (2003), features a robotic vehicle named "Otto", part of a high-level Department of Defense project, which causes the death of a Navy lieutenant and later almost kills Abby.

- The TV series "Viper" features a silver-grey armored assault vehicle, called The Defender, which masquerades as a flame-red 1992 Dodge Viper RT/10 and later as a 1998 cobalt-blue Dodge Viper GTS. The vehicle's sophisticated computer systems allow it to be operated by remote control on some occasions.

- "Black Mirror" episode "Hated in the Nation" briefly features a self-driving SUV with a touchscreen interface on the inside.

- The TV series "Bull" discusses the effectiveness and safety of self-driving cars in an episode called "E.J."[267]

- The animated series "Blaze and the Monster Machines" features self-driving cars and trucks.