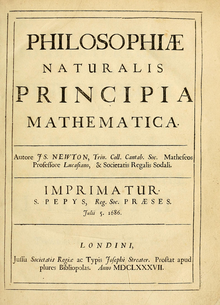

Title page of Principia, first edition (1686/1687)

| | |
|---|---|
| Original title | Philosophiæ Naturalis Principia Mathematica |
| Language | New Latin |
| Publication date | 1687 |
| Published in English | 1728 |
| LC Class | QA803 .A53 |

Philosophiæ Naturalis Principia Mathematica (Latin for Mathematical Principles of Natural Philosophy), often referred to as simply the Principia, is a work in three books by Isaac Newton, in Latin, first published 5 July 1687. After annotating and correcting his personal copy of the first edition, Newton published two further editions, in 1713 and 1726. The Principia states Newton's laws of motion, forming the foundation of classical mechanics; Newton's law of universal gravitation; and a derivation of Kepler's laws of planetary motion (which Kepler first obtained empirically).

The Principia is considered one of the most important works in the history of science.[6]

The French mathematical physicist Alexis Clairaut assessed it in 1747: "The famous book of Mathematical Principles of Natural Philosophy marked the epoch of a great revolution in physics. The method followed by its illustrious author Sir Newton ... spread the light of mathematics on a science which up to then had remained in the darkness of conjectures and hypotheses."[7]

A more recent assessment has been that while acceptance of Newton's theories was not immediate, by the end of a century after publication in 1687, "no one could deny that" (out of the Principia) "a science had emerged that, at least in certain respects, so far exceeded anything that had ever gone before that it stood alone as the ultimate exemplar of science generally."[8]

In formulating his physical theories, Newton developed and used mathematical methods now included in the field of calculus. But the language of calculus as we know it was largely absent from the Principia; Newton gave many of his proofs in a geometric form of infinitesimal calculus, based on limits of ratios of vanishingly small geometric quantities.[9] In a revised conclusion to the Principia (see General Scholium), Newton used his expression that became famous, Hypotheses non fingo ("I formulate no hypotheses"[10]).

Contents

Expressed aim and topics covered

Sir Isaac Newton (1643–1727) author of the Principia

In the preface of the Principia, Newton wrote:[11]

[...] Rational Mechanics will be the science of motion resulting from any forces whatsoever, and of the forces required to produce any motion, accurately proposed and demonstrated [...] And therefore we offer this work as mathematical principles of philosophy. For all the difficulty of philosophy seems to consist in this: from the phenomena of motions to investigate the forces of Nature, and then from these forces to demonstrate the other phenomena [...]

The Principia deals primarily with massive bodies in motion, initially under a variety of conditions and hypothetical laws of force in both non-resisting and resisting media, thus offering criteria to decide, by observations, which laws of force are operating in phenomena that may be observed. It attempts to cover hypothetical or possible motions both of celestial bodies and of terrestrial projectiles. It explores difficult problems of motions perturbed by multiple attractive forces. Its third and final book deals with the interpretation of observations about the movements of planets and their satellites.

It:

- shows how astronomical observations prove the inverse square law of gravitation (to an accuracy that was high by the standards of Newton's time);

- offers estimates of relative masses for the known giant planets and for the Earth and the Sun;

- defines the very slow motion of the Sun relative to the solar-system barycenter;

- shows how the theory of gravity can account for irregularities in the motion of the Moon;

- identifies the oblateness of the figure of the Earth;

- accounts approximately for marine tides including phenomena of spring and neap tides by the perturbing (and varying) gravitational attractions of the Sun and Moon on the Earth's waters;

- explains the precession of the equinoxes as an effect of the gravitational attraction of the Moon on the Earth's equatorial bulge; and

- gives a theoretical basis for numerous phenomena about comets and their elongated, near-parabolic orbits.

The Principia begins with "Definitions"[13] and "Axioms or Laws of Motion",[14] and continues in three books:

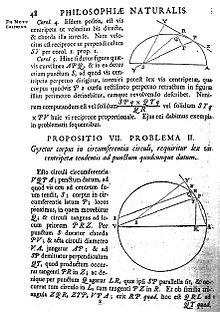

Book 1, De motu corporum

Book 1, subtitled De motu corporum (On the motion of bodies) concerns motion in the absence of any resisting medium. It opens with a mathematical exposition of "the method of first and last ratios",[15] a geometrical form of infinitesimal calculus.

Newton's proof of Kepler's second law, as described in the book. If an instantaneous centripetal force (red arrow) is considered on the planet during its orbit, the area of the triangles defined by the path of the planet will be the same. This is true for any fixed time interval. When the interval tends to zero, the force can be considered continuous.

The second section establishes relationships between centripetal forces and the law of areas now known as Kepler's second law (Propositions 1–3),[16] and relates circular velocity and radius of path-curvature to radial force[17] (Proposition 4), and relationships between centripetal forces varying as the inverse-square of the distance to the center and orbits of conic-section form (Propositions 5–10).
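The polygonal construction behind Proposition 1 can be illustrated numerically. The sketch below is a modern illustration, not Newton's own procedure: the function name `swept_areas`, the step size, and the choice of an inverse-square kick are all assumptions made for the example. The body moves in a straight line for a fixed time, receives an instantaneous kick directed at the force centre (the origin), and the triangle swept out in each interval is recorded.

```python
import math

def swept_areas(pos, vel, dt, steps, impulse):
    """Straight-line motion for dt, then an instantaneous kick aimed at
    the origin (the force centre). Returns the area of each swept triangle."""
    x, y = pos
    vx, vy = vel
    areas = []
    for _ in range(steps):
        nx, ny = x + vx * dt, y + vy * dt          # uniform motion between kicks
        areas.append(0.5 * abs(x * ny - y * nx))   # triangle: centre, old point, new point
        r = math.hypot(nx, ny)
        k = impulse(r)                             # size of the kick at distance r
        vx -= k * nx / r                           # kick points toward the origin
        vy -= k * ny / r
        x, y = nx, ny
    return areas

# Hypothetical setup: unit distance, unit tangential speed, inverse-square kicks.
areas = swept_areas((1.0, 0.0), (0.0, 1.0), 0.01, 1000, lambda r: 0.01 / r**2)
spread = max(areas) - min(areas)
print(f"first area = {areas[0]:.6f}, spread over all steps = {spread:.2e}")
```

Replacing the inverse-square kick with any other function of `r` leaves the areas equal up to rounding, which matches the caption's point: the area law depends on the force being directed at the centre, not on how its strength varies.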

Propositions 11–31[18] establish properties of motion in paths of eccentric conic-section form including ellipses, and their relation with inverse-square central forces directed to a focus, and include Newton's theorem about ovals (lemma 28).

Propositions 43–45[19] demonstrate that in an eccentric orbit under centripetal force where the apse may move, a steady non-moving orientation of the line of apses is an indicator of an inverse-square law of force.

Book 1 contains some proofs with little connection to real-world dynamics. But there are also sections with far-reaching application to the solar system and universe:

Propositions 57–69[20] deal with the "motion of bodies drawn to one another by centripetal forces". This section is of primary interest for its application to the solar system, and includes Proposition 66[21] along with its 22 corollaries:[22] here Newton took the first steps in the definition and study of the problem of the movements of three massive bodies subject to their mutually perturbing gravitational attractions, a problem which later gained name and fame (among other reasons, for its great difficulty) as the three-body problem.

Propositions 70–84[23] deal with the attractive forces of spherical bodies. The section contains Newton's proof that a massive spherically symmetrical body attracts other bodies outside itself as if all its mass were concentrated at its centre. This fundamental result, called the Shell theorem, enables the inverse square law of gravitation to be applied to the real solar system to a very close degree of approximation.
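The shell theorem can be checked numerically for a single thin shell. The sketch below is a modern illustration under stated assumptions, not Newton's geometric proof: it slices a uniform shell of radius `R` into rings, sums the axial component of each ring's inverse-square pull on a point at distance `d` from the centre, and works in units where the gravitational constant, the shell mass, and the test mass are all 1. The function name `shell_attraction` and the ring count are hypothetical.

```python
import math

def shell_attraction(d, R=1.0, n=20000):
    """Net pull toward the shell's centre on a point at distance d,
    per unit (G * shell mass * test mass), by the midpoint rule over rings."""
    total = 0.0
    dtheta = math.pi / n
    for i in range(n):
        theta = (i + 0.5) * dtheta                     # polar angle of this ring
        ring_mass = 0.5 * math.sin(theta) * dtheta     # ring's fraction of the shell mass
        s2 = d * d + R * R - 2.0 * d * R * math.cos(theta)  # squared distance to the ring
        # inverse-square pull times the cosine of its angle to the axis
        total += ring_mass * (d - R * math.cos(theta)) / s2 ** 1.5
    return total

outside = shell_attraction(3.0)   # compare with a point mass at the centre: 1 / 3.0**2
inside = shell_attraction(0.5)    # compare with zero net force inside the shell
print(outside, 1 / 3.0**2, inside)
```

A solid, spherically symmetric body can be treated as nested shells, so the same comparison carries over to the case described in the text.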

Book 2

Part of the contents originally planned for the first book was divided out into a second book, which largely concerns motion through resisting mediums. Just as Newton examined consequences of different conceivable laws of attraction in Book 1, here he examines different conceivable laws of resistance; thus Section 1 discusses resistance in direct proportion to velocity, and Section 2 goes on to examine the implications of resistance in proportion to the square of velocity. Book 2 also discusses (in Section 5) hydrostatics and the properties of compressible fluids. The effects of air resistance on pendulums are studied in Section 6, along with Newton's account of experiments that he carried out, to try to find out some characteristics of air resistance in reality by observing the motions of pendulums under different conditions. Newton compares the resistance offered by a medium against motions of globes with different properties (material, weight, size). In Section 8, he derives rules to determine the speed of waves in fluids and relates them to the density and condensation (Proposition 48;[24] this would become very important in acoustics). He assumes that these rules apply equally to light and sound and estimates that the speed of sound is around 1088 feet per second and can increase depending on the amount of water in air.[25]

Less of Book 2 has stood the test of time than of Books 1 and 3, and it has been said that Book 2 was largely written on purpose to refute a theory of Descartes which had some wide acceptance before Newton's work (and for some time after). According to this Cartesian theory of vortices, planetary motions were produced by the whirling of fluid vortices that filled interplanetary space and carried the planets along with them.[26] Newton wrote at the end of Book 2[27] his conclusion that the hypothesis of vortices was completely at odds with the astronomical phenomena, and served not so much to explain as to confuse them.

Book 3, De mundi systemate

Book 3, subtitled De mundi systemate (On the system of the world), is an exposition of many consequences of universal gravitation, especially its consequences for astronomy. It builds upon the propositions of the previous books, and applies them with further specificity than in Book 1 to the motions observed in the solar system. Here (introduced by Proposition 22,[28] and continuing in Propositions 25–35[29]) are developed several of the features and irregularities of the orbital motion of the Moon, especially the variation. Newton lists the astronomical observations on which he relies,[30] and establishes in a stepwise manner that the inverse square law of mutual gravitation applies to solar system bodies, starting with the satellites of Jupiter[31] and going on by stages to show that the law is of universal application.[32] He also gives, starting at Lemma 4[33] and Proposition 40,[34] the theory of the motions of comets, for which much data came from John Flamsteed and Edmond Halley, and accounts for the tides,[35] attempting quantitative estimates of the contributions of the Sun[36] and Moon[37] to the tidal motions; and offers the first theory of the precession of the equinoxes.[38] Book 3 also considers the harmonic oscillator in three dimensions, and motion in arbitrary force laws.

In Book 3 Newton also made clear his heliocentric view of the solar system, modified in a somewhat modern way, since already in the mid-1680s he recognised the "deviation of the Sun" from the centre of gravity of the solar system.[39] For Newton, "the common centre of gravity of the Earth, the Sun and all the Planets is to be esteem'd the Centre of the World",[40] and this centre "either is at rest, or moves uniformly forward in a right line".[41] Newton rejected the second alternative after adopting the position that "the centre of the system of the world is immoveable", which "is acknowledg'd by all, while some contend that the Earth, others, that the Sun is fix'd in that centre".[41] Newton estimated the mass ratios Sun:Jupiter and Sun:Saturn,[42] and pointed out that these put the centre of the Sun usually a little way off the common center of gravity, but only a little, the distance at most "would scarcely amount to one diameter of the Sun".[43]

Commentary on the Principia

The sequence of definitions used in setting up dynamics in the Principia is recognisable in many textbooks today. Newton first set out the definition of mass:

The quantity of matter is that which arises conjointly from its density and magnitude. A body twice as dense in double the space is quadruple in quantity. This quantity I designate by the name of body or of mass.

This was then used to define the "quantity of motion" (today called momentum), and the principle of inertia in which mass replaces the previous Cartesian notion of intrinsic force. This then set the stage for the introduction of forces through the change in momentum of a body. Curiously, for today's readers, the exposition looks dimensionally incorrect, since Newton does not introduce the dimension of time in rates of change of quantities.

He defined space and time "not as they are well known to all". Instead, he defined "true" time and space as "absolute"[44] and explained:

Only I must observe, that the vulgar conceive those quantities under no other notions but from the relation they bear to perceptible objects. And it will be convenient to distinguish them into absolute and relative, true and apparent, mathematical and common. [...] instead of absolute places and motions, we use relative ones; and that without any inconvenience in common affairs; but in philosophical discussions, we ought to step back from our senses, and consider things themselves, distinct from what are only perceptible measures of them.

To some modern readers it can appear that some dynamical quantities recognised today were used in the Principia but not named. The mathematical aspects of the first two books were so clearly consistent that they were easily accepted; for example, Locke asked Huygens whether he could trust the mathematical proofs, and was assured about their correctness.

However, the concept of an attractive force acting at a distance received a cooler response. In his notes, Newton wrote that the inverse square law arose naturally due to the structure of matter. However, he retracted this sentence in the published version, where he stated that the motion of planets is consistent with an inverse square law, but refused to speculate on the origin of the law. Huygens and Leibniz noted that the law was incompatible with the notion of the aether. From a Cartesian point of view, therefore, this was a faulty theory. Newton's defence has been adopted since by many famous physicists—he pointed out that the mathematical form of the theory had to be correct since it explained the data, and he refused to speculate further on the basic nature of gravity. The sheer number of phenomena that could be organised by the theory was so impressive that younger "philosophers" soon adopted the methods and language of the Principia.

Rules of Reasoning in Philosophy

Perhaps to reduce the risk of public misunderstanding, Newton included at the beginning of Book 3 (in the second (1713) and third (1726) editions) a section entitled "Rules of Reasoning in Philosophy." In the four rules, as they came finally to stand in the 1726 edition, Newton effectively offers a methodology for handling unknown phenomena in nature and reaching towards explanations for them. The four Rules of the 1726 edition run as follows (omitting some explanatory comments that follow each):

Rule 1: We are to admit no more causes of natural things than such as are both true and sufficient to explain their appearances.

Rule 2: Therefore to the same natural effects we must, as far as possible, assign the same causes.

Rule 3: The qualities of bodies, which admit neither intensification nor remission of degrees, and which are found to belong to all bodies within the reach of our experiments, are to be esteemed the universal qualities of all bodies whatsoever.

Rule 4: In experimental philosophy we are to look upon propositions inferred by general induction from phenomena as accurately or very nearly true, notwithstanding any contrary hypothesis that may be imagined, till such time as other phenomena occur, by which they may either be made more accurate, or liable to exceptions.

This section of Rules for philosophy is followed by a listing of 'Phenomena', in which are listed a number of mainly astronomical observations, that Newton used as the basis for inferences later on, as if adopting a consensus set of facts from the astronomers of his time.

Both the 'Rules' and the 'Phenomena' evolved from one edition of the Principia to the next. Rule 4 first appeared in the third (1726) edition. Rules 1–3 were present as 'Rules' in the second (1713) edition, and predecessors of them were also present in the first edition of 1687, but under a different heading: there the predecessors of the three later 'Rules', and of most of the later 'Phenomena', were all grouped together under the single heading 'Hypotheses' (in which the third item was the predecessor of a heavy revision that gave the later Rule 3).

From this textual evolution, it appears that Newton wanted by the later headings 'Rules' and 'Phenomena' to clarify for his readers his view of the roles to be played by these various statements.

In the third (1726) edition of the Principia, Newton explains each rule in an alternative way and/or gives an example to back up what the rule is claiming. The first rule is explained as a philosophers' principle of economy. The second rule states that if one cause is assigned to a natural effect, then the same cause so far as possible must be assigned to natural effects of the same kind: for example respiration in humans and in animals, fires in the home and in the Sun, or the reflection of light whether it occurs terrestrially or from the planets. An extensive explanation is given of the third rule, concerning the qualities of bodies, and Newton discusses here the generalisation of observational results, with a caution against making up fancies contrary to experiments, and use of the rules to illustrate the observation of gravity and space.

Isaac Newton’s statement of the four rules revolutionised the investigation of phenomena. With these rules, Newton could in principle begin to address all of the world’s present unsolved mysteries. He was able to use his new analytical method to replace that of Aristotle, and he was able to use his method to tweak and update Galileo’s experimental method. The re-creation of Galileo's method has never been significantly changed and in its substance, scientists use it today.[citation needed]

General Scholium

The General Scholium is a concluding essay added to the second edition, 1713 (and amended in the third edition, 1726).[45] It is not to be confused with the General Scholium at the end of Book 2, Section 6, which discusses his pendulum experiments and resistance due to air, water, and other fluids.

Here Newton used what became his famous expression Hypotheses non fingo, "I formulate no hypotheses",[10] in response to criticisms of the first edition of the Principia. ('Fingo' is sometimes nowadays translated 'feign' rather than the traditional 'frame'.) Newton's gravitational attraction, an invisible force able to act over vast distances, had led to criticism that he had introduced "occult agencies" into science.[46] Newton firmly rejected such criticisms and wrote that it was enough that the phenomena implied gravitational attraction, as they did; but the phenomena did not so far indicate the cause of this gravity, and it was both unnecessary and improper to frame hypotheses of things not implied by the phenomena: such hypotheses "have no place in experimental philosophy", in contrast to the proper way in which "particular propositions are inferr'd from the phenomena and afterwards rendered general by induction".[47]

Newton also underlined his criticism of Descartes' vortex theory of planetary motions, pointing to its incompatibility with the highly eccentric orbits of comets, which carry them "through all parts of the heavens indifferently".

Newton also gave a theological argument. From the system of the world, he inferred the existence of a Lord God, along lines similar to what is sometimes called the argument from intelligent or purposive design. It has been suggested that Newton gave "an oblique argument for a unitarian conception of God and an implicit attack on the doctrine of the Trinity",[48][49] but the General Scholium appears to say nothing specifically about these matters.

Writing and publication

Halley and Newton's initial stimulus

In January 1684, Edmond Halley, Christopher Wren and Robert Hooke had a conversation in which Hooke claimed to have derived not only the inverse-square law but also all the laws of planetary motion. Wren was unconvinced, Hooke did not produce the claimed derivation although the others gave him time to do it, and Halley, who could derive the inverse-square law for the restricted circular case (by substituting Kepler's relation into Huygens' formula for the centrifugal force) but failed to derive the relation generally, resolved to ask Newton.[50]

Halley's visits to Newton in 1684 thus resulted from Halley's debates about planetary motion with Wren and Hooke, and they seem to have provided Newton with the incentive and spur to develop and write what became Philosophiae Naturalis Principia Mathematica. Halley was at that time a Fellow and Council member of the Royal Society in London (positions that in 1686 he resigned to become the Society's paid Clerk).[51] Halley's visit to Newton in Cambridge in 1684 probably occurred in August.[52] When Halley asked Newton's opinion on the problem of planetary motions discussed earlier that year between Halley, Hooke and Wren,[53] Newton surprised Halley by saying that he had already made the derivations some time ago; but that he could not find the papers. (Matching accounts of this meeting come from Halley and Abraham De Moivre to whom Newton confided.) Halley then had to wait for Newton to 'find' the results, but in November 1684 Newton sent Halley an amplified version of whatever previous work Newton had done on the subject. This took the form of a 9-page manuscript, De motu corporum in gyrum (Of the motion of bodies in an orbit): the title is shown on some surviving copies, although the (lost) original may have been without title.
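The circular-case argument mentioned at the start of this section (substituting Kepler's relation into Huygens' formula) can be written out in modern notation. This is a reconstruction for illustration, not a quotation from Halley: the symbols are assumptions, with r the orbital radius, T the period, v the orbital speed, a the acceleration given by Huygens' formula, and k a constant of proportionality.

```latex
\begin{align}
a &= \frac{v^{2}}{r}, \qquad v = \frac{2\pi r}{T}
  \;\Longrightarrow\; a = \frac{4\pi^{2} r}{T^{2}}, \\
T^{2} &= k\,r^{3} \quad \text{(Kepler's third law)}
  \;\Longrightarrow\; a = \frac{4\pi^{2}}{k}\,\frac{1}{r^{2}}.
\end{align}
```

The step works only for uniform circular motion, where the speed and radius are constant; the general case of eccentric orbits is the part that, according to the account above, Halley could not derive.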

Newton's tract De motu corporum in gyrum, which he sent to Halley in late 1684, derived what are now known as the three laws of Kepler, assuming an inverse square law of force, and generalised the result to conic sections. It also extended the methodology by adding the solution of a problem on the motion of a body through a resisting medium. The contents of De motu so excited Halley by their mathematical and physical originality and far-reaching implications for astronomical theory, that he immediately went to visit Newton again, in November 1684, to ask Newton to let the Royal Society have more of such work.[54] The results of their meetings clearly helped to stimulate Newton with the enthusiasm needed to take his investigations of mathematical problems much further in this area of physical science, and he did so in a period of highly concentrated work that lasted at least until mid-1686.[55]

Newton's single-minded attention to his work generally, and to his project during this time, is shown by later reminiscences from his secretary and copyist of the period, Humphrey Newton. His account tells of Isaac Newton's absorption in his studies, how he sometimes forgot his food, or his sleep, or the state of his clothes, and how when he took a walk in his garden he would sometimes rush back to his room with some new thought, not even waiting to sit before beginning to write it down.[56] Other evidence also shows Newton's absorption in the Principia: Newton for years kept up a regular programme of chemical or alchemical experiments, and he normally kept dated notes of them, but for a period from May 1684 to April 1686, Newton's chemical notebooks have no entries at all.[57] So it seems that Newton abandoned pursuits to which he was normally dedicated, and did very little else for well over a year and a half, but concentrated on developing and writing what became his great work.

The first of the three constituent books was sent to Halley for the printer in spring 1686, and the other two books somewhat later. The complete work, published by Halley at his own financial risk,[58] appeared in July 1687. Newton had also communicated De motu to Flamsteed, and during the period of composition he exchanged a few letters with Flamsteed about observational data on the planets, eventually acknowledging Flamsteed's contributions in the published version of the Principia of 1687.

Preliminary version

Newton's own first edition copy of his Principia, with handwritten corrections for the second edition.

The process of writing that first edition of the Principia went through several stages and drafts: some parts of the preliminary materials still survive, while others are lost except for fragments and cross-references in other documents.[59]

Surviving materials show that Newton (up to some time in 1685) conceived his book as a two-volume work. The first volume was to be titled De motu corporum, Liber primus, with contents that later appeared in extended form as Book 1 of the Principia.[citation needed]

A fair-copy draft of Newton's planned second volume De motu corporum, Liber secundus survives, its completion dated to about the summer of 1685. It covers the application of the results of Liber primus to the Earth, the Moon, the tides, the solar system, and the universe; in this respect it has much the same purpose as the final Book 3 of the Principia, but it is written much less formally and is more easily read.[citation needed]

Titlepage and frontispiece of the third edition, London, 1726 (John Rylands Library)

It is not known just why Newton changed his mind so radically about the final form of what had been a readable narrative in De motu corporum, Liber secundus of 1685, but he largely started afresh in a new, tighter, and less accessible mathematical style, eventually to produce Book 3 of the Principia as we know it. Newton frankly admitted that this change of style was deliberate when he wrote that he had (first) composed this book "in a popular method, that it might be read by many", but to "prevent the disputes" by readers who could not "lay aside the[ir] prejudices", he had "reduced" it "into the form of propositions (in the mathematical way) which should be read by those only, who had first made themselves masters of the principles established in the preceding books".[60] The final Book 3 also contained in addition some further important quantitative results arrived at by Newton in the meantime, especially about the theory of the motions of comets, and some of the perturbations of the motions of the Moon.

The result was numbered Book 3 of the Principia rather than Book 2, because in the meantime, drafts of Liber primus had expanded and Newton had divided it into two books. The new and final Book 2 was concerned largely with the motions of bodies through resisting mediums.[citation needed]

But the Liber secundus of 1685 can still be read today. Even after it was superseded by Book 3 of the Principia, it survived complete, in more than one manuscript. After Newton's death in 1727, the relatively accessible character of its writing encouraged the publication of an English translation in 1728 (by persons still unknown, not authorised by Newton's heirs). It appeared under the English title A Treatise of the System of the World.[61] This had some amendments relative to Newton's manuscript of 1685, mostly to remove cross-references that used obsolete numbering to cite the propositions of an early draft of Book 1 of the Principia. Newton's heirs shortly afterwards published the Latin version in their possession, also in 1728, under the (new) title De Mundi Systemate, amended to update cross-references, citations and diagrams to those of the later editions of the Principia, making it look superficially as if it had been written by Newton after the Principia, rather than before.[62] The System of the World was sufficiently popular to stimulate two revisions (with similar changes as in the Latin printing), a second edition (1731), and a 'corrected' reprint[63] of the second edition (1740).

Halley's role as publisher

The text of the first of the three books of the Principia was presented to the Royal Society at the close of April 1686. Hooke made some priority claims (but failed to substantiate them), causing some delay. When Hooke's claim was made known to Newton, who hated disputes, Newton threatened to withdraw and suppress Book 3 altogether, but Halley, showing considerable diplomatic skills, tactfully persuaded Newton to withdraw his threat and let it go forward to publication. Samuel Pepys, as President, gave his imprimatur on 30 June 1686, licensing the book for publication. The Society had just spent its book budget on a History of Fishes,[64] and the cost of publication was borne by Edmond Halley (who was also then acting as publisher of the Philosophical Transactions of the Royal Society): the book appeared in summer 1687.[65]

Historical context

Beginnings of the Scientific Revolution

Nicolaus Copernicus (1473–1543) was the first person to formulate a comprehensive heliocentric (or Sun-centered) model of the universe

Nicolaus Copernicus had moved the Earth away from the center of the universe with the heliocentric theory for which he presented evidence in his book De revolutionibus orbium coelestium (On the revolutions of the heavenly spheres) published in 1543. The structure was completed when Johannes Kepler wrote the book Astronomia nova (A new astronomy) in 1609, setting out the evidence that planets move in elliptical orbits with the sun at one focus, and that planets do not move with constant speed along this orbit. Rather, their speed varies so that the line joining the centres of the sun and a planet sweeps out equal areas in equal times. To these two laws he added a third a decade later, in his book Harmonices Mundi (Harmonies of the world). This law sets out a proportionality between the third power of the characteristic distance of a planet from the sun and the square of the length of its year.
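Kepler's third law can be checked against rounded modern orbital data. The sketch below is illustrative only: the figures are approximate present-day values, not the data available to Kepler or Newton, and the dictionary `planets` is a name introduced for the example. With distances in astronomical units and periods in years, the ratio of the squared period to the cubed distance should come out close to 1 for every planet.

```python
# Semi-major axis (AU) and orbital period (years): rounded modern values,
# assumed here for illustration.
planets = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}

# Kepler's third law: period squared is proportional to distance cubed.
ratios = {name: period**2 / axis**3 for name, (axis, period) in planets.items()}

for name, ratio in ratios.items():
    print(f"{name:8s} T^2 / a^3 = {ratio:.3f}")
```

Choosing the Earth's distance and year as the units fixes the constant of proportionality at 1, so any departure from 1 in the other rows reflects only rounding in the input values.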

Italian physicist Galileo Galilei (1564–1642), a champion of the Copernican model of the universe and an enormously influential figure in the history of kinematics and classical mechanics

The foundation of modern dynamics was set out in Galileo's book Dialogo sopra i due massimi sistemi del mondo (Dialogue on the two main world systems) where the notion of inertia was implicit and used. In addition, Galileo's experiments with inclined planes had yielded precise mathematical relations between elapsed time and acceleration, velocity or distance for uniform and uniformly accelerated motion of bodies.

Descartes' book of 1644 Principia philosophiae (Principles of philosophy) stated that bodies can act on each other only through contact: a principle that induced people, among them himself, to hypothesize a universal medium, the aether, as the carrier of interactions such as light and gravity. Newton was criticized for apparently introducing forces that acted at distance without any medium.[46] Not until the development of particle theory was Descartes' notion vindicated, when it became possible to describe all interactions, like the strong, weak, and electromagnetic fundamental interactions, using mediating gauge bosons[66] and gravity through hypothesized gravitons.[67] Although Descartes was mistaken in his treatment of circular motion, his effort was more fruitful in the short term, when it led others to identify circular motion as a problem raised by the principle of inertia. Christiaan Huygens solved this problem in the 1650s and published it much later in 1673 in his book Horologium oscillatorium sive de motu pendulorum.

Newton's role

Newton had studied these books, or, in some cases, secondary sources based on them, and taken notes entitled Quaestiones quaedam philosophicae (Questions about philosophy) during his days as an undergraduate. During this period (1664–1666) he created the basis of calculus, and performed the first experiments in the optics of colour. At this time, his proof that white light was a combination of primary colours (found via prismatics) replaced the prevailing theory of colours and received an overwhelmingly favourable response, and occasioned bitter disputes with Robert Hooke and others, which forced him to sharpen his ideas to the point where he already composed sections of his later book Opticks by the 1670s in response. Work on calculus is shown in various papers and letters, including two to Leibniz. He became a fellow of the Royal Society and the second Lucasian Professor of Mathematics (succeeding Isaac Barrow) at Trinity College, Cambridge.

Newton's early work on motion

In the 1660s Newton studied the motion of colliding bodies, and deduced that the centre of mass of two colliding bodies remains in uniform motion. Surviving manuscripts of the 1660s also show Newton's interest in planetary motion and that by 1669 he had shown, for a circular case of planetary motion, that the force he called 'endeavour to recede' (now called centrifugal force) had an inverse-square relation with distance from the center.[68] After his 1679–1680 correspondence with Hooke, described below, Newton adopted the language of inward or centripetal force. According to Newton scholar J Bruce Brackenridge, although much has been made of the change in language and difference of point of view, as between centrifugal or centripetal forces, the actual computations and proofs remained the same either way. They also involved the combination of tangential and radial displacements, which Newton was making in the 1660s. The difference between the centrifugal and centripetal points of view, though a significant change of perspective, did not change the analysis.[69] Newton also clearly expressed the concept of linear inertia in the 1660s: for this Newton was indebted to Descartes' work published 1644.[70]

Controversy with Hooke

Artist's impression of English polymath Robert Hooke (1635–1703).

Hooke published his ideas about gravitation in the 1660s and again in 1674. He argued for an attracting principle of gravitation in Micrographia of 1665, in a 1666 Royal Society lecture On gravity, and again in 1674, when he published his ideas about the System of the World in somewhat developed form, as an addition to An Attempt to Prove the Motion of the Earth from Observations.[71] Hooke clearly postulated mutual attractions between the Sun and planets, in a way that increased with nearness to the attracting body, along with a principle of linear inertia. Hooke's statements up to 1674 made no mention, however, that an inverse square law applies or might apply to these attractions. Hooke's gravitation was also not yet universal, though it approached universality more closely than previous hypotheses.[72] Hooke also did not provide accompanying evidence or mathematical demonstration. On these two aspects, Hooke stated in 1674: "Now what these several degrees [of gravitational attraction] are I have not yet experimentally verified" (indicating that he did not yet know what law the gravitation might follow); and as to his whole proposal: "This I only hint at present", "having my self many other things in hand which I would first compleat, and therefore cannot so well attend it" (i.e., "prosecuting this Inquiry").[71]

In November 1679, Hooke began an exchange of letters with Newton, of which the full text is now published.[73] Hooke told Newton that he had been appointed to manage the Royal Society's correspondence,[74] and wished to hear from members about their researches, or their views about the researches of others; and as if to whet Newton's interest, he asked what Newton thought about various matters, giving a whole list, mentioning "compounding the celestial motions of the planets of a direct motion by the tangent and an attractive motion towards the central body", and "my hypothesis of the lawes or causes of springinesse", and then a new hypothesis from Paris about planetary motions (which Hooke described at length), and then efforts to carry out or improve national surveys, the difference of latitude between London and Cambridge, and other items. Newton's reply offered "a fansy of my own" about a terrestrial experiment (not a proposal about celestial motions) which might detect the Earth's motion, by the use of a body first suspended in air and then dropped to let it fall. The main point was to indicate how Newton thought the falling body could experimentally reveal the Earth's motion by its direction of deviation from the vertical, but he went on hypothetically to consider how its motion could continue if the solid Earth had not been in the way (on a spiral path to the centre). Hooke disagreed with Newton's idea of how the body would continue to move.[75] A short further correspondence developed, and towards the end of it Hooke, writing on 6 January 1680 to Newton, communicated his "supposition ... that the Attraction always is in a duplicate proportion to the Distance from the Center Reciprocall, and Consequently that the Velocity will be in a subduplicate proportion to the Attraction and Consequently as Kepler Supposes Reciprocall to the Distance."[76] (Hooke's inference about the velocity was actually incorrect.[77])

In 1686, when the first book of Newton's Principia was presented to the Royal Society, Hooke claimed that Newton had obtained from him the "notion" of "the rule of the decrease of Gravity, being reciprocally as the squares of the distances from the Center". At the same time (according to Edmond Halley's contemporary report) Hooke agreed that "the Demonstration of the Curves generated therby" was wholly Newton's.[73]

A recent assessment about the early history of the inverse square law is that "by the late 1660s," the assumption of an "inverse proportion between gravity and the square of distance was rather common and had been advanced by a number of different people for different reasons".[78] Newton himself had shown in the 1660s that for planetary motion under a circular assumption, force in the radial direction had an inverse-square relation with distance from the center.[68] Newton, faced in May 1686 with Hooke's claim on the inverse square law, denied that Hooke was to be credited as author of the idea, giving reasons including the citation of prior work by others before Hooke.[73] Newton also firmly claimed that even if it had happened that he had first heard of the inverse square proportion from Hooke, which it had not, he would still have some rights to it in view of his mathematical developments and demonstrations, which enabled observations to be relied on as evidence of its accuracy, while Hooke, without mathematical demonstrations and evidence in favour of the supposition, could only guess (according to Newton) that it was approximately valid "at great distances from the center".[73]
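
As a brief illustration of how an inverse-square relation can arise in the circular case, the following is a modern algebraic sketch, not Newton's own manuscript argument. The symbols $r$ (orbital radius), $T$ (orbital period), $v$ (orbital speed), $a$ (radial acceleration) and $k$ (a constant common to all the planets) are introduced here for illustration, and the sketch assumes uniform circular motion together with Kepler's third law.

$$
\begin{aligned}
v &= \frac{2\pi r}{T}, &
a &= \frac{v^{2}}{r} = \frac{4\pi^{2} r}{T^{2}}, \\
T^{2} &= k\,r^{3} &
\Longrightarrow\quad a &= \frac{4\pi^{2}}{k\,r^{2}} \;\propto\; \frac{1}{r^{2}} .
\end{aligned}
$$

Under these assumptions the radial acceleration, and hence the radial force on a given body, falls off as the inverse square of the distance from the centre.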

The background described above shows there was basis for Newton to deny deriving the inverse square law from Hooke. On the other hand, Newton did accept and acknowledge, in all editions of the Principia, that Hooke (but not exclusively Hooke) had separately appreciated the inverse square law in the solar system. Newton acknowledged Wren, Hooke and Halley in this connection in the Scholium to Proposition 4 in Book 1.[79] Newton also acknowledged to Halley that his correspondence with Hooke in 1679–80 had reawakened his dormant interest in astronomical matters, but that did not mean, according to Newton, that Hooke had told Newton anything new or original: "yet am I not beholden to him for any light into that business but only for the diversion he gave me from my other studies to think on these things & for his dogmaticalness in writing as if he had found the motion in the Ellipsis, which inclined me to try it ...".[73] Newton's reawakening interest in astronomy received further stimulus by the appearance of a comet in the winter of 1680/1681, on which he corresponded with John Flamsteed.[80]

In 1759, decades after the deaths of both Newton and Hooke, Alexis Clairaut, mathematical astronomer eminent in his own right in the field of gravitational studies, made his assessment after reviewing what Hooke had published on gravitation. "One must not think that this idea ... of Hooke diminishes Newton's glory", Clairaut wrote; "The example of Hooke" serves "to show what a distance there is between a truth that is glimpsed and a truth that is demonstrated".[81][82]

Location of early-edition copies

A page from the Principia

Since only between 250 and 400 copies were printed by the Royal Society, the first edition is very rare. Several rare-book collections contain first edition and other early copies of Newton's Principia Mathematica, including:

- Cambridge University Library has Newton's own copy of the first edition, with handwritten notes for the second edition.[83]

- Somerville College Library, Oxford owns a second edition copy.

- The Earl Gregg Swem Library at the College of William & Mary has a first edition copy of the Principia.[84] In it are notes in Latin throughout by a not yet identified hand.

- The Frederick E. Brasch Collection of Newton and Newtoniana in Stanford University also has a first edition of the Principia.[85]

- A first edition forms part of the Crawford Collection, housed at the Royal Observatory, Edinburgh.[86]

- The Uppsala University Library owns a first edition copy, which was stolen in the 1960s and returned to the library in 2009.[87]

- The Folger Shakespeare Library in Washington, D.C. owns a first edition, as well as a 1713 second edition.

- The Huntington Library in San Marino, California owns Isaac Newton's personal copy, with annotations in Newton's own hand.[88]

- The Martin Bodmer Library keeps a copy of the original edition that was owned by Leibniz. In it, we can see handwritten notes by Leibniz, in particular concerning the controversy of who first formulated calculus (although he published it later, Newton argued that he developed it earlier).[89]

- The University of St Andrews Library holds both variants of the first edition, as well as copies of the 1713 and 1726 editions.[90]

A facsimile edition (based on the 3rd edition of 1726 but with variant readings from earlier editions and important annotations) was published in 1972 by Alexandre Koyré and I. Bernard Cohen.[5]

Later editions

Two later editions were published by Newton:

Second edition, 1713

Newton had been urged to make a new edition of the Principia since the early 1690s, partly because copies of the first edition had already become very rare and expensive within a few years after 1687.[92] Newton referred to his plans for a second edition in correspondence with Flamsteed in November 1694.[93] Newton also maintained annotated copies of the first edition specially bound up with interleaves on which he could note his revisions; two of these copies still survive,[94] but he had not completed the revisions by 1708. Of two would-be editors, Newton had almost severed connections with one, Nicolas Fatio de Duillier, and the other, David Gregory, seems not to have met with Newton's approval and was also terminally ill, dying later in 1708. Nevertheless, reasons were accumulating not to put off the new edition any longer.[95] Richard Bentley, master of Trinity College, persuaded Newton to allow him to undertake a second edition, and in June 1708 Bentley wrote to Newton with a specimen print of the first sheet, at the same time expressing the (unfulfilled) hope that Newton had made progress towards finishing the revisions.[96] It seems that Bentley then realised that the editorship was technically too difficult for him, and with Newton's consent he appointed Roger Cotes, Plumian professor of astronomy at Trinity, to undertake the editorship for him as a kind of deputy (but Bentley still made the publishing arrangements and had the financial responsibility and profit). The correspondence of 1709–1713 shows Cotes reporting to two masters, Bentley and Newton, and managing (and often correcting) a large and important set of revisions to which Newton sometimes could not give his full attention.[97] Under the weight of Cotes' efforts, but impeded by priority disputes between Newton and Leibniz,[98] and by troubles at the Mint,[99] Cotes was able to announce publication to Newton on 30 June 1713.[100] Bentley sent Newton only six presentation copies; Cotes was unpaid; Newton omitted any acknowledgement to Cotes.

Among those who gave Newton corrections for the Second Edition were: Firmin Abauzit, Roger Cotes and David Gregory. However, Newton omitted acknowledgements to some because of the priority disputes. John Flamsteed, the Astronomer Royal, suffered this especially.

The Second Edition was the basis of the first edition to be printed abroad, which appeared in Amsterdam in 1714.

Third edition, 1726

The third edition was published 25 March 1726, under the stewardship of "Henry Pemberton, M.D., a man of the greatest skill in these matters..."; Pemberton later said that this recognition was worth more to him than the two hundred guinea award from Newton.[101]

Annotated and other editions

In 1739–42, two French priests, Pères Thomas LeSeur and François Jacquier (of the Minim order, but sometimes erroneously identified as Jesuits), produced with the assistance of J.-L. Calandrini an extensively annotated version of the Principia in the 3rd edition of 1726. Sometimes this is referred to as the Jesuit edition: it was much used, and reprinted more than once in Scotland during the 19th century.[102]

Émilie du Châtelet also made a translation of Newton's Principia into French. Unlike LeSeur and Jacquier's edition, hers was a complete translation of Newton's three books and their prefaces. She also included a Commentary section where she fused the three books into a much clearer and easier to understand summary. She included an analytical section where she applied the new mathematics of calculus to Newton's most controversial theories. Previously, geometry was the standard mathematics used to analyse theories. Du Châtelet's translation is the only complete one to have been done in French and hers remains the standard French translation to this day.[103]

English translations

Two full English translations of Newton's Principia have appeared, both based on Newton's 3rd edition of 1726. The first, from 1729, by Andrew Motte,[3] was described by Newton scholar I. Bernard Cohen (in 1968) as "still of enormous value in conveying to us the sense of Newton's words in their own time, and it is generally faithful to the original: clear, and well written".[104] The 1729 version was the basis for several republications, often incorporating revisions, among them a widely used modernised English version of 1934, which appeared under the editorial name of Florian Cajori (though completed and published only some years after his death). Cohen pointed out ways in which the 18th-century terminology and punctuation of the 1729 translation might be confusing to modern readers, but he also made severe criticisms of the 1934 modernised English version, and showed that the revisions had been made without regard to the original, also demonstrating gross errors "that provided the final impetus to our decision to produce a wholly new translation".[105]

The second full English translation, into modern English, is the work that resulted from this decision by collaborating translators I. Bernard Cohen, Anne Whitman, and Julia Budenz; it was published in 1999 with a guide by way of introduction.[106]

In 1996, William H. Donahue published a translation of the work's central argument, along with expansion of included proofs and ample commentary.[107] The book was developed as a textbook for classes at St. John's College, and the aim of this translation is to be faithful to the Latin text.[108]