From Wikipedia, the free encyclopedia

A scientific theory is an explanation of an aspect of the natural world that can be repeatedly tested and verified in accordance with the scientific method, using accepted protocols of observation, measurement, and evaluation of results. Where possible, theories are tested under controlled conditions in an experiment. In circumstances not amenable to experimental testing, theories are evaluated through principles of abductive reasoning. Established scientific theories have withstood rigorous scrutiny and embody scientific knowledge.

The meaning of the term scientific theory (often contracted to theory for brevity) as used in the disciplines of science is significantly different from the common vernacular usage of theory. In everyday speech, theory can imply an explanation that represents an unsubstantiated and speculative guess, whereas in science it describes an explanation that has been tested and widely accepted as valid. These different usages are comparable to the opposing usages of prediction in science versus common speech, where it denotes a mere hope.

The strength of a scientific theory is related to the diversity of phenomena it can explain and to its simplicity. As additional scientific evidence is gathered, a scientific theory may be modified and ultimately rejected if it cannot be made to fit the new findings; in such circumstances, a more accurate theory is then required. This does not mean that all theories can be fundamentally changed (for example, well-established foundational scientific theories such as evolution, heliocentric theory, cell theory, and the theory of plate tectonics). In certain cases, a less accurate, unmodified scientific theory can still be treated as a theory if it is useful (due to its sheer simplicity) as an approximation under specific conditions. A case in point is Newton's laws of motion, which can serve as an approximation to special relativity at velocities that are small relative to the speed of light.
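How small the difference is at everyday speeds can be checked with the Lorentz factor γ = 1/√(1 − v²/c²), which sets the size of special-relativistic corrections such as time dilation; Newtonian mechanics corresponds to γ = 1. The sketch below is illustrative only: the function names and the example speeds are assumptions chosen for the comparison, not figures from this article. The quantity γ − 1 is computed through `expm1`/`log1p` so that very small corrections are not lost to floating-point rounding.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)

def lorentz_factor(v: float) -> float:
    """gamma = 1 / sqrt(1 - v**2 / c**2) for a speed v in m/s, with |v| < c."""
    beta = v / C
    if abs(beta) >= 1.0:
        raise ValueError("speed must be below the speed of light")
    return 1.0 / math.sqrt(1.0 - beta * beta)

def gamma_minus_one(v: float) -> float:
    """Fractional size of relativistic corrections, gamma - 1.

    Uses gamma = exp(-0.5 * ln(1 - beta**2)), evaluated with expm1/log1p,
    so tiny values at everyday speeds survive floating-point cancellation.
    """
    beta = v / C
    if abs(beta) >= 1.0:
        raise ValueError("speed must be below the speed of light")
    return math.expm1(-0.5 * math.log1p(-beta * beta))

# Illustrative speeds (assumed round figures, not taken from the article).
examples = {
    "car at 30 m/s": 30.0,
    "orbital speed, 7.66 km/s": 7_660.0,
    "90% of c": 0.9 * C,
}
for label, v in examples.items():
    print(f"{label:>26}: gamma - 1 = {gamma_minus_one(v):.2e}")
```

At 30 m/s the correction is about 5 × 10⁻¹⁵, far below what everyday measurements can detect, while at 90% of the speed of light γ is about 2.3 and Newtonian predictions fail badly.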

Scientific theories are testable and make falsifiable predictions. They describe the causes of a particular natural phenomenon and are used to explain and predict aspects of the physical universe or specific areas of inquiry (for example, electricity, chemistry, and astronomy). Scientists use theories to further scientific knowledge, as well as to facilitate advances in technology or medicine.

As with other forms of scientific knowledge, scientific theories are both deductive and inductive, aiming for predictive and explanatory power.

The paleontologist Stephen Jay Gould wrote that "...facts and theories are different things, not rungs in a hierarchy of increasing certainty. Facts are the world's data. Theories are structures of ideas that explain and interpret facts."

Types

Albert Einstein described two types of scientific theories: "constructive theories" and "principle theories". Constructive theories are constructive models for phenomena: for example, the kinetic theory of gases. Principle theories are empirical generalisations such as Newton's laws of motion.

Characteristics

Essential criteria

For a theory to be accepted within most of academia, there is typically one essential criterion: the theory must be observable and repeatable. This criterion is essential to prevent fraud and to perpetuate science itself.

The tectonic plates of the world were mapped in the second half of the 20th century. Plate tectonic theory successfully explains numerous observations about the Earth, including the distribution of earthquakes, mountains, continents, and oceans.

The defining characteristic of all scientific knowledge, including theories, is the ability to make falsifiable or testable predictions.

The relevance and specificity of those predictions determine how potentially useful the theory is. A would-be theory that makes no observable predictions is not a scientific theory at all. Predictions not sufficiently specific to be tested are similarly not useful. In both cases, the term "theory" is not applicable.

A body of descriptions of knowledge can be called a theory if it fulfills the following criteria:

- It makes falsifiable predictions with consistent accuracy across a broad area of scientific inquiry (such as mechanics).

- It is well-supported by many independent strands of evidence, rather than a single foundation.

- It is consistent with preexisting experimental results and at least as accurate in its predictions as are any preexisting theories.

These qualities are certainly true of such established theories as special and general relativity, quantum mechanics, plate tectonics, the modern evolutionary synthesis, etc.

Other criteria

In addition, scientists prefer to work with a theory that meets the following qualities:

- It can be subjected to minor adaptations to account for new data that do not fit it perfectly, as they are discovered, thus increasing its predictive capability over time.

- It is among the most parsimonious explanations, economical in the use of proposed entities or explanatory steps as per Occam's razor. This is because for each accepted explanation of a phenomenon, there may be an extremely large, perhaps even incomprehensible, number of possible and more complex alternatives, since one can always burden failing explanations with ad hoc hypotheses to prevent them from being falsified; therefore, simpler theories are preferable to more complex ones because they are more testable.

Definitions from scientific organizations

The United States National Academy of Sciences defines scientific theories as follows:

The formal scientific definition of theory is quite different from the everyday meaning of the word. It refers to a comprehensive explanation of some aspect of nature that is supported by a vast body of evidence. Many scientific theories are so well established that no new evidence is likely to alter them substantially. For example, no new evidence will demonstrate that the Earth does not orbit around the sun (heliocentric theory), or that living things are not made of cells (cell theory), that matter is not composed of atoms, or that the surface of the Earth is not divided into solid plates that have moved over geological timescales (the theory of plate tectonics)... One of the most useful properties of scientific theories is that they can be used to make predictions about natural events or phenomena that have not yet been observed.

From the American Association for the Advancement of Science:

A scientific theory is a well-substantiated explanation of some aspect of the natural world, based on a body of facts that have been repeatedly confirmed through observation and experiment. Such fact-supported theories are not "guesses" but reliable accounts of the real world. The theory of biological evolution is more than "just a theory". It is as factual an explanation of the universe as the atomic theory of matter or the germ theory of disease. Our understanding of gravity is still a work in progress. But the phenomenon of gravity, like evolution, is an accepted fact.

Note that the term theory would not be appropriate for describing untested but intricate hypotheses or even scientific models.

Formation

The scientific method involves the proposal and testing of hypotheses, by deriving predictions from the hypotheses about the results of future experiments, then performing those experiments to see whether the predictions are valid. This provides evidence either for or against the hypothesis. When enough experimental results have been gathered in a particular area of inquiry, scientists may propose an explanatory framework that accounts for as many of these as possible. This explanation is also tested, and if it fulfills the necessary criteria (see above), then the explanation becomes a theory. This can take many years, as it can be difficult or complicated to gather sufficient evidence.

Once all of the criteria have been met, the theory will be widely accepted by scientists (see scientific consensus) as the best available explanation of at least some phenomena. It will have made predictions of phenomena that previous theories could not explain or could not predict accurately, and it will have resisted attempts at falsification. The strength of the evidence is evaluated by the scientific community, and the most important experiments will have been replicated by multiple independent groups.

Theories do not have to be perfectly accurate to be scientifically useful. For example, the predictions made by classical mechanics are known to be inaccurate in the relativistic realm, but they are almost exactly correct at the comparatively low velocities of common human experience. In chemistry, there are many acid-base theories providing highly divergent explanations of the underlying nature of acidic and basic compounds, but they are very useful for predicting their chemical behavior. As with all knowledge in science, no theory can ever be completely certain, since it is possible that future experiments might conflict with the theory's predictions. However, theories supported by the scientific consensus have the highest level of certainty of any scientific knowledge; for example, that all objects are subject to gravity or that life on Earth evolved from a common ancestor.

Acceptance of a theory does not require that all of its major predictions be tested, if it is already supported by sufficiently strong evidence. For example, certain tests may be unfeasible or technically difficult. As a result, theories may make predictions that have not yet been confirmed or proven incorrect; in this case, the predicted results may be described informally with the term "theoretical". These predictions can be tested at a later time, and if they are incorrect, this may lead to the revision or rejection of the theory.

Modification and improvement

If experimental results contrary to a theory's predictions are observed, scientists first evaluate whether the experimental design was sound, and if so they confirm the results by independent replication. A search for potential improvements to the theory then begins. Solutions may require minor or major changes to the theory, or none at all if a satisfactory explanation is found within the theory's existing framework.

Over time, as successive modifications build on top of each other, theories consistently improve and greater predictive accuracy is achieved. Since each new version of a theory (or a completely new theory) must have more predictive and explanatory power than the last, scientific knowledge consistently becomes more accurate over time.

If modifications to the theory or other explanations seem to be insufficient to account for the new results, then a new theory may be required. Since scientific knowledge is usually durable, this occurs much less commonly than modification.

Furthermore, until such a theory is proposed and accepted, the previous theory will be retained. This is because it is still the best available explanation for many other phenomena, as verified by its predictive power in other contexts. For example, it has been known since 1859 that the observed perihelion precession of Mercury violates Newtonian mechanics, but the theory remained the best explanation available until relativity was supported by sufficient evidence. Also, while new theories may be proposed by a single person or by many, the cycle of modifications eventually incorporates contributions from many different scientists.
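The Mercury example can be made quantitative. To leading order, general relativity predicts an extra perihelion advance of Δφ = 6πGM / (c² a (1 − e²)) radians per orbit, on top of the Newtonian perturbations from the other planets, where GM is the Sun's gravitational parameter, a the orbit's semi-major axis and e its eccentricity. The sketch below evaluates that formula; the orbital elements and constants are approximate standard values supplied for illustration (they do not come from this article), and the function names are hypothetical.

```python
import math

# Approximate constants and orbital elements (assumed values, not from the article).
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
A_MERCURY = 5.7909e10       # semi-major axis of Mercury's orbit, m
E_MERCURY = 0.2056          # orbital eccentricity
PERIOD_DAYS = 87.969        # sidereal orbital period, days
DAYS_PER_CENTURY = 36_525.0 # Julian century

def gr_precession_per_orbit(gm: float, a: float, e: float) -> float:
    """Leading-order general-relativistic perihelion advance per orbit, in radians."""
    return 6.0 * math.pi * gm / (C**2 * a * (1.0 - e**2))

def arcsec_per_century(gm: float, a: float, e: float, period_days: float) -> float:
    """Accumulate the per-orbit advance over one Julian century, in arcseconds."""
    orbits = DAYS_PER_CENTURY / period_days
    radians = gr_precession_per_orbit(gm, a, e) * orbits
    return math.degrees(radians) * 3600.0

rate = arcsec_per_century(GM_SUN, A_MERCURY, E_MERCURY, PERIOD_DAYS)
print(f"{rate:.1f} arcsec per century")
```

With these inputs the sketch gives roughly 43 arcseconds per century, the small residual in Mercury's precession that Newtonian mechanics left unexplained.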

After the changes, the accepted theory will explain more phenomena and have greater predictive power (if it did not, the changes would not be adopted); this new explanation will then be open to further replacement or modification. If a theory does not require modification despite repeated tests, this implies that the theory is very accurate.

This also means that accepted theories continue to accumulate evidence over time, and the length of time that a theory (or any of its principles) remains accepted often indicates the strength of its supporting evidence.

Unification

In some cases, two or more theories may be replaced by a single theory that explains the previous theories as approximations or special cases, analogous to the way a theory is a unifying explanation for many confirmed hypotheses; this is referred to as unification of theories. For example, electricity and magnetism are now known to be two aspects of the same phenomenon, referred to as electromagnetism.

When the predictions of different theories appear to contradict each other, this is also resolved by either further evidence or unification. For example, physical theories in the 19th century implied that the Sun could not have been burning long enough to allow certain geological changes as well as the evolution of life. This was resolved by the discovery of nuclear fusion, the main energy source of the Sun.

Contradictions can also be explained as the result of theories approximating more fundamental (non-contradictory) phenomena. For example, atomic theory is an approximation of quantum mechanics. Current theories describe three separate fundamental phenomena of which all other theories are approximations; the potential unification of these is sometimes called the Theory of Everything.

Example: Relativity

In 1905, Albert Einstein published the principle of special relativity, which soon became a theory. Special relativity predicted the alignment of the Newtonian principle of Galilean invariance, also termed Galilean relativity, with the electromagnetic field. By omitting the luminiferous aether from special relativity, Einstein stated that time dilation and length contraction are measured in an object whose relative motion is inertial, that is, an object that exhibits constant velocity (speed with direction) as measured by its observer. He thereby duplicated the Lorentz transformation and the Lorentz contraction that had been hypothesized to resolve experimental riddles and inserted into electrodynamic theory as dynamical consequences of the aether's properties. An elegant theory, special relativity yielded its own consequences, such as the equivalence of mass and energy, which can transform into one another, and the resolution of the paradox that an excitation of the electromagnetic field could be viewed in one reference frame as electricity, but in another as magnetism.

Einstein sought to generalize the invariance principle to all reference frames, whether inertial or accelerating. Rejecting Newtonian gravitation, a central force acting instantly at a distance, Einstein presumed a gravitational field. In 1907, Einstein's equivalence principle implied that a free fall within a uniform gravitational field is equivalent to inertial motion. By extending special relativity's effects into three dimensions, general relativity extended length contraction into space contraction, conceiving of 4D space-time as the gravitational field that alters geometrically and sets all local objects' pathways. Even massless energy exerts a gravitational influence on the motion of local objects by "curving" the geometrical "surface" of 4D space-time. Yet unless the energy is vast, its relativistic effects of contracting space and slowing time are negligible when merely predicting motion. Although general relativity is embraced as the more explanatory theory via scientific realism, Newton's theory remains successful as merely a predictive theory via instrumentalism. To calculate trajectories, engineers and NASA still use Newton's equations, which are simpler to operate.

Theories and laws

Both scientific laws and scientific theories are produced from the scientific method through the formation and testing of hypotheses, and can predict the behavior of the natural world. Both are typically well-supported by observations and/or experimental evidence. However, scientific laws are descriptive accounts of how nature will behave under certain conditions. Scientific theories are broader in scope and give overarching explanations of how nature works and why it exhibits certain characteristics. Theories are supported by evidence from many different sources, and may contain one or several laws.

A common misconception is that scientific theories are rudimentary ideas that will eventually graduate into scientific laws when enough data and evidence have been accumulated. A theory does not change into a scientific law with the accumulation of new or better evidence. A theory will always remain a theory; a law will always remain a law. Both theories and laws could potentially be falsified by countervailing evidence.

Theories and laws are also distinct from hypotheses. Unlike hypotheses, theories and laws may be simply referred to as scientific fact. However, in science, theories are different from facts even when they are well supported. For example, evolution is both a theory and a fact.

About theories

Theories as axioms

The logical positivists thought of scientific theories as statements in a formal language. First-order logic is an example of a formal language. The logical positivists envisaged a similar scientific language. In addition to scientific theories, the language also included observation sentences ("the sun rises in the east"), definitions, and mathematical statements. The phenomena explained by the theories, if they could not be directly observed by the senses (for example, atoms and radio waves), were treated as theoretical concepts. In this view, theories function as axioms: predicted observations are derived from the theories much like theorems are derived in Euclidean geometry. However, the predictions are then tested against reality to verify the theories, and the "axioms" can be revised as a direct result.

The phrase "the received view of theories" is used to describe this approach. Terms commonly associated with it are "linguistic" (because theories are components of a language) and "syntactic" (because a language has rules about how symbols can be strung together). Problems in defining this kind of language precisely (e.g., are objects seen in microscopes observed, or are they theoretical objects?) led to the effective demise of logical positivism in the 1970s.

Theories as models

The semantic view of theories, which identifies scientific theories with models rather than propositions, has replaced the received view as the dominant position in theory formulation in the philosophy of science. A model is a logical framework intended to represent reality (a "model of reality"), similar to the way that a map is a graphical model that represents the territory of a city or country. In this approach, theories are a specific category of models that fulfill the necessary criteria (see above).

One can use language to describe a model; however, the theory is the model (or a collection of similar models), and not the description of the model. A model of the solar system, for example, might consist of abstract objects that represent the sun and the planets. These objects have associated properties, e.g., positions, velocities, and masses. The model parameters, e.g., Newton's Law of Gravitation, determine how the positions and velocities change with time. This model can then be tested to see whether it accurately predicts future observations; astronomers can verify that the positions of the model's objects over time match the actual positions of the planets. For most planets, the Newtonian model's predictions are accurate; for Mercury, it is slightly inaccurate and the model of general relativity must be used instead.
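The Newtonian solar-system model described above can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical example: it keeps a single planet, holds the Sun fixed at the origin, ignores all other bodies, and advances the state with the velocity Verlet integrator, a common choice for orbital problems that the text itself does not specify. The names `acceleration` and `simulate` and the circular 1 AU test orbit are illustrative assumptions.

```python
import math

GM_SUN = 1.32712440018e20  # solar gravitational parameter, m^3/s^2 (assumed standard value)
AU = 1.495978707e11        # astronomical unit, m

def acceleration(x: float, y: float) -> tuple[float, float]:
    """Newton's law of gravitation: acceleration toward a Sun fixed at the origin."""
    r3 = (x * x + y * y) ** 1.5
    return -GM_SUN * x / r3, -GM_SUN * y / r3

def simulate(x, y, vx, vy, dt, steps):
    """Advance position and velocity with velocity Verlet; return the final state."""
    ax, ay = acceleration(x, y)
    for _ in range(steps):
        # Half-step velocity update, full-step position update,
        # new acceleration, then the second half-step velocity update.
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        ax, ay = acceleration(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
    return x, y, vx, vy

# Hypothetical check: a circular orbit at 1 AU should close on itself after one period.
v_circ = math.sqrt(GM_SUN / AU)
period = 2.0 * math.pi * math.sqrt(AU**3 / GM_SUN)
steps = 10_000
dt = period / steps
x, y, vx, vy = simulate(AU, 0.0, 0.0, v_circ, dt, steps)
error = math.hypot(x - AU, y) / AU
print(f"relative position error after one orbit: {error:.2e}")
```

Testing the model in the sense described above means comparing such predicted positions with observed ones; the closed circular orbit only checks that the numerical integration is sound before any comparison with real data.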

The word "semantic" refers to the way that a model represents the real world. The representation (literally, "re-presentation") describes particular aspects of a phenomenon or the manner of interaction among a set of phenomena. For instance, a scale model of a house or of a solar system is clearly not an actual house or an actual solar system; the aspects of an actual house or an actual solar system represented in a scale model are, only in certain limited ways, representative of the actual entity. A scale model of a house is not a house; but to someone who wants to learn about houses, analogous to a scientist who wants to understand reality, a sufficiently detailed scale model may suffice.

Differences between theory and model

Several commentators have stated that the distinguishing characteristic of theories is that they are explanatory as well as descriptive, while models are only descriptive (although still predictive in a more limited sense).

Philosopher Stephen Pepper also distinguished between theories and models, and said in 1948 that general models and theories are predicated on a "root" metaphor that constrains how scientists theorize and model a phenomenon and thus arrive at testable hypotheses.

Engineering practice makes a distinction between "mathematical models" and "physical models"; the cost of fabricating a physical model can be minimized by first creating a mathematical model using a computer software package, such as a computer-aided design tool. The component parts are each themselves modelled, and the fabrication tolerances are specified. An exploded view drawing is used to lay out the fabrication sequence. Simulation packages for displaying each of the subassemblies allow the parts to be rotated and magnified in realistic detail. Software packages for creating the bill of materials for construction allow subcontractors to specialize in assembly processes, which spreads the cost of manufacturing machinery among multiple customers.

See: Computer-aided engineering, Computer-aided manufacturing, and 3D printing.

Assumptions in formulating theories

An assumption (or axiom) is a statement that is accepted without evidence. For example, assumptions can be used as premises in a logical argument.

Isaac Asimov described assumptions as follows:

...it is incorrect to speak of an assumption as either true or false, since there is no way of proving it to be either (If there were, it would no longer be an assumption). It is better to consider assumptions as either useful or useless, depending on whether deductions made from them corresponded to reality...Since we must start somewhere, we must have assumptions, but at least let us have as few assumptions as possible.

Certain assumptions are necessary for all empirical claims (e.g. the assumption that reality exists). However, theories do not generally make assumptions in the conventional sense (statements accepted without evidence). While assumptions are often incorporated during the formation of new theories, these are either supported by evidence (such as from previously existing theories) or the evidence is produced in the course of validating the theory. This may be as simple as observing that the theory makes accurate predictions, which is evidence that any assumptions made at the outset are correct or approximately correct under the conditions tested.

Conventional assumptions, without evidence, may be used if the theory is only intended to apply when the assumption is valid (or approximately valid). For example, the special theory of relativity assumes an inertial frame of reference. The theory makes accurate predictions when the assumption is valid, and does not make accurate predictions when the assumption is not valid. Such assumptions are often the point at which older theories are succeeded by new ones (the general theory of relativity works in non-inertial reference frames as well).

The term "assumption" is actually broader than its standard use, etymologically speaking. The Oxford English Dictionary (OED) and online Wiktionary indicate its Latin source as assumere ("accept, to take to oneself, adopt, usurp"), which is a conjunction of ad- ("to, towards, at") and sumere (to take). The root survives, with shifted meanings, in the Italian assumere and Spanish sumir.

The first sense of "assume" in the OED is "to take unto (oneself), receive, accept, adopt". The term was originally employed in religious contexts as in "to receive up into heaven", especially "the reception of the Virgin Mary into heaven, with body preserved from corruption", (1297 CE) but it was also simply used to refer to "receive into association" or "adopt into partnership". Moreover, other senses of assumere included (i) "investing oneself with (an attribute)", (ii) "to undertake" (especially in Law), (iii) "to take to oneself in appearance only, to pretend to possess", and (iv) "to suppose a thing to be" (all senses from OED entry on "assume"; the OED entry for "assumption" is almost perfectly symmetrical in senses). Thus, "assumption" connotes other associations than the contemporary standard sense of "that which is assumed or taken for granted; a supposition, postulate" (only the 11th of 12 senses of "assumption", and the 10th of 11 senses of "assume").

Descriptions

From philosophers of science

Karl Popper described the characteristics of a scientific theory as follows:

- It is easy to obtain confirmations, or verifications, for nearly every theory—if we look for confirmations.

- Confirmations should count only if they are the result of risky predictions; that is to say, if, unenlightened by the theory in question, we should have expected an event which was incompatible with the theory—an event which would have refuted the theory.

- Every "good" scientific theory is a prohibition: it forbids certain things to happen. The more a theory forbids, the better it is.

- A theory which is not refutable by any conceivable event is non-scientific. Irrefutability is not a virtue of a theory (as people often think) but a vice.

- Every genuine test of a theory is an attempt to falsify it, or to refute it. Testability is falsifiability; but there are degrees of testability: some theories are more testable, more exposed to refutation, than others; they take, as it were, greater risks.

- Confirming evidence should not count except when it is the result of a genuine test of the theory; and this means that it can be presented as a serious but unsuccessful attempt to falsify the theory. (I now speak in such cases of "corroborating evidence".)

- Some genuinely testable theories, when found to be false, might still be upheld by their admirers—for example by introducing post hoc (after the fact) some auxiliary hypothesis or assumption, or by reinterpreting the theory post hoc in such a way that it escapes refutation. Such a procedure is always possible, but it rescues the theory from refutation only at the price of destroying, or at least lowering, its scientific status, by tampering with evidence. The temptation to tamper can be minimized by first taking the time to write down the testing protocol before embarking on the scientific work.

Popper summarized these statements by saying that the central criterion of the scientific status of a theory is its "falsifiability, or refutability, or testability". Echoing this, Stephen Hawking states, "A theory is a good theory if it satisfies two requirements: It must accurately describe a large class of observations on the basis of a model that contains only a few arbitrary elements, and it must make definite predictions about the results of future observations." He also discusses the "unprovable but falsifiable" nature of theories, which is a necessary consequence of inductive logic, and that "you can disprove a theory by finding even a single observation that disagrees with the predictions of the theory".

Several philosophers and historians of science have, however, argued that Popper's definition of theory as a set of falsifiable statements is wrong because, as Philip Kitcher has pointed out, if one took a strictly Popperian view of "theory", observations of Uranus when first discovered in 1781 would have "falsified" Newton's celestial mechanics. Rather, people suggested that another planet influenced Uranus' orbit, and this prediction was indeed eventually confirmed.

Kitcher agrees with Popper that "There is surely something right in the idea that a science can succeed only if it can fail." He also says that scientific theories include statements that cannot be falsified, and that good theories must also be creative. He insists we view scientific theories as an "elaborate collection of statements", some of which are not falsifiable, while others (those he calls "auxiliary hypotheses") are.

According to Kitcher, good scientific theories must have three features:

- Unity: "A science should be unified…. Good theories consist of just one problem-solving strategy, or a small family of problem-solving strategies, that can be applied to a wide range of problems."

- Fecundity: "A great scientific theory, like Newton's, opens up new areas of research…. Because a theory presents a new way of looking at the world, it can lead us to ask new questions, and so to embark on new and fruitful lines of inquiry…. Typically, a flourishing science is incomplete. At any time, it raises more questions than it can currently answer. But incompleteness is not vice. On the contrary, incompleteness is the mother of fecundity…. A good theory should be productive; it should raise new questions and presume those questions can be answered without giving up its problem-solving strategies."

- Auxiliary hypotheses that are independently testable: "An auxiliary hypothesis ought to be testable independently of the particular problem it is introduced to solve, independently of the theory it is designed to save." (For example, the evidence for the existence of Neptune is independent of the anomalies in Uranus's orbit.)

Like other definitions of theories, including Popper's, Kitcher's makes it clear that a theory must include statements that have observational consequences. But, as the observation of irregularities in the orbit of Uranus shows, falsification is only one possible consequence of observation. The production of new hypotheses is another possible and equally important result.

Analogies and metaphors

The concept of a scientific theory has also been described using analogies and metaphors. For instance, the logical empiricist Carl Gustav Hempel likened the structure of a scientific theory to a "complex spatial network:"

Its terms are represented by the knots, while the threads connecting the latter correspond, in part, to the definitions and, in part, to the fundamental and derivative hypotheses included in the theory. The whole system floats, as it were, above the plane of observation and is anchored to it by the rules of interpretation. These might be viewed as strings which are not part of the network but link certain points of the latter with specific places in the plane of observation. By virtue of these interpretive connections, the network can function as a scientific theory: From certain observational data, we may ascend, via an interpretive string, to some point in the theoretical network, thence proceed, via definitions and hypotheses, to other points, from which another interpretive string permits a descent to the plane of observation.

Michael Polanyi made an analogy between a theory and a map:

A theory is something other than myself. It may be set out on paper as a system of rules, and it is the more truly a theory the more completely it can be put down in such terms. Mathematical theory reaches the highest perfection in this respect. But even a geographical map fully embodies in itself a set of strict rules for finding one's way through a region of otherwise uncharted experience. Indeed, all theory may be regarded as a kind of map extended over space and time.

A scientific theory can also be thought of as a book that captures the fundamental information about the world, a book that must be researched, written, and shared. In 1623, Galileo Galilei wrote:

Philosophy [i.e. physics] is written in this grand book—I mean the universe—which stands continually open to our gaze, but it cannot be understood unless one first learns to comprehend the language and interpret the characters in which it is written. It is written in the language of mathematics, and its characters are triangles, circles, and other geometrical figures, without which it is humanly impossible to understand a single word of it; without these, one is wandering around in a dark labyrinth.

The book metaphor could also be applied to the following passage by the contemporary philosopher of science Ian Hacking:

I myself prefer an Argentine fantasy. God did not write a Book of Nature of the sort that the old Europeans imagined. He wrote a Borgesian library, each book of which is as brief as possible, yet each book of which is inconsistent with every other. No book is redundant. For every book there is some humanly accessible bit of Nature such that that book, and no other, makes possible the comprehension, prediction and influencing of what is going on… Leibniz said that God chose a world which maximized the variety of phenomena while choosing the simplest laws. Exactly so: but the best way to maximize phenomena and have simplest laws is to have the laws inconsistent with each other, each applying to this or that but none applying to all.

In physics

In physics, the term theory is generally used for a mathematical framework, derived from a small set of basic postulates (usually symmetries, like equality of locations in space or in time, or identity of electrons, etc.), that is capable of producing experimental predictions for a given category of physical systems. A good example is classical electromagnetism, which encompasses results derived from gauge symmetry (sometimes called gauge invariance) in the form of a few equations called Maxwell's equations.
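For concreteness, Maxwell's equations can be written in their standard differential form in SI units, where E and B are the electric and magnetic fields, ρ is the charge density, J is the current density, and ε₀ and μ₀ are the vacuum permittivity and permeability. The gauge symmetry shows up when the fields are expressed through a scalar potential φ and a vector potential A: the fields are unchanged when the potentials are shifted using an arbitrary smooth function χ. This is a textbook summary added for illustration, not a derivation of the equations from the symmetry.

$$
\begin{aligned}
\nabla \cdot \mathbf{E} &= \frac{\rho}{\varepsilon_0}, &
\nabla \cdot \mathbf{B} &= 0, \\
\nabla \times \mathbf{E} &= -\frac{\partial \mathbf{B}}{\partial t}, &
\nabla \times \mathbf{B} &= \mu_0 \mathbf{J} + \mu_0 \varepsilon_0 \frac{\partial \mathbf{E}}{\partial t},
\end{aligned}
$$

$$
\mathbf{E} = -\nabla\varphi - \frac{\partial \mathbf{A}}{\partial t}, \qquad
\mathbf{B} = \nabla \times \mathbf{A}, \qquad
\mathbf{A} \to \mathbf{A} + \nabla\chi, \quad \varphi \to \varphi - \frac{\partial \chi}{\partial t}.
$$

Substituting the shifted potentials, the extra terms cancel in E (the mixed derivatives of χ commute) and vanish in B (the curl of a gradient is zero), so every observable prediction is the same in every gauge.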

The specific mathematical aspects of classical electromagnetic theory are termed "laws of electromagnetism," reflecting the level of consistent and reproducible evidence that supports them. Within electromagnetic theory generally, there are numerous hypotheses about how electromagnetism applies to specific situations. Many of these hypotheses are already considered adequately tested, while new ones are always in the making and may be untested. An example of the latter might be the radiation reaction force. As of 2009, its effects on the periodic motion of charges are detectable in synchrotrons, but only as effects averaged over time, and some researchers are considering experiments that could observe these effects at the instantaneous level (i.e., not averaged over time).
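A worked equation may help show why time-averaged effects are the easier ones to see. In the nonrelativistic limit (an assumption made here for simplicity; charges in synchrotrons are relativistic, so this is only indicative), the radiation reaction on a point charge q with velocity v and acceleration a is usually modeled by the Abraham–Lorentz force, which depends on the time derivative of the acceleration. Integrating the power it delivers over one period T of a periodic motion, and integrating by parts, shows that on average it removes energy at exactly the rate given by the Larmor formula, even though the two need not agree at each instant:

$$
\mathbf{F}_{\text{rad}} = \frac{q^2}{6\pi\varepsilon_0 c^3}\,\dot{\mathbf{a}}, \qquad
P_{\text{Larmor}} = \frac{q^2 a^2}{6\pi\varepsilon_0 c^3},
$$

$$
\int_0^T \mathbf{F}_{\text{rad}}\cdot\mathbf{v}\,dt
= \frac{q^2}{6\pi\varepsilon_0 c^3}\left( \big[\mathbf{a}\cdot\mathbf{v}\big]_0^T - \int_0^T \mathbf{a}\cdot\mathbf{a}\,dt \right)
= -\int_0^T P_{\text{Larmor}}\,dt .
$$

The boundary term vanishes because a and v return to their initial values after one period. The averaged energy loss is therefore fixed, while the instantaneous form of the force is not pinned down by this average.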

Examples

Note that many fields of inquiry do not have specific named theories, e.g., developmental biology. Scientific knowledge outside a named theory can still have a high level of certainty, depending on the amount of evidence supporting it. Also note that since theories draw evidence from many different fields, the categorization is not absolute.

- Biology: cell theory, theory of evolution (modern evolutionary synthesis), germ theory, particulate inheritance theory, dual inheritance theory

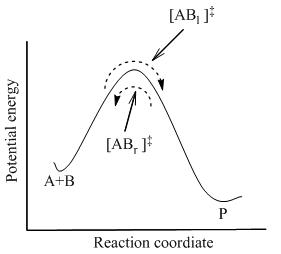

- Chemistry: collision theory, kinetic theory of gases, Lewis theory, molecular theory, molecular orbital theory, transition state theory, valence bond theory

- Physics: atomic theory, Big Bang theory, dynamo theory, perturbation theory, theory of relativity (successor to classical mechanics), quantum field theory

- Other: Climate change theory (from climatology),[56] plate tectonics theory (from geology), theories of the origin of the Moon, theories for the Moon illusion
